The Center for Noise and Vibration Control at KAIST announced that its cough detection camera recognizes where coughing occurs and visualizes those locations. The resulting cough recognition camera can track and record the person who coughed, their location, and the number of coughs in real time.

Professor Yong-Hwa Park from the Department of Mechanical Engineering developed a deep learning-based cough recognition model that classifies coughing sounds in real time. The cough event classification model is combined with a sound camera that visualizes where coughs occur in public places. The research team reported a best test accuracy of 87.4%.

Professor Park said the camera could serve as useful medical equipment during epidemics in public places such as schools, offices, and restaurants, and could also be used to continuously monitor patients’ conditions in hospital rooms.

Fever and coughing are the most telling symptoms of respiratory disease, and fever can already be detected remotely with thermal cameras. The new technology is therefore expected to help detect epidemic transmission in a non-contact way. The cough event classification model is combined with a sound camera that visualizes each cough event and indicates its location in the video image.

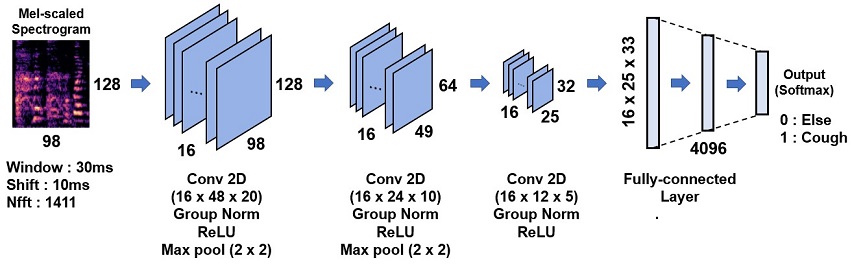

To develop the cough recognition model, the team trained a convolutional neural network (CNN) with supervised learning. The model takes a one-second acoustic feature profile as input and performs binary classification, labeling it either as a cough event or as something else.
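To make the classification step concrete, here is a minimal sketch of a CNN forward pass for binary cough/other classification in plain Python. The architecture (`TinyCoughCNN`: a few 3×3 convolution filters, ReLU, global average pooling, and a logistic output), the 64 × 32 input shape, and the 0.5 threshold are hypothetical, and the weights are random placeholders rather than trained values; the article does not specify the actual layer configuration beyond Figure 1.

```python
import math
import random

def conv2d_valid(x, kernel, bias):
    """Single-channel 2-D 'valid' convolution (cross-correlation, as in CNN layers)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    return [[bias + sum(kernel[a][b] * x[i + a][j + b]
                        for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(fmap):
    return [[max(0.0, v) for v in row] for row in fmap]

def global_avg_pool(fmap):
    vals = [v for row in fmap for v in row]
    return sum(vals) / len(vals)

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

class TinyCoughCNN:
    """Hypothetical toy model: conv filters -> ReLU -> global average pooling -> logistic unit.

    Weights are random placeholders; a real model would learn them by supervised training.
    """
    def __init__(self, n_filters=4, seed=0):
        rng = random.Random(seed)
        self.kernels = [[[rng.gauss(0.0, 0.5) for _ in range(3)] for _ in range(3)]
                        for _ in range(n_filters)]
        self.biases = [0.0] * n_filters
        self.w = [rng.gauss(0.0, 0.5) for _ in range(n_filters)]
        self.b = 0.0

    def predict_proba(self, feature):
        """Probability-like score that a one-second feature map contains a cough."""
        pooled = [global_avg_pool(relu(conv2d_valid(feature, k, c)))
                  for k, c in zip(self.kernels, self.biases)]
        return sigmoid(sum(wi * pi for wi, pi in zip(self.w, pooled)) + self.b)

    def classify(self, feature, threshold=0.5):
        return "cough" if self.predict_proba(feature) >= threshold else "other"

if __name__ == "__main__":
    rng = random.Random(1)
    feature = [[rng.random() for _ in range(32)] for _ in range(64)]  # e.g. 64 bands x 32 frames
    print(TinyCoughCNN().classify(feature))
```

Because the model ends in a single logistic unit, the binary decision reduces to thresholding one score, and the threshold can be tuned to trade missed coughs against false alarms.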

For training and evaluation, data were drawn from AudioSet, DEMAND, ETSI, and TIMIT. Cough sounds and other sounds were extracted from AudioSet, and the remaining datasets supplied background noise for data augmentation, so that the model would generalize to the varied background noise of public places.

The dataset was augmented by mixing the cough and other sounds from AudioSet with background noise at a ratio of 0.15 to 0.75, and the overall volume was then scaled by a factor of 0.25 to 1.0 so that the model would generalize to various distances.
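The augmentation recipe can be sketched as follows. The article gives the two ranges but not the exact mixing formula, so this sketch assumes one plausible reading: the background-noise clip is scaled by a random ratio r in [0.15, 0.75] and added to the AudioSet clip, and the mixture is then multiplied by a random gain g in [0.25, 1.0]. The function name `augment_clip`, the looping of short noise clips, and the list-of-floats representation are illustrative assumptions.

```python
import random

def augment_clip(event, noise, rng, ratio_range=(0.15, 0.75), gain_range=(0.25, 1.0)):
    """Return (augmented_clip, ratio, gain) for one training example.

    Assumed recipe (not confirmed by the source): mixed = gain * (event + ratio * noise).
    `event` and `noise` are lists of float samples at the same sample rate; the noise
    clip is looped or truncated to match the event length.
    """
    ratio = rng.uniform(*ratio_range)  # noise level relative to the event
    gain = rng.uniform(*gain_range)    # overall volume, a rough proxy for distance
    looped_noise = [noise[i % len(noise)] for i in range(len(event))]
    mixed = [gain * (e + ratio * b) for e, b in zip(event, looped_noise)]
    return mixed, ratio, gain

if __name__ == "__main__":
    rng = random.Random(42)
    cough = [0.5, -0.4, 0.3, -0.2]  # toy stand-in for an AudioSet clip
    babble = [0.1, -0.1]            # toy stand-in for a DEMAND/ETSI/TIMIT noise clip
    clip, r, g = augment_clip(cough, babble, rng)
    print(f"ratio={r:.2f} gain={g:.2f}", clip)
```

Drawing a fresh ratio and gain per example means each source clip can yield many distinct training mixtures.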

The augmented dataset was divided 9:1 into training and evaluation sets, and the test dataset was recorded separately in a real office environment.
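A 9:1 division of the augmented data can be sketched as a seeded shuffle followed by a cut. The function name `split_9_to_1` and the fixed seed are illustrative assumptions, and the separately recorded office test set is not produced by this step.

```python
import random

def split_9_to_1(samples, seed=0):
    """Shuffle a copy of `samples` reproducibly and split it 90% / 10%."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    cut = round(len(items) * 0.9)
    return items[:cut], items[cut:]

if __name__ == "__main__":
    train, evaluation = split_9_to_1(range(1000))
    print(len(train), len(evaluation))  # 900 100
```

When the split is taken after augmentation, mixtures derived from the same source clip can land in both sets; grouping examples by source clip before splitting is one way to keep the evaluation set independent, which is also why a separately recorded test set is valuable.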

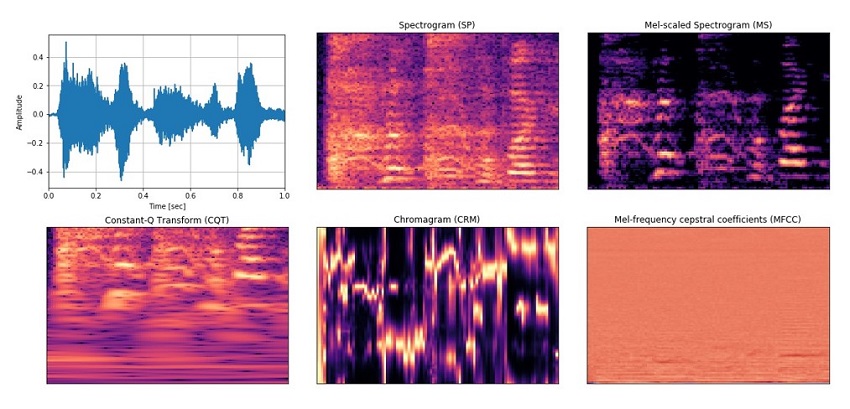

To optimize the network model, training was conducted with combinations of five acoustic features, including the spectrogram, Mel-scaled spectrogram, and Mel-frequency cepstral coefficients (MFCCs), and seven optimizers. The performance of each combination was compared on the test dataset. The best test accuracy, 87.4%, was achieved with the Mel-scaled spectrogram as the acoustic feature and ASGD as the optimizer.
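The feature-by-optimizer search amounts to a 5 × 7 grid of 35 training runs, each scored on the test set. This sketch enumerates that grid and keeps the best pair. Only the spectrogram, Mel-scaled spectrogram, MFCC, and ASGD names come from the article; `feature_4`, `feature_5`, and `optimizer_2` through `optimizer_7` are hypothetical placeholders, and `train_and_score` stands in for whatever training and testing routine is actually used.

```python
from itertools import product

# Only the first three features and "asgd" are named in the article; the rest are placeholders.
FEATURES = ["spectrogram", "mel_spectrogram", "mfcc", "feature_4", "feature_5"]
OPTIMIZERS = ["asgd"] + [f"optimizer_{i}" for i in range(2, 8)]

def grid_search(train_and_score):
    """Score every (feature, optimizer) pair and return the best one.

    `train_and_score(feature, optimizer)` is a hypothetical callable that trains the
    cough model with that configuration and returns its test accuracy in [0, 1].
    Returns (best_pair, best_score, all_scores).
    """
    scores = {(f, o): train_and_score(f, o) for f, o in product(FEATURES, OPTIMIZERS)}
    best = max(scores, key=scores.get)
    return best, scores[best], scores
```

In PyTorch, for example, averaged stochastic gradient descent is available as `torch.optim.ASGD`, so each optimizer name would map to one optimizer constructor inside `train_and_score`.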

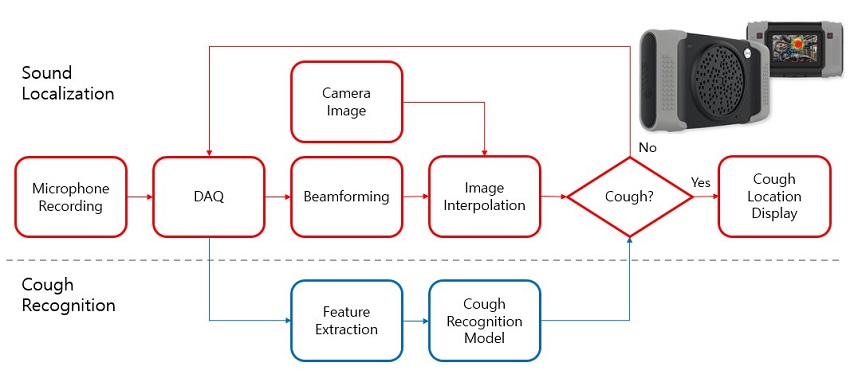

The trained cough recognition model was combined with a sound camera, which consists of a microphone array and a camera module. Beamforming is applied to the acoustic data collected by the array to estimate the direction of the incoming sound. The integrated cough recognition model then determines whether the sound is a cough. If it is, the cough is visualized as a contour image with a ‘cough’ label at the location of the sound source in the video image.
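Direction finding with a microphone array can be illustrated with delay-and-sum beamforming. This minimal sketch assumes a uniform linear array, a far-field source, and delays rounded to whole samples, and it scans angles from -90° to 90° for the steering direction with the highest output power. The article does not say which beamforming algorithm the sound camera uses, and a practical sound camera needs a two-dimensional array to place a source on an image plane, so the function names, geometry, and parameters (8 microphones, 5 cm spacing, 48 kHz) are illustrative assumptions.

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s, air at about 20 °C

def steering_delays(angle_deg, n_mics, spacing, fs):
    """Whole-sample arrival delay of each mic relative to mic 0 for a far-field source."""
    s = math.sin(math.radians(angle_deg))
    return [round(m * spacing * s * fs / SPEED_OF_SOUND) for m in range(n_mics)]

def steered_power(channels, delays):
    """Mean power of the delay-and-sum output when steering with `delays`."""
    n = len(channels[0])
    lo = max(0, -min(delays))
    hi = n - max(0, max(delays))
    total = 0.0
    for i in range(lo, hi):
        y = sum(ch[i + k] for ch, k in zip(channels, delays))  # align, then sum
        total += y * y
    return total / (hi - lo)

def estimate_direction(channels, spacing, fs, step_deg=1):
    """Return the scan angle (degrees from broadside) with the highest steered power."""
    best_angle, best_power = None, -1.0
    for angle in range(-90, 91, step_deg):
        p = steered_power(channels, steering_delays(angle, len(channels), spacing, fs))
        if p > best_power:
            best_angle, best_power = angle, p
    return best_angle

def simulate_array(angle_deg, n_mics=8, spacing=0.05, fs=48000, n=2048, seed=0):
    """Hypothetical test signal: the same white noise arriving from `angle_deg` at each mic."""
    rng = random.Random(seed)
    pad = 64  # must exceed the largest possible delay (about 49 samples here)
    src = [rng.gauss(0.0, 1.0) for _ in range(n + 2 * pad)]
    delays = steering_delays(angle_deg, n_mics, spacing, fs)
    return [[src[pad + i - k] for i in range(n)] for k in delays]

if __name__ == "__main__":
    mics = simulate_array(30)
    print(estimate_direction(mics, spacing=0.05, fs=48000))
```

When the steering delays match the true arrival delays, all channels add coherently and the output power peaks; in the pipeline described in the article, a direction like this would be drawn on the video only when the classifier labels the same segment as a cough.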

A pilot test of the cough recognition camera in an office environment showed that it successfully distinguished cough events from other events, even in a noisy environment. It could also track the location of the person who coughed and count the number of coughs in real time. The team expects performance to improve further with additional training data from other real environments, such as hospitals and classrooms.
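Counting coughs from per-window decisions needs a rule for when consecutive positive windows belong to the same cough. This sketch assumes one simple rule: a run of consecutive "cough" windows counts as a single event, tagged with the direction estimated in its first window. The function name `count_cough_events` and the (label, angle) input format are hypothetical, and the article does not describe how the camera actually counts.

```python
def count_cough_events(frames):
    """Count cough events in a stream of per-window results.

    `frames` is an iterable of (label, angle_deg) pairs, one per analysis window,
    where label is "cough" or "other". A run of consecutive "cough" windows is
    counted once (rising-edge counting) and attributed to its first window's angle.
    """
    event_angles = []
    previous = "other"
    for label, angle in frames:
        if label == "cough" and previous != "cough":
            event_angles.append(angle)
        previous = label
    return len(event_angles), event_angles

if __name__ == "__main__":
    stream = [("other", None), ("cough", 30), ("cough", 31),
              ("other", None), ("cough", -45)]
    print(count_cough_events(stream))  # (2, [30, -45])
```

Merging adjacent positive windows avoids double-counting a cough that straddles a window boundary, at the cost of merging two coughs that occur back to back; attributing counts to individual people would additionally require grouping events by direction.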

Professor Park said, “In a pandemic situation like we are experiencing with COVID-19, a cough detection camera can contribute to the prevention and early detection of epidemics in public places. Especially when applied to a hospital room, the patient’s condition can be tracked 24 hours a day and support more accurate diagnoses while reducing the effort of the medical staff.”

This study was conducted in collaboration with SM Instruments Inc.

< Figure 1. Architecture of the cough recognition model based on CNN. >

< Figure 2. Examples of sound features used to train the cough recognition model. >

< Figure 3. Cough detection camera and its signal processing block diagram. >

Profile: Yong-Hwa Park, Ph.D.

Associate Professor

yhpark@kaist.ac.kr

http://human.kaist.ac.kr/

Human-Machine Interaction Laboratory (HuMaN Lab.)

Department of Mechanical Engineering (ME)

Korea Advanced Institute of Science and Technology (KAIST)

https://www.kaist.ac.kr/en/

Daejeon 34141, Korea

Profile: Gyeong Tae Lee

PhD Candidate

hansaram@kaist.ac.kr

HuMaN Lab., ME, KAIST

Profile: Seong Hu Kim

PhD Candidate

tjdgnkim@kaist.ac.kr

HuMaN Lab., ME, KAIST

Profile: Hyeonuk Nam

PhD Candidate

frednam@kaist.ac.kr

HuMaN Lab., ME, KAIST

Profile: Young-Key Kim

CEO

sales@smins.co.kr

http://en.smins.co.kr/

SM Instruments Inc.

Daejeon 34109, Korea

(END)
