
KAIST Proposes a New Way to Circumvent a Long-time Frustration in Neural Computing

The human brain begins learning through spontaneous random activity even before it receives sensory information from the outside world. Inspired by this, a KAIST research team pretrained a brain-inspired artificial neural network on random information, enabling it to learn much faster and more accurately when later exposed to real data. The approach is expected to advance the development of brain-inspired artificial intelligence and neuromorphic computing.

KAIST (President Kwang-Hyung Lee) announced on December 16 that Professor Se-Bum Paik's research team in the Department of Brain and Cognitive Sciences has solved the weight transport problem*, a long-standing challenge in neural network learning. In doing so, the team explained the principles that enable resource-efficient learning in biological neural networks.

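*Weight transport problem: In error backpropagation, error signals travel backward through the same connection weights used in the forward pass, so each neuron would need to know the weights of the layers above it. This is the biggest obstacle to developing artificial intelligence that mimics the biological brain. It is also the fundamental reason why, unlike biological brains, ordinary artificial neural networks require large amounts of memory and computation to learn.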

Over the past several decades, the development of artificial intelligence has been built on error backpropagation learning, popularized by Geoffrey Hinton and colleagues; Hinton won the Nobel Prize in Physics this year. However, error backpropagation was thought to be impossible in biological brains because it rests on an unrealistic assumption: to calculate the error signal for learning, individual neurons would need to know the connection weights across multiple layers.

< Figure 1. Illustration depicting the method of random noise training and its effects >

This difficult problem, called the weight transport problem, was raised by Francis Crick, who won the Nobel Prize in Physiology or Medicine for discovering the structure of DNA, after Hinton and colleagues proposed error backpropagation learning in 1986. Since then, it has been regarded as a reason why natural and artificial neural networks may always operate on fundamentally different principles.

At the boundary between artificial intelligence and neuroscience, researchers including Hinton have continued trying to build biologically plausible models that solve the weight transport problem and thereby implement the brain's learning principles.

In 2016, a joint research team from the University of Oxford and DeepMind in the UK first showed that error backpropagation learning is possible without weight transport. Their approach, known as feedback alignment, sends error signals back through fixed random connections, and it drew wide attention from the research community. However, this biologically plausible form of learning was inefficient, with slow learning and low accuracy, which made it difficult to apply in practice.
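To make the weight transport problem concrete, here is a minimal NumPy sketch of how the hidden-layer error is computed in a toy two-layer network. The layer sizes, the tanh nonlinearity, and the names `W1`, `W2` and `B` are illustrative assumptions and do not come from the paper. Backpropagation sends the output error back through `W2.T`, the transpose of the forward weights. Feedback alignment instead sends it back through a fixed random matrix `B` that never copies the forward weights.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy network: x -> h = tanh(W1 @ x) -> y = W2 @ h
n_in, n_hid, n_out = 8, 16, 4
W1 = rng.normal(0.0, 1 / np.sqrt(n_in), size=(n_hid, n_in))
W2 = rng.normal(0.0, 1 / np.sqrt(n_hid), size=(n_out, n_hid))
B = rng.normal(0.0, 1 / np.sqrt(n_out), size=(n_hid, n_out))  # fixed random feedback weights

x = rng.normal(size=n_in)
target = rng.normal(size=n_out)

h = np.tanh(W1 @ x)
y = W2 @ h
e = y - target  # output error (gradient of 0.5 * squared error w.r.t. y)

# Backpropagation: the error travels back through W2.T, so the feedback
# pathway needs an exact copy of the forward weights (weight transport).
delta_bp = (W2.T @ e) * (1 - h**2)  # 1 - tanh^2 is the derivative of tanh

# Feedback alignment: the error travels back through the fixed matrix B instead.
delta_fa = (B @ e) * (1 - h**2)

# Either hidden error is turned into a weight update for W1 in the same way.
dW1_bp = np.outer(delta_bp, x)
dW1_fa = np.outer(delta_fa, x)

cos = delta_bp @ delta_fa / (np.linalg.norm(delta_bp) * np.linalg.norm(delta_fa))
print(f"cosine between backprop and feedback-alignment hidden errors: {cos:.3f}")
```

The only difference between the two rules is the matrix on the backward path. With a random `B`, the two hidden errors generally point in different directions, which is one intuition for why learning without weight transport tends to be slower.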

The KAIST research team noted that the biological brain begins learning through internal, spontaneous random neural activity even before it receives any external sensory input. To mimic this, the team pretrained a biologically plausible neural network without weight transport on meaningless random information (random noise).

As a result, they showed that such pretraining can create alignment, or symmetry, between the network's forward and backward connections, which is an essential condition for error backpropagation learning. In other words, random pretraining makes learning without weight transport possible.
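The alignment effect can be illustrated with a toy simulation. It assumes a small tanh network trained by feedback alignment on Gaussian noise inputs paired with unrelated random targets. The sizes, learning rate, small initial weights, and the use of cosine similarity between `W2.T` and the fixed feedback weights `B` as the alignment measure are all assumptions chosen for illustration. This is a sketch of the idea, not the authors' code or exact protocol.

```python
import numpy as np

def cosine(a, b):
    """Cosine similarity between two arrays after flattening them."""
    a, b = a.ravel(), b.ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

rng = np.random.default_rng(1)
n_in, n_hid, n_out, n_samples = 20, 32, 5, 200

# Small initial forward weights (an assumption) so that learned structure dominates.
W1 = rng.normal(0.0, 0.01, size=(n_hid, n_in))
W2 = rng.normal(0.0, 0.01, size=(n_out, n_hid))
B = rng.normal(0.0, 1 / np.sqrt(n_out), size=(n_hid, n_out))  # fixed, never updated

# "Random noise" pretraining set: Gaussian inputs with unrelated random targets.
X = rng.normal(size=(n_samples, n_in))
T = rng.normal(size=(n_samples, n_out))

alignment_before = cosine(W2.T, B)

lr, n_steps = 0.05, 1000
for _ in range(n_steps):
    H = np.tanh(X @ W1.T)            # hidden activity, (n_samples, n_hid)
    Y = H @ W2.T                     # outputs, (n_samples, n_out)
    E = (Y - T) / n_samples          # averaged output error
    delta = (E @ B.T) * (1 - H**2)   # hidden error sent back through B, not W2.T
    W2 -= lr * E.T @ H               # same local rule as backprop for the top layer
    W1 -= lr * delta.T @ X

alignment_after = cosine(W2.T, B)
print(f"alignment before: {alignment_before:.3f}, after: {alignment_after:.3f}")
```

Because the hidden-layer updates arrive only through `B`, the forward weights `W2` gradually come to point in a direction similar to `B.T`. This is the sense in which the forward and backward pathways become "symmetric" without any weight being transported.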

< Figure 2. Illustration depicting the meta-learning effect of random noise training >

The research team revealed that learning random information before learning actual data acts as a form of meta-learning, or ‘learning how to learn.’ Neural networks pretrained on random noise learned much faster and more accurately when exposed to real data, and achieved high learning efficiency without weight transport.

< Figure 3. Illustration depicting research on understanding the brain's operating principles through artificial neural networks >

Professor Se-Bum Paik said, “It breaks the conventional understanding of existing machine learning that only data learning is important, and provides a new perspective that focuses on the neuroscience principles of creating appropriate conditions before learning,” and added, “It is significant in that it solves important problems in artificial neural network learning through clues from developmental neuroscience, and at the same time provides insight into the brain’s learning principles through artificial neural network models.”

This study, with Jeonghwan Cheon, a master’s candidate in the KAIST Department of Brain and Cognitive Sciences, as first author and Professor Sang Wan Lee of the same department as co-author, was presented at the 38th Conference on Neural Information Processing Systems (NeurIPS 2024), one of the world's leading artificial intelligence conferences, in Vancouver, Canada, on December 14. (Paper title: Pretraining with random noise for fast and robust learning without weight transport)

This study was conducted with the support of the National Research Foundation of Korea's Basic Research Program in Science and Engineering, the Information and Communications Technology Planning and Evaluation Institute's Talent Development Program, and the KAIST Singularity Professor Program.

2024.12.16

Face Detection in Untrained Deep Neural Networks

A KAIST team shows that primitive visual selectivity of faces can arise spontaneously in completely untrained deep neural networks

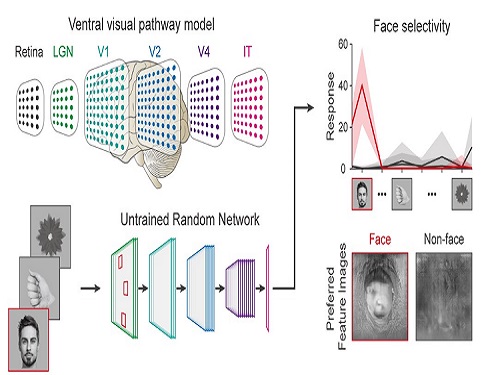

Researchers have found that higher visual cognitive functions can arise spontaneously in untrained neural networks. A KAIST research team led by Professor Se-Bum Paik from the Department of Bio and Brain Engineering has shown that visual selectivity of facial images can arise even in completely untrained deep neural networks.

This finding provides new insight into the mechanisms underlying the development of cognitive functions in both biological and artificial neural networks. It also sheds light on the origin of early brain functions that appear before sensory experience.

The study, published in Nature Communications on December 16, demonstrates that neuronal activity selective to facial images is observed in randomly initialized deep neural networks in the complete absence of learning. It also shows that this activity shares characteristics with face-selective neurons observed in biological brains.

The ability to identify and recognize faces is a crucial function for social behavior, and this ability is thought to originate from neuronal tuning at the single- or multi-neuronal level. Neurons that respond selectively to faces are observed in young animals of various species. This has fueled intense debate over whether face-selective neurons can arise innately in the brain or whether they require visual experience.

Using a model neural network that captures properties of the ventral stream of the visual cortex, the research team found that face selectivity can emerge spontaneously from random feedforward wiring in untrained deep neural networks. The team showed that the characteristics of this innate face selectivity are comparable to those of face-selective neurons in the brain. This spontaneous neuronal tuning for faces also enables the network to perform face detection tasks.

These results imply a possible scenario in which the random feedforward connections that develop in early, untrained networks may be sufficient for initializing primitive visual cognitive functions.
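Face selectivity of a model unit is usually quantified by comparing its responses to face images with its responses to other images. The sketch below uses a d'-style index: the difference in mean responses divided by the pooled standard deviation. The index, the helper name `selectivity_index`, the threshold, and the toy response values are assumptions for illustration. They are not necessarily the measure or data used in the paper.

```python
import numpy as np

def selectivity_index(responses, is_face):
    """d'-style face-selectivity index for each model unit (hypothetical helper).

    responses: (n_units, n_images) array of unit activations.
    is_face:   (n_images,) boolean array, True for face images.
    Returns an (n_units,) array; large positive values mean stronger responses
    to faces than to other images. Assumes the pooled standard deviation is nonzero.
    """
    face = responses[:, is_face]
    other = responses[:, ~is_face]
    pooled_sd = np.sqrt((face.var(axis=1) + other.var(axis=1)) / 2)
    return (face.mean(axis=1) - other.mean(axis=1)) / pooled_sd

# Toy responses of two units to two face and two non-face images (made-up numbers).
responses = np.array([[3.0, 4.0, 1.0, 0.0],
                      [1.0, 2.0, 1.0, 2.0]])
is_face = np.array([True, True, False, False])

fsi = selectivity_index(responses, is_face)
selective = fsi > 1.0  # hypothetical threshold for calling a unit "face-selective"
print(fsi, selective)
```

In an untrained network, the same index would be computed from the activations of randomly initialized units to face and non-face stimulus sets, and units above the threshold would count as face-selective.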

Professor Paik said, “Our findings suggest that innate cognitive functions can emerge spontaneously from the statistical complexity embedded in the hierarchical feedforward projection circuitry, even in the complete absence of learning.”

He continued, “Our results provide a broad conceptual advance as well as advanced insight into the mechanisms underlying the development of innate functions in both biological and artificial neural networks, which may unravel the mystery of the generation and evolution of intelligence.”

This work was supported by the National Research Foundation of Korea (NRF) and by the KAIST singularity research project.

Publication:

Seungdae Baek, Min Song, Jaeson Jang, Gwangsu Kim, and Se-Bum Paik. (2021). Face detection in untrained deep neural networks. Nature Communications 12, 7328. Published December 16, 2021. Available online at https://doi.org/10.1038/s41467-021-27606-9

Profile:

Professor Se-Bum Paik

Visual System and Neural Network Laboratory

Program of Brain and Cognitive Engineering

Department of Bio and Brain Engineering

College of Engineering

KAIST

2021.12.21

A Biological Strategy Reveals How Efficient Brain Circuitry Develops Spontaneously

- A KAIST team’s mathematical modelling shows that the topographic tiling of cortical maps originates from bottom-up projections from the periphery. -

Researchers have explained how the regularly structured topographic maps in the visual cortex of the brain could arise spontaneously to efficiently process visual information. This research provides a new framework for understanding functional architectures in the visual cortex during early developmental stages.

A KAIST research team led by Professor Se-Bum Paik from the Department of Bio and Brain Engineering has demonstrated that the orthogonal organization of retinal mosaics in the periphery is mirrored onto the primary visual cortex and initiates the clustered topography of higher visual areas in the brain.

This finding sheds light on the mechanisms underlying a biological strategy by which brain circuitry efficiently tiles sensory modules. The study was published in Cell Reports on January 5.

In higher mammals, the primary visual cortex is organized into various functional maps of neural tuning, such as ocular dominance, orientation selectivity, and spatial frequency selectivity. Correlations between the topographies of different maps have been observed. This suggests that the maps are systematically organized to tile sensory modules efficiently across cortical areas.

These observations have suggested that a common principle for developing individual functional maps may exist. However, it has remained unclear how such topographical organizations could arise spontaneously in the primary visual cortex of various species.

The research team found that the orthogonal organization in the primary visual cortex of the brain originates from the spatial organization in bottom-up feedforward projections. The team showed that an orthogonal relationship among sensory modules already exists in the retinal mosaics, and that this is mirrored onto the primary visual cortex to initiate the clustered topography.

By analyzing retinal ganglion cell mosaic data from cats and monkeys, the researchers found that the ON-OFF feedforward afferents are organized into a topographic tiling, analogous to the orthogonal intersection of cortical tuning maps.

Furthermore, the team’s analysis of previously published data from cats showed that ocular dominance, orientation selectivity, and spatial frequency selectivity in the primary visual cortex are correlated with the spatial profiles of the retinal inputs. This implies that the efficient tiling of cortical domains can originate from regularly structured retinal patterns.
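One common way to quantify how two cortical maps intersect, as in the orthogonal intersection of tuning maps described above, is to compare the directions of their local gradients. Iso-contours that cross at right angles have perpendicular gradients. The minimal sketch below computes that intersection angle at every pixel. The function name and the synthetic ramp maps are assumptions for illustration, not the study's data or exact analysis. The sketch suits scalar maps such as ocular dominance. A circular variable such as preferred orientation needs special handling of its wrap-around, which is not covered here.

```python
import numpy as np

def intersection_angles(map_a, map_b):
    """Angle in degrees (0 to 90) between the gradients of two 2-D maps at each pixel.

    Assumes both gradients are nonzero everywhere.
    """
    ga_r, ga_c = np.gradient(map_a)  # derivatives along rows and columns
    gb_r, gb_c = np.gradient(map_b)
    dot = ga_r * gb_r + ga_c * gb_c
    norms = np.hypot(ga_r, ga_c) * np.hypot(gb_r, gb_c)
    # abs() folds angles into 0-90 degrees, since contour crossings have no direction
    cos = np.clip(np.abs(dot) / norms, 0.0, 1.0)
    return np.degrees(np.arccos(cos))

rows, cols = np.mgrid[0:32, 0:32].astype(float)
ramp_a = cols          # changes only from left to right
ramp_b = rows          # changes only from top to bottom
ramp_c = rows + cols   # changes along the diagonal

angles_ab = intersection_angles(ramp_a, ramp_b)  # perpendicular maps
angles_ac = intersection_angles(ramp_a, ramp_c)  # maps crossing at 45 degrees
print(angles_ab.mean(), angles_ac.mean())
```

For real maps, a histogram of these angles that is skewed toward 90 degrees would indicate the kind of orthogonal organization the article describes.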

Professor Paik said, “Our study suggests that the structure of the periphery with simple feedforward wiring can provide the basis for a mechanism by which the early visual circuitry is assembled.”

He continued, “This is the first report that spatially organized retinal inputs from the periphery provide a common blueprint for multi-modal sensory modules in the visual cortex during the early developmental stages. Our findings would make a significant impact on our understanding of the developmental strategy of brain circuitry for efficient sensory information processing.”

This work was supported by the National Research Foundation of Korea (NRF).

Image credit: Professor Se-Bum Paik, KAIST

Image usage restrictions: News organizations may use or redistribute this image, with proper attribution, as part of news coverage of this paper only.

Publication:

Song, M., et al. (2021). Projection of orthogonal tiling from the retina to the visual cortex. Cell Reports 34, 108581. Available online at https://doi.org/10.1016/j.celrep.2020.108581

Profile:

Se-Bum Paik, Ph.D.

Assistant Professor

sbpaik@kaist.ac.kr

http://vs.kaist.ac.kr/

VSNN Laboratory

Department of Bio and Brain Engineering

Program of Brain and Cognitive Engineering

http://kaist.ac.kr

Korea Advanced Institute of Science and Technology (KAIST) Daejeon, Republic of Korea

Profile:

Min Song

Ph.D. Candidate

night@kaist.ac.kr

Program of Brain and Cognitive Engineering

Profile:

Jaeson Jang, Ph.D.

Researcher

jaesonjang@kaist.ac.kr

Department of Bio and Brain Engineering, KAIST

(END)

2021.01.14

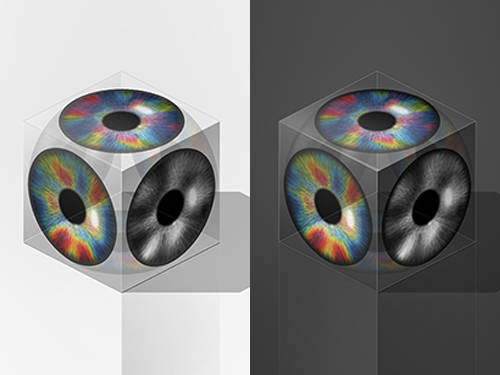

Before Eyes Open, They Get Ready to See

- Spontaneous retinal waves can generate long-range horizontal connectivity in visual cortex. -

Computational simulations by a KAIST research team demonstrated that waves of spontaneous neural activity in the retinas of mammals, generated while the eyes are still closed, can drive the development of long-range horizontal connections in the visual cortex during early developmental stages.

This finding, featured as the cover article of the August 19 issue of the Journal of Neuroscience, addresses a long-standing puzzle in visual neuroscience: how functional architectures in the mammalian visual cortex are organized before eye-opening. It focuses in particular on the long-range horizontal connectivity known as “feature-specific” circuitry.

To prepare the animal to see when its eyes open, neural circuits in the brain’s visual system must begin developing earlier. However, the proper development of many brain regions involved in vision generally requires sensory input through the eyes.

In the primary visual cortex of the higher mammalian taxa, cortical neurons of similar functional tuning to a visual feature are linked together by long-range horizontal circuits that play a crucial role in visual information processing.

Surprisingly, these long-range horizontal connections in the primary visual cortex of higher mammals emerge before the onset of sensory experience, and the mechanism underlying this phenomenon has remained elusive.

To investigate this mechanism, a group of researchers led by Professor Se-Bum Paik from the Department of Bio and Brain Engineering at KAIST ran computational simulations of early visual pathways. The simulations used data from the retinal circuits of young animals before eye-opening, including cats, monkeys, and mice.

From these simulations, the researchers found that spontaneous waves propagating in ON and OFF retinal mosaics can initialize the wiring of long-range horizontal connections by selectively co-activating cortical neurons of similar functional tuning, whereas equivalent random activities cannot induce such organizations.
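The co-activation argument can be caricatured with a Hebbian rule, in which a connection strengthens whenever the two neurons it links are active together. In the toy sketch below, each "wave" event is simply assumed to co-activate one whole group of similarly tuned neurons, which is the outcome the simulations reported. Each random event activates the same number of neurons chosen at random. The group sizes, event counts and helper names are hypothetical, and this is not the retinal-wave model used in the study.

```python
import numpy as np

rng = np.random.default_rng(0)
n_groups, group_size, n_events = 4, 10, 400
n = n_groups * group_size
tuning = np.repeat(np.arange(n_groups), group_size)  # preferred feature of each neuron
same = tuning[:, None] == tuning[None, :]            # pairs with similar tuning
off_diag = ~np.eye(n, dtype=bool)

def hebbian_weights(events):
    """Accumulate Hebbian co-activation: W_ij += r_i * r_j for every event."""
    W = np.zeros((n, n))
    for r in events:
        W += np.outer(r, r)
    return W

def wave_event():
    # Toy "wave": co-activates one whole group of similarly tuned neurons.
    r = np.zeros(n)
    r[tuning == rng.integers(n_groups)] = 1.0
    return r

def random_event():
    # Equivalent random activity: the same number of active neurons, chosen at random.
    r = np.zeros(n)
    r[rng.choice(n, size=group_size, replace=False)] = 1.0
    return r

def within_vs_between(W):
    """Mean weight between similarly tuned neurons vs. differently tuned neurons."""
    return W[same & off_diag].mean(), W[~same].mean()

W_wave = hebbian_weights(wave_event() for _ in range(n_events))
W_rand = hebbian_weights(random_event() for _ in range(n_events))
print("wave-driven  (within, between):", within_vs_between(W_wave))
print("random-driven (within, between):", within_vs_between(W_rand))
```

With wave-like events, connections between similarly tuned neurons grow while connections between differently tuned neurons stay at zero. With random events of the same size, the two end up roughly equal, so no feature-specific structure appears.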

The simulations also showed that the emerging long-range horizontal connections can induce patterned cortical activity that matches the topography of the underlying functional maps. This held even in the salt-and-pepper organizations observed in rodents. The result implies that the model developed by Professor Paik and his group may provide a universal principle for the development of long-range horizontal connections in both higher mammals and rodents.

Professor Paik said, “Our model provides a deeper understanding of how the functional architectures in the visual cortex can originate from the spatial organization of the periphery, without sensory experience during early developmental periods.”

He continued, “We believe that our findings will be of great interest to scientists working in a wide range of fields such as neuroscience, vision science, and developmental biology.”

This work was supported by the National Research Foundation of Korea (NRF). Undergraduate student Jinwoo Kim participated in this research project and presented the findings as the lead author as part of the Undergraduate Research Participation (URP) Program at KAIST.

Figures and image credit: Professor Se-Bum Paik, KAIST

Image usage restrictions: News organizations may use or redistribute these figures and image, with proper attribution, as part of news coverage of this paper only.

Publication:

Jinwoo Kim, Min Song, and Se-Bum Paik. (2020). Spontaneous retinal waves generate long-range horizontal connectivity in visual cortex. Journal of Neuroscience. Available online at https://www.jneurosci.org/content/early/2020/07/17/JNEUROSCI.0649-20.2020

Profile: Se-Bum Paik

Assistant Professor

sbpaik@kaist.ac.kr

http://vs.kaist.ac.kr/

VSNN Laboratory

Department of Bio and Brain Engineering

Program of Brain and Cognitive Engineering

http://kaist.ac.kr

Korea Advanced Institute of Science and Technology (KAIST) Daejeon, Republic of Korea

Profile: Jinwoo Kim

Undergraduate Student

bugkjw@kaist.ac.kr

Department of Bio and Brain Engineering, KAIST

Profile: Min Song

Ph.D. Candidate

night@kaist.ac.kr

Program of Brain and Cognitive Engineering, KAIST

(END)

2020.08.25

Assistant Professor

sbpaik@kaist.ac.kr

http://vs.kaist.ac.kr/

VSNN Laboratory

Department of Bio and Brain Engineering

Program of Brain and Cognitive Engineering

http://kaist.ac.kr

Korea Advanced Institute of Science and Technology (KAIST) Daejeon, Republic of Korea

Profile: Jinwoo Kim

Undergraduate Student

bugkjw@kaist.ac.kr

Department of Bio and Brain Engineering, KAIST

Profile: Min Song

Ph.D. Candidate

night@kaist.ac.kr

Program of Brain and Cognitive Engineering, KAIST

(END)

2020.08.25 View 14624 -

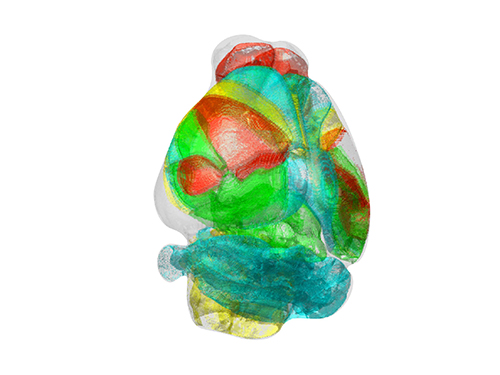

Unravelling Complex Brain Networks with Automated 3-D Neural Mapping

-Automated 3-D brain imaging data analysis technology offers more reliable and standardized analysis of the spatial organization of complex neural circuits.-

KAIST researchers developed a new algorithm for brain imaging data analysis that enables the precise and quantitative mapping of complex neural circuits onto a standardized 3-D reference atlas.

Brain imaging data analysis is indispensable in neuroscience research. However, the analysis of brain imaging data has relied heavily on manual processing, which cannot guarantee accurate, consistent, and reliable results.

Conventional brain imaging data analysis typically begins with finding a 2-D brain atlas image that is visually similar to the experimentally obtained brain image. Then, the region-of-interest (ROI) of the atlas image is matched manually with the obtained image, and the number of labeled neurons in the ROI is counted.
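The final step of this conventional workflow, counting labeled neurons inside a matched ROI, amounts to a point-in-polygon test. The sketch below is a minimal illustration that assumes the ROI is a simple 2-D polygon already aligned to the image and that neuron positions are given as (x, y) coordinates. The function names are hypothetical, and this is not code from AMaSiNe.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def point_in_polygon(p: Point, polygon: List[Point]) -> bool:
    """Ray-casting test: toggle 'inside' each time a ray cast to the right from p crosses an edge."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through p can be crossed
        if (y1 > y) != (y2 > y):
            # x-coordinate where this edge meets that horizontal line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_neurons_in_roi(neurons: List[Point], roi: List[Point]) -> int:
    """Number of labeled neuron positions falling inside the ROI polygon."""
    return sum(point_in_polygon(p, roi) for p in neurons)


if __name__ == "__main__":
    roi = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0)]        # hypothetical rectangular ROI
    neurons = [(1.0, 1.0), (3.5, 2.5), (5.0, 1.0), (2.0, -1.0)]   # hypothetical detections
    print(count_neurons_in_roi(neurons, roi))  # 2
```

The count is only as reliable as the ROI placement. If the atlas outline is matched to the wrong section or drawn at a slightly wrong position, the same arithmetic silently produces a wrong number, which is the weakness the article attributes to manual matching.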

Such a visual matching process between experimentally obtained brain images and 2-D brain atlas images has been one of the major sources of error in brain imaging data analysis, as the process is highly subjective, sample-specific, and susceptible to human error. Manual analysis processes for brain images are also laborious, and thus studying the complete 3-D neuronal organization on a whole-brain scale is a formidable task.

To address these issues, a KAIST research team led by Professor Se-Bum Paik from the Department of Bio and Brain Engineering developed new brain imaging data analysis software named 'AMaSiNe (Automated 3-D Mapping of Single Neurons)' and introduced the algorithm in the May 26 issue of Cell Reports.

AMaSiNe automatically detects the positions of single neurons in multiple brain images and accurately maps all the data onto a common standard 3-D reference space. The algorithm allows brain data from different animals to be compared directly by automatically matching similar features across the images and computing an image similarity score.
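One part of mapping detections into a shared 3-D reference space is treating each slice as a plane placed in atlas coordinates. The sketch below assumes that the slice's origin, its tilt angles, and the pixel-to-atlas scale have already been estimated. The parameterization (yaw about the atlas y-axis, then pitch about the x-axis) and the function names are illustrative assumptions, not AMaSiNe's actual interface.

```python
import numpy as np


def rotation(pitch_deg: float, yaw_deg: float) -> np.ndarray:
    """3x3 rotation: yaw about the atlas y-axis, then pitch about the x-axis."""
    p, y = np.deg2rad(pitch_deg), np.deg2rad(yaw_deg)
    rx = np.array([[1.0, 0.0, 0.0],
                   [0.0, np.cos(p), -np.sin(p)],
                   [0.0, np.sin(p), np.cos(p)]])
    ry = np.array([[np.cos(y), 0.0, np.sin(y)],
                   [0.0, 1.0, 0.0],
                   [-np.sin(y), 0.0, np.cos(y)]])
    return rx @ ry


def slice_to_atlas(points_uv, origin, pitch_deg=0.0, yaw_deg=0.0, scale=1.0):
    """Map in-plane slice coordinates (u, v) to 3-D atlas coordinates (x, y, z).

    The slice is a plane through `origin` whose in-plane axes are the first
    two columns of the rotation matrix. `scale` converts pixels to atlas units.
    """
    uv = np.asarray(points_uv, dtype=float).reshape(-1, 2) * scale
    axes = rotation(pitch_deg, yaw_deg)[:, :2]          # (3, 2): in-plane axes in atlas space
    return np.asarray(origin, dtype=float) + uv @ axes.T


if __name__ == "__main__":
    cells = [(0, 0), (10, 0), (0, 10)]                  # hypothetical detected cells, in pixels
    flat = slice_to_atlas(cells, origin=(0, 0, 50))
    tilted = slice_to_atlas(cells, origin=(0, 0, 50), pitch_deg=10)
    # The third cell's depth shifts by 10 * sin(10 deg), about 1.74 units
    print(tilted[2, 2] - flat[2, 2])
```

Because the rotation is orthonormal, distances within a slice are preserved. A tilt therefore changes only *where* in depth each cell is placed, and that change grows with the cell's distance from the slice origin.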

This feature-based quantitative image-to-image comparison technology improves the accuracy, consistency, and reliability of analysis results using only a small number of brain slice image samples, and helps standardize brain imaging data analyses.

Unlike other existing brain imaging data analysis methods, AMaSiNe can also automatically determine the alignment conditions of misaligned and distorted brain images and draw an accurate ROI without any cumbersome manual validation.
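Under strong simplifying assumptions, the automatic alignment step can be illustrated as a search over candidate slicing angles. For each candidate, an oblique plane is sampled from a reference volume and scored against the observed slice, and the best-scoring candidate is kept. The sketch below assumes a single tilt about one axis, a known slice depth, nearest-neighbour sampling, and normalized cross-correlation as the similarity score. It does not reproduce AMaSiNe's feature-based matching, and all names are hypothetical.

```python
import numpy as np


def sample_plane(volume: np.ndarray, z0: float, pitch_deg: float) -> np.ndarray:
    """Nearest-neighbour sample of a plane through depth z0, tilted about the x-axis."""
    nz, ny, nx = volume.shape
    ys, xs = np.mgrid[0:ny, 0:nx]
    # A tilted plane's depth changes linearly with distance from the centre row
    zs = z0 + (ys - ny / 2) * np.tan(np.deg2rad(pitch_deg))
    zi = np.clip(np.rint(zs).astype(int), 0, nz - 1)
    return volume[zi, ys, xs]


def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Normalized cross-correlation; 1.0 for images equal up to positive gain and offset."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def estimate_pitch(volume, image, z0, candidates):
    """Return the candidate tilt whose resampled atlas plane best matches `image`."""
    scores = [ncc(sample_plane(volume, z0, c), image) for c in candidates]
    return candidates[int(np.argmax(scores))]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    atlas = rng.random((40, 32, 32))                  # stand-in reference volume (z, y, x)
    # Synthetic "observed" slice: cut at a 10-degree tilt, plus mild noise
    observed = sample_plane(atlas, 20, 10) + 0.05 * rng.standard_normal((32, 32))
    print(estimate_pitch(atlas, observed, 20, list(range(-15, 16, 5))))  # 10
```

Exhaustive grid search keeps the example transparent. A practical tool would also search over depth, a second tilt axis, and in-plane distortion, and would likely use a coarse-to-fine search rather than a flat grid.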

AMaSiNe was further shown to produce consistent results with brain slice images prepared using various methods, including DAPI and Nissl staining and autofluorescence.

The two co-lead authors of this study, Jun Ho Song and Woochul Choi, used these advantages of AMaSiNe to investigate the topographic organization of neurons in various ROIs, such as the dorsal lateral geniculate nucleus (LGd), that project to the primary visual area (VISp). This question could hardly be addressed without proper calibration and standardization of the brain slice image samples.

In collaboration with Professor Seung-Hee Lee's group in the Department of Biological Science, the researchers observed 3-D topographic neural projections from the LGd to the VISp and also demonstrated that these projections could not be observed when the slicing angle was not properly corrected by AMaSiNe. The results suggest that precise correction of the slicing angle is essential for investigating complex brain structures.

AMaSiNe is broadly applicable to studies of various brain regions and experimental conditions. For example, in the research team’s previous study conducted jointly with Professor Yang Dan’s group at UC Berkeley, the algorithm enabled accurate analysis of neuronal subsets in the substantia nigra and their projections across the whole brain. Those findings were published in Science on January 24.

AMaSiNe is of great interest to many neuroscientists in Korea and abroad, and is being actively used by a number of other research groups at KAIST, MIT, Harvard, Caltech, and UC San Diego.

Professor Paik said, “Our new algorithm allows the spatial organization of complex neural circuits to be found in a standardized 3-D reference atlas on a whole-brain scale. This will bring brain imaging data analysis to a new level.”

He continued, “More in-depth insights for understanding the function of brain circuits can be achieved by facilitating more reliable and standardized analysis of the spatial organization of neural circuits in various regions of the brain.”

This work was supported by KAIST and the National Research Foundation of Korea (NRF).

Figure and Image Credit: Professor Se-Bum Paik, KAIST

Figure and Image Usage Restrictions: News organizations may use or redistribute these figures and images, with proper attribution, as part of news coverage of this paper only.

Publication:

Song, J. H., et al. (2020). Precise Mapping of Single Neurons by Calibrated 3D Reconstruction of Brain Slices Reveals Topographic Projection in Mouse Visual Cortex. Cell Reports. Volume 31, 107682. Available online at https://doi.org/10.1016/j.celrep.2020.107682

Profile:

Se-Bum Paik

Assistant Professor

sbpaik@kaist.ac.kr

http://vs.kaist.ac.kr/

VSNN Laboratory

Department of Bio and Brain Engineering

Program of Brain and Cognitive Engineering

http://kaist.ac.kr

Korea Advanced Institute of Science and Technology (KAIST)

Daejeon, Republic of Korea

(END)

2020.06.08 View 15167

A Single Biological Factor Predicts Distinct Cortical Organizations across Mammalian Species

-A KAIST team’s mathematical sampling model shows that retino-cortical mapping is a prime determinant in the topography of cortical organization.-

Researchers have explained how the visual cortex develops distinctly in different mammalian species. A KAIST research team led by Professor Se-Bum Paik from the Department of Bio and Brain Engineering has identified a single biological factor, the retino-cortical mapping ratio, that predicts distinct cortical organizations across mammalian species.

This new finding resolves a long-standing puzzle in visual neuroscience regarding the origin of functional architectures in the visual cortex. The study, published in Cell Reports on March 10, demonstrates that evolutionary variation in biological parameters may induce the development of distinct functional circuits in the visual cortex, even without species-specific developmental mechanisms.

In the primary visual cortex (V1) of mammals, neural tuning to visual stimulus orientation is organized into one of two distinct topographic patterns across species. While primates have columnar orientation maps, a salt-and-pepper type organization is observed in rodents.

For decades, this sharp contrast between cortical organizations has raised fundamental questions about the origin of functional architectures in V1. However, it remained unknown whether these patterns reflect disparate developmental mechanisms across mammalian taxa or simply originate from variations in biological parameters under a universal developmental process.

To identify a determinant that predicts distinct cortical organizations, Professor Paik and his researchers Jaeson Jang and Min Song examined the conditions that generate columnar and salt-and-pepper organizations. They then applied a mathematical model to investigate how the topographic information of the underlying retinal mosaic pattern could be mapped differently onto cortical space, depending on the mapping condition.

The research team showed that the retino-cortical feedforward mapping ratio correlates with the cortical organization of each species. In model simulations, the team found that distinct cortical circuitries can arise from different V1 areas and retinal ganglion cell (RGC) mosaic sizes. The team’s mathematical sampling model shows that retino-cortical mapping is a prime determinant of the topography of cortical organization, and this prediction was confirmed by analyzing neural parameters from eight phylogenetically distinct mammalian species.

Furthermore, the researchers showed that the Nyquist sampling theorem explains this parametric division of cortical organization with high accuracy. Their mathematical model predicts that the organization of cortical orientation tuning makes a sharp transition around the Nyquist sampling frequency. This explains why cortical organization is observed as either columnar or salt-and-pepper, but not as an intermediate between the two.
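The Nyquist criterion states that a periodic pattern of frequency f is captured faithfully only when the sampling rate exceeds 2f. Below that rate, the pattern is misread as a lower-frequency alias. The generic 1-D signal-processing sketch below shows this switch. Treating "pattern frequency" as the periodicity of the retinal mosaic pattern and "sampling rate" as cortical sampling density is an illustrative analogy only, not the model from the paper.

```python
import numpy as np


def apparent_frequency(f_signal: float, f_sample: float, n: int = 1024) -> float:
    """Frequency of the strongest spectral peak in n uniform samples of a cosine."""
    t = np.arange(n) / f_sample                 # sample positions
    x = np.cos(2 * np.pi * f_signal * t)
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(n, d=1.0 / f_sample)
    return float(freqs[np.argmax(spectrum)])


if __name__ == "__main__":
    f = 1.0  # pattern frequency (cycles per unit length)
    for fs in (1.2, 1.6, 1.9, 2.1, 2.5, 3.0):
        # For f < fs < 2f the recovered frequency is the alias |f - fs|, not f
        print(f"fs={fs:.1f}  apparent={apparent_frequency(f, fs):.3f}")
```

The output does not degrade gradually. Above 2f, the recovered frequency stays at f. Below 2f, it jumps to the alias. This all-or-nothing behaviour is the kind of sharp transition that the text describes.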

Professor Paik said, “Our findings make a significant impact for understanding the origin of functional architectures in the visual cortex of the brain, and will provide a broad conceptual advancement as well as advanced insights into the mechanism underlying neural development in evolutionarily divergent species.”

He continued, “We believe that our findings will be of great interest to scientists working in a wide range of fields such as neuroscience, vision science, and developmental biology.”

This work was supported by the National Research Foundation of Korea (NRF).

Image credit: Professor Se-Bum Paik, KAIST

Image usage restrictions: News organizations may use or redistribute this image, with proper attribution, as part of news coverage of this paper only.

Publication:

Jaeson Jang, Min Song, and Se-Bum Paik. (2020). Retino-cortical mapping ratio predicts columnar and salt-and-pepper organization in mammalian visual cortex. Cell Reports. Volume 30. Issue 10. pp. 3270-3279. Available online at https://doi.org/10.1016/j.celrep.2020.02.038

Profile:

Se-Bum Paik

Assistant Professor

sbpaik@kaist.ac.kr

http://vs.kaist.ac.kr/

VSNN Laboratory

Department of Bio and Brain Engineering

Program of Brain and Cognitive Engineering

http://kaist.ac.kr

Korea Advanced Institute of Science and Technology (KAIST)

Daejeon, Republic of Korea

Profile:

Jaeson Jang

Ph.D. Candidate

jaesonjang@kaist.ac.kr

Department of Bio and Brain Engineering, KAIST

Profile:

Min Song

Ph.D. Candidate

night@kaist.ac.kr

Program of Brain and Cognitive Engineering, KAIST

(END)

2020.03.11 View 15584
