
KAIST & LG U+ Team Up for Quantum Computing Solution for Ultra-Space 6G Satellite Networking

KAIST quantum computer scientists have optimized ultra-space 6G Low-Earth Orbit (LEO) satellite networking, finding the shortest path for transferring data from one city to another via multi-satellite hops.

The research team led by Professor June-Koo Kevin Rhee and Professor Dongsu Han in partnership with LG U+ verified the possibility of ultra-performance and precision communication with satellite networks using D-Wave, the first commercialized quantum computer.

Satellite network optimization has remained challenging because the network must be reconfigured whenever satellites come within connection range of one another in three-dimensional space. Moreover, LEO satellites, which orbit 200 to 2,000 km above the Earth, change their positions dynamically, whereas Geo-Stationary Orbit (GSO) satellites remain fixed relative to the ground. Thus, LEO satellite network optimization needs to be solved in real time.

The research groups formulated the task as a Quadratic Unconstrained Binary Optimization (QUBO) problem and solved it, incorporating the connectivity and link distance limits as constraints.
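As a rough illustration of what such a formulation can look like, the sketch below encodes a shortest-path search over a tiny, made-up satellite graph as a QUBO. It is not the team's actual formulation: the link table, `MAX_LINK_KM`, and `PENALTY` are hypothetical values, and an exhaustive search stands in for the quantum annealer. Each link gets one binary variable, links beyond the assumed range are dropped, and a squared flow-conservation penalty forces the selected links to form a single route from source to target.

```python
from itertools import product

# Hypothetical inter-satellite links: (from, to, distance_km). Illustrative only.
LINKS = [
    ("S", "A", 900), ("S", "B", 1700), ("A", "B", 600),
    ("A", "T", 2400), ("B", "T", 1000), ("S", "T", 5200),
]
MAX_LINK_KM = 5000  # assumed link-distance limit
PENALTY = 10_000    # assumed weight; must exceed any feasible path length


def build_qubo(links, source, target, max_km, penalty):
    """Return (edges, Q); Q[(i, j)] holds QUBO coefficients over link variables."""
    edges = [e for e in links if e[2] <= max_km]  # drop out-of-range links
    nodes = {n for u, v, _ in edges for n in (u, v)}
    Q = {}

    def add(i, j, w):
        key = (min(i, j), max(i, j))
        Q[key] = Q.get(key, 0) + w

    # Objective: total length of the selected links.
    for i, (_, _, d) in enumerate(edges):
        add(i, i, d)

    # Constraint per node: (outgoing - incoming - b)^2, with b = +1 at the
    # source, -1 at the target, 0 elsewhere. Expanded using x*x == x; the
    # constant b^2 term is dropped because it does not affect the argmin.
    for n in nodes:
        b = 1 if n == source else -1 if n == target else 0
        coef = {}
        for i, (u, v, _) in enumerate(edges):
            if u == n:
                coef[i] = coef.get(i, 0) + 1
            if v == n:
                coef[i] = coef.get(i, 0) - 1
        items = sorted(coef.items())
        for a, (i, ci) in enumerate(items):
            add(i, i, penalty * (ci * ci - 2 * b * ci))
            for j, cj in items[a + 1:]:
                add(i, j, penalty * 2 * ci * cj)
    return edges, Q


def solve_brute_force(n, Q):
    """Exhaustive minimizer; a stand-in for an annealer on this tiny instance."""
    best = None
    for bits in product((0, 1), repeat=n):
        energy = sum(w * bits[i] * bits[j] for (i, j), w in Q.items())
        if best is None or energy < best[0]:
            best = (energy, bits)
    return best


def shortest_path(links=LINKS, source="S", target="T"):
    """Build the QUBO, minimize it, and decode the selected links into a route."""
    edges, Q = build_qubo(links, source, target, MAX_LINK_KM, PENALTY)
    _, bits = solve_brute_force(len(edges), Q)
    chosen = {u: (v, d) for (u, v, d), bit in zip(edges, bits) if bit}
    path, total, node = [source], 0, source
    while node != target:
        node, d = chosen[node]
        path.append(node)
        total += d
    return path, total
```

With these illustrative numbers, `shortest_path()` returns the route `["S", "A", "B", "T"]` with a total length of 2500 km; the direct S to T link is excluded because it exceeds the assumed range limit. On an annealer, the same `Q` dictionary would be handed to the sampler instead of `solve_brute_force`.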

The proposed optimization algorithm is reported to be much more efficient in terms of hop counts and path length than the classical solutions reported in previous studies. These results verify that a satellite network can provide ultra-performance (over 1 Gbps user-perceived speed) and ultra-precision (less than 5 ms end-to-end latency) network services, which are comparable to terrestrial communication.

Once QUBO is applied, “ultra-space networking” is expected to be realized with 6G. Researchers said that an ultra-space network provides communication services for objects moving at altitudes of up to 10 km at extreme speeds (about 1,000 km/h). Optimized LEO satellite networks can provide 6G communication services in places that currently lack coverage, such as aircraft in flight and deserts.

Professor Rhee, who is also the CEO of Qunova Computing, noted, “Collaboration with LG U+ was meaningful as we were able to find an industrial application for a quantum computer. We look forward to more quantum application research on real problems such as in communications, drug and material discovery, logistics, and fintech industries.”

2022.06.17 View 11126


Neuromorphic Memory Device Simulates Neurons and Synapses

Simultaneous emulation of neuronal and synaptic properties promotes the development of brain-like artificial intelligence

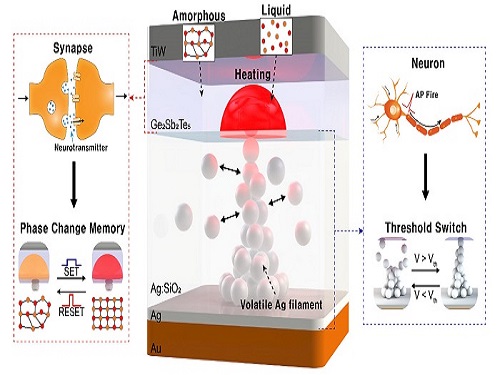

Researchers have reported a nano-sized neuromorphic memory device that emulates neurons and synapses simultaneously in a unit cell, another step toward completing the goal of neuromorphic computing designed to rigorously mimic the human brain with semiconductor devices.

Neuromorphic computing aims to realize artificial intelligence (AI) by mimicking the mechanisms of neurons and synapses that make up the human brain. Inspired by the cognitive functions of the human brain that current computers cannot provide, neuromorphic devices have been widely investigated. However, current Complementary Metal-Oxide Semiconductor (CMOS)-based neuromorphic circuits simply connect artificial neurons and synapses without synergistic interactions, and the concomitant implementation of neurons and synapses still remains a challenge. To address these issues, a research team led by Professor Keon Jae Lee from the Department of Materials Science and Engineering implemented the biological working mechanisms of humans by introducing the neuron-synapse interactions in a single memory cell, rather than the conventional approach of electrically connecting artificial neuronal and synaptic devices.

Like commercial graphics cards, previously studied artificial synaptic devices were often used to accelerate parallel computations, which differs clearly from the operational mechanisms of the human brain. The research team implemented the synergistic interactions between neurons and synapses in the neuromorphic memory device, emulating the mechanisms of the biological neural network. In addition, the developed neuromorphic device can replace complex CMOS neuron circuits with a single device, providing high scalability and cost efficiency.

The human brain consists of a complex network of 100 billion neurons and 100 trillion synapses. The functions and structures of neurons and synapses can flexibly change according to the external stimuli, adapting to the surrounding environment. The research team developed a neuromorphic device in which short-term and long-term memories coexist using volatile and non-volatile memory devices that mimic the characteristics of neurons and synapses, respectively. A threshold switch device is used as volatile memory and phase-change memory is used as a non-volatile device. Two thin-film devices are integrated without intermediate electrodes, implementing the functional adaptability of neurons and synapses in the neuromorphic memory.

Professor Keon Jae Lee explained, “Neurons and synapses interact with each other to establish cognitive functions such as memory and learning, so simulating both is an essential element for brain-inspired artificial intelligence. The developed neuromorphic memory device also mimics the retraining effect that allows quick learning of the forgotten information by implementing a positive feedback effect between neurons and synapses.”

This result entitled “Simultaneous emulation of synaptic and intrinsic plasticity using a memristive synapse” was published in the May 19, 2022 issue of Nature Communications.

-Publication: Sang Hyun Sung, Tae Jin Kim, Hyera Shin, Tae Hong Im, and Keon Jae Lee (2022) “Simultaneous emulation of synaptic and intrinsic plasticity using a memristive synapse,” Nature Communications, May 19, 2022 (DOI: 10.1038/s41467-022-30432-2)

-Profile: Professor Keon Jae Lee (http://fand.kaist.ac.kr)

Department of Materials Science and Engineering, KAIST

2022.05.20 View 16225


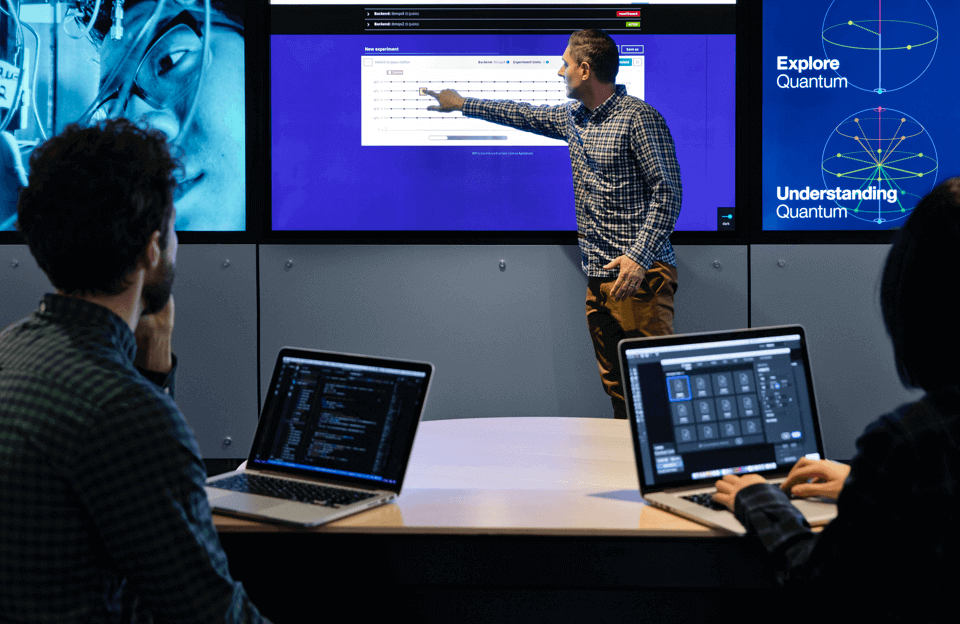

Quantum Technology: the Next Game Changer?

The 6th KAIST Global Strategy Institute Forum explores how quantum technology has evolved into a new growth engine for the future

The participants of the 6th KAIST Global Strategy Institute (GSI) Forum on April 20 agreed that the emerging technology of quantum computing will be a game changer of the future. As KAIST President Kwang Hyung Lee said in his opening remarks, the future is quantum and that future is rapidly approaching. Keynote speakers and panelists presented their insights on the disruptive innovations we are already experiencing.

The three keynote speakers included Dr. Jerry M. Chow, IBM fellow and director of quantum infrastructure, Professor John Preskill from Caltech, and Professor Jungsang Kim from Duke University. They discussed the academic impact and industrial applications of quantum technology, and its prospects for the future.

Dr. Chow leads IBM Quantum’s hardware system development efforts, focusing on research and system deployment. Professor Preskill is one of the leading quantum information science and quantum computation scholars. He coined the term “quantum supremacy.” Professor Kim is the co-founder and CTO of IonQ Inc., which develops general-purpose trapped ion quantum computers and software to generate, optimize, and execute quantum circuits.

Two leading quantum scholars from KAIST, Professor June-Koo Kevin Rhee and Professor Youngik Sohn, and Professor Andreas Heinrich from the IBS Center for Quantum Nanoscience also participated in the forum as panelists. Professor Rhee is the founder of the nation’s first quantum computing software company and leads the AI Quantum Computing IT Research Center at KAIST.

During the panel session, Professor Rhee said that although it is undeniable that quantum computing will be a game changer, several challenges remain. For instance, the first actual quantum computers belong to the noisy intermediate-scale quantum (NISQ) era and are still incomplete. Quantum computers are nevertheless expected to outperform supercomputers. Until then, it is important to improve the accuracy of quantum computation in order to deliver superior computation speeds.

Professor Sohn, who worked at PsiQuantum, detailed how quantum computers will affect our society. He said that PsiQuantum is developing silicon photonics that will control photons, and that we cannot yet begin to imagine how silicon photonics will transform our society. Things will change slowly, but the scale will be massive.

The keynote speakers presented how quantum cryptographic communication and quantum sensing technology will serve as disruptive innovations. Dr. Chow stressed that additional R&D is needed to realize this potential for growth and innovation, and that more research groups and scholars should be nurtured. Only then will the rich R&D resources be able to create breakthroughs in quantum-related industries. Lastly, the commercialization of quantum computing must be advanced, which will enable the provision of diverse services. In addition, more technological and industrial infrastructure must be built to better accommodate quantum computing.

Professor Preskill believes that quantum computing will benefit humanity. He cited two basic reasons for his optimistic prediction: quantum complexity and quantum error correction. He explained why quantum computing is so powerful: a quantum computer can easily solve problems that are considered classically difficult, such as factorization. In addition, a quantum computer has the potential to efficiently simulate all of the physical processes taking place in nature.

Despite such dramatic advantages, why does it seem so difficult? Professor Preskill believes this is because we want qubits (quantum bits, the basic units of quantum information) to interact very strongly with each other. At the same time, because computations can fail, we don’t want qubits to interact with the environment unless we can control and predict those interactions.

As for quantum computing in the NISQ era, he said that NISQ will be an exciting tool for exploring physics. Professor Preskill does not believe that NISQ alone will change the world; rather, it is a step toward more powerful quantum technologies in the future. He added that a potentially transformative, scalable quantum computer could still be decades away.

Professor Preskill said that a large number of qubits, higher accuracy, and better quality will require a significant investment. He said if we expand with better ideas, we can make a better system. In the longer term, quantum technology will bring significant benefits to the technological sectors and society in the fields of materials, physics, chemistry, and energy production.

Professor Kim from Duke University presented on the practical applications of quantum computing, especially in the startup environment. He said that although there is no right answer for the early applications of quantum computing, developing new approaches to solve difficult problems and raising the accessibility of the technology will be important for ensuring the growth of technology-based startups.

2022.04.21 View 14023


Professor June-Koo Rhee’s Team Wins the QHack Open Hackathon Science Challenge

The research team of three master’s students, Ju-Young Ryu, Jeung-rak Lee, and Eyel Elala, from Professor June-Koo Rhee’s group at the KAIST ITRC of Quantum Computing for AI won first place in the QHack 2022 Open Hackathon Science Challenge.

The QHack 2022 Open Hackathon is one of the world’s most prestigious quantum software hackathons. Hosted by Xanadu, it drew 250 participants from 100 countries. Major sponsors such as IBM Quantum, AWS, CERN QTI, and Google Quantum AI proposed challenging problems, and a winning team was selected for each of the 13 challenges based on its project.

The KAIST team supervised by Professor Rhee received the first-place prize in the Science Challenge, which was organized by CERN QTI, CERN’s Quantum Technology Initiative. The team will be awarded a one-week tour of CERN’s research lab in Europe along with an online internship.

The students on the team presented a method for “Learning-Based Error Mitigation for VQE,” in which they implemented a learning-based error mitigation (LBEM) protocol to reduce errors in quantum computation and applied the protocol to the variational quantum eigensolver (VQE) algorithm, which is used to calculate the ground-state energy of a given molecule.
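The general idea of learning an error correction and applying it inside a VQE loop can be sketched on a one-qubit toy problem. This is not the team's LBEM protocol: a plain linear regression is swapped in for the learning step, the Hamiltonian `H = Z + 0.5*X` and the shrink-and-shift noise model are assumptions chosen for illustration, and a grid search replaces the classical optimizer. The correction is fitted on a few training angles whose ideal values are cheap to compute classically, then applied to the noisy energy at every candidate angle.

```python
import math


def ideal_energy(theta):
    """<H> for H = Z + 0.5*X on Ry(theta)|0>: <Z> = cos(theta), <X> = sin(theta)."""
    return math.cos(theta) + 0.5 * math.sin(theta)


def noisy_energy(theta, damping=0.85, bias=0.03):
    """Assumed toy error model: the expectation value is shrunk and shifted."""
    return damping * ideal_energy(theta) + bias


def fit_linear(xs, ys):
    """Ordinary least squares for y ~ a*x + b."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, my - a * mx


# Training set: angles at multiples of pi/2, where the ideal value is easy
# to obtain classically (a hypothetical stand-in for training circuits).
TRAIN_ANGLES = [k * math.pi / 2 for k in range(4)]
A, B = fit_linear(
    [noisy_energy(t) for t in TRAIN_ANGLES],
    [ideal_energy(t) for t in TRAIN_ANGLES],
)


def mitigated_energy(theta):
    """Apply the learned linear correction to a noisy measurement."""
    return A * noisy_energy(theta) + B


def vqe_minimum(energy_fn, steps=3600):
    """Grid search over theta; returns (best_theta, best_energy)."""
    thetas = [2 * math.pi * k / steps for k in range(steps)]
    best = min(thetas, key=energy_fn)
    return best, energy_fn(best)
```

In this toy setting the exact ground-state energy is `-math.sqrt(1.25)`. Calling `vqe_minimum(noisy_energy)` lands well above it, while `vqe_minimum(mitigated_energy)` recovers it closely, because the assumed noise is exactly linear. Real hardware noise is not, which is why more elaborate learned protocols are needed in practice.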

Their research demonstrated effective error mitigation on both IBM Quantum hardware and a virtual error model. In a related development, Professor June-Koo (Kevin) Rhee founded a quantum computing start-up, Qunova Computing (https://qunovacomputing.com), with technology transferred from the KAIST ITRC of Quantum Computing for AI. Qunova Computing is at the frontier of the quantum software industry in Korea.

2022.04.08 View 8200


Professor Sung-Ju Lee’s Team Wins the Best Paper and the Methods Recognition Awards at the ACM CSCW

A research team led by Professor Sung-Ju Lee at the School of Electrical Engineering won the Best Paper Award and the Methods Recognition Award from ACM CSCW (International Conference on Computer-Supported Cooperative Work and Social Computing) 2021 for their paper “Reflect, not Regret: Understanding Regretful Smartphone Use with App Feature-Level Analysis”.

Founded in 1986, CSCW is a premier conference on Human-Computer Interaction (HCI) and Social Computing. This year, 340 full papers were presented, and Best Paper Awards were given to the top 1% of submitted papers. Methods Recognition, which is a new award, is given “for strong examples of work that includes well developed, explained, or implemented methods, and methodological innovation.”

Hyunsung Cho (KAIST alumnus and currently a PhD candidate at Carnegie Mellon University), Daeun Choi (KAIST undergraduate researcher), Donghwi Kim (KAIST PhD candidate), Wan Ju Kang (KAIST PhD candidate), and Professor Eun Kyoung Choe (University of Maryland and KAIST alumna) collaborated on this research.

The authors developed a tool that tracks and analyzes which features of a mobile app (e.g., Instagram’s following post, following story, recommended post, post upload, direct messaging, etc.) are in use based on a smartphone’s User Interface (UI) layout. Utilizing this novel method, the authors revealed which feature usage patterns result in regretful smartphone use.

Professor Lee said, “Although many people enjoy the benefits of smartphones, issues have emerged from the overuse of smartphones. With this feature-level analysis, users can reflect on their smartphone usage based on finer-grained analysis, and this could contribute to digital wellbeing.”

2021.11.22 View 9634


Quantum Emitters: Beyond Crystal Clear to Single-Photon Pure

‘Nanoscale Focus Pinspot’ can quench only the background noise without changing the optical properties of the quantum emitter and the built-in photonic structure

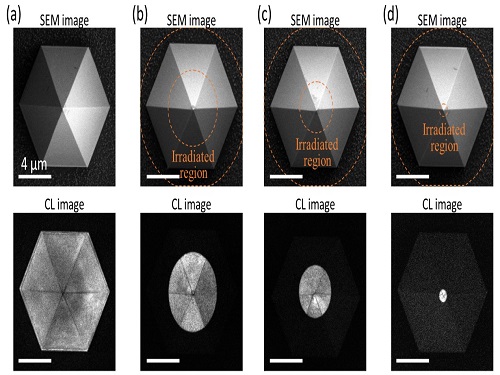

Photons, fundamental particles of light, are carrying these words to your eyes via the light from your computer screen or phone. Photons play a key role in the next-generation quantum information technology, such as quantum computing and communications. A quantum emitter, capable of producing a single, pure photon, is the crux of such technology but has many issues that have yet to be solved, according to KAIST researchers.

A research team under Professor Yong-Hoon Cho has developed a technique that can isolate the desired quality emitter by reducing the noise surrounding the target with what they have dubbed a ‘nanoscale focus pinspot.’ They published their results on June 24 in ACS Nano.

“The nanoscale focus pinspot is a structurally nondestructive technique under an extremely low dose ion beam and is generally applicable for various platforms to improve their single-photon purity while retaining the integrated photonic structures,” said lead author Yong-Hoon Cho from the Department of Physics at KAIST.

To produce single photons from solid state materials, the researchers used wide-bandgap semiconductor quantum dots — fabricated nanoparticles with specialized potential properties, such as the ability to directly inject current into a small chip and to operate at room temperature for practical applications. By making a quantum dot in a photonic structure that propagates light, and then irradiating it with helium ions, researchers theorized that they could develop a quantum emitter that could reduce the unwanted noisy background and produce a single, pure photon on demand.

Professor Cho explained, “Despite its high resolution and versatility, a focused ion beam typically suppresses the optical properties around the bombarded area due to the accelerated ion beam’s high momentum. We focused on the fact that, if the focused ion beam is well controlled, only the background noise can be selectively quenched with high spatial resolution without destroying the structure.”

In other words, the researchers focused the ion beam on a mere pin prick, effectively cutting off the interactions around the quantum dot and removing the physical properties that could negatively interact with and degrade the photon purity emitted from the quantum dot.

“It is the first developed technique that can quench the background noise without changing the optical properties of the quantum emitter and the built-in photonic structure,” Professor Cho asserted.

Professor Cho compared it to stimulated emission depletion microscopy, a technique that dims the light around the area of focus while leaving the focal point illuminated. The result is increased resolution of the desired visual target.

“By adjusting the focused ion beam-irradiated region, we can select the target emitter with nanoscale resolution by quenching the surrounding emitter,” Professor Cho said. “This nanoscale selective-quenching technique can be applied to various material and structural platforms and further extended for applications such as optical memory and high-resolution micro displays.”

Korea’s National Research Foundation and the Samsung Science and Technology Foundation supported this work.

-Publication

Minho Choi, Seongmoon Jun, and Yong-Hoon Cho et al. ‘Nanoscale Focus Pinspot for High-Purity Quantum Emitters via Focused-Ion-Beam-Induced Luminescence Quenching,’ ACS Nano (https://pubs.acs.org/doi/10.1021/acsnano.1c00587)

-Profile

Professor Yong-Hoon Cho

Quantum & Nanobio Photonics Laboratory

http://qnp.kaist.ac.kr/

Department of Physics

KAIST

2021.09.02 View 12841


KAIST Joins IBM Q Network to Accelerate Quantum Computing Research and Foster Quantum Industry

KAIST has joined the IBM Q Network, a community of Fortune 500 companies, academic institutions, startups, and research labs working with IBM to advance quantum computing for business and science.

As the IBM Q Network’s first academic partner in Korea, KAIST will use IBM's advanced quantum computing systems to carry out research projects that advance quantum information science and explore early applications. KAIST will also utilize IBM Quantum resources for talent training and education in preparation for building a quantum workforce for the quantum computing era that will bring huge changes to science and business. By joining the network, KAIST will take a leading role in fostering the ecosystem of quantum computing in Korea, which is expected to be a necessary enabler to realize the Fourth Industrial Revolution.

Professor June-Koo Rhee, who also serves as Director of the KAIST Information Technology Research Center (ITRC) of Quantum Computing for AI, led the agreement on KAIST’s joining the IBM Q Network. Director Rhee described quantum computing as “a new technology that can calculate mathematical challenges at very high speed and low power” and also as “one that will change the future.”

Director Rhee said, “Korea started investment in quantum computing relatively late, and thus needs to take bold steps with innovative R&D strategies to pave the roadmap for the next technological leap in the field.” With KAIST joining the IBM Q Network, “Korea will be better equipped to establish a quantum industry, an important foundation for securing national competitiveness,” he added.

The KAIST ITRC of Quantum Computing for AI has been using the publicly available IBM Quantum Experience, delivered over the IBM Cloud, for research, development, and training in areas such as quantum artificial intelligence, quantum chemical calculation, and quantum computing education.

KAIST will have access to the most advanced IBM Quantum systems to explore practical research and experiments such as diagnosis of diseases based on quantum artificial intelligence, quantum computational chemistry, and quantum machine learning technology. In addition, knowledge exchanges and sharing with overseas universities and companies under the IBM Q Network will help KAIST strengthen the global presence of Korean technology in quantum computing.

About IBM Quantum

IBM Quantum is an industry-first initiative to build quantum systems for business and science applications. For more information about IBM's quantum computing efforts, please visit www.ibm.com/ibmq.

For more information about the IBM Q Network, as well as a full list of all partners, members, and hubs, visit https://www.research.ibm.com/ibm-q/network/

©Thumbnail Image: IBM.

(END)

2020.09.29 View 10236


Quantum Classifiers with Tailored Quantum Kernel

Quantum information scientists have introduced a new method for machine learning classifications in quantum computing. The non-linear quantum kernels in a quantum binary classifier provide new insights for improving the accuracy of quantum machine learning, deemed able to outperform the current AI technology.

The research team led by Professor June-Koo Kevin Rhee from the School of Electrical Engineering proposed a quantum classifier based on quantum state fidelity by using a different initial state and replacing the Hadamard classification with a swap test. Unlike the conventional approach, this method is expected to significantly improve classification when the training dataset is small, by exploiting the quantum advantage in finding non-linear features in a large feature space.

Quantum machine learning holds promise as one of the imperative applications for quantum computing. In machine learning, one fundamental problem for a wide range of applications is classification, a task needed for recognizing patterns in labeled training data in order to assign a label to new, previously unseen data; and the kernel method has been an invaluable classification tool for identifying non-linear relationships in complex data.

More recently, the kernel method has been introduced in quantum machine learning with great success. The ability of quantum computers to efficiently access and manipulate data in the quantum feature space can open opportunities for quantum techniques to enhance various existing machine learning methods.

The idea of the classification algorithm with a nonlinear kernel is that given a quantum test state, the protocol calculates the weighted power sum of the fidelities of quantum data in quantum parallel via a swap-test circuit followed by two single-qubit measurements (see Figure 1). This requires only a small number of quantum data operations regardless of the size of data. The novelty of this approach lies in the fact that labeled training data can be densely packed into a quantum state and then compared to the test data.
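As a rough classical illustration of the quantity described above, the sketch below computes a weighted power sum of state fidelities with NumPy and takes its sign as the predicted label. It is a simulation under stated assumptions, not the team's circuit: the function names, the uniform weights, and the single-qubit example states are all hypothetical. The only physics it relies on is the standard swap-test relation, in which the ancilla is measured in |0> with probability (1 + F)/2 for fidelity F.

```python
import numpy as np

def fidelity(a, b):
    """State fidelity |<a|b>|^2 of two normalized pure-state vectors."""
    return abs(np.vdot(a, b)) ** 2

def swap_test_p0(a, b):
    """Probability that a swap-test ancilla is measured in |0>: (1 + F) / 2."""
    return 0.5 * (1.0 + fidelity(a, b))

def classify(test, train_states, labels, weights, power=1):
    """Return (label, score), where score is the weighted power sum
    sum_m w_m * y_m * F(test, x_m)**power and labels y_m are +1 or -1.
    The `power` argument is an illustrative stand-in for the tailored kernel."""
    score = sum(w * y * fidelity(test, x) ** power
                for w, y, x in zip(weights, labels, train_states))
    return (1 if score >= 0 else -1), score

# Hypothetical single-qubit example: |0> is labeled +1 and |1> is labeled -1.
zero = np.array([1.0, 0.0], dtype=complex)
one = np.array([0.0, 1.0], dtype=complex)
theta = np.pi / 8
test_state = np.array([np.cos(theta), np.sin(theta)], dtype=complex)

label, score = classify(test_state, [zero, one], [+1, -1], [0.5, 0.5])
```

Here the test state lies closer to |0>, so the score is positive and the predicted label is +1. Raising `power` sharpens the kernel, which is the sense in which a power sum of fidelities gives a non-linear decision function.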

The KAIST team, in collaboration with researchers from the University of KwaZulu-Natal (UKZN) in South Africa and Data Cybernetics in Germany, has further advanced the rapidly evolving field of quantum machine learning by introducing quantum classifiers with tailored quantum kernels. This study was reported in npj Quantum Information in May.

The input data is either represented by classical data via a quantum feature map or intrinsic quantum data, and the classification is based on the kernel function that measures the closeness of the test data to training data.

Dr. Daniel Park at KAIST, one of the lead authors of this research, said that the quantum kernel can be tailored systematically to an arbitrary power sum, which makes it an excellent candidate for real-world applications.

Professor Rhee said that quantum forking, a technique that was invented by the team previously, makes it possible to start the protocol from scratch, even when all the labeled training data and the test data are independently encoded in separate qubits.

Professor Francesco Petruccione from UKZN explained, “The state fidelity of two quantum states includes the imaginary parts of the probability amplitudes, which enables use of the full quantum feature space.”

To demonstrate the usefulness of the classification protocol, Carsten Blank from Data Cybernetics implemented the classifier and compared classical simulations with runs on the five-qubit IBM quantum computer that is freely available to public users via a cloud service. “This is a promising sign that the field is progressing,” Blank noted.

Link to download the full-text paper:

https://www.nature.com/articles/s41534-020-0272-6

-Profile

Professor June-Koo Kevin Rhee

rhee.jk@kaist.ac.kr

Professor, School of Electrical Engineering

Director, ITRC of Quantum Computing for AI

KAIST

Daniel Kyungdeock Park

kpark10@kaist.ac.kr

Research Assistant Professor

School of Electrical Engineering

KAIST

2020.07.07 View 14213


A Deep-Learned E-Skin Decodes Complex Human Motion

A deep-learning powered single-strained electronic skin sensor can capture human motion from a distance. The single strain sensor placed on the wrist decodes complex five-finger motions in real time with a virtual 3D hand that mirrors the original motions. The deep neural network boosted by rapid situation learning (RSL) ensures stable operation regardless of its position on the surface of the skin.

Conventional approaches require many sensor networks that cover the entire curvilinear surfaces of the target area. Unlike conventional wafer-based fabrication, this laser fabrication provides a new sensing paradigm for motion tracking.

The research team, led by Professor Sungho Jo from the School of Computing, collaborated with Professor Seunghwan Ko from Seoul National University to design this new measuring system that extracts signals corresponding to multiple finger motions by generating cracks in metal nanoparticle films using laser technology. The sensor patch was then attached to a user’s wrist to detect the movement of the fingers.

The concept of this research started from the idea that pinpointing a single area would be more efficient for identifying movements than affixing sensors to every joint and muscle. To make this targeting strategy work, the system needs to accurately capture the signals from different areas at the point where they all converge, and then decouple the information entangled in the converged signals. To maximize usability and mobility for users, the research team used a single-channeled sensor to generate the signals corresponding to complex hand motions.

The rapid situation learning (RSL) system collects data from arbitrary parts on the wrist and automatically trains the model in a real-time demonstration with a virtual 3D hand that mirrors the original motions. To enhance the sensitivity of the sensor, researchers used laser-induced nanoscale cracking.

This sensory system can track the motion of the entire body with a small sensory network and facilitate the indirect remote measurement of human motions, which is applicable for wearable VR/AR systems.

The research team said they focused on two tasks while developing the sensor. First, they encoded the sensor signal patterns into a latent space encapsulating temporal sensor behavior. Second, they mapped the latent vectors to finger motion metric spaces.

Professor Jo said, “Our system is expandable to other body parts. We already confirmed that the sensor is also capable of extracting gait motions from a pelvis. This technology is expected to provide a turning point in health-monitoring, motion tracking, and soft robotics.”

This study was featured in Nature Communications.

Publication:

Kim, K. K., et al. (2020) A deep-learned skin sensor decoding the epicentral human motions. Nature Communications. 11. 2149. https://doi.org/10.1038/s41467-020-16040-y

Link to download the full-text paper:

https://www.nature.com/articles/s41467-020-16040-y.pdf

Profile: Professor Sungho Jo

shjo@kaist.ac.kr

http://nmail.kaist.ac.kr

Neuro-Machine Augmented Intelligence Lab

School of Computing

College of Engineering

KAIST

2020.06.10 View 14460


Professor Dongsu Han Named Program Chair for ACM CoNEXT 2020

Professor Dongsu Han from the School of Electrical Engineering has been appointed as the program chair for the 16th Association for Computing Machinery’s International Conference on emerging Networking EXperiments and Technologies (ACM CoNEXT 2020). Professor Han is the first program chair to be appointed from an Asian institution.

ACM CoNEXT is hosted by ACM SIGCOMM, ACM's Special Interest Group on Data Communications, which specializes in the field of communication and computer networks.

Professor Han will serve as program co-chair along with Professor Anja Feldmann from the Max Planck Institute for Informatics. Together, they have appointed 40 world-leading researchers as program committee members for this conference, including Professor Song Min Kim from KAIST School of Electrical Engineering.

Paper submissions for the conference can be made by the end of June, and the event itself is to take place from the 1st to 4th of December.

Conference Website: https://conferences2.sigcomm.org/co-next/2020/#!/home

(END)

2020.06.02 View 12851


AI to Determine When to Intervene with Your Driving

(Professor Uichin Lee (left) and PhD candidate Auk Kim)

Can your AI agent judge when to talk to you while you are driving? According to a KAIST research team, their in-vehicle conversation service technology will judge when it is appropriate to contact you to ensure your safety.

Professor Uichin Lee from the Department of Industrial and Systems Engineering at KAIST and his research team have developed AI technology that automatically detects safe moments for AI agents to provide conversation services to drivers.

Their research focuses on solving the potential problems of distraction created by in-vehicle conversation services. If an AI agent talks to a driver at an inopportune moment, such as while making a turn, a car accident will be more likely to occur.

In-vehicle conversation services need to be convenient as well as safe. However, the cognitive burden of multitasking negatively influences the quality of the service. Users tend to be more distracted during certain traffic conditions. To address this long-standing challenge of the in-vehicle conversation services, the team introduced a composite cognitive model that considers both safe driving and auditory-verbal service performance and used a machine-learning model for all collected data.

The combination of these individual measures is able to determine the appropriate moments for conversation and most appropriate types of conversational services. For instance, in the case of delivering simple-context information, such as a weather forecast, driver safety alone would be the most appropriate consideration. Meanwhile, when delivering information that requires a driver response, such as a “Yes” or “No,” the combination of driver safety and auditory-verbal performance should be considered.
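The rule in the paragraph above can be sketched as a small decision function. This is only an illustration of the stated logic, not the team's model: the names `ServiceType` and `is_opportune` are hypothetical, and the two boolean inputs are assumed to come from separately trained predictors of safe driving and auditory-verbal performance.

```python
from enum import Enum

class ServiceType(Enum):
    SIMPLE_INFO = "simple_info"        # e.g. a weather forecast, no reply needed
    NEEDS_RESPONSE = "needs_response"  # e.g. a "Yes" or "No" question

def is_opportune(service, safe_to_drive, can_listen_and_reply):
    """Decide whether the agent may speak now.

    safe_to_drive and can_listen_and_reply are assumed to be boolean
    predictions from upstream models (hypothetical inputs).
    """
    if service is ServiceType.SIMPLE_INFO:
        # Simple-context information: driver safety alone is considered.
        return safe_to_drive
    # Information requiring a reply: both measures must be favorable.
    return safe_to_drive and can_listen_and_reply

# A weather update may go ahead when driving is safe, even if the driver
# could not comfortably answer a question at that moment.
weather_ok = is_opportune(ServiceType.SIMPLE_INFO, True, False)
question_ok = is_opportune(ServiceType.NEEDS_RESPONSE, True, False)
```

In this sketch `weather_ok` is true while `question_ok` is false, which mirrors the distinction the researchers draw between the two kinds of service.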

The research team developed a prototype of an in-vehicle conversation service based on a navigation app that can be used in real driving environments. The app was also connected to the vehicle to collect in-vehicle OBD-II/CAN data, such as the steering wheel angle and brake pedal position, and mobility and environmental data such as the distance between successive cars and traffic flow.

Using pseudo-conversation services, the research team collected a real-world driving dataset consisting of 1,388 interactions and sensor data from 29 drivers who interacted with AI conversational agents. Machine learning analysis based on the dataset demonstrated that the opportune moments for driver interruption could be correctly inferred with 87% accuracy.

The safety enhancement technology developed by the team is expected to minimize driver distractions caused by in-vehicle conversation services. This technology can be directly applied to current in-vehicle systems that provide conversation services. It can also be extended and applied to the real-time detection of driver distraction problems caused by the use of a smartphone while driving.

Professor Lee said, “In the near future, cars will proactively deliver various in-vehicle conversation services. This technology will certainly help vehicles interact with their drivers safely as it can fairly accurately determine when to provide conversation services using only basic sensor data generated by cars.”

The researchers presented their findings at the ACM International Joint Conference on Pervasive and Ubiquitous Computing (Ubicomp’19) in London, UK. This research was supported in part by Hyundai NGV and by the Next-Generation Information Computing Development Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science and ICT.

(Figure: Visual description of the safety enhancement technology for in-vehicle conversation services)

2019.11.13 View 20549

-

Image Analysis to Automatically Quantify Gender Bias in Movies

Many commercial films worldwide continue to portray women in a stereotypical manner, a recent study using image analysis showed. A KAIST research team developed a novel image analysis method for automatically quantifying the degree of gender bias in films.

The ‘Bechdel Test’ has been the most representative and widely used method of evaluating gender bias in films. The test indicates the degree of gender bias by measuring how active the presence of women is in a film. A film passes the Bechdel Test if it (1) has at least two female characters (2) who talk to each other (3) about something other than the male characters.
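As a rough illustration, the three criteria can be written as a single predicate. The sketch below is hypothetical: the `Conversation` record, its fields, and the function name are assumptions made for illustration only, and in practice the test is applied by human viewers rather than computed from structured data like this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Conversation:
    """One dialogue exchange (hypothetical structure, not from the study)."""
    speakers: frozenset  # names of the characters taking part
    about_men: bool      # True if the topic is a male character


def passes_bechdel(female_characters, conversations):
    """Return True if all three Bechdel criteria are met."""
    women = set(female_characters)
    # Criterion 1: the film has at least two female characters.
    if len(women) < 2:
        return False
    for conv in conversations:
        # Criterion 2: at least two of them talk to each other.
        if len(conv.speakers & women) >= 2:
            # Criterion 3: the topic is something other than a man.
            if not conv.about_men:
                return True
    return False
```

The predicate can only answer pass or fail, which is the dichotomous outcome the test is known for.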

However, the Bechdel Test has fundamental limitations in accuracy and practicality. First, it requires considerable human resources, as it is performed subjectively by a person. More importantly, it analyzes only a single aspect of the film, the dialogue between characters in the script, and provides only a dichotomous pass-or-fail result, neglecting the fact that a film is a visual art form reflecting multi-layered and complicated gender bias phenomena. It also struggles to fully represent today’s discourse on gender bias, which is much more diverse than in 1985, when the Bechdel Test was first presented.

Inspired by these limitations, a KAIST research team led by Professor Byungjoo Lee from the Graduate School of Culture Technology proposed an advanced system that uses computer vision technology to automatically analyze the visual information in each frame of a film. This allows the system to evaluate, more accurately and practically and in quantitative terms, the degree to which female and male characters are depicted differently in a film, and it further reveals gender bias that conventional analysis methods could not detect.

Professor Lee and his researchers Ji Yoon Jang and Sangyoon Lee analyzed 40 films from Hollywood and South Korea released between 2017 and 2018. They downsampled the films from 24 to 3 frames per second, and used Microsoft’s Face API for facial recognition and YOLO9000 for object detection to identify the details of the characters and their surrounding objects in the scenes.
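Going from 24 to 3 frames per second amounts to keeping one frame in every eight. The article does not say how the team selected frames, so the sketch below assumes a simple uniform stride; the function name and parameters are hypothetical.

```python
def downsample_indices(total_frames, source_fps=24, target_fps=3):
    """Return the indices of the frames kept when reducing the frame rate.

    Assumes uniform striding, which is an illustrative choice and not
    necessarily the method used in the study.
    """
    if target_fps <= 0 or source_fps % target_fps != 0:
        raise ValueError("source_fps must be a positive multiple of target_fps")
    step = source_fps // target_fps  # 24 // 3 = 8, so keep every 8th frame
    return list(range(0, total_frames, step))


# One minute of 24 fps footage has 1,440 frames; 180 of them are kept.
kept = downsample_indices(24 * 60)
```

Under this assumption, a two-hour film shrinks from 172,800 frames to 21,600, which keeps per-frame face and object detection tractable.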

Using the new system, the team computed eight quantitative indices that describe the representation of a particular gender in the films. They are: emotional diversity, spatial staticity, spatial occupancy, temporal occupancy, mean age, intellectual image, emphasis on appearance, and type and frequency of surrounding objects.

Figure 1. System Diagram

Figure 2. 40 Hollywood and Korean Films Analyzed in the Study

According to the emotional diversity index, the depicted women were found to be more prone to expressing passive emotions, such as sadness, fear, and surprise. In contrast, male characters in the same films were more likely to demonstrate active emotions, such as anger and hatred.

Figure 3. Difference in Emotional Diversity between Female and Male Characters

The type and frequency of surrounding objects index revealed that female characters appeared together with automobiles only 55.7% as often as male characters did, while they appeared with furniture and in household settings 123.9% as often.
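A figure such as "55.7% as often" can be read as a ratio of two co-occurrence rates. The sketch below shows one way such a ratio could be computed; the normalization (dividing by the number of frames in which each gender appears) is an assumption, since the article does not give the exact formula, and the counts are made-up toy values, not data from the study.

```python
def cooccurrence_rate(frames_with_object, frames_with_character):
    """Share of a character group's frames in which the object also appears."""
    if frames_with_character <= 0:
        raise ValueError("frames_with_character must be positive")
    return frames_with_object / frames_with_character


def relative_cooccurrence(female_with_obj, female_total, male_with_obj, male_total):
    """Female co-occurrence rate expressed as a fraction of the male rate."""
    male_rate = cooccurrence_rate(male_with_obj, male_total)
    if male_rate == 0:
        raise ValueError("male co-occurrence rate is zero; ratio is undefined")
    return cooccurrence_rate(female_with_obj, female_total) / male_rate


# Toy counts (hypothetical): the object appears in 50 of 1,000 female frames
# and in 100 of 1,000 male frames, so the female rate is half the male rate.
ratio = relative_cooccurrence(50, 1000, 100, 1000)
```

A ratio below 1 means the object accompanies female characters less often than male characters, and a ratio above 1 means the opposite.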

On the temporal occupancy and mean age indices, female characters appeared only 56% as often as male characters and were, on average, younger in 79.1% of the cases. These two indices were especially conspicuous in Korean films.

Professor Lee said, “Our research confirmed that many commercial films depict women from a stereotypical perspective. I hope this result promotes public awareness of the importance of taking prudence when filmmakers create characters in films.”

This study was supported by the KAIST College of Liberal Arts and Convergence Science as part of the Venture Research Program for Master’s and PhD Students, and will be presented on November 11 at the 22nd ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW) in Austin, Texas.

Publication:

Ji Yoon Jang, Sangyoon Lee, and Byungjoo Lee. 2019. Quantification of Gender Representation Bias in Commercial Films based on Image Analysis. In Proceedings of the 22nd ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW). ACM, New York, NY, USA, Article 198, 29 pages. https://doi.org/10.1145/3359300

Link to download the full-text paper:

https://files.cargocollective.com/611692/cscw198-jangA--1-.pdf

Profile: Prof. Byungjoo Lee, MD, PhD

byungjoo.lee@kaist.ac.kr

http://kiml.org/

Assistant Professor

Graduate School of Culture Technology (CT)

Korea Advanced Institute of Science and Technology (KAIST)

https://www.kaist.ac.kr

Daejeon 34141, Korea

Profile: Ji Yoon Jang, M.S.

yoone3422@kaist.ac.kr

Interactive Media Lab

Graduate School of Culture Technology (CT)

Korea Advanced Institute of Science and Technology (KAIST)

https://www.kaist.ac.kr

Daejeon 34141, Korea

Profile: Sangyoon Lee, M.S. Candidate

sl2820@kaist.ac.kr

Interactive Media Lab

Graduate School of Culture Technology (CT)

Korea Advanced Institute of Science and Technology (KAIST)

https://www.kaist.ac.kr

Daejeon 34141, Korea

(END)

2019.10.17 View 28848
