
KAIST debuts “DreamWaQer” - a quadrupedal robot that can walk in the dark

- The team led by Professor Hyun Myung of the School of Electrical Engineering developed “DreamWaQ”, a deep reinforcement learning-based control technology that lets a walking robot traverse atypical environments without visual and/or tactile information

- “DreamWaQ” technology can enable mass production of various types of “DreamWaQers”

- Expected to be used to explore atypical environments under extreme conditions such as fire disasters.

A team of Korean engineering researchers has developed a quadrupedal robot technology that can climb up and down steps and move over uneven terrain, such as ground covered with tree roots, without falling over. It does so without the help of visual or tactile sensors, even in disaster situations where vision is impeded by darkness or thick smoke from flames.

KAIST (President Kwang Hyung Lee) announced on the 29th of March that Professor Hyun Myung's research team at the Urban Robotics Lab in the School of Electrical Engineering developed a walking robot control technology that enables robust 'blind locomotion' in various atypical environments.

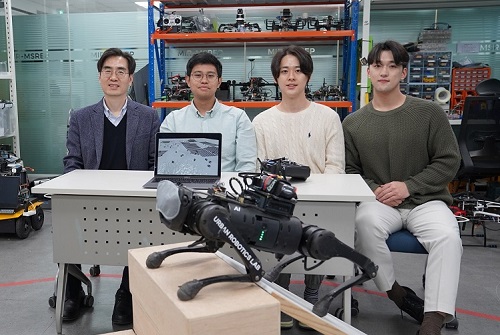

< (From left) Prof. Hyun Myung, Doctoral Candidates I Made Aswin Nahrendra, Byeongho Yu, and Minho Oh. In the foreground is the DreamWaQer, a quadrupedal robot equipped with DreamWaQ technology. >

The KAIST research team developed the "DreamWaQ" technology, so named because it enables walking robots to move about even in the dark, just as a person fresh out of bed can walk to the bathroom in the dark without visual help. With this technology installed on any legged robot, it will be possible to create various types of "DreamWaQers".

Existing walking robot controllers are based on kinematics and/or dynamics models, an approach referred to as model-based control. In atypical environments such as open, uneven fields in particular, the controller must obtain terrain feature information more quickly in order to keep the robot stable as it walks. However, this approach has been shown to depend heavily on the robot's perceptual ability to survey the surrounding environment.

In contrast, the controller developed by Professor Hyun Myung's research team, which is based on deep reinforcement learning (RL) methods, can quickly calculate appropriate control commands for each motor of the walking robot using data from various environments obtained in a simulator. Whereas existing controllers learned in simulation required separate re-tuning to work on an actual robot, the controller developed by the research team is expected to be easily applied to various walking robots because it does not require an additional tuning process.

DreamWaQ, the controller developed by the research team, is composed mainly of a context-aided estimator network that estimates ground and robot information and a policy network that computes control commands. The context-aided estimator network estimates the ground information implicitly and the robot’s status explicitly from inertial and joint information. These estimates are fed into the policy network, which uses them to generate optimal control commands. Both networks are trained together in simulation.

While the context-aided estimator network is trained through supervised learning, the policy network is trained with an actor-critic architecture, a deep RL methodology. The actor network can only infer the surrounding terrain implicitly. In the simulation, however, the surrounding terrain is known, so the critic (the value network), which has access to the exact terrain information, evaluates the policy of the actor network.

This whole learning process takes only about an hour on a GPU-enabled PC, and the actual robot is equipped with only the learned actor network. Without looking at the surrounding terrain, the robot imagines which of the various environments learned in simulation its current surroundings resemble, using only the inertial measurement unit (IMU) inside the robot and measurements of its joint angles. If it suddenly encounters a change in height, such as a staircase, it will not know until its foot touches the step, but it quickly draws up terrain information the moment its foot touches the surface. The control command suited to the estimated terrain is then transmitted to each motor, enabling rapidly adapted walking.
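The deployed control loop described above can be sketched roughly as follows. This is a minimal illustration and not the team's implementation: the layer sizes, the observation layout, the history length, and the choice of body velocity as the explicit estimate are all assumptions, and the randomly initialized weights merely stand in for parameters that would be learned in simulation. Following the Figure 1 description, the sketch runs an estimator and a policy (actor) network on proprioceptive input only.

```python
import numpy as np

rng = np.random.default_rng(0)

# All sizes below are hypothetical, chosen only to make the sketch concrete.
OBS_DIM = 45      # proprioceptive observation (IMU + joint readings)
HISTORY = 5       # number of stacked past observations fed to the estimator
LATENT_DIM = 16   # implicit terrain latent
VEL_DIM = 3       # explicit estimate (assumed here: body linear velocity)
NUM_JOINTS = 12   # quadruped with three actuated joints per leg


def mlp(sizes):
    """Random weights standing in for parameters learned in simulation."""
    return [(rng.standard_normal((m, n)) * 0.1, np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def forward(params, x):
    """Plain feed-forward pass with tanh on the hidden layers."""
    for i, (w, b) in enumerate(params):
        x = x @ w + b
        if i < len(params) - 1:
            x = np.tanh(x)
    return x


estimator = mlp([OBS_DIM * HISTORY, 128, VEL_DIM + LATENT_DIM])
actor = mlp([OBS_DIM + VEL_DIM + LATENT_DIM, 128, NUM_JOINTS])


def control_step(obs_history):
    """obs_history: (HISTORY, OBS_DIM) array, newest observation last."""
    estimate = forward(estimator, obs_history.reshape(-1))
    velocity, terrain_latent = estimate[:VEL_DIM], estimate[VEL_DIM:]
    # The actor sees only proprioception plus the estimator's outputs;
    # no camera, depth, or contact-sensor input is involved.
    actor_input = np.concatenate([obs_history[-1], velocity, terrain_latent])
    return forward(actor, actor_input)  # one command per joint motor


joint_targets = control_step(rng.standard_normal((HISTORY, OBS_DIM)))
print(joint_targets.shape)
```

The critic does not appear here because, as described above, it is only needed during training, where it can use the simulator's exact terrain information.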

The DreamWaQer robot walked not only in the laboratory, but also outdoors around the campus, where there are many curbs and speed bumps, and over a field with many tree roots and gravel. It demonstrated its abilities by climbing stairs whose steps are two-thirds the height of its body. In addition, the research team confirmed that it was capable of stable walking regardless of the environment at speeds ranging from a slow 0.3 m/s to a rather fast 1.0 m/s.

This study was carried out by doctoral student I Made Aswin Nahrendra as the first author and his colleague Byeongho Yu as a co-author. It has been accepted for presentation at the upcoming IEEE International Conference on Robotics and Automation (ICRA), scheduled to be held in London at the end of May. (Paper title: DreamWaQ: Learning Robust Quadrupedal Locomotion With Implicit Terrain Imagination via Deep Reinforcement Learning)

Videos of the walking robot DreamWaQer equipped with DreamWaQ can be found at the addresses below.

Main Introduction: https://youtu.be/JC1_bnTxPiQ

Experiment Sketches: https://youtu.be/mhUUZVbeDA0

This research was carried out with support from the Robot Industry Core Technology Development Program of the Ministry of Trade, Industry and Energy (MOTIE). (Task title: Development of Mobile Intelligence SW for Autonomous Navigation of Legged Robots in Dynamic and Atypical Environments for Real Application)

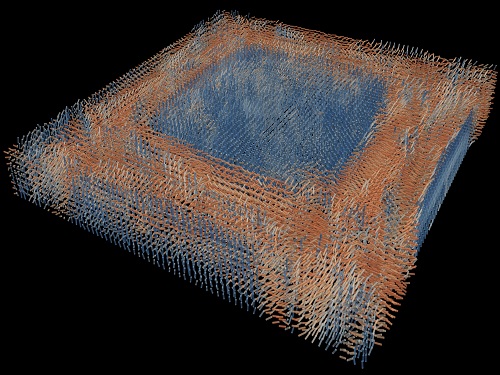

< Figure 1. Overview of DreamWaQ, a controller developed by this research team. This network consists of an estimator network that learns implicit and explicit estimates together, a policy network that acts as a controller, and a value network that provides guides to the policies during training. When implemented in a real robot, only the estimator and policy network are used. Both networks run in less than 1 ms on the robot's on-board computer. >

< Figure 2. Since the estimator can implicitly estimate the ground information as the foot touches the surface, it is possible to adapt quickly to rapidly changing ground conditions. >

< Figure 3. Results showing that even a small walking robot was able to overcome steps with height differences of about 20cm. >

2023.05.18 View 9337

Using light to throw and catch atoms to open up a new chapter for quantum computing

The technology to move and arrange atoms, the most basic component of a quantum computer, is very important to Rydberg quantum computing research. However, to place the atoms at the desired location, the atoms must be captured and transported one by one using a highly focused laser beam, commonly referred to as an optical tweezer, and the quantum information of the atoms is likely to change midway.

KAIST (President Kwang Hyung Lee) announced on the 27th that a research team led by Professor Jaewook Ahn of the Department of Physics developed a technology to throw and receive rubidium atoms one by one using a laser beam.

The research team developed a method of throwing and receiving atoms that minimizes the time the optical tweezers are in contact with the atoms, during which the quantum information the atoms carry may change. The team exploited the fact that rubidium atoms, kept at a very low temperature of 40 μK above absolute zero, respond very sensitively to the electromagnetic force exerted by light along the focal point of the optical tweezers.

The research team accelerated the laser of an optical tweezer to give an atom an optical kick that sent it toward a target, then caught the flying atom with another optical tweezer to stop it. The atom flew at a speed of 65 cm/s and traveled up to 4.2 μm. Compared to the existing technique of guiding atoms with optical tweezers, throwing and receiving atoms eliminates the need to calculate a transport path for the tweezers and makes it easier to fix defects in the atomic arrangement. As a result, it is effective in generating and maintaining large atomic arrangements, and when the technology is used to throw and receive flying atom qubits, it can be used to study new and more powerful quantum computing methods that presuppose structural changes in quantum arrangements.
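As a rough sanity check on the scale involved, the free-flight time between the two tweezers can be estimated from the figures quoted above. The sketch below takes those two numbers at face value and assumes the atom moves at constant speed over the whole distance; the variable names are illustrative only.

```python
# Figures quoted in the text; constant speed over the flight is an assumption.
speed_m_per_s = 0.65   # 65 cm/s
distance_m = 4.2e-6    # 4.2 micrometres

flight_time_s = distance_m / speed_m_per_s
flight_time_us = flight_time_s * 1e6

print(f"free-flight time: {flight_time_us:.2f} microseconds")
```

Under these assumptions the atom is untouched by any tweezer for only a few microseconds.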

"This technology will be used to develop larger and more powerful Rydberg quantum computers," says Professor Jaewook Ahn. “In a Rydberg quantum computer,” he continues, “atoms are arranged to store quantum information and interact with neighboring atoms through electromagnetic forces to perform quantum computing. The method of throwing an atom away for quick reconstruction of the quantum array can be an effective way to fix an error in a quantum computer that requires a removal or replacement of an atom.”

The research, which was conducted by doctoral students Hansub Hwang and Andrew Byun of the Department of Physics at KAIST and Sylvain de Léséleuc, a researcher at the National Institute of Natural Sciences in Japan, was published in the international journal Optica on March 9th. (Paper title: Optical tweezers throw and catch single atoms).

This research was carried out with the support of the Samsung Science & Technology Foundation.

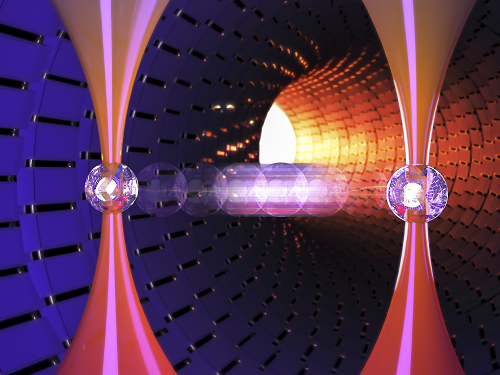

<Figure 1> A schematic diagram of the atom catching and throwing technique. The optical tweezer on the left kicks the atom to throw it into a trajectory to have the tweezer on the right catch it to stop it.

2023.03.28 View 6058

KAIST team develops smart immune system that can pin down on malignant tumors

A joint research team led by Professor Jung Kyoon Choi of the KAIST Department of Bio and Brain Engineering and Professor Jong-Eun Park of the KAIST Graduate School of Medical Science and Engineering (GSMSE) announced the development of key technologies for treating cancers using smart immune cells designed with AI and big data analysis. This technology is expected to be a next-generation immunotherapy that allows precision targeting of tumor cells by having chimeric antigen receptors (CARs) operate through a logic circuit. Professor Hee Jung An of CHA Bundang Medical Center and Professor Hae-Ock Lee of the Catholic University of Korea also took part in this joint research.

Professor Jung Kyoon Choi’s team built a gene expression database from millions of cells and used it to develop and verify a deep-learning algorithm that detects differences in gene expression patterns between tumor cells and normal cells through a logic circuit. CAR immune cells fitted with the logic circuits discovered through this methodology could distinguish between tumor cells and normal cells as a computer would, and therefore showed the potential to strike only tumor cells accurately without causing unwanted side effects.

This research, conducted by co-first authors Dr. Joonha Kwon of the KAIST Department of Bio and Brain Engineering and Ph.D. candidate Junho Kang of KAIST GSMSE, was published by Nature Biotechnology on February 16, under the title Single-cell mapping of combinatorial target antigens for CAR switches using logic gates.

Immunotherapy is the area of cancer research in which the most attempts and advances have been made in recent years. This field of treatment, which uses the patient’s own immune system to overcome cancer, includes several methods such as immune checkpoint inhibitors, cancer vaccines, and cellular treatments. In particular, immune cells such as CAR-T or CAR-NK cells equipped with chimeric antigen receptors can recognize cancer antigens and directly destroy cancer cells.

Following the success of CAR cell therapy in treating blood cancer, scientists have been trying to extend it to solid cancers. However, it has been difficult to develop CAR cells that kill solid cancer cells effectively with minimal side effects. Accordingly, smarter CAR engineering technologies that use computational logic gates such as AND, OR, and NOT to target cancer cells effectively have been under development in recent years.

Against this backdrop, the research team built a large-scale database of cancer and normal cells to discover, at the single-cell level, the exact genes that are expressed only in cancer cells. The team followed this up by developing an AI algorithm that searches for the combination of genes that best distinguishes cancer cells from normal cells. In particular, this algorithm has been used to find a logic circuit that can specifically target cancer cells through cell-level simulations of all gene combinations. CAR-T cells equipped with logic circuits discovered through this methodology are expected to distinguish cancerous cells from normal cells as a computer would, thereby minimizing side effects and maximizing the therapeutic effect.
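The idea of scoring a logic gate over single-cell data can be illustrated with a toy example. The sketch below is not the team's deep-learning algorithm: it swaps in a plain brute-force search over gene pairs, and the gene names, the tiny binary expression matrices, and the scoring rule (fraction of tumor cells hit minus fraction of normal cells hit) are all hypothetical.

```python
from itertools import permutations

import numpy as np

# Hypothetical binary expression data: rows are cells, columns are genes.
genes = ["A", "B", "C"]
tumor = np.array([[1, 1, 0],
                  [1, 1, 1],
                  [1, 1, 0],
                  [0, 1, 1]], dtype=bool)
normal = np.array([[1, 0, 0],
                   [0, 1, 1],
                   [0, 0, 1],
                   [1, 0, 1]], dtype=bool)

# Two-input gates built from AND, OR, and NOT.
GATES = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "AND_NOT": lambda a, b: a & ~b,
}


def score(gate, i, j):
    """Fraction of tumor cells targeted minus fraction of normal cells targeted."""
    tumor_hit = gate(tumor[:, i], tumor[:, j]).mean()
    normal_hit = gate(normal[:, i], normal[:, j]).mean()
    return tumor_hit - normal_hit


# Enumerate every gate over every ordered gene pair and keep the best one.
candidates = [(name, genes[i], genes[j], score(fn, i, j))
              for name, fn in GATES.items()
              for i, j in permutations(range(len(genes)), 2)]
best = max(candidates, key=lambda c: c[3])

print(best)
```

On this toy data the AND gate over genes A and B targets three of the four tumor cells and none of the normal cells, which is the kind of separation a single-antigen CAR cannot achieve when each antigen alone also appears on normal cells.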

Dr. Joonha Kwon, the first author of this paper, said, “This research suggests a new method that hasn’t been tried before. What’s particularly noteworthy is the process in which we found the optimal CAR cell circuit through simulations of millions of individual tumor and normal cells.” He added, “This is an innovative technology that can apply AI and computer logic circuits to immune cell engineering. It would contribute greatly to expanding CAR therapy, which is being successfully used for blood cancer, to solid cancers as well.”

This research was funded by the Original Technology Development Project and Research Program for Next Generation Applied Omic of the Korea Research Foundation.

Figure 1. A schematic diagram of manufacturing and administration process of CAR therapy and of cancer cell-specific dual targeting using CAR.

Figure 2. Deep learning (convolutional neural networks, CNNs) algorithm for selection of dual targets based on gene combination (left) and algorithm for calculating expressing cell fractions by gene combination according to logical circuit (right).

2023.03.09 View 8788

A Quick but Clingy Creepy-Crawler that will MARVEL You

Engineered by KAIST Mechanics, a quadrupedal robot climbs steel walls and crawls across metal ceilings at the fastest speed that the world has ever seen.

< Photo 1. (From left) KAIST ME Prof. Hae-Won Park, Ph.D. Student Yong Um, Ph.D. Student Seungwoo Hong >

- Professor Hae-Won Park's team at the Department of Mechanical Engineering developed a quadrupedal robot that can move at a high speed on ferrous walls and ceilings.

- It is expected to make a wide variety of contributions as it is to be used to conduct inspections and repairs of large steel structures such as ships, bridges, and transmission towers, offering an alternative to dangerous or risky activities required in hazardous environments while maintaining productivity and efficiency through automation and unmanning of such operations.

- The study was published as the cover paper of the December issue of Science Robotics.

KAIST (President Kwang Hyung Lee) announced on the 26th that a research team led by Professor Hae-Won Park of the Department of Mechanical Engineering developed a quadrupedal walking robot that can move at high speed on steel walls and ceilings. The robot is named M.A.R.V.E.L., and rightly so, as it is a Magnetically Adhesive Robot for Versatile and Expeditious Locomotion, as described in the team's paper, “Agile and Versatile Climbing on Ferromagnetic Surfaces with a Quadrupedal Robot.” (DOI: 10.1126/scirobotics.add1017)

To make this happen, Professor Park's research team developed a foot pad that can quickly turn the magnetic adhesive force on and off while retaining high adhesive force even on an uneven surface. The pad combines an Electro-Permanent Magnet (EPM), a device whose magnetic force can be switched on and off with little power, with a Magneto-Rheological Elastomer (MRE), an elastic material made by mixing a magnetically responsive filler, such as iron powder, into an elastic material such as rubber. The team mounted the pads on a small quadrupedal robot built in-house in their own laboratory. These walking robots are expected to be put to a wide variety of uses, including inspection, repair, and maintenance tasks on large steel structures such as ships, bridges, transmission towers, large storage areas, and construction sites.

This study, in which Seungwoo Hong and Yong Um of the Department of Mechanical Engineering participated as co-first authors, was published as the cover paper in the December issue of Science Robotics.

< Image on the Cover of 2022 December issue of Science Robotics >

Existing wall-climbing robots use wheels or endless tracks, so their mobility is limited on surfaces with steps or irregularities. Walking robots built for climbing, on the other hand, can offer better mobility over obstacles, but they are significantly slower or cannot perform a variety of movements.

For a walking robot to move fast, the sole of its foot must provide strong adhesion and must be able to switch quickly between sticking to the surface and releasing from it. In addition, the adhesion force must be maintained even on a rough or uneven surface.

To solve this problem, the research team used an EPM and an MRE for the first time in designing the soles of a walking robot. An EPM is a magnet whose magnetic force can be turned on and off with a short current pulse. Unlike ordinary electromagnets, it does not require energy to maintain the magnetic force. The research team proposed a new EPM with a rectangular arrangement, which enables faster switching while significantly lowering the voltage required for switching compared to existing electromagnets.

In addition, the research team was able to increase the frictional force without significantly reducing the magnetic force of the sole by covering the sole with an MRE. The proposed sole weighs only 169 g, but provides a vertical gripping force of about *535 Newtons (N) and a frictional force of 445 N, which is sufficient gripping force for a quadrupedal robot weighing 8 kg.

* 535 N corresponds to the weight of about 54.5 kg, and 445 N to the weight of about 45.4 kg. In other words, the sole of the foot does not come off the steel plate even if an external load equivalent to up to 54.5 kg in the vertical direction and up to 45.4 kg in the horizontal direction is applied (for example, by hanging a corresponding weight).
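The conversion in the note above can be checked with a short calculation. The following is a minimal sketch, assuming a gravitational acceleration of 9.81 m/s² (the article does not state which value was used) and using an illustrative helper name, `newtons_to_kg_equivalent`, that does not come from the paper. It also compares the holding force of a single sole with the weight of the 8 kg robot.

```python
# Assumed gravitational acceleration in m/s^2 (not stated in the article).
G = 9.81


def newtons_to_kg_equivalent(force_n: float) -> float:
    """Return the mass in kg whose weight under G equals force_n newtons."""
    return force_n / G


# Figures quoted in the article for one sole.
vertical_holding_n = 535.0
friction_n = 445.0
robot_mass_kg = 8.0

vertical_kg = newtons_to_kg_equivalent(vertical_holding_n)
friction_kg = newtons_to_kg_equivalent(friction_n)

# Weight of the whole robot, and how many times one sole could carry it.
robot_weight_n = robot_mass_kg * G
single_sole_margin = vertical_holding_n / robot_weight_n

print(f"Vertical holding force: about {vertical_kg:.1f} kg equivalent")
print(f"Frictional force: about {friction_kg:.1f} kg equivalent")
print(f"One sole holds about {single_sole_margin:.1f} times the robot's weight")
```

Under these assumptions a single sole can hold several times the weight of the whole robot, which is consistent with the article's statement that the gripping force is sufficient for an 8 kg quadruped.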

MARVEL climbed a vertical wall at 70 cm per second and walked upside down on a ceiling at a maximum speed of 50 cm per second, the world's fastest speeds for a walking climbing robot. In addition, the research team demonstrated that the robot can climb at up to 35 cm per second even on painted, dusty, and rust-tainted surfaces such as those of water tanks, proving its performance in a real environment. Experiments showed that the robot not only moves fast but can also transition from floor to wall and from wall to ceiling, and can overcome 5-cm-high obstacles protruding from walls without difficulty.

The new climbing quadrupedal robot is expected to be widely used for inspection, repair, and maintenance of large steel structures such as ships, bridges, transmission towers, oil pipelines, large storage areas, and construction sites. Because the work required at these sites involves risks such as falls, suffocation, and other accidents that may result in serious injuries or casualties, the need for automation is urgent.

One of the co-first authors of the paper, Yong Um, a Ph.D. student in KAIST’s Department of Mechanical Engineering, said, “By using the magnetic soles made up of the EPM and MRE, together with a non-linear model predictive controller suitable for climbing, the robot can move quickly across a variety of ferromagnetic surfaces including walls and ceilings, not just level ground. We believe this will become a cornerstone that expands the mobility of legged robots and the places they can venture into.” He added, “These robots can be put to good use in executing dangerous and difficult tasks on steel structures in places like shipbuilding yards.”

This research was carried out with support from the National Research Foundation of Korea's Basic Research in Science & Engineering Program for Mid-Career Researchers and from Korea Shipbuilding & Offshore Engineering Co., Ltd.

< Figure 1. The quadrupedal robot (MARVEL) walking over various ferrous surfaces. (A) vertical wall (B) ceiling. (C) over obstacles on a vertical wall (D) making floor-to-wall and wall-to-ceiling transitions (E) moving over a storage tank (F) walking on a wall with a 2-kg weight and over a ceiling with a 3-kg load. >

< Figure 2. Description of the magnetic foot. (A) Components of the magnet sole: ankle, Square Electro-Permanent Magnet (S-EPM), MRE footpad. (B) Components of the S-EPM and MRE footpad. (C) Working principle of the S-EPM. When the magnetization direction is aligned as shown in the left figure, magnetic flux comes out of the keeper and circulates through the steel plate, generating holding force (ON state). Conversely, if the magnetization direction is aligned as shown in the figure on the right, the magnetic flux circulates inside the S-EPM and the holding force disappears (OFF state). >

Video Introduction: Agile and versatile climbing on ferromagnetic surfaces with a quadrupedal robot - YouTube

2022.12.30 View 15613

Tomographic Measurement of Dielectric Tensors

Dielectric tensor tomography allows the direct measurement of the 3D dielectric tensors of optically anisotropic structures

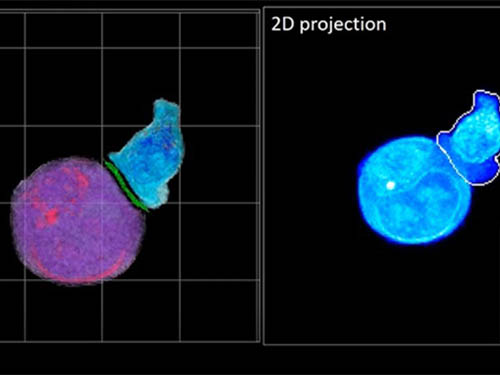

A research team reported the direct measurement of dielectric tensors of anisotropic structures including the spatial variations of principal refractive indices and directors. The group also demonstrated quantitative tomographic measurements of various nematic liquid-crystal structures and their fast 3D nonequilibrium dynamics using a 3D label-free tomographic method. The method was described in Nature Materials.

Light-matter interactions are described by the dielectric tensor. Despite its importance in basic science and applications, it has not been possible to measure 3D dielectric tensors directly. The main challenge lies in the vectorial nature of light scattering from a 3D anisotropic structure. Previous approaches addressed 3D anisotropic information only indirectly and were either limited to two-dimensional or qualitative measurements or dependent on strict sample conditions or assumptions.

The research team developed a method enabling the tomographic reconstruction of 3D dielectric tensors without any preparation or assumptions. A sample is illuminated with a laser beam at various angles and in circular polarization states. Then, the light fields scattered from the sample are holographically measured and converted into vectorial diffraction components. Finally, by inversely solving a vectorial wave equation, the 3D dielectric tensor is reconstructed.

Professor YongKeun Park said, “There were a greater number of unknowns in direct measuring than with the conventional approach. We applied our approach to measure additional holographic images by slightly tilting the incident angle.”

He said that the slightly tilted illumination provides an additional orthogonal polarization, which turns the underdetermined problem into a determined one. “Although scattered fields are dependent on the illumination angle, the Fourier differentiation theorem enables the extraction of the same dielectric tensor for the slightly tilted illumination,” Professor Park added.

His team’s method was validated by reconstructing well-known liquid crystal (LC) structures, including the twisted nematic, hybrid aligned nematic, radial, and bipolar configurations. Furthermore, the research team demonstrated the experimental measurements of the non-equilibrium dynamics of annihilating, nucleating, and merging LC droplets, and the LC polymer network with repeating 3D topological defects.

“This is the first experimental measurement of non-equilibrium dynamics and 3D topological defects in LC structures in a label-free manner. Our method enables the exploration of inaccessible nematic structures and interactions in non-equilibrium dynamics,” first author Dr. Seungwoo Shin explained.

-Publication

Seungwoo Shin, Jonghee Eun, Sang Seok Lee, Changjae Lee, Herve Hugonnet, Dong Ki Yoon, Shin-Hyun Kim, Jongwoo Jeong, YongKeun Park, “Tomographic Measurement of Dielectric Tensors at Optical Frequency,” Nature Materials, March 02, 2022 (https://doi.org/10.1038/s41563-022-01202-8)

-Profile

Professor YongKeun Park

Biomedical Optics Laboratory (http://bmol.kaist.ac.kr)

Department of Physics

College of Natural Sciences

KAIST

2022.03.22 View 7762

Label-Free Multiplexed Microtomography of Endogenous Subcellular Dynamics Using Deep Learning

AI-based holographic microscopy allows molecular imaging without introducing exogenous labeling agents

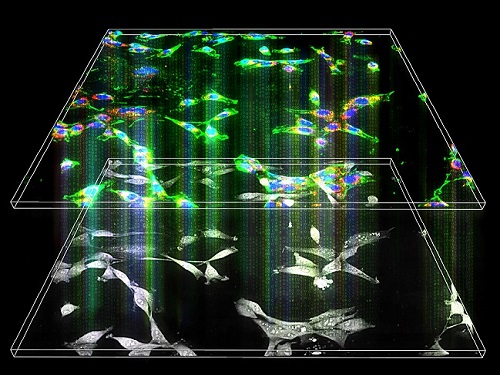

A research team has upgraded 3D microtomography so that the dynamics of label-free live cells can be observed in the manner of multiplexed fluorescence imaging. The AI-powered 3D holotomographic microscopy extracts various molecular information from live unlabeled biological cells in real time without exogenous labeling or staining agents.

Professor YongKeun Park’s team and the startup Tomocube encoded 3D refractive index tomograms using the refractive index as a means of measurement. Then they decoded the information with a deep learning-based model that infers multiple 3D fluorescence tomograms from the refractive index measurements of the corresponding subcellular targets, thereby achieving multiplexed microtomography. This study was reported in Nature Cell Biology online on December 7, 2021.

Fluorescence microscopy is the most widely used optical microscopy technique due to its high biochemical specificity. However, it requires cells to be genetically manipulated to express fluorescent proteins or stained with fluorescent labels. These labeling processes inevitably affect the intrinsic physiology of cells. Long-term measurement is also challenging because of photobleaching and phototoxicity, and the overlapping spectra of multiplexed fluorescence signals hinder the viewing of various structures at the same time. More critically, it takes several hours before the cells can be observed after preparation.

3D holographic microscopy, also known as holotomography, is providing new ways to quantitatively image live cells without pretreatments such as staining. Holotomography can accurately and quickly measure the morphological and structural information of cells, but only provides limited biochemical and molecular information.

The 'AI microscope' created in this process takes advantage of the features of both holographic microscopy and fluorescence microscopy. That is, a specific image from a fluorescence microscope can be obtained without a fluorescent label. As a result, the microscope can observe many types of cellular structures simultaneously in their natural state in 3D, as fast as one millisecond, and long-term measurements over several days are also possible.

The Tomocube-KAIST team showed that fluorescence images can be directly and precisely predicted from holotomographic images in various cells and conditions. Using the quantitative relationship that the AI found between the spatial distribution of the refractive index and the major structures in cells, the team was able to decipher the spatial distribution of the refractive index. Surprisingly, they also confirmed that this relationship is constant regardless of cell type.

Professor Park said, “We were able to develop a new concept microscope that combines the advantages of several microscopes with the multidisciplinary research of AI, optics, and biology. It will be immediately applicable for new types of cells not included in the existing data and is expected to be widely applicable for various biological and medical research.”

When the molecular image information extracted by AI was compared with the molecular image information physically obtained by fluorescence staining in 3D space, the two showed a conformity of 97% or more, a level at which they are difficult to tell apart with the naked eye.

“Compared to the sub-60% accuracy of the fluorescence information extracted from the model developed by the Google AI team, it showed significantly higher performance,” Professor Park added.

This work was supported by the KAIST Up program, the BK21+ program, Tomocube, the National Research Foundation of Korea, the Ministry of Science and ICT, and the Ministry of Health & Welfare.

-Publication

Hyun-seok Min, Won-Do Heo, YongKeun Park, et al. “Label-free multiplexed microtomography of endogenous subcellular dynamics using generalizable deep learning,” Nature Cell Biology (doi.org/10.1038/s41556-021-00802-x) published online December 07 2021.

-Profile

Professor YongKeun Park

Biomedical Optics Laboratory

Department of Physics

KAIST

2022.02.09 View 10338

Team KAIST Makes Its Presence Felt in the Self-Driving Tech Industry

Team KAIST finishes 4th at the inaugural CES Autonomous Racing Competition

Team KAIST, led by Professor Hyunchul Shim and the Unmanned Systems Research Group (USRG), placed fourth in an autonomous race car competition in Las Vegas last week, making its presence felt in the self-driving automotive tech industry.

Team KAIST beat its first competitor, Auburn University, reaching speeds of up to 131 mph at the Autonomous Challenge at CES, held at the Las Vegas Motor Speedway. However, the team failed to advance to the final round after losing to PoliMOVE, a joint team from the Polytechnic University of Milan and the University of Alabama, which went on to win the US$150,000 race.

A total of eight teams competed in the self-driving race. The race was conducted as a single elimination tournament consisting of multiple rounds of matches. Two cars took turns playing the role of defender and attacker, and each car attempted to outpace the other until one of them was unable to complete the mission.

Each team designed the algorithm that controls its racecar, the Dallara-built AV-21, which can reach speeds of up to 173 mph, so that the car drives safely around the track at high speed without crashing into the other car.

The event is the CES version of the Indy Autonomous Challenge, a competition that took place for the first time in October last year to encourage university students from around the world to develop complicated software for autonomous driving and advance relevant technologies. Team KAIST placed 4th at the Indy Autonomous Challenge, which qualified it to participate in this race.

“The technical level of the CES race is much higher than last October’s and we had a very tough race. We advanced to the semifinals for two consecutive races. I think our autonomous vehicle technology is proving itself to the world,” said Professor Shim.

Professor Shim’s research group has been working on the development of autonomous aerial and ground vehicles for the past 12 years. A self-driving car developed by the lab was certified by the South Korean government to run on public roads.

The vehicle the team used cost more than 1 million USD to build. Many of the other teams had to repair their vehicle more than once due to accidents and had to spend a lot on repairs. “We are the only one who did not have any accidents, and this is a testament to our technological prowess,” said Professor Shim.

He said that funding the purchase of pricey parts and equipment for the racecar is always a challenge, given the very tight research budget and the absence of corporate sponsorships.

However, Professor Shim and his research group plan to participate in the next race in September and in the 2023 CES race.

“I think we need more systemic and proactive research and support systems to earn better results but there is nothing better than the group of passionate students who are taking part in this project with us,” Shim added.

2022.01.12 View 10234

Two Researchers Designated as SUHF Fellows

Professor Taeyun Ku from the Graduate School of Medical Science and Engineering and Professor Hanseul Yang from the Department of Biological Sciences were named 2021 fellows of the Suh Kyungbae Foundation (SUHF).

SUHF selected three promising young scientists from 53 researchers who are less than five years into their careers. A panel of judges composed of scholars from home and abroad made the final selection based on the candidates’ innovativeness and power to influence. Professor You-Bong Hyun from Seoul National University also won the fellowship.

Professor Ku’s main topic is opto-connectomics. He will study ways to visualize the complex brain network using innovative technology that transforms neurons into optical elements.

Professor Yang will research the possibility of helping patients recover from skin diseases or injuries without scars by studying spiny mouse genes.

SUHF was established by Amorepacific Group Chairman Suh Kyungbae in 2016 with 300 billion KRW of his private funds. Under the vision of ‘contributing to humanity by supporting innovative discoveries of bioscience researchers,’ the foundation supports promising Korean scientists who pioneer new fields of research in biological sciences.

From 2017 to this year, SUHF has selected 20 promising scientists in the field of biological sciences. Selected scientists are provided with up to KRW 500 million each year for five years. The foundation has provided a total of KRW 48.5 billion in research funds to date.

2021.09.15 View 8256

3D Visualization and Quantification of Bioplastic PHA in a Living Bacterial Cell

3D holographic microscopy leads to in-depth analysis of bacterial cells accumulating the bacterial bioplastic, polyhydroxyalkanoate (PHA)

A research team at KAIST has observed how bioplastic granules accumulate in living bacterial cells through 3D holographic microscopy. Their 3D imaging and quantitative analysis of the bioplastic ‘polyhydroxyalkanoate’ (PHA) via optical diffraction tomography provides insights into biosynthesizing sustainable substitutes for petroleum-based plastics.

The biodegradable polyester polyhydroxyalkanoate (PHA) is being touted as an eco-friendly bioplastic to replace existing synthetic plastics. With properties similar to those of general-purpose plastics such as polyethylene and polypropylene, PHA can be used in various industrial applications such as container packaging and disposable products.

PHA is synthesized by numerous bacteria as an energy and carbon storage material under unbalanced growth conditions in the presence of excess carbon sources. PHA exists in the form of insoluble granules in the cytoplasm. Previous studies of in vivo PHA granules have used fluorescence microscopy, transmission electron microscopy (TEM), and electron cryotomography.

These techniques have generally relied on the statistical analysis of multiple 2D snapshots of fixed cells or on short-term monitoring of the cells. For TEM analysis, cells need to be fixed and sectioned, so living cells could not be investigated. Fluorescence-based techniques require fluorescence labeling or dye staining. Indirect imaging with reporter proteins therefore cannot show the native state of PHAs or cells, and invasive exogenous dyes can affect the physiology and viability of the cells. Because of these technical limitations, it was difficult to fully understand the formation of PHA granules in cells, and the several mechanistic models based on such observations have remained only proposals.

The team of metabolic engineering researchers led by Distinguished Professor Sang Yup Lee and Physics Professor YongKeun Park, who established the startup Tomocube based on his 3D holographic microscopy, reported the results of 3D quantitative label-free analysis of PHA granules in individual live bacterial cells by measuring the refractive index distributions using optical diffraction tomography. The formation and growth of PHA granules were compared in cells of Cupriavidus necator, the most-studied native producer of PHA (specifically, poly(3-hydroxybutyrate), also known as PHB), and in recombinant Escherichia coli harboring the C. necator PHB biosynthesis pathway.

From the reconstructed 3D refractive index distribution of the cells, the team succeeded in the 3D visualization and quantitative analysis of cells and intracellular PHA granules at the single-cell level. In particular, the team introduced the concept of “in vivo PHA granule density.” Through statistical analysis of hundreds of single cells accumulating PHA granules, the team found distinct differences in the density and localization of PHA granules between the two microorganisms. Furthermore, the team identified the key protein that plays a major role in this difference and that enabled the characteristics of PHA granules in the recombinant E. coli to become similar to those of C. necator.

The research team also presented 3D time-lapse movies showing the actual processes of PHA granule formation combined with cell growth and division. Movies showing the living cells synthesizing and accumulating PHA granules in their native state had never been reported before.

Professor Lee said, “This study provides insights into the morphological and physical characteristics of in vivo PHA as well as the unique mechanisms of PHA granule formation that undergo the phase transition from soluble monomers into the insoluble polymer, followed by granule formation. Through this study, a deeper understanding of PHA granule formation within the bacterial cells is now possible, which has great significance in that a convergence study of biology and physics was achieved. This study will help develop various bioplastics production processes in the future.”

This work was supported by the Technology Development Program to Solve Climate Changes on Systems Metabolic Engineering for Biorefineries (Grants NRF-2012M1A2A2026556 and NRF-2012M1A2A2026557) and the Bio & Medical Technology Development Program (Grant No. 2021M3A9I4022740) from the Ministry of Science and ICT (MSIT) through the National Research Foundation (NRF) of Korea to S.Y.L. This work was also supported by the KAIST Cross-Generation Collaborative Laboratory project.

-Publication
So Young Choi, Jeonghun Oh, JaeHwang Jung, YongKeun Park, and Sang Yup Lee. Three-dimensional label-free visualization and quantification of polyhydroxyalkanoates in individual bacterial cell in its native state. PNAS (https://doi.org./10.1073/pnas.2103956118)

-Profile
Distinguished Professor Sang Yup Lee
Metabolic Engineering and Synthetic Biology
http://mbel.kaist.ac.kr/
Department of Chemical and Biomolecular Engineering
KAIST

Endowed Chair Professor YongKeun Park
Biomedical Optics Laboratory
https://bmokaist.wordpress.com/
Department of Physics
KAIST

2021.07.28 View 12827
