KAIST Proposes a New Way to Circumvent a Long-standing Frustration in Neural Computing

The human brain begins learning through spontaneous random activity even before it receives sensory information from the external world. By having a brain-mimicking artificial neural network pre-learn random information, a technology developed by a KAIST research team enables much faster and more accurate learning once the network is exposed to actual data. It is expected to be a breakthrough for the future development of brain-based artificial intelligence and neuromorphic computing technology.

KAIST (President Kwang-Hyung Lee) announced on the 16th of December that Professor Se-Bum Paik's research team in the Department of Brain and Cognitive Sciences has solved the weight transport problem*, a long-standing challenge in neural network learning, and in doing so has explained the principles that enable resource-efficient learning in biological neural networks.

*Weight transport problem: This is the biggest obstacle to developing artificial intelligence that mimics the biological brain. It is the fundamental reason why, unlike biological brains, conventional artificial neural networks require large-scale memory and computation for learning.

Over the past several decades, the development of artificial intelligence has been based on error backpropagation learning, proposed by Geoffrey Hinton, who won this year's Nobel Prize in Physics. However, error backpropagation was thought to be impossible in biological brains because it rests on the unrealistic assumption that, to compute the error signal for learning, individual neurons must know the connection weights across multiple layers of the network.

< Figure 1. Illustration depicting the method of random noise training and its effects >

This difficult problem, called the weight transport problem, was raised by Francis Crick, who won the Nobel Prize in Physiology or Medicine for discovering the structure of DNA, after Hinton proposed error backpropagation learning in 1986. Since then, it has been regarded as the reason the operating principles of natural and artificial neural networks would remain fundamentally different.

At the boundary between artificial intelligence and neuroscience, researchers including Hinton have continued trying to build biologically plausible models that solve the weight transport problem and thereby implement the brain's learning principles.

In 2016, a joint research team from the University of Oxford and DeepMind in the UK first proposed that error backpropagation learning is possible without weight transport, drawing attention from the academic community. However, this biologically plausible learning without weight transport was inefficient, with slow learning and low accuracy, which made it difficult to apply in practice.
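To make the weight transport problem concrete, the sketch below compares the hidden-layer error signal of a hypothetical two-layer network under error backpropagation and under the feedback-alignment idea from 2016. It is a minimal NumPy illustration with assumed layer sizes and a tanh nonlinearity, not code from either study: backpropagation needs the transpose of the forward weights `W2`, while feedback alignment sends the error back through a fixed random matrix `B` (a name chosen here for illustration).

```python
import numpy as np

def backward_passes(x, y, W1, W2, B):
    """Return the hidden-layer error signals under backprop and feedback alignment.

    Hypothetical two-layer network: h = tanh(W1 @ x), y_hat = W2 @ h,
    loss = 0.5 * ||y_hat - y||^2.
    """
    a = W1 @ x                 # hidden pre-activation
    h = np.tanh(a)             # hidden activity
    e = W2 @ h - y             # output error (gradient of the loss w.r.t. y_hat)
    fprime = 1.0 - h ** 2      # derivative of tanh at a
    # Backprop: hidden neurons need W2.T, i.e. the forward weights "transported" backward.
    delta_bp = (W2.T @ e) * fprime
    # Feedback alignment: a fixed random matrix B replaces W2.T, so no weight transport.
    delta_fa = (B @ e) * fprime
    return delta_bp, delta_fa

rng = np.random.default_rng(0)
x, y = rng.normal(size=4), rng.normal(size=2)
W1, W2 = rng.normal(size=(3, 4)), rng.normal(size=(2, 3))
B = rng.normal(size=(3, 2))    # fixed random feedback weights
delta_bp, delta_fa = backward_passes(x, y, W1, W2, B)
```

In both cases the weight update for `W1` is the outer product of the hidden error signal with the input; the two rules coincide only when `B` equals `W2.T`.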

The KAIST research team noted that the biological brain begins learning through internal, spontaneous random neural activity even before it receives any external sensory input. To mimic this, the team pretrained a biologically plausible neural network without weight transport on meaningless random information (random noise).

As a result, they showed that this pretraining creates symmetry between the network's forward and backward connections, an essential condition for error backpropagation learning. In other words, random pretraining makes learning without weight transport possible.
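The alignment effect can be illustrated with a toy experiment. This is an assumption-laden sketch, not the KAIST team's setup: a small linear two-layer network is trained with feedback alignment on Gaussian noise inputs paired with random targets, and the angle between the forward weights `W2` and the transposed feedback matrix `B` is tracked. An angle near 90 degrees means no alignment; a smaller angle means the forward and backward pathways have become more symmetric. The function names, layer sizes, and learning rate are hypothetical.

```python
import numpy as np

def alignment_angle(W2, B):
    """Angle in degrees between forward weights W2 and the transpose of feedback matrix B."""
    cos = np.sum(W2 * B.T) / (np.linalg.norm(W2) * np.linalg.norm(B))
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))

def pretrain_on_noise(n_in=32, n_hidden=64, n_out=10, n_samples=64,
                      steps=2000, lr=0.01, seed=0):
    """Feedback-alignment training on pure noise; returns (initial, final) alignment angle."""
    rng = np.random.default_rng(seed)
    # Meaningless training data: Gaussian noise inputs paired with random targets.
    X = rng.normal(size=(n_samples, n_in))
    Y = rng.normal(size=(n_samples, n_out))
    # Small random forward weights and a fixed random feedback matrix B.
    W1 = 0.01 * rng.normal(size=(n_hidden, n_in))
    W2 = 0.01 * rng.normal(size=(n_out, n_hidden))
    B = rng.normal(size=(n_hidden, n_out)) / np.sqrt(n_out)
    start = alignment_angle(W2, B)
    for _ in range(steps):
        H = X @ W1.T            # hidden activity (linear units for simplicity)
        E = H @ W2.T - Y        # output errors, one row per sample
        delta_h = E @ B.T       # errors sent back through B, not through W2.T
        W2 -= lr * E.T @ H / n_samples
        W1 -= lr * delta_h.T @ X / n_samples
    return start, alignment_angle(W2, B)

start_angle, end_angle = pretrain_on_noise()
```

One way to see why the angle drops: the hidden weights are pushed along `B @ e`, so the hidden activity acquires a component along `B @ e`, and the output-weight update `-e h^T` then gains a term proportional to `e e^T B^T`, which points toward `B.T` even though the data carry no information.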

< Figure 2. Illustration depicting the meta-learning effect of random noise training >

The team also revealed that learning random information before learning actual data acts as meta-learning, or ‘learning how to learn.’ Neural networks pretrained on random noise learned much faster and more accurately when exposed to actual data, achieving high learning efficiency without weight transport.

< Figure 3. Illustration depicting research on understanding the brain's operating principles through artificial neural networks >

Professor Se-Bum Paik said, “It breaks the conventional understanding of existing machine learning that only data learning is important, and provides a new perspective that focuses on the neuroscience principles of creating appropriate conditions before learning,” and added, “It is significant in that it solves important problems in artificial neural network learning through clues from developmental neuroscience, and at the same time provides insight into the brain’s learning principles through artificial neural network models.”

This study, with Jeonghwan Cheon, a master’s candidate in the KAIST Department of Brain and Cognitive Sciences, as first author and Professor Sang Wan Lee of the same department as co-author, was presented on December 14th at the 38th Conference on Neural Information Processing Systems (NeurIPS), one of the world's top artificial intelligence conferences, held in Vancouver, Canada. (Paper title: Pretraining with random noise for fast and robust learning without weight transport)

This study was conducted with the support of the National Research Foundation of Korea's Basic Research Program in Science and Engineering, the Information and Communications Technology Planning and Evaluation Institute's Talent Development Program, and the KAIST Singularity Professor Program.

2024.12.16

KAIST Proposes AI Training Method that will Drastically Shorten Time for Complex Quantum Mechanical Calculations

- Professor Yong-Hoon Kim's team from the School of Electrical Engineering succeeded for the first time in accelerating quantum mechanical electronic structure calculations using a convolutional neural network (CNN) model

- Presenting a principle for AI learning of quantum mechanical 3D chemical bonding information, the work is expected to accelerate the computer-aided design of next-generation materials and devices

The close relationship between AI and high-performance scientific computing is evident in the fact that both the 2024 Nobel Prizes in Physics and Chemistry were awarded for AI-related research contributions in their respective fields. KAIST researchers have dramatically reduced the computation time of highly sophisticated quantum mechanical computer simulations by using a novel AI approach to predict atomic-level chemical bonding information distributed in 3D space.

KAIST (President Kwang-Hyung Lee) announced on the 30th of October that Professor Yong-Hoon Kim's team from the School of Electrical Engineering has developed a computational methodology based on a 3D computer vision artificial neural network. The methodology bypasses the complex algorithms of the atomic-level quantum mechanical calculations that are traditionally run on supercomputers to derive the properties of materials.

< Figure 1. Materials simulations draw on various methodologies: quantum mechanical calculations at the nanometer (nm) scale, classical mechanical force fields at scales of tens to hundreds of nanometers, continuum dynamics calculations at the macroscopic scale, and multiscale calculations that combine simulations at different scales. Combined with informatics techniques, these simulations already play a key role across a wide range of basic research and application development. Recently there have been active efforts to introduce machine learning to radically accelerate simulations, but such efforts remain limited for quantum mechanical electronic structure calculations, which form the basis of larger-scale simulations. >

Quantum mechanical density functional theory (DFT)* calculations using supercomputers have become an essential, standard tool in a wide range of research and development fields, including advanced materials and drug design, because they allow fast and accurate prediction of material properties.

*Density functional theory (DFT): A representative ab initio (first-principles) theory that calculates quantum mechanical properties starting from the atomic level.

However, practical DFT calculations require generating the 3D electron density and solving quantum mechanical equations through a complex, iterative self-consistent field (SCF)* process that must be repeated tens to hundreds of times. This restricts their application to systems of only a few hundred to a few thousand atoms.

*Self-consistent field (SCF): A scientific computing method widely used to solve complex many-body problems that must be described by a number of interconnected simultaneous differential equations.
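The structure of an SCF loop can be sketched with a toy model. The code below is neither DFT nor part of DeepSCF: it is a hypothetical mean-field model on a ring of sites in which the potential depends on the density, so a density is guessed, used to build a Hamiltonian, recomputed from the lowest orbitals, and mixed back in until input and output agree. The hopping `t`, interaction `U`, impurity energy, and mixing parameter `alpha` are illustrative assumptions.

```python
import numpy as np

def toy_scf(n_sites=10, n_electrons=5, t=1.0, U=1.0, alpha=0.3, tol=1e-8, max_iter=500):
    """Toy self-consistent field loop; returns (converged density, iteration count)."""
    # Fixed part of the Hamiltonian: nearest-neighbour hopping on a ring plus one impurity.
    H0 = np.zeros((n_sites, n_sites))
    for i in range(n_sites):
        j = (i + 1) % n_sites
        H0[i, j] = H0[j, i] = -t
    H0[0, 0] = -0.5                                   # attractive impurity on site 0
    density = np.full(n_sites, n_electrons / n_sites) # initial guess: uniform density
    for iteration in range(1, max_iter + 1):
        H = H0 + np.diag(U * density)                 # potential built from the current density
        _, orbitals = np.linalg.eigh(H)               # eigenvectors, eigenvalues ascending
        occupied = orbitals[:, :n_electrons]          # fill the lowest orbitals
        new_density = np.sum(occupied ** 2, axis=1)   # output density
        if np.max(np.abs(new_density - density)) < tol:
            return new_density, iteration             # input and output agree: self-consistent
        density = (1 - alpha) * density + alpha * new_density  # linear mixing for stability
    raise RuntimeError("SCF did not converge")

density, n_iter = toy_scf()
```

Each pass requires a full diagonalization, which is why replacing the loop with a single learned prediction of the converged density can save so much time in real DFT codes.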

Professor Yong-Hoon Kim’s research team asked whether recent advances in AI could be used to bypass the SCF process. The result is the DeepSCF model, which accelerates calculations by learning chemical bonding information distributed in 3D space with neural network algorithms from the field of computer vision.

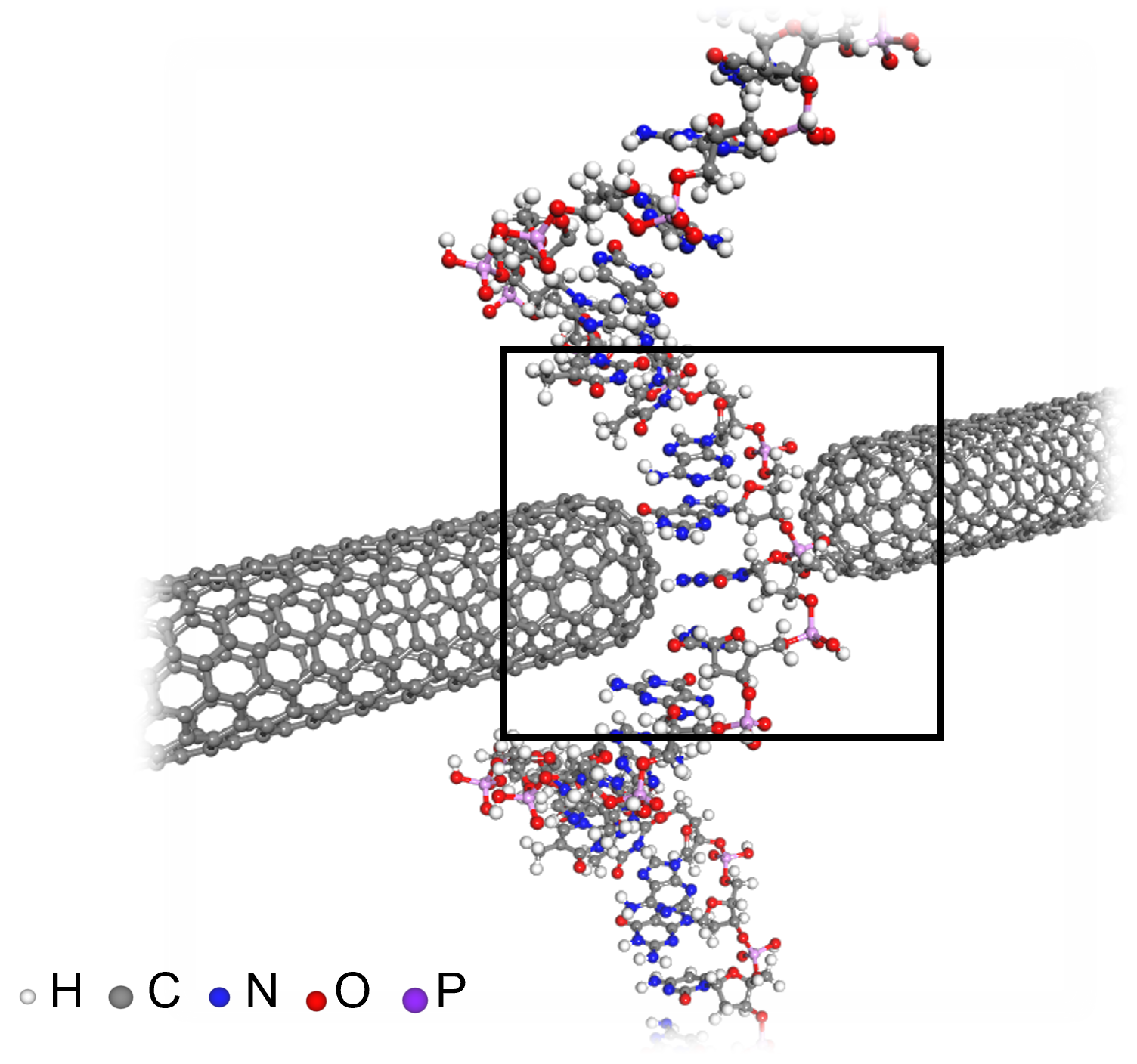

< Figure 2. The DeepSCF methodology developed in this study uses artificial neural network techniques (green box) to accelerate DFT calculations by avoiding the self-consistent field process (orange box) that traditional quantum mechanical electronic structure calculations must perform repeatedly. In the self-consistent field process, the 3D electron density is predicted, the corresponding potential is constructed, and the quantum mechanical Kohn-Sham equations are solved, a cycle repeated tens to hundreds of times. The core idea of DeepSCF is that the residual electron density (δρ), the difference between the electron density (ρ) and the sum of the electron densities of the constituent atoms (ρ0), corresponds to chemical bonding information, so the self-consistent field process can be replaced with a 3D convolutional neural network model. >

The research team focused on the fact that, according to density functional theory, the electron density contains all quantum mechanical information about the electrons, and that the residual electron density (the difference between the total electron density and the sum of the electron densities of the constituent atoms) contains the chemical bonding information. They used this residual density as the target for machine learning.
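As a hedged illustration of this learning target, the sketch below builds a hypothetical two-atom system on a 3D grid, using Gaussian stand-ins for the atomic densities ρ0 and an invented total density ρ in which some charge has moved into the bond. The residual density δρ = ρ - ρ0 then integrates to roughly zero and is positive between the atoms, which is the kind of bonding signal described above. Real DeepSCF densities come from DFT; every number here is made up for illustration.

```python
import numpy as np

def gaussian_density(grid, center, n_electrons, width):
    """Normalized spherical Gaussian density holding n_electrons electrons (toy stand-in)."""
    r2 = np.sum((grid - center) ** 2, axis=-1)
    return n_electrons * np.exp(-r2 / (2 * width ** 2)) / (2 * np.pi * width ** 2) ** 1.5

# 3D real-space grid in arbitrary units; index 20 on each axis is the origin.
axis = np.linspace(-4.0, 4.0, 41)
spacing = axis[1] - axis[0]
grid = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1)

# Sum of isolated-atom densities (rho0) for two hypothetical one-electron atoms.
atoms = [np.array([-0.7, 0.0, 0.0]), np.array([0.7, 0.0, 0.0])]
rho0 = sum(gaussian_density(grid, c, 1.0, 0.6) for c in atoms)

# Invented "total" density: 20% of the atomic charge moved into a bond-centred blob.
rho = 0.8 * rho0 + gaussian_density(grid, np.zeros(3), 0.4, 0.5)

delta_rho = rho - rho0                     # residual density: the machine-learning target
net_charge = delta_rho.sum() * spacing**3  # near zero: bonding redistributes charge
```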

They then adopted a dataset of organic molecules with various chemical bonding characteristics and applied random rotations and deformations to the molecules’ atomic structures to further improve the model’s accuracy and generalization. Finally, the team demonstrated the validity and efficiency of the DeepSCF methodology on large, complex systems.
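Rotation-and-deformation augmentation of atomic structures might look like the following sketch. The exact augmentation used in the paper is not described here, so the uniformly random rotation (via QR decomposition) and the small uniform jitter with a hypothetical `max_displacement` are assumptions. Rotation alone preserves every interatomic distance, while the jitter slightly deforms the structure.

```python
import numpy as np

def random_rotation(rng):
    """Uniformly random 3D rotation matrix via QR decomposition of a Gaussian matrix."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))   # fix column signs so the orthogonal matrix is uniform
    if np.linalg.det(q) < 0:      # turn a reflection into a proper rotation
        q[:, 0] = -q[:, 0]
    return q

def augment(positions, rng, max_displacement=0.05):
    """Rotate a structure about its centroid, then jitter each atom slightly (hypothetical)."""
    centroid = positions.mean(axis=0)
    rotated = (positions - centroid) @ random_rotation(rng).T + centroid
    return rotated + rng.uniform(-max_displacement, max_displacement, size=positions.shape)

rng = np.random.default_rng(0)
molecule = np.array([[0.0, 0.0, 0.0], [0.96, 0.0, 0.0], [-0.24, 0.93, 0.0]])  # toy coordinates
augmented = [augment(molecule, rng) for _ in range(8)]  # 8 perturbed copies
```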

< Figure 3. An example of applying the DeepSCF methodology to a model of a carbon nanotube-based DNA sequencing device (top left). Along with classical mechanical interatomic forces (bottom right), the residual electron density containing chemical bonding information (top right) and quantum mechanical electronic structure properties such as the electronic density of states (DOS) (bottom left) are rapidly predicted with an accuracy matching standard DFT calculations that perform the SCF process. >

Professor Yong-Hoon Kim, who supervised the research, explained that his team had found a way to map quantum mechanical chemical bonding information in a 3D space onto artificial neural networks. He noted, “Since quantum mechanical electron structure calculations underpin materials simulations across all scales, this research establishes a foundational principle for accelerating material calculations using artificial intelligence.”

Ryong-Gyu Lee, a PhD candidate in the School of Electrical Engineering, is the first author of this research, which was published online on October 24 in npj Computational Materials, a prestigious journal in the field of computational materials science. (Paper title: “Convolutional network learning of self-consistent electron density via grid-projected atomic fingerprints”)

This research was conducted with support from the KAIST High-Risk Research Program for Graduate Students and the National Research Foundation of Korea’s Mid-career Researcher Support Program.

2024.10.30

KAIST Employs Image-recognition AI to Determine Battery Composition and Conditions

An international collaborative research team has developed an AI-based image recognition technology that can accurately determine a battery’s elemental composition and the number of charge-discharge cycles it has undergone simply by examining its surface morphology.

KAIST (President Kwang-Hyung Lee) announced on July 2nd that Professor Seungbum Hong from the Department of Materials Science and Engineering, in collaboration with the Electronics and Telecommunications Research Institute (ETRI) and Drexel University in the United States, has developed a method to predict the major elemental composition and charge-discharge state of NCM cathode materials with 99.6% accuracy using convolutional neural networks (CNN)*.

*Convolutional Neural Network (CNN): A type of multi-layer, feed-forward, artificial neural network used for analyzing visual images.
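A minimal forward pass shows what "multi-layer, feed-forward" means for images: a convolution layer slides small kernels over the image, a ReLU keeps positive responses, pooling summarizes each feature map, and a linear layer with softmax turns the features into class probabilities. This NumPy toy with random weights is a hypothetical illustration, far smaller than the network used in the study.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid 2D cross-correlation (what deep-learning libraries call convolution)."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def tiny_cnn(image, kernels, W, b):
    """One conv layer + ReLU + global average pooling + linear layer + softmax."""
    features = np.array([np.maximum(conv2d(image, k), 0.0).mean() for k in kernels])
    logits = W @ features + b
    exp = np.exp(logits - logits.max())   # subtract the max for numerical stability
    return exp / exp.sum()                # class probabilities

rng = np.random.default_rng(0)
image = rng.random((16, 16))              # stand-in for a grayscale SEM patch
kernels = rng.normal(size=(4, 3, 3))      # 4 hypothetical 3x3 filters
W, b = rng.normal(size=(3, 4)), np.zeros(3)  # 3 hypothetical composition classes
probs = tiny_cnn(image, kernels, W, b)
```

In practice the filters and the linear layer are learned by backpropagation from labelled images rather than drawn at random.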

The research team noted that although scanning electron microscopy (SEM) is used in semiconductor manufacturing to inspect wafers for defects, it is rarely used for battery inspection. For batteries, SEM is used only at research sites to analyze particle size, and for degraded battery materials, reliability is inferred from broken particles and the shape of the fractures.

The team reasoned that it would be groundbreaking if automated SEM could be used in battery production, as it is in semiconductor manufacturing, to inspect the surface of the cathode material, determine whether it was synthesized with the desired composition and will have a reliable lifespan, and thereby reduce the defect rate.

< Figure 1. Example images of true cases and their Grad-CAM overlays from the best-trained network. >
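Grad-CAM overlays like those in Figure 1 highlight the image regions that drove a prediction. In the standard Grad-CAM formulation, each feature map of a chosen convolutional layer is weighted by the spatial average of the class score's gradient with respect to that map, the weighted maps are summed, and negative values are clipped. The sketch below assumes the activations and gradients have already been extracted from a trained network; how the study obtained them is not stated here.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap from one convolutional layer.

    activations: array (K, H, W), feature maps A^k of the chosen layer
    gradients:   array (K, H, W), d(class score)/dA^k for the class of interest
    """
    weights = gradients.mean(axis=(1, 2))              # alpha_k: spatially averaged gradients
    cam = np.tensordot(weights, activations, axes=1)   # sum_k alpha_k * A^k -> (H, W)
    cam = np.maximum(cam, 0.0)                         # keep only positive evidence
    return cam / cam.max() if cam.max() > 0 else cam   # scale to [0, 1] for overlaying
```

The resulting low-resolution map is usually upsampled to the input size and blended over the original image.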

The researchers trained a CNN-based AI of the kind used in autonomous vehicles on surface images of battery materials, enabling it to predict the major elemental composition and charge-discharge cycle state of the cathode materials. They found that while the method accurately predicted the composition of materials with additives, it was less accurate at predicting charge-discharge states. The team plans to train the AI further on battery material morphologies produced through different processes and ultimately use it to inspect compositional uniformity and predict the lifespan of next-generation batteries.

Professor Joshua C. Agar, one of the collaborating researchers of the project from the Department of Mechanical Engineering and Mechanics of Drexel University, said, "In the future, artificial intelligence is expected to be applied not only to battery materials but also to various dynamic processes in functional materials synthesis, clean energy generation in fusion, and understanding foundations of particles and the universe."

Professor Seungbum Hong from KAIST, who led the research, stated, "This research is significant as it is the first in the world to develop an AI-based methodology that can quickly and accurately predict the major elemental composition and the state of the battery from the structural data of micron-scale SEM images. The methodology developed in this study for identifying the composition and state of battery materials based on microscopic images is expected to play a crucial role in improving the performance and quality of battery materials in the future."

< Figure 2. Accuracies of CNN Model predictions on SEM images of NCM cathode materials with additives under various conditions. >

This research was conducted by co-first authors Dr. Jimin Oh and Dr. Jiwon Yeom, graduates of the KAIST Department of Materials Science and Engineering, in collaboration with Professor Joshua C. Agar of Drexel University and Dr. Kwang Man Kim of ETRI. It was supported by the National Research Foundation of Korea, the KAIST Global Singularity project, and international collaboration with the US research team. The results were published in the international journal npj Computational Materials on May 4. (Paper title: “Composition and state prediction of lithium-ion cathode via convolutional neural network trained on scanning electron microscopy images”)

2024.07.02

KAIST Employs Image-recognition AI to Determine Battery Composition and Conditions

An international collaborative research team has developed an image recognition technology that can accurately determine the elemental composition and the number of charge and discharge cycles of a battery by examining only its surface morphology using AI learning.

KAIST (President Kwang-Hyung Lee) announced on July 2nd that Professor Seungbum Hong from the Department of Materials Science and Engineering, in collaboration with the Electronics and Telecommunications Research Institute (ETRI) and Drexel University in the United States, has developed a method to predict the major elemental composition and charge-discharge state of NCM cathode materials with 99.6% accuracy using convolutional neural networks (CNN)*.

*Convolutional Neural Network (CNN): A type of multi-layer, feed-forward, artificial neural network used for analyzing visual images.

The research team noted that while scanning electron microscopy (SEM) is used in semiconductor manufacturing to inspect wafer defects, it is rarely used in battery inspections. SEM is used for batteries to analyze the size of particles only at research sites, and reliability is predicted from the broken particles and the shape of the breakage in the case of deteriorated battery materials.

The research team decided that it would be groundbreaking if an automated SEM can be used in the process of battery production, just like in the semiconductor manufacturing, to inspect the surface of the cathode material to determine whether it was synthesized according to the desired composition and that the lifespan would be reliable, thereby reducing the defect rate.

< Figure 1. Example images of true cases and their grad-CAM overlays from the best trained network. >

The researchers trained a CNN-based AI applicable to autonomous vehicles to learn the surface images of battery materials, enabling it to predict the major elemental composition and charge-discharge cycle states of the cathode materials. They found that while the method could accurately predict the composition of materials with additives, it had lower accuracy for predicting charge-discharge states. The team plans to further train the AI with various battery material morphologies produced through different processes and ultimately use it for inspecting the compositional uniformity and predicting the lifespan of next-generation batteries.

Professor Joshua C. Agar, one of the collaborating researchers of the project from the Department of Mechanical Engineering and Mechanics of Drexel University, said, "In the future, artificial intelligence is expected to be applied not only to battery materials but also to various dynamic processes in functional materials synthesis, clean energy generation in fusion, and understanding foundations of particles and the universe."

Professor Seungbum Hong from KAIST, who led the research, stated, "This research is significant as it is the first in the world to develop an AI-based methodology that can quickly and accurately predict the major elemental composition and the state of the battery from the structural data of micron-scale SEM images. The methodology developed in this study for identifying the composition and state of battery materials based on microscopic images is expected to play a crucial role in improving the performance and quality of battery materials in the future."

< Figure 2. Accuracies of CNN Model predictions on SEM images of NCM cathode materials with additives under various conditions. >

This research was conducted by co-first authors Dr. Jimin Oh and Dr. Jiwon Yeom, graduates of KAIST’s Department of Materials Science and Engineering. They collaborated with Professor Joshua C. Agar of Drexel University and Dr. Kwang Man Kim of ETRI. It was supported by the National Research Foundation of Korea, the KAIST Global Singularity project, and an international collaboration with the US research team. The results were published in the international journal npj Computational Materials on May 4. (Paper title: “Composition and state prediction of lithium-ion cathode via convolutional neural network trained on scanning electron microscopy images”)

2024.07.02

KAIST Research Team Breaks Down Musical Instincts with AI

Music, often referred to as the universal language, is known to be a common component in all cultures. Then, could ‘musical instinct’ be something that is shared to some degree despite the extensive environmental differences amongst cultures?

On January 16, a KAIST research team led by Professor Hawoong Jung from the Department of Physics made an announcement about musical instincts. Using an artificial neural network model, the team said, it had identified the principle by which these instincts can emerge in the human brain without special learning.

Many researchers have previously tried to identify the similarities and differences between the music of different cultures and to understand the origin of its universality. A paper published in Science in 2019 revealed that music is produced in all ethnographically distinct cultures and that similar forms of beats and tunes are used. Neuroscientists have also found that a specific part of the human brain, the auditory cortex, is responsible for processing musical information.

Professor Jung’s team used an artificial neural network model to show that cognitive functions for music form spontaneously as a result of processing auditory information from nature, without being taught music. The team used AudioSet, a large-scale collection of sound data provided by Google, to train the artificial neural network to recognize a wide variety of sounds. Interestingly, the team discovered that certain neurons within the network responded selectively to music. In other words, neurons emerged spontaneously that responded minimally to other sounds, such as those of animals, nature, or machines. The same neurons responded strongly to many forms of music, both instrumental and vocal.
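The article does not say exactly how music-selective neurons were identified. The sketch below shows one simple criterion under stated assumptions. For each unit, the mean response to music clips is compared with the mean response to non-music clips using the index (m - o) / (m + o), and units above a threshold are flagged. The names `selectivity_index`, `music_selective_units` and the default `threshold` are hypothetical, and the published study may use a different statistical test.

```python
from statistics import mean


def selectivity_index(music_responses, other_responses):
    """(m - o) / (m + o) for non-negative mean responses; lies in [-1, 1]."""
    m = mean(music_responses)
    o = mean(other_responses)
    if m + o == 0:
        return 0.0
    return (m - o) / (m + o)


def music_selective_units(responses, labels, threshold=0.5):
    """Indices of units whose selectivity index exceeds `threshold`.

    responses: responses[u][s] is the (non-negative) activation of unit u to clip s.
    labels:    labels[s] is True if clip s is music.
    """
    selected = []
    for u, unit in enumerate(responses):
        music = [r for r, is_music in zip(unit, labels) if is_music]
        other = [r for r, is_music in zip(unit, labels) if not is_music]
        if selectivity_index(music, other) > threshold:
            selected.append(u)
    return selected
```

With responses `[[0.9, 0.8, 0.1, 0.0], [0.5, 0.5, 0.5, 0.5], [0.0, 0.1, 0.7, 0.9]]` and labels `[True, True, False, False]`, only unit 0 is flagged.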

The neurons in the artificial neural network model behaved similarly to those in the auditory cortex of a real brain. For example, the artificial neurons responded less to music that had been cut into short segments and rearranged. This indicates that the spontaneously generated music-selective neurons encode the temporal structure of music. This property was not limited to a specific genre but appeared across 25 different genres, including classical, pop, rock, jazz, and electronic.
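The "cut and rearranged" control can be illustrated with a short sketch. An audio signal is split into consecutive segments, and the segments are concatenated in a random order. Every sample survives, so local sound content is unchanged, but longer-range temporal structure is destroyed. The article does not give the segment length, so `segment_len` is a hypothetical parameter, and `shuffle_segments` is an illustrative name.

```python
import random


def shuffle_segments(signal, segment_len, seed=0):
    """Cut `signal` into consecutive chunks of `segment_len` samples and
    return them concatenated in a random (seeded) order.

    Sample values are preserved; only the order of the chunks changes.
    A trailing partial chunk is kept as its own segment.
    """
    segments = [signal[i:i + segment_len] for i in range(0, len(signal), segment_len)]
    rng = random.Random(seed)
    rng.shuffle(segments)
    return [x for seg in segments for x in seg]
```

Under this sketch, a unit that encodes temporal structure should respond less to `shuffle_segments(clip, n)` than to `clip`, and the drop should grow as `n` shrinks.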

< Figure 1. Illustration of the musicality of the brain and artificial neural network (created with DALL·E3 AI based on the paper content) >

Furthermore, suppressing the activity of the music-selective neurons greatly reduced the network's recognition accuracy for other natural sounds. In other words, the neural function that processes musical information also helps process other sounds. This suggests that ‘musical ability’ may be an instinct formed through an evolutionary adaptation to better process sounds from nature.
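The suppression experiment is an ablation: the chosen units are silenced, and the accuracy lost on a task is measured. Below is a minimal sketch of that procedure under stated assumptions. `layer` and `readout` stand in for a hypothetical trained model's hidden layer and classifier head, and the helper names are illustrative.

```python
def silence_units(activations, unit_ids):
    """Copy of a layer's activations with the given units set to zero."""
    silenced = set(unit_ids)
    return [0.0 if i in silenced else a for i, a in enumerate(activations)]


def accuracy(predict, dataset):
    """Fraction of (input, label) pairs for which predict(input) == label."""
    return sum(predict(x) == y for x, y in dataset) / len(dataset)


def ablation_drop(layer, readout, dataset, unit_ids):
    """Accuracy lost on `dataset` when `unit_ids` in the hidden layer are silenced.

    layer:   maps an input to a list of hidden activations (hypothetical model).
    readout: maps hidden activations to a predicted label.
    dataset: (input, label) pairs, e.g. non-music natural sounds.
    """
    baseline = accuracy(lambda x: readout(layer(x)), dataset)
    ablated = accuracy(lambda x: readout(silence_units(layer(x), unit_ids)), dataset)
    return baseline - ablated
```

A fair comparison would also silence an equal number of randomly chosen units. This checks that the drop is specific to the music-selective ones.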

Professor Hawoong Jung, who advised the research, said, “The results of our study imply that evolutionary pressure has contributed to forming the universal basis for processing musical information in various cultures.” On the significance of the research, he explained, “We look forward to this artificially built model with human-like musicality becoming an original model for various applications, including AI music generation, music therapy, and research in musical cognition.” He also noted its limitations: “This research, however, does not consider the developmental process that follows the learning of music, and it must be noted that this is a study of the foundation of musical information processing in early development.”

< Figure 2. An artificial neural network trained in cyberspace to recognize non-musical natural sounds distinguishes between music and non-music. >

This research, conducted by first author Dr. Gwangsu Kim of the KAIST Department of Physics (current affiliation: MIT Department of Brain and Cognitive Sciences) and Dr. Dong-Kyum Kim (current affiliation: IBS), was published in Nature Communications under the title “Spontaneous emergence of rudimentary music detectors in deep neural networks”.

This research was supported by the National Research Foundation of Korea.

2024.01.23

KAIST team develops smart immune system that can pin down malignant tumors

A joint research team led by Professor Jung Kyoon Choi of the KAIST Department of Bio and Brain Engineering and Professor Jong-Eun Park of the KAIST Graduate School of Medical Science and Engineering (GSMSE) announced the development of key technologies for treating cancer with smart immune cells designed through AI and big data analysis. The technology is expected to enable a next-generation immunotherapy that targets tumor cells precisely by having chimeric antigen receptors (CARs) operate through a logic circuit. Professor Hee Jung An of CHA Bundang Medical Center and Professor Hae-Ock Lee of the Catholic University of Korea also took part in the research.

Professor Jung Kyoon Choi’s team built a gene expression database from millions of cells. They used it to develop and validate a deep-learning algorithm that identifies, in the form of a logic circuit, the differences in gene expression patterns between tumor cells and normal cells. CAR immune cells fitted with the logic circuits discovered through this method could distinguish tumor cells from normal cells as a computer would. They therefore showed the potential to attack tumor cells precisely without causing unwanted side effects.

This research, conducted by co-first authors Dr. Joonha Kwon of the KAIST Department of Bio and Brain Engineering and Ph.D. candidate Junho Kang of KAIST GSMSE, was published in Nature Biotechnology on February 16 under the title “Single-cell mapping of combinatorial target antigens for CAR switches using logic gates”.

Immunotherapy is the area of cancer research that has seen the most attempts and advances in recent years. This field of treatment uses the patient’s own immune system to overcome cancer and includes several approaches, such as immune checkpoint inhibitors, cancer vaccines, and cell therapies. In particular, immune cells equipped with chimeric antigen receptors, such as CAR-T or CAR-NK cells, can recognize cancer antigens and destroy cancer cells directly.

Following its success in treating blood cancers, scientists have been trying to extend CAR cell therapy to solid cancers. However, it has proven difficult to develop CAR cells that kill solid cancer cells effectively while minimizing side effects. Accordingly, smarter CAR engineering technologies have been under development in recent years to target cancer cells more effectively. These take the form of computational logic gates such as AND, OR, and NOT.

Against this backdrop, the research team built a large-scale database of cancer and normal cells. The aim was to identify, at the single-cell level, the exact genes that are expressed only in cancer cells. The team then developed an AI algorithm that searches for the combination of genes that best distinguishes cancer cells from normal cells. In particular, this algorithm was used to find a logic circuit that specifically targets cancer cells, through cell-level simulations of all gene combinations. CAR-T cells equipped with logic circuits discovered through this method are expected to distinguish cancer cells from normal cells as a computer would. This would minimize side effects and maximize anticancer efficacy.
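The idea of scoring gene combinations under a logic gate can be made concrete with a small sketch. For each ordered gene pair and each gate, it computes the fraction of tumor cells and of normal cells that a CAR using that gate would recognize. It keeps the combination with the largest gap between the two. Note that this is a plain exhaustive search, swapped in for illustration; it is not the team's deep-learning algorithm. The gate set, the score (tumor fraction minus normal fraction), the binary expression calls and all function names are assumptions.

```python
from itertools import permutations

# Hypothetical gate set; AND-NOT targets cells expressing A but spares cells expressing B.
GATES = {
    "AND": lambda a, b: a and b,
    "OR": lambda a, b: a or b,
    "AND-NOT": lambda a, b: a and not b,
}


def targeted_fraction(cells, gene_a, gene_b, gate):
    """Fraction of cells a CAR with this gate would recognize.

    cells: list of dicts mapping gene name -> True if the gene is expressed in that cell.
    """
    hits = sum(GATES[gate](c.get(gene_a, False), c.get(gene_b, False)) for c in cells)
    return hits / len(cells)


def best_gate(tumor_cells, normal_cells, genes):
    """Score every ordered gene pair under every gate and return
    (gene_a, gene_b, gate, score), where score is the fraction of tumor cells
    hit minus the fraction of normal cells hit."""
    best = None
    # Ordered pairs, because AND-NOT is asymmetric in its two inputs.
    for a, b in permutations(genes, 2):
        for gate in GATES:
            score = (targeted_fraction(tumor_cells, a, b, gate)
                     - targeted_fraction(normal_cells, a, b, gate))
            if best is None or score > best[3]:
                best = (a, b, gate, score)
    return best
```

With single-cell data, each dict would come from thresholding one cell's expression profile. The exhaustive loop grows quadratically with the number of candidate genes.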

Dr. Joonha Kwon, a co-first author of the paper, said, “This research suggests a new method that has not been tried before. What is particularly noteworthy is the process by which we found the optimal CAR cell circuit through simulations of millions of individual tumor and normal cells.” He added, “This is an innovative technology that applies AI and computer logic circuits to immune cell engineering. It should contribute greatly to extending CAR therapy, which is already used successfully for blood cancers, to solid cancers as well.”

This research was funded by the Original Technology Development Project and Research Program for Next Generation Applied Omic of the Korea Research Foundation.

Figure 1. A schematic diagram of manufacturing and administration process of CAR therapy and of cancer cell-specific dual targeting using CAR.

Figure 2. Deep learning (convolutional neural networks, CNNs) algorithm for selection of dual targets based on gene combination (left) and algorithm for calculating expressing cell fractions by gene combination according to logical circuit (right).

2023.03.09

Professor Jae-Woong Jeong Receives Hyonwoo KAIST Academic Award

Professor Jae-Woong Jeong from the School of Electrical Engineering was selected for the Hyonwoo KAIST Academic Award, funded by the HyonWoo Cultural Foundation (Chairman Soo-il Kwak, honorary professor at Seoul National University Business School).

The Hyonwoo KAIST Academic Award, first presented in 2021, was established with donations from Chairman Soo-il Kwak of the HyonWoo Cultural Foundation to reward KAIST scholars with outstanding academic achievements.

Each year, following rigorous evaluation by the selection committee of the HyonWoo Cultural Foundation and the faculty award recommendation board, KAIST selects one faculty member whose academic achievements represent the school. The recipient is presented with a plaque and 100 million won.

Professor Jae-Woong Jeong, this year’s recipient, developed the first IoT-based system for wirelessly and remotely controlling brain neural networks to help overcome brain diseases, and has been leading the field. The research was published in 2021 in Nature Biomedical Engineering, one of the world’s leading scientific journals. It has been recognized as a novel technology that offers a new vision for automating brain research and disease treatment. The study, led by Professor Jeong’s research team, was part of the KAIST College of Engineering Global Initiative Interdisciplinary Research Project. It was conducted jointly with Washington University School of Medicine as an international research collaboration. The technology was featured more than 60 times in domestic and international media, including Medical Xpress, MBC News, and Maeil Business News.

Professor Jeong has also developed a wirelessly rechargeable soft brain implant, with the results published in Nature Communications. He has thereby opened a new paradigm for semi-permanent implantable devices and continues to produce unprecedented research achievements.

2022.06.13

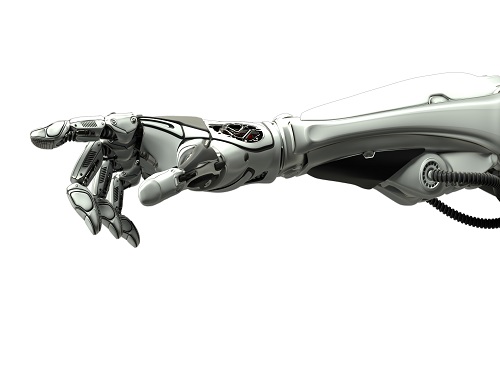

Decoding Brain Signals to Control a Robotic Arm

Advanced brain-machine interface system successfully interprets arm movement directions from neural signals in the brain

Researchers have developed a mind-reading system that decodes neural signals from the brain during arm movement. The method, described in the journal Applied Soft Computing, could allow a person to control a robotic arm through a brain-machine interface (BMI).

A BMI is a device that translates nerve signals into commands to control a machine, such as a computer or a robotic limb. There are two main techniques for monitoring neural signals in BMIs: electroencephalography (EEG) and electrocorticography (ECoG).

EEG records signals from electrodes placed on the surface of the scalp. It is widely used because it is non-invasive, relatively cheap, safe, and easy to use. However, EEG has low spatial resolution and picks up irrelevant neural signals, which makes it difficult to interpret an individual's intentions from EEG recordings.

ECoG, on the other hand, is an invasive method in which electrodes are placed directly on the surface of the cerebral cortex, beneath the skull. Compared with EEG, ECoG can monitor neural signals with much higher spatial resolution and less background noise. However, this technique has several drawbacks.

“The ECoG is primarily used to find potential sources of epileptic seizures, meaning the electrodes are placed in different locations for different patients and may not be in the optimal regions of the brain for detecting sensory and movement signals,” explained Professor Jaeseung Jeong, a brain scientist at KAIST. “This inconsistency makes it difficult to decode brain signals to predict movements.”

To overcome these problems, Professor Jeong’s team developed a new method for decoding ECoG neural signals during arm movement. The system combines two components. The first is an ‘echo-state network’, a machine-learning model for analysing and predicting neural signals. The second is a mathematical probability model based on the Gaussian distribution.
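To show what an echo-state network does, here is a minimal sketch. A fixed, random recurrent "reservoir" is driven by the input sequence, x(t) = tanh(W_in u(t) + W x(t-1)), and only a readout on top of the reservoir state is trained. The paper title mentions a "Gaussian readout". In the sketch, that readout is assumed to be a class-conditional diagonal Gaussian classifier (Gaussian naive Bayes), which may differ from the authors' design. The input format (one feature vector per time step, e.g. per-channel ECoG features), the sizes and all class names are hypothetical.

```python
import math
import random


class EchoStateNetwork:
    """Random, untrained recurrent reservoir: x(t) = tanh(W_in u(t) + W x(t-1))."""

    def __init__(self, n_inputs, n_reservoir, spectral_scale=0.9, seed=0):
        rng = random.Random(seed)
        self.w_in = [[rng.uniform(-1, 1) for _ in range(n_inputs)]
                     for _ in range(n_reservoir)]
        w = [[rng.uniform(-1, 1) for _ in range(n_reservoir)] for _ in range(n_reservoir)]
        # Scale so the largest absolute row sum equals spectral_scale; this bounds the
        # spectral radius by spectral_scale (< 1), a common stability heuristic.
        max_row = max(sum(abs(v) for v in row) for row in w)
        self.w = [[v * spectral_scale / max_row for v in row] for row in w]
        self.n = n_reservoir

    def final_state(self, inputs):
        """Run the reservoir over a sequence of input vectors; return the last state."""
        x = [0.0] * self.n
        for u in inputs:
            x = [math.tanh(sum(wi * ui for wi, ui in zip(self.w_in[i], u))
                           + sum(wij * xj for wij, xj in zip(self.w[i], x)))
                 for i in range(self.n)]
        return x


class GaussianReadout:
    """Assumed readout: one diagonal Gaussian per class over reservoir states."""

    def fit(self, states, labels, var_floor=1e-6):
        self.params = {}
        for c in set(labels):
            xs = [s for s, y in zip(states, labels) if y == c]
            mu = [sum(col) / len(xs) for col in zip(*xs)]
            var = [max(var_floor, sum((v - m) ** 2 for v in col) / len(xs))
                   for col, m in zip(zip(*xs), mu)]
            self.params[c] = (mu, var)
        return self

    def predict(self, state):
        """Class whose Gaussian gives `state` the highest log-likelihood."""
        def log_lik(mu, var):
            return -0.5 * sum(math.log(2 * math.pi * s2) + (x - m) ** 2 / s2
                              for x, m, s2 in zip(state, mu, var))
        return max(self.params, key=lambda c: log_lik(*self.params[c]))
```

Only the readout is fitted, which keeps training cheap. In a real decoder, reservoir states from many trials per movement direction would be used to fit one Gaussian per direction.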

In the study, the researchers recorded ECoG signals from four individuals with epilepsy while they were performing a reach-and-grasp task. Because the ECoG electrodes were placed according to the potential sources of each patient’s epileptic seizures, only 22% to 44% of the electrodes were located in the regions of the brain responsible for controlling movement.

During the movement task, the participants were given visual cues, either by placing a real tennis ball in front of them, or via a virtual reality headset showing a clip of a human arm reaching forward in first-person view. They were asked to reach forward, grasp an object, then return their hand and release the object, while wearing motion sensors on their wrists and fingers. In a second task, they were instructed to imagine reaching forward without moving their arms.

The researchers monitored the signals from the ECoG electrodes during real and imaginary arm movements, and tested whether the new system could predict the direction of this movement from the neural signals. They found that the novel decoder successfully classified arm movements in 24 directions in three-dimensional space, both in the real and virtual tasks, and that the results were at least five times more accurate than chance. They also used a computer simulation to show that the novel ECoG decoder could control the movements of a robotic arm.
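For scale, assume 24 equally likely directions. A random guess is then correct about 4% of the time, so "at least five times more accurate than chance" corresponds to an accuracy of roughly 21% or more. The article does not report the exact accuracies, and this reading treats "five times" as a ratio of 5 to chance.

```latex
p_{\text{chance}} = \frac{1}{24} \approx 4.2\%, \qquad
5 \times p_{\text{chance}} = \frac{5}{24} \approx 20.8\%
```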

Overall, the results suggest that the new machine-learning-based BMI system successfully used ECoG signals to interpret the direction of intended movements. The next steps will be to improve the accuracy and efficiency of the decoder. In the future, it could be used in a real-time BMI device to help people with movement or sensory impairments.

This research was supported by the KAIST Global Singularity Research Program of 2021, Brain Research Program of the National Research Foundation of Korea funded by the Ministry of Science, ICT, and Future Planning, and the Basic Science Research Program through the National Research Foundation of Korea funded by the Ministry of Education.

-Publication
Hoon-Hee Kim, Jaeseung Jeong, “An electrocorticographic decoder for arm movement for brain-machine interface using an echo state network and Gaussian readout,” Applied Soft Computing, online December 31, 2021 (doi.org/10.1016/j.asoc.2021.108393)

-Profile
Professor Jaeseung Jeong
Department of Bio and Brain Engineering
College of Engineering
KAIST

2022.03.18

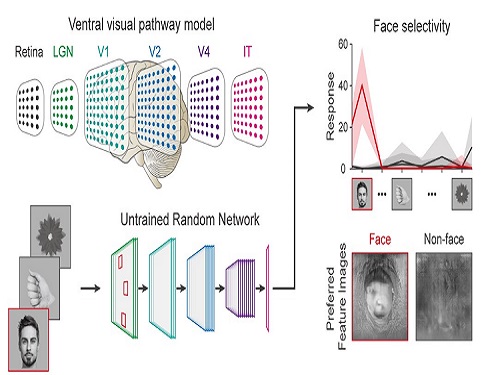

Face Detection in Untrained Deep Neural Networks

A KAIST team shows that primitive visual selectivity of faces can arise spontaneously in completely untrained deep neural networks

Researchers have found that higher visual cognitive functions can arise spontaneously in untrained neural networks. A KAIST research team led by Professor Se-Bum Paik from the Department of Bio and Brain Engineering has shown that visual selectivity for facial images can arise even in completely untrained deep neural networks.

This finding offers new insight into the mechanisms underlying the development of cognitive functions in both biological and artificial neural networks, and it advances our understanding of the origin of early brain functions that precede sensory experience.

The study, published in Nature Communications on December 16, demonstrates that neuronal activity selective to facial images arises in randomly initialized deep neural networks in the complete absence of learning, and that this activity shares characteristics with face-selective responses observed in biological brains.

The ability to identify and recognize faces is crucial for social behavior, and it is thought to originate from neuronal tuning at the single-neuron or multi-neuron level. Neurons that selectively respond to faces are observed in young animals of various species, which has fueled an intense debate over whether face-selective neurons can arise innately in the brain or whether they require visual experience.

Using a model neural network that captures properties of the ventral stream of the visual cortex, the research team found that face-selectivity can emerge spontaneously from random feedforward wiring in untrained deep neural networks. The team showed that the characteristics of this innate face-selectivity are comparable to those of face-selective neurons in the brain, and that this spontaneous neuronal tuning for faces enables the network to perform face detection tasks.
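The toy Python sketch below shows what probing an untrained network for face-selective units could look like. Two randomly initialized ReLU layers are fixed and never trained. Each unit's responses to two stimulus sets are then compared with a d′-style selectivity index. All of these choices are hypothetical and made only for illustration: the layer sizes, the Gaussian initialization, the index and the threshold. They are not the study's ventral-stream model or its statistical criterion. The stimulus vectors in the usage lines are random placeholders, not face images, so no particular result should be read into them.

```python
import math
import random


def random_relu_layer(n_in, n_out, rng):
    """Untrained layer: Gaussian random weights that are never updated (hypothetical sizes)."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return lambda x: [max(0.0, sum(w * v for w, v in zip(row, x))) for row in weights]


def selectivity_index(preferred, other):
    """d'-style index: difference of means divided by the pooled standard deviation."""
    mean_p = sum(preferred) / len(preferred)
    mean_o = sum(other) / len(other)
    var_p = sum((v - mean_p) ** 2 for v in preferred) / len(preferred)
    var_o = sum((v - mean_o) ** 2 for v in other) / len(other)
    pooled = math.sqrt((var_p + var_o) / 2)
    return 0.0 if pooled == 0 else (mean_p - mean_o) / pooled


def selective_units(network, face_images, object_images, threshold=1.0):
    """Indices of units whose face-vs-object index exceeds the (assumed) threshold."""
    face_resp = [network(img) for img in face_images]
    obj_resp = [network(img) for img in object_images]
    n_units = len(face_resp[0])
    return [u for u in range(n_units)
            if selectivity_index([r[u] for r in face_resp],
                                 [r[u] for r in obj_resp]) > threshold]


# Usage with placeholder stimuli (random vectors standing in for images).
rng = random.Random(0)
layer1 = random_relu_layer(64, 32, rng)
layer2 = random_relu_layer(32, 16, rng)


def network(x):
    """Two-layer untrained feedforward network."""
    return layer2(layer1(x))


faces = [[rng.random() for _ in range(64)] for _ in range(10)]
objects = [[rng.random() for _ in range(64)] for _ in range(10)]
units = selective_units(network, faces, objects)
```

The key point the sketch mirrors is that no weight is ever updated. Any selectivity the index reports comes entirely from the random feedforward wiring and the statistics of the inputs.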

These results imply a possible scenario in which the random feedforward connections that develop in early, untrained networks may be sufficient for initializing primitive visual cognitive functions.

Professor Paik said, “Our findings suggest that innate cognitive functions can emerge spontaneously from the statistical complexity embedded in the hierarchical feedforward projection circuitry, even in the complete absence of learning.”

He continued, “Our results provide a broad conceptual advance as well as advanced insight into the mechanisms underlying the development of innate functions in both biological and artificial neural networks, which may unravel the mystery of the generation and evolution of intelligence.” This work was supported by the National Research Foundation of Korea (NRF) and by the KAIST singularity research project.

-Publication: Seungdae Baek, Min Song, Jaeson Jang, Gwangsu Kim, and Se-Bum Paik, “Face detection in untrained deep neural networks,” Nature Communications 12, 7328, December 16, 2021

(https://doi.org/10.1038/s41467-021-27606-9)

-Profile: Professor Se-Bum Paik, Visual System and Neural Network Laboratory, Program of Brain and Cognitive Engineering, Department of Bio and Brain Engineering, College of Engineering, KAIST

2021.12.21

KAIST ISPI Releases Report on the Global AI Innovation Landscape

Providing key insights for building a successful AI ecosystem

The KAIST Innovation Strategy and Policy Institute (ISPI) has released a report on the global innovation landscape of artificial intelligence in collaboration with Clarivate Plc. The report shows that AI has become a key technology and that cross-industry learning is an important driver of AI innovation. It also stresses that the quality of innovation, not its volume, is a critical success factor in technological competitiveness.

Key findings of the report include:

• Neural networks and machine learning have been unrivaled in terms of scale and growth (a growth rate of more than 46%), while most other AI technologies show growth rates of more than 20%.

• Although Mainland China has shown the highest growth rate in terms of AI inventions, the influence of Chinese AI is relatively low. In contrast, the United States holds a leading position in AI-related inventions in terms of both quantity and influence.

• The U.S. and Canada have built an industry-oriented AI technology development ecosystem through organic cooperation with both academia and government. Mainland China and South Korea, by contrast, have government-driven AI technology development ecosystems with relatively low-quality output from the sector.

• The U.S., the U.K., and Canada have a relatively high proportion of inventions in robotics and autonomous control, whereas Mainland China and South Korea are making their progress in machine learning and neural networks. Each country or region produces high-quality inventions in its predominant AI fields, while the U.S. has produced high-impact inventions in almost all AI fields.

“The driving forces in building a sustainable AI innovation ecosystem are important national strategies. A country’s future AI capabilities will be determined by how quickly and robustly it develops its own AI ecosystem and how well it transforms the existing industry with AI technologies. Countries that build a successful AI ecosystem have the potential to accelerate growth while absorbing the AI capabilities of other countries. AI talents are already moving to countries with excellent AI ecosystems,” said Wonjoon Kim, Director of the ISPI.

“AI, together with other high-tech IT technologies including big data and the Internet of Things, is accelerating the digital transformation by leading an intelligent hyper-connected society and enabling the convergence of technology and business. With the rapid growth of AI innovation, AI applications are also expanding in various ways across industries and in our lives,” added Justin Kim, Special Advisor at the ISPI and a co-author of the report.

2021.12.21

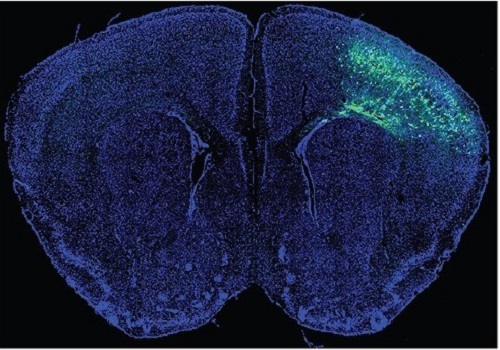

A Mechanism Underlying Most Common Cause of Epileptic Seizures Revealed

An interdisciplinary study shows that neurons carrying somatic mutations in MTOR can lead to focal epileptogenesis via non-cell-autonomous hyperexcitability of nearby nonmutated neurons

During fetal development, cells must migrate to the outer edge of the brain to form critical connections for information transfer and regulation in the body. When even a few cells fail to move to the correct location, the neurons become disorganized, resulting in focal cortical dysplasia. This condition is the most common cause of medication-resistant seizures in children and the second most common cause in adults.

Now, an interdisciplinary team studying neurogenetics, neural networks, and neurophysiology at KAIST has revealed how dysfunctions in even a small percentage of cells can cause disorder across the entire brain. They published their results on June 28 in Annals of Neurology.

The work builds on a previous finding, also by KAIST scientists, that focal cortical dysplasia is caused by mutations affecting mTOR, a pathway that regulates signaling between neurons in the brain.

“Even when only 1 to 2% of neurons carry mutations in the mTOR signaling pathway, which regulates cell signaling in the brain, they have been found to induce seizures in animal models of focal cortical dysplasia,” said Professor Jong-Woo Sohn from the Department of Biological Sciences. “The main challenge of this study was to explain how nearby non-mutated neurons become hyperexcitable.”

Initially, the researchers hypothesized that the mutated cells affected the number of excitatory and inhibitory synapses in all neurons, mutated or not. These neural gates can trigger or halt activity, respectively, in other neurons. Seizures are a result of extreme activity, called hyperexcitability. If the mutated cells upended this balance in favor of excitatory synapses, the researchers reasoned, the neurons would be more susceptible to hyperexcitability and, as a result, seizures.

“Contrary to our expectations, the synaptic input balance was not changed in either the mutated or non-mutated neurons,” said Professor Jeong Ho Lee from the Graduate School of Medical Science and Engineering. “We turned our attention to a protein overproduced by mutated neurons.”