Decoding Fear: KAIST Identifies An Affective Brain Circuit Crucial for Fear Memory Formation by Non-nociceptive Threat Stimulus

Fear memories can form in the brain following exposure to threatening situations such as natural disasters, accidents, or violence. When these memories become excessive or distorted, they can lead to severe mental health disorders, including post-traumatic stress disorder (PTSD), anxiety disorders, and depression. However, the mechanisms underlying fear memory formation triggered by affective pain rather than direct physical pain have remained largely unexplored – until now.

A KAIST research team has identified, for the first time, a brain circuit specifically responsible for forming fear memories in the absence of physical pain, marking a significant advance in understanding how psychological distress is processed and drives fear memory formation in the brain. This discovery opens the door to the development of targeted treatments for trauma-related conditions by addressing the underlying neural pathways.

< Photo 1. (from left) Professor Jin-Hee Han, Dr. Junho Han and Ph.D. Candidate Boin Suh of the Department of Biological Sciences >

KAIST (President Kwang-Hyung Lee) announced on May 15th that the research team led by Professor Jin-Hee Han in the Department of Biological Sciences has identified the pIC–PBN circuit*, a key neural pathway involved in forming fear memories triggered by psychological threats in the absence of physical pain. The work was conducted in mice.

*pIC–PBN circuit: A newly identified descending neural pathway from the posterior insular cortex (pIC) to the lateral parabrachial nucleus (PBN), specialized for transmitting psychological threat information.

Traditionally, the lateral parabrachial nucleus (PBN) has been recognized as a critical part of the ascending pain pathway, receiving pain signals from the spinal cord. However, this study reveals a previously unknown role for the PBN in processing fear induced by non-painful psychological stimuli, fundamentally changing our understanding of its function in the brain.

This work is considered the first experimental evidence that 'emotional distress' and 'physical pain' are processed through different neural circuits to form fear memories, making it a significant contribution to the field of neuroscience. It clearly demonstrates the existence of a dedicated pathway (pIC-PBN) for transmitting emotional distress.

The study's first author, Dr. Junho Han, shared the personal motivation behind this research: “Our dog, Lego, is afraid of motorcycles. He has never actually been hit by one, but ever since a motorbike almost ran into him, just hearing the sound triggers a fearful response. Humans react similarly. Even without being personally involved in an accident, a near-miss or exposure to alarming media can create lasting fear memories, which may eventually lead to PTSD.”

He continued, “Until now, fear memory research has mainly relied on experimental models involving physical pain. However, many real-world human fears arise from psychological threats rather than from direct physical harm. Despite this, little was known about the brain circuits that process these psychological threats and drive fear memory formation.”

To investigate this, the research team developed a novel fear conditioning model that utilizes visual threat stimuli instead of electrical shocks. In this model, mice were exposed to a rapidly expanding visual disk on a ceiling screen, simulating the threat of an approaching predator. This approach allowed the team to demonstrate that fear memories can form in response to a non-nociceptive, psychological threat alone, without the need for physical pain.
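The looming stimulus itself is simple to describe in code. As a minimal sketch, assuming a disk whose radius grows linearly during one short sweep, the function below returns the radius to draw on each video frame. The function name `looming_radii` and every number in the example (start and end radius, duration, frame rate) are hypothetical and are not taken from the study.

```python
def looming_radii(r_start: float, r_end: float, duration_s: float, fps: int) -> list[float]:
    """Radius of the disk on each frame of one linear expansion (hypothetical stimulus)."""
    n_frames = max(1, round(duration_s * fps))
    if n_frames == 1:
        return [r_end]
    # Interpolate so the first frame is exactly r_start and the last is exactly r_end.
    return [r_start + (r_end - r_start) * i / (n_frames - 1) for i in range(n_frames)]


# Hypothetical sweep: radius 2 -> 20 (arbitrary screen units) over 0.25 s at 60 frames/s.
radii = looming_radii(2.0, 20.0, 0.25, 60)
print(len(radii), radii[0], radii[-1])  # 15 2.0 20.0
```

A linear ramp is only the simplest choice. An object approaching at constant speed produces an apparent size that grows faster and faster as it nears, so an actual experiment may use a different expansion profile.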

< Figure 1. Artificial activation of the posterior insular cortex (pIC) to lateral parabrachial nucleus (PBN) neural circuit induces anxiety-like behaviors and fear memory formation in mice. >

Using advanced chemogenetic and optogenetic techniques, the team precisely controlled neuronal activity, revealing that the lateral parabrachial nucleus (PBN) is essential for forming fear memories in response to visual threats. They further traced the origin of these signals to the posterior insular cortex (pIC), a region known to process negative emotions and pain, and confirmed a direct connection between the two areas.

The study also showed that inhibiting the pIC–PBN circuit significantly reduced fear memory formation in response to visual threats, without affecting innate fear responses or physical pain-based learning. Conversely, artificially activating this circuit alone was sufficient to drive fear memory formation, confirming its role as a key pathway for processing psychological threat information.

< Figure 2. Schematic diagram of brain neural circuits transmitting emotional & physical pain threat signals. Visual threat stimuli do not involve physical pain but can create an anxious state and form fear memory through the affective pain signaling pathway. >

Professor Jin-Hee Han commented, “This study lays an important foundation for understanding how emotional distress-based mental disorders, such as PTSD, panic disorder, and anxiety disorder, develop, and opens new possibilities for targeted treatment approaches.”

The findings, authored by Dr. Junho Han (first author), Ph.D. candidate Boin Suh (second author), and Dr. Jin-Hee Han (corresponding author) of the Department of Biological Sciences, were published online in the international journal Science Advances on May 9, 2025.

※ Paper Title: A top-down insular cortex circuit crucial for non-nociceptive fear learning. Science Advances (https://doi.org/10.1101/2024.10.14.618356)

※ Author Information: Junho Han (first author), Boin Suh (second author), and Jin-Hee Han (corresponding author)

This research was supported by grants from the National Research Foundation of Korea (NRF-2022M3E5E8081183 and NRF-2017M3C7A1031322).

2025.05.15

KAIST researchers developed a novel ultra-low power memory for neuromorphic computing

A team of Korean researchers has developed a new memory device that, thanks to its low processing cost and ultra-low power consumption, could replace existing memory or be used to implement neuromorphic computing in next-generation artificial intelligence hardware.

KAIST (President Kwang-Hyung Lee) announced on April 4th that Professor Shinhyun Choi's research team in the School of Electrical Engineering has developed a next-generation phase change memory* device featuring ultra-low-power consumption that can replace DRAM and NAND flash memory.

☞ Phase change memory: A memory device that stores and/or processes information by using heat to switch a material between amorphous and crystalline states, thereby changing its electrical resistance.
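The definition above can be made concrete with a toy two-state model: a SET pulse heats the cell above its crystallization temperature, leaving it crystalline (low resistance); a RESET pulse melts it and the rapid quench leaves it amorphous (high resistance); and a read compares the resistance with a threshold. This is a minimal sketch, not the team's device model. The class name `PhaseChangeCell` and all temperatures, resistances, and the read threshold are hypothetical round numbers chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical illustrative constants (not device data from the study).
T_CRYSTALLIZE_C = 150.0    # heating above this, but below melting, crystallizes the cell
T_MELT_C = 600.0           # heating above this melts the cell; a fast quench leaves it amorphous
R_CRYSTALLINE_OHM = 1e4    # low-resistance state, read as 1
R_AMORPHOUS_OHM = 1e6      # high-resistance state, read as 0
R_READ_THRESHOLD_OHM = 1e5


@dataclass
class PhaseChangeCell:
    amorphous: bool = True

    def apply_pulse(self, peak_temp_c: float) -> None:
        """Update the phase according to the peak temperature reached by a heating pulse."""
        if peak_temp_c >= T_MELT_C:
            self.amorphous = True    # RESET: melt, then quench into the amorphous phase
        elif peak_temp_c >= T_CRYSTALLIZE_C:
            self.amorphous = False   # SET: anneal into the crystalline phase
        # Below the crystallization temperature the state is kept (non-volatile).

    def resistance(self) -> float:
        return R_AMORPHOUS_OHM if self.amorphous else R_CRYSTALLINE_OHM

    def read_bit(self) -> int:
        return 1 if self.resistance() < R_READ_THRESHOLD_OHM else 0


cell = PhaseChangeCell()
cell.apply_pulse(300.0)   # SET pulse
print(cell.read_bit())    # 1
cell.apply_pulse(700.0)   # RESET pulse
print(cell.read_bit())    # 0
cell.apply_pulse(50.0)    # low-temperature disturbance: state unchanged
print(cell.read_bit())    # 0
```

Real devices also depend on pulse duration (a RESET needs a fast quench, a SET needs enough time to crystallize); the sketch keeps only the peak-temperature rule.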

Existing phase change memory suffers from problems such as an expensive fabrication process for making highly scaled devices and the substantial power required for operation. To solve these problems, Professor Choi’s research team developed an ultra-low-power phase change memory device by electrically forming a very small, nanometer (nm)-scale phase-changeable filament without expensive fabrication processes. The new device offers both a very low processing cost and ultra-low power consumption.

DRAM, one of the most widely used types of memory, is very fast but volatile: its data disappear when the power is turned off. NAND flash memory, a storage device, has relatively slow read/write speeds, but it is non-volatile and preserves data even when the power is cut off.

Phase change memory, on the other hand, combines the advantages of both DRAM and NAND flash memory, offering high speed as well as non-volatility. For this reason, phase change memory is being highlighted as a next-generation memory that could replace existing memory, and it is being actively researched both as a memory technology and as a neuromorphic computing technology that mimics the human brain.

However, conventional phase change memory devices require a substantial amount of power to operate, which makes it difficult to build practical large-capacity memory products or to realize a neuromorphic computing system. To maximize the thermal efficiency of device operation, previous research focused on reducing power consumption by shrinking the physical size of the device with state-of-the-art lithography. These efforts proved of limited practical value: the improvement in power consumption was minimal, while the cost and difficulty of fabrication increased with each step of scaling.

To solve the power consumption problem of phase change memory, Professor Shinhyun Choi’s research team created a method to electrically form phase change material in an extremely small area, successfully implementing an ultra-low-power phase change memory device that consumes 15 times less power than a conventional phase change memory device fabricated with expensive lithography tools.
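Reading “15 times less power” as the new device drawing one-fifteenth of the conventional device's power, the saving works out to roughly 93%. The short calculation below shows this; the helper name `reduction_percent` and the 150 μW baseline are hypothetical, and only the factor of 15 comes from the article.

```python
def reduction_percent(factor: float) -> float:
    """Percentage saved when a device draws 1/factor of the baseline power."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    return (1.0 - 1.0 / factor) * 100.0


baseline_uW = 150.0            # hypothetical baseline power, not from the study
new_uW = baseline_uW / 15      # the 15x reduction reported in the article
print(new_uW)                              # 10.0
print(round(reduction_percent(15), 1))     # 93.3
```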

< Figure 1. Illustrations of the ultra-low-power phase change memory device developed in this study, and a comparison of its power consumption with that of conventional phase change memory devices. >

Professor Shinhyun Choi expressed strong confidence in the future of this new line of research, saying, “The phase change memory device we have developed is significant as it offers a novel approach to solving the lingering problems of producing memory devices, with greatly improved manufacturing cost and energy efficiency. We expect the results of our study to become a foundation for future electronic engineering, enabling various applications including high-density three-dimensional vertical memory and neuromorphic computing systems, as it opens up the possibility of choosing from a variety of materials.” He added, “I would like to thank the National Research Foundation of Korea and the National NanoFab Center for supporting this research.”

This study, in which See-On Park, a student in the integrated MS-PhD program, and Seokman Hong, a doctoral student in the School of Electrical Engineering at KAIST, participated as first authors, was published on April 4 in the April issue of the renowned international academic journal Nature. (Paper title: Phase-Change Memory via a Phase-Changeable Self-Confined Nano-Filament)

This research was conducted with support from the Next-Generation Intelligent Semiconductor Technology Development Project, PIM AI Semiconductor Core Technology Development (Device) Project, Excellent Emerging Research Program of the National Research Foundation of Korea, and the Semiconductor Process-based Nanomedical Devices Development Project of the National NanoFab Center.

2024.04.04

Using light to throw and catch atoms to open up a new chapter for quantum computing

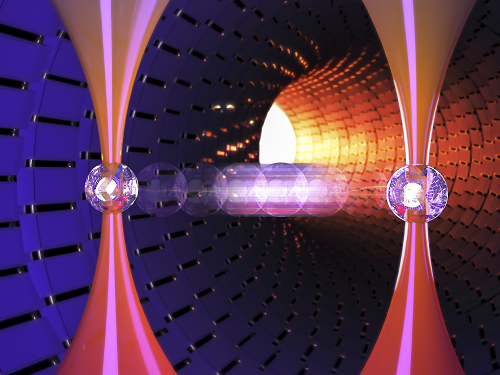

The technology to move and arrange atoms, the most basic components of a quantum computer, is very important to Rydberg quantum computing research. However, to place the atoms at desired locations, they must be captured and transported one by one using a highly focused laser beam, commonly referred to as an optical tweezer, and the quantum information carried by the atoms is likely to change during transport.

KAIST (President Kwang-Hyung Lee) announced on the 27th that a research team led by Professor Jaewook Ahn of the Department of Physics has developed a technology to throw and catch rubidium atoms one by one using laser beams.

The research team developed a method of throwing and catching atoms that minimizes the time the optical tweezers hold the atoms, during which the quantum information the atoms carry may change. The method exploits the fact that rubidium atoms cooled to a very low temperature of 40 μK (40 millionths of a kelvin above absolute zero) respond very sensitively to the electromagnetic force that light exerts near the focal point of the optical tweezers.

The research team accelerated an optical tweezer to give an atom an optical kick toward a target, then caught the flying atom with another optical tweezer to stop it. The atom flew at a speed of 65 cm/s and traveled up to 4.2 μm. Compared with the existing technique of guiding atoms with optical tweezers, throwing and catching atoms eliminates the need to calculate transport paths for the tweezers and makes it easier to fix defects in the atomic arrangement. As a result, the technique is effective for generating and maintaining large atomic arrangements, and when applied to throwing and catching flying-atom qubits, it can be used to study new and more powerful quantum computing methods that presuppose structural changes in the atom arrangement.

“This technology will be used to develop larger and more powerful Rydberg quantum computers,” says Professor Jaewook Ahn. “In a Rydberg quantum computer,” he continues, “atoms are arranged to store quantum information and interact with neighboring atoms through electromagnetic forces to perform quantum computing. Throwing an atom away to quickly reconstruct the quantum array can be an effective way to fix an error in a quantum computer that requires removing or replacing an atom.”

The research, which was conducted by doctoral students Hansub Hwang and Andrew Byun of the Department of Physics at KAIST and Sylvain de Léséleuc, a researcher at the National Institute of Natural Sciences in Japan, was published in the international journal Optica on March 9. (Paper title: Optical tweezers throw and catch single atoms).

This research was carried out with the support of the Samsung Science & Technology Foundation.

<Figure 1> A schematic diagram of the atom throwing and catching technique. The optical tweezer on the left kicks the atom onto a trajectory, and the tweezer on the right catches it and brings it to a stop.
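The numbers reported for the throw allow a quick back-of-the-envelope check. Assuming the atom moves at a roughly constant 65 cm/s (ignoring the acceleration during the kick and the deceleration during the catch), covering 4.2 μm takes about 6.5 μs. For scale, the sketch also estimates the one-dimensional thermal velocity spread, sqrt(k_B T / m), at 40 μK. The article does not name the isotope; rubidium-87 is an assumption here.

```python
import math

K_B = 1.380649e-23            # Boltzmann constant, J/K
AMU = 1.66053906660e-27       # atomic mass constant, kg
M_RB87 = 86.909180 * AMU      # assumption: the atoms are rubidium-87

speed_m_s = 0.65              # 65 cm/s (from the article)
distance_m = 4.2e-6           # 4.2 um (from the article)
temperature_k = 40e-6         # 40 uK (from the article)

# Constant-speed estimate: ignores the kick and catch phases.
flight_time_s = distance_m / speed_m_s

# One-dimensional thermal velocity spread of an ideal gas: sqrt(k_B T / m).
thermal_speed_m_s = math.sqrt(K_B * temperature_k / M_RB87)

print(f"flight time ~ {flight_time_s * 1e6:.1f} us")              # flight time ~ 6.5 us
print(f"1D thermal speed ~ {thermal_speed_m_s * 100:.1f} cm/s")   # 1D thermal speed ~ 6.2 cm/s
```

Under these assumptions, the directed throw speed is roughly ten times the atom's typical thermal speed along one axis.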

2023.03.28

Neuromorphic Memory Device Simulates Neurons and Synapses

Simultaneous emulation of neuronal and synaptic properties promotes the development of brain-like artificial intelligence

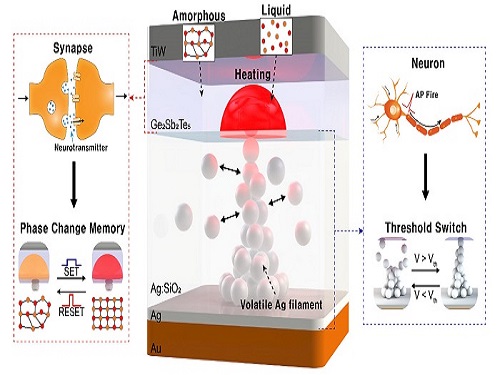

Researchers have reported a nano-sized neuromorphic memory device that emulates neurons and synapses simultaneously in a unit cell, another step toward completing the goal of neuromorphic computing designed to rigorously mimic the human brain with semiconductor devices.

Neuromorphic computing aims to realize artificial intelligence (AI) by mimicking the mechanisms of the neurons and synapses that make up the human brain. Neuromorphic devices have been widely investigated, inspired by cognitive functions of the human brain that current computers cannot provide. However, current Complementary Metal-Oxide Semiconductor (CMOS)-based neuromorphic circuits simply connect artificial neurons and synapses without synergistic interactions, and implementing neurons and synapses together remains a challenge. To address these issues, a research team led by Professor Keon Jae Lee from the Department of Materials Science and Engineering emulated biological working mechanisms by introducing neuron-synapse interactions within a single memory cell, rather than following the conventional approach of electrically connecting separate artificial neuronal and synaptic devices.

Much like commercial graphics cards, the artificial synaptic devices studied previously were often used to accelerate parallel computations, which differs clearly from the operational mechanisms of the human brain. The research team implemented the synergistic interactions between neurons and synapses in the neuromorphic memory device, emulating the mechanisms of a biological neural network. In addition, the developed neuromorphic device can replace complex CMOS neuron circuits with a single device, providing high scalability and cost efficiency.
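One common form of the accelerator-style use of synaptic devices mentioned above is analog vector-matrix multiplication in a crossbar array: each device's conductance stores a weight, input voltages drive the rows, and by Ohm's law and Kirchhoff's current law each column current is the sum of voltage-times-conductance products. The sketch below is an idealized numerical model of that idea with made-up conductances; the function name `crossbar_column_currents` is hypothetical and the code is not taken from the study.

```python
def crossbar_column_currents(voltages: list[float], conductances: list[list[float]]) -> list[float]:
    """Ideal crossbar: I_j = sum_i V_i * G[i][j].

    voltages[i] drives row i; conductances[i][j] is the device at row i, column j.
    Wire resistance, device nonlinearity, and noise are ignored.
    """
    n_rows = len(voltages)
    if n_rows != len(conductances):
        raise ValueError("one voltage per row is required")
    n_cols = len(conductances[0])
    # Each column current sums the per-device currents (Kirchhoff's current law).
    return [sum(voltages[i] * conductances[i][j] for i in range(n_rows)) for j in range(n_cols)]


# Hypothetical 2x3 array: conductances in siemens, voltages in volts.
G = [[1e-6, 2e-6, 0.0],
     [3e-6, 0.0, 1e-6]]
V = [0.2, 0.1]
print(crossbar_column_currents(V, G))
```

Every column is computed at once in hardware, which is what makes such arrays attractive for parallel workloads; the single-cell neuron-synapse design described in the article targets a different goal.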

The human brain consists of a complex network of about 100 billion neurons and 100 trillion synapses. The functions and structures of neurons and synapses can change flexibly in response to external stimuli, adapting to the surrounding environment. The research team developed a neuromorphic device in which short-term and long-term memories coexist, using volatile and non-volatile memory devices that mimic the characteristics of neurons and synapses, respectively. A threshold switch device serves as the volatile memory and a phase-change memory device as the non-volatile memory. The two thin-film devices are integrated without intermediate electrodes, implementing the functional adaptability of neurons and synapses in the neuromorphic memory.

Professor Keon Jae Lee explained, "Neurons and synapses interact with each other to establish cognitive functions such as memory and learning, so simulating both is an essential element for brain-inspired artificial intelligence. The developed neuromorphic memory device also mimics the retraining effect, which allows quick relearning of forgotten information, by implementing a positive feedback effect between neurons and synapses.”
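The coexistence of short-term and long-term memory, and the positive feedback behind faster relearning, can be illustrated with a toy discrete-time model. A volatile "neuron" potential decays between inputs, standing in for the threshold switch. A non-volatile "synaptic" weight persists and grows each time the neuron fires, standing in for the phase-change layer. Because a larger weight makes the next firing easier, the two reinforce each other. This is a conceptual sketch only: it does not model intrinsic plasticity or the device physics in the paper, and the class name, decay factor, threshold, and learning step are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class NeuronSynapseCell:
    weight: float = 0.2          # non-volatile "synaptic" weight (persists between inputs)
    potential: float = 0.0       # volatile "neuronal" state (decays when idle)
    decay: float = 0.5           # hypothetical fraction of potential kept per time step
    threshold: float = 0.5       # hypothetical firing threshold
    learning_step: float = 0.1   # hypothetical weight increase per firing event

    def step(self, input_pulse: float) -> bool:
        """Advance one time step; return True if the neuron fires."""
        self.potential = self.potential * self.decay + input_pulse * self.weight
        if self.potential >= self.threshold:
            self.potential = 0.0                 # the volatile state resets after firing
            self.weight += self.learning_step    # the long-term change is kept in the weight
            return True
        return False


def pulses_until_fire(cell: NeuronSynapseCell, amplitude: float, max_pulses: int = 20) -> int:
    """Apply identical pulses until the cell fires; return how many were needed, or -1."""
    for n in range(1, max_pulses + 1):
        if cell.step(amplitude):
            return n
    return -1


cell = NeuronSynapseCell()
first = pulses_until_fire(cell, 1.5)    # initial learning; the weight rises from 0.2 to 0.3
cell.step(1.0)                          # a sub-threshold input leaves a short-term trace
for _ in range(10):                     # idle period: the volatile trace decays away
    cell.step(0.0)
print(round(cell.potential, 4), round(cell.weight, 2))   # 0.0003 0.3
second = pulses_until_fire(cell, 1.5)   # fewer pulses needed thanks to the stored weight
print(first, second)                    # 3 2
```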

This result entitled “Simultaneous emulation of synaptic and intrinsic plasticity using a memristive synapse” was published in the May 19, 2022 issue of Nature Communications.

-Publication: Sang Hyun Sung, Tae Jin Kim, Hyera Shin, Tae Hong Im, and Keon Jae Lee (2022) “Simultaneous emulation of synaptic and intrinsic plasticity using a memristive synapse,” Nature Communications, May 19, 2022 (DOI: 10.1038/s41467-022-30432-2)

-Profile: Professor Keon Jae Lee, http://fand.kaist.ac.kr

Department of Materials Science and Engineering, KAIST

2022.05.20

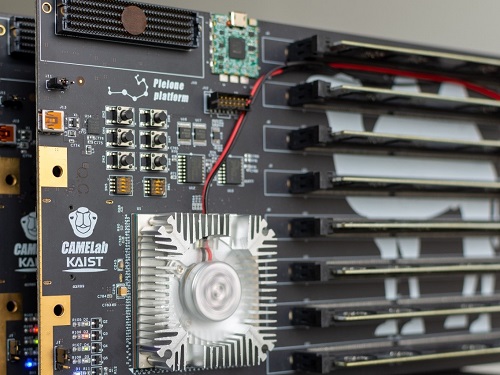

LightPC Presents a Resilient System Using Only Non-Volatile Memory

Lightweight Persistence Centric System (LightPC) ensures both data and execution persistence for energy-efficient full system persistence

A KAIST research team has developed hardware and software technology that ensures both data and execution persistence. The Lightweight Persistence Centric System (LightPC) makes systems resilient against power failures by using only non-volatile memory as main memory.

“We mounted non-volatile memory on a system board prototype and created an operating system to verify the effectiveness of LightPC,” said Professor Myoungsoo Jung. The team confirmed that LightPC kept executing correctly even when power was switched off and on mid-execution, while providing up to eight times more memory, 4.3 times faster application execution, and 73% lower power consumption than traditional systems.

Professor Jung said that LightPC can be utilized in a variety of fields such as data centers and high-performance computing to provide large-capacity memory, high performance, low power consumption, and service reliability.

In general, power failures on legacy systems can lead to the loss of data stored in DRAM-based main memory. Unlike volatile memory such as DRAM, non-volatile memory retains its data without power. Although non-volatile memory consumes less power and offers larger capacity than DRAM, it is typically used for secondary storage because of its lower write performance. For this reason, non-volatile memory is often paired with DRAM. However, modern systems that employ non-volatile memory-based main memory suffer unexpected performance degradation due to their complicated memory microarchitecture.

To make both data and execution persistent in legacy systems, data must be transferred from volatile memory to non-volatile memory. Checkpointing is one possible solution: it periodically transfers the data in preparation for a sudden power failure. While this technology is essential for ensuring high mobility and reliability for users, checkpointing also has serious drawbacks. It takes additional time and power to move data, and it requires a data recovery process as well as a system restart.
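The cost of checkpointing described above can be seen in a few lines: state kept in volatile memory is periodically copied to a persistent store, and after a power failure everything since the last checkpoint is lost and must be redone. This is a minimal, hypothetical sketch; the class, its field names and the checkpoint interval are illustrative and do not represent any real checkpointing system.

```python
import copy


class CheckpointedProcess:
    """Periodic checkpointing sketch: volatile state is copied to a persistent store."""

    def __init__(self, interval: int):
        self.interval = interval                     # steps between checkpoints (illustrative)
        self.state = {"step": 0, "total": 0}         # lives in volatile memory (DRAM analogue)
        self.persistent = copy.deepcopy(self.state)  # survives power loss (NVM analogue)
        self.checkpoints = 0                         # each one costs extra time and power

    def run_step(self) -> None:
        self.state["step"] += 1
        self.state["total"] += self.state["step"]
        if self.state["step"] % self.interval == 0:
            self.persistent = copy.deepcopy(self.state)  # move data to non-volatile memory
            self.checkpoints += 1

    def power_failure(self) -> int:
        """Drop volatile state, restore the last checkpoint, return the steps lost."""
        lost = self.state["step"] - self.persistent["step"]
        self.state = copy.deepcopy(self.persistent)  # recovery after a restart
        return lost


if __name__ == "__main__":
    p = CheckpointedProcess(interval=4)
    for _ in range(10):
        p.run_step()
    print(p.power_failure(), p.state, p.checkpoints)
```

A shorter interval loses less work but performs more copies, which is exactly the time and power trade-off the text attributes to checkpointing.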

To address these issues, the research team developed a processor and memory controller that raise the performance of memory built solely from non-volatile memory. LightPC matches the performance of DRAM by minimizing the internal volatile memory components of the non-volatile memory, exposing the non-volatile memory (PRAM) media directly to the host, and increasing parallelism to serve incoming requests as quickly as possible.

The team also presented operating system technology that quickly makes the execution states of running processes persistent without a checkpointing process. Before transferring data, the operating system idles all program executions so that no execution state or data can be modified, ensuring consistency within a period much shorter than the standard power hold-up time of about 16 milliseconds. When power is restored, the computer revives itself almost immediately and resumes all suspended processes without going through a boot process.
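The "stop, persist, resume" idea can be sketched as follows, assuming all memory is already non-volatile so that freezing processes in place is enough to make their state persistent. The hold-up budget is a parameter (16 ms here, a value commonly cited for ATX power supplies), and the per-process stop cost and all class names are hypothetical; this is not LightPC's actual operating system code.

```python
from dataclasses import dataclass, field


@dataclass
class Process:
    pid: int
    counter: int = 0       # stands in for the process's execution state
    running: bool = True


@dataclass
class PersistentSystem:
    """Sketch of stop-and-resume with all memory treated as non-volatile.

    hold_up_ms and stop_cost_ms are illustrative parameters, not measured values.
    """
    hold_up_ms: float = 16.0
    stop_cost_ms: float = 0.01
    processes: list[Process] = field(default_factory=list)

    def tick(self) -> None:
        for p in self.processes:
            if p.running:
                p.counter += 1

    def on_power_fail(self) -> bool:
        """Idle every process so no state changes; True if done within the budget."""
        elapsed = 0.0
        for p in self.processes:
            p.running = False  # freeze in place; memory contents already persist
            elapsed += self.stop_cost_ms
        return elapsed <= self.hold_up_ms

    def on_power_restore(self) -> None:
        """No boot and no checkpoint restore: just resume the frozen processes."""
        for p in self.processes:
            p.running = True
```

Unlike the checkpointing approach, nothing is copied and nothing is lost: after `on_power_restore`, each process continues from exactly the counter value it had when power failed.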

The researchers will present their work (LightPC: Hardware and Software Co-Design for Energy-Efficient Full System Persistence) at the International Symposium on Computer Architecture (ISCA) 2022 in New York in June. More information is available at the CAMELab website (http://camelab.org).

-Profile: Professor Myoungsoo Jung, Computer Architecture and Memory Systems Laboratory (CAMEL), http://camelab.org, School of Electrical Engineering, KAIST

2022.04.25

CXL-Based Memory Disaggregation Technology Opens Up a New Direction for Big Data Solution Frameworks

A KAIST team’s Compute Express Link (CXL)-based framework provides new insights into memory disaggregation and ensures direct access and high-performance capabilities

A team from the Computer Architecture and Memory Systems Laboratory (CAMEL) at KAIST presented a new Compute Express Link (CXL) solution whose directly accessible, high-performance memory disaggregation opens new directions for big data memory processing. Professor Myoungsoo Jung said the team’s technology significantly improves performance compared with existing remote direct memory access (RDMA)-based memory disaggregation.

CXL is a new dynamic multi-protocol interconnect built on Peripheral Component Interconnect Express (PCIe) and designed to use memory devices and accelerators efficiently. Many enterprise data centers and memory vendors regard it as the next-generation multi-protocol for the era of big data.

Emerging big data applications such as machine learning, graph analytics, and in-memory databases require large memory capacities. However, scaling out memory capacity through a conventional memory interface such as double data rate (DDR) is limited by the number of central processing units (CPUs) and memory controllers. This limitation gave rise to memory disaggregation, which allows a host to connect to another host’s memory or to dedicated memory nodes.

RDMA allows a host to directly access another host’s memory over InfiniBand, a network protocol commonly used in data centers. Today, most memory disaggregation technologies employ RDMA to obtain large memory capacity: a host shares another host’s memory by transferring data between local and remote memory.

Although RDMA-based memory disaggregation provides large memory capacity to a host, it has two critical problems. First, scaling out memory still requires adding an extra CPU, because passive memory such as dynamic random-access memory (DRAM) cannot operate by itself and must be controlled by a CPU. Second, redundant data copies and software fabric interventions in RDMA-based memory disaggregation lengthen access latency. For example, remote memory access latency in RDMA-based memory disaggregation is multiple orders of magnitude longer than local memory access latency.
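One way to see why redundant copies hurt is to count bytes moved. The sketch below assumes a swap-style RDMA design that copies whole 4 KiB pages into a local buffer, and contrasts it with load/store access that fetches only the 64-byte cache lines actually touched. Real RDMA can also issue smaller one-sided reads, and this counts only data volume, not the software overhead the text mentions; both function names are hypothetical.

```python
CACHELINE = 64  # bytes per CPU cache line
PAGE = 4096     # bytes per page (assumed RDMA transfer granularity)


def bytes_moved_rdma(reads: list[int]) -> int:
    """Page-granular sketch: each remote page touched is copied once into local memory."""
    local_pages = set()
    moved = 0
    for addr in reads:
        page = addr // PAGE
        if page not in local_pages:
            local_pages.add(page)
            moved += PAGE  # the whole page crosses the fabric, then is read locally
    return moved


def bytes_moved_cxl(reads: list[int]) -> int:
    """Load/store sketch: each distinct cache line touched is fetched once."""
    return len({addr // CACHELINE for addr in reads}) * CACHELINE


if __name__ == "__main__":
    sparse = [0, 8192, 16384]  # three reads, each on a different page
    print(bytes_moved_rdma(sparse), bytes_moved_cxl(sparse))
```

For sparse accesses such as these, the page-granular path moves 64 times more data per read under the stated assumptions.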

To address these issues, Professor Jung’s team developed a CXL-based memory disaggregation framework that includes CXL-enabled customized CPUs, CXL devices, CXL switches, and CXL-aware operating system modules. The team’s CXL device is a purely passive, directly accessible memory node containing multiple DRAM dual inline memory modules (DIMMs) and a CXL memory controller. Because the CXL memory controller manages the memory in the CXL device, a host can use the memory node without processor or software intervention. The team’s CXL switch scales out a host’s memory capacity by connecting multiple CXL devices hierarchically, allowing hundreds of devices or more. On top of the switches and devices, the team’s CXL-enabled operating system eliminates the redundant data copies and protocol conversions of conventional RDMA, which can significantly reduce access latency to the memory nodes.
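The hierarchical switch idea can be sketched as a tree whose leaves are memory devices, all exposed to the host as one flat physical address range that is decoded to a (device, offset) pair. This is a hypothetical illustration: the port count, tree depth, capacities and function names are made up, and real CXL address routing uses hardware-configured HDM decoders, which this linear lookup does not model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryDevice:
    name: str
    capacity: int  # bytes


def build_fabric(levels: int, ports: int, capacity: int) -> list[MemoryDevice]:
    """Leaf devices of a hypothetical switch tree: ports ** levels devices in total."""
    return [MemoryDevice(f"dev{i}", capacity) for i in range(ports ** levels)]


def decode(addr: int, devices: list[MemoryDevice]) -> tuple[str, int]:
    """Map a host physical address to (device, offset) in one contiguous address space."""
    base = 0
    for dev in devices:
        if addr < base + dev.capacity:
            return dev.name, addr - base
        base += dev.capacity
    raise ValueError("address beyond pooled capacity")


if __name__ == "__main__":
    devs = build_fabric(levels=2, ports=16, capacity=1 << 30)  # 256 x 1 GiB devices
    print(len(devs), decode((1 << 30) + 5, devs))
```

Adding one more switch level multiplies the reachable device count by the port count, which is how a hierarchy reaches hundreds of devices without adding CPUs.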

In a test loading 64-byte (cache-line) data from memory pooling devices, CXL-based memory disaggregation delivered 8.2 times higher data load performance than RDMA-based memory disaggregation, with performance close to that of local DRAM. In the team’s evaluations on big data benchmarks such as a machine learning workload, CXL-based memory disaggregation also showed up to 3.7 times higher performance than prior RDMA-based memory disaggregation technologies.

“Escaping from the conventional RDMA-based memory disaggregation, our CXL-based memory disaggregation framework can provide high scalability and performance for diverse datacenters and cloud service infrastructures,” said Professor Jung. He went on to stress, “Our CXL-based memory disaggregation research will bring about a new paradigm for memory solutions that will lead the era of big data.”

-Profile: Professor Myoungsoo Jung, Computer Architecture and Memory Systems Laboratory (CAMEL), http://camelab.org, School of Electrical Engineering, KAIST

2022.03.16

Study of T Cells from COVID-19 Convalescents Guides Vaccine Strategies

Researchers confirm that most COVID-19 patients in their convalescent stage carry stem cell-like memory T cells for months

A KAIST immunology research team found that most COVID-19 convalescent patients develop and maintain T cell memory for over 10 months, regardless of the severity of their symptoms. In addition, memory T cells proliferate rapidly after encountering their cognate antigen and carry out multiple functions. Considering the self-renewal capacity and multipotency of memory T cells, this study provides new insights for effective vaccine strategies against COVID-19.

COVID-19 is a disease caused by infection with severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2). When patients recover from COVID-19, they develop SARS-CoV-2-specific adaptive immune memory. The adaptive immune system consists of two principal components: B cells, which produce antibodies, and T cells, which eliminate infected cells. The current results suggest that memory T cells will exert their protective function upon re-exposure to SARS-CoV-2.

Recently, the role of memory T cells against SARS-CoV-2 has been gaining attention as neutralizing antibodies wane after recovery. Although memory T cells cannot prevent the infection itself, they play a central role in preventing the severe progression of COVID-19. However, the longevity and functional maintenance of SARS-CoV-2-specific memory T cells remain unknown.

Professor Eui-Cheol Shin and his collaborators investigated the characteristics and functions of stem cell-like memory T cells, which are expected to play a crucial role in long-term immunity. Using cutting-edge immunological techniques, the researchers analyzed the generation of stem cell-like memory T cells and of multi-cytokine-producing polyfunctional memory T cells.

This research is significant because characterizing long-term immunity in COVID-19 convalescent patients provides a benchmark for the long-term persistence of T cell immunity, one of the main goals of future vaccine development, and for evaluating the long-term efficacy of currently available COVID-19 vaccines.

The research team is presently conducting a follow-up study to identify the memory T cell formation and functional characteristics of those who received COVID-19 vaccines, and to understand the immunological effect of COVID-19 vaccines by comparing the characteristics of memory T cells from vaccinated individuals with those of COVID-19 convalescent patients.

PhD candidate Jae Hyung Jung and Dr. Min-Seok Rha, a clinical fellow at Yonsei Severance Hospital, who co-led the study, explained, “Our analysis will enhance the understanding of COVID-19 immunity and establish an index for COVID-19 vaccine-induced memory T cells.”

“This study is the world’s longest longitudinal study on differentiation and functions of memory T cells among COVID-19 convalescent patients. The research on the temporal dynamics of immune responses has laid the groundwork for building a strategy for next-generation vaccine development,” Professor Shin added. This work was supported by the Samsung Science and Technology Foundation and KAIST, and was published in Nature Communications on June 30.

-Publication:

Jung, J.H., Rha, MS., Sa, M. et al. SARS-CoV-2-specific T cell memory is sustained in COVID-19 convalescent patients for 10 months with successful development of stem cell-like memory T cells. Nat Commun 12, 4043 (2021). https://doi.org/10.1038/s41467-021-24377-1

-Profile:

Professor Eui-Cheol Shin

Laboratory of Immunology & Infectious Diseases (http://liid.kaist.ac.kr/)

Graduate School of Medical Science and Engineering

KAIST

2021.07.05

T-GPS Processes a Graph with Trillion Edges on a Single Computer

Trillion-scale graph processing simulation on a single computer presents a new concept of graph processing

A KAIST research team has developed a new technology that can run a graph algorithm on a large-scale graph without storing the graph in main memory or on disk. Named T-GPS (Trillion-scale Graph Processing Simulation) by its developer, Professor Min-Soo Kim of the School of Computing at KAIST, it can process a graph with one trillion edges on a single computer.

Graphs are widely used to represent and analyze real-world objects in many domains such as social networks, business intelligence, biology, and neuroscience. As the number of graph applications grows rapidly, developing and testing new graph algorithms is more important than ever. Many industrial applications now require graph algorithms that process large-scale graphs (e.g., one trillion edges). When developing and testing graph algorithms for such large-scale graphs, a synthetic graph is usually used instead of a real one, because large-scale real graphs are often proprietary or practically impossible to collect, which severely limits sharing and using them.

Conventionally, graph algorithms are developed and tested with a two-step approach: first generate and store a graph, then execute an algorithm on the graph using a graph processing engine.

The first step generates a synthetic graph and stores it on disk. The synthetic graph is usually generated by either parameter-based generation methods or graph upscaling methods. The former extracts a small number of parameters that capture some properties of a given real graph and generates the synthetic graph from those parameters. The latter upscales a given real graph to a larger one while preserving the properties of the original real graph as much as possible.
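As an example of parameter-based generation, R-MAT builds each edge by recursively choosing one of four quadrants of the adjacency matrix with probabilities a, b, c and d = 1 - a - b - c. The defaults below are the values used by the Graph 500 Kronecker generator. This is a sketch of one well-known generator, offered for illustration only; the article does not say which parameter-based method, if any, T-GPS builds on.

```python
import random


def rmat_edges(scale: int, num_edges: int, a: float = 0.57, b: float = 0.19,
               c: float = 0.19, seed: int = 0) -> list[tuple[int, int]]:
    """Generate num_edges directed edges over 2 ** scale vertices with R-MAT.

    The bottom-right quadrant gets the remaining probability d = 1 - a - b - c.
    """
    rng = random.Random(seed)  # fixed seed makes the graph reproducible
    edges = []
    for _ in range(num_edges):
        src = dst = 0
        for _ in range(scale):  # one quadrant choice per bit of the vertex ids
            r = rng.random()
            src <<= 1
            dst <<= 1
            if r < a:
                pass            # top-left quadrant: both bits stay 0
            elif r < a + b:
                dst |= 1        # top-right quadrant
            elif r < a + b + c:
                src |= 1        # bottom-left quadrant
            else:
                src |= 1        # bottom-right quadrant
                dst |= 1
        edges.append((src, dst))
    return edges


if __name__ == "__main__":
    print(rmat_edges(scale=4, num_edges=5))
```

Only the four probabilities, the scale and the seed need to be stored; the skewed degree distribution that real graphs often show emerges from the unequal quadrant probabilities.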

The second step loads the stored graph into the main memory of a graph processing engine such as Apache Spark’s GraphX and executes the given graph algorithm on the engine. Because the graph is too large to fit in the main memory of a single computer, the engine typically runs on a cluster of tens or hundreds of computers. The conventional two-step approach is therefore very costly.

The research team’s T-GPS avoids this cost. Instead of generating and storing a large-scale synthetic graph, it loads only the initial small real graph into main memory. T-GPS then runs the graph algorithm on the small real graph as if the large-scale synthetic graph that would be generated from it already existed in main memory. When the algorithm finishes, T-GPS returns exactly the same result as the conventional two-step approach.

The key idea of T-GPS is to generate, on the fly, only the parts of the synthetic graph that the algorithm needs to access, and to modify the graph processing engine so that it treats these on-the-fly parts as if they belonged to a fully generated synthetic graph.
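The on-the-fly idea can be sketched with a deterministic virtual graph: each real vertex u becomes copies (u, 0) through (u, scale - 1), and the neighbors of a copy are recomputed from the small real graph whenever they are requested, using a hash so that repeated requests always return the same edges. This is a much simplified, hypothetical stand-in for T-GPS's top-down upscaling; the copy-selection rule, the hash and all names are assumptions.

```python
import hashlib
from collections import deque


def _pick(u: int, i: int, w: int, scale: int) -> int:
    """Deterministic pseudo-random copy index: same inputs, same answer."""
    digest = hashlib.blake2b(f"{u}:{i}:{w}".encode(), digest_size=8).digest()
    return int.from_bytes(digest, "little") % scale


class VirtualUpscaledGraph:
    """Hypothetical upscaling: vertex u becomes (u, 0..scale-1); edges are never stored."""

    def __init__(self, real_adj: dict[int, list[int]], scale: int):
        self.real_adj = real_adj  # only the small real graph is kept in memory
        self.scale = scale

    def neighbors(self, v: tuple[int, int]) -> list[tuple[int, int]]:
        u, i = v
        # Regenerate this vertex's edges from the real graph on every request.
        return [(w, _pick(u, i, w, self.scale)) for w in self.real_adj.get(u, [])]


def bfs_levels(neighbors, source):
    """Plain BFS; it only asks for neighbors of vertices it actually visits."""
    level = {source: 0}
    queue = deque([source])
    while queue:
        v = queue.popleft()
        for n in neighbors(v):
            if n not in level:
                level[n] = level[v] + 1
                queue.append(n)
    return level


if __name__ == "__main__":
    g = VirtualUpscaledGraph({0: [1, 2], 1: [2], 2: [0]}, scale=1000)
    print(len(bfs_levels(g.neighbors, (0, 0))))
```

Because the generator is deterministic, materializing the whole virtual graph up front (the two-step approach) and running the same BFS on it yields identical levels, while the on-the-fly version touches only the vertices the traversal reaches.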

The research team showed that T-GPS can process a graph of 1 trillion edges on a single computer, while the conventional two-step approach can process only a graph of 1 billion edges on a cluster of eleven computers with the same specification. Because T-GPS handles a graph 1,000 times larger with one-eleventh as many computers, it outperforms the conventional approach by roughly 10,000 times in terms of computing resources. The team also showed that T-GPS runs algorithms up to 43 times faster than the conventional approach, because T-GPS has no network communication overhead, whereas the conventional approach incurs heavy communication overhead among computers.

Professor Kim believes that this work will have a large impact on the IT industry where almost every area utilizes graph data, adding, “T-GPS can significantly increase both the scale and efficiency of developing a new graph algorithm.”

This work was supported by the National Research Foundation (NRF) of Korea and the Institute of Information & Communications Technology Planning & Evaluation (IITP).

Publication:

Park, H., et al. (2021) “Trillion-scale Graph Processing Simulation based on Top-Down Graph Upscaling,” presented at IEEE ICDE 2021 (April 19-22, 2021, Chania, Greece)

Profile:

Min-Soo Kim

Associate Professor

minsoo.k@kaist.ac.kr

http://infolab.kaist.ac.kr

School of Computing

KAIST

2021.05.06

Professor Sue-Hyun Lee Listed Among WEF 2020 Young Scientists

Professor Sue-Hyun Lee from the Department of Bio and Brain Engineering joined the World Economic Forum (WEF)’s Young Scientists Community on May 26. The class of 2020 comprises 25 leading researchers from 14 countries across the world who are at the forefront of scientific problem-solving and social change. Professor Lee was the only Korean on this year’s roster.

The WEF created the Young Scientists Community in 2008 to engage leaders from the public and private sectors with science and the role it plays in society. The WEF selects rising-star academics, 40 and under, from various fields every year, and helps them become stronger ambassadors for science, especially in tackling pressing global challenges including cybersecurity, climate change, poverty, and pandemics.

Professor Lee is researching how memories are encoded, recalled, and updated, and how emotional processes affect human memory, with the ultimate aim of guiding the development of therapies for mental disorders. She has made significant contributions to resolving ongoing debates over how memory traces are maintained and changed in the brain.

In recognition of her research excellence, leadership, and commitment to serving society, the KAIST President and the Dean of the KAIST College of Engineering nominated Professor Lee for the WEF’s Class of 2020 Young Scientists. The Selection Committee acknowledged her achievements and her potential to expand the boundaries of knowledge and the practical applications of science, and accepted her into the Community.

During her three-year membership in the Community, Professor Lee will participate in WEF-initiated activities and events related to promising therapeutic interventions for mental disorders and the future directions of artificial intelligence.

Seven of this year’s WEF Young Scientists, including Professor Lee, are from Asia, eight are based in Europe, six in the Americas, two in South Africa, and the remaining two in the Middle East. Fourteen of the 25 newly announced Young Scientists, more than half, are women.

(END)

2020.05.26

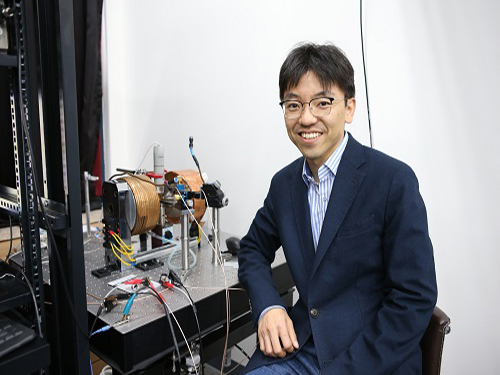

Professor Byong-Guk Park Named Scientist of October

< Professor Byong-Guk Park >

Professor Byong-Guk Park from the Department of Materials Science and Engineering was selected as the ‘Scientist of the Month’ for October 2019 by the Ministry of Science and ICT and the National Research Foundation of Korea. Professor Park was recognized for his contributions to the advancement of spin-orbit torque (SOT)-based magnetic random access memory (MRAM) technology. He received 10 million KRW in prize money.

MRAM, a next-generation non-volatile memory device, consists of thin magnetic films. It can be applied in “logic-in-memory” devices, in which logic and memory functionalities coexist, thereby drastically improving the performance of complementary metal-oxide-semiconductor (CMOS) processors. However, conventional MRAM technology has difficulty increasing a memory device’s operating speed while maintaining high density.

Professor Park tackled this challenge by introducing a new material, the antiferromagnet IrMn, which generates a sizable SOT as well as an exchange-bias field, making it possible to write data without an external magnetic field. This result paved the way for the development of MRAM with a simple device structure, high speed, and high density.

Professor Park said, “I feel rewarded to have advanced the feasibility and applicability of MRAM. I will continue to devote myself to developing new materials that can help enhance the performance of memory devices.”

(END)

2019.10.10

High-Speed Motion Core Technology for Magnetic Memory

(Professor Kab-Jin Kim of the Department of Physics)

A joint research team led by Professor Kab-Jin Kim of the Department of Physics at KAIST and Professor Kyung-Jin Lee of Korea University developed a technology that dramatically increases the speed of next-generation domain wall-based magnetic memory. The research was published online in Nature Materials on September 25.

Current memory technologies each have drawbacks. DRAM and SRAM are fast but volatile, losing stored data when the power is switched off. Flash memory is non-volatile but slow, while hard disk drives (HDDs) offer greater storage capacity but consume a lot of energy and are vulnerable to physical shock.

To overcome the limitations of existing memory, researchers are studying domain wall-based magnetic memory, whose core mechanism is moving magnetic domain walls with an electric current. Storing data in magnetic nanowires makes the memory non-volatile, and the absence of mechanical rotation, unlike the spinning platters of an HDD, reduces power consumption. This makes it a new form of high-density, low-power next-generation memory.
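How moving domain walls can serve as memory is sketched below as a toy, data-only model. It assumes a racetrack-style layout in which bits are stored as domains along a nanowire and current pulses shift them past a fixed read head; the article does not describe the device layout, and `ToyDomainWallTrack` is a hypothetical name. No magnetic physics is modelled.

```python
from collections import deque


class ToyDomainWallTrack:
    """Toy model (assumption): a nanowire storing bits as magnetic domains.

    A current pulse moves all domain walls, and therefore all bits, along the
    wire; a fixed read head senses whichever domain sits under it.
    """

    def __init__(self, bits, head=0):
        self.domains = deque(bits)
        self.head = head

    def pulse(self, steps=1):
        # The sign of `steps` stands in for the direction of the current.
        self.domains.rotate(steps)

    def read(self):
        return self.domains[self.head]


track = ToyDomainWallTrack([1, 0, 0, 1, 1])
readout = []
for _ in range(5):
    readout.append(track.read())
    track.pulse(-1)  # shift the next domain under the read head
print(readout)  # prints: [1, 0, 0, 1, 1]
```

The `deque` wraps bits around for simplicity; a real wire would instead need spare length so that shifted bits are not lost. Reading is limited by how fast the walls can move, which is why domain wall speed matters.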

However, previous studies showed that domain wall speed is limited to at most hundreds of m/s by the Walker breakdown phenomenon, in which the angular precession of a moving domain wall causes its velocity to break down. Commercializing domain wall memory therefore required a core technology that removes Walker breakdown and increases the speed.

Most domain wall memory studies have used ferromagnetic materials, which cannot overcome Walker breakdown. The team discovered that using ferrimagnetic GdFeCo under certain conditions overcomes Walker breakdown, and with this mechanism they increased the domain wall speed to over 2 km/s at room temperature.
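To put these speeds in perspective, a rough sketch computes how long a domain wall takes to move one bit position. It assumes a hypothetical bit spacing of 100 nm (not a figure from the study), takes 300 m/s as a representative “hundreds of m/s” speed, and treats motion as constant-speed, ignoring acceleration and start/stop effects.

```python
BIT_SPACING_M = 100e-9  # hypothetical distance between stored bits (100 nm)


def shift_time_ps(speed_m_per_s: float, distance_m: float = BIT_SPACING_M) -> float:
    """Time in picoseconds for a domain wall to travel distance_m at constant speed."""
    return distance_m / speed_m_per_s * 1e12


for label, speed in [("ferromagnet, ~300 m/s", 300.0), ("GdFeCo, 2 km/s", 2000.0)]:
    print(f"{label}: {shift_time_ps(speed):.0f} ps per bit")
```

Under these assumptions, a shift drops from roughly 333 ps to 50 ps per bit position.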

Domain wall memory is high-density, low-power, and non-volatile. With the high speed demonstrated in this research, it could become the leading next-generation memory.