Electrical Engineering

No More Touch Issues on Rainy Days! KAIST Develops Human-Like Tactile Sensor

Recent advancements in robotics have enabled machines to handle delicate objects like eggs with precision, thanks to highly integrated pressure sensors that provide detailed tactile feedback. However, even the most advanced robots struggle to accurately detect pressure in complex environments involving water, bending, or electromagnetic interference. A research team at KAIST has successfully developed a pressure sensor that operates stably without external interference, even on wet surfaces like a smartphone screen covered in water, achieving human-level tactile sensitivity.

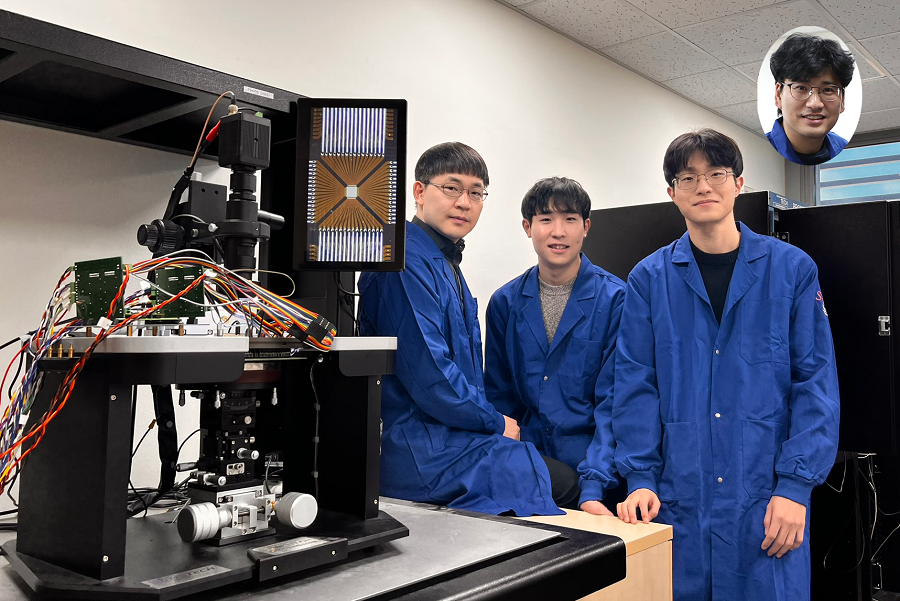

KAIST (represented by President Kwang Hyung Lee) announced on the 10th of March that a research team led by Professor Jun-Bo Yoon from the School of Electrical Engineering has developed a high-resolution pressure sensor that remains unaffected by external interference such as "ghost touches" caused by moisture on touchscreens.

Capacitive pressure sensors, widely used in touch systems due to their simple structure and durability, are essential components of human-machine interface (HMI) technologies in smartphones, wearable devices, and robots. However, they are prone to malfunctions caused by water droplets, electromagnetic interference, and bending.

To address these issues, the research team investigated the root causes of interference in capacitive pressure sensors. They identified that the "fringe field" generated at the sensor’s edges is particularly susceptible to external disturbances.

The researchers concluded that, to fundamentally resolve this issue, suppressing the fringe field was necessary. Through theoretical analysis, they determined that reducing the electrode spacing to the nanometer scale could effectively minimize the fringe field to below a few percent.

Utilizing proprietary micro/nanofabrication techniques, the team developed a nanogap pressure sensor with an electrode spacing of 900 nanometers (nm). This newly developed sensor reliably detected pressure regardless of the material exerting force and remained unaffected by bending or electromagnetic interference.
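As a rough illustration of why shrinking the gap suppresses the fringe field, the sketch below uses a crude scaling heuristic, not the team's theoretical analysis: the fringe field is treated as widening each electrode edge by about half the gap. The 100 µm electrode side length and the function names are assumptions made for illustration only; the 900 nm gap is the value reported above.

```python
EPS0 = 8.8541878128e-12  # vacuum permittivity in F/m


def parallel_plate_capacitance(side_m, gap_m, eps_r=1.0):
    """Ideal capacitance of a square parallel-plate capacitor (no fringe field)."""
    return EPS0 * eps_r * side_m ** 2 / gap_m


def fringe_fraction(side_m, gap_m):
    """Heuristic share of the capacitance carried by the fringe field.

    Assumption: the fringe field acts like an extra border of width gap/2
    on every edge, so the effective side length is (side + gap).
    """
    ideal_area = side_m ** 2
    effective_area = (side_m + gap_m) ** 2
    return (effective_area - ideal_area) / effective_area


SIDE_M = 100e-6  # hypothetical electrode side length (not from the article)

for gap_m in (50e-6, 5e-6, 900e-9):
    share = fringe_fraction(SIDE_M, gap_m)
    print(f"gap = {gap_m * 1e6:6.2f} um -> fringe share ~ {share * 100:.1f} %")
```

Under this heuristic the fringe share falls from roughly half of the total at a 50 µm gap to a few percent or less at 900 nm, which matches the direction of the trend described above; the exact numbers depend on the assumed geometry.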

Furthermore, the team successfully implemented an artificial tactile system utilizing the developed sensor’s characteristics. Human skin contains specialized pressure receptors called Merkel’s discs. Mimicking them artificially requires a sensor that responds exclusively to pressure, which conventional sensors had not achieved.

Professor Yoon’s research team overcame these challenges, developing a sensor achieving a density comparable to Merkel’s discs and enabling wireless, high-precision pressure sensing.

To explore potential applications, the researchers also developed a force touch pad system, demonstrating its ability to capture pressure magnitude and distribution with high resolution and without interference.

Professor Yoon stated, “Our nanogap pressure sensor operates reliably even in rainy conditions or sweaty environments, eliminating common touch malfunctions. We believe this innovation will significantly enhance everyday user experiences.”

He added, “This technology has the potential to revolutionize various fields, including precision tactile sensors for robotics, medical wearable devices, and next-generation augmented reality (AR) and virtual reality (VR) interfaces.”

The study was led by Jae-Soon Yang (Ph.D.), Myung-Kun Chung (Ph.D. candidate), and Jae-Young Yoo (Assistant Professor at Sungkyunkwan University, a KAIST Ph.D. graduate). The research findings were published in Nature Communications on February 27, 2025. (Paper title: “Interference-Free Nanogap Pressure Sensor Array with High Spatial Resolution for Wireless Human-Machine Interface Applications”, DOI: 10.1038/s41467-025-57232-8)

This study was supported by the National Research Foundation of Korea’s Mid-Career Researcher Program and Leading Research Center Support Program.

2025.03.14

KAIST Develops Wearable Carbon Dioxide Sensor to Enable Real-time Apnea Diagnosis

- Professor Seunghyup Yoo’s research team of the School of Electrical Engineering developed an ultralow-power carbon dioxide (CO2) sensor using a flexible and thin organic photodiode, and succeeded in real-time breathing monitoring by attaching it to a commercial mask

- Wearable devices with features such as low power, high stability, and flexibility can be utilized for early diagnosis of various diseases such as chronic obstructive pulmonary disease and sleep apnea

< Photo 1. From the left, School of Electrical Engineering, Ph.D. candidate DongHo Choi, Professor Seunghyup Yoo, and Department of Materials Science and Engineering, Bachelor’s candidate MinJae Kim >

Carbon dioxide (CO2) is a major respiratory metabolite, and continuous monitoring of CO2 concentration in exhaled breath is not only an important indicator for early detection and diagnosis of respiratory and circulatory system diseases, but can also be widely used for monitoring personal exercise status. KAIST researchers succeeded in accurately measuring CO2 concentration with a sensor attached to the inside of a mask.

KAIST (President Kwang Hyung Lee) announced on February 10th that Professor Seunghyup Yoo's research team in the School of Electrical Engineering developed a low-power, high-speed wearable CO2 sensor capable of stable breathing monitoring in real time.

Existing non-invasive CO2 sensors had limitations in that they were large in size and consumed high power. In particular, optochemical CO2 sensors using fluorescent molecules have the advantage of being miniaturized and lightweight, but due to the photodegradation phenomenon of dye molecules, they are difficult to use stably for a long time, which limits their use as wearable healthcare sensors.

Optochemical CO2 sensors utilize the fact that the intensity of fluorescence emitted from fluorescent molecules decreases depending on the concentration of CO2, and it is important to effectively detect changes in fluorescence light.

To this end, the research team developed a low-power CO2 sensor consisting of an LED and an organic photodiode surrounding it. Based on high light collection efficiency, the sensor, which minimizes the amount of excitation light irradiated on fluorescent molecules, achieved a device power consumption of 171 μW, which is tens of times lower than existing sensors that consume several mW.
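The "tens of times lower" comparison can be checked with quick arithmetic. The sketch below assumes hypothetical values of 2 mW and 5 mW for "several mW", since the article gives no exact figures for existing sensors; only the 171 μW value comes from the text.

```python
SENSOR_POWER_W = 171e-6  # reported device power consumption (171 uW)


def power_ratio(existing_power_w, sensor_power_w=SENSOR_POWER_W):
    """How many times more power an existing sensor draws than the new one."""
    return existing_power_w / sensor_power_w


# Hypothetical stand-ins for "several mW" (not taken from the article).
for existing_mw in (2.0, 5.0):
    ratio = power_ratio(existing_mw * 1e-3)
    print(f"{existing_mw} mW is about {ratio:.0f}x the reported 171 uW")
```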

< Figure 1. Structure and operating principle of the developed optochemical carbon dioxide (CO2) sensor. Light emitted from the LED is converted into fluorescence through the fluorescent film, reflected from the light scattering layer, and incident on the organic photodiode. CO2 reacts with a small amount of water inside the fluorescent film to form carbonic acid (H2CO3), which increases the concentration of hydrogen ions (H+), and the fluorescence intensity due to 470 nm excitation light decreases. The circular organic photodiode with high light collection efficiency effectively detects changes in fluorescence intensity, lowers the power required to light up the LED, and reduces light-induced deterioration. >

The research team also elucidated the photodegradation path of the fluorescent molecules used in CO2 sensors, revealed why the error of optochemical sensors grows over time, and suggested an optical design method to suppress these errors.

Based on this, the research team developed a sensor that effectively reduces errors caused by photodegradation, a chronic problem of existing optochemical sensors. The sensor can be used continuously for up to 9 hours, whereas existing technologies based on the same material last less than 20 minutes, and it can be reused many times by replacing the CO2-detecting fluorescent film.

< Figure 2. Wearable smart mask and real-time breathing monitoring. The fabricated sensor module consists of four elements (①: gas-permeable light-scattering layer, ②: color filter and organic photodiode, ③: light-emitting diode, ④: CO2-detecting fluorescent film). The thin and light sensor (D1: 400 nm, D2: 470 nm) is attached to the inside of the mask to monitor the wearer's breathing in real time. >

Because the developed sensor is light (0.12 g), thin (0.7 mm), and flexible, it could be attached to the inside of a mask, where it accurately measured CO2 concentration. In addition, it was fast and precise enough to distinguish inhalation from exhalation in real time, allowing the respiratory rate to be monitored.
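One simple way to turn such a CO2 waveform into a respiratory rate is to count how often the signal rises through a threshold, since each exhalation raises the CO2 level inside the mask. The sketch below is an illustrative assumption, not the team's processing pipeline: the synthetic sine-wave signal, the 3 % threshold, the 10 Hz sampling rate, and the function names are all made up for the example.

```python
import math


def count_breaths(samples, threshold):
    """Count rising crossings of the threshold (the start of each exhalation)."""
    breaths = 0
    above = samples[0] > threshold
    for value in samples[1:]:
        now_above = value > threshold
        if now_above and not above:
            breaths += 1
        above = now_above
    return breaths


def respiratory_rate_bpm(samples, sample_rate_hz, threshold):
    """Breaths per minute over the whole recording."""
    duration_min = len(samples) / sample_rate_hz / 60.0
    return count_breaths(samples, threshold) / duration_min


# Synthetic CO2 trace in percent: one breath every 4 s, 60 s sampled at 10 Hz.
SAMPLE_RATE_HZ = 10
co2_percent = [
    2.5 + 2.0 * math.sin(2 * math.pi * 0.25 * (i / SAMPLE_RATE_HZ))
    for i in range(600)
]

rate = respiratory_rate_bpm(co2_percent, SAMPLE_RATE_HZ, threshold=3.0)
print(f"Estimated respiratory rate: {rate:.1f} breaths/min")
```

A real signal would need filtering and hysteresis around the threshold so that noise near the crossing is not counted as extra breaths.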

< Photo 2. The developed sensor attached to the inside of the mask >

Professor Seunghyup Yoo said, "The developed sensor has excellent characteristics such as low power, high stability, and flexibility, so it can be widely applied to wearable devices, and can be used for the early diagnosis of various diseases such as hypercapnia, chronic obstructive pulmonary disease, and sleep apnea." He added, "In particular, it is expected to be used to improve side effects caused by rebreathing in environments where dust is generated or where masks are worn for long periods of time, such as during seasonal changes."

This study, with Minjae Kim, an undergraduate student in KAIST's Department of Materials Science and Engineering, and Dongho Choi, a doctoral student in the School of Electrical Engineering, as joint first authors, was published in the online edition of Device, a sister journal of Cell, on the 22nd of last month. (Paper title: Ultralow-power carbon dioxide sensor for real-time breath monitoring) DOI: https://doi.org/10.1016/j.device.2024.100681

< Photo 3. From the left, Professor Seunghyup Yoo of the School of Electrical Engineering, MinJae Kim, an undergraduate student in the Department of Materials Science and Engineering, and Dongho Choi, a doctoral student in the School of Electrical Engineering >

This study was supported by the Ministry of Trade, Industry and Energy's Materials and Components Technology Development Project, the National Research Foundation of Korea's Original Technology Development Project, and the KAIST Undergraduate Research Participation (URP) program.

2025.02.13

KAIST Develops Neuromorphic Semiconductor Chip that Learns and Corrects Itself

< Photo. The research team of the School of Electrical Engineering posing with the newly developed processor. (From center to the right) Professor Young-Gyu Yoon, Integrated Master's and Doctoral Program students Seungjae Han and Hakcheon Jeong, and Professor Shinhyun Choi >

- Professor Shinhyun Choi and Professor Young-Gyu Yoon’s Joint Research Team from the School of Electrical Engineering developed a computing chip that can learn, correct errors, and process AI tasks

- Equipping a computing chip with high-reliability memristor devices with self-error correction functions for real-time learning and image processing

Existing computer systems have separate data processing and storage devices, making them inefficient for processing complex data such as AI workloads. A KAIST research team has developed a memristor-based integrated system that works in a way similar to how our brain processes information. It is now ready for application in various devices, including smart security cameras, which could recognize suspicious activity immediately without relying on remote cloud servers, and medical devices, which could analyze health data in real time.

KAIST (President Kwang Hyung Lee) announced on the 17th of January that the joint research team of Professor Shinhyun Choi and Professor Young-Gyu Yoon of the School of Electrical Engineering has developed a next-generation neuromorphic semiconductor-based ultra-small computing chip that can learn and correct errors on its own.

< Figure 1. Scanning electron microscope (SEM) image of a computing chip equipped with a highly reliable selector-less 32×32 memristor crossbar array (left). Hardware system developed for real-time artificial intelligence implementation (right). >

What is special about this computing chip is that it can learn and correct errors that occur due to non-ideal characteristics that were difficult to solve in existing neuromorphic devices. For example, when processing a video stream, the chip learns to automatically separate a moving object from the background, and it becomes better at this task over time.
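Separating a moving object from the background can be illustrated with a classical running-average background model. This is a deliberately simple stand-in, not the self-supervised method that runs on the memristor chip; the function names and the `alpha` and `threshold` values are assumptions chosen for the example.

```python
def foreground_mask(background, frame, threshold=30.0):
    """Mark pixels that differ from the background model by more than threshold."""
    return [
        [abs(f - b) > threshold for b, f in zip(bg_row, fr_row)]
        for bg_row, fr_row in zip(background, frame)
    ]


def update_background(background, frame, alpha=0.1):
    """Blend the new frame into the background model (exponential moving average)."""
    return [
        [(1.0 - alpha) * b + alpha * f for b, f in zip(bg_row, fr_row)]
        for bg_row, fr_row in zip(background, frame)
    ]


# Toy 3x3 grayscale scene: uniform background with a bright object at the center.
background = [[100.0] * 3 for _ in range(3)]
frame = [[100.0] * 3 for _ in range(3)]
frame[1][1] = 200.0

mask = foreground_mask(background, frame)
background = update_background(background, frame)

for row in mask:
    print(["X" if is_foreground else "." for is_foreground in row])
```

The background model here improves over time only by averaging more frames; the chip described above instead learns the separation on the device and compensates for device non-idealities while doing so.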

This self-learning ability has been proven by achieving accuracy comparable to ideal computer simulations in real-time image processing. The research team's main achievement is that it has completed a system that is both reliable and practical, beyond the development of brain-like components.

The research team has developed the world's first memristor-based integrated system that can adapt to immediate environmental changes, and has presented an innovative solution that overcomes the limitations of existing technology.

< Figure 2. Background and foreground separation results of an image containing non-ideal characteristics of memristor devices (left). Real-time image separation results through on-device learning using the memristor computing chip developed by our research team (right). >

At the heart of this innovation is a next-generation semiconductor device called a memristor*. The variable resistance characteristics of this device can replace the role of synapses in neural networks, and by utilizing it, data storage and computation can be performed simultaneously, just like our brain cells.

*Memristor: A portmanteau of "memory" and "resistor"; a next-generation electrical device whose resistance value is determined by the amount and direction of charge that has flowed between its two terminals in the past.
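How a memristor array can store and compute at the same time can be sketched with the standard idealized crossbar model: each device's conductance acts as a stored weight, and applying voltages to the rows produces column currents equal to weighted sums (Ohm's law per device, Kirchhoff's current law per column). This is a textbook simplification, not the team's circuit; the function name and the 2×2 values are illustrative assumptions, and the non-ideal effects that the chip corrects for are ignored.

```python
def crossbar_output_currents(row_voltages, conductances):
    """Ideal crossbar read-out: I_j = sum_i V_i * G_ij.

    row_voltages: voltages applied to the rows, in volts.
    conductances: matrix G[i][j] of device conductances, in siemens.
    Returns the current collected on each column, in amperes.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    return [
        sum(row_voltages[i] * conductances[i][j] for i in range(n_rows))
        for j in range(n_cols)
    ]


# Hypothetical 2x2 array; a 32x32 array works the same way with more terms.
voltages = [0.1, 0.2]        # volts
weights = [[1e-6, 2e-6],     # siemens
           [3e-6, 4e-6]]

currents = crossbar_output_currents(voltages, weights)
print([f"{current * 1e6:.2f} uA" for current in currents])
```

Because the weights never leave the array, the multiply-accumulate step happens where the data is stored, which is the sense in which storage and computation are performed together.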

The research team designed a highly reliable memristor that can precisely control resistance changes and developed an efficient system that uses self-learning to eliminate the need for complex compensation processes. This study is significant in that it experimentally verified the commercialization potential of a next-generation neuromorphic semiconductor-based integrated system that supports real-time learning and inference.

This technology will revolutionize the way artificial intelligence is used in everyday devices, allowing AI tasks to be processed locally without relying on remote cloud servers, making them faster, more privacy-protected, and more energy-efficient.

“This system is like a smart workspace where everything is within arm’s reach instead of having to go back and forth between desks and file cabinets,” explained KAIST researchers Hakcheon Jeong and Seungjae Han, who led the development of this technology. “This is similar to the way our brain processes information, where everything is processed efficiently at once at one spot.”

The research, with Hakcheon Jeong and Seungjae Han, students in the Integrated Master's and Doctoral Program at the KAIST School of Electrical Engineering, as co-first authors, was published online in the international academic journal Nature Electronics on January 8, 2025.

*Paper title: Self-supervised video processing with self-calibration on an analogue computing platform based on a selector-less memristor array ( https://doi.org/10.1038/s41928-024-01318-6 )

This research was supported by the Next-Generation Intelligent Semiconductor Technology Development Project, Excellent New Researcher Project, and PIM AI Semiconductor Core Technology Development Project of the National Research Foundation of Korea, and the Electronics and Telecommunications Research Institute Research and Development Support Project of the Institute of Information & Communications Technology Planning & Evaluation.

2025.01.17

KAIST Research Team Develops Stretchable Microelectrode Array for Organoid Signal Monitoring

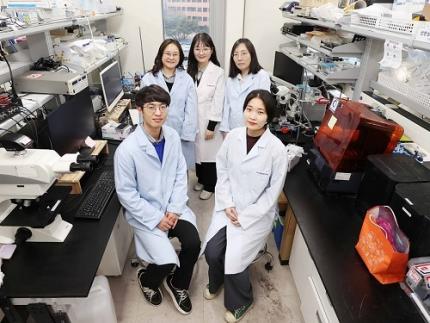

< Photo 1. (From top left) Professor Hyunjoo J. Lee, Dr. Mi-Young Son, Dr. Mi-Ok Lee; (front row, from left) doctoral student Kiup Kim, doctoral student Youngsun Lee >

On January 14th, the KAIST research team led by Professor Hyunjoo J. Lee from the School of Electrical Engineering in collaboration with Dr. Mi-Young Son and Dr. Mi-Ok Lee at Korea Research Institute of Bioscience and Biotechnology (KRIBB) announced the development of a highly stretchable microelectrode array (sMEA) designed for non-invasive electrophysiological signal measurement of organoids.

Organoids* are highly promising models for human biology and are expected to replace many animal experiments. Because they closely mimic the structure and function of human tissues, their potential applications include disease modeling, drug screening, and personalized medicine.

*Organoids: three-dimensional in vitro tissue models derived from human stem cells

Despite these advantages, existing organoid research has primarily focused on genetic analysis, with limited studies on organoid functionality. For effective drug evaluation and precise biological research, technology that preserves the three-dimensional structure of organoids while enabling real-time monitoring of their functions is needed. However, it’s challenging to provide non-invasive ways to evaluate the functionalities without incurring damage to the tissues. This challenge is particularly significant for electrophysiological signal measurement in cardiac and brain organoids since the sensor needs to be in direct contact with organoids of varying size and irregular shape. Achieving tight contact between electrodes and the external surface of the organoids without damaging the organoids has been a persistent challenge.

< Figure 1. Schematic image of highly stretchable MEA (sMEA) with protruding microelectrodes. >

The KAIST research team developed a highly stretchable microelectrode array with a unique serpentine structure that contacts the surface of organoids in a highly conformal fashion. They successfully demonstrated real-time measurement and analysis of electrophysiological signals from two types of electrogenic organoids (heart and brain). By employing a micro-electromechanical system (MEMS)-based process, the team fabricated the serpentine-structured microelectrode array and used an electrochemical deposition process to develop PEDOT:PSS-based protruding microelectrodes. These innovations demonstrated exceptional stretchability and close surface adherence to various organoid sizes. The protruding microelectrodes improved contact between organoids and the electrodes, ensuring stable and reliable electrophysiological signal measurements with high signal-to-noise ratios (SNR).

< Figure 2. Conceptual illustration, optical image, and fluorescence images of an organoid captured by the sMEA with protruding microelectrodes.>

Using this technology, the team successfully monitored and analyzed electrophysiological signals from cardiac spheroids of various sizes, revealing three-dimensional signal propagation patterns and identifying changes in signal characteristics according to size. They also measured electrophysiological signals in midbrain organoids, demonstrating the versatility of the technology. Additionally, they monitored signal modulations induced by various drugs, showcasing the potential of this technology for drug screening applications.

< Figure 3. SNR improvement effect by protruding PEDOT:PSS microelectrodes. >

Prof. Hyunjoo Jenny Lee stated, “By integrating MEMS technology and electrochemical deposition techniques, we successfully developed a stretchable microelectrode array adaptable to organoids of diverse sizes and shapes. The high practicality is a major advantage of this system since the fabrication is based on semiconductor fabrication with high volume production, reliability, and accuracy. This technology, which enables in situ, real-time analysis of the states and functionalities of organoids, will be a game changer in high-throughput drug screening.”

This study, led by Ph.D. candidate Kiup Kim from KAIST and Ph.D. candidate Youngsun Lee from KRIBB with significant contributions from Dr. Kwang Bo Jung, was published online on December 15, 2024 in Advanced Materials (IF: 27.4).

< Figure 4. Drug screening using cardiac spheroids and midbrain organoids.>

This research was supported by a grant from 3D-TissueChip Based Drug Discovery Platform Technology Development Program (No. 20009209) funded by the Ministry of Trade, Industry & Energy (MOTIE, Korea), by the Commercialization Promotion Agency for R&D Outcomes (COMPA) funded by the Ministry of Science and ICT (MSIT) (RS-2024-00415902), by the K-Brain Project of the National Research Foundation (NRF) funded by the Korean government (MSIT) (RS-2023-00262568), by BK21 FOUR (Connected AI Education & Research Program for Industry and Society Innovation, KAIST EE, No. 4120200113769), and by Korea Research Institute of Bioscience and Biotechnology (KRIBB) Research Initiative Program (KGM4722432).

2025.01.14 View 1878 -

KAIST Secures Core Technology for Ultra-High-Resolution Image Sensors

A joint research team from Korea and the United States has developed next-generation, high-resolution image sensor technology with higher power efficiency and a smaller size compared to existing sensors. Notably, they have secured foundational technology for ultra-high-resolution shortwave infrared (SWIR) image sensors, an area currently dominated by Sony, paving the way for future market entry.

KAIST (represented by President Kwang Hyung Lee) announced on the 20th of November that a research team led by Professor SangHyeon Kim from the School of Electrical Engineering, in collaboration with Inha University and Yale University in the U.S., has developed an ultra-thin broadband photodiode (PD), marking a significant breakthrough in high-performance image sensor technology.

This research drastically improves the trade-off between the absorption layer thickness and quantum efficiency found in conventional photodiode technology. Specifically, it achieved high quantum efficiency of over 70% even in an absorption layer thinner than one micrometer (μm), reducing the thickness of the absorption layer by approximately 70% compared to existing technologies.

A thinner absorption layer simplifies pixel processing, allowing for higher resolution and smoother carrier diffusion, which is advantageous for light carrier acquisition while also reducing the cost. However, a fundamental issue with thinner absorption layers is the reduced absorption of long-wavelength light.
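The trade-off described above can be illustrated with the textbook Beer-Lambert relation for a single pass through an absorbing layer, A = 1 - exp(-αd). The following is a minimal sketch, not the team's model: the function name and the two absorption coefficients are hypothetical values chosen only to show the trend, and the calculation ignores reflection, interference, and any resonant enhancement.

```python
import math


def single_pass_absorption(alpha_per_um: float, thickness_um: float) -> float:
    """Fraction of light absorbed in one pass through a layer (Beer-Lambert law)."""
    return 1.0 - math.exp(-alpha_per_um * thickness_um)


# Assumed, illustrative absorption coefficients in 1/micrometer.
# These are NOT values from the article or the paper.
ASSUMED_ALPHA_PER_UM = {
    "strongly absorbed (shorter) wavelength": 5.0,
    "weakly absorbed (longer) wavelength": 0.7,
}

if __name__ == "__main__":
    for label, alpha in ASSUMED_ALPHA_PER_UM.items():
        for thickness_um in (2.1, 1.0):  # layer thicknesses in micrometers
            absorbed = single_pass_absorption(alpha, thickness_um)
            print(f"{label}, {thickness_um} um layer: {absorbed:.2f} absorbed")
```

Under these assumed coefficients, thinning the layer barely changes absorption at the strongly absorbed wavelength but noticeably lowers it at the weakly absorbed one, which is the loss a thinner absorption layer has to recover by other means.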

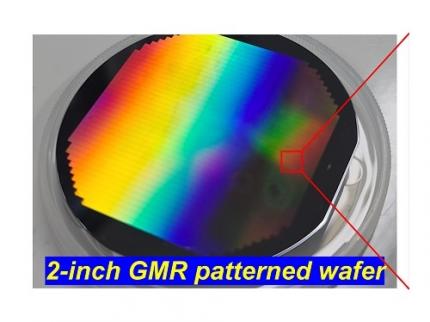

< Figure 1. Schematic diagram of the InGaAs photodiode image sensor integrated on the Guided-Mode Resonance (GMR) structure proposed in this study (left), a photograph of the fabricated wafer, and a scanning electron microscope (SEM) image of the periodic patterns (right) >

The research team introduced a guided-mode resonance (GMR) structure* that enables high-efficiency light absorption across a wide spectral range from 400 nanometers (nm) to 1,700 nm. This wavelength range includes not only visible light but also light in the SWIR region, making it valuable for various industrial applications.

*Guided-Mode Resonance (GMR) Structure: A concept used in electromagnetics, a phenomenon in which a specific (light) wave resonates (forming a strong electric/magnetic field) at a specific wavelength. Since energy is maximized under these conditions, it has been used to increase antenna or radar efficiency.

The improved performance in the SWIR region is expected to play a significant role in developing next-generation image sensors with increasingly high resolutions. The GMR structure, in particular, holds potential for further enhancing resolution and other performance metrics through hybrid integration and monolithic 3D integration with complementary metal-oxide-semiconductor (CMOS)-based readout integrated circuits (ROIC).

< Figure 2. Benchmark for state-of-the-art InGaAs-based SWIR pixels with simulated EQE lines as a function of TAL variation. Performance is maintained while the absorption layer thickness is reduced by 50% to 70%, from 2.1 micrometers or more to 1 micrometer or less >

The research team has significantly enhanced international competitiveness in low-power devices and ultra-high-resolution imaging technology, opening up possibilities for applications in digital cameras, security systems, medical and industrial image sensors, as well as future ultra-high-resolution sensors for autonomous driving, aerospace, and satellite observation.

Professor SangHyeon Kim, the lead researcher, commented, “This research demonstrates that significantly higher performance than existing technologies can be achieved even with ultra-thin absorption layers.”

< Figure 3. Top optical microscope image and cross-sectional scanning electron microscope image of the InGaAs photodiode image sensor fabricated on the GMR structure (left). Improved quantum efficiency performance of the ultra-thin image sensor (red) fabricated with the technology proposed in this study (right) >

The results of this research were published on the 15th of November in the prestigious international journal Light: Science & Applications (JCR 2.9%, IF=20.6), with Professor Dae-Myung Geum of Inha University (formerly a KAIST postdoctoral researcher) and Dr. Jinha Lim (currently a postdoctoral researcher at Yale University) as co-first authors. (Paper title: “Highly-efficient (>70%) and Wide-spectral (400 nm -1700 nm) sub-micron-thick InGaAs photodiodes for future high-resolution image sensors”)

This study was supported by the National Research Foundation of Korea.

2024.11.22 View 2841 -

KAIST Researchers Introduce New and Improved, Next-Generation Perovskite Solar Cell

- KAIST-Yonsei university researchers developed innovative dipole technology to maximize near-infrared photon harvesting efficiency

- Overcoming the shortcoming of existing perovskite solar cells that cannot utilize approximately 52% of total solar energy

- Development of next-generation solar cell technology with high efficiency and high stability that can absorb near-infrared light beyond the existing visible light range with a perovskite-dipole-organic semiconductor hybrid structure

< Photo. (From left) Professor Jung-Yong Lee, Ph.D. candidate Min-Ho Lee, and Master’s candidate Min Seok Kim of the School of Electrical Engineering >

Existing perovskite solar cells cannot utilize approximately 52% of total solar energy. A Korean research team has developed an innovative technology that addresses this shortcoming by maximizing near-infrared light capture while greatly improving power conversion efficiency. This greatly increases the possibility of commercializing next-generation solar cells and is expected to contribute to important technological advancements in the global solar cell market.

The research team of Professor Jung-Yong Lee of the School of Electrical Engineering at KAIST (President Kwang-Hyung Lee) and Professor Woojae Kim of the Department of Chemistry at Yonsei University announced on October 31st that they have developed a high-efficiency and high-stability organic-inorganic hybrid solar cell production technology that maximizes near-infrared light capture beyond the existing visible light range.

The research team suggested and advanced a hybrid next-generation device structure with organic photo-semiconductors that complements perovskite materials limited to visible light absorption and expands the absorption range to near-infrared.

In addition, they identified the electronic structure problem that mainly arises in this hybrid structure and presented a high-performance solar cell device that resolves it by introducing a dipole layer*.

*Dipole layer: A thin material layer that controls the energy level within the device to facilitate charge transport and forms an interface potential difference to improve device performance.

Existing lead-based perovskite solar cells have a problem in that their absorption spectrum is limited to the visible light region with a wavelength of 850 nanometers (nm) or less, which prevents them from utilizing approximately 52% of the total solar energy.

To solve this problem, the research team designed a hybrid device that combined an organic bulk heterojunction (BHJ) with perovskite and implemented a solar cell that can absorb up to the near-infrared region.

In particular, by introducing a sub-nanometer dipole interface layer, they succeeded in alleviating the energy barrier between the perovskite and the organic bulk heterojunction (BHJ), suppressing charge accumulation, maximizing the contribution to the near-infrared, and improving the current density (JSC) to 4.9 mA/cm².

The key achievement of this study is that the power conversion efficiency (PCE) of the hybrid device has been significantly increased from 20.4% to 24.0%. In particular, this study achieved a high internal quantum efficiency (IQE) compared to previous studies, reaching 78% in the near-infrared region.

< Figure. The illustration of the mechanism of improving the electronic structure and charge transfer capability through Perovskite/organic hybrid device structure and dipole interfacial layers (DILs). The proposed dipole interfacial layer forms a strong interfacial dipole, effectively reducing the energy barrier between the perovskite and organic bulk heterojunction (BHJ), and suppressing hole accumulation. This technology improves near-infrared photon harvesting and charge transfer, and as a result, the power conversion efficiency of the solar cell increases to 24.0%. In addition, it achieves excellent stability by maintaining performance for 1,200 hours even in an extremely humid environment. >

In addition, the device showed high stability, maintaining more than 80% of its initial efficiency under maximum output tracking for more than 800 hours even under extreme humidity conditions.

Professor Jung-Yong Lee said, “Through this study, we have effectively solved the charge accumulation and energy band mismatch problems faced by existing perovskite/organic hybrid solar cells, and we will be able to significantly improve the power conversion efficiency while maximizing the near-infrared light capture performance, which will be a new breakthrough that can solve the mechanical-chemical stability problems of existing perovskites and overcome the optical limitations.”

This study, in which KAIST School of Electrical Engineering Ph.D. candidate Min-Ho Lee and Master's candidate Min Seok Kim participated as co-first authors, was published in the September 30th online edition of the international academic journal Advanced Materials. (Paper title: Suppressing Hole Accumulation Through Sub-Nanometer Dipole Interfaces in Hybrid Perovskite/Organic Solar Cells for Boosting Near-Infrared Photon Harvesting).

This study was conducted with the support of the National Research Foundation of Korea.

2024.10.31 View 4302 -

KAIST Proposes AI Training Method that will Drastically Shorten Time for Complex Quantum Mechanical Calculations

- Professor Yong-Hoon Kim's team from the School of Electrical Engineering succeeded for the first time in accelerating quantum mechanical electronic structure calculations using a convolutional neural network (CNN) model

- Presenting an AI learning principle of quantum mechanical 3D chemical bonding information, the work is expected to accelerate the computer-assisted designing of next-generation materials and devices

The close relationship between AI and high-performance scientific computing can be seen in the fact that both the 2024 Nobel Prizes in Physics and Chemistry were awarded to scientists for their AI-related research contributions in their respective fields of study. KAIST researchers succeeded in dramatically reducing the computation time for highly sophisticated quantum mechanical computer simulations by predicting atomic-level chemical bonding information distributed in 3D space using a novel AI approach.

KAIST (President Kwang-Hyung Lee) announced on the 30th of October that Professor Yong-Hoon Kim's team from the School of Electrical Engineering developed a 3D computer vision artificial neural network-based computation methodology that bypasses the complex algorithms required for atomic-level quantum mechanical calculations traditionally performed using supercomputers to derive the properties of materials.

< Figure 1. Various methodologies are utilized in the simulation of materials, such as quantum mechanical calculations at the nanometer (nm) level, classical mechanical force fields at the scale of tens to hundreds of nanometers, continuum dynamics calculations at the macroscopic scale, and calculations that mix simulations at different scales. These simulations are already playing a key role in a wide range of basic research and application development fields in combination with informatics techniques. Recently, there have been active efforts to introduce machine learning techniques to radically accelerate simulations, but research on introducing machine learning techniques to quantum mechanical electronic structure calculations, which form the basis of larger-scale simulations, is still insufficient. >

Quantum mechanical density functional theory (DFT)* calculations run on supercomputers have become an essential, standard tool in a wide range of research and development fields, including advanced materials and drug design, as they allow fast and accurate prediction of material properties.

*Density functional theory (DFT): A representative ab initio (first-principles) theory for calculating quantum mechanical properties at the atomic level.

However, practical DFT calculations require generating the 3D electron density and solving quantum mechanical equations through a complex, iterative self-consistent field (SCF)* process that must be repeated tens to hundreds of times. This restricts their application to systems with only a few hundred to a few thousand atoms.

*Self-consistent field (SCF): A scientific computing method widely used to solve complex many-body problems that must be described by a number of interconnected simultaneous differential equations.
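The iterative character of an SCF loop can be illustrated with a deliberately tiny model. The sketch below is not DFT: it assumes a single level whose energy depends on its own occupation (all parameter names and values are hypothetical), and it repeats the "build potential, solve, compare" cycle with linear mixing until the input and output occupations agree.

```python
import math


def toy_scf(eps0=-0.5, u=2.0, mu=0.0, kt=0.1, mix=0.3, tol=1e-8, max_iter=500):
    """Toy self-consistent loop for one level with occupation-dependent energy.

    Illustrative stand-in for an SCF cycle, not a DFT solver.
    Returns (converged occupation, number of iterations).
    """
    n = 0.5  # initial guess for the "density" (occupation)
    for iteration in range(1, max_iter + 1):
        # Step 1: build the effective "potential" from the current density.
        eps = eps0 + u * n
        # Step 2: "solve" the model to get the density this potential implies.
        n_out = 1.0 / (1.0 + math.exp((eps - mu) / kt))
        # Step 3: stop once input and output densities agree.
        if abs(n_out - n) < tol:
            return n_out, iteration
        # Step 4: otherwise mix old and new densities and repeat.
        n = (1.0 - mix) * n + mix * n_out
    raise RuntimeError("toy SCF loop did not converge")


if __name__ == "__main__":
    occupation, iterations = toy_scf()
    print(f"converged occupation {occupation:.6f} after {iterations} iterations")
```

Even this one-variable model needs a few dozen cycles with the chosen mixing factor, and with `mix=1.0` it tends to oscillate instead of converging. In a real calculation each cycle involves a full 3D density and an eigenvalue problem, which is where the cost comes from.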

Professor Yong-Hoon Kim’s research team questioned whether recent advancements in AI techniques could be used to bypass the SCF process. As a result, they developed the DeepSCF model, which accelerates calculations by learning chemical bonding information distributed in a 3D space using neural network algorithms from the field of computer vision.

< Figure 2. The DeepSCF methodology developed in this study rapidly accelerates DFT calculations by using artificial neural network techniques (green box) to avoid the self-consistent field process (orange box) that had to be performed repeatedly in traditional quantum mechanical electronic structure calculations. The self-consistent field process consists of predicting the 3D electron density, constructing the corresponding potential, and then solving the quantum mechanical Kohn-Sham equations, repeated tens to hundreds of times. The core idea of the DeepSCF methodology is that the residual electron density (δρ), which is the difference between the electron density (ρ) and the sum of the electron densities of the constituent atoms (ρ0), corresponds to chemical bonding information, so the self-consistent field process can be replaced with a 3D convolutional neural network model. >

The research team focused on the fact that, according to density functional theory, electron density contains all quantum mechanical information of electrons, and that the residual electron density — the difference between the total electron density and the sum of the electron densities of the constituent atoms — contains chemical bonding information. They used this as the target for machine learning.
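Written out with the symbols used in the Figure 2 caption, the learning target can be summarized as follows. The per-atom notation $\rho_A^{\mathrm{atom}}$ is an assumption introduced here for illustration, and the last relation holds when $\rho$ and $\rho_0$ contain the same number of electrons (for example, neutral atomic densities superposed for a neutral system).

```latex
\rho_0(\mathbf{r}) = \sum_{A} \rho_A^{\mathrm{atom}}(\mathbf{r}), \qquad
\delta\rho(\mathbf{r}) = \rho(\mathbf{r}) - \rho_0(\mathbf{r}), \qquad
\int \delta\rho(\mathbf{r}) \, d\mathbf{r} = 0
```

Under this reading, a network that predicts $\delta\rho$ yields the full density as $\rho \approx \rho_0 + \delta\rho_{\mathrm{predicted}}$, since $\rho_0$ is known from the atomic positions alone.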

They then adopted a dataset of organic molecules with various chemical bonding characteristics, and applied random rotations and deformations to the atomic structures of these molecules to further enhance the model’s accuracy and generalization capabilities. Ultimately, the research team demonstrated the validity and efficiency of the DeepSCF methodology on large, complex systems.
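The rotation-and-deformation augmentation described above might look roughly like the following. This is a minimal sketch, not the team's pipeline: the function names are hypothetical, the "deformation" is assumed to be small Gaussian displacements of each atom, and in a real workflow the target density would have to be recomputed for every augmented structure.

```python
import math
import random


def random_rotation_matrix(rng):
    """Return a random 3x3 rotation matrix built from a random unit quaternion."""
    while True:
        q = [rng.gauss(0.0, 1.0) for _ in range(4)]
        norm = math.sqrt(sum(c * c for c in q))
        if norm > 1e-12:
            break
    w, x, y, z = (c / norm for c in q)
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]


def rotate(coords, rotation):
    """Apply a 3x3 rotation matrix to a list of (x, y, z) positions."""
    return [
        tuple(sum(rotation[i][j] * p[j] for j in range(3)) for i in range(3))
        for p in coords
    ]


def augment(coords, rng, jitter=0.02):
    """Randomly rotate a structure, then displace each atom slightly.

    `jitter` is a hypothetical standard deviation in the same length
    unit as the coordinates.
    """
    rotated = rotate(coords, random_rotation_matrix(rng))
    return [tuple(c + rng.gauss(0.0, jitter) for c in p) for p in rotated]


if __name__ == "__main__":
    rng = random.Random(0)
    molecule = [(0.0, 0.0, 0.0), (0.96, 0.0, 0.0), (-0.24, 0.93, 0.0)]
    for atom in augment(molecule, rng):
        print(tuple(round(c, 3) for c in atom))
```

A pure rotation leaves all interatomic distances unchanged, so it teaches the model orientation independence, while the small displacements expose it to slightly strained bonds.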

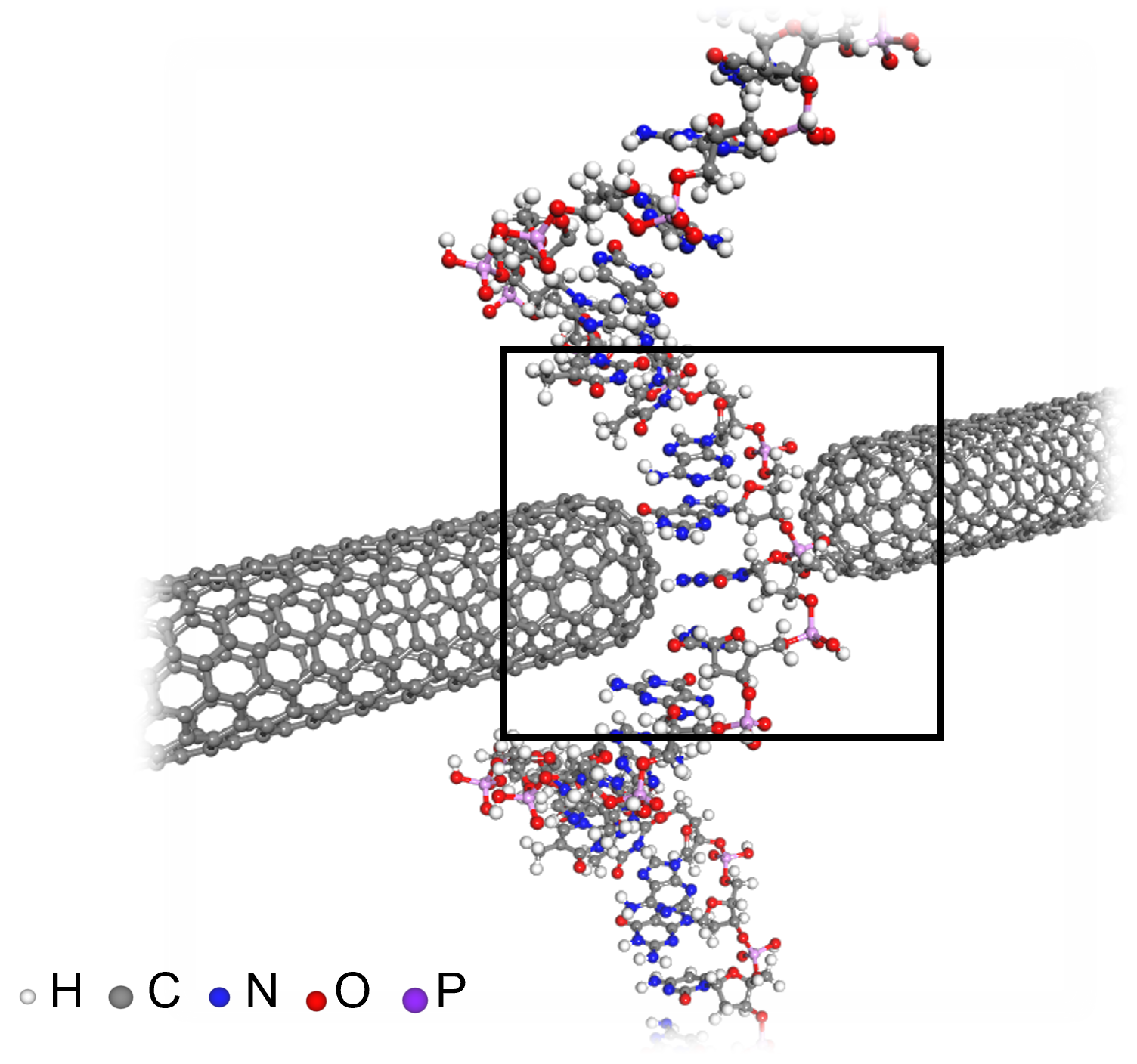

< Figure 3. An example of applying the DeepSCF methodology to a carbon nanotube-based DNA sequence analysis device model (top left). In addition to classical mechanical interatomic forces (bottom right), the residual electron density (top right) and quantum mechanical electronic structure properties that contain chemical bonding information, such as the electronic density of states (DOS) (bottom left), are rapidly predicted with an accuracy matching that of standard DFT calculations that perform the SCF process. >

Professor Yong-Hoon Kim, who supervised the research, explained that his team had found a way to map quantum mechanical chemical bonding information in a 3D space onto artificial neural networks. He noted, “Since quantum mechanical electron structure calculations underpin materials simulations across all scales, this research establishes a foundational principle for accelerating material calculations using artificial intelligence.”

Ryong-Gyu Lee, a PhD candidate in the School of Electrical Engineering, served as the first author of this research, which was published online on October 24 in npj Computational Materials, a prestigious journal in the field of computational materials science. (Paper title: “Convolutional network learning of self-consistent electron density via grid-projected atomic fingerprints”)

This research was conducted with support from the KAIST High-Risk Research Program for Graduate Students and the National Research Foundation of Korea’s Mid-career Researcher Support Program.

2024.10.30 View 3336

KAIST Develops Technology for the Precise Diagnosis of Electric Vehicle Batteries Using Small Currents

Accurately diagnosing the state of electric vehicle (EV) batteries is essential for their efficient management and safe use. KAIST researchers have developed a new technology that can diagnose and monitor the state of batteries with high precision using only small amounts of current, which is expected to maximize the batteries’ long-term stability and efficiency.

KAIST (represented by President Kwang Hyung Lee) announced on the 17th of October that a research team led by Professors Kyeongha Kwon and Sang-Gug Lee from the School of Electrical Engineering had developed electrochemical impedance spectroscopy (EIS) technology that can be used to improve the stability and performance of high-capacity batteries in electric vehicles.

EIS is a powerful tool that measures the magnitude of and changes in a battery's impedance*, allowing the evaluation of battery efficiency and loss. It is considered an important tool for assessing the state of charge (SOC) and state of health (SOH) of batteries. Additionally, it can be used to identify thermal characteristics and chemical or physical changes, predict battery life, and determine the causes of failures.

*Battery impedance: A measure of the resistance to current flow within the battery that is used to assess battery performance and condition.
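To make the idea of frequency-dependent impedance concrete, the sketch below evaluates a simple equivalent circuit: a series resistance followed by one resistor and capacitor in parallel. This circuit is a common textbook simplification and is an assumption here, not the model used by the KAIST team; all component values are hypothetical.

```python
import cmath
import math


def rc_model_impedance(freq_hz, r_series=0.002, r_parallel=0.003, c_parallel=5.0):
    """Complex impedance (ohms) of R_series + (R_parallel || C_parallel).

    Hypothetical values: milliohm-scale resistances and a 5 F capacitance.
    """
    omega = 2.0 * math.pi * freq_hz
    z_parallel = r_parallel / (1.0 + 1j * omega * r_parallel * c_parallel)
    return r_series + z_parallel


if __name__ == "__main__":
    for freq in (0.01, 1.0, 10.0, 100.0, 10000.0):
        z = rc_model_impedance(freq)
        print(
            f"{freq:>8.2f} Hz  |Z| = {abs(z) * 1000:.3f} mOhm  "
            f"phase = {math.degrees(cmath.phase(z)):.1f} deg"
        )
```

In this model the impedance approaches `r_series + r_parallel` at very low frequency and `r_series` at very high frequency, which is why sweeping the frequency lets different loss mechanisms be told apart.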

However, traditional EIS equipment is expensive and complex, making it difficult to install, operate, and maintain. Moreover, because of limits in sensitivity and precision, such equipment must apply current disturbances of several amperes (A) to a battery, which can cause significant electrical stress, increases the risk of battery failure or fire, and makes the technique difficult to use in practice.

< Figure 1. Flow chart for diagnosing and preventing unexpected combustion of electric vehicle batteries using electrochemical impedance spectroscopy (EIS). >

To address this, the KAIST research team developed and validated a low-current EIS system for diagnosing the condition and health of high-capacity EV batteries. This EIS system can precisely measure battery impedance with low current disturbances (10 mA), minimizing thermal effects and safety issues during the measurement process.
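One generic way to recover an impedance from a small sinusoidal current disturbance is to correlate the sampled current and voltage with the excitation frequency and take the ratio of the two phasors. The sketch below illustrates that idea only; it is not the team's circuit or algorithm. The function names, the 3.7 V DC level, and the 4 mΩ test impedance are all hypothetical, and the record is assumed to span a whole number of excitation periods.

```python
import cmath
import math


def simulate_response(z_true, freq_hz, sample_rate_hz, n_samples,
                      current_amp=0.010, v_dc=3.7):
    """Simulate a 10 mA sinusoidal current and the resulting cell voltage."""
    current, voltage = [], []
    for k in range(n_samples):
        angle = 2.0 * math.pi * freq_hz * k / sample_rate_hz
        current.append(current_amp * math.sin(angle))
        # AC voltage = |Z| * I, shifted by the impedance phase, on a DC level.
        voltage.append(
            v_dc + current_amp * abs(z_true) * math.sin(angle + cmath.phase(z_true))
        )
    return current, voltage


def estimate_impedance(current, voltage, freq_hz, sample_rate_hz):
    """Estimate complex impedance at one frequency from sampled signals.

    Assumes the record covers an integer number of periods, so the DC
    level and the negative-frequency term cancel in the sums.
    """
    def phasor(samples):
        acc = 0j
        for k, s in enumerate(samples):
            acc += s * cmath.exp(-2j * math.pi * freq_hz * k / sample_rate_hz)
        return 2.0 * acc / len(samples)

    return phasor(voltage) / phasor(current)


if __name__ == "__main__":
    z_true = cmath.rect(0.004, -0.3)  # hypothetical 4 mOhm, -0.3 rad
    i_samples, v_samples = simulate_response(z_true, 10.0, 1000.0, 1000)
    z_est = estimate_impedance(i_samples, v_samples, 10.0, 1000.0)
    print(f"|Z| = {abs(z_est) * 1000:.4f} mOhm, phase = {cmath.phase(z_est):.4f} rad")
```

With these illustrative numbers, a 10 mA disturbance across 4 mΩ produces a voltage ripple of only 40 µV on top of a multi-volt DC level, which hints at why measuring with small currents demands high precision.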

In addition, the system minimizes bulky and costly components, making it easy to integrate into vehicles. The system was proven effective in identifying the electrochemical properties of batteries under various operating conditions, including different temperatures and SOC levels.