See-through exhibitions using smartphones: KAIST develops the AR magic lens, WonderScope

WonderScope shows what’s underneath the surface of an object using augmented reality technology.

< Photo 1. Demonstration at ACM SIGGRAPH >

- A KAIST research team led by Professor Woohun Lee from the Department of Industrial Design and Professor Geehyuk Lee from the School of Computing has developed a smartphone “appcessory” called WonderScope that can easily add an augmented reality (AR) perspective to the surface of exhibits

- The research won an Honorable Mention for Emerging Technologies Best in Show at ACM SIGGRAPH, one of the largest international conferences on computer graphics and interactive techniques

- The technology was improved and validated through real-world use in three special exhibitions: one at the Geological Museum of the Korea Institute of Geoscience and Mineral Resources (KIGAM) in 2020, and two at the National Science Museum in 2021 and 2022

- The technology is expected to be used for public science exhibitions and museums as well as for interactive teaching materials to stimulate children’s curiosity

A KAIST research team led by Professor Woohun Lee from the Department of Industrial Design and Professor Geehyuk Lee from the School of Computing has developed a novel augmented reality (AR) device, WonderScope, which displays the inside of an object directly from its surface. By attaching WonderScope to a mobile device and connecting it via Bluetooth, users can see through exhibits as if looking through a magic lens.

Many science museums now offer AR apps for mobile devices. Such apps add digital information to an exhibition and provide a unique experience. However, visitors must watch the screen from some distance away from the exhibited items, which often leads them to focus more on the digital content than on the exhibits themselves. In other words, the distance and distractions between the exhibit and the mobile device may actually make visitors feel detached from the exhibition. To solve this problem, museums needed a magic AR lens that could be used directly on the surface of the item.

To accomplish this, the smartphone must know exactly where on the object's surface it is placed. Generally, this would require an additional recognition device either inside the item or on its surface, or a special pattern printed on the surface. Realistically, these solutions are impractical, as they would either make exhibits look overly complex or impose spatial restrictions.

WonderScope, on the other hand, uses a much more practical method to identify the location of a smartphone on the surface of an exhibit. First, it reads a small RFID tag attached to the object's surface, which provides a known reference position. It then tracks the smartphone as it moves by accumulating its relative movements, measured by an optical displacement sensor and an acceleration sensor. To calculate the device's position more accurately, the research team also took into account the height of the smartphone and the characteristics of the surface profile. Because only RFID tags need to be attached to or embedded in the exhibits, visitors can easily experience a magic AR lens through their smartphones.
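The positioning idea described above, an absolute fix from an RFID tag followed by accumulated relative motion (dead reckoning), can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the tag IDs, the tag-position table, and the millimetre units are hypothetical, and the height and surface-profile corrections the team mentions are left out. It is not WonderScope's actual code or data format.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

# Hypothetical table: RFID tag ID -> position of that tag on the exhibit surface (mm).
TAG_POSITIONS_MM: Dict[str, Tuple[float, float]] = {
    "tag-A": (0.0, 0.0),
    "tag-B": (120.0, 40.0),
}


@dataclass
class SurfaceTracker:
    """Dead reckoning anchored by RFID reads (illustrative sketch only)."""
    x_mm: float = 0.0
    y_mm: float = 0.0
    anchored: bool = False

    def on_rfid_read(self, tag_id: str) -> None:
        # An RFID read gives an absolute fix: jump to the tag's known position,
        # discarding any drift accumulated since the previous fix.
        self.x_mm, self.y_mm = TAG_POSITIONS_MM[tag_id]
        self.anchored = True

    def on_displacement(self, dx_mm: float, dy_mm: float) -> None:
        # Relative motion is only meaningful once a reference position exists.
        if not self.anchored:
            return
        self.x_mm += dx_mm
        self.y_mm += dy_mm

    def position(self) -> Optional[Tuple[float, float]]:
        return (self.x_mm, self.y_mm) if self.anchored else None


tracker = SurfaceTracker()
tracker.on_displacement(5.0, 5.0)   # ignored: no tag has been read yet
tracker.on_rfid_read("tag-B")
tracker.on_displacement(10.0, -5.0)
tracker.on_displacement(2.5, 0.0)
print(tracker.position())  # (132.5, 35.0)
```

Re-reading a tag resets accumulated error, which is why even a single small tag per exhibit can be enough in this scheme.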

For wider use, WonderScope must be able to locate itself on many types of exhibit surfaces. To this end, WonderScope combines readings from an optical displacement sensor and an acceleration sensor whose strengths complement each other. This allows stable positioning on various materials, including paper, stone, wood, plastic, acrylic, and glass, as well as on surfaces with physical patterns or irregularities. As a result, WonderScope can determine its position even when held up to 4 centimeters away from an object's surface, which also enables simple three-dimensional interactions near the exhibit.
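The article does not say how the two sensor readings are combined. One simple possibility, shown here as a hypothetical single-axis sketch, is a quality-weighted blend: trust the optical sensor in proportion to an assumed surface-quality score (optical sensors tend to lose tracking on glass or when lifted), and otherwise fall back on integrated accelerometer readings. The `quality` score, the units, and the velocity re-anchoring rule are all assumptions, not the published WonderScope algorithm.

```python
from dataclasses import dataclass


@dataclass
class AxisFusion:
    """Quality-weighted blend of optical and accelerometer motion along one axis.

    Hypothetical scheme for illustration only.
    """
    velocity_mm_s: float = 0.0  # velocity estimate carried between steps

    def step(self, optical_dx_mm: float, quality: float,
             accel_mm_s2: float, dt_s: float) -> float:
        """Return the fused displacement for this time step (mm)."""
        q = min(max(quality, 0.0), 1.0)  # clamp trust in the optical reading to [0, 1]

        # Accelerometer path: integrate acceleration to velocity, then to displacement.
        # On its own this drifts, because integration accumulates sensor error.
        self.velocity_mm_s += accel_mm_s2 * dt_s
        accel_dx_mm = self.velocity_mm_s * dt_s

        # Blend the two displacement estimates according to optical quality.
        dx_mm = q * optical_dx_mm + (1.0 - q) * accel_dx_mm

        # When the optical sensor is trusted, re-anchor the velocity to its reading
        # so accelerometer drift does not keep growing.
        self.velocity_mm_s = q * (optical_dx_mm / dt_s) + (1.0 - q) * self.velocity_mm_s
        return dx_mm


axis = AxisFusion()
print(axis.step(optical_dx_mm=1.0, quality=1.0, accel_mm_s2=0.0, dt_s=0.01))  # good surface
print(axis.step(optical_dx_mm=0.0, quality=0.0, accel_mm_s2=0.0, dt_s=0.01))  # optical lost
```

In the second step the optical reading is ignored and the device is assumed to keep moving at the last well-tracked velocity, which is the kind of gap-bridging a complementary sensor can provide.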

The research team developed several example project templates and WonderScope support tools that make it easy to build smartphone apps with Unity, a general-purpose VR and game engine. WonderScope is also compatible with various Android devices, including smartwatches, smartphones, and tablets, allowing it to be applied to exhibitions in many forms.
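Once the position is known, a magic-lens app still has to decide which part of the hidden "inside" image to show on screen. The sketch below is a hypothetical illustration of that mapping: it converts a tracked position and an assumed on-screen field of view (both in millimetres) into a normalised texture rectangle, clamped to the image. In a Unity app the same numbers could drive a camera or texture offset; nothing here reflects the team's actual support tools.

```python
from typing import Tuple


def lens_window_uv(x_mm: float, y_mm: float,
                   exhibit_w_mm: float, exhibit_h_mm: float,
                   view_w_mm: float, view_h_mm: float) -> Tuple[float, float, float, float]:
    """Return (u0, v0, u1, v1): the region of the inner-layer image under the phone.

    Coordinates are normalised to [0, 1] across the exhibit. The phone is assumed to be
    centred on (x_mm, y_mm) and to show a view_w_mm x view_h_mm patch of the surface.
    """
    def clamp(v: float) -> float:
        return min(max(v, 0.0), 1.0)

    half_w, half_h = view_w_mm / 2.0, view_h_mm / 2.0
    u0 = clamp((x_mm - half_w) / exhibit_w_mm)
    u1 = clamp((x_mm + half_w) / exhibit_w_mm)
    v0 = clamp((y_mm - half_h) / exhibit_h_mm)
    v1 = clamp((y_mm + half_h) / exhibit_h_mm)
    return u0, v0, u1, v1


# A 200 x 100 mm exhibit, phone centred at (100, 50), showing a 40 x 20 mm patch.
print(lens_window_uv(100.0, 50.0, 200.0, 100.0, 40.0, 20.0))  # (0.4, 0.4, 0.6, 0.6)
```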

< Photo 2. Human body model showing demonstration >

< Photo 3. Demonstration of the underground mineral exploration game >

< Photo 4. Demonstration of Apollo 11 moon exploration experience >

The research team developed WonderScope with funding from the science and culture exhibition enhancement support project of the Ministry of Science and ICT. From October 27, 2020, to February 28, 2021, WonderScope was used to observe underground volcanic activity and the insides of volcanic rocks at “There Once was a Volcano”, a special exhibition held at the Geological Museum of the Korea Institute of Geoscience and Mineral Resources (KIGAM). From September 28 to October 3, 2021, it was used to observe the surface of Jung-moon-kyung (a bronze mirror with a fine linear design) at the special exhibition “A Bronze Mirror Shines on Science” at the National Science Museum. From August 2 to October 3, 2022, it was used in a moon landing simulation at “The Special Exhibition on Moon Exploration”, also at the National Science Museum. Through these field demonstrations, the research team has steadily improved the performance and usability of WonderScope.

< Photo 5. Observation of surface corrosion of the main gate >

The research team demonstrated WonderScope at the Emerging Technologies forum of ACM SIGGRAPH 2022, a computer graphics and interactive techniques conference held in Vancouver, Canada, from August 8 to 11, 2022. At this conference, which showcases the latest interactive technologies, the team won an Honorable Mention for Best in Show. The judges commented that “WonderScope will be a new technology that provides the audience with a unique joy of participation during their visits to exhibitions and museums.”

< Photo 6. Cover of Digital Creativity >

WonderScope is a cylindrical “appcessory” module, 5 cm in diameter and 4.5 cm tall. It is small enough to attach easily to a smartphone and to use on most exhibits. Professor Woohun Lee from the KAIST Department of Industrial Design, who supervised the research, said, “WonderScope can be applied to various applications including not only educational, but also industrial exhibitions, in many ways.” He added, “We also expect for it to be used as an interactive teaching tool that stimulates children’s curiosity.”

Introductory video of WonderScope: https://www.youtube.com/watch?v=X2MyAXRt7h4&t=7s

2022.10.24

Wirelessly Rechargeable Soft Brain Implant Controls Brain Cells

Researchers have invented a smartphone-controlled soft brain implant that can be recharged wirelessly from outside the body. It enables long-term neural circuit manipulation without the need for periodic disruptive surgeries to replace the battery of the implant. Scientists believe this technology can help uncover and treat psychiatric disorders and neurodegenerative diseases such as addiction, depression, and Parkinson’s.

A group of KAIST researchers and collaborators has engineered a tiny brain implant that can be wirelessly recharged from outside the body to control brain circuits for long periods without battery replacement. The device is made of ultra-soft, bio-compliant polymers to provide long-term compatibility with tissue. Equipped with micrometer-sized LEDs (about the size of a grain of salt) mounted on ultrathin probes (the thickness of a human hair), it can wirelessly manipulate target neurons deep in the brain using light.

This study, led by Professor Jae-Woong Jeong, builds on the wireless head-mounted neural implant he developed in 2019. That earlier version could indefinitely deliver multiple drugs and light stimulation wirelessly, controlled by a smartphone. For more, see “Manipulating Brain Cells by Smartphone.”

For the upgraded version, the research team developed a fully implantable, soft optoelectronic system that can be remotely and selectively controlled by a smartphone. The research was published in Nature Communications on January 22, 2021.

The new wireless charging technology addresses the limitations of current brain implants. Wireless implantable devices have recently become popular alternatives to conventional tethered implants because they help minimize stress and inflammation in freely moving animals during brain studies, which in turn extends the lifetime of the devices. However, such devices require either repeated surgeries to replace discharged batteries or special, bulky wireless power setups, which limit experimental options as well as the scalability of animal experiments.

“This powerful device eliminates the need for additional painful surgeries to replace an exhausted battery in the implant, allowing seamless chronic neuromodulation,” said Professor Jeong. “We believe that the same basic technology can be applied to various types of implants, including deep brain stimulators, and cardiac and gastric pacemakers, to reduce the burden on patients for long-term use within the body.”

To enable wireless battery charging and control, the researchers developed a tiny circuit that integrates a wireless energy harvester with a coil antenna and a Bluetooth Low Energy chip. An alternating magnetic field can harmlessly pass through tissue and generate electricity inside the device to charge the battery. The battery-powered Bluetooth implant then delivers programmable patterns of light to brain cells, controlled in real time through an “easy-to-use” smartphone app.
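The charging principle is electromagnetic induction. As a worked example of textbook physics (not figures from the study), a receiving coil with N turns and area A in a uniform sinusoidal field B(t) = B0 sin(2πft), perpendicular to the coil, has a flux linkage N·A·B(t); by Faraday's law the induced voltage then peaks at N·A·B0·2πf. The values in the example call are arbitrary placeholders, since the article does not give the coil size, field strength, or frequency.

```python
import math


def peak_induced_emf(turns: int, area_m2: float, b_peak_t: float, freq_hz: float) -> float:
    """Peak EMF (volts) induced in a coil by a uniform sinusoidal magnetic field.

    Faraday's law: emf(t) = -N * dPhi/dt, with Phi = A * B0 * sin(2*pi*f*t)
    for a field perpendicular to the coil, so the peak is N * A * B0 * 2*pi*f.
    """
    omega = 2.0 * math.pi * freq_hz  # angular frequency (rad/s)
    return turns * area_m2 * b_peak_t * omega


# Placeholder values only: 10 turns, 1 cm^2 coil, 1 mT peak field, 1 kHz.
print(peak_induced_emf(turns=10, area_m2=1e-4, b_peak_t=1e-3, freq_hz=1e3))
```

The induced voltage is alternating, so a real harvester would still need to rectify it before it could charge a battery.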

“This device can be operated anywhere and anytime to manipulate neural circuits, which makes it a highly versatile tool for investigating brain functions,” said lead author Choong Yeon Kim, a researcher at KAIST.

Neuroscientists successfully tested these implants in rats and demonstrated their ability to suppress cocaine-induced behaviour after the rats were injected with cocaine. This was achieved by precise light stimulation of relevant target neurons in their brains using the smartphone-controlled LEDs. Furthermore, the battery in the implants could be repeatedly recharged while the rats were behaving freely, thus minimizing any physical interruption to the experiments.
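The article describes the smartphone app delivering “programmable patterns of light” to the LEDs but does not specify how a pattern is defined. Optogenetic stimulation is commonly described by pulse frequency, pulse width, and total duration, so the hypothetical sketch below expands such a description into LED on/off intervals that firmware could follow. The parameter names and validation rules are assumptions, not the implant's actual protocol.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class PulsePattern:
    """Hypothetical light-stimulation pattern (not the implant's actual format)."""
    frequency_hz: float    # pulses per second
    pulse_width_ms: float  # LED on-time of each pulse
    duration_s: float      # total length of the stimulation train

    @property
    def period_ms(self) -> float:
        return 1000.0 / self.frequency_hz

    def validate(self) -> None:
        if self.frequency_hz <= 0 or self.pulse_width_ms <= 0 or self.duration_s <= 0:
            raise ValueError("all parameters must be positive")
        # A pulse must end before the next one starts, otherwise the LED never turns off.
        if self.pulse_width_ms >= self.period_ms:
            raise ValueError("pulse width must be shorter than the pulse period")

    def on_intervals_ms(self) -> List[Tuple[float, float]]:
        """Return (on, off) times in milliseconds for every pulse in the train."""
        self.validate()
        n_pulses = round(self.duration_s * self.frequency_hz)
        return [(i * self.period_ms, i * self.period_ms + self.pulse_width_ms)
                for i in range(n_pulses)]


# 20 Hz, 10 ms pulses for 0.2 s -> four pulses.
print(PulsePattern(frequency_hz=20, pulse_width_ms=10, duration_s=0.2).on_intervals_ms())
# [(0.0, 10.0), (50.0, 60.0), (100.0, 110.0), (150.0, 160.0)]
```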

“Wireless battery re-charging makes experimental procedures much less complicated,” said co-lead author Min Jeong Ku, a researcher at Yonsei University’s College of Medicine.

“The fact that we can control a specific behaviour of animals, by delivering light stimulation into the brain just with a simple manipulation of smartphone app, watching freely moving animals nearby, is very interesting and stimulates a lot of imagination,” said Jeong-Hoon Kim, a professor of physiology at Yonsei University’s College of Medicine. “This technology will facilitate various avenues of brain research.”

The researchers believe this brain implant technology may lead to new opportunities for brain research and therapeutic intervention to treat diseases in the brain and other organs.

This work was supported by grants from the National Research Foundation of Korea and the KAIST Global Singularity Research Program.

-Profile

Professor Jae-Woong Jeong

https://www.jeongresearch.org/

School of Electrical Engineering

KAIST

2021.01.26

Object Identification and Interaction with a Smartphone Knock

(Professor Lee (far right) demonstrates 'Knocker' with his students.)

A KAIST team has developed a new technology, “Knocker,” that identifies objects and executes actions when a user simply knocks on them with a smartphone. Software powered by machine learning analyzes the resulting sounds, vibrations, and other responses to carry out the user's commands.

What sets Knocker apart from existing technology is its fusion of sound and motion sensing. Previously, object identification relied on either computer vision with cameras or hardware such as RFID (Radio Frequency Identification) tags. Both approaches have limitations. With computer vision, users need to photograph every item, and the technology does not work well in poor lighting. Hardware-based approaches add cost and labor.

Knocker, on the other hand, can identify objects even in the dark using only a smartphone, without any specialized hardware or a camera. It uses the smartphone’s built-in sensors, such as the microphone, accelerometer, and gyroscope, to capture the unique set of responses generated when the phone is knocked against an object. Machine learning then analyzes these responses to classify and identify the object.
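As a rough illustration of the sense, extract-features, classify pipeline described above, the hypothetical sketch below summarises one knock's microphone, accelerometer, and gyroscope samples as a small feature vector and labels it with a 1-nearest-neighbour rule. The features, the classifier, and the training values are stand-ins chosen for brevity; the article does not say which features or machine learning model Knocker actually uses.

```python
import math
from statistics import mean
from typing import List, Sequence, Tuple

Features = Tuple[float, float, float, float]


def knock_features(audio: Sequence[float], accel_mag: Sequence[float],
                   gyro_mag: Sequence[float]) -> Features:
    """Summarise one knock as (audio RMS, audio zero-crossing rate, peak accel, mean gyro)."""
    rms = math.sqrt(mean(s * s for s in audio))
    crossings = sum(1 for a, b in zip(audio, audio[1:]) if (a < 0) != (b < 0))
    zcr = crossings / (len(audio) - 1)
    return (rms, zcr, max(accel_mag), mean(gyro_mag))


def classify(features: Features, training: List[Tuple[Features, str]]) -> str:
    """1-nearest-neighbour: return the label of the closest training example."""
    _, label = min(training, key=lambda item: math.dist(features, item[0]))
    return label


training = [
    ((0.50, 0.30, 2.0, 0.10), "water bottle"),  # made-up feature values
    ((0.10, 0.05, 1.0, 0.05), "book"),
]
knock = knock_features(audio=[0.4, -0.5, 0.45, -0.4],
                       accel_mag=[1.2, 1.9, 1.5], gyro_mag=[0.08, 0.12])
print(classify(knock, training))  # water bottle
```

A practical system would normalise features that live on different scales and train on many knocks per object; the nearest-neighbour rule is used here only because it fits in a few lines.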

The research team under Professor Sung-Ju Lee from the School of Computing confirmed the applicability of Knocker using 23 everyday objects, such as books, laptop computers, water bottles, and bicycles. In noisy environments such as a busy café or the side of a road, it achieved 83% identification accuracy; in a quiet indoor environment, accuracy rose to 98%.

The team believes Knocker will open a new paradigm of object interaction. For instance, knocking on an empty water bottle could prompt the smartphone to automatically order new bottles through a merchant app. When integrated with IoT devices, knocking on a bed’s headboard before going to sleep could turn off the lights and set an alarm. The team proposed and implemented 15 applications in their paper, presented at the 2019 ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp 2019), held in London in September 2019.
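Once an object is recognised, turning the label into an action can be as simple as a lookup table. The hypothetical sketch below maps object labels to handlers and, as an assumed design choice, ignores predictions below a confidence threshold so that a misidentified knock (more likely in noisy places) does not trigger an unwanted order or alarm. The labels, handlers, and threshold are illustrative only.

```python
from typing import Callable, Dict


def make_knock_dispatcher(actions: Dict[str, Callable[[], str]],
                          min_confidence: float = 0.9) -> Callable[[str, float], str]:
    """Return a handler that runs the action registered for a recognised object."""
    def on_knock(label: str, confidence: float) -> str:
        if confidence < min_confidence:
            return "ignored: low confidence"
        action = actions.get(label)
        if action is None:
            return f"no action for {label!r}"
        return action()
    return on_knock


# Hypothetical actions echoing the examples above.
dispatch = make_knock_dispatcher({
    "water bottle": lambda: "reorder water bottles",
    "bed headboard": lambda: "lights off, alarm set",
})
print(dispatch("water bottle", 0.97))   # reorder water bottles
print(dispatch("bed headboard", 0.60))  # ignored: low confidence
```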

Professor Sung-Ju Lee said, “This new technology does not require any specialized sensor or hardware. It simply uses the built-in sensors on smartphones and takes advantage of the power of machine learning. It’s a software solution that everyday smartphone users could immediately benefit from.” He continued, “This technology enables users to conveniently interact with their favorite objects.”

The research was supported in part by the Next-Generation Information Computing Development Program through the National Research Foundation of Korea funded by the Ministry of Science and ICT and an Institute for Information & Communications Technology Promotion (IITP) grant funded by the Ministry of Science and ICT.

Figure: An example knock on a bottle. Knocker identifies the object by analyzing the unique set of responses from the knock and automatically launches an appropriate application or service.

2019.10.02

Augmented Reality Application for Smart Tour

‘K-Culture Time Machine,’ an augmented and virtual reality application, offers a new way to tour historical sites. Professor Woon-taek Woo's research team at the KAIST Graduate School of Culture Technology developed the AR/VR application for smart tourism.

The 'K-Culture Time Machine' application (iOS App Store name: KCTM) was launched on the iOS App Store in Korea on May 22 as a pilot service targeting Changdeokgung Palace in Seoul.

The application lets users experience cultural heritage sites and relics remotely, across time and space, through wearable 360-degree video. By mounting a smartphone in a smartphone HMD, users can remotely explore cultural heritage sites in 360-degree video and search for information on historical figures, places, and events related to the heritage. Users can also experience 3D reconstructions of lost cultural heritage.

Even without a wearable HMD, the application can serve as a mobile cultural heritage guide based on vision-based recognition of heritage sites. Using the smartphone's built-in camera, it identifies the heritage in view and provides related information and content. For example, a user can move through Changdeokgung Palace from Donhwa-Gate (the main gate of the palace) to Injeong-Jeon (the main hall), Injeong-Moon (the main gate of Injeong-Jeon), and Huijeongdang (the king's resting place). Through 360-degree panoramic images and videos, the user can also experience virtual scenes of the heritage sites.

A virtual 3D reconstruction of the Seungjeongwon (Royal Secretariat), which no longer exists, can be shown on the east side of Injeong-Jeon. These functions can be experienced on a smartphone without a wearable device, and the app could become a commercial application for on-site use once the augmented reality function, currently under development, is completed.

Professor Woo and his research team built standardized metadata for the cultural heritage database and AR/VR content and applied it to the application. Unlike existing applications, whose content is consumed once and then discarded after development, this standardized metadata allows reusable and interoperable content to be created. Professor Woo said, "By enhancing the interoperability and reusability of AR contents, we will be able to preoccupy new markets in the field of smart tourism."
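The article does not describe the metadata schema itself. As a hypothetical sketch of the idea, the record below links a heritage item to related people, places, events, and AR/VR assets, and serialises it to JSON so that other applications could read and reuse the same content. All field names and the example URI are placeholders, not the team's standard.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ContentAsset:
    media_type: str  # e.g. "360-video" or "3d-model" (illustrative values)
    uri: str


@dataclass
class HeritageRecord:
    """Hypothetical reusable metadata record for one heritage item."""
    heritage_id: str
    name: str
    related_people: List[str] = field(default_factory=list)
    related_places: List[str] = field(default_factory=list)
    related_events: List[str] = field(default_factory=list)
    assets: List[ContentAsset] = field(default_factory=list)

    def to_json(self) -> str:
        # asdict() also converts the nested ContentAsset objects into dicts.
        return json.dumps(asdict(self), ensure_ascii=False, sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "HeritageRecord":
        data = json.loads(text)
        data["assets"] = [ContentAsset(**a) for a in data.get("assets", [])]
        return cls(**data)


record = HeritageRecord(
    heritage_id="example-0001",
    name="Injeong-Jeon",
    related_places=["Changdeokgung Palace"],
    assets=[ContentAsset("360-video", "https://example.org/injeongjeon-360.mp4")],
)
restored = HeritageRecord.from_json(record.to_json())
print(restored == record)  # True
```

Keeping content descriptions in a shared, serialisable format like this is what would let a second app reuse the same assets instead of rebuilding them.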

The research was conducted jointly with Post Media (CEO Hong Seung-mo) as part of the CT R&D project of Korea's Ministry of Culture, Sports and Tourism. The results will be presented at the HCI International 2017 conference in Canada this July.

Figure 1. The 360-degree panoramic image/video screen of 'K-Culture Time Machine'. A smartphone HMD allows users to freely explore various cultural sites remotely.

Figure 2. The mobile augmented reality screen of 'K-Culture Time Machine'. By analyzing the user's location and the scene viewed through the camera, the application provides information related to the cultural heritage to enhance the user experience.

Figure 3. The concept of the 360-degree panoramic video-based VR service of 'K-Culture Time Machine', a wearable application supporting smart tours of historical sites. Through a smartphone HMD, a user can remotely experience cultural heritage sites and 3D reconstructions of cultural heritage that no longer exists.

2017.05.30

Improving Traffic Safety with a Crowdsourced Traffic Violation Reporting App

KAIST researchers showed that crowdsourced traffic violation reporting with smartphone-based continuous video capture could dramatically change current road policing practices and significantly improve traffic safety.

Professor Uichin Lee of the Department of Industrial and Systems Engineering and the Graduate School of Knowledge Service Engineering at KAIST and his research team designed and evaluated Mobile Roadwatch, a mobile app that helps citizens record traffic violations with their smartphones and report the recorded videos to the police.

The app supports continuous video recording, much like an onboard dashboard camera. Mobile Roadwatch lets drivers safely capture traffic violations by simply touching the smartphone screen while driving. Captured videos are automatically tagged with contextual information such as location and time, which the police can use as evidence when ticketing violators. Users can conveniently review all captured videos and decide which events to report to the police.
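The article does not describe how capture works internally. A common way to implement dashcam-style recording, assumed here, is a fixed-size ring buffer that always holds the last few seconds of video, so that a single touch can save footage of an event that has already happened. The minimal Python sketch below shows that idea; the class names, the 15-second default window, the in-memory frame representation, and the sample coordinates are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Frame:
    timestamp: float  # seconds since recording started
    data: bytes       # encoded video frame (placeholder)


@dataclass
class CapturedEvent:
    frames: list
    captured_at: datetime
    latitude: float
    longitude: float


class ContinuousRecorder:
    """Dashcam-style recorder that keeps only the most recent frames."""

    def __init__(self, fps: int = 30, buffer_seconds: int = 15):
        # A bounded deque silently drops the oldest frame once it is full.
        self.buffer = deque(maxlen=fps * buffer_seconds)
        self.events = []  # captured clips waiting for the user's review

    def add_frame(self, frame: Frame) -> None:
        self.buffer.append(frame)

    def on_screen_touch(self, latitude: float, longitude: float) -> CapturedEvent:
        # One touch snapshots the buffered footage and tags it with time and
        # location; nothing is sent to the police until the user reviews it.
        event = CapturedEvent(
            frames=list(self.buffer),
            captured_at=datetime.now(),
            latitude=latitude,
            longitude=longitude,
        )
        self.events.append(event)
        return event


# Usage: a tiny buffer of 2 fps x 3 s = 6 frames, fed 10 frames.
recorder = ContinuousRecorder(fps=2, buffer_seconds=3)
for i in range(10):
    recorder.add_frame(Frame(timestamp=i / 2, data=b""))
event = recorder.on_screen_touch(latitude=36.37, longitude=127.36)
print(len(event.frames), event.frames[0].timestamp)  # 6 2.0
```

Because the buffer has a fixed maximum length, memory use stays constant however long the drive lasts. A real app would likely also keep recording for a few seconds after the touch so the clip covers the whole event.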

The team conducted a two-week field study to understand how drivers use Mobile Roadwatch. Drivers tended to capture every traffic risk they saw, regardless of how involved they were or how serious the risk was. When it came to actually reporting, however, they tended to report only serious violations that could have led to accidents, such as traffic signal violations and illegal U-turns. After receiving feedback from the police on their reports, drivers typically felt very good about their contribution to traffic safety.

Some drivers were also pleased to learn that offenders had been ticketed, since they felt the offenders deserved it. While participating in the Mobile Roadwatch campaign, drivers reported that they tried to drive as safely as possible and obey traffic laws. They wanted to be fair, so that they could capture others’ violations without feeling guilty, and they were also afraid that other drivers might capture their own violations.

Professor Lee said, “Our study participants answered that Mobile Roadwatch served as a very useful tool for reporting traffic violations, and they were highly satisfied with its features. Beyond simple reporting, our tool can be extended to support online communities, which help people actively discuss various local safety issues and work with the police and local authorities to solve these safety issues.”

Korea and India were early adopters of video-based reporting of traffic violations to the police, and the number of reports has increased dramatically in recent years. For example, Korea’s ‘Looking for a Witness’ service (released in April 2015) had received more than half a million reported violations as of November 2016. In the US, authorities started tapping into smartphone recordings by releasing video-based reporting apps such as ICE Blackbox and Mobile Justice. Professor Lee noted that existing services cannot be used while driving, because none of them support continuous video recording and safe event capture behind the wheel.

Professor Lee’s team has been incorporating advanced computer vision techniques into Mobile Roadwatch for automatically capturing traffic violations and safety risks, including potholes and obstacles. The researchers will present their results in May at the ACM CHI Conference on Human Factors in Computing Systems (CHI 2017) in Denver, CO, USA. Their research was supported by the KAIST-KUSTAR fund.

(Caption: A driver captures an event by touching the screen. Mobile Roadwatch supports continuous video recording and safe event capture behind the wheel.)

2017.04.10

An App to Digitally Detox from Smartphone Addiction: Lock n' LoL

KAIST researchers have developed an application that helps people restrain themselves from using smartphones during meetings or social gatherings.

The app’s Group Limit mode uses peer pressure to make users curtail their smartphone use, while still allowing them to use the phone in an emergency.

When a company released NoPhones, thin rectangular plastic blocks that looked just like smartphones but did nothing, many doubted that the fake phones would find any users. Surprisingly, close to 4,000 of them were sold to consumers who wanted to curb their phone use.

As smartphones penetrate every facet of our daily lives, a growing number of people have expressed concern about the distraction, and even addiction, that comes from overusing them.

Professor Uichin Lee of the Department of Knowledge Service Engineering at the Korea Advanced Institute of Science and Technology (KAIST) and his research team have addressed this problem by developing Lock n’ LoL (Lock Your Smartphone and Laugh Out Loud), an application that helps people lock their smartphones together and keeps them from using their phones during social activities such as meetings, conferences, and discussions.

Researchers note that smartphone overuse often results from users’ habitual checking of messages, emails, or other online content such as status updates on social networking services (SNS). External stimuli such as notification alarms add to smartphone distractions and interrupt group interactions.

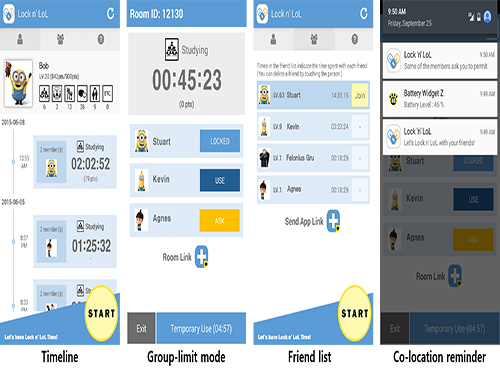

Lock n’ LoL lets users create a new room or join an existing one. Users then invite meeting participants or friends to the room by sharing its ID, and enact the Group Limit (lock) mode. While phones are in lock mode, all alarms and notifications are automatically muted, and users must ask permission to unlock their phones. In an emergency, however, users can access their phones for a cumulative total of five minutes in a temporary unlimit mode.
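The room-and-lock workflow above can be sketched as a small state model. This is a hedged illustration, not the app's code: the `Room` and `Member` classes and the room ID `meeting-42` are hypothetical, the permission request for unlocking is not modeled, and only the cumulative five-minute emergency allowance is enforced.

```python
from dataclasses import dataclass, field

EMERGENCY_BUDGET_SECONDS = 5 * 60  # cumulative five minutes per member


@dataclass
class Member:
    name: str
    emergency_used: int = 0  # seconds already spent in unlimit mode


@dataclass
class Room:
    room_id: str
    members: dict = field(default_factory=dict)
    locked: bool = False

    def join(self, name: str) -> None:
        # In the app, participants join via the shared room ID.
        self.members.setdefault(name, Member(name))

    def start_group_limit(self) -> None:
        self.locked = True  # stands in for muting all alarms and notifications

    def notifications_muted(self) -> bool:
        return self.locked

    def emergency_unlock(self, name: str, seconds: int) -> int:
        """Grant up to `seconds` of phone use from the member's remaining budget."""
        if not self.locked:
            return seconds  # outside lock mode the phone is unrestricted
        member = self.members[name]
        remaining = EMERGENCY_BUDGET_SECONDS - member.emergency_used
        granted = max(0, min(seconds, remaining))
        member.emergency_used += granted
        return granted


# Usage: two members lock their phones; one uses the emergency allowance.
room = Room("meeting-42")
for person in ("alice", "bob"):
    room.join(person)
room.start_group_limit()
granted = [room.emergency_unlock("alice", s) for s in (240, 120, 30)]
print(granted)  # [240, 60, 0]
```

Tracking usage per member rather than per request is what makes the allowance cumulative: several short emergencies draw down the same five-minute budget.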

In addition, the app’s Co-location Reminder detects and lists nearby users to encourage them to limit their phone use. Lock n’ LoL also displays statistics on users’ behavior, such as the current week’s total limit time, the weekly average usage time, top friends ranked by time spent together, and the top activities in which users participated.
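Statistics like these are simple aggregations over logged limit sessions. The sketch below assumes a hypothetical session record (day, minutes, friends present, activity) and computes three of them: this week's total limit time (weeks assumed to start on Monday), top friends by time spent together, and top activities. It is illustrative only; the article does not say how the app stores or computes these figures, and the names in the sample data are made up.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class LimitSession:
    day: date
    minutes: int
    friends: tuple  # other participants in the room
    activity: str   # e.g. "meeting", "study group"


def weekly_stats(sessions, today: date, top_n: int = 3):
    # Assumes the week starts on Monday (date.weekday() == 0).
    week_start = today - timedelta(days=today.weekday())
    this_week = [s for s in sessions if week_start <= s.day <= today]

    time_with = Counter()      # minutes spent in limit mode with each friend
    activity_time = Counter()  # minutes spent in limit mode per activity
    for s in sessions:
        activity_time[s.activity] += s.minutes
        for friend in s.friends:
            time_with[friend] += s.minutes

    return {
        "week_total_limit_minutes": sum(s.minutes for s in this_week),
        "top_friends": time_with.most_common(top_n),
        "top_activities": activity_time.most_common(top_n),
    }


# Usage: 2015-05-13 is a Wednesday, so the week starts on 2015-05-11.
sessions = [
    LimitSession(date(2015, 5, 11), 60, ("minji", "joon"), "meeting"),
    LimitSession(date(2015, 5, 12), 90, ("minji",), "study group"),
    LimitSession(date(2015, 5, 4), 30, ("joon",), "meeting"),
]
stats = weekly_stats(sessions, today=date(2015, 5, 13))
print(stats["week_total_limit_minutes"])  # 150
print(stats["top_friends"])               # [('minji', 150), ('joon', 90)]
```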

Professor Lee said, “We conducted the Lock n’ LoL campaign throughout the campus for one month this year with 1,000 students participating. As a result, we discovered that students accumulated more than 10,000 free hours from using the app on their smartphones. The students said that they were able to focus more on their group activities. In an age of the Internet of Things, we expect that the adverse effects of mobile distractions and addictions will emerge as a social concern, and our Lock n’ LoL is a key effort to address this issue.”

He added, “This app will certainly help family members to interact more with each other during the holiday season.”

Lock n’ LoL is available as a free download on the App Store and Google Play: https://itunes.apple.com/lc/app/lock-n-lol/id1030287673?mt=8.

YouTube link: https://youtu.be/1wY2pI9qFYM

Figure 1: User Interfaces of Lock n’ LoL

This shows the final design of Lock n’ LoL, which consists of three tabs: My Info, Friends, and Group Limit Mode. Users can activate the limit mode by clicking the start button at the bottom of the screen.

Figure 2: Statistics of Field Deployment

This shows the deployment summary of the Lock n’ LoL campaign in May 2015.

2015.12.17

IAMCOMPANY, an educational technology startup created by a KAIST student, featured online in EdSurge

EdSurge, a U.S.-based online news site focused on education and technology innovation, published an article on August 12, 2014, about IAMCOMPANY (http://iamcompany.net), a startup founded by KAIST student Inmo (Ryan) Chung.

The article introduced IAMSCHOOL, one of the company’s most popular free smartphone applications, which “funnels school announcements and class notices to parents’ smartphones using a format similar to Twitter and Google+.”

For more about IAMCOMPANY, please visit the link below:

EdSurge, August 12, 2014

“South Korea’s Biggest Educational Information App Plans Pan-Asian Expansion”

https://www.edsurge.com/n/2014-08-12-south-korea-s-biggest-educational-information-app-plans-pan-asian-expansion

2014.08.19
