KAIST's Pioneering VR Precision Technology & Choreography Tool Receive Spotlights at CHI 2025

Accurate pointing in virtual spaces is essential for seamless interaction. If pointing is not precise, selecting the desired object becomes challenging, breaking user immersion and reducing overall experience quality. KAIST researchers have developed a technology that offers a vivid, lifelike experience in virtual space, alongside a new tool that assists choreographers throughout the creative process.

KAIST (President Kwang-Hyung Lee) announced on May 13th that a research team led by Professor Sang Ho Yoon of the Graduate School of Culture Technology, in collaboration with Professor Yang Zhang of the University of California, Los Angeles (UCLA), has developed the ‘T2IRay’ technology and the ‘ChoreoCraft’ platform, which enables choreographers to work more freely and creatively in virtual reality. The two papers each received an Honorable Mention award, a distinction given to the top 5% of papers, at CHI 2025*, the premier international conference in the field of human-computer interaction, hosted by the Association for Computing Machinery (ACM) from April 25 to May 1.

< (From left) PhD candidates Jina Kim and Kyungeun Jung, master's candidate Hyunyoung Han, and Professor Sang Ho Yoon of the KAIST Graduate School of Culture Technology, with Professor Yang Zhang (top) of UCLA >

T2IRay: Enabling Virtual Input with Precision

T2IRay introduces a novel input method that enables precise object pointing in virtual environments by extending conventional thumb-to-index gestures. This approach overcomes limitations of previous methods, such as interrupted input or reduced accuracy when the position or orientation of the hand changes.

The technology uses a local coordinate system defined by the relationship between the fingers, ensuring continuous input even as the hand moves. It accurately captures subtle thumb movements within this coordinate system and integrates natural head movements, allowing fluid, intuitive control over a wide pointing range.
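The paper describes T2IRay's actual mapping; the following is only a minimal sketch of the general idea of a finger-relative coordinate system, assuming a hand tracker that reports 3-D positions for the index-finger base and tip, the middle-finger base, and the thumb tip. The function names, the neutral point, the 30° gain, and the assignment of axes to yaw and pitch are all hypothetical choices for illustration.

```python
import math

Vec = tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(a: Vec) -> Vec:
    n = math.sqrt(_dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def thumb_in_index_frame(index_base: Vec, index_tip: Vec,
                         middle_base: Vec, thumb_tip: Vec) -> tuple[float, float]:
    """Express the thumb tip in a frame attached to the index finger (hypothetical).

    x runs along the index finger (base -> tip); y points toward the middle
    finger, perpendicular to x. Because the frame moves with the hand, the
    result is unchanged when the whole hand translates or rotates.
    """
    finger = _sub(index_tip, index_base)
    x = _unit(finger)
    z = _unit(_cross(x, _sub(middle_base, index_base)))
    y = _cross(z, x)  # unit length, since z and x are orthonormal
    rel = _sub(thumb_tip, index_base)
    length = math.sqrt(_dot(finger, finger))
    # Normalise by finger length so the mapping is roughly hand-size independent.
    return _dot(rel, x) / length, _dot(rel, y) / length


def pointing_ray(head_yaw: float, head_pitch: float, u: float, v: float,
                 neutral: tuple[float, float] = (0.5, 0.0),
                 gain: float = math.radians(30)) -> Vec:
    """Offset the head direction (radians) by the thumb's displacement from a
    neutral point and return a unit ray direction (y up, z forward)."""
    yaw = head_yaw + gain * (u - neutral[0])
    pitch = head_pitch + gain * (v - neutral[1])
    return (math.cos(pitch) * math.sin(yaw),
            math.sin(pitch),
            math.cos(pitch) * math.cos(yaw))
```

In this sketch the head supplies the coarse direction, sliding the thumb along the index finger (`u`) steers the ray left or right, and moving it across the finger (`v`) steers it up or down. Because `u` and `v` are measured relative to the finger, they stay stable while the hand itself moves, which is the property the paragraph above emphasizes.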

< Figure 1. T2IRay framework utilizing the delicate movements of the thumb and index fingers for AR/VR pointing >

Professor Sang Ho Yoon explained, “T2IRay can significantly enhance the user experience in AR/VR by enabling smooth, stable control even when the user’s hands are in motion.”

This study, led by first author Jina Kim, was supported by the Excellent New Researcher Support Project of the National Research Foundation of Korea under the Ministry of Science and ICT, as well as the University ICT Research Center (ITRC) Support Project of the Institute of Information and Communications Technology Planning and Evaluation (IITP).

▴ Paper title: T2IRay: Design of Thumb-to-Index Based Indirect Pointing for Continuous and Robust AR/VR Input

▴ Paper link: https://doi.org/10.1145/3706598.3713442

▴ T2IRay demo video: https://youtu.be/ElJlcJbkJPY

ChoreoCraft: Creativity Support through VR for Choreographers

In addition, Professor Yoon’s team developed ‘ChoreoCraft,’ a virtual reality tool designed to support choreographers by addressing the unique challenges they face, such as memorizing complex movements, overcoming creative blocks, and managing subjective feedback.

ChoreoCraft reduces reliance on memory by allowing choreographers to save and refine movements directly within a VR space, using a motion-capture avatar for real-time interaction. It also enhances creativity by suggesting movements that naturally fit with prior choreography and musical elements. Furthermore, the system provides quantitative feedback by analyzing kinematic factors like motion stability and engagement, helping choreographers make data-driven creative decisions.
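The release does not define ChoreoCraft's "motion stability" or "engagement" measures. As a hedged illustration of what kinematic feedback from motion capture can look like, the sketch below derives velocity, acceleration, and jerk for one tracked joint by finite differences and reports mean speed and mean jerk; jerk is a common smoothness indicator in motion analysis, but using it here as a stand-in for stability is an assumption, and all names are hypothetical.

```python
import math

Vec = tuple[float, float, float]


def finite_diff(seq: list[Vec], dt: float) -> list[Vec]:
    """Forward difference of a sampled 3-D trajectory (one fewer sample)."""
    return [tuple((b[i] - a[i]) / dt for i in range(3)) for a, b in zip(seq, seq[1:])]


def magnitude(v: Vec) -> float:
    return math.sqrt(sum(c * c for c in v))


def kinematic_summary(positions: list[Vec], fps: float) -> dict[str, float]:
    """Mean speed and mean jerk magnitude of one tracked joint (hypothetical metrics).

    Needs at least 4 frames (three differences to reach jerk). Lower mean
    jerk indicates smoother, steadier motion.
    """
    if len(positions) < 4:
        raise ValueError("need at least 4 frames")
    dt = 1.0 / fps
    vel = finite_diff(positions, dt)
    acc = finite_diff(vel, dt)
    jerk = finite_diff(acc, dt)
    return {
        "mean_speed": sum(map(magnitude, vel)) / len(vel),
        "mean_jerk": sum(map(magnitude, jerk)) / len(jerk),
    }
```

A joint moving at constant velocity yields zero mean jerk, while a trembling or abruptly changing movement yields a larger value, giving a choreographer a number to compare across takes.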

< Figure 2. ChoreoCraft's approaches to encourage creative process >

Professor Yoon noted, “ChoreoCraft is a tool designed to address the core challenges faced by choreographers, enhancing both creativity and efficiency. In user tests with professional choreographers, it received high marks for its ability to spark creative ideas and provide valuable quantitative feedback.”

This research was conducted in collaboration with doctoral candidate Kyungeun Jung and master’s candidate Hyunyoung Han, alongside the Electronics and Telecommunications Research Institute (ETRI) and One Million Co., Ltd. (CEO Hye-rang Kim), with support from the Cultural and Arts Immersive Service Development Project by the Ministry of Culture, Sports and Tourism.

▴ Paper title: ChoreoCraft: In-situ Crafting of Choreography in Virtual Reality through Creativity Support Tools

▴ Paper link: https://doi.org/10.1145/3706598.3714220

▴ ChoreoCraft demo video: https://youtu.be/Ms1fwiSBjjw

*CHI (Conference on Human Factors in Computing Systems): The premier international conference on human-computer interaction, organized by the ACM, was held this year from April 25 to May 1, 2025.

2025.05.13

KAIST develops 'MetaVRain', an AI chip that renders vivid, lifelike 3D images

KAIST (President Kwang Hyung Lee) has developed MetaVRain, a high-speed, low-power artificial intelligence (AI) semiconductor* that performs AI-based 3D rendering, producing images close to real life on mobile devices.

* AI semiconductor: A semiconductor that performs artificial intelligence processing functions such as recognition, reasoning, learning, and judgment, implemented with technology optimized for super-intelligence, ultra-low power, and ultra-high reliability

The research team's AI semiconductor replaces conventional GPU-driven, ray-tracing*-based 3D rendering with AI-based 3D rendering on the newly fabricated chip. Because this eliminates the need for a costly 3D video capture studio, the cost of producing 3D models can be greatly reduced, and memory usage can be cut by a factor of more than 180. In addition, 3D graphics editing and design, which previously required complex software such as Blender, is replaced with simple AI training, so the general public can easily apply and edit a desired style.

* Ray-tracing: A technique that produces images close to real life by tracing the paths of light rays as they change according to the light source and the shape and texture of objects

This research, with doctoral student Donghyeon Han as first author, was presented at the International Solid-State Circuits Conference (ISSCC), a gathering of semiconductor researchers from around the world, held in San Francisco, USA, from February 18th to 22nd.

(Paper Number 2.7, Paper Title: MetaVRain: A 133mW Real-time Hyper-realistic 3D NeRF Processor with 1D-2D Hybrid Neural Engines for Metaverse on Mobile Devices (Authors: Donghyeon Han, Junha Ryu, Sangyeob Kim, Sangjin Kim, and Hoi-Jun Yoo))

Professor Yoo's team identified inefficient operations that arise when 3D rendering is performed with AI, and developed a new type of semiconductor that reduces them by borrowing from human visual recognition. When people recognize an object, they start with a rough outline and gradually refine its shape; and if it is an object they saw moments before, they can immediately infer what it looks like now. Imitating this cognitive process, the new semiconductor first captures the rough shape of an object using low-resolution voxels, and it minimizes the computation needed for the current frame by reusing the results of previous rendering.
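The article gives no algorithmic detail, so the following is only a minimal software sketch of the two ideas it names, not MetaVRain's hardware dataflow: a coarse, low-resolution occupancy grid that lets a NeRF-style ray marcher skip density queries in empty space, and frame-to-frame reuse that re-renders only pixels whose rays changed. The sphere density, grid resolution, sample count, tolerance, and every function name are hypothetical.

```python
import math
from typing import Callable, Optional

Vec = tuple[float, float, float]
Ray = tuple[Vec, Vec]  # (origin, unit direction)
Density = Callable[[float, float, float], float]


def coarse_occupancy(density: Density, res: int, thresh: float = 1e-3) -> set[tuple[int, int, int]]:
    """Low-resolution pass: mark voxels of the unit cube whose centre has density."""
    occupied = set()
    for i in range(res):
        for j in range(res):
            for k in range(res):
                centre = ((i + 0.5) / res, (j + 0.5) / res, (k + 0.5) / res)
                if density(*centre) > thresh:
                    occupied.add((i, j, k))
    return occupied


def march_ray(ray: Ray, density: Density, occupied: set[tuple[int, int, int]],
              res: int, n_samples: int = 64) -> tuple[float, int]:
    """Fine pass: query the (expensive) density only inside occupied voxels.

    Returns (accumulated opacity, number of density queries).
    """
    origin, direction = ray
    step = math.sqrt(3.0) / n_samples  # spans the cube diagonal
    transmittance, queries = 1.0, 0
    for s in range(n_samples):
        t = (s + 0.5) * step
        p = tuple(o + t * d for o, d in zip(origin, direction))
        if not all(0.0 <= c < 1.0 for c in p):
            continue  # outside the scene bounds
        if tuple(int(c * res) for c in p) not in occupied:
            continue  # coarse grid says empty: skip the expensive query
        queries += 1
        transmittance *= math.exp(-density(*p) * step)
    return 1.0 - transmittance, queries


def render_frame(rays: list[Ray], render: Callable[[Ray], float],
                 prev: Optional[tuple[list[Ray], list[float]]] = None,
                 tol: float = 1e-6) -> tuple[list[float], int]:
    """Temporal reuse: copy last frame's pixel when its ray barely moved.

    Returns (pixel values, number of pixels actually re-rendered).
    """
    image, rendered = [], 0
    for idx, ray in enumerate(rays):
        if prev is not None:
            old_ray = prev[0][idx]
            if all(abs(a - b) <= tol for va, vb in zip(ray, old_ray) for a, b in zip(va, vb)):
                image.append(prev[1][idx])
                continue
        image.append(render(ray))
        rendered += 1
    return image, rendered
```

Sampling only voxel centres can miss thin structures, so practical systems build the coarse grid conservatively; the sketch keeps it simple to show where the savings come from.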

MetaVRain, developed by Professor Yoo's team, achieved world-leading performance through both a state-of-the-art CMOS chip and a hardware architecture that mimics the human visual recognition process. Optimized for AI-based 3D rendering, MetaVRain achieves rendering speeds of 100 FPS or more, 911 times faster than conventional GPUs. Its energy efficiency, measured as the energy consumed to process each frame, is also 26,400 times higher than that of a GPU, opening the possibility of AI-based real-time rendering in VR/AR headsets and mobile devices.
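The relative figures above can be turned into rough absolute numbers. This back-of-the-envelope sketch assumes that the 133 mW in the paper title, the 100 FPS rendering speed, and both ratios all refer to the same workload and operating point; the article does not name the GPU baseline, so the implied GPU figures are illustrative only.

```python
# Figures quoted in the article and in the ISSCC paper title.
CHIP_FPS = 100            # "100 FPS or more", treated as exactly 100 here
SPEEDUP_VS_GPU = 911
CHIP_POWER_W = 0.133      # 133 mW
EFFICIENCY_GAIN = 26_400  # energy-per-frame ratio, GPU / MetaVRain

# Assumption: all four figures describe the same workload and operating point.
gpu_fps = CHIP_FPS / SPEEDUP_VS_GPU                  # implied GPU frame rate
chip_mj_per_frame = CHIP_POWER_W / CHIP_FPS * 1000   # energy = power x time per frame, in mJ
gpu_j_per_frame = chip_mj_per_frame / 1000 * EFFICIENCY_GAIN

print(f"implied GPU speed:        {gpu_fps:.3f} FPS")          # 0.110 FPS
print(f"MetaVRain energy/frame:   {chip_mj_per_frame:.2f} mJ") # 1.33 mJ
print(f"implied GPU energy/frame: {gpu_j_per_frame:.1f} J")    # 35.1 J
```

Under these assumptions, the GPU baseline would take roughly nine seconds per frame, which is why the article frames MetaVRain as enabling real-time rendering on mobile and headset devices.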

To demonstrate MetaVRain in use, the research team also developed a smart 3D rendering application system and showed how it can change the style of a 3D model to match a user's preferred style. Because users only need to give the AI an image of the desired style and retrain it, they can easily restyle a 3D model without complicated software. Beyond the application system implemented by Professor Yoo's team, a variety of other applications are expected to be possible, such as creating a realistic 3D avatar modeled on a user's face, creating 3D models of various structures, and changing the weather in a scene to suit a film production environment.

With MetaVRain as a starting point, the research team expects AI to begin transforming the field of 3D graphics as well, and noted that combining AI with 3D graphics is a major technological innovation for realizing the metaverse.

Professor Hoi-Jun Yoo of the Department of Electrical and Electronic Engineering at KAIST, who led the research, said, “Current 3D graphics focus on depicting what an object looks like, not how people see it. The significance of this study is that it enables efficient 3D graphics by imitating the way people recognize and represent objects.” He also looked ahead, saying, “The realization of the metaverse will be achieved through innovation in artificial intelligence technology and innovation in artificial intelligence semiconductors, as shown in this study.”

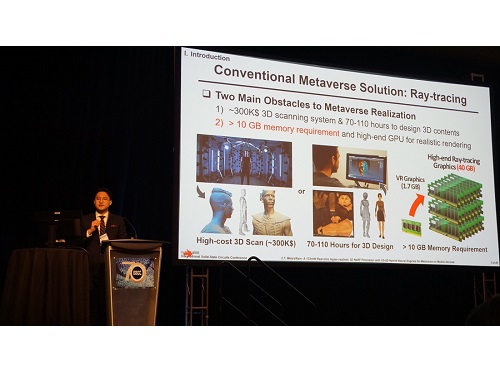

Figure 1. Description of the MetaVRain demo screen

Photo of Presentation at the International Solid-State Circuits Conference (ISSCC)

2023.03.13

Professor Sang Su Lee’s Team Wins Seven iF Design Awards 2022

Five apps developed by Professor Sang Su Lee of the Department of Industrial Design and his team in collaboration with NH Investment and Securities won iF Design Awards in the UI, UX, service design, product design, and communication categories. These apps are now offered as NH Investment and Securities mobile applications.

The iF Design Awards recognize top-quality creativity in product design, communication, packaging, service design and concepts, and architecture and interior design, as well as user experience (UX) and user interfaces for digital media (UI).

In the UI category, ‘Gretell’ is a mobile stock investment app designed by Lee and his team to support investors who struggle to learn about investing by archiving personalized information. Gretell provides investment information, including news and reports, which users can study, evaluate, and comment on. By presenting both quantitative and qualitative indicators, the app supports rational decision-making. Other users’ comments are also shared to reduce confirmation bias. Through this process, Gretell helps users who are impulsive or easily swayed by others’ opinions grow into independent investors.

‘Bright’ is another app created by Lee’s team. It helps people exercise their rights as shareholders. As interest in exercising shareholder rights grows, many investors who hold only a small number of shares are frustrated by their lack of influence. Bright provides a space for shareholders to share their opinions and brings them together so that individuals can act more proactively as shareholders. Its Integrated Power of Attorney System (IPAS) expands shareholders’ opportunities to exercise their rights and allows users to submit proposals to be raised at the general meeting. Bright aims to foster influential shareholders, responsible companies, and a healthy society.

For communications, ‘Rewind’ is a stock information service app that visualizes past stock charts through sentiment analysis. While existing services focus on numbers, Rewind takes a qualitative approach: it analyzes public sentiment toward each event by collecting opinions on social media, then visualizes that sentiment chronologically alongside the stock chart. Rewind lets users review stock market movements and record their thoughts, so they can draw their own insights into current market events and make wiser investment decisions. Its intuitive color-gradient design provides a pleasant, simplified information experience.
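The article does not describe Rewind's pipeline. As a minimal sketch, assuming each social-media post has already been scored by some sentiment model on a scale of -1 to +1, the aggregation step could average scores per day, pair them with closing prices, and map each day's mood to a color gradient. The red and green endpoints, the data shapes, and all function names are hypothetical.

```python
from collections import defaultdict
from datetime import date
from typing import Optional


def daily_sentiment(posts: list[tuple[date, float]]) -> dict[date, float]:
    """Average per-post sentiment scores (assumed in [-1, 1]) for each day."""
    sums: dict[date, float] = defaultdict(float)
    counts: dict[date, int] = defaultdict(int)
    for day, score in posts:
        sums[day] += score
        counts[day] += 1
    return {day: sums[day] / counts[day] for day in sorted(sums)}


def sentiment_colour(score: float) -> tuple[int, int, int]:
    """Linear red-to-green gradient: -1 -> red, +1 -> green (scores are clamped)."""
    t = (max(-1.0, min(1.0, score)) + 1.0) / 2.0
    red, green = (220, 60, 60), (60, 180, 90)
    return tuple(round(r + (g - r) * t) for r, g in zip(red, green))


def timeline(closes: dict[date, float], posts: list[tuple[date, float]]
             ) -> list[tuple[date, float, Optional[float]]]:
    """Pair each trading day's closing price with that day's mean sentiment.

    Days without any posts get None, so a chart can leave them uncolored.
    """
    mood = daily_sentiment(posts)
    return [(day, closes[day], mood.get(day)) for day in sorted(closes)]
```

Keeping sentiment as a separate, per-day series makes it easy to draw it as a colored band under the price line rather than mixing it into the price data.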

In the interfaces for digital media and service design categories, ‘Groo’ is a green bond investing app that helps users take part in green investment through bonds that fund environmental improvement projects. Going beyond bond trading, Groo guides users through the whole experience of green investing, from taking an interest in environmental issues to confirming the impact of their investment.

Next, ‘Modu’ is a story-based empathy expression training game for children with intellectual disabilities. Modu was developed to support emotion recognition and empathy behavior training in children with mild intellectual disabilities (MID) and borderline intellectual functioning (BIF).

Finally, ‘Blow-yancy’, a VR diving device for neutral buoyancy training, also made the winners’ list. The device simulates scuba diving training without entering the water, so beginner divers can get a feel for diving while remaining perfectly safe and without harming coral. The device is expected to help protect at-risk underwater ecosystems.
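The article does not say how Blow-yancy models buoyancy. As a hedged illustration of the skill it trains, the sketch below computes the basic Archimedes force balance that a neutral-buoyancy simulator could drive from a breathing signal. The constant seawater density, the split into a fixed body-and-gear volume plus lung volume, and the function names are assumptions, and the sketch ignores depth-dependent gas compression.

```python
G = 9.81                  # m/s^2
RHO_SEAWATER = 1025.0     # kg/m^3, a typical seawater density


def net_vertical_force(mass_kg: float, fixed_volume_m3: float, lung_volume_m3: float) -> float:
    """Archimedes balance for a diver: positive = rises, negative = sinks.

    fixed_volume_m3 is the volume of body and gear excluding lung air;
    inhaling raises lung_volume_m3 and therefore the displaced volume.
    """
    displaced = fixed_volume_m3 + lung_volume_m3
    return (RHO_SEAWATER * displaced - mass_kg) * G


def neutral_lung_volume(mass_kg: float, fixed_volume_m3: float) -> float:
    """Lung volume at which buoyancy exactly cancels weight."""
    return mass_kg / RHO_SEAWATER - fixed_volume_m3
```

For example, with a 90 kg diver and 0.085 m³ of fixed volume, neutral buoyancy falls at about 2.8 L of lung air, and each additional litre of breath adds roughly 10 N of lift; learning to hold breathing near that point is what neutral-buoyancy practice is about.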

2022.05.10

Digital Big Bang, Metaverse Technologies

The GSI Forum 2021 will explore the potential of new metaverse technologies that will change our daily lives

KAIST will host a live online international forum on Sept. 8 at 9 a.m. (KST) on the KAIST YouTube channel. The forum will explore global trends in metaverse technology innovations and applications and discuss how to build a new technology ecosystem.

Titled ‘Digital Big Bang, Metaverse Technology,’ the Global Strategy Institute-International Forum 2021 will be the fourth event of its kind, following the three international forums held in 2020. The forum will delve into the development trends of metaverse platforms and AR/VR technologies and gather experts to discuss how such technologies could transform multiple aspects of our future, including education.

In his opening remarks, President Kwang Hyung Lee will explain how new technologies are opening new horizons for our lives, saying, “In the education sector, digital technology will also create new opportunities to resolve the longstanding pedagogical shortfalls of one-way knowledge delivery systems. New digital technologies will help to unlock the creativity of our students. Education tailored to the students’ individual levels will not only help them accumulate knowledge but improve their ability to use it. Universities around the world are now at the same starting line. We should carve out our own distinct metaverse that is viable for human interactions and diverse technological experiences that promote students’ creativity and collaborative minds.”

In her welcoming remarks, Minister of Science and ICT Hyesook Lim will introduce how the Korean government is working to develop metaverse industries as a new engine of future growth. The government’s efforts include collaborations with the private sector, investments in R&D, the development of talent, and regulatory reforms. Minister Lim will also emphasize the importance of national-level discussions regarding the establishment of a metaverse ecosystem and long-term value creation.

The organizers have invited global experts to share their knowledge and insights.

Kidong Bae, who is in charge of the KT Enterprise Project and the ‘Metaverse One Team’, will talk about current trends in the metaverse market and their implications, as well as KT’s XR technology references. He will also introduce strategies to establish and utilize a metaverse ecosystem and highlight KT’s new technologies as a global leader in 5G networks.

Jinha Lee, co-founder and CPO of the American AR solution company Spatial, will showcase a remote collaboration office that utilizes AR technology as a potential solution for collaborative activities in the post-COVID-19 era, where remote working is the ‘new normal.’ Furthermore, Lee will discuss how future workplaces that are not limited by space or distance will affect our values and creativity.

Professor Frank Steinicke from the University of Hamburg will present the ideal form of next-generation immersive technology that combines intelligent virtual agents, mixed reality, and IoT, and discuss his predictions for how the future of metaverse technology will be affected.

Marco Tempest, a creative technologist at NASA and a Director’s Fellow at the MIT Media Lab, will also be joining the forum as a plenary speaker. Tempest will discuss the potential of immersive technology in media, marketing, and entertainment, and will propose a future direction for immersive technology to enable the sharing of experiences, emotions, and knowledge.

Other speakers include Beomjoo Kim from Unity Technologies Korea, Professor Woontaek Woo from the Graduate School of Culture Technology at KAIST, Vice President of Global Sales at Labster Joseph Ferraro, and CEO of 3DBear Jussi Kajala. They will make presentations on metaverse technology applications for future education.

The keynote session will also have an online panel consisting of 50 domestic and overseas metaverse specialists, scientists, and teachers. The forum will hold a Q&A and discussion session where the panel members can ask questions to the keynote speakers regarding the prospects of metaverse and immersive technologies for education.

GSI Director Hoon Sohn stated, “KAIST will seize new opportunities that will arise in a future centered around metaverse technology and will be at the forefront to take advantage of the growing demand for innovative science and technology in non-contact societies. KAIST will also play a pivotal role in facilitating global cooperation, which will be vital to establish a metaverse ecosystem.”

2021.09.07


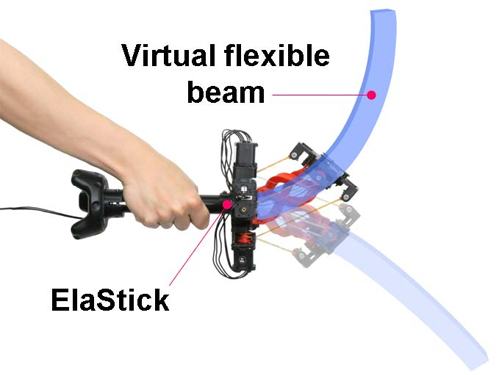

Feel the Force with ElaStick

ElaStick, a handheld variable stiffness display, renders the dynamic haptic response of a flexible object

Haptic controllers play an important role in providing rich and immersive virtual reality experiences. Professor Andrea Bianchi’s team in the Department of Industrial Design recreated the haptic response of flexible objects of different materials and shapes by changing the stiffness of a custom controller, ElaStick.

ElaStick is a portable, handheld force-feedback controller that can render the illusion of how flexible, deformable objects feel when held in the hand. This VR haptic controller can change its stiffness in two directions independently and continuously. Because haptic feedback enhances the VR experience, researchers have proposed numerous approaches for rendering the physical properties of virtual objects, such as weight, the movement of mass, impacts, and damped oscillations.

The research team designed a new mechanism based on a quaternion joint and four variable-stiffness tendons. The quaternion joint is a two-degree-of-freedom (2-DoF) bending joint that lets ElaStick bend and oscillate in any direction using pairs of tendons with varying stiffness. Each tendon around the joint is made of elastic rubber bands and inelastic fishing line joined in series, and it can vary its stiffness by changing the proportion of the two materials. Thanks to this structure, each pair of tendons can behave independently, allowing the anisotropic characteristics of the entire device to be controlled.
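To make the tendon idea concrete, here is a minimal sketch that treats one tendon as a rubber segment and a fishing-line segment acting as two springs in series, so their compliances (1/k) add and the much stiffer line contributes almost nothing. It assumes ElaStick changes stiffness by altering how much of a fixed tendon length is rubber; the article only says the proportion of the two materials changes. `TendonMaterials`, `tendon_stiffness`, and all material values are illustrative placeholders, not specifications from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TendonMaterials:
    """Illustrative material parameters (assumed values, not ElaStick's specs)."""
    rubber_modulus: float  # Young's modulus of the rubber band, Pa
    rubber_area: float     # cross-sectional area of the rubber band, m^2
    line_modulus: float    # Young's modulus of the fishing line, Pa
    line_area: float       # cross-sectional area of the fishing line, m^2


def segment_stiffness(modulus: float, area: float, length: float) -> float:
    """Axial stiffness of a uniform elastic segment: k = E * A / L (N/m)."""
    return modulus * area / length


def tendon_stiffness(m: TendonMaterials, total_length: float, rubber_fraction: float) -> float:
    """Stiffness of a tendon whose rubber and line segments act as springs in series.

    rubber_fraction is the share of the tendon's length occupied by rubber.
    """
    if not 0.0 < rubber_fraction <= 1.0:
        raise ValueError("rubber_fraction must be in (0, 1]")
    rubber_len = total_length * rubber_fraction
    line_len = total_length - rubber_len
    # For springs in series the compliances (1/k) add up.
    compliance = 1.0 / segment_stiffness(m.rubber_modulus, m.rubber_area, rubber_len)
    if line_len > 0.0:
        compliance += 1.0 / segment_stiffness(m.line_modulus, m.line_area, line_len)
    return 1.0 / compliance


if __name__ == "__main__":
    # Rough order-of-magnitude values: soft rubber ~2 MPa, nylon line ~3 GPa.
    mats = TendonMaterials(rubber_modulus=2e6, rubber_area=1.5e-6,
                           line_modulus=3e9, line_area=2e-7)
    for frac in (1.0, 0.8, 0.5, 0.2):
        k = tendon_stiffness(mats, 0.10, frac)
        print(f"rubber fraction {frac:.1f}: k = {k:.1f} N/m")
```

Under these assumed values, shrinking the rubber share of a 10 cm tendon from 80% to 20% raises its stiffness roughly fourfold, because the nearly inextensible line adds very little compliance.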

“The main challenge was to implement the mechanism to control the stiffness while maintaining independence between deformations in two perpendicular directions,” said Professor Bianchi.

The research team measured the relative threshold of human perception of the stiffness of a handheld object. The results showed that the just-noticeable difference (JND) for stiffness is at most about 30% of the initial value. The team also surveyed participants on perceived realism, immersion, and enjoyment after they played with various flexible objects in VR, and found that appropriate haptic responses significantly enhance the quality of the VR experience.
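The JND result can be turned into a simple design rule for choosing stiffness levels. Below is a minimal sketch, assuming the reported figure of about 30% is used as a conservative relative threshold and that it holds at every stiffness level (a Weber-law assumption the article does not make). `exceeds_jnd` and `distinguishable_levels` are hypothetical helpers, not part of the published work.

```python
from typing import List

# "At most about 30%" from the study, used here as a conservative threshold.
JND_FRACTION = 0.30


def exceeds_jnd(k_ref: float, k_new: float, jnd: float = JND_FRACTION) -> bool:
    """True if k_new differs from k_ref by at least the relative JND."""
    if k_ref <= 0:
        raise ValueError("reference stiffness must be positive")
    return abs(k_new - k_ref) / k_ref >= jnd


def distinguishable_levels(k_min: float, k_max: float,
                           jnd: float = JND_FRACTION) -> List[float]:
    """Geometric ladder of stiffness levels in [k_min, k_max], each one JND above
    the previous, assuming the JND is a constant fraction of the current stiffness."""
    if k_min <= 0 or jnd <= 0:
        raise ValueError("k_min and jnd must be positive")
    levels = [k_min]
    while levels[-1] * (1.0 + jnd) <= k_max:
        levels.append(levels[-1] * (1.0 + jnd))
    return levels


if __name__ == "__main__":
    print(exceeds_jnd(100.0, 120.0))  # a 20% change stays below the threshold
    print([round(k, 1) for k in distinguishable_levels(30.0, 150.0)])
```

For an illustrative range of 30 to 150 N/m, the ladder yields seven levels that, under these assumptions, users should be able to tell apart from their neighbors.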

“It is meaningful that the haptic feedback of a flexible object was mechanically reproduced and its effectiveness in VR was proven. ElaStick has succeeded in implementing a novel mechanism to recreate the dynamic response of flexible objects that mimic real ones, suggesting a new category of haptic feedback that can be provided in VR,” explained Professor Bianchi.

The team plans to extend ElaStick’s applications beyond game controllers to driving simulations, medical training, and many other digital contexts. This research, led by MS candidate Neung Ryu, won the Best Paper Award at ACM UIST 2020 (the ACM Symposium on User Interface Software and Technology) last month.

Profile: Professor Andrea Bianchi

Makinteract.kaist.ac.kr

Department of Industrial Design

KAIST

2020.11.23


A Deep-Learned E-Skin Decodes Complex Human Motion

A deep-learning-powered electronic skin sensor built around a single strain sensor can capture human motion from a distance. Placed on the wrist, the sensor decodes complex five-finger motions in real time, and a virtual 3D hand mirrors the original motions. The deep neural network, boosted by rapid situation learning (RSL), ensures stable operation regardless of where the sensor is placed on the skin.

Conventional approaches require dense sensor networks that cover the entire curvilinear surface of the target area. Unlike conventional wafer-based fabrication, this laser fabrication approach provides a new sensing paradigm for motion tracking.

The research team, led by Professor Sungho Jo from the School of Computing, collaborated with Professor Seunghwan Ko from Seoul National University to design this new measuring system that extracts signals corresponding to multiple finger motions by generating cracks in metal nanoparticle films using laser technology. The sensor patch was then attached to a user’s wrist to detect the movement of the fingers.

The research began with the idea that monitoring a single area would identify movements more efficiently than affixing sensors to every joint and muscle. For this targeting strategy to work, the system must accurately capture the signals from different body parts at the point where they converge, and then decouple the information entangled in the converged signals. To maximize usability and mobility, the research team used a single-channel sensor to generate the signals corresponding to complex hand motions.

The rapid situation learning (RSL) system collects data from arbitrary locations on the wrist and automatically trains the model, demonstrated in real time with a virtual 3D hand that mirrors the original motions. To enhance the sensor’s sensitivity, the researchers used laser-induced nanoscale cracking.

This sensory system can track the motion of the entire body with a small sensor network and enables indirect, remote measurement of human motion, making it applicable to wearable VR/AR systems.

The research team said they focused on two tasks while developing the sensor. First, they encoded the sensor signal patterns into a latent space that captures the sensor’s temporal behavior; then, they mapped the latent vectors to finger-motion metric spaces.
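The two-stage pipeline (signal window to latent vector, then latent vector to finger motion) can be outlined as follows. This is a toy stand-in, not the team’s deep network: the hand-crafted `encode` features and the linear `decode` map replace learned models, and the weights passed in are hypothetical placeholders that would in practice be learned from data.

```python
from typing import Dict, List, Sequence

FINGERS = ("thumb", "index", "middle", "ring", "little")


def encode(window: Sequence[float]) -> List[float]:
    """Toy encoder: compress a window of the single-channel strain signal into a
    3-D latent vector (level, variability, trend) describing its temporal behavior."""
    n = len(window)
    if n < 2:
        raise ValueError("window must contain at least two samples")
    mean = sum(window) / n
    std = (sum((x - mean) ** 2 for x in window) / n) ** 0.5
    slope = (window[-1] - window[0]) / (n - 1)
    return [mean, std, slope]


def decode(latent: Sequence[float],
           weights: Sequence[Sequence[float]],
           bias: Sequence[float]) -> Dict[str, float]:
    """Toy decoder: linear map from the latent vector to one value per finger."""
    if len(weights) != len(FINGERS) or len(bias) != len(FINGERS):
        raise ValueError("need one weight row and one bias per finger")
    return {
        finger: b + sum(w * z for w, z in zip(row, latent))
        for finger, row, b in zip(FINGERS, weights, bias)
    }


def track(signal: Sequence[float], window_size: int,
          weights: Sequence[Sequence[float]],
          bias: Sequence[float]) -> List[Dict[str, float]]:
    """Slide a window over the signal and decode one finger-pose estimate per step."""
    return [
        decode(encode(signal[i - window_size:i]), weights, bias)
        for i in range(window_size, len(signal) + 1)
    ]
```

Separating the two stages mirrors the structure the team describes: the encoder can summarize the signal independently of the finger model, and only the mapping to finger-motion space depends on the output representation.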

Professor Jo said, “Our system is expandable to other body parts. We have already confirmed that the sensor can also extract gait motions from the pelvis. This technology is expected to provide a turning point in health monitoring, motion tracking, and soft robotics.”

This study was featured in Nature Communications.

Publication:

Kim, K. K., et al. (2020). A deep-learned skin sensor decoding the epicentral human motions. Nature Communications, 11, 2149. https://doi.org/10.1038/s41467-020-16040-y

Link to download the full-text paper:

https://www.nature.com/articles/s41467-020-16040-y.pdf

Profile: Professor Sungho Jo

shjo@kaist.ac.kr

http://nmail.kaist.ac.kr

Neuro-Machine Augmented Intelligence Lab

School of Computing

College of Engineering

KAIST

2020.06.10


Adding Smart to Science Museum

KAIST and the National Science Museum (NSM) established an Exhibition Research Center for Smart Science to launch exhibitions that integrate emerging technologies of the Fourth Industrial Revolution, including augmented reality (AR), virtual reality (VR), the Internet of Things (IoT), and artificial intelligence (AI).

There has been strong demand for new technologies that deliver better, user-oriented exhibition services. The NSM has a persistent shortage of professional guides, and visitors have repeatedly complained that its current mobile exhibition app is ineffective.

To tackle these problems, the new center was founded with the participation of 11 institutes and universities. Sponsored by the National Research Foundation, it will oversee 15 projects in three areas: exhibition-based technology, exhibition operation technology, and exhibition content.

The group first aims to provide a location-based exhibition guide service that can deliver various technology services, such as AR/VR, to visitors. KAILOS, an indoor positioning system developed by KAIST, will be applied to this service. The group will also launch a mobile application that provides audio-based exhibition guides.
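At its core, a location-based guide maps a visitor’s estimated indoor position to a nearby exhibit. The sketch below assumes the positioning system (KAILOS in the article) reports 2-D floor-plan coordinates in metres; the `Exhibit` record, the 3 m radius, and the audio-guide IDs are hypothetical, and KAILOS’s actual interface is not described in the source.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Exhibit:
    name: str
    x: float          # floor-plan position in metres (hypothetical coordinate frame)
    y: float
    audio_guide: str  # hypothetical ID of the exhibit's audio guide


def nearest_exhibit(x: float, y: float, exhibits: Sequence[Exhibit],
                    max_distance: float = 3.0) -> Optional[Exhibit]:
    """Return the closest exhibit within max_distance of the visitor, or None."""
    best: Optional[Exhibit] = None
    best_distance = max_distance
    for exhibit in exhibits:
        d = math.hypot(exhibit.x - x, exhibit.y - y)
        if d <= best_distance:
            best, best_distance = exhibit, d
    return best
```

A guide app would call `nearest_exhibit` each time a new position estimate arrives and start the matching audio guide or AR content when the result changes; returning `None` outside the radius keeps the app silent in corridors between exhibits.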

To further cater to visitors’ needs, the group plans to adopt a living lab, a user-centered ecosystem concept, to create a pleasant environment for visitors.

“Every year, hundreds of thousands of young people visit the National Science Museum. I believe the exhibition guide system has to be innovative, using cutting-edge IT, to help them cherish their dreams and inspirations through science,” emphasized Jeong Heoi Bae, President of the Exhibition and Research Bureau of the NSM.

Professor Dong Soo Han of the School of Computing, who heads the group’s research, said, “We will systematically develop exhibition technology and content for the science museum to create a platform for smart science museums. This will be the first exhibition guide system to integrate AR/VR with an indoor location system.”

The center will first apply the new system to the NSM and then expand it to 167 science museums and other regional museums.

2018.09.04


Professor Jinah Park Received the Prime Minister's Award

Professor Jinah Park of the School of Computing received the Prime Minister’s Citation Ribbon on April 21 at a ceremony celebrating the Day of Science and ICT. The awardee was selected by the Ministry of Science, ICT and Future Planning and Korea Communications Commission.

Professor Park was recognized for her convergence R&D on a VR simulator for dental treatment with haptic feedback, as well as her research on 3D interaction behavior in VR environments. Her major academic contributions are in medical imaging, where she developed a computational technique for analyzing cardiac motion from tagging data.

Professor Park said she was very pleased to see her more than twenty years of research on integrating computing into medicine finally bear fruit. She also thanked her colleagues and students in the Computer Graphics and CGV Research Lab for working together to make this achievement possible.

2017.04.26


ICISTS Hosts the International Interdisciplinary Conference

A KAIST student organization, the International Conference for the Integration of Science, Technology and Society (ICISTS), will host ICISTS 2016 at the Hotel ICC in Daejeon from 3 to 7 August, with around 300 Korean and international students participating.

ICISTS was first established in 2005 to provide an annual platform where delegates and speakers from any academic background can discuss the integration and convergence of science, technology, and society.

This year’s conference, themed “Beyond the Center,” focuses on how technological advances can change centralized organizations in areas such as financial technology, healthcare, and global governance.

The keynote speakers include Dennis Hong, developer of the first automobile for the blind and a professor of Mechanical and Aerospace Engineering at UCLA; Dor Konforty, founder and CEO of the social networking platform Synereo; and Marzena Rostek, a professor of Economics at the University of Wisconsin-Madison.

Other notable speakers include: Gi-Jung Jung, Head of the National Fusion Research Institute; Janos Barberis, Founder of FinTech HK; Tae-Hoon Kim, CEO and Founder of Rainist; Gulrez Shah Azhar, Assistant Policy Analyst at RAND Corporation; Thomas Concannon, Senior Policy Researcher at RAND Corporation; Leah Vriesman, Professor at the School of Public Health, UCLA; and Bjorn Cumps, Professor of Management Practice at Vlerick Business School in Belgium.

The conference consists of keynote speeches, panel discussions, open talks, experience sessions, team project presentations, a culture night, and a beer party, where all participants are encouraged to interact with speakers and delegates and to discuss topics of interest.

Han-Kyul Jung, ICISTS’s Head of Public Relations, said, “This conference will not only allow the delegates to understand the trends of future technology, but also be an opportunity for KAIST students to form valuable contacts with students from around the world.”

For more information, please go to www.icists.org.

2016.07.20
