KAIST debuts “DreamWaQer” - a quadrupedal robot that can walk in the dark

- The team led by Professor Hyun Myung of the School of Electrical Engineering developed “DreamWaQ”, a deep reinforcement learning-based control technology that lets walking robots move through atypical environments without visual or tactile information

- Applying the “DreamWaQ” technology could enable mass production of various types of “DreamWaQers”

- Expected to be used to explore atypical environments under unusual conditions, such as fire disasters.

A team of Korean engineering researchers has developed a quadrupedal robot technology that can climb up and down stairs and move without falling over on uneven ground, such as tree roots, without the help of visual or tactile sensors. This makes it usable even in disaster situations where visual confirmation is impeded by darkness or thick smoke from flames.

KAIST (President Kwang Hyung Lee) announced on the 29th of March that Professor Hyun Myung's research team at the Urban Robotics Lab in the School of Electrical Engineering developed a walking robot control technology that enables robust 'blind locomotion' in various atypical environments.

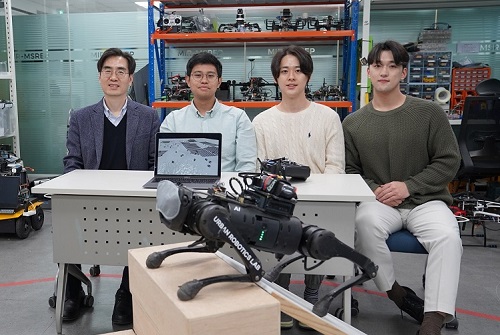

< (From left) Prof. Hyun Myung, Doctoral Candidates I Made Aswin Nahrendra, Byeongho Yu, and Minho Oh. In the foreground is the DreamWaQer, a quadrupedal robot equipped with DreamWaQ technology. >

The KAIST research team named the technology "DreamWaQ" because it lets walking robots move about even in the dark, much as a person who has just gotten out of bed can find the way to the bathroom in the dark without visual help. Installing this technology on any legged robot will make it possible to create various types of "DreamWaQers".

Existing walking robot controllers are based on kinematic and/or dynamic models, an approach known as model-based control. In atypical environments such as open, uneven fields, these controllers must obtain terrain features quickly to keep the robot stable as it walks, so they have been shown to depend heavily on the ability to perceive the surrounding environment.

In contrast, the controller developed by Professor Hyun Myung's research team is based on deep reinforcement learning (RL): trained on data from a variety of simulated environments, it can quickly compute appropriate control commands for each of the walking robot's motors. Existing controllers trained in simulation required separate re-tuning before they would work on a real robot; because the team's controller needs no such additional tuning, it is expected to be easily applicable to a variety of walking robots.

DreamWaQ, the controller developed by the research team, consists of two main parts: a context-aided estimator network that estimates ground and robot information, and a policy network that computes control commands. From inertial and joint measurements, the estimator infers the ground information implicitly and the robot's state explicitly. These estimates are fed into the policy network, which uses them to generate optimal control commands. Both networks are trained together in simulation.
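The estimator-plus-policy split described above can be sketched as follows. This is a minimal, untrained NumPy illustration, not the team's implementation: it assumes plain multilayer perceptrons, a five-step observation history, a 3-D body velocity as the explicit estimate, and a 16-D latent vector as the implicit one. All dimensions and names (`act`, `mlp_forward`, `init_mlp`) are hypothetical.

```python
import numpy as np

# All sizes below are hypothetical, chosen only for illustration.
OBS_DIM = 45       # one step of proprioception (IMU, joint angles and velocities, last action)
HISTORY_LEN = 5    # past observation steps seen by the estimator
VEL_DIM = 3        # explicit estimate: body linear velocity
LATENT_DIM = 16    # implicit estimate: latent terrain/context vector
ACTION_DIM = 12    # one command per joint motor (3 joints x 4 legs)

rng = np.random.default_rng(0)

def init_mlp(sizes):
    """Randomly initialised weights and zero biases for a fully connected network."""
    return [(rng.standard_normal((m, n)) * np.sqrt(2.0 / m), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp_forward(params, x):
    """ELU activations on hidden layers, linear output layer."""
    for i, (w, b) in enumerate(params):
        x = x @ w + b
        if i < len(params) - 1:
            x = np.where(x > 0, x, np.expm1(np.minimum(x, 0.0)))  # ELU
    return x

# Estimator: flattened observation history -> [explicit velocity | implicit latent]
estimator = init_mlp([HISTORY_LEN * OBS_DIM, 128, 64, VEL_DIM + LATENT_DIM])
# Policy: current observation + estimator outputs -> one command per motor
policy = init_mlp([OBS_DIM + VEL_DIM + LATENT_DIM, 256, 128, ACTION_DIM])

def act(obs_history):
    """obs_history has shape (HISTORY_LEN, OBS_DIM), newest observation last."""
    context = mlp_forward(estimator, obs_history.reshape(-1))
    velocity, latent = context[:VEL_DIM], context[VEL_DIM:]
    policy_input = np.concatenate([obs_history[-1], velocity, latent])
    return mlp_forward(policy, policy_input), velocity, latent

history = rng.standard_normal((HISTORY_LEN, OBS_DIM))
action, velocity, latent = act(history)
print(action.shape, velocity.shape, latent.shape)  # (12,) (3,) (16,)
```

Feeding the estimator's outputs alongside the raw observation keeps the policy's input small while still giving it a summary of recent history.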

The context-aided estimator network is trained through supervised learning, whereas the policy network is trained with an actor-critic architecture, a deep RL method. The actor network can infer the surrounding terrain only implicitly. In simulation, however, the terrain is known exactly, so the critic (the value network) is given this exact terrain information and uses it to evaluate the actor's policy.
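The asymmetric information flow above, where the critic sees exact terrain data that the actor never receives, can be sketched as below. This is a hedged illustration only: a linear critic stands in for the value network, the 187-value terrain vector is an arbitrary assumption, and the policy-gradient update itself is omitted. The supervised estimator loss is shown as a mean squared error against a simulator-provided velocity, which is also an assumption.

```python
import numpy as np

rng = np.random.default_rng(1)

OBS_DIM = 45        # proprioceptive observation (what the real robot can sense)
TERRAIN_DIM = 187   # hypothetical privileged input: terrain height samples (simulation only)
GAMMA = 0.99        # discount factor

# A linear critic stands in for the value network; it receives privileged terrain data.
critic_w = rng.standard_normal(OBS_DIM + TERRAIN_DIM) * 0.01

def value(obs, terrain):
    """Critic input = proprioception + exact terrain information."""
    return float(np.concatenate([obs, terrain]) @ critic_w)

def td_advantage(obs, terrain, reward, next_obs, next_terrain, done):
    """One-step TD advantage used to score the actor's action; the actor never sees `terrain`."""
    bootstrap = 0.0 if done else GAMMA * value(next_obs, next_terrain)
    return reward + bootstrap - value(obs, terrain)

def estimator_loss(predicted_velocity, true_velocity):
    """Supervised mean squared error; the simulator supplies the true body velocity."""
    return float(np.mean((predicted_velocity - true_velocity) ** 2))

obs, next_obs = rng.standard_normal(OBS_DIM), rng.standard_normal(OBS_DIM)
terrain, next_terrain = rng.standard_normal(TERRAIN_DIM), rng.standard_normal(TERRAIN_DIM)
adv = td_advantage(obs, terrain, reward=1.0, next_obs=next_obs,
                   next_terrain=next_terrain, done=False)
loss = estimator_loss(np.array([0.5, 0.0, 0.0]), np.array([0.4, 0.1, 0.0]))
```

Because the critic is discarded after training, giving it privileged inputs costs nothing at deployment time.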

The whole training process takes only about an hour on a GPU-equipped PC, and the real robot carries only the trained actor (policy) network together with the estimator; the critic is needed only during training. Without looking at its surroundings, the robot uses only its internal inertial measurement unit (IMU) and joint-angle measurements to "imagine" which of the many environments learned in simulation its current terrain resembles. If it suddenly encounters a change in height, such as a staircase, it cannot know until its foot touches the step, but it infers the terrain information the moment its foot makes contact. Control commands suited to the estimated terrain are then sent to each motor, allowing the robot to adapt its gait rapidly.
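At each control step on the robot, the estimator needs a short history of IMU and joint readings. A minimal sketch of such a buffer, assuming a fixed-length window padded with the first reading at start-up; the window length, the 18-value observation layout, and `read_proprioception` are hypothetical.

```python
from collections import deque

import numpy as np

class ObservationHistory:
    """Fixed-length window of the most recent proprioceptive observations."""

    def __init__(self, history_len, obs_dim):
        self.history_len = history_len
        self.obs_dim = obs_dim
        self.buffer = deque(maxlen=history_len)  # oldest entry drops off automatically

    def push(self, obs):
        obs = np.asarray(obs, dtype=np.float64)
        if obs.shape != (self.obs_dim,):
            raise ValueError(f"expected shape ({self.obs_dim},), got {obs.shape}")
        if not self.buffer:
            # First step: pad the window by repeating the initial observation.
            self.buffer.extend([obs] * self.history_len)
        else:
            self.buffer.append(obs)

    def as_array(self):
        """Shape (history_len, obs_dim), newest observation in the last row."""
        return np.stack(self.buffer)

def read_proprioception(t):
    """Hypothetical sensor read: IMU angular velocity (3), gravity vector (3), 12 joint angles."""
    return np.full(18, float(t))

hist = ObservationHistory(history_len=5, obs_dim=18)
for t in range(3):
    hist.push(read_proprioception(t))
window = hist.as_array()
```

Each control step pushes the newest reading and hands `as_array()` to the estimator; the `deque` with `maxlen` discards stale readings without extra bookkeeping.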

The DreamWaQer robot walked not only in the laboratory but also outdoors around the campus, over many curbs and speed bumps and across a field full of tree roots and gravel, and it climbed stairs whose step height was two-thirds of its body height. The research team also confirmed that, regardless of the environment, it walked stably at speeds ranging from a slow 0.3 m/s to a fairly fast 1.0 m/s.

Ph.D. candidate I Made Aswin Nahrendra is the first author of the study, with his colleague Byeongho Yu as co-author. The paper has been accepted for presentation at the IEEE International Conference on Robotics and Automation (ICRA), to be held in London at the end of May. (Paper title: DreamWaQ: Learning Robust Quadrupedal Locomotion With Implicit Terrain Imagination via Deep Reinforcement Learning)

Videos of the DreamWaQer robot running DreamWaQ can be found at the links below.

Main Introduction: https://youtu.be/JC1_bnTxPiQ

Experiment Sketches: https://youtu.be/mhUUZVbeDA0

This research was supported by the Robot Industry Core Technology Development Program of the Ministry of Trade, Industry and Energy (MOTIE). (Task title: Development of Mobile Intelligence SW for Autonomous Navigation of Legged Robots in Dynamic and Atypical Environments for Real Application)

< Figure 1. Overview of DreamWaQ, the controller developed by the research team. It consists of an estimator network that jointly learns implicit and explicit estimates, a policy network that acts as the controller, and a value network that guides the policy during training. On the real robot, only the estimator and policy networks are used, and both run in under 1 ms on the robot's onboard computer. >

< Figure 2. Because the estimator implicitly infers ground information as soon as the foot touches the surface, the robot can adapt quickly to rapidly changing ground conditions. >

< Figure 3. Results showing that even a small walking robot can climb steps with height differences of about 20 cm. >

2023.05.18

KAIST’s Robo-Dog “RaiBo” runs through the sandy beach

KAIST (President Kwang Hyung Lee) announced on the 25th that a research team led by Professor Jemin Hwangbo of the Department of Mechanical Engineering has developed a quadrupedal robot control technology that walks robustly and agilely even on deformable terrain such as a sandy beach.

< Photo. RAI Lab Team with Professor Hwangbo in the middle of the back row. >

Professor Hwangbo's research team developed a technology that models the forces a walking robot experiences on ground made of granular materials such as sand, and simulates them for a quadrupedal robot. The team also designed an artificial neural network structure suited to the real-time decisions needed to adapt to various types of ground while walking, without prior information about the terrain, and applied it to reinforcement learning. The trained neural network controller proved robust on changing terrain: it moved at high speed even on a sandy beach, and walked and turned on soft ground such as an air mattress without losing balance. It is therefore expected to expand the range of applications for quadrupedal walking robots.

This research, with Ph.D. student Suyoung Choi of the KAIST Department of Mechanical Engineering as first author, was published in Science Robotics in January. (Paper title: Learning quadrupedal locomotion on deformable terrain)

Reinforcement learning is an AI training method in which a machine collects data on the outcomes of various actions in arbitrary situations and uses that data to learn to perform a task. Because reinforcement learning requires so much data, it is common to collect it in simulations that approximate real-world physical phenomena.
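To make the "try actions, collect outcomes, learn from them" loop concrete, here is a generic tabular Q-learning sketch on a toy six-cell corridor. It is only a textbook illustration of reinforcement learning, not the team's method: the environment, reward, and hyperparameters are made up, and a locomotion controller like RaiBo's uses a neural network trained in a physics simulation rather than a lookup table.

```python
import numpy as np

N_STATES = 6          # corridor cells 0..5; entering cell 5 ends the episode with reward 1
ACTIONS = (-1, +1)    # move left or right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2   # learning rate, discount, exploration rate

rng = np.random.default_rng(0)
q = np.zeros((N_STATES, len(ACTIONS)))   # estimated return for every (state, action) pair

def step(state, a):
    """Toy environment: move one cell, clipped to the corridor ends."""
    next_state = min(max(state + ACTIONS[a], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def choose_action(state):
    """Epsilon-greedy, breaking ties between equally valued actions at random."""
    if rng.random() < EPSILON:
        return int(rng.integers(len(ACTIONS)))
    best = np.flatnonzero(q[state] == q[state].max())
    return int(rng.choice(best))

for episode in range(200):
    state, done = 0, False
    while not done:
        a = choose_action(state)
        next_state, reward, done = step(state, a)          # collect one experience sample
        target = reward if done else reward + GAMMA * q[next_state].max()
        q[state, a] += ALPHA * (target - q[state, a])      # learn from that sample
        state = next_state

greedy_policy = [ACTIONS[int(np.argmax(q[s]))] for s in range(N_STATES - 1)]
print(greedy_policy)
```

After training, the greedy policy should point right (+1) toward the rewarding cell; the learned values stay between 0 and 1 because every target is bounded by the true discounted return.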

In the field of walking robots in particular, learning-based controllers trained on data collected in simulation have been deployed in real environments and have successfully controlled walking on various terrains.

However, a learning-based controller's performance drops sharply whenever the real environment differs from the simulated environment it learned in, so it is important to reproduce an environment similar to the real one at the data-collection stage. To create a learning-based controller that keeps its balance on deforming terrain, therefore, the simulator must provide a similar contact experience.
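One common way to narrow the gap between simulation and reality is to randomize the simulated ground for every training episode, in the spirit of the "randomized granular media simulations" mentioned in Figure 1. The sketch below assumes three ground parameters with made-up ranges; the parameters the team actually randomized are not stated in this article.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class GroundParams:
    """Hypothetical ground properties drawn once per simulated episode."""
    stiffness: float          # resistance to penetration per unit depth
    added_mass_coeff: float   # strength of the additional mass effect
    friction_coeff: float     # Coulomb friction coefficient

# Hypothetical ranges; wide ranges expose the policy to many kinds of ground.
RANGES = {
    "stiffness": (1.0e3, 1.0e5),
    "added_mass_coeff": (0.0, 100.0),
    "friction_coeff": (0.3, 1.0),
}

def sample_ground(rng: random.Random) -> GroundParams:
    """Draw each parameter uniformly from its range."""
    return GroundParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})

rng = random.Random(42)
episode_grounds = [sample_ground(rng) for _ in range(1000)]
```

Seeding the generator keeps training runs reproducible; a log-uniform draw is a reasonable alternative for parameters that span orders of magnitude, such as stiffness.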

Building on a ground reaction force model from previous studies that accounts for the additional mass effect of granular media, the research team defined a contact model that predicts the force generated at contact from the motion dynamics of the walking robot.

By computing the force generated at each of one or more contacts at every time step, the team simulated the deforming terrain efficiently.
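The exact force law is not given in this article. A minimal sketch, assuming a point contact (as Figure 2 describes) whose vertical force combines a depth-proportional resistance with an added-mass term d/dt(m_a v), where the added mass m_a is assumed to grow linearly with depth, plus kinetic Coulomb friction horizontally. All coefficients are hypothetical, and the side-of-foot resistance mentioned in Figure 2 is omitted.

```python
from dataclasses import dataclass

import numpy as np

K_DEPTH = 2.0e4   # hypothetical depth-proportional resistance (N/m)
C_ADDED = 50.0    # hypothetical added-mass growth rate (kg/m): m_a = C_ADDED * depth
MU = 0.6          # hypothetical Coulomb friction coefficient

@dataclass
class FootContact:
    depth: float                 # penetration depth into the granular bed (m)
    depth_rate: float            # d(depth)/dt (m/s), positive while the foot sinks
    depth_accel: float           # d^2(depth)/dt^2 (m/s^2)
    tangential_vel: np.ndarray   # horizontal foot velocity (m/s), shape (2,)

def contact_force(c: FootContact) -> np.ndarray:
    """Force (Fx, Fy, Fz) on one foot, treating the contact as a single point."""
    if c.depth <= 0.0:
        return np.zeros(3)
    f_added = 0.0
    if c.depth_rate > 0.0:
        # d/dt(m_a * v) with m_a = C_ADDED * depth expands to m_a * a + C_ADDED * v**2.
        f_added = C_ADDED * c.depth * c.depth_accel + C_ADDED * c.depth_rate ** 2
    fz = max(K_DEPTH * c.depth + f_added, 0.0)   # the medium can push but not pull
    speed = float(np.linalg.norm(c.tangential_vel))
    if speed > 1e-9:
        ft = -MU * fz * np.asarray(c.tangential_vel) / speed  # kinetic friction opposes sliding
    else:
        ft = np.zeros(2)
    return np.array([ft[0], ft[1], fz])

def total_force(contacts):
    """Sum the point-contact forces of all feet touching the ground in one time step."""
    return sum((contact_force(c) for c in contacts), np.zeros(3))

foot = FootContact(depth=0.01, depth_rate=0.1, depth_accel=0.0,
                   tangential_vel=np.array([0.3, 0.4]))
print(contact_force(foot))
```

Evaluating a closed-form force per contact, instead of simulating individual grains, is what keeps this kind of model cheap enough for the large number of episodes reinforcement learning needs.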

The research team also introduced a neural network structure that implicitly predicts ground characteristics, using a recurrent neural network to analyze time-series data from the robot's sensors.
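A recurrent network keeps a hidden state that summarizes the sensor history, which is what lets it infer ground properties it cannot measure directly. The untrained sketch below assumes a single textbook GRU cell and a linear read-out; the article does not specify the team's recurrent architecture, and all sizes and the `readout` head are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

INPUT_DIM = 30    # hypothetical per-step sensor vector (e.g. IMU and joint readings)
HIDDEN_DIM = 8    # hypothetical size of the hidden state that summarises the history

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class GRUCell:
    """Textbook gated recurrent unit (untrained, random weights)."""

    def __init__(self, input_dim, hidden_dim):
        s = 1.0 / np.sqrt(hidden_dim)
        shape = (input_dim + hidden_dim, hidden_dim)
        self.w_z, self.w_r, self.w_n = (rng.uniform(-s, s, shape) for _ in range(3))
        self.b_z, self.b_r, self.b_n = (np.zeros(hidden_dim) for _ in range(3))

    def step(self, x, h):
        xh = np.concatenate([x, h])
        z = sigmoid(xh @ self.w_z + self.b_z)                          # update gate
        r = sigmoid(xh @ self.w_r + self.b_r)                          # reset gate
        n = np.tanh(np.concatenate([x, r * h]) @ self.w_n + self.b_n)  # candidate state
        return (1.0 - z) * n + z * h                                   # blend old and new

cell = GRUCell(INPUT_DIM, HIDDEN_DIM)
readout = rng.uniform(-0.1, 0.1, HIDDEN_DIM)  # hypothetical linear head -> scalar ground estimate

h = np.zeros(HIDDEN_DIM)
sensor_stream = rng.standard_normal((50, INPUT_DIM))  # stand-in for 50 steps of sensor data
for x in sensor_stream:
    h = cell.step(x, h)        # the hidden state is updated once per time step
ground_estimate = float(h @ readout)
```

The update gate `z` decides how much of the old summary to keep, so the estimate can change quickly when the terrain does and stay steady otherwise.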

Mounted on RaiBo, a robot the research team built in-house, the trained controller achieved high-speed walking of up to 3.03 m/s on a sandy beach where the robot's feet sank completely into the sand. On harder ground, such as grassy fields and a running track, it also ran stably by adapting to the ground's characteristics, without any additional programming or changes to the control algorithm.

It also turned stably at 1.54 rad/s (approximately 90° per second) on an air mattress and adapted quickly even when the terrain suddenly became soft.
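The "approximately 90° per second" figure follows from the standard radian-to-degree conversion:

$$
1.54\ \text{rad/s} \times \frac{180^\circ}{\pi\ \text{rad}} \approx 88.2^\circ/\text{s} \approx 90^\circ/\text{s}
$$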

By comparing against a controller trained on the assumption of rigid ground, the research team demonstrated the importance of providing a suitable contact experience during training, and showed that the proposed recurrent neural network adjusts the controller's gait according to ground properties.

By expanding the range of terrain on which walking robots can operate, the simulation and learning methodology developed by the research team is expected to help robots carry out practical tasks.

The first author, Suyoung Choi, said, “It has been shown that providing a learning-based controller with a contact experience close to that of real deforming ground is essential for application to deforming terrain.” He added, “The proposed controller can be used without prior information on the terrain, so it can be applied to various robot walking studies.”

This research was carried out with the support of the Samsung Research Funding & Incubation Center of Samsung Electronics.

< Figure 1. Adaptability of the proposed controller to various ground environments. Trained on a wide range of randomized granular media simulations, the controller adapted to various natural and artificial terrains and demonstrated high-speed walking and energy efficiency. >

< Figure 2. Contact model definition for simulation of granular substrates. The research team used a model that considered the additional mass effect for the vertical force and a Coulomb friction model for the horizontal direction while approximating the contact with the granular medium as occurring at a point. Furthermore, a model that simulates the ground resistance that can occur on the side of the foot was introduced and used for simulation. >

2023.01.26

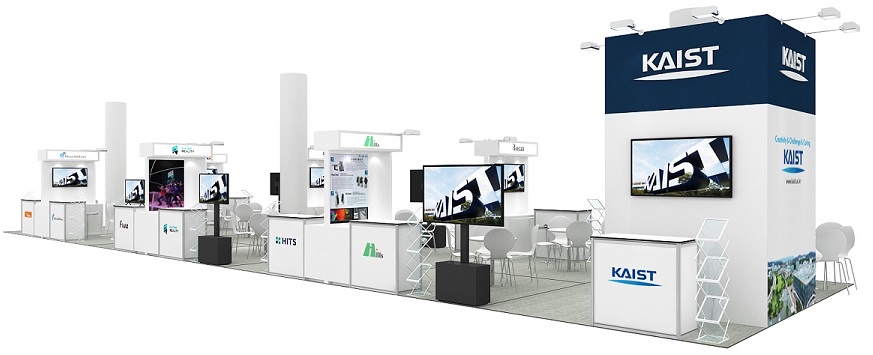

KAIST to showcase a pack of KAIST Start-ups at CES 2023

- KAIST will run an exclusive booth in Eureka Park at the Venetian Expo (Hall G) during CES 2023, held in Las Vegas from Thursday, January 5 through Sunday, January 8.

- Twelve businesses recently founded by KAIST faculty and alumni, along with start-ups licensed to use KAIST technologies, will be showcased.

- Among the participating start-ups, products from Fluiz and Hills Robotics were selected as “CES Innovation Awards 2023 Honorees” in their respective categories.

On January 3, KAIST announced that it will have a booth at the Consumer Electronics Show (CES) 2023, the most influential tech event in the world, to be held in Las Vegas from January 5 to 8.

At this exclusive booth, KAIST will introduce the technologies of KAIST start-ups throughout the exhibition period.

KAIST first ran an exclusive booth at CES 2019 with five start-ups, followed by 12 start-ups at CES 2020 and 10 at CES 2022. At CES 2023, its fourth appearance, KAIST will bring 12 businesses: start-ups founded by faculty members and alumni, and technology-transfer companies that recently launched on the strength of outstanding research findings.

To maximize the publicity opportunity, KAIST will support each company's marketing strategy in cooperation with the Korea International Trade Association (KITA), and will give the school and each start-up an opportunity to build a global identity and showcase the excellence of their technologies at the convention.

The following companies will be at the KAIST Booth in Eureka Park:

The twelve start-ups aim to commercialize their technologies globally in fields ranging from eXtended Reality (XR) and gaming to AI and robotics, vehicles and transportation, mobile platforms, smart cities, autonomous driving, healthcare, and the Internet of Things (IoT), through joint research and development, technology transfer, and investment from the world's leading institutions and enterprises.

In particular, Fluiz and Hills Robotics were named CES Innovation Awards 2023 Honorees and are expected to achieve even more in the future.

A staff member from the KAIST Institute of Technology Value Creation said, “For the KAIST Showcase at CES 2023, we have prepared a new pitching space where each company can conduct its own IR (investor relations) activities, and we hope that KAIST start-ups will actively and effectively market their products and technologies at the convention. We hope they will use their time here to establish their presence, which will eventually serve as a solid foothold for them and their successors in pursuing global commercialization.”

2023.01.04

A Quick but Clingy Creepy-Crawler that will MARVEL You

Engineered by KAIST mechanical engineers, a quadrupedal robot climbs steel walls and crawls across metal ceilings at the fastest speed the world has ever seen.

< Photo 1. (From left) KAIST ME Prof. Hae-Won Park, Ph.D. Student Yong Um, Ph.D. Student Seungwoo Hong >

- Professor Hae-Won Park's team at the Department of Mechanical Engineering developed a quadrupedal robot that can move at a high speed on ferrous walls and ceilings.

- It is expected to be used for inspecting and repairing large steel structures such as ships, bridges, and transmission towers, replacing dangerous work in hazardous environments while maintaining productivity and efficiency by automating such operations and removing the need for on-site human workers.

- The study was published as the cover paper of the December issue of Science Robotics.

KAIST (President Kwang Hyung Lee) announced on the 26th that a research team led by Professor Hae-Won Park of the Department of Mechanical Engineering has developed a quadrupedal walking robot that can move at high speed on steel walls and ceilings. The robot is named M.A.R.V.E.L., fittingly, as it is a Magnetically Adhesive Robot for Versatile and Expeditious Locomotion, as described in the team's paper, “Agile and Versatile Climbing on Ferromagnetic Surfaces with a Quadrupedal Robot.” (DOI: 10.1126/scirobotics.add1017)

To make this happen, Professor Park's research team developed a foot pad that can quickly switch its magnetic adhesion on and off while retaining high adhesive force even on uneven surfaces. The foot pad combines an Electro-Permanent Magnet (EPM), a device whose magnetization can be switched on and off with little power, and a Magneto-Rheological Elastomer (MRE), an elastic material such as rubber mixed with magnetically responsive particles such as iron powder. The team mounted these foot pads on a small quadrupedal robot built in-house at their own laboratory. Such walking robots are expected to be put to a wide variety of uses, including inspection, repair, and maintenance of large steel structures such as ships, bridges, transmission towers, large storage areas, and construction sites.

This study, in which Seungwoo Hong and Yong Um of the Department of Mechanical Engineering participated as co-first authors, was published as the cover paper in the December issue of Science Robotics.

< Image on the Cover of 2022 December issue of Science Robotics >

Existing wall-climbing robots use wheels or continuous tracks, so their mobility is limited on surfaces with steps or irregularities. Legged climbing robots, on the other hand, offer better mobility over obstacles, but they have been significantly slower or able to perform only a limited range of movements.

For a walking robot to move fast, the sole of each foot must provide strong adhesion and be able to switch quickly between attaching to and detaching from the surface. It must also maintain its adhesion on rough or uneven surfaces.

To solve this problem, the research team used an EPM and MRE for the first time in designing the soles of walking robots. An EPM is a magnet whose holding force can be switched on and off with a short current pulse. Unlike conventional electromagnets, it requires no energy to maintain its magnetic force. The research team proposed a new EPM with a rectangular arrangement, which switches faster and requires a significantly lower switching voltage than existing electromagnets.
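The switching behavior described above can be illustrated with a toy model. The sketch below is a simplified, hypothetical illustration, not the team's S-EPM design or control code: the class and function names and the per-pulse energy value are assumptions, while the 535 N holding force is the article's measured figure. It captures the one property the article emphasizes, namely that an EPM spends energy only when its state is switched, not while it holds.

```python
from dataclasses import dataclass


@dataclass
class ElectroPermanentMagnet:
    """Toy EPM model (hypothetical): a short current pulse flips the magnetization,
    and the chosen state then persists with no holding current."""

    holding_force_n: float   # holding force in the ON state, in newtons
    pulse_energy_j: float    # energy spent per switching pulse (assumed value)
    on: bool = False
    energy_used_j: float = 0.0

    def pulse(self, turn_on: bool) -> None:
        """Apply a short switching pulse; energy is spent only when the state changes."""
        if turn_on != self.on:
            self.on = turn_on
            self.energy_used_j += self.pulse_energy_j

    def holding_force(self) -> float:
        """Holding force available now; in this model, holding itself costs no energy."""
        return self.holding_force_n if self.on else 0.0


def electromagnet_hold_energy_j(coil_power_w: float, hold_time_s: float) -> float:
    """A conventional electromagnet, by contrast, draws coil power for as long as it holds."""
    return coil_power_w * hold_time_s


if __name__ == "__main__":
    foot = ElectroPermanentMagnet(holding_force_n=535.0, pulse_energy_j=0.5)
    foot.pulse(True)               # attach
    print(foot.holding_force())    # 535.0
    foot.pulse(False)              # detach
    print(foot.holding_force())    # 0.0
    print(foot.energy_used_j)      # 1.0 (two pulses), however long the foot held
```

The design choice worth noting is that `energy_used_j` grows with the number of attach/detach cycles rather than with time spent attached, which is why an EPM suits a robot that may hang from a ceiling for long periods.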

In addition, by covering the sole with an MRE, the research team increased its frictional force without significantly reducing its magnetic force. The proposed sole weighs only 169 g but provides a vertical gripping force of about *535 newtons (N) and a frictional force of 445 N, which is sufficient gripping force for a quadrupedal robot weighing 8 kg.

* 535 N corresponds to the weight of about 54.5 kg, and 445 N to the weight of about 45.4 kg (54.5 and 45.4 kilograms-force). In other words, the sole stays attached to the steel plate even under an external force equivalent to up to 54.5 kg in the vertical direction and up to 45.4 kg in the horizontal direction (for example, if a corresponding weight were hung from it).
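The footnote's conversion simply divides a force by gravitational acceleration. The short sketch below reproduces those figures, assuming g = 9.81 m/s² (the value that matches the article's rounding), and also expresses each per-sole force as a multiple of the 8 kg robot's total weight. That multiple is only a rough illustration of margin, not a structural analysis; the function names are illustrative.

```python
G = 9.81  # gravitational acceleration in m/s^2 (assumed; reproduces the article's figures)


def newtons_to_kgf(force_n: float) -> float:
    """Express a force as the mass (in kg) whose weight would produce it."""
    return force_n / G


def weight_multiple(force_n: float, robot_mass_kg: float) -> float:
    """How many times the robot's own weight a given force corresponds to."""
    return force_n / (robot_mass_kg * G)


ROBOT_MASS_KG = 8.0  # robot mass quoted in the article

for label, force_n in [("vertical adhesion", 535.0), ("friction", 445.0)]:
    print(f"{label}: {force_n:.0f} N = {newtons_to_kgf(force_n):.1f} kgf, "
          f"{weight_multiple(force_n, ROBOT_MASS_KG):.1f}x the robot's weight")
# vertical adhesion: 535 N = 54.5 kgf, 6.8x the robot's weight
# friction: 445 N = 45.4 kgf, 5.7x the robot's weight
```

Under these assumptions, a single sole can hold several times the whole robot's weight, which is consistent with the article's statement that the grip is sufficient for an 8 kg robot.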

MARVEL climbed a vertical wall at up to 70 cm per second and walked upside down along a ceiling at up to 50 cm per second, the world's fastest speeds for a legged climbing robot. The research team also demonstrated that the robot can climb at up to 35 cm per second on painted surfaces, dusty surfaces, and the rusty surfaces of water tanks, proving its performance in real environments. Experiments further showed that, beyond its speed, the robot can transition from floor to wall and from wall to ceiling, and can easily overcome 5-cm-high obstacles protruding from walls.

The new climbing quadrupedal robot is expected to be widely used for inspection, repair, and maintenance of large steel structures such as ships, bridges, transmission towers, oil pipelines, large storage areas, and construction sites. Because work at these sites involves risks such as falls and suffocation that can lead to serious injuries or deaths, automation is urgently needed.

Yong Um, a Ph.D. student in KAIST’s Department of Mechanical Engineering and one of the co-first authors of the paper, said, "By using magnetic soles made of the EPM and MRE, together with a nonlinear model predictive controller suited to climbing, the robot can move quickly across a variety of ferromagnetic surfaces, including walls and ceilings, not just level ground. We believe this will become a cornerstone for expanding the mobility of legged robots and the places they can venture into." He added, “These robots can be put to good use in executing dangerous and difficult tasks on steel structures in places like shipyards.”

This research was carried out with support from the National Research Foundation of Korea's Basic Research in Science & Engineering Program for Mid-Career Researchers and Korea Shipbuilding & Offshore Engineering Co., Ltd.

< Figure 1. The quadrupedal robot (MARVEL) walking over various ferrous surfaces. (A) vertical wall (B) ceiling (C) over obstacles on a vertical wall (D) making floor-to-wall and wall-to-ceiling transitions (E) moving over a storage tank (F) walking on a wall with a 2-kg weight and over a ceiling with a 3-kg load. >

< Figure 2. Description of the magnetic foot (A) Components of the magnet sole: ankle, Square Electro-Permanent Magnet (S-EPM), MRE footpad. (B) Components of the S-EPM and MRE footpad. (C) Working principle of the S-EPM. When the magnetization direction is aligned as shown in the left figure, magnetic flux comes out of the keeper and circulates through the steel plate, generating holding force (ON state). Conversely, if the magnetization direction is aligned as shown in the figure on the right, the magnetic flux circulates inside the S-EPM and the holding force disappears (OFF state). >

Video Introduction: Agile and versatile climbing on ferromagnetic surfaces with a quadrupedal robot - YouTube

2022.12.30

KI-Robotics Wins the 2021 Hyundai Motor Autonomous Driving Challenge

Professor Hyunchul Shim’s autonomous driving team topped the challenge

KI-Robotics, a KAIST autonomous driving research team led by Professor Hyunchul Shim from the School of Electrical Engineering, won the 2021 Hyundai Motor Autonomous Driving Challenge held in Seoul on November 29. The KI-Robotics team received 100 million won in prize money and a field trip to the US.

Of the 23 participating teams, six competed in the finals by simultaneously driving through a 4-km section of the test operation zone, where other traffic was restricted. The challenge included avoiding and overtaking vehicles, crossing intersections, and obeying traffic laws covering traffic lights, lanes, speed limits, and school zones. Teams were ranked by the order in which they completed the course, but points were deducted for every traffic violation. A driver and an invigilator rode in each car in case of emergency, and the race was broadcast live on a large screen on stage and via YouTube.

In the first round, KI-Robotics came in first with a time of 11 minutes and 27 seconds after a tight race with Incheon University. Although the team’s time in the second round exceeded 16 minutes due to traffic conditions such as traffic lights, its first-round time of 11 minutes and 27 seconds ultimately ranked first among the six universities. Notably, KI-Robotics prioritized its vehicle’s perception and judgement over speed when building its algorithm. Of the six universities in the final round, KI-Robotics was the only team that did not use GPS on its vehicle, in order to minimize risk.

The team took into account that GPS signals are inaccurate in urban settings, where location errors can cause problems while driving. Instead, the team installed three radar sensors and cameras at the front and back of the vehicle. They also used an urban-specific SLAM (simultaneous localization and mapping) technology they had developed to build a precise map, which enabled more accurate localization.

Whereas other teams focused on speed, the KAIST team also developed an overtaking path-planning technology that takes the locations of surrounding cars into account, which gave them an advantage in responding to obstacles while obeying real urban traffic rules. As a result, the KAIST team achieved the highest score across rounds one and two combined.

Professor Shim said, “I am very glad that the autonomous driving technology our research team has been developing over the last ten years has borne fruit. I would like to thank the team leader, Dae-Gyu Lee, and all the students who participated in the development, as they went above and beyond under difficult conditions.”

Dae-Gyu Lee, the leader of KI-Robotics and a Ph.D. candidate in the School of Electrical Engineering, explained, “Since we came in fourth in the preliminary round, we started further behind than we expected. But we were able to overtake the cars ahead of us and improve our time.”

2021.12.07

Hubo Professor Jun-Ho Oh Donates Startup Shares Worth 5 Billion KRW

Rainbow Robotics stock used to endow the development fund

Emeritus Professor Jun-Ho Oh, who developed DRC-Hubo, the humanoid robot that won the 2015 DARPA Robotics Challenge, donated startup shares worth 5 billion KRW on October 25 during a ceremony held at the KAIST campus in Daejeon.

Professor Oh donated his 20% stake (400 shares) in his startup Rainbow Robotics, which was established in 2011. Rainbow Robotics was listed on the KOSDAQ this February, and upon listing, the 400 shares were converted into 200,000 shares worth approximately 5 billion KRW.
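The share figures above can be cross-checked with simple arithmetic. The sketch below derives only what the article's numbers imply; the article does not say how the conversion was carried out, and the implied per-share value is a rough average based on the approximate 5 billion KRW figure, not a quoted stock price. The variable names are illustrative.

```python
DONATED_SHARES = 400
STAKE_PERCENT = 20                   # Professor Oh's stake, per the article
SHARES_AFTER_LISTING = 200_000
DONATION_VALUE_KRW = 5_000_000_000   # "approximately 5 billion KRW"

# 400 shares being a 20% stake implies 2,000 shares outstanding before listing.
total_shares_before = DONATED_SHARES * 100 // STAKE_PERCENT
# Each original share became this many shares at listing.
conversion_ratio = SHARES_AFTER_LISTING // DONATED_SHARES
# Approximate value per post-listing share implied by the headline figure.
implied_price_krw = DONATION_VALUE_KRW / SHARES_AFTER_LISTING

print(total_shares_before, conversion_ratio, implied_price_krw)  # 2000 500 25000.0
```

Integer arithmetic is used for the share counts so that the results are exact rather than subject to floating-point rounding.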

KAIST sold the shares and used the proceeds to endow the Jun-Ho Oh Fund, which will be used for the development of the university. Professor Oh was the 39th KAIST faculty member to launch a startup based on technology from his lab, and he has now become the largest donor among faculty entrepreneurs.

“I have received huge support and funding for my research. Fortunately, the research had a good result and led to the startup. Now I am very delighted to pay back the university. I feel that I have played a part in building the school’s startup ecosystem and creating a virtuous circle,” said Professor Oh during the ceremony.

KAIST President Kwang Hyung Lee said, “Professor Oh has been an exemplary role model for our aspiring faculty and student tech entrepreneurs. We will spare no effort to support startups at KAIST.”

Professor Oh, who retired from the Department of Mechanical Engineering last year, now serves as the CTO at Rainbow Robotics. The company is developing humanoid bipedal robots and collaborative robots, and advancing robot technology including parts for astronomical observations.

Professor Hae-Won Park and Professor Je Min Hwangbo, who are now responsible for the Hubo Lab, also joined the ceremony along with employees of Rainbow Robotics.

2021.10.26

Research Day Highlights the Most Impactful Technologies of the Year

Technology Converting Full HD Image to 4-Times Higher UHD Via Deep Learning Cited as the Research of the Year

A deep learning technology that converts full HD images in real time into UHD images with four times as many pixels was recognized as the Research of the Year. Professor Munchurl Kim from the School of Electrical Engineering, who developed the technology, won the Research of the Year Grand Prize during the 2021 KAIST Research Day ceremony on May 25. Professor Kim was lauded for conducting creative research on machine learning and deep learning-based image processing.
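"Four times higher" here refers to pixel count: doubling both width and height multiplies the number of pixels by four. The sketch below assumes the standard resolutions of 1920×1080 for full HD and 3840×2160 for 4K UHD, and includes a naive nearest-neighbor 2× upscaler as a point of comparison. That upscaler is only an illustrative baseline, not Professor Kim's deep learning method; it enlarges an image without recovering any detail, which is the gap learned super-resolution aims to close.

```python
FULL_HD = (1920, 1080)  # width, height in pixels
UHD = (3840, 2160)      # 4K UHD


def pixel_count(resolution: tuple[int, int]) -> int:
    width, height = resolution
    return width * height


def upscale_nearest_2x(image: list[list[int]]) -> list[list[int]]:
    """Naive baseline: copy every pixel into a 2x2 block. This only enlarges the image;
    it cannot restore lost detail."""
    upscaled = []
    for row in image:
        wide_row = [pixel for pixel in row for _ in range(2)]
        upscaled.append(wide_row)
        upscaled.append(list(wide_row))  # duplicate the row vertically
    return upscaled


print(pixel_count(UHD) / pixel_count(FULL_HD))  # 4.0
print(upscale_nearest_2x([[1, 2], [3, 4]]))
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
```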

KAIST’s Research Day recognizes the most notable research outcomes of the year, while creating opportunities for researchers to immerse themselves in interdisciplinary research projects with their peers. The ceremony was broadcast online due to COVID-19 and announced the Ten R&D Achievements of the Year, which are expected to make a significant impact.

To celebrate the award, Professor Kim gave a lecture on “Computational Imaging through Deep Learning for the Acquisition of High-Quality Images.” Noting that artificial intelligence technology performs particularly well at converting low-quality videos into higher-quality ones, he introduced some of the AI technologies currently being applied in image restoration and quality enhancement.

Professors Eui-Cheol Shin from the Graduate School of Medical Science and Engineering and In-Cheol Park from the School of Electrical Engineering each received Research Awards, and Professor Junyong Noh from the Graduate School of Culture Technology was selected for the Innovation Award. Professors Dong Ki Yoon from the Department of Chemistry and Hyungki Kim from the Department of Mechanical Engineering were awarded the Interdisciplinary Award as a team for their joint research.

Meanwhile, out of KAIST’s ten most notable R&D achievements, those from the field of natural and biological sciences included research on rare earth element-platinum nanoparticle catalysts by Professor Ryong Ryoo from the Department of Chemistry, real-time observations of the locational changes in all of the atoms in a molecule by Professor Hyotcherl Ihee from the Department of Chemistry, and an investigation into memory retention mechanisms involving synapse removal by astrocytes by Professor Won-Suk Chung from the Department of Biological Sciences.

Awardees from the engineering field were a wearable robot for paraplegics with the world’s best functionality and walking speed by Professor Kyoungchul Kong from the Department of Mechanical Engineering, fair machine learning by Professor Changho Suh from the School of Electrical Engineering, and a generative adversarial network processing unit (GANPU), an AI chip that enables learning even on mobile devices by processing multiple deep networks, by Professor Hoi-Jun Yoo from the School of Electrical Engineering.

Others selected as part of the ten research studies were the development of epigenetic reprogramming technology in tumours by Professor Pilnam Kim from the Department of Bio and Brain Engineering, the development of an original technology for reversing cell aging by Professor Kwang-Hyun Cho from the Department of Bio and Brain Engineering, a heterogeneous metal element catalyst for atmospheric purification by Professor Hyunjoo Lee from the Department of Chemical and Biomolecular Engineering, and the Mobile Clinic Module (MCM), a negative-pressure ward for epidemic hospitals featured in the Wall Street Journal, by Professor Taek-jin Nam from the Department of Industrial Design.

2021.05.31

‘WalkON Suit 4’ Releases Paraplegics from Wheelchairs

- KAIST Athletes in ‘WalkON Suit 4’ Dominated the Cybathlon 2020 Global Edition. -

Paraplegic athletes Byeong-Uk Kim and Joohyun Lee from KAIST’s Team Angel Robotics won a gold and a bronze medal respectively at the Cybathlon 2020 Global Edition last week. ‘WalkON Suit 4,’ a wearable robot developed by Professor Kyoungchul Kong’s team from the Department of Mechanical Engineering, topped the standings at the event with double medal success.

Kim, a bronze medallist at the previous Cybathlon, clinched the gold medal by finishing all six tasks in 3 minutes and 47 seconds, while Lee came in third with a time of 5 minutes and 51 seconds. TWIICE, a Swiss team, finished second, 53 seconds behind Kim’s winning time.

Cybathlon is a global championship, organized by ETH Zurich, which brings together people with physical disabilities to compete using state-of-the-art assistive technologies to perform everyday tasks. The first championship was held in 2016 in Zurich, Switzerland.

Due to the COVID-19 pandemic, the second championship was postponed twice and then held in a new, decentralized format. Instead of traveling to Zurich, a total of 51 teams from 20 countries competed from their home bases in different time zones. All races were filmed under the supervision of a referee and a timekeeper and then reviewed by judges.

KAIST’s Team Angel Robotics competed in the Powered Exoskeleton Race category, in which nine pilots representing five nations (Korea, Switzerland, the US, Russia, and France) raced against each other.

The team set up its own arena at the KAIST Main Campus in Daejeon and raced there according to the framework, tasks, and rules defined by the competition committee. Each of the two paraplegic pilots wore a WalkON Suit 4 exoskeleton and undertook six tasks related to daily activities.

The WalkON Suit 4 achieved the fastest walking speed ever reported for a complete paraplegic: a maximum of 40 meters per minute (2.4 kilometers per hour) during continuous walking. This is comparable to the walking pace of a non-disabled person, which is around two to four kilometers per hour.

The research team improved the robot’s functionality by adding technology that monitors the user’s level of anxiety and external factors such as the condition of the walking surface, allowing the robot to adjust its control intelligently. Because the assistance a robot should provide varies greatly with the environment, the WalkON Suit 4 analyzes the user’s pace within 30 steps and provides a personally optimized walking pattern, which enables a high walking speed.

The six tasks that Kim and Lee had to complete were: 1) sitting down and standing back up, 2) navigating around obstacles while avoiding collisions, 3) stepping over obstacles on the ground, 4) going up and down stairs, 5) walking across a tilted path, and 6) climbing a steep slope, opening and closing a door, and descending a steep slope.

Points were awarded according to how accurately each task was completed, and the final score of an attempt was the sum of all points gained during that attempt, which was limited to 10 minutes. Each pilot was given three attempts, and the highest score counted. If pilots had the same final score, the pilot who completed the race in the shortest time would win.
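The scoring rules above amount to a simple ranking procedure. The sketch below encodes one reading of them, assuming each attempt is recorded as a tuple of per-task points plus the time used, and that the tie-breaking time is taken from the attempt that produced a pilot’s best score. The class and function names, pilot labels, and point values are hypothetical; this is not the official Cybathlon scoring software.

```python
from dataclasses import dataclass

MAX_ATTEMPT_S = 600.0  # each attempt was limited to 10 minutes


@dataclass(frozen=True)
class Attempt:
    task_points: tuple[int, ...]  # points awarded for each task (hypothetical values)
    time_s: float                 # time used in this attempt, in seconds

    def __post_init__(self) -> None:
        if not 0.0 <= self.time_s <= MAX_ATTEMPT_S:
            raise ValueError("attempt time must be between 0 and 600 seconds")

    @property
    def score(self) -> int:
        # An attempt's final score is the sum of the points gained in it
        return sum(self.task_points)


def best_attempt(attempts: list[Attempt]) -> Attempt:
    # The highest score counts; among equal scores, prefer the faster attempt (assumption)
    return min(attempts, key=lambda a: (-a.score, a.time_s))


def rank_pilots(results: dict[str, list[Attempt]]) -> list[str]:
    # Order pilots by best score (high to low); ties go to the shorter time
    best = {pilot: best_attempt(attempts) for pilot, attempts in results.items()}
    return sorted(best, key=lambda pilot: (-best[pilot].score, best[pilot].time_s))


# Hypothetical results: three pilots, six tasks, illustrative point values
results = {
    "pilot_a": [Attempt((10,) * 6, 260.0), Attempt((10,) * 6, 227.0)],
    "pilot_b": [Attempt((10,) * 5 + (0,), 200.0), Attempt((10,) * 6, 351.0)],
    "pilot_c": [Attempt((10,) * 6, 280.0)],
}
print(rank_pilots(results))  # ['pilot_a', 'pilot_c', 'pilot_b']
```

In this example, pilot_b’s faster 200-second attempt does not count because it scored fewer points: score takes precedence, and time only breaks ties.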

Kim said in his victory speech that he was thrilled to see years of hard work by himself and his fellow researchers pay off. “This will be a good opportunity to show how outstanding Korean wearable robot technologies are,” he added.

Lee, who participated in the competition for the first time, said, “By showing that I can overcome my physical disabilities with robot technology, I’d like to send out a message of hope to everyone who is tired because of COVID-19”.

Professor Kong’s team collaborated in technology development and pilot training with their colleagues from Angel Robotics Co., Ltd., Severance Rehabilitation Hospital, Yeungnam University, Stalks, and the Institute of Rehabilitation Technology.

Footage from the competition is available at the Cybathlon’s official website.

(END)

2020.11.20

Professor Jee-Hwan Ryu Receives IEEE ICRA 2020 Outstanding Reviewer Award

Professor Jee-Hwan Ryu from the Department of Civil and Environmental Engineering was selected as a winner of this year’s Outstanding Reviewer Award presented by the Institute of Electrical and Electronics Engineers International Conference on Robotics and Automation (IEEE ICRA). The award ceremony took place on June 5 during the conference, which is being held online for three months, from May 31 through August 31.

The IEEE ICRA Outstanding Reviewer Award is given every year to the top reviewers who have provided constructive, high-quality paper reviews and contributed to improving the quality of the papers published in the conference proceedings.

Professor Ryu was one of the four winners of this year’s award. He was selected from 9,425 candidates, a pool approximately three times larger than in previous years, and was strongly recommended by the conference’s editorial committee.

(END)

2020.06.10

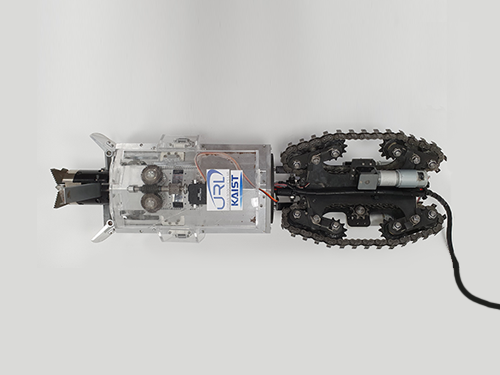

‘Mole-bot’ Optimized for Underground and Space Exploration

Biomimetic drilling robot provides new insights into the development of efficient drilling technologies

Mole-bot, a biomimetic drilling robot designed by KAIST, boasts a stout scapula, a waist that can bend in all directions, and powerful forelimbs. Its standout feature is the powerful torque of its expandable drill bit, which mimics the chiseling ability of a mole’s front teeth.

The Mole-bot is expected to be used for space exploration and mining for underground resources such as coalbed methane and Rare Earth Elements (REE), which require highly advanced drilling technologies in complex environments.

The research team, led by Professor Hyun Myung from the School of Electrical Engineering, drew inspiration for the drilling robot from striking features of two animals: the African mole-rat and the European mole.

“The crushing power of the African mole-rat’s teeth is so powerful that they can dig a hole with 48 times more power than their body weight. We used this characteristic for building the main excavation tool. And its expandable drill is designed not to collide with its forelimbs,” said Professor Myung.

The Mole-bot, which is 25 cm wide, 84 cm long, and weighs 26 kg, can excavate three times faster and with six times higher directional accuracy than conventional models.

After digging, the robot clears the excavated soil and debris with its forelimbs. This built-in mechanism, inspired by the European mole’s scapula, converts linear motion into powerful rotational force. For directional drilling, the robot can turn its elongated waist through 360°, much like a living mammal.

To explore underground environments, the research team developed new sensor systems and algorithms that estimate the robot’s position and orientation using graph-based 3D Simultaneous Localization and Mapping (SLAM) that matches sequences of Earth’s magnetic field measurements, enabling autonomous 3D navigation underground.
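The idea of localizing by matching a magnetic-field sequence can be illustrated with a deliberately simplified, one-dimensional sketch: given a reference profile of field magnitudes recorded at equal spacing along a known path, find the offset where a short window of new readings fits best, here by minimum sum of squared differences. This is an assumption-laden illustration, not the team’s graph-based 3D SLAM; the function name and all field values are hypothetical. In a graph-based SLAM system, a match like this could supply a position constraint between poses, but that use is also an assumption here.

```python
def match_magnetic_sequence(reference: list[float], observed: list[float]) -> int:
    """Return the offset in `reference` where the `observed` window fits best.

    Hypothetical helper. Both lists hold magnetic-field magnitudes (e.g. in
    microtesla) sampled at the same spacing along a path. The best offset
    minimises the sum of squared differences between the observed window and
    the reference segment.
    """
    n, m = len(reference), len(observed)
    if m == 0 or m > n:
        raise ValueError("observed window must be non-empty and no longer than the reference")

    def cost(offset: int) -> float:
        # Sum of squared differences for the reference segment starting at `offset`
        return sum((reference[offset + k] - observed[k]) ** 2 for k in range(m))

    # Try every possible alignment and keep the cheapest one
    return min(range(n - m + 1), key=cost)


# Hypothetical reference profile (microtesla) recorded along a tunnel, one sample per step
reference_map = [48.0, 49.5, 51.2, 50.1, 47.8, 46.9, 49.0, 52.3]
# Three new, slightly noisy readings taken somewhere along the same path
readings = [50.3, 47.6, 47.0]

print(match_magnetic_sequence(reference_map, readings))  # 3
```

Multiplying the returned offset by the sample spacing gives a distance along the path. A practical system would also have to cope with sensor noise, heading changes, and ambiguous matches where the field profile repeats.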

According to a MarketsandMarkets survey, the directional drilling market was estimated at USD 83.3 billion in 2016 and was expected to grow to USD 103 billion by 2021. Growth in the drilling market, which began with the shale revolution, is likely to extend to the future development of space and polar resources. As recent efforts by SpaceX illustrate, attention to planetary exploration is rising, and the market for related technology and equipment is expected to grow as well.
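The two market figures imply an average annual growth rate. The short sketch below computes the compound annual growth rate (CAGR) between the 2016 estimate and the 2021 projection, assuming smooth compound growth over the five years; the survey itself may model growth differently, and the function name is illustrative.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by growth from start_value to end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted above: USD 83.3 billion (2016) -> USD 103 billion (2021), i.e. 5 years
rate = cagr(83.3, 103.0, 2021 - 2016)
print(f"implied CAGR: {rate:.1%}")  # implied CAGR: 4.3%
```

A rate of roughly 4.3% per year compounds to the quoted increase of about 24% over the five-year span.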

The Mole-bot is a major step forward for efficient underground drilling and exploration technologies. Unlike conventional drilling processes, which use environmentally unfriendly mud compounds to clear debris, the Mole-bot can reduce environmental damage. The researchers said their system saves cost and labor and does not require additional pipelines or other ancillary equipment.

“We look forward to a more efficient resource exploration with this type of drilling robot. We also hope Mole-bot will have a very positive impact on the robotics market in terms of its extensive application spectra and economic feasibility,” said Professor Myung.

This research, conducted in collaboration with the teams of Professor Jung-Wuk Hong and Professor Tae-Hyuk Kwon of the Department of Civil and Environmental Engineering, who carried out the robot’s structural analysis and geotechnical experiments, was supported by the Ministry of Trade, Industry and Energy’s Industrial Technology Innovation Project.

Profile

Professor Hyun Myung

Urban Robotics Lab

http://urobot.kaist.ac.kr/

School of Electrical Engineering

KAIST

2020.06.05