ACM


Flexible User Interface Distribution for Ubiquitous Multi-Device Interaction

< Research Group of Professor Insik Shin (center) >

KAIST researchers have developed mobile software platform technology that allows a mobile application (app) to be executed simultaneously and more dynamically on multiple smart devices. Its high flexibility and broad applicability can help accelerate a shift from the current single-device paradigm to a multi-device one, enabling users to use mobile apps in ways previously unthinkable.

Recent trends in mobile and IoT technologies in this era of 5G high-speed wireless communication have been hallmarked by the emergence of new display hardware and smart devices such as dual screens, foldable screens, smart watches, smart TVs, and smart cars. However, the current mobile app ecosystem is still confined to the conventional single-device paradigm in which users can employ only one screen on one device at a time. Due to this limitation, the real potential of multi-device environments has not been fully explored.

A KAIST research team led by Professor Insik Shin from the School of Computing, in collaboration with Professor Steve Ko’s group from the State University of New York at Buffalo, has developed mobile software platform technology named FLUID that can flexibly distribute the user interfaces (UIs) of an app to a number of other devices in real time without requiring any modification to the app. The proposed technology provides single-device virtualization and ensures that the interactions between the distributed UI elements across multiple devices remain intact.

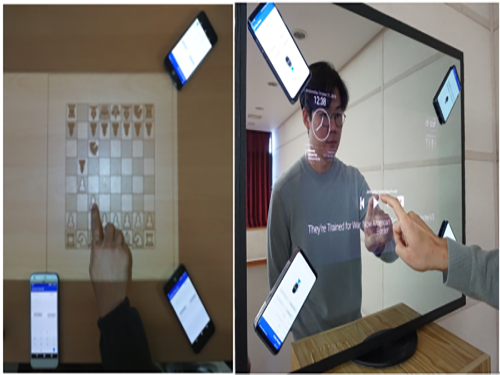

This flexible multimodal interaction can be realized in diverse ubiquitous user experiences (UX), such as live video streaming and chatting apps including YouTube, LiveMe, and AfreecaTV. FLUID can ensure that the video is not obscured by the chat window by displaying the two on different devices, which lets users chat while watching the video.
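The video-and-chat scenario can be illustrated with a minimal sketch. This is not FLUID's API or implementation; the class names, the `distribute` method, and the device labels are all hypothetical. It only shows the idea that app state stays in one place while individual UI elements are displayed on other devices and their input is routed back.

```python
from dataclasses import dataclass, field


@dataclass
class UIElement:
    """One UI element of the app (hypothetical, for illustration only)."""
    name: str
    device: str = "host"  # device currently displaying this element


@dataclass
class App:
    """App state lives on the host; only the display location changes."""
    elements: dict = field(default_factory=dict)
    chat_log: list = field(default_factory=list)

    def add(self, name):
        self.elements[name] = UIElement(name)

    def distribute(self, name, device):
        # Change where the element is displayed; app logic is untouched.
        self.elements[name].device = device

    def handle_input(self, name, device, text):
        # Input from a remote device is routed back to the host, so the
        # app behaves as if it were running on a single device.
        if self.elements[name].device != device:
            raise ValueError(f"{name} is not displayed on {device}")
        self.chat_log.append(text)

    def layout(self):
        # Group element names by the device that displays them.
        result = {}
        for element in self.elements.values():
            result.setdefault(element.device, []).append(element.name)
        return result


app = App()
app.add("video_view")
app.add("chat_window")
app.distribute("chat_window", "tablet")        # chat moves to a second device
app.handle_input("chat_window", "tablet", "hello")
print(app.layout())
```

In this toy model the video stays on the host device and the chat window appears on the tablet, while the chat text still ends up in the single app's state.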

In addition, the UI for the destination input on a navigation app can be migrated into the passenger’s device with the help of FLUID, so that the destination can be easily and safely entered by the passenger while the driver is at the wheel.

FLUID can also support 5G multi-view apps – the latest service that allows sports or games to be viewed from various angles on a single device. With FLUID, the user can watch the event simultaneously from different viewpoints on multiple devices without switching between viewpoints on a single screen.

PhD candidate Sangeun Oh, who is the first author, and his team implemented the prototype of FLUID on the leading open-source mobile operating system, Android, and confirmed that it can successfully deliver the new UX to 20 existing legacy apps.

“This new technology can be applied to next-generation products from South Korean companies such as LG’s dual screen phone and Samsung’s foldable phone and is expected to embolden their competitiveness by giving them a head-start in the global market,” said Professor Shin.

This study will be presented at the 25th Annual International Conference on Mobile Computing and Networking (ACM MobiCom 2019) October 21 through 25 in Los Cabos, Mexico. The research was supported by the National Science Foundation (NSF) (CNS-1350883 (CAREER) and CNS-1618531).

Figure 1. Live video streaming and chatting app scenario

Figure 2. Navigation app scenario

Figure 3. 5G multi-view app scenario

Publication: Sangeun Oh, Ahyeon Kim, Sunjae Lee, Kilho Lee, Dae R. Jeong, Steven Y. Ko, and Insik Shin. 2019. FLUID: Flexible User Interface Distribution for Ubiquitous Multi-device Interaction. To be published in Proceedings of the 25th Annual International Conference on Mobile Computing and Networking (ACM MobiCom 2019). ACM, New York, NY, USA. Article Number and DOI Name TBD.

Video Material:

https://youtu.be/lGO4GwH4enA

Profile: Prof. Insik Shin, MS, PhD

ishin@kaist.ac.kr

https://cps.kaist.ac.kr/~ishin

Professor

Cyber-Physical Systems (CPS) Lab

School of Computing

Korea Advanced Institute of Science and Technology (KAIST)

http://kaist.ac.kr Daejeon 34141, Korea

Profile: Sangeun Oh, PhD Candidate

ohsang1213@kaist.ac.kr

https://cps.kaist.ac.kr/

PhD Candidate

Cyber-Physical Systems (CPS) Lab

School of Computing

Korea Advanced Institute of Science and Technology (KAIST)

http://kaist.ac.kr Daejeon 34141, Korea

Profile: Prof. Steve Ko, PhD

stevko@buffalo.edu

https://nsr.cse.buffalo.edu/?page_id=272

Associate Professor

Networked Systems Research Group

Department of Computer Science and Engineering

State University of New York at Buffalo

http://www.buffalo.edu/ Buffalo 14260, USA

(END)

2019.07.20 View 42597

Anti-drone Technology for Anti-Terrorism Applications

(from top right clockwise: Professor Yongdae Kim,

PhD Candidates Yujin Kwon, Juhwan Noh, Hocheol Shin, and Dohyun Kim)

KAIST researchers have developed anti-drone technology that can hijack other drones by spoofing their location using fake GPS signals. This technology can safely guide a drone to a desired location without any sudden change in direction in emergency situations, and thus respond effectively to dangerous drones such as those intending to carry out acts of terrorism.

Advancements in the drone industry have led to the wider use of drones in our daily lives in areas such as reconnaissance, search and rescue, disaster prevention and response, and delivery services. At the same time, there has also been a growing concern about privacy, safety, and security issues regarding drones, especially those arising from intrusion into private property and secure facilities. Therefore, the anti-drone industry is rapidly expanding to detect and respond to this possible drone invasion.

The current anti-drone systems used in airports and other key locations utilize electronic jamming signals, high-power lasers, or nets to neutralize drones. For example, drones trespassing on airports are often countered with simple jamming signals that can prevent the drones from moving and changing position, but this may result in a prolonged delay in flight departures and arrivals at the airports. Drones used for terrorist attacks – armed with explosives or weapons – must also be neutralized a safe distance from the public and vital infrastructure to minimize any damage.

Due to this need for a new anti-drone technology to counter these threats, a KAIST research team led by Professor Yongdae Kim from the School of Electrical Engineering has developed technology that securely thwarts drones by tricking them with fake GPS signals.

Fake GPS signals have been used in previous studies to cause confusion inside the drone regarding its location, making the drone drift from its position or path. However, such attack tactics cannot be applied in GPS safety mode. GPS safety mode is an emergency mode that ensures drone safety when the signal is cut or location accuracy is low due to fake GPS signals. This mode differs between models and manufacturers.
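The drift effect mentioned above can be illustrated with a toy model. This is a deliberately simplified one-dimensional sketch, not the team's technique: it assumes a drone running a plain proportional position-hold loop and ignores GPS safety mode, signal generation, and real flight dynamics. The function name, the `gain` value, and the step sizes are all hypothetical.

```python
def simulate_drift(offset_per_step, steps, gain=0.5):
    """Toy 1D position-hold loop fed with a gradually shifted position reading.

    Returns the drone's true position after `steps` control updates.
    """
    true_pos = 0.0   # where the drone really is
    target = 0.0     # where the drone is trying to stay
    offset = 0.0     # error injected into the reported position
    for _ in range(steps):
        offset += offset_per_step             # reading shifts a little each step
        reported = true_pos + offset          # position the drone believes
        true_pos += gain * (target - reported)  # controller "corrects" itself
    return true_pos


# A reading that creeps in the negative direction makes the drone
# move in the positive direction, and vice versa.
print(simulate_drift(-1.0, 50))
print(simulate_drift(1.0, 50))
```

Because the reported position changes gradually, the simulated drone moves smoothly rather than jumping, which loosely mirrors the idea of guiding a drone without sudden changes in direction.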

Professor Kim’s team analyzed the GPS safety modes of different drone models made by major manufacturers such as DJI and Parrot, classified them, and designed a drone abduction technique that covers almost all types of drone GPS safety modes and is applicable to any drone that uses GPS, regardless of model or manufacturer. The research team applied the new technique to four different drones and showed that the drones can be safely hijacked and guided in the intended direction within a small margin of error.

Professor Kim said, “Conventional consumer drones equipped with GPS safety mode seem to be safe from fake GPS signals. However, most of these drones are able to be detoured since they detect GPS errors in a rudimentary manner.” He continued, “This technology can contribute particularly to reducing damage to airports and the airline industry caused by illegal drone flights.”

The research team is planning to commercialize the developed technology by applying it to existing anti-drone solutions through technology transfer. This research, featured in the ACM Transactions on Privacy and Security (TOPS) on April 9, was supported by the Defense Acquisition Program Administration (DAPA) and the Agency for Defense Development (ADD).

Image 1.

Experimental environment in which a fake GPS signal was produced from a PC and injected into the drone signal using directional antennae

Publication:

Juhwan Noh, Yujin Kwon, Yunmok Son, Hocheol Shin, Dohyun Kim, Jaeyeong Choi, and Yongdae Kim. 2019. Tractor Beam: Safe-hijacking of Consumer Drones with Adaptive GPS Spoofing. ACM Transactions on Privacy and Security. New York, NY, USA, Vol. 22, No. 2, Article 12, 26 pages. https://doi.org/10.1145/3309735

Profile: Prof. Yongdae Kim, MS, PhD

yongdaek@kaist.ac.kr

https://www.syssec.kr/

Professor

School of Electrical Engineering

Korea Advanced Institute of Science and Technology (KAIST)

http://kaist.ac.kr Daejeon 34141, Korea

Profile: Juhwan Noh, PhD Candidate

juhwan@kaist.ac.kr

PhD Candidate

System Security (SysSec) Lab

School of Electrical Engineering

Korea Advanced Institute of Science and Technology (KAIST)

http://kaist.ac.kr Daejeon 34141, Korea

(END)

2019.06.25 View 46464

Sound-based Touch Input Technology for Smart Tables and Mirrors

(from left: MS candidate Anish Byanjankar, Research Assistant Professor Hyosu Kim and Professor Insik Shin)

Time passes so quickly, especially in the morning. Your hands are so busy brushing your teeth and checking the weather on your smartphone. You might wish that your mirror could turn into a touch screen and free up your hands. That wish can be achieved very soon. A KAIST team has developed a smartphone-based touch sound localization technology to facilitate ubiquitous interactions, turning objects like furniture and mirrors into touch input tools.

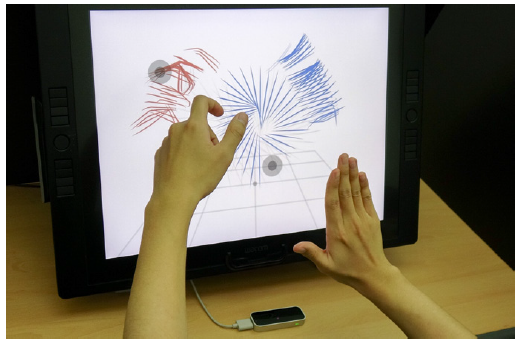

This technology analyzes touch sounds generated from a user’s touch on a surface and identifies the location of the touch input. For instance, users can turn surrounding tables or walls into virtual keyboards and write lengthy e-mails much more conveniently by using only the built-in microphone on their smartphones or tablets. Moreover, family members can play chess or other board games on a virtual board on their dining tables.

Additionally, traditional smart devices such as smart TVs or mirrors, which only provide simple screen display functions, can play a smarter role by adding touch input function support (see the image below).

Figure 1. Examples of using touch input technology: By using only a smartphone, you can use surrounding objects as a touch screen anytime and anywhere.

The most important requirement for the sound-based touch input method is to identify the location of touch inputs precisely (within an error of about 1 cm). However, this requirement is challenging to meet, mainly because the technology can be used in diverse and dynamically changing environments. Users may use objects like desks, walls, or mirrors as touch input tools, and the surrounding environment (e.g. the location of nearby objects or the ambient noise level) can vary. These environmental changes can affect the characteristics of touch sounds.

To address this challenge, Professor Insik Shin from the School of Computing and his team focused on analyzing the fundamental properties of touch sounds, especially how they are transmitted through solid surfaces.

On solid surfaces, sound experiences a dispersion phenomenon that makes different frequency components travel at different speeds. Based on this phenomenon, the team observed that the time difference of arrival (TDoA) between frequency components increases in proportion to the sound transmission distance, and that this linear relationship is not affected by variations in the surrounding environment.
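The proportionality described above follows directly if each frequency component is assumed to travel at a constant speed along the surface. The symbols below ($d$ for the transmission distance, $v_1 > v_2$ for the speeds of two frequency components, $t_0$ for the moment of the touch, and $k$ for the resulting constant) are introduced here only for illustration:

```latex
t_i = t_0 + \frac{d}{v_i} \quad (i = 1, 2),
\qquad
\Delta t = t_2 - t_1 = d\left(\frac{1}{v_2} - \frac{1}{v_1}\right) = k\,d,
\qquad
k = \frac{1}{v_2} - \frac{1}{v_1}.
```

Under this assumption the unknown touch time $t_0$ cancels, so the TDoA depends only on the distance and on a surface-specific constant $k$.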

Based on these observations, Research Assistant Professor Hyosu Kim proposed a novel sound-based touch input technology that records touch sounds transmitted through solid surfaces, then conducts a simple calibration process to identify the relationship between TDoA and the sound transmission distance, finally achieving accurate touch input localization.
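The calibrate-then-localize idea can be sketched as follows. This is a minimal illustration under stated assumptions, not the published system: it assumes an ideal linear TDoA-distance relation, three microphones at known positions, and noise-free measurements. The function names, the microphone layout, and the constant `k` are hypothetical.

```python
import math


def calibrate(distances_cm, tdoas_ms):
    """Least-squares fit of tdoa = slope * distance + offset."""
    n = len(distances_cm)
    mean_d = sum(distances_cm) / n
    mean_t = sum(tdoas_ms) / n
    sxx = sum((d - mean_d) ** 2 for d in distances_cm)
    sxy = sum((d - mean_d) * (t - mean_t)
              for d, t in zip(distances_cm, tdoas_ms))
    slope = sxy / sxx
    offset = mean_t - slope * mean_d
    return slope, offset


def distance_from_tdoa(tdoa_ms, slope, offset):
    """Invert the calibrated linear relation to get a distance."""
    return (tdoa_ms - offset) / slope


def locate(mics, dists):
    """2D trilateration from three microphones.

    Subtracting the first circle equation from the other two gives a
    2x2 linear system in (x, y), solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = mics
    r1, r2, r3 = dists
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("microphones must not lie on one line")
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y


# Synthetic example: all numbers below are made up for illustration.
mics = [(0.0, 0.0), (30.0, 0.0), (0.0, 20.0)]   # microphone positions in cm
true_touch = (12.0, 9.0)                        # touch position in cm
k = 0.02                                        # hypothetical ms of TDoA per cm

# Calibration: taps at known distances from a microphone.
cal_d = [5.0, 10.0, 20.0, 30.0]
cal_t = [k * d for d in cal_d]
slope, offset = calibrate(cal_d, cal_t)

# Localization: one TDoA per microphone, converted to a distance.
measured = [k * math.dist(true_touch, m) for m in mics]
dists = [distance_from_tdoa(t, slope, offset) for t in measured]
x, y = locate(mics, dists)
print(round(x, 3), round(y, 3))
```

With noise-free synthetic data the estimate coincides with the true touch position up to floating-point error; real recordings would need noise handling that this sketch leaves out.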

The accuracy of the proposed system was then measured. The average localization error was below about 0.4 cm on a 17-inch touch screen. In particular, the measurement error stayed below 1 cm across a variety of objects such as wooden desks, glass mirrors, and acrylic boards, even when the position of nearby objects and the noise level changed dynamically. Experiments with real users also drew positive responses on all measured factors, including user experience and accuracy.

Professor Shin said, “This is novel touch interface technology that allows a touch input system just by installing three to four microphones, so it can easily turn nearby objects into touch screens.”

The proposed system was presented at ACM SenSys, a top-tier conference in the field of mobile computing and sensing, and was selected as a best paper runner-up in November 2018.

(The demonstration video of the sound-based touch input technology)

2018.12.26 View 10523

It's Time to 3D Sketch with Air Scaffolding

People often use their hands when describing an object, while pens are great tools for describing objects in detail. Building on this idea, a KAIST team introduced a new 3D sketching workflow that combines the strengths of hand and pen input. This technique will ease the way for ideation in three dimensions, leading to efficient product design in terms of time and cost.

For a designer's drawing to become a product in reality, one has to transform a designer's 2D drawing into a 3D shape; however, it is difficult to infer accurate 3D shapes that match the original intention from an inaccurate 2D drawing made by hand. Creating a 3D shape from a planar 2D drawing requires information that the drawing itself does not contain. Conversely, depth information is lost when a 3D shape is expressed as a 2D drawing using perspective drawing techniques.

To fill in these “missing links” during the conversion, "3D sketching" techniques have been actively studied. Their main purpose is to help designers naturally provide missing 3D shape information in a 2D drawing. For example, if a designer draws two symmetric curves from a single point of view or draws the same curves from different points of view, the geometric clues that are left in this process are collected and mathematically interpreted to define the proper 3D curve. As a result, designers can use 3D sketching to directly draw a 3D shape as if using pen and paper.
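As one illustration of how geometric clues from two points of view can define a 3D curve, the sketch below triangulates each curve sample from a pair of viewing rays. It is a generic textbook technique, not the method of any particular 3D sketching system; the function names and the sample camera positions are hypothetical, and it assumes that corresponding samples of the two drawn curves are already paired.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def point_on_ray(origin, direction, t):
    return tuple(o + t * d for o, d in zip(origin, direction))


def triangulate(o1, d1, o2, d2):
    """Return the 3D point closest to two viewing rays o + t * d.

    Uses the midpoint of the shortest segment between the two rays.
    """
    w = sub(o1, o2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("viewing rays are parallel")
    t1 = (b * e - c * d) / denom
    t2 = (a * e - b * d) / denom
    p1 = point_on_ray(o1, d1, t1)
    p2 = point_on_ray(o2, d2, t2)
    return tuple((u + v) / 2 for u, v in zip(p1, p2))


def triangulate_curve(o1, dirs1, o2, dirs2):
    """Triangulate a curve given paired ray directions from two viewpoints."""
    return [triangulate(o1, d1, o2, d2) for d1, d2 in zip(dirs1, dirs2)]


# Hypothetical viewpoints and a single curve sample seen from both.
eye_front = (0.0, 0.0, -10.0)
eye_side = (10.0, 0.0, 0.0)
point = triangulate(eye_front, (1.0, 2.0, 13.0), eye_side, (-9.0, 2.0, 3.0))
print(point)
```

In this example both rays pass through the same point, so the midpoint equals their intersection; with hand-drawn input the rays would generally miss each other slightly and the midpoint serves as a compromise.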

Among 3D sketching tools, sketching with hand motions, in VR environments in particular, has drawn attention because it is easy and quick. Its biggest limitation is that rough hand motions alone cannot articulate a design in detail, which makes the approach difficult to apply to product design. Moreover, users may feel tired after holding their hands in the air during the entire drawing process.

To use hand motions while still producing elaborate designs, Professor Seok-Hyung Bae and his team from the Department of Industrial Design integrated hand motions and pen-based sketching, allocating roles according to their strengths. This new technique is called Agile 3D Sketching with Air Scaffolding. Designers use their hand motions in the air to create rough 3D shapes which will be used as scaffolds, and then they can add details with pen-based 3D sketching on a tablet (Figure 1).

Figure 1. In the agile 3D sketching workflow with air scaffolding, the user (a) makes unconstrained hand movements in the air to quickly generate rough shapes to be used as scaffolds, (b) uses the scaffolds as references and draws finer details with them, (c) produces a high-fidelity 3D concept sketch of a steering wheel in an iterative and progressive manner.

The team came up with an algorithm that distinguishes descriptive hand motions from transitory ones and extracts only the intended shapes from unconstrained hand motions, building the air scaffolds from the identified motions.

Through user tests, the team found that this technique is easy to learn and use and demonstrates good applicability. Most importantly, users can save time while defining the proportion and scale of products more accurately.

Eventually, this tool will be able to be applied to various fields including the automobile industry, home appliances, animations and the movie making industry, and robotics. It also can be linked to smart production technology, such as 3D printing, to make the manufacturing process faster and more flexible.

PhD candidate Yongkwan Kim, who led the research project, said, “I believe the system will enhance product quality and work efficiency because designers can express their 3D ideas quickly yet accurately without using complex 3D CAD modeling software. I will make it into a product that every designer wants to use in various fields.”

“There have been many attempts to encourage creative activities in various fields by using advanced computer technology. Based on in-depth understanding of designers, we will take the lead in innovating the design process by applying cutting-edge technology,” Professor Bae added.

Professor Bae and his team from the Department of Industrial Design have been developing better 3D sketching tools. They started with a 3D curve sketching system for professional designers called ILoveSketch and moved on to SketchingWithHands for designing a handheld product with first-person hand postures captured by a hand-tracking sensor. They then took their project to the next level and introduced Agile 3D Sketching with Air Scaffolding, a new 3D sketching workflow combining hand motion and pen drawing, which was chosen as one of the CHI (Conference on Human Factors in Computing Systems) 2018 Best Papers by the Association for Computing Machinery.

- Click the link to watch video clip of SketchingWithHands

2018.07.25 View 10629

A New Theory Improves Button Designs

Pressing a button appears effortless, and people easily overlook how challenging it is. Researchers at KAIST and Aalto University in Finland created detailed simulations of button-pressing with the goal of producing human-like presses.

The researchers argue that the key capability of the brain is a probabilistic model. The brain learns a model that allows it to predict a suitable motor command for a button. If a press fails, it can pick a very good alternative and try it out. "Without this ability, we would have to learn to use every button like it was new," says Professor Byungjoo Lee from the Graduate School of Culture Technology at KAIST.

After successfully activating the button, the brain can tune the motor command to be more precise, to use less energy, and to avoid stress or pain. "These factors together, with practice, produce the fast, minimum-effort, elegant touch people are able to perform."

The brain also uses probabilistic models to extract information optimally from the sensations that arise when the finger moves and its tip touches the button. It "enriches" the ephemeral sensations optimally based on prior experience to estimate the time the button was impacted. For example, tactile sensation from the tip of the finger is a better predictor of button activation than proprioception (angle position) and visual feedback.

Best performance is achieved when all sensations are considered together. To adapt, the brain must fuse their information using prior experiences. Professor Lee explains, "We believe that the brain picks up these skills over repeated button pressings that start already as a child. What appears easy for us now has been acquired over years."
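One common way to express this kind of fusion is inverse-variance weighting of independent noisy estimates, so that more reliable cues count for more. The sketch below is only an illustration of that general idea, not the researchers' model; the function name and the timing and variance values are assumptions chosen for the example.

```python
def fuse_estimates(estimates):
    """Fuse independent noisy estimates by inverse-variance weighting.

    estimates: list of (mean, variance) pairs, one per sensory cue.
    Returns the fused (mean, variance).
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused_mean = sum(w * mean for w, (mean, _) in zip(weights, estimates)) / total
    fused_variance = 1.0 / total
    return fused_mean, fused_variance


# Hypothetical estimates of the impact time in milliseconds: (mean, variance).
# The tactile cue is given the smallest variance, i.e. it is the most reliable.
cues = {
    "tactile": (100.0, 25.0),
    "proprioceptive": (110.0, 100.0),
    "visual": (120.0, 400.0),
}

fused_mean, fused_variance = fuse_estimates(list(cues.values()))
print(f"fused estimate: {fused_mean:.1f} ms, variance {fused_variance:.1f}")
```

Under these assumptions the fused estimate stays close to the tactile cue, and its variance is lower than that of any single cue, which is one way to read the statement that performance is best when all sensations are considered together.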

The research was triggered by admiration of our remarkable capability to adapt button-pressing. Professor Antti Oulasvirta at Aalto University said, "We push a button on a remote controller differently than a piano key. The press of a skilled user is surprisingly elegant when looked at in terms of timing, reliability, and energy use. We successfully press buttons without ever knowing the inner workings of a button. It is essentially a black box to our motor system. On the other hand, we also fail to activate buttons, and some buttons are known to be worse than others."

Previous research has shown that touch buttons are worse than push-buttons, but there has not been adequate theoretical explanation.

"In the past, there has been very little attention to buttons, although we use them all the time," says Dr. Sunjun Kim from Aalto University. The new theory and simulations can be used to design better buttons.

"One exciting implication of the theory is that activating the button at the moment when the sensation is strongest will help users better rhythm their keypresses."

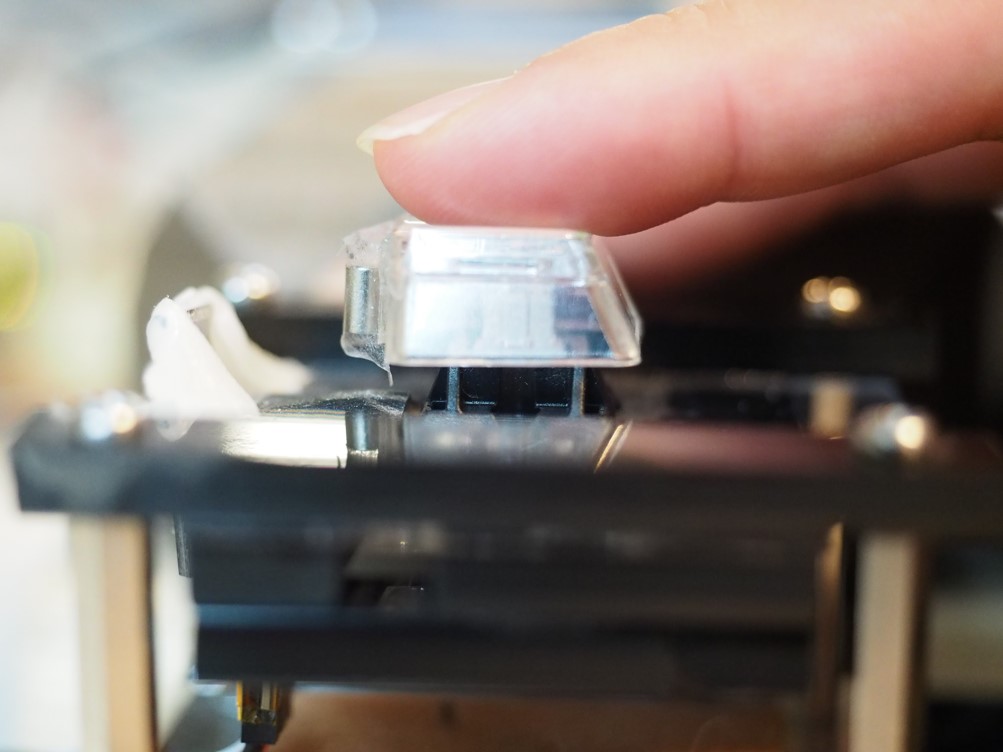

To test this hypothesis, the researchers created a new method for changing the way buttons are activated. The technique is called Impact Activation. Instead of activating the button at first contact, it activates it when the button cap or finger hits the floor with maximum impact.
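The difference between the two activation rules can be sketched on a sampled sensor trace. This is a simplified offline illustration, not the researchers' implementation: the signal values, the threshold, and both function names are hypothetical, and a real device would have to detect the peak as samples arrive.

```python
def first_contact_index(signal, threshold):
    """Index of the first sample at or above the threshold (regular activation)."""
    for i, value in enumerate(signal):
        if value >= threshold:
            return i
    return None


def impact_index(signal, threshold):
    """Index of the strongest sample within the first press (impact activation).

    The press is taken to be the first contiguous run of samples that
    stay at or above the threshold.
    """
    start = first_contact_index(signal, threshold)
    if start is None:
        return None
    peak = start
    for i in range(start, len(signal)):
        if signal[i] < threshold:
            break  # the finger has left the button; the press is over
        if signal[i] > signal[peak]:
            peak = i
    return peak


# Hypothetical impact-strength samples for one press (arbitrary units).
press = [0.0, 0.1, 0.4, 0.9, 1.6, 2.0, 1.2, 0.3, 0.0]

print("first contact at sample", first_contact_index(press, 0.3))
print("maximum impact at sample", impact_index(press, 0.3))
```

In this trace the regular rule fires as soon as the signal crosses the threshold, while the impact rule fires a few samples later, at the moment the sensation is strongest.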

The technique was 94% better in rapid tapping than the regular activation method for a push-button (Cherry MX switch) and 37% better than a regular touchscreen button using a capacitive touch sensor. The technique can be easily deployed in touchscreens. However, regular physical keyboards do not offer the required sensing capability, although special products exist (e.g., the Wooting keyboard) on which it can be implemented.

The simulations shed new light on what happens during a button press. One problem the brain must overcome is that muscles do not activate exactly as we intend, so every press is slightly different. Moreover, a button press is very fast, occurring within 100 milliseconds, which is too fast for the movement to be corrected. The key to understanding button-pressing is therefore to understand how the brain adapts based on the limited sensations that are the residue of the brief press event.

The researchers also used the simulation to explain differences among physical and touchscreen-based button types. Both physical and touch buttons provide clear tactile signals from the impact of the tip with the button floor. However, with the physical button this signal is more pronounced and longer.

"Where the two button types also differ is the starting height of the finger, and this makes a difference," explains Professor Lee. "When we pull up the finger from the touchscreen, it will end up at a different height every time. Its down-press cannot be as accurately controlled in time as with a push-button where the finger can rest on top of the key cap."

Three scientific articles, "Neuromechanics of a Button Press", "Impact activation improves rapid button pressing", and "Moving target selection: A cue integration model", will be presented at the CHI Conference on Human Factors in Computing Systems in Montréal, Canada, in April 2018.

2018.03.22 View 8478

Multi-Device Mobile Platform for App Functionality Sharing

Case 1. Mr. Kim, an employee, logged on to his SNS account using a tablet PC at the airport while traveling overseas. However, a malicious virus was installed on the tablet PC and some photos posted on his SNS were deleted by someone else.

Case 2. Mr. and Mrs. Brown are busy contacting credit card and game companies, because their son, who likes games, purchased a million dollars' worth of game items using his smartphone.

Case 3. Mr. Park, who enjoys games, bought a sensor-based racing game through his tablet PC. However, he could not enjoy the racing game on his tablet because it was not comfortable to tilt the device for game control.

The above cases are some of the various problems that can arise in modern society where diverse smart devices, including smartphones, exist. Recently, new technology has been developed to easily solve these problems. Professor Insik Shin from the School of Computing has developed ‘Mobile Plus,’ which is a mobile platform that can share the functionalities of applications between smart devices.

This is a novel technology that allows applications to easily share their functionalities without needing any modifications. Smartphone users often use Facebook to log in to another SNS account like Instagram, or use a gallery app to post some photos on their SNS. These examples are possible, because the applications share their login and photo management functionalities.

The functionality sharing enables users to utilize smartphones in various and convenient ways and allows app developers to easily create applications. However, current mobile platforms such as Android or iOS only support functionality sharing within a single mobile device. It is burdensome for both developers and users to share functionalities across devices because developers would need to create more complex applications and users would need to install the applications on each device.

To address this problem, Professor Shin’s research team developed platform technology to support functionality sharing between devices. The main concept is using virtualization to give the illusion that the applications running on separate devices are on a single device. They achieved this virtualization by extending an RPC (Remote Procedure Call) scheme to multi-device environments.
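The general idea of an RPC scheme can be sketched as a proxy that turns a local method call into a serialized request handled on another device. The example below is a minimal in-process illustration, not the Mobile Plus implementation or API: every class and method name here is hypothetical, and a direct function call stands in for the network link between devices.

```python
import json


class CameraService:
    """A functionality offered by an app on the remote device (hypothetical)."""

    def take_photo(self, resolution):
        return f"photo@{resolution}"


class RemoteDevice:
    """Stands in for a second device that receives serialized requests."""

    def __init__(self, services):
        self._services = services

    def handle(self, request_bytes):
        request = json.loads(request_bytes)
        service = self._services[request["service"]]
        result = getattr(service, request["method"])(*request["args"])
        return json.dumps({"result": result}).encode()


class RemoteProxy:
    """Looks like a local object but forwards each call to the remote device."""

    def __init__(self, device, service_name):
        self._device = device
        self._service_name = service_name

    def __getattr__(self, method):
        def call(*args):
            request = json.dumps(
                {"service": self._service_name, "method": method, "args": list(args)}
            ).encode()
            # In a real system this request would travel over a network connection.
            response = self._device.handle(request)
            return json.loads(response)["result"]

        return call


phone = RemoteDevice({"camera": CameraService()})
camera = RemoteProxy(phone, "camera")  # used on the tablet as if it were local
photo = camera.take_photo("1080p")
print(photo)
```

The calling code never sees the serialization step, which is the sense in which such a scheme can make functionality on another device appear to be local.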

This virtualization technology enables the existing applications to share their functionalities without needing any modifications, regardless of the type of applications. So users can now use them without additional purchases or updates. Mobile Plus can support hardware functionalities like cameras, microphones, and GPS as well as application functionalities such as logins, payments, and photo sharing. Its greatest advantage is its wide range of possible applications.

Professor Shin said, "Mobile Plus is expected to have great synergy with smart home and smart car technologies. It can provide novel user experiences (UXs) so that users can easily utilize various applications of smart home/vehicle infotainment systems by using a smartphone as their hub."

This research was published at ACM MobiSys, an international conference on mobile computing that was hosted in the United States on June 21.

Figure 1. Users can securely log on to SNS accounts by using their personal devices.

Figure 2. Parents can control impulse shopping of their children.

Figure 3. Users can enjoy games more by using the smartphone as a controller.

2017.08.09 View 11358

Students from Science Academies Shed a Light on KAIST

Recent KAIST statistics show that graduates from science academies distinguish themselves not only by their academic performance at KAIST but also in various professional careers after graduation.

Every year, approximately 20% of newly-enrolled students of KAIST are from science academies. In the case of the class of 2017, 170 students from science academies accounted for 22% of the newly-enrolled students. Moreover, they are forming a top-tier student group on campus. As shown in the table below, the ratio of students graduating early for either enrolling in graduate programs or landing a job indicates their excellent performance at KAIST.

There are eight science academies in Korea: Korea Science Academy of KAIST located in Busan, Seoul Science High School, Gyeonggi Science High School, Gwangju Science High School, Daejeon Science High School, Sejong Academy of Science and Arts, and Incheon Arts and Sciences Academy.

Recently, KAIST analyzed 532 university graduates from the class of 2012. It was found that 23 out of 63 graduates from science academies finished their degree early, giving this group an early graduation ratio of 36.5%. This percentage was significantly higher than that of students from other high schools.

Among the notable graduates is a student who made headlines with a donation of 30 million KRW to KAIST, the largest donation on record from an enrolled student. His story goes back to when Android smartphones were about to be distributed. Seung-Gyu Oh, then a student in the School of Electrical Engineering, felt that existing subway apps were inconvenient, so he developed his own subway app that navigated the nearest subway lines in 2015. His app hit the market and ranked second in the subway app category. It had approximately five million users, which generated advertising revenue. After the successful launch of the app, Oh accepted a takeover offer from Daum Kakao. He then donated 30 million KRW to his alma mater. “Since high school, I’ve always been thinking that I have received many benefits from my country and felt heavily responsible for it,” the alumnus of Korea Science Academy and KAIST said. “I decided to make a donation to my alma mater, KAIST, because I wanted to return what I had received from my country.” Since graduating, Oh has been working for the web firm Daum Kakao.

On May 24, 2017, the 41st International Collegiate Programming Contest, hosted by the Association for Computing Machinery (ACM) and sponsored by IBM, was held in Rapid City, South Dakota in the US. It is a prestigious contest that has been held annually since 1977. College students from around the world participate in this contest. In 2017, a total of 50,000 students from 2,900 universities in 104 countries participated in regional competitions, and approximately 400 students made it to the final round, entering into a fierce competition. KAIST students also participated in this contest. The team comprised Ji-Hoon Ko, Jong-Won Lee, and Han-Pil Kang from the School of Computing. They are also alumni of Gyeonggi Science High School. They received the ‘First Problem Solver’ award and a bronze medal, which came with a 3,000 USD cash prize.

Sung-Jin Oh, who also graduated from Korea Science Academy of KAIST, is a research professor at the Korea Institute of Advanced Study (KIAS). He is the youngest recipient of the ‘Young Scientist Award’, which he received at the age of 27 for mathematically proving a hypothesis from Einstein’s Theory of General Relativity. After graduating from KAIST, Oh earned his master’s and doctorate degrees from Princeton University, completed his post-doctoral fellowship at UC Berkeley, and is now immersing himself in research at KIAS.

Heui-Kwang Noh from the Department of Chemistry and Kang-Min Ahn from the School of Computing, who were selected to receive the presidential scholarship for science in 2014, both graduated from Gyeonggi Science High School. Noh was recognized for his outstanding academic capacity and was also chosen for the ‘GE Foundation Scholar-Leaders Program’ in 2015. The ‘GE Foundation Scholar-Leaders Program’, established in 1992 by the GE Foundation, aims at fostering talented students. This program is for post-secondary students who have both creativity and leadership. It selects five outstanding students and provides 3 million KRW per annum for a maximum of three years.

The grantees of this program have become influential people in various fields, including professors, executives, staff members of national/international firms, and researchers, and they are making a huge contribution to the development of engineering and science. Noh continues to pursue various activities, including the completion of his internship at ‘Harvard-MIT Biomedical Optics’ and the publication of a paper (3rd author) in ACS Omega, a journal of the American Chemical Society (ACS).

Ahn, a member of the Young Engineers Honor Society (YEHS) of the National Academy of Engineering of Korea, had an interest in startup businesses. In 2015, he founded DataStorm, a firm specializing in developing data solutions, and merged it with a cloud back-office firm, Jobis & Villains, in 2016. Ahn is continuing his business activities, and this year he founded, and is successfully running, cocKorea.

“KAIST students whose alma maters are science academies form a top-tier group on campus and produce excellent performance,” said Associate Vice President for Admissions, Hayong Shin. “KAIST is making every effort to assist these students so that they can perform to the best of their ability.”

(Clockwise from top left: Seung-Gyu Oh, Sung-Jin Oh, Heui-Kwang Noh and Kang-Min Ahn)

2017.08.09 View 11219

Students from Science Academies Shed a Light on KAIST

Recent KAIST statistics show that graduates from science academies distinguish themselves not only by their academic performance at KAIST but also in various professional careers after graduation.

Every year, approximately 20% of newly-enrolled students of KAIST are from science academies. In the case of the class of 2017, 170 students from science academies accounted for 22% of the newly-enrolled students. Moreover, they are forming a top-tier student group on campus. As shown in the table below, the ratio of students graduating early for either enrolling in graduate programs or landing a job indicates their excellent performance at KAIST.

There are eight science academies in Korea: Korea Science Academy of KAIST located in Busan, Seoul Science High School, Gyeonggi Science High School, Gwangju Science High School, Daejeon Science High School, Sejong Academy of Science and Arts, and Incheon Arts and Sciences Academy.

Recently, KAIST analyzed 532 university graduates from the class of 2012. It was found that 23 out of 63 graduates with the alma mater of science academies finished their degree early; as a result, the early graduation ratio of the class of 2012 stood at 36.5%. This percentage was significantly higher than that of students from other high schools.

Among the notable graduates, there was a student who made headlines with donation of 30 million KRW to KAIST. His donation was the largest donation from an enrolled student on record. His story goes back when Android smartphones were about to be distributed. Seung-Gyu Oh, then a student in the School of Electrical Engineering felt that existing subway apps were inconvenient, so he invented his own subway app that navigated the nearest subway lines in 2015. His app hit the market and ranked second in the subway app category. It had approximately five million users, which led to it generating advertising revenue. After the successful launch of the app, Oh accepted the takeover offered by Daum Kakao. He then donated 30 million KRW to his alma mater. “Since high school, I’ve always been thinking that I have received many benefits from my country and felt heavily responsible for it,” the alumnus of Korea Science of Academy and KAIST said. “I decided to make a donation to my alma mater, KAIST because I wanted to return what I had received from my country.” After graduation, Oh is now working for the web firm, Daum Kakao.

In May 24, 2017, the 41st International Collegiate Programming Contest, hosted by Association for Computing Machinery (ACM) and sponsored by IBM, was held in Rapid City, South Dakota in the US. It is a prestigious contest that has been held annually since 1977. College students from around the world participate in this contest; and in 2017, a total of 50,000 students from 2,900 universities in 104 countries participated in regional competitions, and approximately 400 students made it to the final round, entering into a fierce competition. KAIST students also participated in this contest. The team was comprised of Ji-Hoon Ko, Jong-Won Lee, and Han-Pil Kang from the School of Computing. They are also alumni of Gyeonggi Science High School. They received the ‘First Problem Solver’ award and a bronze medal which came with a 3,000 USD cash prize.

Sung-Jin Oh, who also graduated from Korea Science Academy of KAIST, is a research professor at the Korea Institute of Advanced Study (KIAS). He is the youngest recipient of the ‘Young Scientist Award’, which he received by proving a hypothesis from Einstein’s Theory of General Relativity mathematically at the age of 27. After graduating from KAIST, Oh earned his master’s and doctorate degrees from Princeton University, completed his post-doctoral fellow at UC Berkeley, and is now immersing himself in research at KIAS.

Heui-Kwang Noh from the Department of Chemistry and Kang-Min Ahn from the School of Computing, who were selected to receive the presidential scholarship for science in 2014, both graduated from Gyeonggi Science High School. Noh was recognized for his outstanding academic capacity and was also chosen for the ‘GE Foundation Scholar-Leaders Program’ in 2015. The ‘GE Foundation Scholar-Leaders Program’, established in 1992 by the GE Foundation, aims at fostering talented students. This program is for post-secondary students who have both creativity and leadership. It selects five outstanding students and provides 3 million KRW per annum for a maximum of three years.

The grantees of this program have become influential people in various fields, including professors, executives, staff members of national/international firms, and researchers. And they are making a huge contribution to the development of engineering and science. Noh continues doing various activities, including the completion of his internship at ‘Harvard-MIT Biomedical Optics’ and the publication of a paper (3rd author) for the ACS Omega of American Chemical Society (ACS).

Ahn, a member of the Young Engineers Honor Society (YEHS) of the National Academy of Engineering of Korea, had an interest in startup businesses. In 2015, he founded DataStorm, a firm specializing in developing data solution, and merged with a cloud back-office, Jobis & Villains, in 2016. Ahn is continuing his business activities and this year he founded, and is successfully running, cocKorea.

“KAIST students whose alma maters are science academies form a top-tier group on campus and perform excellently,” said Associate Vice President for Admissions, Hayong Shin. “KAIST is making every effort to assist these students so that they can perform to the best of their ability.”

(Clockwise from top left: Seung-Gyu Oh, Sung-Jin Oh, Heui-Kwang Noh and Kang-Min Ahn)

2017.08.09 View 11219 -

KAIST Team Wins Bronze Medal at Int'l Programming Contest

A KAIST team consisting of undergraduate students from the School of Computing and Department of Mathematical Science received a bronze medal and the First Problem Solver award at an international undergraduate programming competition, the Association for Computing Machinery-International Collegiate Programming Contest (ACM-ICPC) World Finals.

The 41st ACM-ICPC, hosted by ACM and funded by IBM, was held in South Dakota in the US on May 25. The competition, first held in 1977, is aimed at undergraduate students from around the world. A total of 50,000 students from 2,900 universities in 103 countries participated in the regional competitions, and 400 students competed in the finals.

The competition required teams of three to solve 12 problems. The KAIST team was coached by Emeritus Professor Sung-Yong Shin and Professor Taisook Han. The student contestants were Jihoon Ko and Hanpil Kang from the School of Computing and Jongwoon Lee from the Department of Mathematical Science. The team finished in 9th place, receiving a bronze medal and a $3,000 prize. Additionally, the team was the first to solve all the problems and received the First Problem Solver award. Detailed score information can be found at https://icpc.baylor.edu/scoreboard/

(Photo caption: Professor Taisook Han and his students)

2017.06.12 View 12391 -

Professor Otfried Cheong Named as Distinguished Scientist by ACM

Professor Otfried Cheong (Schwarzkopf) of the School of Computing was named a Distinguished Scientist of 2016 by the Association for Computing Machinery (ACM).

The ACM recognized 45 Distinguished Members in the categories of Distinguished Scientist, Educator, and Engineer for their individual contributions to the field of computing. Professor Cheong is the sole recipient from a Korean institution. The recipients were selected from among the top 10 percent of ACM members with at least 15 years of professional experience and five years of continuous professional membership.

He is known as one of the authors of the widely used computational geometry textbook Computational Geometry: Algorithms and Applications and as the developer of Ipe, a vector graphics editor. Professor Cheong joined KAIST in 2005, after earning his doctorate from the Free University of Berlin in 1992. He previously taught at Utrecht University, Pohang University of Science and Technology, Hong Kong University of Science and Technology, and the Eindhoven University of Technology.

2017.04.17 View 9962 -

Improving Traffic Safety with a Crowdsourced Traffic Violation Reporting App

KAIST researchers revealed that crowdsourced traffic violation reporting with smartphone-based continuous video capturing can dramatically change the current practice of policing activities on the road and will significantly improve traffic safety.

Professor Uichin Lee of the Department of Industrial and Systems Engineering and the Graduate School of Knowledge Service Engineering at KAIST and his research team designed and evaluated Mobile Roadwatch, a mobile app that helps citizens record traffic violations with their smartphones and report the recorded videos to the police.

This app supports continuous video recording just like onboard vehicle dashboard cameras. Mobile Roadwatch allows drivers to safely capture traffic violations by simply touching a smartphone screen while driving. The captured videos are automatically tagged with contextual information such as location and time. This information will be used as important evidence for the police to ticket the violators. All of the captured videos can be conveniently reviewed, allowing users to decide which events to report to the police.

The team conducted a two-week field study to understand how drivers use Mobile Roadwatch. They found that the drivers tended to capture all traffic risks regardless of the level of their involvement and the seriousness of the traffic risks. However, when it came to actual reporting, they tended to report only serious traffic violations, which could have led to car accidents, such as traffic signal violations and illegal U-turns. After receiving feedback about their reports from the police, drivers typically felt very good about their contributions to traffic safety.

At the same time, some drivers felt pleased to know that the offenders received tickets since they thought these offenders deserved to be ticketed. While participating in the Mobile Roadwatch campaign, drivers reported that they tried to drive as safely as possible and abide by traffic laws. This was because they wanted to be as fair as possible so that they could capture others’ violations without feeling guilty. They were also afraid that other drivers might capture their violations.

Professor Lee said, “Our study participants answered that Mobile Roadwatch served as a very useful tool for reporting traffic violations, and they were highly satisfied with its features. Beyond simple reporting, our tool can be extended to support online communities, which help people actively discuss various local safety issues and work with the police and local authorities to solve these safety issues.”

Korea and India were early adopters in supporting video-based reporting of traffic violations to the police. In recent years, the number of reports has dramatically increased. For example, Korea’s ‘Looking for a Witness’ (released in April 2015) received more than half a million reported violations as of November 2016. In the US, authorities started tapping into smartphone recordings by releasing video-based reporting apps such as ICE Blackbox and Mobile Justice. Professor Lee said that the existing services cannot be used while driving, because none of them supports continuous video recording and safe event capturing behind the wheel.

Professor Lee’s team has been incorporating advanced computer vision techniques into Mobile Roadwatch for automatically capturing traffic violations and safety risks, including potholes and obstacles. The researchers will present their results in May at the ACM CHI Conference on Human Factors in Computing Systems (CHI 2017) in Denver, CO, USA. Their research was supported by the KAIST-KUSTAR fund.

(Caption: A driver is trying to capture an event by touching a screen. Mobile Roadwatch supports continuous video recording and safe event capturing behind the wheel.)

2017.04.10 View 12351 -

Furniture That Learns to Move by Itself