AI

‘Realizing the Dream Beyond Limits’… KAIST Space Institute opens

“KAIST Space Institute will present a new paradigm for space research and education, foster creative talents, and become a space research center to lead the advancement of national space initiatives” (KAIST President Kwang-Hyung Lee)

< Vision Declaration Ceremony Photo during Space Research Institute Opening Ceremony >

KAIST (President Kwang-Hyung Lee) has opened the ‘Space Institute’, which brings together all of KAIST’s space technology capabilities to realize humanity's dream of reaching higher into space. The opening ceremony was held on September 30th in the main auditorium in Daejeon and included a vision declaration ceremony and special lectures.

KAIST Space Institute is a newly established organization for space missions and convergence/core technology research that can lead the new space era. A promotion team was set up in September 2022 to plan the specifics, and the institute was officially established as an organization within the university in April of this year, leading up to this opening ceremony.

< Group Commemorative Photo during Space Research Institute Opening Ceremony >

Under the slogan ‘Realizing the Dream Beyond Limits,’ the organization will carry out projects to realize its vision of ▴ conducting first-of-its-kind space research that expands the horizons of human life, ▴ presenting a new paradigm for space research and education as a globally leading university space research cluster, ▴ contributing to domestic space technology innovation and the establishment of a space industry ecosystem through the verification and development of challenging space missions, and ▴ supporting sustainable national space development by fostering creative talents to lead space convergence.

To this end, the space research organizations that have been operated independently by various departments have been integrated and reorganized under the name of the Space Institute, and the following subordinate organizations will be established in the future: ▴ Artificial Satellite Research Institute, ▴ Space Technology Innovation Talent Training Center, ▴ Space Core Technology Research Institute, and ▴ Space Convergence Technology Research Institute.

At the same time, ▴ Hanwha Space Hub-KAIST Space Research Center, ▴ Perigee-KAIST Rocket Research Center, and ▴ Future Space Education Center will be reorganized under the Space Institute to pool their capabilities.

< President Kwang-Hyung Lee giving a welcoming speech at Space Research Institute Opening Ceremony >

The Satellite Research Institute, a subordinate organization, successfully developed and launched Korea’s first mass-produced ‘ultra-small cluster satellite 1’ in April of this year. It is currently conducting active research with the goal of launching a satellite in 2027 to verify active control technology for space objects.

The first special lecture was given by Professor Se-jin Kwon of the Department of Aerospace Engineering on the topic of ‘KAIST Space Exploration Journey and Vision.’ The lecture looked back on KAIST's space development history and presented the institute's future research and development directions and operational plans.

Next, Professor Daniel J. Scheeres, who was appointed as Co-director of the Space Institute and a Visiting Professor of KAIST Department of Aerospace Engineering, delivered a message on the topic of ‘The Future of Asteroid Exploration.’ He talked about preventing asteroid collisions, and lectured on the spirit of challenge for continuous exploration and future research issues.

Co-director Scheeres is a leading scholar in the fields of space engineering and celestial mechanics who was invited to lead the KAIST Space Institute’s international cooperation activities. He is well known as a key researcher in asteroid research, including the ‘DART’ mission, in which the National Aeronautics and Space Administration (NASA) experimented with changing the trajectory of an asteroid by colliding a spacecraft with it.

After its full-fledged opening, the Space Institute will be operated as an open organization where domestic and foreign experts as well as KAIST students can freely participate in research and education.

< Director Jae-heung Han introducing the organization at Space Research Institute Opening Ceremony >

KAIST Space Institute Director Jae-heung Han said, “On the 10th anniversary of the death of the late Dr. Soon-dal Choi, who helped establish space technology in Korea, we have established KAIST Space Institute to inherit the spirit of ‘Uri-Byul’, our first satellite, and develop subsequent achievements.”

He also said, “With a sense of mission to challenge limitations and venture into the greater unknown, we will strive to strengthen our space research and development capabilities and our global status as a leading aerospace country.”

2024.09.30 View 3354

Professor Joseph J. Lim of KAIST receives the Best System Paper Award from RSS 2023, First in Korea

- Professor Joseph J. Lim from the Kim Jaechul Graduate School of AI at KAIST and his team receive an award for the most outstanding paper in the implementation of robot systems.

- Professor Lim works on AI-based perception, reasoning, and sequential decision-making to develop systems capable of intelligent decision-making, including robot learning.

< Photo 1. RSS2023 Best System Paper Award Presentation >

The team of Professor Joseph J. Lim from the Kim Jaechul Graduate School of AI at KAIST has been honored with the 'Best System Paper Award' at "Robotics: Science and Systems (RSS) 2023".

The RSS conference is globally recognized as a leading event for showcasing the latest discoveries and advancements in the field of robotics. It is a venue where the greatest minds in robotics engineering and robot learning come together to share their research breakthroughs. The RSS Best System Paper Award is a prestigious honor granted to a paper that excels in presenting real-world robot system implementation and experimental results.

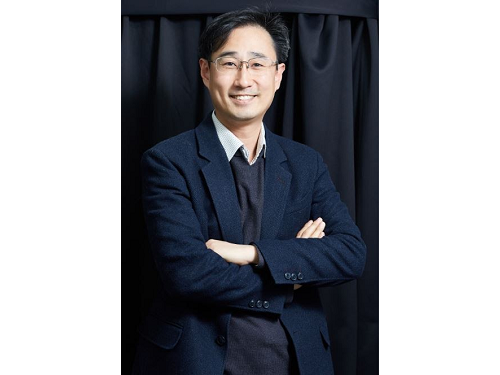

< Photo 2. Professor Joseph J. Lim of Kim Jaechul Graduate School of AI at KAIST >

The team led by Professor Lim, including two Master's students and an alumnus (soon to be appointed at Yonsei University), received the prestigious RSS Best System Paper Award. This is the first time the award has gone to a Korean researcher or to a Korean institution.

< Photo 3. Certificate of the Best System Paper Award presented at RSS 2023 >

This award is especially meaningful considering the broader challenges in the field. Although recent progress in artificial intelligence and deep learning algorithms has resulted in numerous breakthroughs in robotics, most of these achievements have been confined to relatively simple and short tasks, like walking or pick-and-place. Moreover, tasks are typically performed in simulated environments rather than dealing with more complex, long-horizon real-world tasks such as factory operations or household chores. These limitations primarily stem from the considerable challenge of acquiring data required to develop and validate learning-based AI techniques, particularly in real-world complex tasks.

In light of these challenges, this paper introduced a benchmark that employs 3D printing to simplify the reproduction of furniture assembly tasks in real-world environments. Furthermore, it proposed a standard benchmark for the development and comparison of algorithms for complex and long-horizon tasks, supported by teleoperation data. Ultimately, the paper suggests a new research direction of addressing complex and long-horizon tasks and encourages diverse advancements in research by facilitating reproducible experiments in real-world environments.

Professor Lim underscored the growing potential for integrating robots into daily life, driven by an aging population and an increase in single-person households. As robots become part of everyday life, testing their performance in real-world scenarios becomes increasingly crucial. He hoped this research would serve as a cornerstone for future studies in this field.

The Master's students, Minho Heo and Doohyun Lee, from the Kim Jaechul Graduate School of AI at KAIST, also shared their aspirations to become global researchers in the domain of robot learning. Meanwhile, the alumnus of Professor Lim's research lab, Dr. Youngwoon Lee, is set to be appointed to the Graduate School of AI at Yonsei University and will continue pursuing research in robot learning.

Paper title: FurnitureBench: Reproducible Real-World Benchmark for Long-Horizon Complex Manipulation. Robotics: Science and Systems (RSS), 2023.

< Image. Conceptual Summary of the 3D Printing Technology >

2023.07.31 View 10606

A KAIST Research Team Identifies a Cancer Reversion Mechanism

Despite decades of intensive cancer research by numerous biomedical scientists, cancer remains the number one cause of death in Korea. The fundamental limitation of current cancer treatments is that they all aim to completely destroy cancer cells, which eventually allows the cancer cells to acquire resistance. As a result, recurrence is inevitable, as are side effects caused by the destruction of healthy cells. To address this, some have suggested anticancer treatments based on cancer reversion, which would revert cancer cells to normal or near-normal cells under certain conditions. However, the practical development of this idea has not yet been attempted.

On June 8, a KAIST research team led by Professor Kwang-Hyun Cho from the Department of Bio and Brain Engineering reported that it had identified the fundamental principle of a process that can revert cancer cells back to normal cells without killing them.

Professor Cho’s team focused on the fact that unlike normal cells, which react according to external stimuli, cancer cells tend to ignore such stimuli and only undergo uncontrolled cell division. Through computer simulation analysis, the team discovered that the input-output (I/O) relationships that were distorted by genetic mutations could be reverted back to normal I/O relationships under certain conditions. The team then demonstrated through molecular cell experiments that such I/O relationship recovery also occurred in real cancer cells.
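The idea of a distorted and then restored I/O relationship can be illustrated with a toy Boolean network. The sketch below is only an assumed, simplified illustration and is not the team's model: the node names (`I`, `R`, `K`, `X`, `O`) and their update rules are hypothetical. An external signal `I` normally switches on the output `O`; a mutation that locks `K` on makes the output ignore the signal; pinning the downstream node `X` off restores the original I/O relationship without removing the mutation.

```python
from typing import Callable, Dict, Optional

State = Dict[str, int]

# Hypothetical update rules for a four-node toy network (not from the paper).
RULES: Dict[str, Callable[[State], int]] = {
    "R": lambda s: s["I"],                      # receptor follows the external signal
    "K": lambda s: 0,                           # kinase is off unless mutated
    "X": lambda s: s["K"],                      # effector follows the kinase
    "O": lambda s: int(s["R"] and not s["X"]),  # output needs R on and X off
}


def steady_output(signal: int, pinned: Optional[State] = None, max_steps: int = 20) -> int:
    """Update all nodes synchronously until the state stops changing; return O."""
    pinned = pinned or {}
    state = {"I": signal, "R": 0, "K": 0, "X": 0, "O": 0}
    state.update(pinned)
    for _ in range(max_steps):
        nxt = {"I": signal}
        for node, rule in RULES.items():
            # A pinned node models a mutation or a control intervention.
            nxt[node] = pinned.get(node, rule(state))
        if nxt == state:
            break
        state = nxt
    return state["O"]


def io_relationship(pinned: Optional[State] = None) -> Dict[int, int]:
    """Map each input value (0 or 1) to the steady-state output."""
    return {signal: steady_output(signal, pinned) for signal in (0, 1)}


normal = io_relationship()                     # output follows the signal
mutant = io_relationship({"K": 1})             # K locked on: output ignores the signal
reverted = io_relationship({"K": 1, "X": 0})   # inhibit X while the mutation remains

print(normal, mutant, reverted)
```

In this toy case the reverted network reproduces the normal I/O table even though the mutation is still present, which is the sense in which the cell is controlled rather than killed.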

The results of this study, written by Dr. Jae Il Joo and Dr. Hwa-Jeong Park, were published in Wiley’s Advanced Science online on June 2 under the title, "Normalizing input-output relationships of cancer networks for reversion therapy."

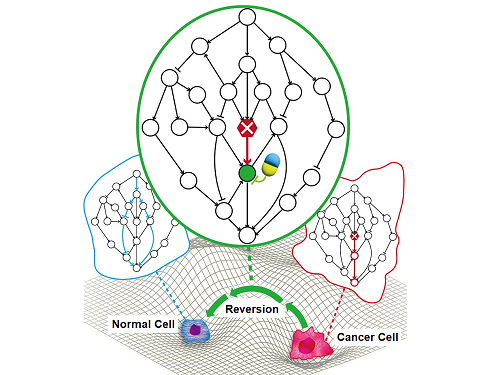

< Image 1. Input-output (I/O) relationships in gene regulatory networks >

Professor Kwang-Hyun Cho's research team classified genes into four types by simulation-analyzing the effect of gene mutations on the I/O relationship of gene regulatory networks. (Figure A-J) In addition, by analyzing 18 genes of the cancer-related gene regulatory network, it was confirmed that when mutations occur in more than half of the genes constituting each network, reversibility is possible through appropriate control. (Figure K)

Professor Cho’s team uncovered that the reason the distorted I/O relationships of cancer cells could be reverted back to normal ones was the robustness and redundancy of intracellular gene control networks that developed over the course of evolution. In addition, they found that some genes were more promising as targets for cancer reversion than others, and showed through molecular cell experiments that controlling such genes could revert the distorted I/O relationships of cancer cells back to normal ones.

< Image 2. Simulation results of restoration of bladder cancer gene regulation network and I/O relationship of bladder cancer cells. >

Using simulation analysis, the research team classified the effects of gene mutations on the I/O relationship in the bladder cancer gene regulation network into four types. (Figure A) Through this, it was found that the distorted input-output relationships of the bladder cancer cell lines KU-1919 and HCT-1197 could be restored to normal. (Figure B)

< Image 3. Analysis of survival of bladder cancer patients according to reversible gene mutation and I/O recovery experiment of bladder cancer cells. >

As predicted through network simulation analysis, Professor Kwang-Hyun Cho's research team confirmed through molecular cell experiments that the response to TGF-β was restored to normal when AKT and MAP3K1 were inhibited in the bladder cancer cell line KU-1919. (Figure A-G) In addition, it was confirmed that the survival rate of bladder cancer patients differs depending on the presence or absence of a reversible gene mutation. (Figure H)

The results of this research show that the reversion of real cancer cells does not happen by chance, and that it is possible to systematically explore targets that can induce this phenomenon, thereby creating the potential for the development of innovative anticancer drugs that can control such target genes.

< Image 4. Cancer cell reversibility principle >

The research team analyzed the reversibility, redundancy, and robustness of various networks and found a positive correlation among them. This indicates that reversibility arose as an additional, inherent property during the evolutionary process in which gene regulatory networks acquired redundancy and robustness.

Professor Cho said, “By uncovering the fundamental principles of a new cancer reversion treatment strategy that may overcome the unresolved limitations of existing chemotherapy, we have increased the possibility of developing new and innovative drugs that can improve both the prognosis and quality of life of cancer patients.”

< Image 5. Conceptual diagram of research results >

The research team identified the fundamental control principle of cancer cell reversibility through systems biology research. When the I/O relationship of the intracellular gene regulatory network is distorted by mutation, the distorted I/O relationship can be restored to a normal state by identifying and adjusting the reversible gene target based on the redundancy of the molecular circuit inherent in the complex network.

After Professor Cho’s team first suggested the concept of reversion treatment, they published their results on reverting colorectal cancer in January 2020, and in January 2022 they successfully reprogrammed malignant breast cancer cells back into hormone-treatable ones. In January 2023, the team successfully removed the metastatic ability of lung cancer cells and reverted them to a state with improved drug responsiveness. However, these results were case studies of specific types of cancer and did not reveal what common principle allows cancer reversion across all cancer types. The present study is the first to reveal the general principle of cancer reversion and its evolutionary origins.

This research was funded by the Ministry of Science and ICT of the Republic of Korea and the National Research Foundation of Korea.

2023.06.20 View 11839

The cause of dysfunction in aged brain meningeal membranes identified

As the average age of the population increases, changes in the brain that accompany normal aging, in the absence of serious brain disease, have become a subject requiring in-depth study. As aging progresses, ‘sugar’ accumulates in the body, and the accumulated sugar becomes a causative agent of various conditions such as aging-related inflammation and vascular disease. Ultimately, “surplus” sugar molecules attach to various proteins in the body and interfere with their functions.

A joint research team at KAIST (President Kwang Hyung Lee), led by Professor Pilnam Kim and Professor Yong Jeong of the Department of Bio and Brain Engineering, announced on the 15th that the brain meninges, the layers of membranes that surround the brain, lose their function as the “front line of defense” for the cerebral cortex when 'sugar' builds up on them as aging progresses.

Professor Kim's research team confirmed excessive accumulation of sugar molecules in the meninges of elderly people and also confirmed that sugar accumulation occurs in mouse models as they age. The meninges are thin membranes that surround the brain, lie at the boundary between the cerebrospinal fluid and the cortex, and play an important role in protecting the brain. This study revealed that the age-related dysfunction of these membranes is induced by 'excess' sugar in the brain. In particular, the finding that the meningeal membrane becomes thinner and stickier with aging offers a new explanation for the decrease in material exchange between the cerebrospinal fluid and the cerebral cortex.

This research, with Ph.D. candidate Hyo Min Kim and Dr. Shinheun Kim as co-first authors, was published online on February 28th in the international journal Aging Cell. (Paper Title: Glycation-mediated tissue-level remodeling of brain meningeal membrane by aging)

The meninges, which are in direct contact with the cerebrospinal fluid, are mainly composed of collagen, an extracellular matrix (ECM) protein, and contain fibroblasts, the cells that produce this protein. Fibroblasts that come in contact with sugar-bound collagen produce less collagen, and as the expression of collagen-degrading enzymes increases, the meningeal membrane continuously thins and collapses.

Studies on the relationship between the accumulation of excess sugar molecules in the brain due to continued sugar intake and the degeneration of neurons and brain diseases have been conducted continuously. However, this study was the first to focus on the meninges themselves and identify meningeal degeneration and dysfunction caused by glucose accumulation, and the results are expected to suggest new directions for research into treatments for brain disease.

Researcher Hyomin Kim, the first author, introduced the research results as “an interesting study that identified changes in the barriers of the brain due to aging through a convergent approach, starting from the human brain and utilizing an animal model with a biomimetic meningeal model”.

Professor Pilnam Kim's research team is conducting research and development to remove sugar that has accumulated throughout the human body, including the meninges. Advanced glycation end products, which are waste products formed when proteins and sugars meet in the human body, are partially removed by macrophages. However, glycated products bound to extracellular matrix proteins such as collagen are difficult to remove naturally. Through the KAIST-Ceragem Research Center, the research team is developing a healthcare medical device to remove 'sugar residue' in the body.

This study was carried out with the National Research Foundation of Korea's collective research support.

Figure 1. Schematic diagram of proposed mechanism showing aging‐related ECM remodeling through meningeal fibroblasts on the brain leptomeninges. Meningeal fibroblasts in the young brain showed dynamic COL1A1 synthetic and COL1‐interactive function on the collagen membrane. They showed ITGB1‐mediated adhesion on the COL1‐composed leptomeningeal membrane and induction of COL1A1 synthesis for maintaining the collagen membrane. With aging, meningeal fibroblasts showed depletion of COL1A1 synthetic function and altered cell–matrix interaction.

Figure 2. Representative rat meningeal images observed in the study. Compared to young rats, the brain membrane of aged rats showed a decrease in type 1 collagen (COL1) along with the accumulation of advanced glycation end products (AGE), as well as a decrease in the activity of integrin beta 1 (ITGB1), a representative receptor for cell-collagen interaction. Instead, the activity of discoidin domain receptor 2 (DDR2), one of the tyrosine kinases, was observed to increase.

Figure 3. Substance flux through the brain membrane decreases with aging. It was confirmed that the degree of adsorption of fluorescent substances contained in cerebrospinal fluid (CSF) to the brain membrane increased and the degree of entry into the periphery of the cerebral blood vessels decreased in the aged rats. In this study, only the influx into the brain was confirmed during the entry and exit of substances, but the degree of outflow will also be confirmed through future studies.

2023.03.15 View 9409

KAIST team develops smart immune system that can pin down malignant tumors

A joint research team led by Professor Jung Kyoon Choi of the KAIST Department of Bio and Brain Engineering and Professor Jong-Eun Park of the KAIST Graduate School of Medical Science and Engineering (GSMSE) announced the development of key technologies for treating cancers using smart immune cells designed based on AI and big data analysis. This technology is expected to be a next-generation immunotherapy that allows precision targeting of tumor cells by having the chimeric antigen receptors (CARs) operate through a logic circuit. Professor Hee Jung An of CHA Bundang Medical Center and Professor Hae-Ock Lee of the Catholic University of Korea also took part in this joint research.

Professor Jung Kyoon Choi’s team built a gene expression database from millions of cells, and used it to develop and verify a deep-learning algorithm that detects the differences in gene expression patterns between tumor cells and normal cells and expresses them as a logic circuit. CAR immune cells fitted with the logic circuits discovered through this methodology could distinguish between tumor cells and normal cells as a computer would, and therefore showed the potential to strike only tumor cells accurately without causing unwanted side effects.

This research, conducted by co-first authors Dr. Joonha Kwon of the KAIST Department of Bio and Brain Engineering and Ph.D. candidate Junho Kang of KAIST GSMSE, was published by Nature Biotechnology on February 16, under the title Single-cell mapping of combinatorial target antigens for CAR switches using logic gates.

Immunotherapy is the area of cancer research in which the most attempts and advances have been made in recent years. This field of treatment, which uses the patient’s own immune system to overcome cancer, includes several methods such as immune checkpoint inhibitors, cancer vaccines, and cellular treatments. In particular, immune cells such as CAR-T or CAR-NK cells, which are equipped with chimeric antigen receptors, can recognize cancer antigens and directly destroy cancer cells.

Building on the success of CAR cell therapy in blood cancer treatment, scientists have been trying to extend it to solid cancers. However, it has been difficult to develop CAR cells that kill solid cancer cells effectively while minimizing side effects. Accordingly, in recent years, smarter CAR engineering technologies that use computational logic gates such as AND, OR, and NOT to target cancer cells effectively have been under development.

Against this backdrop, the research team built a large-scale database of cancer and normal cells to discover, at the single-cell level, the exact genes that are expressed only in cancer cells. The team followed this up by developing an AI algorithm that searches for the combination of genes that best distinguishes cancer cells from normal cells. In particular, this algorithm was used to find a logic circuit that can specifically target cancer cells through cell-level simulations of all gene combinations. CAR-T cells equipped with logic circuits discovered through this methodology are expected to distinguish cancerous cells from normal cells as a computer would, thereby minimizing side effects and maximizing the therapeutic effect.
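The idea of searching gene combinations for a logic circuit can be illustrated with a toy sketch. The code below is not the team's method (the article describes a deep-learning algorithm trained on millions of cells); it is a brute-force search over made-up binary expression data, and the gene names `A`, `B`, `C`, the gate set, and the scoring rule (fraction of tumor cells hit minus fraction of normal cells hit) are all assumptions chosen for illustration.

```python
from itertools import permutations

# Toy data, invented for illustration: each cell records whether a gene is expressed.
TUMOR_CELLS = [
    {"A": True, "B": True, "C": False},
    {"A": True, "B": True, "C": False},
    {"A": True, "B": False, "C": False},
]
NORMAL_CELLS = [
    {"A": True, "B": False, "C": True},
    {"A": False, "B": True, "C": True},
    {"A": False, "B": False, "C": False},
]

# Hypothetical two-input gates; AND_NOT means "first gene present, second absent".
GATES = {
    "AND": lambda x, y: x and y,
    "OR": lambda x, y: x or y,
    "AND_NOT": lambda x, y: x and not y,
}


def fraction_on(cells, gate, g1, g2):
    """Fraction of cells for which the gate fires on genes g1 and g2."""
    return sum(gate(cell[g1], cell[g2]) for cell in cells) / len(cells)


def best_circuit(tumor, normal):
    """Exhaustively score every gate over every ordered gene pair."""
    genes = sorted(tumor[0])
    best = None
    for g1, g2 in permutations(genes, 2):
        for name, gate in GATES.items():
            # Assumed score: reward tumor coverage, penalize hits on normal cells.
            score = fraction_on(tumor, gate, g1, g2) - fraction_on(normal, gate, g1, g2)
            if best is None or score > best[0]:
                best = (score, name, g1, g2)
    return best


print(best_circuit(TUMOR_CELLS, NORMAL_CELLS))  # (1.0, 'AND_NOT', 'A', 'C')
```

In this toy data no single gene separates the two groups, but the circuit "A AND NOT C" fires on every tumor cell and on no normal cell, which is the kind of combinatorial target a logic-gated CAR is meant to exploit.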

Dr. Joonha Kwon, the first author of this paper, said, “This research suggests a new method that hasn’t been tried before. What’s particularly noteworthy is the process in which we found the optimal CAR cell circuit through simulations of millions of individual tumor and normal cells.” He added, “This is an innovative technology that can apply AI and computer logic circuits to immune cell engineering. It would contribute greatly to expanding CAR therapy, which is being successfully used for blood cancer, to solid cancers as well.”

This research was funded by the Original Technology Development Project and Research Program for Next Generation Applied Omic of the Korea Research Foundation.

Figure 1. A schematic diagram of manufacturing and administration process of CAR therapy and of cancer cell-specific dual targeting using CAR.

Figure 2. Deep learning (convolutional neural networks, CNNs) algorithm for selection of dual targets based on gene combination (left) and algorithm for calculating expressing cell fractions by gene combination according to logical circuit (right).

2023.03.09 View 11238

KAIST researchers discover the neural circuit that reacts to an alarm clock

KAIST (President Kwang Hyung Lee) announced on the 20th that a research team led by Professor Daesoo Kim of the Department of Brain and Cognitive Sciences and Dr. Jeongjin Kim's team from the Korea Institute of Science and Technology (KIST) have identified the principle by which animals wake up in response to sounds even while sleeping.

Sleep is a very important physiological process that organizes brain activity and maintains health. During sleep, the function of sensory nerves is blocked, so the ability to detect nearby danger is reduced. However, many animals detect approaching predators and respond even while sleeping. Scientists thought that animals stay ready for danger by alternating between deep sleep and light sleep.

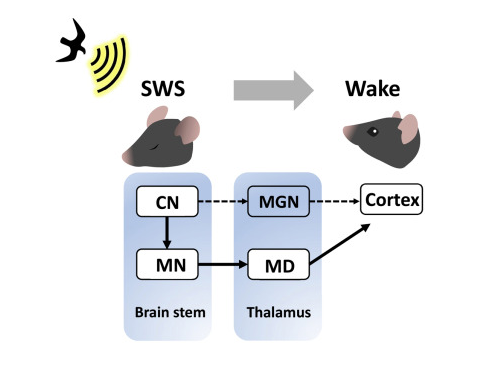

A research team led by Professor Daesoo Kim at KAIST discovered that animals have neural circuits that respond to sounds even during deep sleep. While awake, the medial geniculate thalamus responds to sounds, but during deep sleep, or non-REM sleep, the mediodorsal thalamus responds to sounds to wake up the brain.

The study found that when the rats fell into deep sleep, the nerves of the medial geniculate thalamus were also sleeping, but the nerves of the mediodorsal thalamus were awake and responded immediately to sounds. In addition, when the mediodorsal thalamus was inhibited, the rats could not wake up even when a sound was played, and when the mediodorsal thalamus was stimulated, the rats woke up within a few seconds without any sound.

This is the first study to show that auditory signals are transmitted through different neural circuits during sleep and wakefulness. It was reported in the international journal Current Biology on February 7 and was highlighted by Nature. (https://www.nature.com/articles/d41586-023-00354-0)

Professor Daesoo Kim explained, “The findings of this study can be used to improve our understanding of the sensory and wakefulness disorders seen in various brain diseases and, in the future, to develop digital healthcare technologies that control the senses.”

This research was carried out with the support from the National Research Foundation of Korea's Mid-Career Research Foundation Program.

Figure 1. Traditionally, sound signals were thought to be propagated from the auditory nerve to the auditory thalamus. However, while in slow-wave sleep, the auditory nerve sends sound signals to the mediodorsal thalamic neurons via the brainstem nerve to induce arousal in the brain.

Figure 2. GRIK4 dorsomedial nerve in response to sound stimulation. The awakening effect is induced as the activity of the GRIK4 dorsal medial nerve increases based on the time when sound stimulation is given.

2023.03.03 View 6473

KAIST Holds 2023 Commencement Ceremony

< Photo 1. On the 17th, KAIST held the 2023 Commencement Ceremony for a total of 2,870 students, including 691 doctors. >

KAIST held its 2023 commencement ceremony at the Sports Complex of its main campus in Daejeon at 2 p.m. on February 17. It was the first commencement ceremony to invite all its graduates since the start of COVID-19 quarantine measures.

KAIST awarded a total of 2,870 degrees including 691 PhD degrees, 1,464 master’s degrees, and 715 bachelor’s degrees, which adds to the total of 74,999 degrees KAIST has conferred since its foundation in 1971, which includes 15,772 PhD, 38,360 master’s and 20,867 bachelor’s degrees.

This year’s Cum Laude, Gabin Ryu, from the Department of Mechanical Engineering received the Minister of Science and ICT Award. Seung-ju Lee from the School of Computing received the Chairman of the KAIST Board of Trustees Award, while Jantakan Nedsaengtip, an international student from Thailand received the KAIST Presidential Award, and Jaeyong Hwang from the Department of Physics and Junmo Lee from the Department of Industrial and Systems Engineering each received the President of the Alumni Association Award and the Chairman of the KAIST Development Foundation Award, respectively.

Minister Jong-ho Lee of the Ministry of Science and ICT awarded the recipients of the academic awards and delivered a congratulatory speech.

Yujin Cha from the Department of Bio and Brain Engineering, who received his PhD degree 19 years after entering KAIST as an undergraduate student in 2004, gave a speech on behalf of the graduates that moved and inspired the graduates and guests.

After Cha received a bachelor’s degree from the Department of Nuclear and Quantum Engineering, he entered a medical graduate school and became a radiation oncology specialist. But after experiencing the death of a young patient who suffered from osteosarcoma, he returned to his alma mater to become a scientist. As he believes that science and technology is the ultimate solution to the limitations of modern medicine, he started as a PhD student at the Department of Bio and Brain Engineering in 2018, hoping to find such solutions.

During his course, he identified the characteristics of the decision-making process of doctors during diagnosis, and developed a brain-inspired AI algorithm. It is an original and challenging study that attempted to develop a fundamental machine learning theory from the data he collected from 200 doctors of different specialties.

Cha said, “Humans and AI can cooperate, with humans using the unique learning abilities of AI to develop our expertise, while AI mimics human learning abilities to improve.” He added, “My ultimate goal is to develop technology to a level at which humans and machines influence each other and ‘coevolve’, and to apply it not only to medicine, but in all areas.”

Cha, who is currently an assistant professor at the KAIST Biomedical Research Center, also wrote Artificial Intelligence for Doctors in 2017 to help medical personnel use AI in clinical fields, and the book was selected as one of the 2018 Sejong Books in the academic category.

During his speech at this year’s commencement ceremony, he shared that “there are so many things in the world that are difficult to solve and many things to solve them with, but I believe the things that can really broaden the horizons of the world and find fundamental solutions to the problems at hand are science and technology.”

Meanwhile, singer-songwriter Sae Byul Park who studied at the KAIST Graduate School of Culture Technology will also receive her PhD degree.

Natural language processing (NLP) is an actively studied field of AI that teaches computers to understand and analyze human language. An example of NLP is ChatGPT, which recently received a lot of attention. For her research, Park used NLP technology to analyze music rather than language.

To analyze music, which is in the form of sound, using the methods for NLP, it is necessary to rebuild notes and beats into a form of words or sentences as in a language. For this, Park designed an algorithm called Mel2Word and applied it to her research.
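As a rough illustration of what "rebuilding notes into words" can mean, the sketch below turns a melody into word-like tokens. It is not the Mel2Word algorithm, whose details the article does not give. It assumes the melody is a list of MIDI pitch numbers, encodes each step between consecutive notes as a signed interval, and groups a fixed number of intervals into one "word"; the function name `melody_to_words` and the grouping length are hypothetical.

```python
def melody_to_words(pitches, word_len=3):
    """Encode a melody (MIDI pitch numbers) as interval-based 'words'.

    Toy scheme, assumed for illustration: each step between consecutive
    notes becomes a signed semitone interval, and every `word_len`
    intervals are joined into one token.
    """
    intervals = [b - a for a, b in zip(pitches, pitches[1:])]
    tokens = [f"{step:+d}" for step in intervals]
    return ["_".join(tokens[i:i + word_len]) for i in range(0, len(tokens), word_len)]


# C D E F G G F, written as MIDI pitches.
print(melody_to_words([60, 62, 64, 65, 67, 67, 65]))  # ['+2_+2_+1', '+2_+0_-2']
```

Once a melody is a sequence of such tokens, standard text-analysis tools (word counts, n-gram statistics, embeddings) can be applied to it much as they are to sentences. Because the tokens are intervals rather than absolute pitches, the same tune transposed to another key yields the same words.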

She also suggested that by converting melodies into texts for analysis, one would be able to quantitatively express music as sentences or words with meaning and context rather than as simple sounds representing a certain note.

Park said, “music has always been considered as a product of subjective emotion, but this research provides a framework that can calculate and analyze music.”

Park’s study can later be developed into a tool to measure the similarities between musical work, as well as a piece’s originality, artistry and popularity, and it can be used as a clue to explore the fundamental principles of how humans respond to music from a cognitive science perspective.

Park began her Ph.D. program in 2014 while continuing her musical activities and her public and university lectures, and went through major personal events, including marriage and childbirth, along the way. She had already met the requirements to receive her degree in 2019, but delayed her graduation in order to further refine her research, and finally graduated with her current achievements after nine years.

Professor Juhan Nam, who supervised Park’s research, said, “Park, who has a bachelor’s degree in psychology, later learned to code for graduate school, and has completed high-quality research in the field of artificial intelligence.” He added, “Though it took a long time, her attitude of not giving up until the end as a researcher is also excellent.”

Sae Byul Park is currently lecturing courses entitled Culture Technology and Music Information Retrieval at the Underwood International College of Yonsei University.

Park said, “The 10 or so years I’ve spent at KAIST as a graduate student were a time in which I could learn and prosper not only academically but from all angles of life.” She added, “Having received a doctorate degree is not the end, but a ‘commencement’. Therefore, I will start to root deeper from the seeds I sowed and work harder as both a scholar and an artist.”

< Photo 2. From left) Yujin Cha (Valedictorian, Medical-Scientist Program Ph.D. graduate), Saebyeol Park (a singer-songwriter, Ph.D. graduate from the Graduate School of Culture and Technology), Junseok Moon and Inah Seo (the two highlighted CEO graduates from the Department of Management Engineering's master’s program) >

Young entrepreneurs who dream of solving social problems will also be wearing their graduation caps. Two such graduates are Jun-seok Moon and Inah Seo, receiving their master’s degrees in social entrepreneurship MBA from the KAIST College of Business.

Before entering KAIST, Moon ran a café that helped African refugees stand on their own feet. He then entered KAIST to learn social entrepreneurship and expand his business, in order to sustainably help refugees in the blind spots of human rights and welfare.

During his master’s course, Moon realized that he could achieve active carbon reduction by changing the coffee alone, and switched his business field and founded Equal Table. The amount of carbon an individual can reduce by refraining from using a single paper cup is 10g, while changing the coffee itself can reduce it by 300g.

One kilogram of coffee emits 15 kg of carbon over the course of its production, distribution, processing, and consumption, but Moon produces nearly carbon-neutral coffee beans by innovating the entire process. In particular, a company-to-company ESG business solution is Moon’s new start-up area. It provides companies with carbon-reduced coffee made by roasting raw beans from carbon-neutral certified farms with 100% renewable energy, and shows how much carbon has been reduced in its making. Equal Table will launch the service this month in collaboration with SK Telecom, its first partner.
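The carbon figures quoted above can be put side by side with a few lines of arithmetic. The sketch below uses only the numbers in the article (10 g saved per paper cup, 300 g saved by changing the coffee, 15 kg of carbon per kilogram of coffee); reading the 300 g figure as a per-cup saving, and as the complete removal of the coffee's emissions, are assumptions made here for illustration.

```python
# Figures quoted in the article.
PAPER_CUP_SAVING_G = 10        # carbon saved by skipping one paper cup
COFFEE_SAVING_G = 300          # carbon saved by changing the coffee itself
CARBON_PER_KG_COFFEE_KG = 15   # lifecycle carbon per kilogram of coffee

# How many skipped paper cups equal one change of coffee.
cups_equivalent = COFFEE_SAVING_G / PAPER_CUP_SAVING_G

# 15 kg of carbon per kg of coffee is the same as 15 g of carbon per g of coffee.
carbon_per_gram_coffee_g = CARBON_PER_KG_COFFEE_KG
# Assumption: the 300 g saving is the full footprint of the coffee used.
implied_coffee_g = COFFEE_SAVING_G / carbon_per_gram_coffee_g

print(cups_equivalent)   # 30.0
print(implied_coffee_g)  # 20.0
```

Under these assumptions, changing the coffee saves as much carbon as skipping 30 paper cups, and the 300 g figure corresponds to about 20 g of conventional coffee.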

Inah Seo, who also graduated with Moon, founded Conscious Wear to start a fashion business reducing environmental pollution. In order to realize her mission, she felt the need to gain the appropriate expertise in management, and enrolled for the social entrepreneurship MBA.

Out of the various fashion industries, Seo focused on the leather market, which is worth 80 trillion won. Due to thickness or contamination issues, only about 60% of animal skin fabric is used, and the rest is discarded. Heavy metals are used during such processes, which also directly affects the environment.

During the social entrepreneurship MBA course, Seo collaborated with SK Chemicals, which had links through the program, and launched eco-friendly leather bags. The bags used discarded leather that was recycled by grinding and reprocessing into a biomaterial called PO3G. It was the first case in which PO3G that is over 90% biodegradable was applied to regenerated leather. In other words, it can reduce environmental pollution in the processing and disposal stages, while also reducing carbon emissions and water usage by one-tenth compared to existing cowhide products.

The social entrepreneurship MBA course, from which Moon and Seo graduated, will run in integration with the Graduate School of Green Growth as an Impact MBA program starting this year. KAIST plans to steadily foster entrepreneurs who will lead meaningful changes in the environment and society as well as economic values through innovative technologies and ideas.

< Photo 3. NYU President Emeritus John Sexton (left), who received this year's honorary doctorate of science, poses with President Kwang Hyung Lee >

Meanwhile, during this day’s commencement ceremony, KAIST also presented President Emeritus John Sexton of New York University with an honorary doctorate in science. He was recognized for laying the foundation for the cooperation between KAIST and New York University, such as promoting joint campuses.

< Photo 4. At the commencement ceremony of KAIST held on the 17th, President Kwang Hyung Lee is encouraging the graduates with his commencement address. >

President Kwang Hyung Lee emphasized in his commencement speech that, “if you can draw up the future and work hard toward your goal, the future can become a work of art that you create with your own hands,” and added, “Never stop on the journey toward your dreams, and do not give up even when you are met with failure. Failure happens to everyone, all the time. The important thing is to know 'why you failed', and to use those elements of failure as the driving force for the next try.”

2023.02.20 View 21778

KAIST Holds 2023 Commencement Ceremony

< Photo 1. On the 17th, KAIST held the 2023 Commencement Ceremony for a total of 2,870 students, including 691 doctoral graduates. >

KAIST held its 2023 commencement ceremony at the Sports Complex of its main campus in Daejeon at 2 p.m. on February 17. It was the first commencement ceremony to invite all of its graduates since the start of COVID-19 quarantine measures.

KAIST awarded a total of 2,870 degrees: 691 PhD degrees, 1,464 master’s degrees, and 715 bachelor’s degrees. These add to the total of 74,999 degrees KAIST has conferred since its foundation in 1971, which includes 15,772 PhD, 38,360 master’s, and 20,867 bachelor’s degrees.

This year’s Cum Laude, Gabin Ryu from the Department of Mechanical Engineering, received the Minister of Science and ICT Award. Seung-ju Lee from the School of Computing received the Chairman of the KAIST Board of Trustees Award, and Jantakan Nedsaengtip, an international student from Thailand, received the KAIST Presidential Award. Jaeyong Hwang from the Department of Physics and Junmo Lee from the Department of Industrial and Systems Engineering received the President of the Alumni Association Award and the Chairman of the KAIST Development Foundation Award, respectively.

Minister Jong-ho Lee of the Ministry of Science and ICT awarded the recipients of the academic awards and delivered a congratulatory speech.

Yujin Cha from the Department of Bio and Brain Engineering, who received his PhD degree 19 years after entering KAIST as an undergraduate student in 2004, gave a speech on behalf of the graduates to move and inspire the graduates and the guests.

After Cha received a bachelor’s degree from the Department of Nuclear and Quantum Engineering, he entered a medical graduate school and became a radiation oncology specialist. But after experiencing the death of a young patient who suffered from osteosarcoma, he returned to his alma mater to become a scientist. As he believes that science and technology is the ultimate solution to the limitations of modern medicine, he started as a PhD student at the Department of Bio and Brain Engineering in 2018, hoping to find such solutions.

During his course, he identified the characteristics of the decision-making process of doctors during diagnosis, and developed a brain-inspired AI algorithm. It is an original and challenging study that attempted to develop a fundamental machine learning theory from the data he collected from 200 doctors of different specialties.

Cha said, “Humans and AI can cooperate by humans utilizing the unique learning abilities of AI to develop our expertise, while AIs can mimic us humans’ learning abilities to improve.” He added, “My ultimate goal is to develop technology to a level at which humans and machines influence each other and ‘coevolve’, and to apply it not only to medicine, but to all areas.”

Cha, who is currently an assistant professor at the KAIST Biomedical Research Center, also wrote Artificial Intelligence for Doctors in 2017 to help medical personnel use AI in clinical fields. The book was selected as one of the 2018 Sejong Books in the academic category.

During his speech at this year’s commencement ceremony, he shared that “there are so many things in the world that are difficult to solve and many things to solve them with, but I believe the things that can really broaden the horizons of the world and find fundamental solutions to the problems at hand are science and technology.”

Meanwhile, singer-songwriter Sae Byul Park, who studied at the KAIST Graduate School of Culture Technology, will also receive her PhD degree.

Natural language processing (NLP) is an actively studied field of AI that teaches computers to understand and analyze human language. An example of NLP is ChatGPT, which recently received a lot of attention. For her research, Park used NLP technology to analyze music rather than language.

To analyze music, which is in the form of sound, using the methods for NLP, it is necessary to rebuild notes and beats into a form of words or sentences as in a language. For this, Park designed an algorithm called Mel2Word and applied it to her research.

She also suggested that by converting melodies into texts for analysis, one would be able to quantitatively express music as sentences or words with meaning and context rather than as simple sounds representing a certain note.
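The general idea of turning a melody into text can be illustrated with a minimal sketch. This is not Park's Mel2Word algorithm, whose details are not given here; the toy melody, the symbol format, and the function names `to_symbols` and `to_words` are all assumptions made for illustration. Each note transition is written as a short text symbol, and consecutive symbols are grouped into "words" that can then be counted like words in a sentence.

```python
from collections import Counter

# Hypothetical toy melody as (MIDI pitch, duration in beats) pairs.
# This is illustrative data, not data from the study.
MELODY = [(60, 1.0), (62, 1.0), (64, 2.0), (62, 1.0),
          (60, 1.0), (62, 1.0), (64, 2.0)]


def to_symbols(melody):
    """Encode each note transition as text: pitch interval plus the new note's duration."""
    symbols = []
    for (prev_pitch, _), (pitch, duration) in zip(melody, melody[1:]):
        interval = pitch - prev_pitch  # semitones up (+) or down (-)
        symbols.append(f"{interval:+d}/{duration:g}")
    return symbols


def to_words(symbols, size=2):
    """Group consecutive symbols into overlapping 'words' (n-grams) of a fixed size."""
    return ["_".join(symbols[i:i + size])
            for i in range(len(symbols) - size + 1)]


symbols = to_symbols(MELODY)
words = to_words(symbols)
counts = Counter(words)  # word frequencies, as in a simple text analysis

print(symbols)
print(counts.most_common(1))
```

Once a melody is in this form, standard text-analysis tools such as word counts or similarity measures can be applied to it. The choice of fixed-size n-grams is only one simple way to form "words" and is an assumption of this sketch.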

Park said, “music has always been considered as a product of subjective emotion, but this research provides a framework that can calculate and analyze music.”

Park’s study can later be developed into a tool to measure the similarities between musical works, as well as a piece’s originality, artistry, and popularity. It can also be used as a clue to explore the fundamental principles of how humans respond to music from a cognitive science perspective.

Park began her Ph.D. program in 2014 while continuing her musical activities and her public and university lectures, and went through major personal events, including marriage and childbirth, over those years. She had already met the requirements to receive her degree in 2019, but delayed her graduation to further refine her research, and finally graduated with her current achievements after nine years.

Professor Juhan Nam, who supervised Park’s research, said, “Park, who has a bachelor’s degree in psychology, later learned to code for graduate school, and has completed high-quality research in the field of artificial intelligence.” He added, “Though it took a long time, her attitude of not giving up until the end as a researcher is also excellent.”

Sae Byul Park currently teaches courses entitled Culture Technology and Music Information Retrieval at the Underwood International College of Yonsei University.

Park said, “the 10 or so years I’ve spent at KAIST as a graduate student were a time in which I could learn and prosper not only academically but from all angles of life.” She added, “having received a doctorate degree is not the end, but a ‘commencement’. Therefore, I will start to root deeper from the seeds I sowed and work harder as both a scholar and an artist.”

< Photo 2. (From left) Yujin Cha (Valedictorian, Medical-Scientist Program Ph.D. graduate), Sae Byul Park (a singer-songwriter, Ph.D. graduate from the Graduate School of Culture Technology), Jun-seok Moon and Inah Seo (the two highlighted CEO graduates from the Department of Management Engineering's master’s program) >

Young entrepreneurs who dream of solving social problems will also be wearing their graduation caps. Two such graduates are Jun-seok Moon and Inah Seo, who are receiving their social entrepreneurship MBA degrees from the KAIST College of Business.

Before enrolling, Moon ran a café that helped African refugees stand on their own feet. He then entered KAIST to learn social entrepreneurship and later expand his business, so that he could sustainably help refugees in the blind spots of human rights and welfare.

During his master’s course, Moon realized that he could actively reduce carbon emissions just by changing the coffee itself, so he switched his business field and founded Equal Table. An individual can reduce carbon by 10 g by refraining from using a single paper cup, while changing the coffee itself can reduce it by 300 g.

1 kg of coffee emits 15 kg of carbon over the course of its production, distribution, processing, and consumption, but Moon produces nearly carbon-neutral coffee beans by innovating the entire process. In particular, Moon’s new start-up area is a company-to-company ESG business solution. It provides companies with carbon-reduced coffee made by roasting raw beans from carbon-neutral certified farms with 100% renewable energy, and shows how much carbon has been reduced in its making. Equal Table will launch the service this month in collaboration with SK Telecom, its first partner.

Inah Seo, who graduated alongside Moon, founded Conscious Wear to start a fashion business that reduces environmental pollution. To realize her mission, she felt the need to gain the appropriate expertise in management, and enrolled in the social entrepreneurship MBA.

Among the various sectors of the fashion industry, Seo focused on the leather market, which is worth 80 trillion won. Due to thickness or contamination issues, only about 60% of animal skin fabric is used, and the rest is discarded. Heavy metals are used during such processes, which also directly affect the environment.

During the social entrepreneurship MBA course, Seo collaborated with SK Chemicals, which had links through the program, and launched eco-friendly leather bags. The bags used discarded leather that was recycled by grinding and reprocessing into a biomaterial called PO3G. It was the first case in which PO3G, which is over 90% biodegradable, was applied to regenerated leather. In other words, it can reduce environmental pollution in the processing and disposal stages, while also reducing carbon emissions and water usage by one-tenth compared to existing cowhide products.

The social entrepreneurship MBA course, from which Moon and Seo graduated, will be integrated with the Graduate School of Green Growth and run as an Impact MBA program starting this year. KAIST plans to steadily foster entrepreneurs who will use innovative technologies and ideas to lead meaningful changes in the environment and society as well as in economic value.

< Photo 3. NYU President Emeritus John Sexton (left), who received this year's honorary doctorate of science, poses with President Kwang Hyung Lee >

Meanwhile, during the commencement ceremony, KAIST also presented President Emeritus John Sexton of New York University with an honorary doctorate in science. He was recognized for laying the foundation for cooperation between KAIST and New York University, such as promoting joint campuses.

< Photo 4. At the KAIST commencement ceremony held on the 17th, President Kwang Hyung Lee encourages the graduates with his commencement address. >

President Kwang Hyung Lee emphasized in his commencement speech that, “if you can draw up the future and work hard toward your goal, the future can become a work of art that you create with your own hands,” and added, “Never stop on the journey toward your dreams, and do not give up even when you are met with failure. Failure happens to everyone, all the time. The important thing is to know 'why you failed', and to use those elements of failure as the driving force for the next try.”

2023.02.20 View 21778 -

KAIST presents a fundamental technology to remove metastatic traits from lung cancer cells

KAIST (President Kwang Hyung Lee) announced on January 30th that a research team led by Professor Kwang-Hyun Cho from the Department of Bio and Brain Engineering succeeded in using systems biology research to change the properties of cancer cells in the lungs and eliminate both their drug resistance and their ability to spread to other areas of the body.

As the incidence of cancer increases within aging populations, cancer has become the most lethal disease threatening healthy life. Fatality rates are especially high when the cancer is not detected early and has metastasized to various organs. To resolve this problem, a series of attempts were made to remove or lower the ability of cancer cells to spread, but these attempts made cancer cells in the intermediate state more unstable and even more malignant, which created serious treatment challenges.