camera

KAIST Develops Insect-Eye-Inspired Camera Capturing 9,120 Frames Per Second

< (From left) Bio and Brain Engineering PhD Student Jae-Myeong Kwon, Professor Ki-Hun Jeong, PhD Student Hyun-Kyung Kim, PhD Student Young-Gil Cha, and Professor Min H. Kim of the School of Computing >

The compound eyes of insects can detect fast-moving objects in parallel and, in low-light conditions, enhance sensitivity by integrating signals over time to determine motion. Inspired by these biological mechanisms, KAIST researchers have successfully developed a low-cost, high-speed camera that overcomes the limitations of frame rate and sensitivity faced by conventional high-speed cameras.

KAIST (represented by President Kwang Hyung Lee) announced on the 16th of January that a research team led by Professors Ki-Hun Jeong (Department of Bio and Brain Engineering) and Min H. Kim (School of Computing) has developed a novel bio-inspired camera capable of ultra-high-speed imaging with high sensitivity by mimicking the visual structure of insect eyes.

High-quality imaging under high-speed and low-light conditions is a critical challenge in many applications. While conventional high-speed cameras excel in capturing fast motion, their sensitivity decreases as frame rates increase because the time available to collect light is reduced.

To address this issue, the research team adopted an approach similar to insect vision, utilizing multiple optical channels and temporal summation. Unlike traditional monocular camera systems, the bio-inspired camera employs a compound-eye-like structure that allows for the parallel acquisition of frames from different time intervals.

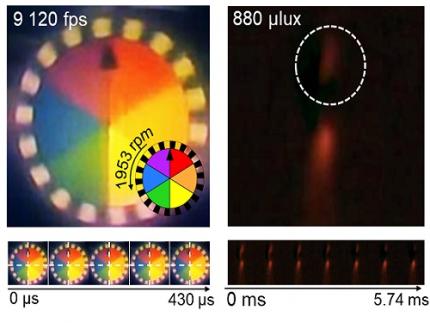

< Figure 1. (A) Vision in a fast-eyed insect. Light reflected from swiftly moving objects sequentially stimulates the photoreceptors along the individual optical channels, called ommatidia, whose visual signals are processed separately and in parallel via the lamina and medulla. Each neural response is temporally summed to enhance the visual signals. Parallel processing and temporal summation together allow fast imaging in dim light. (B) High-speed and high-sensitivity microlens array camera (HS-MAC). A rolling shutter image sensor is used to acquire multiple frames simultaneously by channel division, and temporal summation is performed in parallel to achieve high speed and sensitivity even in a low-light environment. In addition, the frame components of a single fragmented array image are stitched into a single blurred frame, which is subsequently deblurred by compressive image reconstruction. >

During this process, light is accumulated over overlapping time periods for each frame, increasing the signal-to-noise ratio. The researchers demonstrated that their bio-inspired camera could capture objects up to 40 times dimmer than those detectable by conventional high-speed cameras.
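As a rough illustration of why accumulating light over a longer period raises the signal-to-noise ratio, the sketch below uses a simple shot-noise-limited model. This is an assumption made for illustration, not the model used in the paper: photon arrivals are treated as Poisson distributed, so a signal of N photons has noise of about sqrt(N). The function name `shot_noise_snr` and the photon counts are hypothetical.

```python
import math


def shot_noise_snr(photons_per_frame: float, frames_summed: int = 1) -> float:
    """SNR of a shot-noise-limited signal after summing several exposures.

    Assumes Poisson photon statistics: a total of N photons has a standard
    deviation of sqrt(N), so SNR = N / sqrt(N) = sqrt(N).
    """
    total_photons = photons_per_frame * frames_summed
    return math.sqrt(total_photons)


if __name__ == "__main__":
    # Hypothetical numbers: 25 photons reach a pixel in one short exposure.
    single = shot_noise_snr(photons_per_frame=25)
    summed = shot_noise_snr(photons_per_frame=25, frames_summed=16)
    print(f"single exposure SNR: {single:.1f}")
    print(f"16 exposures summed SNR: {summed:.1f}")
```

Under this simplified model, summing 16 exposures quadruples the SNR, since the SNR grows with the square root of the collected light. Read noise and other sensor effects are ignored here.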

The team also introduced a "channel-splitting" technique to significantly enhance the camera's speed, achieving frame rates thousands of times faster than those supported by the image sensor on which the camera is packaged. Additionally, a "compressed image restoration" algorithm was employed to eliminate the blur caused by frame integration and reconstruct sharp images.

The resulting bio-inspired camera is less than one millimeter thick and extremely compact, capable of capturing 9,120 frames per second while providing clear images in low-light conditions.

< Figure 2. A high-speed, high-sensitivity biomimetic camera packaged in an image sensor. It is made small enough to fit on a finger, with a thickness of less than 1 mm. >

The research team plans to extend this technology to develop advanced image processing algorithms for 3D imaging and super-resolution imaging, aiming for applications in biomedical imaging, mobile devices, and various other camera technologies.

Hyun-Kyung Kim, a doctoral student in the Department of Bio and Brain Engineering at KAIST and the study's first author, stated, “We have experimentally validated that the insect-eye-inspired camera delivers outstanding performance in high-speed and low-light imaging despite its small size. This camera opens up possibilities for diverse applications in portable camera systems, security surveillance, and medical imaging.”

< Figure 3. Rotating plate and flame captured using the high-speed, high-sensitivity biomimetic camera. The rotating plate at 1,950 rpm was accurately captured at 9,120 fps. In addition, the pinch-off of the flame with a faint intensity of 880 µlux was accurately captured at 1,020 fps. >
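To put the rotating-plate figures in perspective, the short calculation below converts the reported 1,950 rpm and 9,120 fps into the angle the plate turns between consecutive frames. It uses only those two numbers from the caption; the function names are hypothetical.

```python
def rotation_per_frame_deg(rpm: float, fps: float) -> float:
    """Angle in degrees that a rotating object turns between two frames."""
    revolutions_per_second = rpm / 60.0
    return revolutions_per_second * 360.0 / fps


def frames_per_revolution(rpm: float, fps: float) -> float:
    """Number of frames captured during one full revolution."""
    return fps / (rpm / 60.0)


if __name__ == "__main__":
    rpm, fps = 1950, 9120  # values reported in the Figure 3 caption
    print(f"{rotation_per_frame_deg(rpm, fps):.2f} degrees per frame")
    print(f"{frames_per_revolution(rpm, fps):.1f} frames per revolution")
```

At these settings the plate advances by roughly 1.3 degrees per frame, so about 280 frames cover a single revolution.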

This research was published in the international journal Science Advances in January 2025 (Paper Title: “Biologically-inspired microlens array camera for high-speed and high-sensitivity imaging”).

DOI: https://doi.org/10.1126/sciadv.ads3389

This study was supported by the Korea Research Institute for Defense Technology Planning and Advancement (KRIT) of the Defense Acquisition Program Administration (DAPA), the Ministry of Science and ICT, and the Ministry of Trade, Industry and Energy (MOTIE).

2025.01.16 View 7362

KAIST builds a high-resolution 3D holographic sensor using a single mask

Holographic cameras can provide more realistic images than ordinary cameras thanks to their ability to acquire 3D information about objects. However, existing holographic cameras use interferometers that measure the wavelength and refraction of light through the interference of light waves, which makes them complex and sensitive to their surrounding environment.

On August 23, a KAIST research team led by Professor YongKeun Park from the Department of Physics announced a new leap forward in 3D holographic imaging sensor technology.

The team proposed an innovative holographic camera technology that does not use complex interferometry. Instead, it uses a mask to precisely measure the phase information of light and reconstruct the 3D information of an object with higher accuracy.

< Figure 1. Structure and principle of the proposed holographic camera. The amplitude and phase information of light scattered from a holographic camera can be measured. >

The team incorporated a mask that fulfills certain mathematical conditions into an ordinary camera. Light scattered from a laser-illuminated object is measured through the mask and analyzed using a computer. This does not require a complex interferometer and allows the phase information of light to be collected through a simplified optical system. In this technique, the mask, which is placed between the two lenses and behind the object, plays an important role. The mask selectively filters specific parts of the light, and the intensity of the light passing through the lens can be measured using an ordinary commercial camera. The technique combines the image data received from the camera with the unique pattern of the mask and reconstructs an object’s precise 3D information using an algorithm.

This method allows a high-resolution 3D image of an object to be captured in any position. In practical situations, one can construct a laser-based holographic 3D image sensor by adding a mask with a simple design to a general image sensor. This makes the design and construction of the optical system much easier. In particular, this novel technology can capture high-resolution holographic images of objects moving at high speeds, which widens its potential field of application.

< Figure 2. A moving doll captured by a conventional camera and the proposed holographic camera. When taking a picture without focusing on the object, only a blurred image of the doll can be obtained from a general camera, but the proposed holographic camera can restore the blurred image of the doll into a clear image. >

The results of this study, conducted by Dr. Jeonghun Oh from the KAIST Department of Physics as the first author, were published in Nature Communications on August 12 under the title, "Non-interferometric stand-alone single-shot holographic camera using reciprocal diffractive imaging".

Dr. Oh said, “The holographic camera module we are suggesting can be built by adding a filter to an ordinary camera, which would allow even non-experts to handle it easily in everyday life if it were to be commercialized.” He added, “In particular, it is a promising candidate with the potential to replace existing remote sensing technologies.”

This research was supported by the National Research Foundation’s Leader Research Project, the Korean Ministry of Science and ICT’s Core Hologram Technology Support Project, and the Nano and Material Technology Development Project.

2023.09.05 View 8938

AI Light-Field Camera Reads 3D Facial Expressions

Machine-learned, light-field camera reads facial expressions from high-contrast illumination invariant 3D facial images

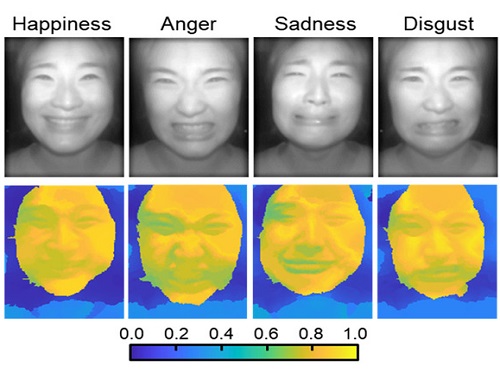

A joint research team led by Professors Ki-Hun Jeong and Doheon Lee from the KAIST Department of Bio and Brain Engineering reported the development of a technique for facial expression detection by merging near-infrared light-field camera techniques with artificial intelligence (AI) technology.

Unlike a conventional camera, the light-field camera contains micro-lens arrays in front of the image sensor, which makes the camera small enough to fit into a smart phone, while allowing it to acquire the spatial and directional information of the light with a single shot. The technique has received attention as it can reconstruct images in a variety of ways including multi-views, refocusing, and 3D image acquisition, giving rise to many potential applications.

However, shadows caused by external light sources in the environment and optical crosstalk between the micro-lenses have prevented existing light-field cameras from providing accurate image contrast and 3D reconstruction.

The joint research team applied a vertical-cavity surface-emitting laser (VCSEL) in the near-IR range to stabilize the accuracy of 3D image reconstruction, which previously depended on environmental light. When an external light source is shone on a face at 0-, 30-, and 60-degree angles, the light-field camera reduces image reconstruction errors by 54%. Additionally, by inserting a light-absorbing layer for visible and near-IR wavelengths between the micro-lens arrays, the team could minimize optical crosstalk while increasing the image contrast by 2.1 times.

Through this technique, the team could overcome the limitations of existing light-field cameras and was able to develop their NIR-based light-field camera (NIR-LFC), optimized for the 3D image reconstruction of facial expressions. Using the NIR-LFC, the team acquired high-quality 3D reconstruction images of facial expressions expressing various emotions regardless of the lighting conditions of the surrounding environment.

The facial expressions in the acquired 3D images were distinguished through machine learning with an average of 85% accuracy – a statistically significant figure compared to when 2D images were used. Furthermore, by calculating the interdependency of distance information that varies with facial expression in 3D images, the team could identify the information a light-field camera utilizes to distinguish human expressions.

Professor Ki-Hun Jeong said, “The sub-miniature light-field camera developed by the research team has the potential to become the new platform to quantitatively analyze the facial expressions and emotions of humans.” To highlight the significance of this research, he added, “It could be applied in various fields including mobile healthcare, field diagnosis, social cognition, and human-machine interactions.”

This research was published in Advanced Intelligent Systems online on December 16, under the title, “Machine-Learned Light-field Camera that Reads Facial Expression from High-Contrast and Illumination Invariant 3D Facial Images.” This research was funded by the Ministry of Science and ICT and the Ministry of Trade, Industry and Energy.

Publication: “Machine-learned light-field camera that reads facial expression from high-contrast and illumination invariant 3D facial images,” Sang-In Bae, Sangyeon Lee, Jae-Myeong Kwon, Hyun-Kyung Kim, Kyung-Won Jang, Doheon Lee, Ki-Hun Jeong, Advanced Intelligent Systems, December 16, 2021 (doi.org/10.1002/aisy.202100182)

Profile: Professor Ki-Hun Jeong, Biophotonic Laboratory, Department of Bio and Brain Engineering, KAIST

Professor Doheon Lee, Department of Bio and Brain Engineering, KAIST

2022.01.21 View 13820

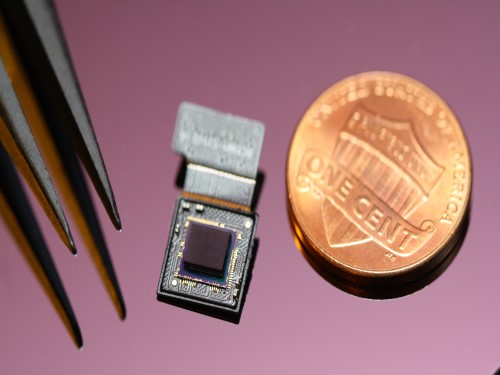

Ultrathin but Fully Packaged High-Resolution Camera

- Biologically inspired ultrathin arrayed camera captures super-resolution images. -

The unique structures of biological vision systems in nature have inspired scientists to design ultracompact imaging systems. A research group led by Professor Ki-Hun Jeong has made an ultracompact camera that captures high-contrast and high-resolution images. Fully packaged with micro-optical elements such as inverted micro-lenses, multilayered pinhole arrays, and gap spacers on the image sensor, the camera boasts a total track length of 740 μm and a field of view of 73°.

Inspired by the eye structures of Xenos peckii, an endoparasite of paper wasps, the research team completely suppressed optical noise between micro-lenses while reducing camera thickness. The camera has successfully demonstrated high-contrast, clear array images acquired from tiny micro-lenses. To further enhance the quality of the captured image, the team combined the arrayed images into one image through super-resolution imaging.

An insect’s compound eye has superior visual characteristics, such as a wide viewing angle, high motion sensitivity, and a large depth of field, while maintaining a small visual structure with a short focal length. Among insects, the eyes of Xenos peckii, an endoparasite found on paper wasps, have hundreds of photoreceptors under a single lens, unlike conventional compound eyes. Because each eyelet of an adult Xenos peckii carries hundreds of photoreceptors, its eyes have higher visual acuity than other compound eyes and offer engineering inspiration for ultrathin cameras and imaging applications.

For instance, cameras inspired by the eyes of Xenos peckii provide 50 times higher spatial resolution than those based on other arthropod eyes. In addition, the effective image resolution of the Xenos peckii eye can be further improved using the image overlaps between neighboring eyelets. This unique structure offers higher visual resolution than other insect eyes.

The team achieved high-contrast and super-resolution imaging through a novel arrayed design of micro-optical elements comprising multilayered aperture arrays and inverted micro-lens arrays directly stacked over an image sensor. This optical component was integrated with a complementary metal oxide semiconductor image sensor.

This is the first demonstration of super-resolution imaging that acquires a single integrated image with high contrast and high resolving power, reconstructed from high-contrast array images. This ultrathin arrayed camera is expected to be applied to the further development of mobile devices, advanced surveillance vehicles, and endoscopes.

Professor Jeong said, “This research has led to technological advances in imaging technology. We will continue to strive to make significant impacts on multidisciplinary research projects in the fields of microtechnology and nanotechnology, seeking inspiration from natural photonic structures.”

This work was featured in Light Science & Applications last month and was supported by the National Research Foundation (NRF) and the Ministry of Health and Welfare (MOHW) of Korea.

Image credit: Professor Ki-Hun Jeong, KAIST

Image usage restrictions: News organizations may use or redistribute this image, with proper attribution, as part of news coverage of this paper only.

Publication:

Kisoo Kim, Kyung-Won Jang, Jae-Kwan Ryu, and Ki-Hun Jeong. (2020) “Biologically inspired ultrathin arrayed camera for high-contrast and high-resolution imaging”. Light Science & Applications. Volume 9. Article 28. Available online at https://doi.org/10.1038/s41377-020-0261-8

Profile:

Ki-Hun Jeong

Professor

kjeong@kaist.ac.kr

http://biophotonics.kaist.ac.kr/

Department of Bio and Brain Engineering

KAIST

Profile:

Kisoo Kim

Ph.D. Candidate

kisoo.kim1@kaist.ac.kr

http://biophotonics.kaist.ac.kr/

Department of Bio and Brain Engineering

KAIST

2020.03.23 View 20578

Ultrathin but Fully Packaged High-Resolution Camera

- Biologically inspired ultrathin arrayed camera captures super-resolution images. -

The unique structures of biological vision systems in nature inspired scientists to design ultracompact imaging systems. A research group led by Professor Ki-Hun Jeong have made an ultracompact camera that captures high-contrast and high-resolution images. Fully packaged with micro-optical elements such as inverted micro-lenses, multilayered pinhole arrays, and gap spacers on the image sensor, the camera boasts a total track length of 740 μm and a field of view of 73°.

Inspired by the eye structures of the paper wasp species Xenos peckii, the research team completely suppressed optical noise between micro-lenses while reducing camera thickness. The camera has successfully demonstrated high-contrast clear array images acquired from tiny micro lenses. To further enhance the image quality of the captured image, the team combined the arrayed images into one image through super-resolution imaging.

An insect’s compound eye has superior visual characteristics, such as a wide viewing angle, high motion sensitivity, and a large depth of field while maintaining a small volume of visual structure with a small focal length. Among them, the eyes of Xenos peckii and an endoparasite found on paper wasps have hundreds of photoreceptors in a single lens unlike conventional compound eyes. In particular, the eye structures of an adult Xenos peckii exhibit hundreds of photoreceptors on an individual eyelet and offer engineering inspiration for ultrathin cameras or imaging applications because they have higher visual acuity than other compound eyes.

For instance, Xenos peckii’s eye-inspired cameras provide a 50 times higher spatial resolution than those based on arthropod eyes. In addition, the effective image resolution of the Xenos peckii’s eye can be further improved using the image overlaps between neighboring eyelets. This unique structure offers higher visual resolution than other insect eyes.

The team achieved high-contrast and super-resolution imaging through a novel arrayed design of micro-optical elements comprising multilayered aperture arrays and inverted micro-lens arrays directly stacked over an image sensor. This optical component was integrated with a complementary metal oxide semiconductor image sensor.

This is first demonstration of super-resolution imaging which acquires a single integrated image with high contrast and high resolving power reconstructed from high-contrast array images. It is expected that this ultrathin arrayed camera can be applied for further developing mobile devices, advanced surveillance vehicles, and endoscopes.

Professor Jeong said, “This research has led to technological advances in imaging technology. We will continue to strive to make significant impacts on multidisciplinary research projects in the fields of microtechnology and nanotechnology, seeking inspiration from natural photonic structures.”

This work was featured in Light Science & Applications last month and was supported by the National Research Foundation (NRF) and the Ministry of Health and Welfare (MOHW) of Korea.

Image credit: Professor Ki-Hun Jeong, KAIST

Image usage restrictions: News organizations may use or redistribute this image, with proper attribution, as part of news coverage of this paper only.

Publication:

Kisoo Kim, Kyung-Won Jang, Jae-Kwan Ryu, and Ki-Hun Jeong. (2020) “Biologically inspired ultrathin arrayed camera for high-contrast and high-resolution imaging”. Light Science & Applications. Volume 9. Article 28. Available online at https://doi.org/10.1038/s41377-020-0261-8

Profile:

Ki-Hun Jeong

Professor

kjeong@kaist.ac.kr

http://biophotonics.kaist.ac.kr/

Department of Bio and Brain Engineering

KAIST

Profile:

Kisoo Kim

Ph.D. Candidate

kisoo.kim1@kaist.ac.kr

http://biophotonics.kaist.ac.kr/

Department of Bio and Brain Engineering

KAIST

(END)

2020.03.23 View 20578 -

Team KAT Wins the Autonomous Car Challenge

(Team KAT receiving the Presidential Award)

A KAIST team won the 2018 International Autonomous Car Challenge for University Students held in Daegu on November 2.

Professor Seung-Hyun Kong from the ChoChunShik Graduate School of Green Transportation and his team participated in this contest with the team named KAT (KAIST Autonomous Technologies). The team received the Presidential Award with a fifty million won cash prize and an opportunity for a field trip abroad.

The competition was conducted on actual roads with Connected Autonomous Vehicles (CAV), which incorporate autonomous driving technologies and a vehicle-to-everything (V2X) communication system.

In this contest, the autonomous vehicles were given a mission to pick up passengers or parcels. Through V2X communication, the contest provided the current location of the passengers or parcels, their destinations, and the service profitability based on distance and level of service difficulty.

The participating vehicles had to be equipped with a very accurate and robust navigation system since they had to drive on narrow roads and go through tunnels where GPS was not available. Moreover, they had to use camera-based recognition technology that was robust to backlight, as the contest took place in the late afternoon.

The contest scored the mission in the following way: vehicles earned points if they picked up passengers and safely dropped them off at their destinations, while points were deducted when they violated lanes or traffic lights. It was a major black mark if a participant sitting in the driver’s seat needed to get involved in driving due to a technical issue.

Youngbo Shim of KAT said, “We believe that we got major points for technical superiority in autonomous driving and our algorithm for passenger selection.”

This contest, hosted by the Ministry of Trade, Industry and Energy, was the first international competition for autonomous driving on actual roads. A total of nine teams participated in the final contest: four domestic teams and five teams allied with overseas universities such as Tsinghua University, Waseda University, and Nanyang Technological University.

Professor Kong said, “There is still a long way to go for fully autonomous vehicles that drive flexibly under congested traffic conditions. However, we will continue our research in order to achieve high-quality autonomous driving technology.”

(Team KAT getting ready for the challenge)

2018.11.06 View 13730

Award Winning Portable Sound Camera Design

- A member of KAIST’s faculty has won the “Red Dot Design Award,” one of the three most prestigious design competitions in the world, for a portable sound camera.

KAIST’s Industrial Design Professor Suk-Hyung Bae’s portable sound camera design, made by SM Instruments and Hyundai, has received a “Red Dot Design Award: Product Design,” one of the most prestigious design competitions in the world.

If you are a driver, you must have experienced unexplained noises in your car. Most industrial products, including cars, may produce abnormal noises caused by an error in design or worn-out machinery. However, it is difficult to identify the exact location of the sound with ears alone.

This is where the sound camera comes in. Just as thermal cameras show the distribution of temperature, sound cameras use a microphone array to visualize the distribution of sound and locate its source. However, existing sound cameras are big and heavy, are complex to assemble and install, and must be fixed on a tripod. These limitations made it impossible to measure noise in small spaces or underneath cars.
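How a microphone arrangement can locate a sound source can be sketched with delay-and-sum beamforming, a common textbook technique. The article does not say which method the SeeSV-S205 uses, so everything below is an assumption made for illustration: the microphone layout, the candidate positions, the 48 kHz sample rate, the speed of sound, and all function names.

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumed)


def delay_in_samples(mic, point, sample_rate):
    """Propagation delay from `point` to `mic`, rounded to whole samples."""
    return round(math.dist(mic, point) / SPEED_OF_SOUND * sample_rate)


def steered_power(signals, mics, candidate, sample_rate):
    """Average power of the summed signals after aligning them for `candidate`."""
    delays = [delay_in_samples(m, candidate, sample_rate) for m in mics]
    shifts = [d - min(delays) for d in delays]
    length = min(len(s) - sh for s, sh in zip(signals, shifts))
    power = 0.0
    for t in range(length):
        summed = sum(s[t + sh] for s, sh in zip(signals, shifts))
        power += summed * summed
    return power / length


def locate(signals, mics, candidates, sample_rate):
    """Return the candidate position whose alignment yields the most power."""
    return max(candidates,
               key=lambda c: steered_power(signals, mics, c, sample_rate))


def simulate(source, mics, sample_rate, n_samples, seed=0):
    """Synthetic recordings: the same noise burst, delayed per microphone."""
    rng = random.Random(seed)
    emitted = [rng.uniform(-1.0, 1.0) for _ in range(n_samples)]
    signals = []
    for m in mics:
        d = delay_in_samples(m, source, sample_rate)
        signals.append([0.0] * d + emitted[: n_samples - d])
    return signals


# Hypothetical setup: four microphones 10 cm apart, five candidate positions.
MICS = [(0.0, 0.0), (0.1, 0.0), (0.2, 0.0), (0.3, 0.0)]
CANDIDATES = [(-1.0, 1.0), (-0.5, 1.0), (0.0, 1.0), (0.5, 1.0), (1.0, 1.0)]
recorded = simulate((1.0, 1.0), MICS, 48_000, 2_000)
estimate = locate(recorded, MICS, CANDIDATES, 48_000)
```

When the candidate matches the true source, the delayed copies line up and add coherently, so the summed power peaks there. Evaluating the same power over a dense grid of candidates would give the kind of sound distribution map a sound camera displays.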

The newly developed product is an all-in-one system resolving the inconvenience of assembling the microphone before taking measurements. Moreover, the handle in the middle is ergonomically designed so users can balance its weight with one hand. The two handles on the sides work as a support and enable the user to hold the camera in various ways.

At the award ceremony, Professor Suk-Hyung Bae commented, “The effective combination of cutting-edge technology and design components has been recognized.” He also said, “It shows the competency of KAIST’s Department of Industrial Design, which has a high understanding of science and technology.”

SM Instruments is a sound and vibration specialist company that got its start at KAIST’s Technology Business Incubation Centre in 2006 and earned its independence in only two years by developing proprietary technology. The company is contributing to the development of national sound and vibration technology through relentless change and innovation.

Figure 1: The Red Dot Design Award-winning portable sound camera, SeeSV-S205

Figure 2: Identifying the location of the noise using the portable sound camera

Figure 3: The image showing the sound distribution using the portable sound camera

2013.04.09 View 21509

3D contents using our technology

Professor Noh Jun Yong’s research team from the KAIST Graduate School of Culture Technology has successfully developed a software program that triples the efficiency of semiautomatic 3D stereoscopic image conversion.

This software, named ‘NAKiD’, was first presented at the renowned Computer Graphics conference/exhibition ‘Siggraph 2012’ in August and received intense interest from the participants.

The ‘NAKiD’ technology is expected to replace the expensive imported equipment and technology used in 3D filming.

For multi-viewpoint no-glasses 3D stereopsis, two cameras are needed to film the image. However, ‘NAKiD’ can easily convert images from a single camera into a 3D image, greatly decreasing the problems in the film production process as well as its cost.

There are two methods commonly used to produce 3D stereoscopic images: filming with two cameras and 3D conversion using computer software.

The use of two cameras requires expensive equipment, and the filmed images need further processing after production. On the other hand, 3D conversion technology does not require extra devices in the production process and can also convert existing 2D content into 3D, which is a main reason why many countries are focusing on the development of stereoscopic technology.

Stereoscopic conversion is largely divided into three steps: object separation, formation of depth information, and stereo rendering. Professor Noh’s team focused on optimizing each step to increase the efficiency of the conversion system.

Professor Noh’s research team first increased the separation accuracy to the degree of a single hair and created an algorithm that automatically fills in the background originally covered by the separated object.

The team succeeded in automatically forming depth information using geographic or architectural characteristics and vanishing points. For the stereo rendering process, the team decreased the rendering time by reusing the rendered information of one side, rather than rendering the left and right images separately as in the traditional method.
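Reusing one rendered view to obtain the other can be sketched as depth-image-based rendering on a single scanline. This is a generic illustration and not NAKiD's implementation: the function name, the integer disparities, and the simple hole filling are all assumptions, and the hole filling only stands in for the team's background-filling algorithm.

```python
def render_right_row(left_row, disparity_row):
    """Build one right-eye scanline by shifting left-eye pixels.

    left_row:      pixel values of the left view.
    disparity_row: non-negative integer shift per pixel; nearer objects
                   have larger disparity (assumed to come from the depth step).
    """
    width = len(left_row)
    right = [None] * width
    best = [-1] * width  # largest disparity written to each target pixel
    for x, (color, d) in enumerate(zip(left_row, disparity_row)):
        tx = x - d  # a point appears further left in the right view
        if 0 <= tx < width and d > best[tx]:
            right[tx] = color  # the nearer surface wins an overlap
            best[tx] = d
    # Holes open up to the right of foreground objects. Fill them with the
    # closest rendered pixel on their right, which is usually background.
    fill, have_fill = None, False
    for x in range(width - 1, -1, -1):
        if best[x] >= 0:
            fill, have_fill = right[x], True
        elif have_fill:
            right[x] = fill
    return right


# "F" is a foreground object with disparity 2 in front of background "B".
left = list("BBBFFBBB")
disparity = [0, 0, 0, 2, 2, 0, 0, 0]
right = "".join(render_right_row(left, disparity))  # "BFFBBBBB"
```

The right view costs one pass over already rendered pixels instead of a second full render, which is the saving the paragraph describes. Holes at the right edge of the row are left as `None` in this sketch.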

Professor Noh said, “Although 3D TVs are becoming more and more commercialized, there are not enough programs that can be watched in 3D.” He added, “Stereoscopic conversion technology is receiving high praise in the field of graphics because it allows the easy production of 3D content at low cost.”

2012.10.20 View 11229
