
KAIST Develops Robots That React to Danger Like Humans

<(From left) Ph.D candidate See-On Park, Professor Jongwon Lee, and Professor Shinhyun Choi>

As artificial intelligence and robotics advance together, developing technologies that enable robots to perceive and respond to their surroundings as efficiently as humans has become a crucial task. In this context, Korean researchers are gaining attention for implementing an artificial sensory nervous system that mimics the sensory nervous system of living organisms without the need for separate complex software or circuitry. This breakthrough technology is expected to be applied in fields such as ultra-small robots and robotic prosthetics, where intelligent and energy-efficient responses to external stimuli are essential.

KAIST (President Kwang Hyung Lee) announced on July 15th that a joint research team led by Endowed Chair Professor Shinhyun Choi of the School of Electrical Engineering at KAIST and Professor Jongwon Lee of the Department of Semiconductor Convergence at Chungnam National University (President Jung Kyum Kim) developed a next-generation neuromorphic semiconductor-based artificial sensory nervous system. This system mimics the functions of a living organism's sensory nervous system and enables a new type of robotic system that can efficiently respond to external stimuli.

In nature, animals, including humans, ignore safe or familiar stimuli and selectively react sensitively to important or dangerous ones. This selective response helps prevent unnecessary energy consumption while maintaining rapid awareness of critical signals. For instance, the sound of an air conditioner or the feel of clothing against the skin soon becomes familiar and is disregarded. However, if someone calls your name or a sharp object touches your skin, you quickly focus and respond. These behaviors are regulated by the 'habituation' and 'sensitization' functions of the sensory nervous system. Researchers have long attempted to apply these functions to create robots that respond to external environments as efficiently as humans.

However, implementing complex neural characteristics such as habituation and sensitization in robots has been difficult to reconcile with miniaturization and energy efficiency, because it has required separate software or complex circuitry. In particular, there have been attempts to utilize memristors, a type of neuromorphic semiconductor device. A memristor is a next-generation electrical device that has been widely used as an artificial synapse because it can store analog values in the form of device resistance. However, existing memristors were limited in mimicking the complex characteristics of the nervous system because they allowed only simple monotonic changes in conductivity.

To overcome these limitations, the research team developed a new memristor capable of reproducing complex neural response patterns such as habituation and sensitization within a single device. By introducing additional layers inside the memristor that alter conductivity in opposite directions, the device can more realistically emulate the dynamic synaptic behaviors of a real nervous system, for example, decreasing its response to repeated safe stimuli but quickly regaining sensitivity when a danger signal is detected.

<New memristor mimicking functions of sensory nervous system such as habituation/sensitization>

Using this new memristor, the research team built an artificial sensory nervous system capable of recognizing touch and pain, and applied it to a robotic hand to test its performance. When safe tactile stimuli were repeatedly applied, the robot hand, which initially reacted sensitively to unfamiliar tactile stimuli, gradually showed habituation characteristics by ignoring the stimuli. Later, when stimuli were applied along with an electric shock, it recognized this as a danger signal and showed sensitization characteristics by reacting sensitively again. This experimentally demonstrated that robots can respond to stimuli as efficiently as humans without separate complex software or processors, verifying the feasibility of developing energy-efficient neuro-inspired robots.
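The habituation and sensitization behavior described above can be illustrated with a small toy model. This is only a sketch of the qualitative response pattern, not the device physics or the team's method: the class name `ToySensoryUnit`, the multiplicative decay rule, and every numeric parameter are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ToySensoryUnit:
    """Toy habituation/sensitization model (illustrative only, not the memristor physics)."""

    gain: float = 1.0             # current responsiveness, arbitrary units (assumed)
    habituation: float = 0.6      # gain multiplier applied after each safe stimulus (assumed)
    min_gain: float = 0.05        # floor so the unit never becomes fully silent (assumed)
    sensitized_gain: float = 1.5  # gain restored when a danger signal arrives (assumed)

    def stimulate(self, intensity: float, danger: bool = False) -> float:
        """Return the response to one stimulus and update the internal gain."""
        if danger:
            # Sensitization: a danger signal immediately restores and boosts the gain.
            self.gain = self.sensitized_gain
            return intensity * self.gain
        response = intensity * self.gain
        # Habituation: each safe stimulus lowers the gain used for the next one.
        self.gain = max(self.min_gain, self.gain * self.habituation)
        return response


def run_demo() -> list[float]:
    """Five safe touches, one touch paired with a shock, then one more safe touch."""
    unit = ToySensoryUnit()
    events = [(1.0, False)] * 5 + [(1.0, True), (1.0, False)]
    return [round(unit.stimulate(intensity, danger), 4) for intensity, danger in events]


if __name__ == "__main__":
    print(run_demo())
```

In this sketch the response shrinks with each repeated safe touch and jumps back up once a stimulus is paired with a danger signal, mirroring the sequence used in the robotic hand test. In the actual device this behavior arises inside the memristor itself rather than from software.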

<Robot arm with memristor-based artificial sensory nervous system>

See-On Park, researcher at KAIST, stated, "By mimicking the human sensory nervous system with next-generation semiconductors, we have opened up the possibility of implementing a new concept of robots that are smarter and more energy-efficient in responding to external environments." He added, "This technology is expected to be utilized in various fusion fields of next-generation semiconductors and robotics, such as ultra-small robots, military robots, and medical robots like robotic prosthetics."

This research was published online on July 1st in the international journal 'Nature Communications,' with Ph.D candidate See-On Park as the first author.

Paper Title: Experimental demonstration of third-order memristor-based artificial sensory nervous system for neuro-inspired robotics

DOI: https://doi.org/10.1038/s41467-025-60818-x

This research was supported by the Korea National Research Foundation's Next-Generation Intelligent Semiconductor Technology Development Project, the Mid-Career Researcher Program, the PIM Artificial Intelligence Semiconductor Core Technology Development Project, the Excellent New Researcher Program, and the Nano Convergence Technology Division, National Nanofab Center's (NNFC) Nano-Medical Device Project.

2025.07.16

KAIST to Lead the Way in Nurturing Talent and Driving S&T Innovation for a G3 AI Powerhouse

* Focusing on nurturing talent and R&D to become a G3 AI powerhouse (one of the top three AI nations).

* Leading the realization of an "AI-driven Basic Society for All" and developing technologies that leverage AI to overcome the crisis in Korea's manufacturing sector.

* Over the past 50 years, South Korea has risen from the ashes to become a scientific and technological powerhouse, with KAIST at its core contributing to the development of scientific and technological talent, innovative technology, national industrial growth, and the creation of a startup innovation ecosystem.

As public interest in AI and in science and technology has grown significantly with the inauguration of the new government, KAIST (President Kwang Hyung Lee) announced on June 24th its plan to transform into an "AI-centric, Value-Creating Science and Technology University" that leads national innovation based on science and technology and spearheads solutions to global challenges.

At a time when South Korea is undergoing a major transition to a technology-driven society, KAIST, drawing on its half-century of experience as a "Starter Kit" for national development, is preparing to leap beyond being a mere educational and research institution to become a global innovation hub that creates new social value.

In particular, KAIST has presented a vision for realizing an "AI-driven Basic Society" where all citizens can utilize AI without being left behind, enabling South Korea to become one of the top three AI nations (G3). To achieve this, KAIST leads the "National AI Research Hub" project (headed by Kee Eung Kim) on behalf of South Korea and is dedicated to enhancing industrial competitiveness and solving social problems with AI technology.

< KAIST President Kwang Hyung Lee >

KAIST's research achievements in the AI field are garnering international attention. In the top three machine learning conferences (ICML, NeurIPS, ICLR), KAIST ranked 5th globally and 1st in Asia over the past five years (2020-2024). During the same period, based on the number of papers published in top conferences in machine learning, natural language processing, and computer vision (ICML, NeurIPS, ICLR, ACL, EMNLP, NAACL, CVPR, ICCV, ECCV), KAIST ranked 5th globally and 4th in Asia. Furthermore, KAIST has consistently demonstrated unparalleled research capabilities, ranking 1st globally in the average number of papers accepted at ISSCC (International Solid-State Circuits Conference), the world's most prestigious academic conference on semiconductor integrated circuits, for 19 years (2006-2024).

KAIST is continuously expanding its research into core AI technologies, including hyper-scale AI models (Korean LLM), neuromorphic semiconductors, and low-power AI processors, as well as various application areas such as autonomous driving, urban air mobility (UAM), precision medicine, and explainable AI (XAI).

In the manufacturing sector, KAIST's AI technologies are also driving on-site innovation. Professor Young Jae Jang's team has enhanced productivity in advanced manufacturing fields like semiconductors and displays through digital twins utilizing manufacturing site data and AI-based prediction technology. Professor Song Min Kim's team developed ultra-low power wireless tag technology capable of tracking locations with sub-centimeter precision, accelerating the implementation of smart factories. Technologies such as industrial process optimization and equipment failure prediction developed by INEEJI Co., Ltd., founded by Professor Jaesik Choi, are being rapidly applied in real industrial settings and are yielding results. INEEJI's technology was designated as a national strategic technology in the 'Explainable AI (XAI)' field by the government in March.

< Researchers performing data analysis for AI research >

Practical applications are also emerging in the robotics sector, which is closely linked to AI. Professor Jemin Hwangbo's team from the Department of Mechanical Engineering garnered attention by developing RAIBO 2, a quadrupedal robot usable in high-risk environments such as disaster relief and rough terrain exploration. Professor Kyoung Chul Kong's team and Angel Robotics Co., Ltd. developed the WalkOn Suit exoskeleton robot, significantly improving the quality of life for individuals with complete lower body paralysis or walking disabilities.

Additionally, remarkable research is ongoing in future core technology areas such as AI semiconductors, quantum cryptography communication, ultra-small satellites, hydrogen fuel cells, next-generation batteries, and biomimetic sensors. Notably, space exploration technology based on small satellites, asteroid exploration projects, energy harvesting, and high-speed charging technologies are gaining attention.

In advanced bio and life sciences in particular, KAIST is collaborating with Germany's Merck on various research initiatives, including synthetic biology and mRNA. KAIST is also contributing to the construction of a 430 billion won Merck Bio-Center in Daejeon, thereby stimulating the local economy and creating jobs.

Based on these cutting-edge research capabilities, KAIST continues to expand its influence not only within industry but also on the global stage. It has established strategic partnerships with leading universities worldwide, including MIT, Stanford University, and New York University (NYU). Notably, KAIST and NYU have established a joint campus in New York to strengthen personnel exchange and collaborative research. Active industry-academia collaborations with global companies such as Google, Intel, and TSMC are also ongoing, playing a pivotal role in future technology development and the creation of an innovation ecosystem.

These activities also feed a strong startup ecosystem that drives South Korean industries. The flow of startups, which began with companies like Qnix Computer, Nexon, and Naver, has expanded to a total of 1,914 companies to date. Their cumulative assets amount to 94 trillion won and their sales to 36 trillion won, and they employ approximately 60,000 people. Over 90% of these are technology-based startups originating from faculty and student labs, demonstrating a model that makes a tangible economic contribution based on science and technology.

< Students at work >

Having consistently generated diverse achievements, KAIST has already produced approximately 80,000 "KAISTians" who have created innovation through challenge and failure, and is currently recruiting new talent to continue driving innovation that transforms South Korea and the world.

President Kwang Hyung Lee emphasized, "KAIST will establish itself as a global leader in science and technology, designing the future of South Korea and humanity and creating tangible value." He added, "We will focus on talent nurturing and research and development to realize the new government's national agenda of becoming a G3 AI powerhouse."

He further stated, "KAIST's vision for the AI field, on which it places particular emphasis, is to strive for a society where everyone can freely utilize AI. We will contribute to significantly boosting productivity by recovering manufacturing competitiveness through AI and actively disseminating physical AI, AI robots, and AI mobility technologies to industrial sites."

2025.06.24

“For the First Time, We Shared a Meaningful Exchange”: KAIST Develops an AI App to Help Parents and Minimally Verbal Autistic Children Connect

• KAIST teams up with NAVER AI Lab and Dodakim Child Development Center to develop ‘AACessTalk’, an AI-driven communication tool bridging the gap between children with autism and their parents

• The project earned the prestigious Best Paper Award at ACM CHI 2025, the premier international conference in human-computer interaction

• Families share heartwarming stories of breakthrough communication and newfound understanding.

< Photo 1. (From left) Professor Hwajung Hong and Doctoral candidate Dasom Choi of the Department of Industrial Design with SoHyun Park and Young-Ho Kim of Naver Cloud AI Lab >

For many families of minimally verbal autistic (MVA) children, communication often feels like an uphill battle. But now, thanks to a new AI-powered app developed by researchers at KAIST in collaboration with NAVER AI Lab and Dodakim Child Development Center, parents are finally experiencing moments of genuine connection with their children.

On the 16th, the KAIST (President Kwang Hyung Lee) research team, led by Professor Hwajung Hong of the Department of Industrial Design, announced the development of ‘AACessTalk,’ an artificial intelligence (AI)-based communication tool that enables genuine communication between children with autism and their parents.

This research was recognized for its human-centered AI approach and received international attention, earning the Best Paper Award at ACM CHI 2025*, an international conference held in Yokohama, Japan.

* ACM CHI (ACM Conference on Human Factors in Computing Systems) 2025: One of the world's most prestigious academic conferences in the field of Human-Computer Interaction (HCI).

This year, approximately 1,200 papers were selected out of about 5,000 submissions, with the Best Paper Award given to only the top 1%. The conference, which drew over 5,000 researchers, was the largest in its history, reflecting the growing interest in ‘Human-AI Interaction.’

Called AACessTalk, the app offers personalized vocabulary cards tailored to each child’s interests and context, while guiding parents through conversations with customized prompts. This creates a space where children’s voices can finally be heard—and where parents and children can connect on a deeper level.

Traditional augmentative and alternative communication (AAC) tools have relied heavily on fixed card systems that often fail to capture the subtle emotions and shifting interests of children with autism. AACessTalk breaks new ground by integrating AI technology that adapts in real time to the child’s mood and environment.

< Figure. Schematics of AACessTalk system. It provides personalized vocabulary cards for children with autism and context-based conversation guides for parents to focus on practical communication. Large ‘Turn Pass Button’ is placed at the child’s side to allow the child to lead the conversation. >

Among its standout features is a large ‘Turn Pass Button’ that gives children control over when to start or end conversations—allowing them to lead with agency. Another feature, the “What about Mom/Dad?” button, encourages children to ask about their parents’ thoughts, fostering mutual engagement in dialogue, something many children had never done before.

One parent shared, “For the first time, we shared a meaningful exchange.” Such stories were common among the 11 families who participated in a two-week pilot study, where children used the app to take more initiative in conversations and parents discovered new layers of their children’s language abilities.

Parents also reported moments of surprise and joy when their children used unexpected words or took the lead in conversations, breaking free from repetitive patterns. “I was amazed when my child used a word I hadn’t heard before. It helped me understand them in a whole new way,” recalled one caregiver.

Professor Hwajung Hong, who led the research at KAIST’s Department of Industrial Design, emphasized the importance of empowering children to express their own voices. “This study shows that AI can be more than a communication aid—it can be a bridge to genuine connection and understanding within families,” she said.

Looking ahead, the team plans to refine and expand human-centered AI technologies that honor neurodiversity, with a focus on bringing practical solutions to socially vulnerable groups and enriching user experiences.

This research is the result of KAIST Department of Industrial Design doctoral student Dasom Choi's internship at NAVER AI Lab.

* Paper Title: AACessTalk: Fostering Communication between Minimally Verbal Autistic Children and Parents with Contextual Guidance and Card Recommendation

* DOI: 10.1145/3706598.3713792

* Main Author Information: Dasom Choi (KAIST, NAVER AI Lab, First Author), SoHyun Park (NAVER AI Lab), Kyungah Lee (Dodakim Child Development Center), Hwajung Hong (KAIST), and Young-Ho Kim (NAVER AI Lab, Corresponding Author)

This research was supported by the NAVER AI Lab internship program and grants from the National Research Foundation of Korea: the Doctoral Student Research Encouragement Grant (NRF-2024S1A5B5A19043580) and the Mid-Career Researcher Support Program for the Development of a Generative AI-Based Augmentative and Alternative Communication System for Autism Spectrum Disorder (RS-2024-00458557).

2025.05.19

KAIST & CMU Unveil Amuse, a Songwriting AI-Collaborator to Help Create Music

Wouldn't it be great if music creators had someone to brainstorm with, help them when they're stuck, and explore different musical directions together? Researchers at KAIST and Carnegie Mellon University (CMU) have developed an AI technology that works like a fellow songwriter who helps create music.

A research team led by Professor Sung-Ju Lee of the School of Electrical Engineering at KAIST (President Kwang-Hyung Lee), in collaboration with CMU, has developed Amuse, an AI-based music creation support system. The research was presented at the ACM Conference on Human Factors in Computing Systems (CHI), one of the world’s top conferences in human-computer interaction, held in Yokohama, Japan from April 26 to May 1. It received the Best Paper Award, given to only the top 1% of all submissions.

< (From left) Professor Chris Donahue of Carnegie Mellon University, Ph.D. Student Yewon Kim and Professor Sung-Ju Lee of the School of Electrical Engineering >

Amuse, the system developed by Professor Sung-Ju Lee’s research team, converts various forms of inspiration, such as text, images, and audio, into harmonic structures (chord progressions) to support composition.

For example, if a user inputs a phrase, image, or sound clip such as “memories of a warm summer beach”, Amuse automatically generates and suggests chord progressions that match the inspiration.

Unlike existing generative AI, Amuse respects the user's creative flow and encourages creative exploration through an interactive method that lets the user flexibly integrate and modify AI suggestions.

The core technology of the Amuse system is a generation method that blends two approaches: a large language model creates chord progressions based on the user's prompt and inspiration, while another AI model, trained on real music data, filters out awkward or unnatural results using rejection sampling.

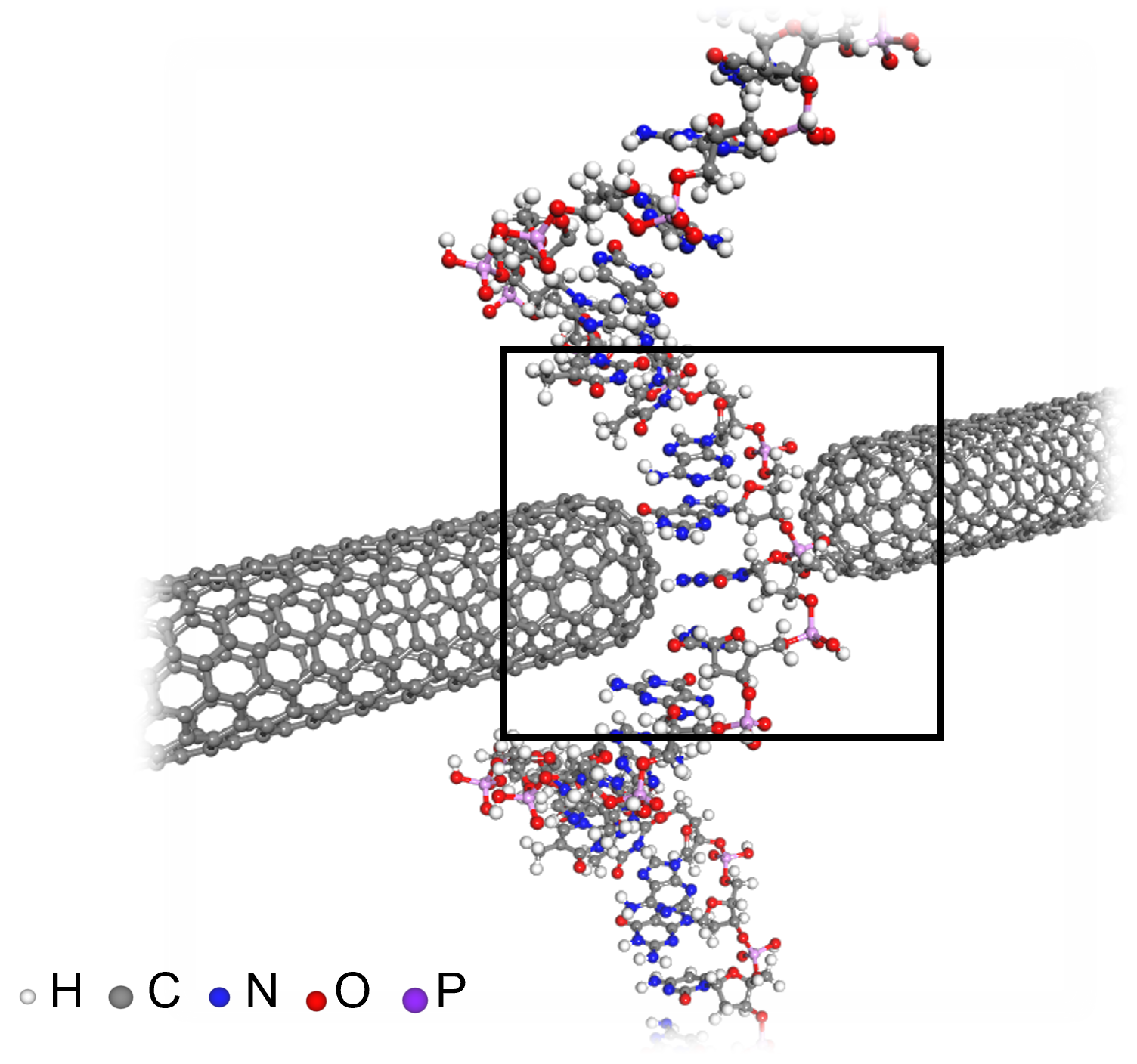

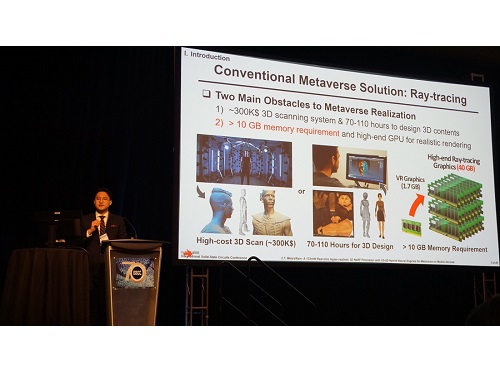

< Figure 1. Amuse system configuration. After extracting music keywords from user input, a large language model-based chord progression is generated and refined through rejection sampling (left). Chord extraction from audio input is also possible (right). The bottom is an example visualizing the chord structure of the generated progression. >
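The two-stage generation described above can be illustrated with a minimal rejection-sampling sketch. Everything in it is a hypothetical stand-in rather than the Amuse implementation: `propose` plays the role of the language model by drawing random progressions, and the `PLAUSIBILITY` table, with invented values, plays the role of the model trained on real music data. Each proposal is kept with a probability equal to its plausibility, so implausible progressions are filtered out.

```python
import random

CHORDS = ["C", "F", "G", "Am"]  # hypothetical toy vocabulary

# Invented plausibility of moving from one chord to the next, in [0, 1].
# The 0.0 entry is an arbitrary example of a transition that is always rejected.
PLAUSIBILITY = {
    ("C", "C"): 0.3, ("C", "F"): 0.9, ("C", "G"): 0.9, ("C", "Am"): 0.8,
    ("F", "C"): 0.8, ("F", "F"): 0.3, ("F", "G"): 0.9, ("F", "Am"): 0.5,
    ("G", "C"): 1.0, ("G", "F"): 0.0, ("G", "G"): 0.3, ("G", "Am"): 0.7,
    ("Am", "C"): 0.5, ("Am", "F"): 0.9, ("Am", "G"): 0.6, ("Am", "Am"): 0.3,
}


def propose(rng, length=4):
    """Stand-in for the language model: propose a random chord progression."""
    return [rng.choice(CHORDS) for _ in range(length)]


def acceptance_probability(progression):
    """Multiply the plausibility of every consecutive chord pair."""
    prob = 1.0
    for prev, nxt in zip(progression, progression[1:]):
        prob *= PLAUSIBILITY[(prev, nxt)]
    return prob


def rejection_sample(n_samples, seed=0, max_tries=10_000):
    """Keep proposing until n_samples progressions are accepted or tries run out."""
    rng = random.Random(seed)
    accepted = []
    for _ in range(max_tries):
        if len(accepted) == n_samples:
            break
        candidate = propose(rng)
        # Accept with probability equal to the candidate's plausibility.
        if rng.random() < acceptance_probability(candidate):
            accepted.append(candidate)
    return accepted


for progression in rejection_sample(3):
    print(" - ".join(progression))
```

The accepted progressions follow a distribution proportional to the proposal probability times the plausibility score, which is how the second model can reshape the first model's output without retraining it.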

The research team conducted a user study with actual musicians and assessed Amuse as having high potential as a creative companion, or Co-Creative AI, a concept in which people and AI collaborate rather than having a generative AI simply put together a song.

The paper, written by Ph.D. student Yewon Kim and Professor Sung-Ju Lee of the KAIST School of Electrical Engineering together with Professor Chris Donahue of Carnegie Mellon University, demonstrated the potential of creative AI system design in both academia and industry.

※ Paper title: Amuse: Human-AI Collaborative Songwriting with Multimodal Inspirations

※ DOI: https://doi.org/10.1145/3706598.3713818

※ Research demo video: https://youtu.be/udilkRSnftI?si=FNXccC9EjxHOCrm1

※ Research homepage: https://nmsl.kaist.ac.kr/projects/amuse/

Professor Sung-Ju Lee said, “Recent generative AI technology has raised concerns because it can directly imitate copyrighted content, thereby violating the creator’s copyright, or generate results one-sidedly regardless of the creator’s intention. Aware of this trend, the research team paid attention to what creators actually need and focused on designing an AI system centered on the creator.”

He continued, “Amuse is an attempt to explore the possibility of collaboration with AI while maintaining the initiative of the creator, and is expected to be a starting point for suggesting a more creator-friendly direction in the development of music creation tools and generative AI systems in the future.”

This research was conducted with the support of the National Research Foundation of Korea with funding from the government (Ministry of Science and ICT) (RS-2024-00337007).

2025.05.07 View 6427

KAIST, Galaxy Corporation Hold Signboard Ceremony for ‘AI Entertech Research Center’

KAIST (President Kwang-Hyung Lee) announced on the 9th that it will hold a signboard ceremony for the establishment of the ‘AI Entertech Research Center’ with the artificial intelligence entertech company, Galaxy Corporation (CEO Yong-ho Choi) at the main campus of KAIST.

< (Galaxy Corporation, from center to the left) CEO Yongho Choi, Director Hyunjung Kim and related persons / (KAIST, from center to the right) Professor SeungSeob Lee of the Department of Mechanical Engineering, Provost and Executive Vice President Gyun Min Lee, Dean Jung Kim of the Department of Mechanical Engineering and Professor Yong Jin Yoon of the same department >

This collaboration is a part of KAIST’s art convergence research strategy and is an extension of its efforts to lead future K-Culture through the development of creative cultural content based on science and technology. Beyond simple technological development, KAIST has been continuously implementing the convergence model of ‘Tech-Art’ that expands the horizon of the content industry through the fusion of emotional technology and cultural imagination.

Previously, KAIST established the ‘Sumi Jo Performing Arts Research Center’ in collaboration with world-renowned soprano Sumi Jo, a visiting professor, and has been leading convergence research in art and engineering, such as AI-based interactive performance technology and immersive content. The establishment of the ‘AI Entertech Research Center’ is now regarded as a new challenge for the technological expansion of the K-content industry.

In addition, the role of singer G-Dragon (real name Kwon Ji-yong), an artist affiliated with Galaxy Corporation and a visiting professor in the Department of Mechanical Engineering at KAIST, was also a major factor. Since being appointed to KAIST last year, Professor Kwon has been actively promoting the establishment of a research center and soliciting KAIST research projects through his agency to develop the ‘AI Entertech’ field, which fuses entertainment and cutting-edge technology.

The AI Entertech Research Center is scheduled to officially launch in the third quarter of this year, and the signboard ceremony was timed to coincide with Professor Kwon Ji-yong’s visit to KAIST. Galaxy Corporation was recently the only entertech company to hold a private meeting with Microsoft (MS) CEO Nadella, and it is actively promoting the globalization of AI entertech. In addition, it has maintained a cooperative relationship with KAIST since last year and, through the establishment of the research center, plans to actively pursue a convergence of entertech and technology that transcends time and space.

Professor Kwon Ji-yong will attend the ‘Innovate Korea 2025’ event co-hosted by KAIST, Herald Media Group, and the National Research Council of Science and Technology, held at the KAIST Lyu Keun-Chul Sports Complex on the afternoon of the same day, and will give a special talk on the topic of ‘The Future of AI Entertech.’ In addition to Professor Kwon, Professor SeungSeob Lee of the Department of Mechanical Engineering at KAIST, Professor Sang-gyun Kim of Kyung Hee University, and CEO Yong-ho Choi of Galaxy Corporation will also participate in this talk show.

The two organizations signed an MOU last year to jointly research science and technology for the global spread of K-pop, and the establishment of this research center is the first tangible result of this. Once the research center is fully operational, various projects such as the development of an AI-based entertech platform and joint research on global content technology will be promoted.

< A photo of Professor Kwon Ji-yong (right) at the talk show with KAIST President Kwang-Hyung Lee (left) the previous year >

Yong-ho Choi, Galaxy Corporation CHO (Chief Happiness Officer), said, “This collaboration is the starting point for providing a completely new entertainment experience to fans around the world by grafting KAIST AI and cutting-edge technologies onto the fandom platform,” and added, “The convergence of AI and entertech is not just technological advancement; it is a driving force for innovation that enriches human life.”

Kwang-Hyung Lee, KAIST President, said, “I am confident that KAIST’s scientific and technological capabilities, combined with Professor Kwon Ji-yong’s global sensibility, will lead the technological evolution of K-culture,” and added, “I hope that KAIST’s spirit of challenge and research DNA will create a new wave in the entertech market.”

Meanwhile, Galaxy Corporation, the agency of Professor G-Dragon Kwon Ji-yong, is an AI entertainment technology company that presents a new paradigm based on IP, media, tech, and entertainment convergence technology.

2025.04.09 View 4949

KAIST Research Team Develops an AI Framework Capable of Overcoming the Strength-Ductility Dilemma in Additive-manufactured Titanium Alloys

<(From Left) Ph.D. Student Jaejung Park and Professor Seungchul Lee of KAIST Department of Mechanical Engineering, Professor Hyoung Seop Kim of POSTECH, and M.S.–Ph.D. Integrated Program Student Jeong Ah Lee of POSTECH. >

The KAIST research team led by Professor Seungchul Lee from the Department of Mechanical Engineering, in collaboration with Professor Hyoung Seop Kim’s team at POSTECH, successfully overcame the strength–ductility dilemma of Ti-6Al-4V alloy using artificial intelligence, enabling the production of high-strength, high-ductility metal products. The AI developed by the team accurately predicts mechanical properties based on various 3D printing process parameters while also providing uncertainty information, and it uses both to recommend process parameters that hold high promise for 3D printing.

Among various 3D printing technologies, laser powder bed fusion is an innovative method for manufacturing Ti-6Al-4V alloy, renowned for its high strength and bio-compatibility. However, this alloy made via 3D printing has traditionally faced challenges in simultaneously achieving high strength and high ductility. Although there have been attempts to address this issue by adjusting both the printing process parameters and heat treatment conditions, the vast number of possible combinations made it difficult to explore them all through experiments and simulations alone.

The active learning framework developed by the team quickly explores a wide range of 3D printing process parameters and heat treatment conditions to recommend those expected to improve both strength and ductility of the alloy. These recommendations are based on the AI model’s predictions of ultimate tensile strength and total elongation along with associated uncertainty information for each set of process parameters and heat treatment conditions. The recommended conditions are then validated by performing 3D printing and tensile tests to obtain the true mechanical property values. These new data are incorporated into further AI model training, and through iterative exploration, the optimal process parameters and heat treatment conditions for producing high-performance alloys were determined in only five iterations. With these optimized conditions, the 3D printed Ti-6Al-4V alloy achieved an ultimate tensile strength of 1190 MPa and a total elongation of 16.5%, successfully overcoming the strength–ductility dilemma.
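The loop described above (predict with uncertainty, recommend, validate by experiment, retrain) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the team's framework: `run_experiment` is a hypothetical stand-in for printing and tensile-testing a sample, with invented formulas and two normalized parameters (here called power and temperature); the surrogate is a simple bootstrap ensemble of inverse-distance-weighted predictors; and the scoring rule, which adds the optimistic estimates of both properties, is an assumed choice.

```python
import random
import statistics


def run_experiment(power, temp):
    """Hypothetical stand-in for printing and tensile-testing one sample.
    The formulas are invented for illustration; they are not material data."""
    strength = 1000 + 200 * power - 150 * temp
    elongation = 8 + 10 * temp - 4 * (power - 0.5) ** 2
    return strength, elongation


def idw_predict(data, x, target):
    """Inverse-distance-weighted prediction of one property from measured points."""
    num = den = 0.0
    for point, values in data:
        d2 = (point[0] - x[0]) ** 2 + (point[1] - x[1]) ** 2
        weight = 1.0 / (d2 + 1e-6)
        num += weight * values[target]
        den += weight
    return num / den


def predict_with_uncertainty(data, x, target, rng, n_models=20):
    """Bootstrap ensemble: the mean is the prediction, the spread the uncertainty."""
    preds = []
    for _ in range(n_models):
        resample = [rng.choice(data) for _ in data]
        preds.append(idw_predict(resample, x, target))
    return statistics.mean(preds), statistics.pstdev(preds)


def recommend(data, candidates, rng, kappa=1.0):
    """Pick the unmeasured candidate with the best optimistic combined score."""
    measured = {point for point, _ in data}
    best, best_score = None, float("-inf")
    for x in candidates:
        if x in measured:
            continue
        s_mean, s_std = predict_with_uncertainty(data, x, 0, rng)
        e_mean, e_std = predict_with_uncertainty(data, x, 1, rng)
        # Rough scaling so both properties contribute comparably (assumed).
        score = (s_mean + kappa * s_std) / 1000 + (e_mean + kappa * e_std) / 10
        if score > best_score:
            best, best_score = x, score
    return best


def active_learning(n_iterations=5, seed=0):
    rng = random.Random(seed)
    grid = [i / 4 for i in range(5)]  # normalized parameter levels from 0 to 1
    candidates = [(p, t) for p in grid for t in grid]
    # Start from a few randomly chosen, already "measured" conditions.
    data = [(x, run_experiment(*x)) for x in rng.sample(candidates, 4)]
    for _ in range(n_iterations):
        x = recommend(data, candidates, rng)
        data.append((x, run_experiment(*x)))  # validate and add to the training data
    return data


for point, (strength, elongation) in active_learning():
    print(point, round(strength, 1), round(elongation, 2))
```

Adding the uncertainty term to the score makes the loop willing to try poorly explored conditions, which is what lets an active learning scheme cover a large parameter space in few experimental rounds.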

Professor Seungchul Lee commented, “In this study, by optimizing the 3D printing process parameters and heat treatment conditions, we were able to develop a high-strength, high-ductility Ti-6Al-4V alloy with a minimal number of experimental trials. Compared to previous studies, we produced an alloy with a similar ultimate tensile strength but higher total elongation, as well as one with a similar elongation but greater ultimate tensile strength.” He added, “Furthermore, if our approach is applied not only to mechanical properties but also to other properties such as thermal conductivity and thermal expansion, we anticipate that it will enable efficient exploration of 3D printing process parameters and heat treatment conditions.”

This study was published in Nature Communications on January 22 (https://doi.org/10.1038/s41467-025-56267-1), and the research was supported by the National Research Foundation of Korea’s Nano & Material Technology Development Program and the Leading Research Center Program.

2025.02.21 View 6451

KAIST Proposes a New Way to Circumvent a Long-time Frustration in Neural Computing

The human brain begins learning through spontaneous random activities even before it receives sensory information from the external world. The technology developed by the KAIST research team enables much faster and more accurate learning when exposed to actual data by pre-learning random information in a brain-mimicking artificial neural network, and is expected to be a breakthrough in the development of brain-based artificial intelligence and neuromorphic computing technology in the future.

KAIST (President Kwang-Hyung Lee) announced on the 16th of December that Professor Se-Bum Paik's research team in the Department of Brain and Cognitive Sciences solved the weight transport problem*, a long-standing challenge in neural network learning, and through this, explained the principles that enable resource-efficient learning in biological brain neural networks.

*Weight transport problem: This is the biggest obstacle to the development of artificial intelligence that mimics the biological brain. It is the fundamental reason why large-scale memory and computational work are required in the learning of general artificial neural networks, unlike biological brains.

Over the past several decades, the development of artificial intelligence has been based on error backpropagation learning proposed by Geoffrey Hinton, who won the Nobel Prize in Physics this year. However, error backpropagation learning was thought to be impossible in biological brains because it requires the unrealistic assumption that individual neurons must know all the connected information across multiple layers in order to calculate the error signal for learning.
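To make the assumption concrete, the backward pass of backpropagation can be written as follows. The notation is an assumption introduced here for illustration and does not come from the article: $W_l$ are the forward weights of layer $l$, $z_l$ and $h_l$ its pre-activation and output, $\phi$ the activation function, $\delta_l$ the error signal, and $\eta$ the learning rate.

```latex
\delta_l = \left( W_{l+1}^{\top}\, \delta_{l+1} \right) \odot \phi'(z_l),
\qquad
\Delta W_l = -\eta\, \delta_l\, h_{l-1}^{\top}
```

The error signal of layer $l$ depends on the transpose of the forward weights $W_{l+1}$ of the next layer. A biological neuron would therefore need access to the strengths of synapses further downstream, which is the information the article describes as unrealistic to assume.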

< Figure 1. Illustration depicting the method of random noise training and its effects >

This difficult problem, called the weight transport problem, was raised by Francis Crick, who won the Nobel Prize in Physiology or Medicine for the discovery of the structure of DNA, after error backpropagation learning was proposed by Hinton in 1986. Since then, it has been considered the reason why the operating principles of natural neural networks and artificial neural networks will forever be fundamentally different.

At the boundary between artificial intelligence and neuroscience, researchers including Hinton have continued to attempt to create biologically plausible models that can implement the learning principles of the brain by solving the weight transport problem.

In 2016, a joint research team from Oxford University and DeepMind in the UK first proposed the concept of error backpropagation learning being possible without weight transport, drawing attention from the academic world. However, biologically plausible error backpropagation learning without weight transport was inefficient, with slow learning speeds and low accuracy, making it difficult to apply in reality.

The KAIST research team noted that the biological brain begins learning through internal spontaneous random neural activity even before it has any external sensory experience. To mimic this, the research team pre-trained a biologically plausible neural network without weight transport on meaningless random information (random noise).

As a result, they showed that the symmetry of the forward and backward neural cell connections of the neural network, which is an essential condition for error backpropagation learning, can be created. In other words, learning without weight transport is possible through random pre-training.
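The idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the research team's code: it uses the scheme commonly called feedback alignment, in which a fixed random matrix `B` carries the error backward in place of the transposed forward weights. The function names (`fa_step`, `alignment`, `pretrain_on_noise`), the tanh hidden layer, the squared-error loss, the layer sizes, and the learning rate are all hypothetical choices made for the example.

```python
import math
import random

def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def alignment(W2, B):
    """Cosine similarity between forward weights W2 (out x hid) and the
    transpose of the feedback weights B (hid x out)."""
    dot = sum(W2[o][h] * B[h][o] for o in range(len(W2)) for h in range(len(B)))
    nw = math.sqrt(sum(w * w for row in W2 for w in row))
    nb = math.sqrt(sum(b * b for row in B for b in row))
    return dot / (nw * nb) if nw > 0 and nb > 0 else 0.0

def fa_step(W1, W2, B, x, t, lr):
    """One in-place update on a single sample without weight transport."""
    h = [math.tanh(z) for z in matvec(W1, x)]          # hidden activity
    y = matvec(W2, h)                                  # linear output
    e = [yi - ti for yi, ti in zip(y, t)]              # output error
    fb = matvec(B, e)                                  # routed through fixed B, not W2 transposed
    d = [f * (1.0 - hi * hi) for f, hi in zip(fb, h)]  # tanh'(z) = 1 - tanh(z)^2
    for o in range(len(W2)):
        for j in range(len(h)):
            W2[o][j] -= lr * e[o] * h[j]
    for j in range(len(W1)):
        for i in range(len(x)):
            W1[j][i] -= lr * d[j] * x[i]
    return e

def pretrain_on_noise(n_in=8, n_hid=16, n_out=4, steps=500, lr=0.02, seed=0):
    """Train on Gaussian noise inputs paired with unrelated random labels
    (assumed setup) and report the alignment before and after."""
    rng = random.Random(seed)
    W1 = [[rng.gauss(0, 0.1) for _ in range(n_in)] for _ in range(n_hid)]
    W2 = [[rng.gauss(0, 0.1) for _ in range(n_hid)] for _ in range(n_out)]
    B = [[rng.gauss(0, 1.0) for _ in range(n_out)] for _ in range(n_hid)]  # never updated
    before = alignment(W2, B)
    for _ in range(steps):
        x = [rng.gauss(0, 1) for _ in range(n_in)]     # meaningless input
        t = [0.0] * n_out
        t[rng.randrange(n_out)] = 1.0                  # random one-hot label
        fa_step(W1, W2, B, x, t, lr)
    return before, alignment(W2, B)

if __name__ == "__main__":
    before, after = pretrain_on_noise()
    print(f"alignment before: {before:.3f}, after: {after:.3f}")
```

The quantity returned by `alignment` is one possible way to measure the forward and backward symmetry described above: a value near 1 would mean the forward weights have come to resemble the transposed feedback weights. This toy version only shows where `B` replaces the transposed forward weights, and its numbers should not be read as reproducing the reported results.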

< Figure 2. Illustration depicting the meta-learning effect of random noise training >

The research team revealed that learning random information before learning actual data has the property of meta-learning, which is ‘learning how to learn.’ It was shown that neural networks that pre-learned random noise perform much faster and more accurate learning when exposed to actual data, and can achieve high learning efficiency without weight transport.

< Figure 3. Illustration depicting research on understanding the brain's operating principles through artificial neural networks >

Professor Se-Bum Paik said, “It breaks the conventional understanding of existing machine learning that only data learning is important, and provides a new perspective that focuses on the neuroscience principles of creating appropriate conditions before learning,” and added, “It is significant in that it solves important problems in artificial neural network learning through clues from developmental neuroscience, and at the same time provides insight into the brain’s learning principles through artificial neural network models.”

This study, in which Jeonghwan Cheon, a master’s candidate in the KAIST Department of Brain and Cognitive Sciences, participated as the first author and Professor Sang Wan Lee of the same department as a co-author, was presented at the 38th Conference on Neural Information Processing Systems (NeurIPS), the world's top artificial intelligence conference, on December 14th in Vancouver, Canada. (Paper title: Pretraining with random noise for fast and robust learning without weight transport)

This study was conducted with the support of the National Research Foundation of Korea's Basic Research Program in Science and Engineering, the Information and Communications Technology Planning and Evaluation Institute's Talent Development Program, and the KAIST Singularity Professor Program.

2024.12.16 View 8619

KAIST Awarded Presidential Commendation for Contributions in Software Industry

- At the “25th Software Industry Day” celebration held in the afternoon on Monday, December 2nd, 2024 at Yangjae L Tower in Seoul

- KAIST was awarded the “Presidential Commendation” in the Group Category for its contributions to the advancement of the software industry

- Korea’s first AI master’s and doctoral degree program opened at KAIST Kim Jaechul Graduate School of AI

- Focus on training non-major developers through the SW Officer Training Academy "Jungle", the Machine Learning Engineer Bootcamp, and other programs, fostering talents who can combine development with collaboration and advanced talents in the latest AI technologies

- Professor Minjoon Seo of KAIST Kim Jaechul Graduate School of AI received the Prime Minister’s Commendation for his contributions to the advancement of the software industry

< Photo 1. Professor Kyung-soo Kim, the Senior Vice President for Planning and Budget (second from the left) and the Manager of Planning Team, Mr. Sunghoon Jung, stand at the stage after receiving the Presidential Commendation as KAIST was selected as one of the groups that contributed to the advancement of the software industry at the "25th Software Industry Day" celebration. >

“KAIST has been leading the way toward the grand goal of fostering 1 million AI talents in Korea by providing a wide range of educational opportunities, from developing the capabilities of experts with no computer science background to fostering advanced professionals. I would like to thank all members of the KAIST community who worked hard to achieve the great feat of receiving the Presidential Commendation.” (KAIST President Kwang Hyung Lee)

KAIST (President Kwang Hyung Lee) announced on December 3rd that it was selected as a group that contributed to the advancement of the software industry at the “2024 Software Industry Day” celebration held at the Yangjae El Tower in Seoul on the 2nd of December and received a presidential commendation.

The “Software Industry Day”, hosted by the Ministry of Science and ICT and organized by the National IT Industry Promotion Agency and the Korea Software Industry Association, is an event designed to promote the status of software industry workers in Korea and to honor their achievements.

Every year, those who have made significant contributions to policy development, human resource development, and export growth for industry revitalization are selected and awarded the ‘Software Industry Development Contribution Award.’

KAIST was recognized for its contribution to developing a demand-based, industrial field-centric curriculum and fostering non-major developers and convergence talents with the goal of expanding software value and fostering excellent human resources.

< Photo 2. Senior Vice President for Planning and Budget Kyung-soo Kim receiving the commendation as the representative of KAIST >

Specifically, it first opened the SW Officer Training Academy "Jungle" to foster developers who can handle both coding and the human interaction that collaboration requires. This is a non-degree program that provides 5 months of intensive study and assignments for graduates and intellectuals without prior knowledge of computer science.

KAIST Kim Jaechul Graduate School of AI opened and operated Korea’s first master's and doctoral degree program in the field of artificial intelligence. In addition, it planned a “Machine Learning Engineers’ Boot Camp” and conducted lectures and practical training for a total of 16 weeks on the latest AI technologies such as deep learning basics and large language models. The boot camp aims to strengthen the practical capabilities of start-up companies while lowering the barrier for companies to introduce AI technology.

Also, KAIST was selected to participate in the 1st and 2nd stages of the Software-centered University Project and has been taking part in the project since 2016. Through this, it was highly evaluated for promoting a curriculum based on the latest technology, an autonomous system in which students directly select their integrated education, and the expansion of internships.

< Photo 3. Professor Minjoon Seo of Kim Jaechul Graduate School of AI, who received the Prime Minister's Commendation for his contribution to the advancement of the software industry on the same day >

At the awards ceremony that day, Professor Minjoon Seo of KAIST Kim Jaechul Graduate School of AI also received the Prime Minister's Commendation for his contribution to the advancement of the software industry. Professor Seo was recognized for his leading research achievements in the fields of AI and natural language processing, having published 28 papers in top international AI conferences over the past four years.