KAIST sends out Music and Bio-Signs of Professor Kwon Ji-yong, a.k.a. G-Dragon, into Space to Pulsate through Universe and Resonate among Stars

KAIST (President Kwang-Hyung Lee) announced on April 10th that it had successfully carried out the world’s first media-art-based ‘Space Sound Source Transmission Project’ at the KAIST Space Research Institute on April 9th. The project was a collaboration between Professor Jinjoon Lee of the Graduate School of Culture Technology, a world-renowned media artist, and the global K-Pop artist G-Dragon.

This project was proposed as part of the ‘AI Entertech Research Center’ initiative being pursued by KAIST and Galaxy Corporation. It transmits into space, for the first time in the world, the message and sound of G-Dragon (real name Kwon Ji-yong), a singer-songwriter affiliated with Galaxy Corporation and a visiting professor in the Department of Mechanical Engineering at KAIST.

This convergence project combines science, technology, art, and popular music. It is a new kind of ‘space culture content’ experiment that connects KAIST’s cutting-edge space technology, Professor Jinjoon Lee’s media art work, and G-Dragon’s voice and music, including his latest digital single, “HOME SWEET HOME.”

< Photo 1. Imagery from “Iris,” part of Professor Jinjoon Lee's Open Your Eyes Project, projected on the 13m space antenna at the Space Research Institute >

This collaboration was planned around the theme of ‘emotional signals that expand the inner universe of humans to the outer universe.’ The image of G-Dragon’s iris, treated as a window into the soul that symbolizes his uniqueness and identity, was augmented through AI, and the new song “Home Sweet Home” was combined with it as an audio message carrying the vibration of that emotion.

The combined work was then transmitted into space using a next-generation small satellite developed by KAIST Space Research Institute, completing a symbolic performance in which an individual’s inner universe is transmitted to outer space.

Professor Jinjoon Lee’s cinematic media art work “Iris” was unveiled at the site. It was screened in a world-first projection mapping* presentation on KAIST Space Research Institute’s 13m space antenna. The video was created with generative artificial intelligence (AI) technology from the image of G-Dragon's iris and combined with sound built from data of the ringing of the Emile Bell, a bell that holds a thousand years of history, to present an emotional art experience that transcends time and space.

*Projection Mapping: A technology that projects light and images onto actual structures to create visual changes. It is a method of expression that artistically reinterprets space.

This work is one of the major research achievements of KAIST TX Lab and Professor Lee in new media technology built on biometric data such as iris, heartbeat, and brain waves.

Professor Jinjoon Lee said, "The iris is a symbol that reflects inner emotions and identity, so much so that it is called the 'mirror of the soul,' and this work sought to express 'the infinite universe seen from the inside of humanity' through G-Dragon's gaze."

< Photo 2. (From left) Professor Jinjoon Lee of the Graduate School of Culture Technology and G-Dragon (Visiting Professor Kwon Ji-yong of the Department of Mechanical Engineering) >

He continued, “The universe is a realm of technology as well as a stage for imagination and emotion, and I look forward to an encounter with the unknown through a new attempt to speak of art in the language of science including AI and imagine science in the form of art.”

“G-Dragon’s voice and music have now begun their journey to space,” said Yong-ho Choi, Galaxy Corporation’s Chief Happiness Officer (CHO). “This project is an act of leaving music as a legacy for humanity, while also having an important meaning of attempting to communicate with space.” Choi added, “This is a pioneering step to introduce human culture to space, and it will remain as a monumental performance that opens a new chapter in the history of music comparable to the Beatles.”

Galaxy Corporation is leading the future entertainment technology industry through its collaboration with KAIST, and was recently the only entertainment technology company selected for a private meeting with Microsoft CEO Nadella. In particular, it is promoting the globalization of AI entertainment technology and has received praise as a “pioneer of imagination” for new forms of AI entertainment content, including AI content for the deceased.

< Photo 3. G-Dragon's “Home Sweet Home” being sent into space via Professor Jinjoon Lee's Space Sound Source Transmission Project >

Through this project, KAIST Space Research Institute presented new possibilities for utilizing satellite technology, and showed a model for science to connect with society in a more popular way.

KAIST President Kwang-Hyung Lee said, “KAIST is a place that always supports new imaginations and challenges,” and added, “We will continue to strive for creative research that no one has ever thought of, like this project that combines science, technology, and art.”

Meanwhile, Galaxy Corporation, the agency of G-Dragon (Professor Kwon Ji-yong), is an AI entertainment company that presents a new paradigm built on the convergence of IP, media, tech, and entertainment.

2025.04.10 View 1084

Ultralight advanced material developed by KAIST and U of Toronto

< (From left) Professor Seunghwa Ryu of KAIST Department of Mechanical Engineering, Professor Tobin Filleter of the University of Toronto, Dr. Jinwook Yeo of KAIST, and Dr. Peter Serles of the University of Toronto >

Recently, in advanced industries such as automobiles, aerospace, and mobility, there has been increasing demand for materials that achieve weight reduction while maintaining excellent mechanical properties. An international joint research team has developed an ultralight, high-strength material utilizing nanostructures, presenting the potential for various industrial applications through customized design in the future.

KAIST (represented by President Kwang Hyung Lee) announced on the 18th of February that a research team led by Professor Seunghwa Ryu from the Department of Mechanical Engineering, in collaboration with Professor Tobin Filleter from the University of Toronto, has developed a nano-lattice structure that maximizes lightweight properties while maintaining high stiffness and strength.

In this study, the research team optimized the beam shape of the lattice structure to maintain its lightweight characteristics while maximizing stiffness and strength.

In particular, using a multi-objective Bayesian optimization algorithm*, the team conducted an optimal design process that simultaneously considers improving tensile and shear stiffness and reducing weight. They demonstrated that the optimal lattice structure could be predicted and designed with significantly less data (about 400 data points) than conventional methods require.

*Multi-objective Bayesian optimization algorithm: A method that finds the optimal solution while considering multiple objectives simultaneously. It efficiently collects data and predicts results even under conditions of uncertainty.
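
The idea of weighing several objectives at once, described in the footnote above, can be illustrated with a Pareto filter. This is only a minimal sketch and not the team's algorithm: it omits the Bayesian surrogate model and the data-acquisition step entirely, and the `Design` type, the candidate names, and all numbers are hypothetical values chosen for illustration.

```python
from typing import NamedTuple


class Design(NamedTuple):
    """Hypothetical candidate lattice design (illustrative values only)."""
    name: str
    tensile_stiffness: float  # higher is better (arbitrary units)
    shear_stiffness: float    # higher is better (arbitrary units)
    density: float            # lower is better (arbitrary units)


def dominates(a: Design, b: Design) -> bool:
    """True if `a` is no worse than `b` on every objective and strictly better on one."""
    no_worse = (
        a.tensile_stiffness >= b.tensile_stiffness
        and a.shear_stiffness >= b.shear_stiffness
        and a.density <= b.density
    )
    strictly_better = (
        a.tensile_stiffness > b.tensile_stiffness
        or a.shear_stiffness > b.shear_stiffness
        or a.density < b.density
    )
    return no_worse and strictly_better


def pareto_front(designs: list[Design]) -> list[Design]:
    """Keep only the designs that no other design dominates."""
    return [d for d in designs if not any(dominates(o, d) for o in designs)]


# Assumed candidates, not data from the study.
candidates = [
    Design("A", 1.0, 1.0, 0.30),
    Design("B", 1.2, 0.9, 0.30),
    Design("C", 0.9, 0.9, 0.35),  # worse than A on every objective
    Design("D", 1.5, 1.4, 0.50),
]
front = pareto_front(candidates)
print([d.name for d in front])
```

A multi-objective optimizer searches for designs on this kind of trade-off front rather than for a single best value, which is why stiffness gains and weight reduction can be considered together.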

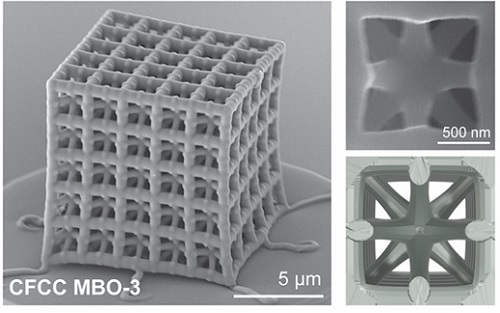

< Figure 1. Multi-objective Bayesian optimization for generative design of carbon nanolattices with high compressive stiffness and strength at low density. The upper part illustrates the process workflow. The lower part shows the top four MBO CFCC geometries with their 2D Bézier curves. (The optimized structure is predicted and designed with much less data (approximately 400 data points) than conventional methods.) >

Furthermore, to maximize the effect where mechanical properties improve as size decreases at the nanoscale, the research team utilized pyrolytic carbon* material to implement an ultralight, high-strength, high-stiffness nano-lattice structure.

*Pyrolytic carbon: A carbon material obtained by decomposing organic substances at high temperatures. It has excellent heat resistance and strength, making it widely used in industries such as semiconductor equipment coatings and artificial joint coatings, where it must withstand high temperatures without deformation.

For this, the team applied two-photon polymerization (2PP) technology* to precisely fabricate complex nano-lattice structures, and mechanical performance evaluations confirmed that the developed structure simultaneously possesses strength comparable to steel and the lightness of Styrofoam.

*Two-photon polymerization (2PP) technology: An advanced optical manufacturing technique based on the principle that polymerization occurs only when two photons of a specific wavelength are absorbed simultaneously.

Additionally, the research team demonstrated that multi-focus two-photon polymerization (multi-focus 2PP) technology enables the fabrication of millimeter-scale structures while maintaining nanoscale precision.

Professor Seunghwa Ryu explained, "This technology innovatively solves the stress concentration issue, which has been a limitation of conventional design methods, through three-dimensional nano-lattice structures, achieving both ultralight weight and high strength in material development."

< Figure 2. FESEM image of the fabricated nano-lattice structure and (bottom right) the macroscopic nanolattice resting on a bubble >

He further emphasized, "By integrating data-driven optimal design with precision 3D printing technology, this development not only meets the demand for lightweight materials in the aerospace and automotive industries but also opens possibilities for various industrial applications through customized design."

This study was led by Dr. Peter Serles of the Department of Mechanical & Industrial Engineering at the University of Toronto and Dr. Jinwook Yeo from KAIST as co-first authors, with Professor Seunghwa Ryu and Professor Tobin Filleter as corresponding authors.

The research was published on January 23, 2025 in the international journal Advanced Materials (Paper title: “Ultrahigh Specific Strength by Bayesian Optimization of Lightweight Carbon Nanolattices”).

DOI: https://doi.org/10.1002/adma.202410651

This research was supported by the Multiphase Materials Innovation Manufacturing Research Center (an ERC program) funded by the Ministry of Science and ICT, the M3DT (Medical Device Digital Development Tool) project funded by the Ministry of Food and Drug Safety, and the KAIST International Collaboration Program.

2025.02.18 View 2287

KAIST Develops AI-Driven Performance Prediction Model to Advance Space Electric Propulsion Technology

< (From left) PhD candidate Youngho Kim, Professor Wonho Choe, and PhD candidate Jaehong Park from the Department of Nuclear and Quantum Engineering >

Hall thrusters, a key space technology for missions like SpaceX's Starlink constellation and NASA's Psyche asteroid mission, are high-efficiency electric propulsion devices using plasma technology*. The KAIST research team announced that the AI-designed Hall thruster developed for CubeSats will be installed on the KAIST-Hall Effect Rocket Orbiter (K-HERO) CubeSat to demonstrate its in-orbit performance during the fourth launch of the Korean launch vehicle Nuri (KSLV-2), scheduled for November this year.

*Plasma is one of the four states of matter, where gases are heated to high energies, causing them to separate into charged ions and electrons. Plasma is used not only in space electric propulsion but also in semiconductor manufacturing, display processes, and sterilization devices.

On February 3rd, the research team from the KAIST Department of Nuclear and Quantum Engineering’s Electric Propulsion Laboratory, led by Professor Wonho Choe, announced the development of an AI-based technique to accurately predict the performance of Hall thrusters, the engines of satellites and space probes.

Hall thrusters provide high fuel efficiency, requiring minimal propellant to achieve significant acceleration of spacecraft or satellites while producing substantial thrust relative to power consumption. Due to these advantages, Hall thrusters are widely used in various space missions, including the formation flight of satellite constellations, deorbiting maneuvers for space debris mitigation, and deep space missions such as asteroid exploration.
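
The fuel-efficiency point above can be made concrete with the Tsiolkovsky rocket equation, which relates velocity change to specific impulse and the ratio of initial to final mass. The sketch below is illustrative only: the mission velocity change and both specific-impulse values are assumed round numbers, not figures from this article or from any particular thruster.

```python
import math

G0 = 9.80665  # standard gravity in m/s^2


def propellant_fraction(delta_v_m_s: float, isp_s: float) -> float:
    """Fraction of the initial mass that must be propellant.

    From the Tsiolkovsky rocket equation:
        delta_v = isp * g0 * ln(m0 / mf)
    so the propellant fraction is 1 - mf/m0 = 1 - exp(-delta_v / (isp * g0)).
    """
    return 1.0 - math.exp(-delta_v_m_s / (isp_s * G0))


delta_v = 1000.0  # m/s, assumed mission requirement
cases = [
    ("lower specific impulse (assumed 300 s)", 300.0),
    ("higher specific impulse (assumed 1500 s)", 1500.0),
]
for label, isp in cases:
    share = propellant_fraction(delta_v, isp)
    print(f"{label}: {share:.1%} of initial mass is propellant")
```

For the same velocity change, the higher specific impulse needs a much smaller share of the spacecraft's mass as propellant, which is the sense in which electric propulsion is fuel-efficient.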

As the space industry continues to grow during the NewSpace era, the demand for Hall thrusters suited to diverse missions is increasing. To rapidly develop highly efficient, mission-optimized Hall thrusters, it is essential to predict thruster performance accurately from the design phase.

However, conventional methods have limitations, as they struggle to handle the complex plasma phenomena within Hall thrusters or are only applicable under specific conditions, leading to lower prediction accuracy.

The research team developed an AI-based performance prediction technique with high accuracy, significantly reducing the time and cost associated with the iterative design, fabrication, and testing of thrusters. Since 2003, Professor Wonho Choe’s team has been leading research on electric propulsion development in Korea. The team applied a neural network ensemble model to predict thruster performance using 18,000 Hall thruster training data points generated from their in-house numerical simulation tool.

The in-house numerical simulation tool, developed to model plasma physics and thrust performance, played a crucial role in providing high-quality training data. The simulation’s accuracy was validated through comparisons with experimental data from ten KAIST in-house Hall thrusters, with an average prediction error of less than 10%.

< Figure 1. This research has been selected as the cover article for the March 2025 issue (Volume 7, Issue 3) of the AI interdisciplinary journal, Advanced Intelligent Systems. >

The trained neural network ensemble model acts as a digital twin, accurately predicting the Hall thruster performance within seconds based on thruster design variables.
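
How an ensemble turns many models into one prediction can be sketched as follows. This is not the team's model: simple least-squares lines stand in for neural networks to keep the example dependency-free, the training data are synthetic, and every name (`fit_line`, `train_ensemble`, `predict`, `flow_rates`, `thrusts`) is hypothetical. Each member is trained on a bootstrap resample, the ensemble prediction is the mean of the members, and their spread gives a rough confidence indication.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b; returns (a, b)."""
    mean_x = statistics.fmean(xs)
    mean_y = statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, mean_y - a * mean_x


def train_ensemble(xs, ys, n_members=20, seed=0):
    """Fit each member on a bootstrap resample of the training data."""
    rng = random.Random(seed)
    n = len(xs)
    members = []
    while len(members) < n_members:
        idx = [rng.randrange(n) for _ in range(n)]
        sample_x = [xs[i] for i in idx]
        if len(set(sample_x)) < 2:
            continue  # skip a degenerate resample with a single distinct x
        members.append(fit_line(sample_x, [ys[i] for i in idx]))
    return members


def predict(members, x):
    """Return the ensemble mean and the spread (standard deviation) at x."""
    preds = [a * x + b for a, b in members]
    return statistics.fmean(preds), statistics.stdev(preds)


# Synthetic stand-in data: output = 2 * input + 1 plus small noise.
rng = random.Random(42)
flow_rates = [0.5 * i for i in range(1, 21)]
thrusts = [2.0 * x + 1.0 + rng.gauss(0.0, 0.2) for x in flow_rates]

members = train_ensemble(flow_rates, thrusts)
mean_pred, spread = predict(members, 5.0)
print(f"prediction at 5.0: {mean_pred:.2f} (spread {spread:.3f})")
```

Once trained, evaluating the ensemble is only a handful of arithmetic operations per member, which is why a surrogate of this kind can answer in seconds where a full simulation cannot.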

Notably, it offers detailed analyses of performance parameters such as thrust and discharge current, accounting for Hall thruster design variables like propellant flow rate and magnetic field—factors that are challenging to evaluate using traditional scaling laws.

This AI model demonstrated an average prediction error of less than 5% for the in-house 700 W and 1 kW KAIST Hall thrusters and less than 9% for a 5 kW high-power Hall thruster developed by the University of Michigan and the U.S. Air Force Research Laboratory. This confirms the broad applicability of the AI prediction method across different power levels of Hall thrusters.
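
An average prediction error of this kind is commonly computed as a mean absolute percentage error between predicted and measured values. The article does not state the exact metric used, so the formula below is an assumption, and the thrust values are hypothetical numbers chosen only to show the arithmetic.

```python
def mean_absolute_percentage_error(predicted, measured):
    """Average of |predicted - measured| / |measured|, expressed in percent."""
    if len(predicted) != len(measured) or not measured:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(p - m) / abs(m) for p, m in zip(predicted, measured))
    return 100.0 * total / len(measured)


# Hypothetical thrust values in mN, not data from the study.
measured_thrust = [40.0, 50.0, 60.0]
predicted_thrust = [42.0, 49.0, 60.0]

error = mean_absolute_percentage_error(predicted_thrust, measured_thrust)
print(f"average prediction error: {error:.2f}%")
```

Here the individual errors are 5%, 2%, and 0%, so the average falls below a 5% threshold even though one point sits exactly at it.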

Professor Wonho Choe stated, “The AI-based prediction technique developed by our team is highly accurate and is already being utilized in the analysis of thrust performance and the development of highly efficient, low-power Hall thrusters for satellites and spacecraft. This AI approach can also be applied beyond Hall thrusters to various industries, including semiconductor manufacturing, surface processing, and coating, through ion beam sources.”

< Figure 2. The AI-based prediction technique developed by the research team accurately predicts thrust performance based on design variables, making it highly valuable for the development of high-efficiency Hall thrusters. The neural network ensemble processes design variables, such as channel geometry and magnetic field information, and outputs key performance metrics like thrust and prediction accuracy, enabling efficient thruster design and performance analysis. >

Additionally, Professor Choe mentioned, “The CubeSat Hall thruster, developed using the AI technique in collaboration with our lab startup—Cosmo Bee, an electric propulsion company—will be tested in orbit this November aboard the K-HERO 3U (30 x 10 x 10 cm) CubeSat, scheduled for launch on the fourth flight of the KSLV-2 Nuri rocket.”

This research was published online in Advanced Intelligent Systems on December 25, 2024 with PhD candidate Jaehong Park as the first author and was selected as the journal’s cover article, highlighting its innovation.

< Figure 3. Image of the 150 W low-power Hall thruster for small and micro satellites, developed in collaboration with Cosmo Bee and the KAIST team. The thruster will be tested in orbit on the K-HERO CubeSat during the KSLV-2 Nuri rocket’s fourth launch in Q4 2025. >

This research was supported by the National Research Foundation of Korea’s Space Pioneer Program (200 mN High Thrust Electric Propulsion System Development).

(Paper Title: Predicting Performance of Hall Effect Ion Source Using Machine Learning, DOI: https://doi.org/10.1002/aisy.202400555 )

< Figure 4. Graphs of the predicted thrust and discharge current of KAIST’s 700 W Hall thruster using the AI model (HallNN). The left image shows the Hall thruster operating in KAIST Electric Propulsion Laboratory’s vacuum chamber, while the center and right graphs present the prediction results for thrust and discharge current based on anode mass flow rate. The red lines represent AI predictions, and the blue dots represent experimental results, with a prediction error of less than 5%. >

2025.02.03 View 3216


< Figure 4. Graphs of the predicted thrust and discharge current of KAIST’s 700 W Hall thruster using the AI model (HallNN). The left image shows the Hall thruster operating in KAIST Electric Propulsion Laboratory’s vacuum chamber, while the center and right graphs present the prediction results for thrust and discharge current based on anode mass flow rate. The red lines represent AI predictions, and the blue dots represent experimental results, with a prediction error of less than 5%. >

2025.02.03 View 3216 -

A Way for Smartwatches to Detect Depression Risks Devised by KAIST and U of Michigan Researchers

- An international joint research team of KAIST and the University of Michigan developed a digital biomarker for predicting symptoms of depression based on data collected by smartwatches

- It has the potential to be used as a medical technology to replace the economically burdensome fMRI measurement test

- It is expected to expand the scope of digital health data analysis

The coronavirus pandemic also brought about a pandemic of mental illness. Approximately one billion people worldwide suffer from various psychiatric conditions. Korea is among the more serious cases, with approximately 1.8 million patients exhibiting depression and anxiety disorders, and the total number of patients with clinical mental diseases has increased by 37% in five years to approximately 4.65 million. A joint research team from Korea and the US has developed a technology that uses biometric data collected through wearable devices to predict tomorrow's mood and, further, to predict the possibility of developing symptoms of depression.

< Figure 1. Schematic diagram of the research results. Based on the biometric data collected by a smartwatch, a mathematical algorithm that solves the inverse problem to estimate the brain's circadian phase and sleep stages has been developed. This algorithm can estimate the degrees of circadian disruption, and these estimates can be used as the digital biomarkers to predict depression risks. >

KAIST (President Kwang Hyung Lee) announced on the 15th of January that the research team under Professor Dae Wook Kim from the Department of Brain and Cognitive Sciences and the team under Professor Daniel B. Forger from the Department of Mathematics at the University of Michigan in the United States have developed a technology to predict symptoms of depression, such as sleep disorders, depressed mood, loss of appetite, overeating, and decreased concentration, in shift workers from the activity and heart rate data collected from smartwatches.

According to WHO, a promising new treatment direction for mental illness focuses on the sleep and circadian timekeeping system located in the hypothalamus of the brain, which directly affects impulsivity, emotional responses, decision-making, and overall mood.

However, in order to measure endogenous circadian rhythms and sleep states, blood or saliva must be drawn every 30 minutes throughout the night to measure changes in the concentration of the melatonin hormone in our bodies, and polysomnography (PSG) must be performed. As such tests require hospitalization and most psychiatric patients only visit for outpatient treatment, there has been no significant progress in developing treatment methods that take these two factors into account. In addition, the cost of the PSG test, which is approximately $1000, leaves mental health treatment considering sleep and circadian rhythms out of reach for the socially disadvantaged.

The solution to overcome these problems is to employ wearable devices for the easier collection of biometric data such as heart rate, body temperature, and activity level in real time without spatial constraints. However, current wearable devices have the limitation of providing only indirect information on biomarkers required by medical staff, such as the phase of the circadian clock.

The joint research team developed a filtering technology that accurately estimates the phase of the circadian clock, which changes daily, from time series data such as heart rate and activity collected from a smartwatch. This is an implementation of a digital twin that precisely describes the circadian rhythm in the brain, and it can be used to estimate circadian rhythm disruption.
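To give a feel for what estimating a circadian phase from a wearable time series means, the sketch below fits a 24-hour cosine to synthetic heart rate data by least squares (a classical cosinor fit) and reads off the time of the daily peak. This is a deliberately simpler technique than the team's filtering method, which the article does not describe in detail. The synthetic signal, its parameters, and the function name are all assumptions for illustration.

```python
import numpy as np

def cosinor_phase(t_hours, signal, period=24.0):
    """Fit signal ~ mesor + a*cos(wt) + b*sin(wt) and return (peak time in hours, amplitude, mesor)."""
    w = 2 * np.pi / period
    design = np.column_stack([np.ones_like(t_hours), np.cos(w * t_hours), np.sin(w * t_hours)])
    mesor, a, b = np.linalg.lstsq(design, signal, rcond=None)[0]
    amplitude = np.hypot(a, b)
    # a*cos(wt) + b*sin(wt) = A*cos(wt - phi) with phi = atan2(b, a), so the peak is at t = phi / w.
    peak_time = (np.arctan2(b, a) / w) % period
    return peak_time, amplitude, mesor

# Synthetic three-day heart rate record sampled every 15 minutes (made-up values).
rng = np.random.default_rng(0)
t = np.arange(0.0, 72.0, 0.25)
true_peak_hour = 15.0
heart_rate = 65.0 + 8.0 * np.cos(2 * np.pi * (t - true_peak_hour) / 24.0) + rng.normal(0.0, 2.0, t.size)

peak, amplitude, mesor = cosinor_phase(t, heart_rate)
print(f"estimated daily peak at about {peak:.1f} h")
```

In real data, heart rate also responds to activity, posture, and sleep, so a plain cosine fit like this one can be badly biased. That is one reason a more elaborate estimation method is needed in practice.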

< Figure 2. The suprachiasmatic nucleus located in the hypothalamus of the brain is the central biological clock that regulates the 24-hour physiological rhythm and plays a key role in maintaining the body’s circadian rhythm. If the phase of this biological clock is disrupted, it affects various parts of the brain, which can cause psychiatric conditions such as depression. >

The possibility of using the digital twin of this circadian clock to predict the symptoms of depression was verified through collaboration with the research team of Professor Srijan Sen of the Michigan Neuroscience Institute and Professor Amy Bohnert of the Department of Psychiatry of the University of Michigan.

The collaborative research team conducted a large-scale prospective cohort study involving approximately 800 shift workers and showed that the circadian rhythm disruption digital biomarker estimated through the technology can predict tomorrow's mood as well as six symptoms, including sleep problems, appetite changes, decreased concentration, and suicidal thoughts, which are representative symptoms of depression.

< Figure 3. The circadian rhythm of hormones such as melatonin regulates various physiological functions and behaviors such as heart rate and activity level. These physiological and behavioral signals can be measured in daily life through wearable devices. In order to estimate the body’s circadian rhythm inversely based on the measured biometric signals, a mathematical algorithm is needed. This algorithm plays a key role in accurately identifying the characteristics of circadian rhythms by extracting hidden physiological patterns from biosignals. >

Professor Dae Wook Kim said, "It is very meaningful to be able to conduct research that provides a clue for ways to apply wearable biometric data using mathematics that have not previously been utilized for actual disease management." He added, "We expect that this research will be able to present continuous and non-invasive mental health monitoring technology. This is expected to present a new paradigm for mental health care. By resolving some of the major problems socially disadvantaged people may face in current treatment practices, they may be able to take more active steps when experiencing symptoms of depression, such as seeking counsel before things get out of hand."

< Figure 4. A mathematical algorithm was devised to circumvent the problems of estimating the phase of the brain's biological clock and sleep stages inversely from the biodata collected by a smartwatch. This algorithm can estimate the degree of daily circadian rhythm disruption, and this estimate can be used as a digital biomarker to predict depression symptoms. >

The results of this study, in which Professor Dae Wook Kim of the Department of Brain and Cognitive Sciences at KAIST participated as the joint first author and corresponding author, were published in the online version of the international academic journal npj Digital Medicine on December 5, 2024. (Paper title: The real-world association between digital markers of circadian disruption and mental health risks) DOI: 10.1038/s41746-024-01348-6

This study was conducted with the support of KAIST's Research Support Program for New Faculty Members, the US National Science Foundation, the US National Institutes of Health, and the US Army Research Institute MURI Program.

2025.01.20 View 4012


KAIST Secures Core Technology for Ultra-High-Resolution Image Sensors

A joint research team from Korea and the United States has developed next-generation, high-resolution image sensor technology with higher power efficiency and a smaller size compared to existing sensors. Notably, they have secured foundational technology for ultra-high-resolution shortwave infrared (SWIR) image sensors, an area currently dominated by Sony, paving the way for future market entry.

KAIST (represented by President Kwang Hyung Lee) announced on the 20th of November that a research team led by Professor SangHyeon Kim from the School of Electrical Engineering, in collaboration with Inha University and Yale University in the U.S., has developed an ultra-thin broadband photodiode (PD), marking a significant breakthrough in high-performance image sensor technology.

This research drastically improves the trade-off between the absorption layer thickness and quantum efficiency found in conventional photodiode technology. Specifically, it achieved high quantum efficiency of over 70% even in an absorption layer thinner than one micrometer (μm), reducing the thickness of the absorption layer by approximately 70% compared to existing technologies.

A thinner absorption layer simplifies pixel processing, allowing for higher resolution and smoother carrier diffusion, which is advantageous for light carrier acquisition while also reducing the cost. However, a fundamental issue with thinner absorption layers is the reduced absorption of long-wavelength light.
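The thickness trade-off can be illustrated with the textbook single-pass absorption model, in which the absorbed fraction of light is 1 - exp(-alpha * d) for absorption coefficient alpha and layer thickness d. The sketch below uses that simplified model only. It ignores reflections and resonant structures, and the absorption coefficient is a hypothetical round number rather than a measured InGaAs value.

```python
import math

def single_pass_absorption(alpha_per_um, thickness_um):
    """Fraction of light absorbed in one pass through a layer (Beer-Lambert law, no reflections)."""
    return 1.0 - math.exp(-alpha_per_um * thickness_um)

# Hypothetical absorption coefficient at a long wavelength, in 1/micrometer (illustrative only).
ALPHA_PER_UM = 1.0

for thickness_um in (2.1, 1.0, 0.6):
    fraction = single_pass_absorption(ALPHA_PER_UM, thickness_um)
    print(f"thickness {thickness_um:.1f} um -> absorbed fraction {fraction:.2f}")
```

Under this simple model the absorbed fraction falls as the layer gets thinner, which is why an ultra-thin layer needs some additional mechanism to keep its quantum efficiency high.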

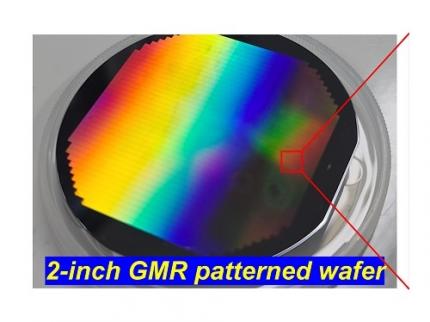

< Figure 1. Schematic diagram of the InGaAs photodiode image sensor integrated on the Guided-Mode Resonance (GMR) structure proposed in this study (left), a photograph of the fabricated wafer, and a scanning electron microscope (SEM) image of the periodic patterns (right) >

The research team introduced a guided-mode resonance (GMR) structure* that enables high-efficiency light absorption across a wide spectral range from 400 nanometers (nm) to 1,700 nm. This wavelength range includes not only visible light but also light in the SWIR region, making it valuable for various industrial applications.

*Guided-Mode Resonance (GMR) Structure: A concept used in electromagnetics, a phenomenon in which a specific (light) wave resonates (forming a strong electric/magnetic field) at a specific wavelength. Since energy is maximized under these conditions, it has been used to increase antenna or radar efficiency.

The improved performance in the SWIR region is expected to play a significant role in developing next-generation image sensors with increasingly high resolutions. The GMR structure, in particular, holds potential for further enhancing resolution and other performance metrics through hybrid integration and monolithic 3D integration with complementary metal-oxide-semiconductor (CMOS)-based readout integrated circuits (ROIC).

< Figure 2. Benchmark for state-of-the-art InGaAs-based SWIR pixels with simulated external quantum efficiency (EQE) lines as a function of absorption layer thickness (TAL). Performance is maintained while the absorption layer thickness is reduced from 2.1 micrometers or more to 1 micrometer or less, a reduction of 50% to 70% >

The research team has significantly enhanced international competitiveness in low-power devices and ultra-high-resolution imaging technology, opening up possibilities for applications in digital cameras, security systems, medical and industrial image sensors, as well as future ultra-high-resolution sensors for autonomous driving, aerospace, and satellite observation.

Professor SangHyeon Kim, the lead researcher, commented, “This research demonstrates that significantly higher performance than existing technologies can be achieved even with ultra-thin absorption layers.”

< Figure 3. Top optical microscope image and cross-sectional scanning electron microscope image of the InGaAs photodiode image sensor fabricated on the GMR structure (left). Improved quantum efficiency performance of the ultra-thin image sensor (red) fabricated with the technology proposed in this study (right) >

The results of this research were published on the 15th of November in the prestigious international journal Light: Science & Applications (JCR 2.9%, IF=20.6), with Professor Dae-Myung Geum of Inha University (formerly a KAIST postdoctoral researcher) and Dr. Jinha Lim (currently a postdoctoral researcher at Yale University) as co-first authors. (Paper title: “Highly-efficient (>70%) and Wide-spectral (400 nm -1700 nm) sub-micron-thick InGaAs photodiodes for future high-resolution image sensors”)

This study was supported by the National Research Foundation of Korea.

2024.11.22 View 3196


KAIST Develops Janus-like Metasurface Technology that Acts According to the Direction of Light

Metasurface technology is an advanced optical technology that uses nanometer-sized artificial structures to control light precisely, in devices that are thinner and lighter than conventional optics. KAIST researchers have overcome the limitations of existing metasurface technologies and successfully designed a Janus metasurface capable of perfectly controlling asymmetric light transmission. By applying this technology, they also proposed an innovative method that significantly enhances security by allowing information to be decoded only under specific conditions.

KAIST (represented by President Kwang Hyung Lee) announced on the 15th of October that a research team led by Professor Jonghwa Shin from the Department of Materials Science and Engineering had developed a Janus metasurface capable of perfectly controlling asymmetric light transmission.

Asymmetric properties, which react differently depending on the direction, play a crucial role in various fields of science and engineering. The Janus metasurface developed by the research team implements an optical system that performs a different function in each of the two directions.

Like the Roman god Janus with two faces, this metasurface shows entirely different optical responses depending on the direction of incoming light, effectively operating two independent optical systems with a single device (for example, a metasurface that acts as a magnifying lens in one direction and as a polarization camera in the other). In other words, by using this technology, it's possible to operate two different optical systems (e.g., a lens and a hologram) depending on the direction of the light.

This achievement addresses a challenge that existing metasurface technologies had not resolved. Conventional metasurface technology had limitations in selectively controlling the three properties of light—intensity, phase, and polarization—based on the direction of incidence.

The research team proposed a solution based on mathematical and physical principles, and succeeded in experimentally implementing different vector holograms in both directions. Through this achievement, they showcased a complete asymmetric light transmission control technology.

< Figure 1. Schematics of a device featuring asymmetric transmission. a) Device operating as a magnifying lens for back-side illumination. b) Device operating as a polarization camera for front-side illumination. >

Additionally, the research team developed a new optical encryption technology based on this metasurface technology. By using the Janus metasurface, they implemented a vector hologram that generates different images depending on the direction and polarization state of incoming light, showcasing an optical encryption system that significantly enhances security by allowing information to be decoded only under specific conditions.

This technology is expected to serve as a next-generation security solution, applicable in various fields such as quantum communication and secure data transmission.

Furthermore, the ultra-thin structure of the metasurface is expected to significantly reduce the volume and weight of traditional optical devices, contributing greatly to the miniaturization and lightweight design of next-generation devices.

< Figure 2. Experimental demonstration of Janus vectorial holograms. With front-side illumination, vector images of the butterfly and the grasshopper are created, and with back-side illumination, vector images of the ladybug and the beetle are created. >

Professor Jonghwa Shin from the Department of Materials Science and Engineering at KAIST stated, "This research has enabled the complete asymmetric transmission control of light’s intensity, phase, and polarization, which has been a long-standing challenge in optics. It has opened up the possibility of developing various applied optical devices." He added, "We plan to continue developing optical devices that can be applied to various fields such as augmented reality (AR), holographic displays, and LiDAR systems for autonomous vehicles, utilizing the full potential of metasurface technology."

This research, in which Hyeonhee Kim (a doctoral student in the Department of Materials Science and Engineering at KAIST) and Joonkyo Jung participated as co-first authors, was published online in the international journal Advanced Materials and is scheduled to be published in the October 31 issue. (Title of the paper: "Bidirectional Vectorial Holography Using Bi-Layer Metasurfaces and Its Application to Optical Encryption")

The research was supported by the Nano Materials Technology Development Program and the Mid-Career Researcher Program of the National Research Foundation of Korea.

2024.10.15 View 2682

KAIST Develops Janus-like Metasurface Technology that Acts According to the Direction of Light

Metasurface technology is an advanced optical technology that uses nanometer-sized artificial structures to control light precisely, in devices that are thinner and lighter than conventional optics. KAIST researchers have overcome the limitations of existing metasurface technologies and successfully designed a Janus metasurface capable of perfectly controlling asymmetric light transmission. By applying this technology, they also proposed an innovative method that significantly enhances security by allowing information to be decoded only under specific conditions.

KAIST (represented by President Kwang Hyung Lee) announced on the 15th of October that a research team led by Professor Jonghwa Shin from the Department of Materials Science and Engineering had developed a Janus metasurface capable of perfectly controlling asymmetric light transmission.

Asymmetric properties, which react differently depending on the direction, play a crucial role in various fields of science and engineering. The Janus metasurface developed by the research team implements an optical system capable of performing different functions in both directions.

Like the Roman god Janus with two faces, this metasurface shows entirely different optical responses depending on the direction of incoming light, effectively operating two independent optical systems with a single device (for example, a metasurface that acts as a magnifying lens in one direction and as a polarized camera in the other). In other words, by using this technology, it's possible to operate two different optical systems (e.g., a lens and a hologram) depending on the direction of the light.

This achievement addresses a challenge that existing metasurface technologies had not resolved. Conventional metasurface technology had limitations in selectively controlling the three properties of light—intensity, phase, and polarization—based on the direction of incidence.

The research team proposed a solution based on mathematical and physical principles, and succeeded in experimentally implementing different vector holograms in both directions. Through this achievement, they showcased a complete asymmetric light transmission control technology.

< Figure 1. Schematics of a device featuring asymmetric transmission. a) Device operating as a magnifying lens for back-side illumination. b) Device operating as a polarization camera for front-side illumination. >

Additionally, the research team developed a new optical encryption technology based on this metasurface technology. By using the Janus metasurface, they implemented a vector hologram that generates different images depending on the direction and polarization state of incoming light, showcasing an optical encryption system that significantly enhances security by allowing information to be decoded only under specific conditions.

This technology is expected to serve as a next-generation security solution, applicable in various fields such as quantum communication and secure data transmission.

Furthermore, the ultra-thin structure of the metasurface is expected to significantly reduce the volume and weight of traditional optical devices, contributing greatly to the miniaturization and lightweight design of next-generation devices.

< Figure 2. Experimental demonstration of Janus vectorial holograms. With front illuminations, vector images of the butterfly and the grasshopper are created, and with the back-side illuminations, vector images of the ladybug and the beetle are created. >

Professor Jonghwa Shin from the Department of Materials Science and Engineering at KAIST stated, "This research has enabled the complete asymmetric transmission control of light’s intensity, phase, and polarization, which has been a long-standing challenge in optics. It has opened up the possibility of developing various applied optical devices." He added, "We plan to continue developing optical devices that can be applied to various fields such as augmented reality (AR), holographic displays, and LiDAR systems for autonomous vehicles, utilizing the full potential of metasurface technology."

This research, in which Hyeonhee Kim (a doctoral student in the Department of Materials Science and Engineering at KAIST) and Joonkyo Jung participated as co-first authors, was published online in the international journal Advanced Materials and is scheduled to be published in the October 31 issue. (Title of the paper: "Bidirectional Vectorial Holography Using Bi-Layer Metasurfaces and Its Application to Optical Encryption")

The research was supported by the Nano Materials Technology Development Program and the Mid-Career Researcher Program of the National Research Foundation of Korea.

2024.10.15 View 2682 -

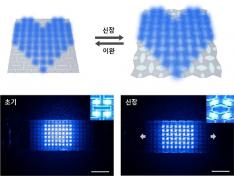

KAIST Develops Stretchable Displays Featuring 25% Expansion Without Image Distortion

Stretchable displays, praised for their spatial efficiency, design flexibility, and human-like flexibility, are seen as the next generation of display technology. A team of Korean researchers has developed a stretchable display that can expand by 25% while maintaining clear image quality without distortion. It can also stretch and contract up to 5,000 times at 15% expansion without any performance degradation, making it the first deformation-free stretchable display with a negative Poisson's ratio* developed in Korea.

*Poisson's ratio of -1: The case in which a material stretched in one direction expands by the same proportion in the perpendicular direction, so width and length grow equally. Most materials have a positive Poisson's ratio, meaning that horizontal stretching causes vertical contraction.
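As a rough illustration of the footnote above, the following Python sketch applies the textbook small-strain definition of Poisson's ratio (transverse strain = -ν × axial strain) to the 25% stretch quoted in the article. The function names and the value ν = 0.5 for a typical rubber-like elastomer are assumptions made for this example, and the linear formula is only an approximation at strains this large; it is not the research team's model.

```python
def transverse_strain(axial_strain: float, poisson_ratio: float) -> float:
    """Small-strain definition: nu = -(transverse strain) / (axial strain)."""
    return -poisson_ratio * axial_strain


def aspect_ratio_change(axial_strain: float, poisson_ratio: float) -> float:
    """Factor by which an image's length-to-width ratio changes when stretched.

    A value of 1.0 means the image keeps its proportions (no distortion).
    """
    lateral = transverse_strain(axial_strain, poisson_ratio)
    return (1.0 + axial_strain) / (1.0 + lateral)


if __name__ == "__main__":
    stretch = 0.25  # the 25% expansion quoted in the article
    cases = [
        ("typical elastomer (assumed nu = 0.5)", 0.5),
        ("auxetic film (nu = -1)", -1.0),
    ]
    for label, nu in cases:
        lateral = transverse_strain(stretch, nu)
        distortion = aspect_ratio_change(stretch, nu)
        print(f"{label}: transverse strain {lateral:+.3f}, "
              f"aspect ratio x{distortion:.3f}")
```

Under these assumptions, the elastomer narrows as it lengthens, so the image is elongated, while a Poisson's ratio of -1 keeps the aspect-ratio factor at exactly 1.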

KAIST (represented by President Kwang-Hyung Lee) announced on the 20th of August that a research team led by Professor Byeong-Soo Bae of the Department of Materials Science and Engineering (Director of the Wearable Platform Materials Technology Center), in collaboration with the Korea Institute of Machinery & Materials (President Seoghyeon Ryu), had successfully developed a stretchable display substrate that suppresses image distortion through omnidirectional stretchability.

Currently, most stretchable displays are made with highly elastic elastomer* materials, but these materials possess a positive Poisson's ratio, causing unavoidable image distortion when the display is stretched.

*Elastomer: A polymer with elasticity similar to rubber.

To address this, the introduction of auxetic* meta-structures has been gaining attention. Unlike conventional materials, auxetic structures have a unique 'negative Poisson's ratio,' expanding in all directions when stretched in just one direction. However, traditional auxetic structures contain many empty spaces, limiting their stability and usability in display substrates.

*Auxetic structure: A special geometric structure that exhibits a negative Poisson's ratio.

To tackle the issue of image distortion, Professor Bae's research team developed a method to create a seamless surface for the auxetic meta-structure, achieving the ideal negative Poisson's ratio of -1 and overcoming the biggest challenge in auxetic meta-structures.

To overcome the second issue, that of elastic modulus*, the team inserted a textile made of glass fiber bundles with a diameter of just 25 micrometers (a quarter of the thickness of a human hair) into the elastomer material. They then filled the empty spaces with the same elastomer, creating a flat, stable, integrated film without gaps.

*Elastic Modulus: The ratio of the stress applied to a material to the strain (relative deformation) it produces. A higher elastic modulus means that the material deforms less under the same force.

The research team showed theoretically that the difference in elasticity between the auxetic structure and the elastomer material directly influences the negative Poisson's ratio. By achieving a more than 230,000-fold difference in elasticity, they produced a film with a Poisson's ratio of -1, the theoretical limit.
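To give a feel for what a 230,000-fold gap in elasticity means, the sketch below uses one-dimensional Hooke's law (strain = stress / elastic modulus) to compare how much a soft and a stiff material deform under the same stress. The absolute modulus and stress values are hypothetical placeholders chosen only for illustration; the article reports the ratio (as a lower bound), not the individual moduli.

```python
def strain_under_stress(stress_pa: float, elastic_modulus_pa: float) -> float:
    """One-dimensional Hooke's law: strain = stress / elastic modulus."""
    if elastic_modulus_pa <= 0:
        raise ValueError("elastic modulus must be positive")
    return stress_pa / elastic_modulus_pa


# Ratio reported in the article ("over 230,000 times"), used here as-is.
MODULUS_RATIO = 230_000

# Hypothetical values, for illustration only (not taken from the paper).
SOFT_MODULUS_PA = 1.0e5                              # assumed soft elastomer
STIFF_MODULUS_PA = SOFT_MODULUS_PA * MODULUS_RATIO   # assumed stiff phase
APPLIED_STRESS_PA = 1.0e4                            # assumed applied stress

soft_strain = strain_under_stress(APPLIED_STRESS_PA, SOFT_MODULUS_PA)
stiff_strain = strain_under_stress(APPLIED_STRESS_PA, STIFF_MODULUS_PA)

if __name__ == "__main__":
    print(f"soft phase strain:  {soft_strain:.3e}")
    print(f"stiff phase strain: {stiff_strain:.3e}")
    print(f"soft / stiff strain ratio: {soft_strain / stiff_strain:,.0f}")
```

Under the same stress, the stiff phase strains about 230,000 times less than the soft one, which is the sense in which the stiff structure barely deforms while the surrounding elastomer accommodates the stretch.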

< Figure 2. Deformation of S-AUX film. a) Configurations and visualized principal strain distribution of the optimized S-AUX film at various strain rates. b) Biaxial stretching image. While pristine elastomer shrinks in the directions that were not stretched, S-AUX film developed in this study expands in all directions simultaneously while maintaining its original shape. >

Professor Byeong-Soo Bae, who led the study, explained, "Preventing image distortion using auxetic structures in stretchable displays is a core technology, but it has faced challenges due to the many empty spaces in the surface, making it difficult to use as a substrate. This research outcome is expected to significantly accelerate commercialization through high-resolution, distortion-free stretchable display applications that utilize the entire surface."

This study, co-authored by Dr. Yung Lee from KAIST’s Department of Materials Science and Engineering and Dr. Bongkyun Jang from the Korea Institute of Machinery & Materials, was published on August 20th in the international journal Nature Communications under the title "A seamless auxetic substrate with a negative Poisson's ratio of –1".

The research was supported by the Wearable Platform Materials Technology Center at KAIST, the Korea Institute of Machinery & Materials, and LG Display.

< Figure 3. Structural configuration of the distortion-free display components on the S-AUX film and a contour image of a micro-LED chip transferred onto the S-AUX film. >

< Figure 4. Schematic illustrations and photographic images of the S-AUX film-based image: distortion-free display in its stretched state and released state. >

2024.09.20 View 4192

KAIST Employs Image-recognition AI to Determine Battery Composition and Conditions

An international collaborative research team has developed an image recognition technology that can accurately determine the elemental composition and the number of charge and discharge cycles of a battery by examining only its surface morphology using AI learning.

KAIST (President Kwang-Hyung Lee) announced on July 2nd that Professor Seungbum Hong from the Department of Materials Science and Engineering, in collaboration with the Electronics and Telecommunications Research Institute (ETRI) and Drexel University in the United States, has developed a method to predict the major elemental composition and charge-discharge state of NCM cathode materials with 99.6% accuracy using convolutional neural networks (CNN)*.