
LightPC Presents a Resilient System Using Only Non-Volatile Memory

Lightweight Persistence Centric System (LightPC) ensures both data and execution persistence for energy-efficient full system persistence

A KAIST research team has developed hardware and software technology that ensures both data and execution persistence. The Lightweight Persistence Centric System (LightPC) makes the systems resilient against power failures by utilizing only non-volatile memory as the main memory.

“We mounted non-volatile memory on a system board prototype and created an operating system to verify the effectiveness of LightPC,” said Professor Myoungsoo Jung. The team confirmed that LightPC continued to execute correctly when power was cut and restored in the middle of execution, while providing up to eight times more memory, 4.3 times faster application execution, and 73% lower power consumption compared to traditional systems.

Professor Jung said that LightPC can be utilized in a variety of fields such as data centers and high-performance computing to provide large-capacity memory, high performance, low power consumption, and service reliability.

In general, power failures on legacy systems can lead to the loss of data stored in the DRAM-based main memory. Unlike volatile memory such as DRAM, non-volatile memory can retain its data without power. Although non-volatile memory consumes less power and offers larger capacity than DRAM, it is typically used as secondary storage because of its lower write performance. For this reason, non-volatile memory is often used together with DRAM. However, modern systems that employ non-volatile memory-based main memory experience unexpected performance degradation due to the complicated memory microarchitecture.

To enable both data and execution persistence in legacy systems, it is necessary to transfer the data from the volatile memory to the non-volatile memory. Checkpointing is one possible solution. It periodically transfers the data in preparation for a sudden power failure. While this technology is essential for ensuring high mobility and reliability for users, checkpointing also has serious drawbacks. It takes additional time and power to move data, and it requires a data recovery process as well as a system restart.
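The trade-off described above can be illustrated with a minimal Python sketch. It is a toy simulation under stated assumptions, not the mechanism of any real system: the function name `run_with_checkpoints`, the dictionaries standing in for volatile and non-volatile memory, and the step counts are all hypothetical, and the failure is assumed to strike within the run (`fail_at` no larger than `total_steps`).

```python
import copy


def run_with_checkpoints(total_steps, interval, fail_at):
    """Simulate a workload that checkpoints every `interval` steps.

    Returns (recovered_step, lost_steps, copies_made) after a power failure
    that strikes once `fail_at` steps have completed.
    """
    volatile = {"step": 0, "data": []}    # stands in for DRAM contents
    persistent = copy.deepcopy(volatile)  # stands in for the non-volatile copy
    copies_made = 0

    for step in range(1, total_steps + 1):
        volatile["step"] = step
        volatile["data"].append(step * step)
        if step % interval == 0:
            # Each checkpoint copies the full state: extra time and energy.
            persistent = copy.deepcopy(volatile)
            copies_made += 1
        if step == fail_at:
            break  # power is lost; everything in `volatile` is gone

    # Recovery: reload the last checkpoint and redo whatever came after it.
    recovered = copy.deepcopy(persistent)
    lost_steps = fail_at - recovered["step"]
    return recovered["step"], lost_steps, copies_made
```

With a checkpoint every 10 steps and a failure after step 37, the sketch resumes from step 30, so seven steps of work must be repeated and three full copies were paid for along the way. A shorter interval loses less work but copies more often.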

To address these issues, the research team developed a processor and memory controller that raise the performance of main memory built solely from non-volatile memory. LightPC matches the performance of DRAM by minimizing the internal volatile memory components of non-volatile memory, exposing the non-volatile memory (PRAM) media to the host, and increasing parallelism to service on-the-fly requests as soon as possible.

The team also presented operating system technology that quickly makes the execution states of running processes persistent without the need for a checkpointing process. To support consistency, the operating system prevents all modifications to execution states and data by keeping all program executions idle before transferring data, and it does so within a period much shorter than the standard power hold-up time of about 16 milliseconds. When the power is recovered, the computer revives itself almost immediately and resumes all the offline processes without the need for a boot process.
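The ordering described above (idle everything, save state, resume without a boot) can be sketched in Python. This is a toy model only: the names `Process`, `stop`, `power_loss`, and `go`, and the dictionary standing in for non-volatile memory, are hypothetical and are not LightPC's interfaces.

```python
class Process:
    """Toy process whose execution state is a single counter."""

    def __init__(self, name):
        self.name = name
        self.counter = 0     # volatile execution state (think CPU registers)
        self.running = True

    def tick(self):
        # An idle process makes no progress and changes no state.
        if self.running:
            self.counter += 1


def stop(processes, persistent):
    """On a power-failure signal: idle everything first, then save state."""
    for p in processes:
        p.running = False
    for p in processes:
        persistent[p.name] = p.counter  # consistent, since nothing is running


def power_loss(processes):
    """Volatile state disappears; the persistent dictionary is untouched."""
    for p in processes:
        p.counter = None


def go(processes, persistent):
    """On power recovery: reload saved state and resume, with no reboot."""
    for p in processes:
        p.counter = persistent[p.name]
        p.running = True
```

Because every process is idle before any state is saved, the saved values cannot drift apart while the save is in progress, which is the consistency property the paragraph describes.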

The researchers will present their work (LightPC: Hardware and Software Co-Design for Energy-Efficient Full System Persistence) at the International Symposium on Computer Architecture (ISCA) 2022 in New York in June. More information is available at the CAMELab website (http://camelab.org).

-Profile: Professor Myoungsoo Jung, Computer Architecture and Memory Systems Laboratory (CAMEL), http://camelab.org, School of Electrical Engineering, KAIST

2022.04.25 View 24130

CXL-Based Memory Disaggregation Technology Opens Up a New Direction for Big Data Solution Frameworks

A KAIST team’s compute express link (CXL) provides new insights on memory disaggregation and ensures direct access and high-performance capabilities

A team from the Computer Architecture and Memory Systems Laboratory (CAMEL) at KAIST presented a new compute express link (CXL) solution whose directly accessible, high-performance memory disaggregation opens new directions for big data memory processing. Professor Myoungsoo Jung said the team’s technology significantly improves performance compared to existing remote direct memory access (RDMA)-based memory disaggregation.

CXL is a new dynamic multi-protocol, based on peripheral component interconnect-express (PCIe), designed to make efficient use of memory devices and accelerators. Many enterprise data centers and memory vendors are paying attention to it as the next-generation multi-protocol for the era of big data.

Emerging big data applications such as machine learning, graph analytics, and in-memory databases require large memory capacities. However, scaling out the memory capacity via a conventional memory interface like double data rate (DDR) is limited by the number of central processing units (CPUs) and memory controllers. Memory disaggregation, which allows a host to connect to another host’s memory or to memory nodes, has emerged in response.

RDMA lets a host directly access another host’s memory via InfiniBand, a network protocol commonly used in data centers. Most existing memory disaggregation technologies employ RDMA to obtain a large memory capacity. With RDMA, a host shares another host’s memory by transferring data between local and remote memory.

Although RDMA-based memory disaggregation provides a large memory capacity to a host, two critical problems exist. First, scaling out the memory still requires an extra CPU to be added. Since passive memory such as dynamic random-access memory (DRAM) cannot operate by itself, it must be controlled by a CPU. Second, redundant data copies and software fabric interventions for RDMA-based memory disaggregation cause longer access latency. For example, remote memory access latency in RDMA-based memory disaggregation is multiple orders of magnitude longer than local memory access.

To address these issues, Professor Jung’s team developed the CXL-based memory disaggregation framework, including CXL-enabled customized CPUs, CXL devices, CXL switches, and CXL-aware operating system modules. The team’s CXL device is a purely passive and directly accessible memory node that contains multiple DRAM dual inline memory modules (DIMMs) and a CXL memory controller. Since the CXL memory controller supports the memory in the CXL device, a host can utilize the memory node without processor or software intervention. The team’s CXL switch enables scaling out a host’s memory capacity by hierarchically connecting multiple CXL devices to the CXL switch, allowing hundreds of devices or more. Atop the switches and devices, the team’s CXL-enabled operating system removes the redundant data copies and protocol conversion exhibited by conventional RDMA, which can significantly decrease access latency to the memory nodes.
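The difference between a copy-based path and a directly accessible memory node can be sketched in Python. This is a simplified model under stated assumptions, not the team’s implementation: the names `RemoteMemory`, `copy_based_load`, and `direct_load` are hypothetical, the 4,096-byte copy granularity is an assumption, and addresses are assumed to be aligned to the 64-byte cacheline.

```python
PAGE_SIZE = 4096  # assumed transfer granularity for the copy-based path
CACHELINE = 64    # cacheline size in bytes


class RemoteMemory:
    """Stand-in for memory that lives outside the host."""

    def __init__(self, size):
        self.data = bytearray(size)


def copy_based_load(remote, local_buf, addr):
    """Copy-based model: move the enclosing page into local memory, then read."""
    page_start = addr - (addr % PAGE_SIZE)
    local_buf[:PAGE_SIZE] = remote.data[page_start:page_start + PAGE_SIZE]
    offset = addr - page_start
    line = bytes(local_buf[offset:offset + CACHELINE])
    return line, PAGE_SIZE  # second value: bytes moved to serve the load


def direct_load(remote, addr):
    """Direct-access model: read the cacheline straight from the memory node."""
    line = bytes(remote.data[addr:addr + CACHELINE])
    return line, CACHELINE
```

Both functions return the same 64 bytes, but in this model the copy-based path moves a full page and needs a local staging buffer to do it, while the direct path moves only the requested cacheline.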

In a test comparing loading 64B (cacheline) data from memory pooling devices, CXL-based memory disaggregation showed 8.2 times higher data load performance than RDMA-based memory disaggregation and even similar performance to local DRAM memory. In the team’s evaluations for a big data benchmark such as a machine learning-based test, CXL-based memory disaggregation technology also showed a maximum of 3.7 times higher performance than prior RDMA-based memory disaggregation technologies.

“Escaping from the conventional RDMA-based memory disaggregation, our CXL-based memory disaggregation framework can provide high scalability and performance for diverse datacenters and cloud service infrastructures,” said Professor Jung. He went on to stress, “Our CXL-based memory disaggregation research will bring about a new paradigm for memory solutions that will lead the era of big data.”

-Profile: Professor Myoungsoo Jung, Computer Architecture and Memory Systems Laboratory (CAMEL), http://camelab.org, School of Electrical Engineering, KAIST

2022.03.16 View 24155

Advanced NVMe Controller Technology for Next Generation Memory Devices

KAIST researchers advanced non-volatile memory express (NVMe) controller technology for next generation information storage devices, and made this new technology named ‘OpenExpress’ freely available to all universities and research institutes around the world to help reduce the research cost in related fields.

NVMe is a communication protocol made for high-performance storage devices based on a peripheral component interconnect-express (PCI-E) interface. NVMe has been developed to take the place of the Serial AT Attachment (SATA) protocol, which was developed to process data on hard disk drives (HDDs) and did not perform well in solid state drives (SSDs).

Unlike HDDs that use magnetic spinning disks, SSDs use semiconductor memory, allowing the rapid reading and writing of data. SSDs also generate less heat and noise, and are much more compact and lightweight.

Since data processing in SSDs using NVMe is up to six times faster than when SATA is used, NVMe has become the standard protocol for ultra-high speed and volume data processing, and is currently used in many flash-based information storage devices.

Studies on NVMe continue at both the academic and industrial levels, but its poor accessibility is a drawback. Major information and communications technology (ICT) companies around the world spend enormous sums to procure the intellectual property (IP) for hardware NVMe controllers, which is necessary for the use of NVMe. Such IP is not publicly disclosed, however, making it difficult for universities and research institutes to use it for research purposes.

Although a small number of U.S. Silicon Valley startups provide parts of their independently developed IP for research, the cost of usage is around 34,000 USD per month. The costs skyrocket even further because each copy of single-use source code purchased for IP modification costs approximately 84,000 USD.

In order to address these issues, a group of researchers led by Professor Myoungsoo Jung from the School of Electrical Engineering at KAIST developed a next generation NVMe controller technology that achieved parallel data input/output processing for SSDs in a fully hardware automated form.

The researchers presented their work at the 2020 USENIX Annual Technical Conference (USENIX ATC ’20) in July, and released it as an open research framework named ‘OpenExpress.’

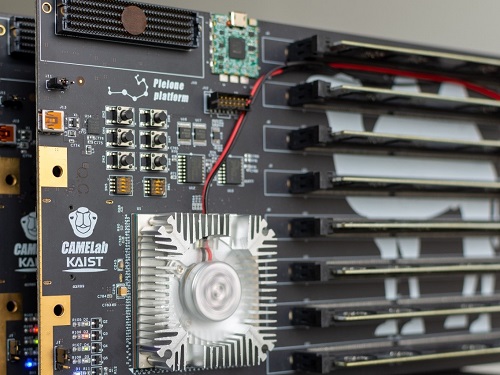

This NVMe controller technology developed by Professor Jung’s team comprises a wide range of basic hardware IP and key NVMe IP cores. To examine its actual performance, the team made an NVMe hardware controller prototype using OpenExpress, and designed all the logic provided by OpenExpress to operate at high frequency.

The field-programmable gate array (FPGA) memory card prototype developed using OpenExpress demonstrated increased input/output data processing capacity per second, supporting up to 7 gigabytes per second (GB/s) of bandwidth. This makes it suitable for research on ultra-high-speed, high-volume next-generation memory devices.
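To put the 7 GB/s figure in perspective, the short Python sketch below converts it to gigabits per second and estimates how many fixed-size requests per second that bandwidth could carry at most. The helper names and the 4,096-byte request size are assumptions chosen for illustration, GB is read as 10^9 bytes, and the result is an upper bound from arithmetic alone, not a measured result.

```python
def gbytes_to_gbits(gbytes_per_s):
    """Convert gigabytes per second to gigabits per second (8 bits per byte)."""
    return gbytes_per_s * 8


def max_requests_per_second(gbytes_per_s, request_bytes):
    """Upper bound on whole requests per second at a given bandwidth."""
    return (gbytes_per_s * 10**9) // request_bytes


print(gbytes_to_gbits(7))                # 56
print(max_requests_per_second(7, 4096))  # 1708984
```

The distinction matters because 7 gigabytes per second is eight times 7 gigabits per second.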

In a test comparing various storage server loads on devices, the team’s FPGA also showed 76% higher bandwidth and 68% lower input/output delay compared to Intel’s new high performance SSD (Optane SSD), which is sufficient for many researchers studying systems employing future memory devices. Depending on user needs, silicon devices can be synthesized as well, which is expected to further enhance performance.

The NVMe controller technology of Professor Jung’s team can be freely used and modified under the OpenExpress open-source end-user agreement for non-commercial use by all universities and research institutes. This makes it extremely useful for research on next-generation memory compatible NVMe controllers and software stacks.

“With the product of this study being disclosed to the world, universities and research institutes can now use controllers that used to be exclusive for only the world’s biggest companies, at no cost,” said Professor Jung. He went on to stress, “This is a meaningful first step in research of information storage device systems such as high-speed and volume next generation memory.”

This work was supported by a grant from MemRay, a company specializing in next generation memory development and distribution.

More details about the study can be found at http://camelab.org.

Image credit: Professor Myoungsoo Jung, KAIST

Image usage restrictions: News organizations may use or redistribute these figures and image, with proper attribution, as part of news coverage of this paper only.

-Publication:

Myoungsoo Jung. (2020). OpenExpress: Fully Hardware Automated Open Research Framework for Future Fast NVMe Devices. Presented in the Proceedings of the 2020 USENIX Annual Technical Conference (USENIX ATC ’20), Available online at https://www.usenix.org/system/files/atc20-jung.pdf

-Profile: Myoungsoo Jung

Associate Professor

m.jung@kaist.ac.kr

http://camelab.org

Computer Architecture and Memory Systems Laboratory

School of Electrical Engineering

http://kaist.ac.kr

Korea Advanced Institute of Science and Technology (KAIST)

Daejeon, Republic of Korea


2020.09.04 View 12359
