KAIST Develops Insect-Eye-Inspired Camera Capturing 9,120 Frames Per Second

< (From left) Bio and Brain Engineering PhD Student Jae-Myeong Kwon, Professor Ki-Hun Jeong, PhD Student Hyun-Kyung Kim, PhD Student Young-Gil Cha, and Professor Min H. Kim of the School of Computing >

The compound eyes of insects can detect fast-moving objects in parallel and, in low-light conditions, enhance sensitivity by integrating signals over time to determine motion. Inspired by these biological mechanisms, KAIST researchers have successfully developed a low-cost, high-speed camera that overcomes the limitations of frame rate and sensitivity faced by conventional high-speed cameras.

KAIST (represented by President Kwang Hyung Lee) announced on the 16th of January that a research team led by Professors Ki-Hun Jeong (Department of Bio and Brain Engineering) and Min H. Kim (School of Computing) has developed a novel bio-inspired camera capable of ultra-high-speed imaging with high sensitivity by mimicking the visual structure of insect eyes.

High-quality imaging under high-speed and low-light conditions is a critical challenge in many applications. While conventional high-speed cameras excel in capturing fast motion, their sensitivity decreases as frame rates increase because the time available to collect light is reduced.

To address this issue, the research team adopted an approach similar to insect vision, utilizing multiple optical channels and temporal summation. Unlike traditional monocular camera systems, the bio-inspired camera employs a compound-eye-like structure that allows for the parallel acquisition of frames from different time intervals.

< Figure 1. (A) Vision in a fast-eyed insect. Light reflected from swiftly moving objects sequentially stimulates the photoreceptors along the individual optical channels, called ommatidia, whose visual signals are processed separately and in parallel via the lamina and medulla. Each neural response is temporally summed to enhance the visual signals. Parallel processing and temporal summation together allow fast imaging in dim light. (B) High-speed and high-sensitivity microlens array camera (HS-MAC). A rolling shutter image sensor simultaneously acquires multiple frames by channel division, and temporal summation is performed in parallel to achieve high speed and sensitivity even in a low-light environment. The frame components of a single fragmented array image are then stitched into a single blurred frame, which is subsequently deblurred by compressive image reconstruction. >

During this process, light is accumulated over overlapping time periods for each frame, increasing the signal-to-noise ratio. The researchers demonstrated that their bio-inspired camera could capture objects up to 40 times dimmer than those detectable by conventional high-speed cameras.
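The link between accumulating light over time and a higher signal-to-noise ratio can be illustrated with a small simulation. This is only a sketch under simplifying assumptions that are not taken from the paper: each exposure contributes the same signal plus independent Gaussian noise, and the function name `summed_snr` and all numeric values are hypothetical. It does not model the camera's overlapping exposure windows and is not meant to reproduce the reported 40-fold figure.

```python
import random
import statistics


def summed_snr(signal_per_frame, noise_sigma, n_frames, trials=20000, seed=0):
    """Estimate the SNR of one pixel after summing n_frames noisy exposures.

    Assumption: noise is additive, Gaussian, and independent between exposures.
    """
    rng = random.Random(seed)
    sums = [
        sum(signal_per_frame + rng.gauss(0.0, noise_sigma) for _ in range(n_frames))
        for _ in range(trials)
    ]
    # SNR is taken here as mean of the summed value over its standard deviation.
    return statistics.mean(sums) / statistics.stdev(sums)


single = summed_snr(signal_per_frame=1.0, noise_sigma=2.0, n_frames=1)
summed = summed_snr(signal_per_frame=1.0, noise_sigma=2.0, n_frames=16)
snr_gain = summed / single
print(f"SNR gain from summing 16 exposures: {snr_gain:.2f}")
```

Under these assumptions the signal grows in proportion to the number of summed exposures while the noise grows only with its square root, so the gain for 16 exposures comes out near 4.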

The team also introduced a "channel-splitting" technique to significantly enhance the camera's speed, achieving frame rates thousands of times faster than those supported by the image sensor used in the package. Additionally, a "compressed image restoration" algorithm was employed to remove the blur caused by frame integration and reconstruct sharp images.

The resulting bio-inspired camera is less than one millimeter thick and extremely compact, capable of capturing 9,120 frames per second while providing clear images in low-light conditions.

< Figure 2. A high-speed, high-sensitivity biomimetic camera packaged in an image sensor. It is made small enough to fit on a finger, with a thickness of less than 1 mm. >

The research team plans to extend this technology to develop advanced image processing algorithms for 3D imaging and super-resolution imaging, aiming for applications in biomedical imaging, mobile devices, and various other camera technologies.

Hyun-Kyung Kim, a doctoral student in the Department of Bio and Brain Engineering at KAIST and the study's first author, stated, “We have experimentally validated that the insect-eye-inspired camera delivers outstanding performance in high-speed and low-light imaging despite its small size. This camera opens up possibilities for diverse applications in portable camera systems, security surveillance, and medical imaging.”

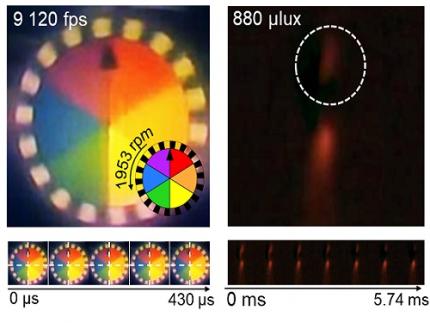

< Figure 3. Rotating plate and flame captured using the high-speed, high-sensitivity biomimetic camera. The rotating plate at 1,950 rpm was accurately captured at 9,120 fps. In addition, the pinch-off of the flame with a faint intensity of 880 µlux was accurately captured at 1,020 fps. >
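As a rough check on the numbers in the Figure 3 caption, the following sketch works out how far the plate turns between consecutive frames. The function names are illustrative only; the inputs (1,950 rpm and 9,120 fps) are the values quoted in the caption.

```python
def rotation_per_frame_deg(rpm, fps):
    """Degrees the plate rotates between two consecutive frames."""
    revs_per_second = rpm / 60.0
    return revs_per_second * 360.0 / fps


def frames_per_revolution(rpm, fps):
    """Number of frames captured during one full turn of the plate."""
    return fps / (rpm / 60.0)


deg_per_frame = rotation_per_frame_deg(1950, 9120)
frames_per_rev = frames_per_revolution(1950, 9120)
print(f"{deg_per_frame:.2f} degrees per frame")
print(f"{frames_per_rev:.1f} frames per revolution")
```

At these settings the plate advances by a little under 1.3 degrees per frame, so one revolution is spread over roughly 280 frames.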

This research was published in the international journal Science Advances in January 2025 (Paper Title: “Biologically-inspired microlens array camera for high-speed and high-sensitivity imaging”).

DOI: https://doi.org/10.1126/sciadv.ads3389

This study was supported by the Korea Research Institute for Defense Technology Planning and Advancement (KRIT) of the Defense Acquisition Program Administration (DAPA), the Ministry of Science and ICT, and the Ministry of Trade, Industry and Energy (MOTIE).

2025.01.16 View 8592

A Deep-Learned E-Skin Decodes Complex Human Motion

A deep-learning-powered electronic skin built around a single strain sensor can capture human motion from a distance. The single strain sensor placed on the wrist decodes complex five-finger motions in real time with a virtual 3D hand that mirrors the original motions. The deep neural network boosted by rapid situation learning (RSL) ensures stable operation regardless of its position on the surface of the skin.

Conventional approaches require many sensor networks that cover the entire curvilinear surfaces of the target area. Unlike conventional wafer-based fabrication, this laser fabrication provides a new sensing paradigm for motion tracking.

The research team, led by Professor Sungho Jo from the School of Computing, collaborated with Professor Seunghwan Ko from Seoul National University to design this new measuring system that extracts signals corresponding to multiple finger motions by generating cracks in metal nanoparticle films using laser technology. The sensor patch was then attached to a user’s wrist to detect the movement of the fingers.

The concept of this research started from the idea that pinpointing a single area would be more efficient for identifying movements than affixing sensors to every joint and muscle. To make this targeting strategy work, the system needs to accurately capture the signals from different areas at the point where they all converge, and then decouple the information entangled in the converged signals. To maximize users’ usability and mobility, the research team used a single-channeled sensor to generate the signals corresponding to complex hand motions.

The rapid situation learning (RSL) system collects data from arbitrary parts on the wrist and automatically trains the model in a real-time demonstration with a virtual 3D hand that mirrors the original motions. To enhance the sensitivity of the sensor, researchers used laser-induced nanoscale cracking.

This sensory system can track the motion of the entire body with a small sensory network and facilitate the indirect remote measurement of human motions, which is applicable for wearable VR/AR systems.

The research team said they focused on two tasks while developing the sensor. First, they encoded the sensor signal patterns into a latent space encapsulating temporal sensor behavior; second, they mapped the latent vectors to finger motion metric spaces.

Professor Jo said, “Our system is expandable to other body parts. We already confirmed that the sensor is also capable of extracting gait motions from a pelvis. This technology is expected to provide a turning point in health-monitoring, motion tracking, and soft robotics.”

This study was featured in Nature Communications.

Publication:

Kim, K. K., et al. (2020) A deep-learned skin sensor decoding the epicentral human motions. Nature Communications. 11. 2149. https://doi.org/10.1038/s41467-020-16040-y

Link to download the full-text paper:

https://www.nature.com/articles/s41467-020-16040-y.pdf

Profile: Professor Sungho Jo

shjo@kaist.ac.kr

http://nmail.kaist.ac.kr

Neuro-Machine Augmented Intelligence Lab

School of Computing

College of Engineering

KAIST

2020.06.10 View 14462
