machine learning

KAIST Develops AI to Easily Find Promising Materials That Capture Only CO₂

< Photo 1. (From left) Professor Jihan Kim, Ph.D. candidate Yunsung Lim and Dr. Hyunsoo Park of the Department of Chemical and Biomolecular Engineering >

To help avert the climate crisis, it is essential to actively remove CO₂ that has already been emitted. Direct air capture (DAC), a technology that extracts CO₂ directly from the air, is therefore gaining attention. However, capturing pure CO₂ is difficult because of the water vapor (H₂O) also present in the air. KAIST researchers have used machine learning to identify the most promising CO₂-capturing materials among metal-organic frameworks (MOFs), a key class of materials studied for this technology.

KAIST (President Kwang Hyung Lee) announced on the 29th of June that a research team led by Professor Jihan Kim from the Department of Chemical and Biomolecular Engineering, in collaboration with a team at Imperial College London, has developed a machine-learning-based simulation method that can quickly and accurately screen MOFs best suited for atmospheric CO₂ capture.

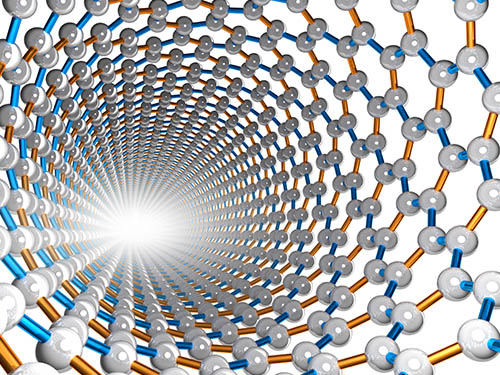

< Figure 1. Concept diagram of Direct Air Capture (DAC) technology and carbon capture using Metal-Organic Frameworks (MOFs). MOFs are promising porous materials capable of capturing carbon dioxide from the atmosphere, drawing attention as a core material for DAC technology. >

To overcome the difficulty of discovering high-performance materials due to the complexity of structures and the limitations of predicting intermolecular interactions, the research team developed a machine learning force field (MLFF) capable of precisely predicting the interactions between CO₂, water (H₂O), and MOFs. This new method enables calculations of MOF adsorption properties with quantum-mechanics-level accuracy at vastly faster speeds than before.

Using this system, the team screened over 8,000 experimentally synthesized MOF structures, identifying more than 100 promising candidates for CO₂ capture. Notably, this included new candidates that had not been uncovered by traditional force-field-based simulations. The team also analyzed the relationships between MOF chemical structure and adsorption performance, proposing seven key chemical features that will help in designing new materials for DAC.

< Figure 2. Concept diagram of adsorption simulation using Machine Learning Force Field (MLFF). The developed MLFF is applicable to various MOF structures and allows for precise calculation of adsorption properties by predicting interaction energies during repetitive Widom insertion simulations. It is characterized by simultaneously achieving high accuracy and low computational cost compared to conventional classical force fields. >
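The Widom insertion step mentioned in the caption can be illustrated with a minimal sketch. It is not the team's implementation: the Lennard-Jones function `lj_energy`, the cubic periodic box, and all parameter values are assumptions chosen for illustration, and in the workflow described above an MLFF would supply the interaction energy instead. The sketch inserts a probe molecule at random positions in a fixed framework and averages the Boltzmann factor of the host-guest energy.

```python
import math
import random

K_B = 0.0083144626  # Boltzmann constant in kJ/(mol*K)


def lj_energy(probe, atoms, box, epsilon=1.0, sigma=3.0):
    """Toy Lennard-Jones host-guest energy in kJ/mol (hypothetical parameters).

    Stand-in for the interaction model; an MLFF would be called here instead.
    """
    total = 0.0
    for atom in atoms:
        r2 = 0.0
        for p, a in zip(probe, atom):
            d = p - a
            d -= box * round(d / box)  # minimum-image convention in a cubic box
            r2 += d * d
        r2 = max(r2, 1e-12)  # guard against division by zero on exact overlap
        s6 = (sigma * sigma / r2) ** 3
        total += 4.0 * epsilon * (s6 * s6 - s6)
    return total


def widom_boltzmann_average(atoms, box, temperature, n_insertions,
                            energy_fn=lj_energy, seed=0):
    """Average of exp(-U / kT) over random trial insertions of one probe."""
    rng = random.Random(seed)
    beta = 1.0 / (K_B * temperature)
    acc = 0.0
    for _ in range(n_insertions):
        probe = (rng.uniform(0, box), rng.uniform(0, box), rng.uniform(0, box))
        u = energy_fn(probe, atoms, box)
        # Clip the exponent so strongly attractive energies cannot overflow.
        acc += math.exp(min(-beta * u, 700.0))
    return acc / n_insertions
```

For example, `widom_boltzmann_average([(5.0, 5.0, 5.0)], box=10.0, temperature=298.0, n_insertions=2000)` estimates the average for a single framework atom. At low loading this average is proportional to the Henry coefficient of the adsorbate, which is why the cost and accuracy of each energy evaluation dominate a screening of this kind.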

This research is recognized as a significant advance in the DAC field, greatly enhancing materials design and simulation by precisely predicting MOF-CO₂ and MOF-H₂O interactions.

The results of this research, with Ph.D. candidate Yunsung Lim and Dr. Hyunsoo Park of KAIST as co-first authors, were published in the international academic journal Matter on June 12.

※Paper Title: Accelerating CO₂ direct air capture screening for metal–organic frameworks with a transferable machine learning force field

※DOI: 10.1016/j.matt.2025.102203

This research was supported by the Saudi Aramco-KAIST CO₂ Management Center and the Ministry of Science and ICT's Global C.L.E.A.N. Project.

2025.06.29 View 266

KAIST & CMU Unveil Amuse, a Songwriting AI Collaborator to Help Create Music

Wouldn't it be great if music creators had someone to brainstorm with, help them when they're stuck, and explore different musical directions together? Researchers at KAIST and Carnegie Mellon University (CMU) have developed an AI technology that acts like a fellow songwriter who helps create music.

A research team led by Professor Sung-Ju Lee of the School of Electrical Engineering at KAIST (President Kwang-Hyung Lee) has developed Amuse, an AI-based music creation support system, in collaboration with CMU. The research was presented at the ACM Conference on Human Factors in Computing Systems (CHI), one of the world’s top conferences in human-computer interaction, held in Yokohama, Japan from April 26 to May 1. It received the Best Paper Award, given to only the top 1% of all submissions.

< (From left) Professor Chris Donahue of Carnegie Mellon University, Ph.D. Student Yewon Kim and Professor Sung-Ju Lee of the School of Electrical Engineering >

The system developed by Professor Sung-Ju Lee’s research team, Amuse, is an AI-based system that converts various forms of inspiration such as text, images, and audio into harmonic structures (chord progressions) to support composition.

For example, if a user inputs a phrase, image, or sound clip such as “memories of a warm summer beach”, Amuse automatically generates and suggests chord progressions that match the inspiration.

Unlike existing generative AI, Amuse respects the user's creative flow and encourages creative exploration through an interactive workflow in which AI suggestions can be flexibly integrated and modified.

The core technology of the Amuse system is a generation method that blends two approaches: a large language model generates chord progressions based on the user's prompt and inspiration, while another AI model, trained on real music data, filters out awkward or unnatural results using rejection sampling.

< Figure 1. Amuse system configuration. After music keywords are extracted from user input, a large language model generates a chord progression, which is refined through rejection sampling (left). Chord extraction from audio input is also possible (right). The bottom shows an example visualizing the chord structure of the generated progression. >
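The generate-then-filter idea can be sketched with a toy rejection sampler. This is an illustration under stated assumptions, not the Amuse implementation: `propose_progression` stands in for the language-model proposal step, and `plausibility` with its hand-written `COMMON_MOVES` table stands in for the model trained on real music data. Both are hypothetical.

```python
import random

CHORDS = ["C", "Dm", "Em", "F", "G", "Am"]

# Hypothetical set of "natural-sounding" chord moves; a trained model would
# supply these judgments in a real system.
COMMON_MOVES = {
    ("C", "F"), ("C", "G"), ("C", "Am"), ("F", "G"), ("F", "C"),
    ("G", "C"), ("G", "Am"), ("Am", "F"), ("Dm", "G"), ("Em", "Am"),
}


def propose_progression(rng, length=4):
    """Stand-in for the language-model proposal: draws chords uniformly."""
    return [rng.choice(CHORDS) for _ in range(length)]


def plausibility(progression):
    """Toy acceptance probability: fraction of adjacent moves in COMMON_MOVES."""
    pairs = list(zip(progression, progression[1:]))
    if not pairs:
        return 1.0
    return sum(pair in COMMON_MOVES for pair in pairs) / len(pairs)


def rejection_sample(n_samples, seed=0, max_tries=100_000):
    """Keep proposing candidates; accept each with probability plausibility()."""
    rng = random.Random(seed)
    accepted = []
    for _ in range(max_tries):
        if len(accepted) == n_samples:
            break
        candidate = propose_progression(rng)
        if rng.random() < plausibility(candidate):
            accepted.append(candidate)
    return accepted
```

Calling `rejection_sample(5)` returns five progressions that passed the filter. Candidates with no plausible moves are never accepted, while partially plausible ones survive in proportion to their score, so the output stays varied instead of collapsing onto one "best" progression.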

In a user study with practicing musicians, the research team found that Amuse has high potential as a creative companion, or Co-Creative AI, a concept in which people and AI collaborate rather than having a generative AI simply put together a song.

The paper, authored by Ph.D. student Yewon Kim and Professor Sung-Ju Lee of the KAIST School of Electrical Engineering together with Professor Chris Donahue of Carnegie Mellon University, demonstrated the potential of creative AI system design in both academia and industry.

※ Paper title: Amuse: Human-AI Collaborative Songwriting with Multimodal Inspirations

※ DOI: https://doi.org/10.1145/3706598.3713818

※ Research demo video: https://youtu.be/udilkRSnftI?si=FNXccC9EjxHOCrm1

※ Research homepage: https://nmsl.kaist.ac.kr/projects/amuse/

Professor Sung-Ju Lee said, “Recent generative AI technology has raised concerns because it can directly imitate copyrighted content, thereby violating the copyright of the creator, or generate results one-sidedly regardless of the creator’s intention. Aware of this trend, the research team paid attention to what the creator actually needs and focused on designing an AI system centered on the creator.”

He continued, “Amuse is an attempt to explore the possibility of collaboration with AI while maintaining the initiative of the creator, and is expected to be a starting point for suggesting a more creator-friendly direction in the development of music creation tools and generative AI systems in the future.”

This research was conducted with the support of the National Research Foundation of Korea with funding from the government (Ministry of Science and ICT). (RS-2024-00337007)

2025.05.07 View 4753

Seanie Lee of KAIST Kim Jaechul Graduate School of AI Named a 2023 Apple Scholar in AI/ML

Seanie Lee, a Ph.D. candidate at the Kim Jaechul Graduate School of AI, has been selected as one of the Apple Scholars in AI/ML PhD fellowship program recipients for 2023. Lee, advised by Sung Ju Hwang and Juho Lee, is a rising star in AI.

< Seanie Lee of KAIST Kim Jaechul Graduate School of AI >

The Apple Scholars in AI/ML PhD fellowship program, launched in 2020, aims to discover and support young researchers with a promising future in computer science. Each year, a handful of graduate students in related fields worldwide are selected for the program. For the following two years, the selected students are provided with financial support for research, international conference attendance, internship opportunities, and mentorship by an Apple engineer.

This year, 22 PhD students were selected from leading universities worldwide, including Johns Hopkins University, MIT, Stanford University, Imperial College London, Edinburgh University, Tsinghua University, HKUST, and Technion. Seanie Lee is the first Korean student to be selected for the program.

Lee’s research focuses on transfer learning, a subfield of AI that takes models pre-trained on large datasets, such as images or text corpora, and adapts them to new purposes.

(*text corpus: a collection of text resources in computer-readable forms)

His work aims to improve the performance of transfer learning by developing new data augmentation methods that allow for more effective training using few training data samples and new regularization techniques that prevent the overfitting of large AI models to training data. He has published 11 papers, all of which were accepted to top-tier conferences such as the Annual Meeting of the Association for Computational Linguistics (ACL), International Conference on Learning Representations (ICLR), and Annual Conference on Neural Information Processing Systems (NeurIPS).

“Being selected as one of the Apple Scholars in AI/ML PhD fellowship program is a great motivation for me,” said Lee. “So far, AI research has been largely focused on computer vision and natural language processing, but I want to push the boundaries now and use modern tools of AI to solve problems in natural science, like physics.”

2023.04.20 View 8104

Yuji Roh Awarded 2022 Microsoft Research PhD Fellowship

KAIST PhD candidate Yuji Roh of the School of Electrical Engineering (advisor: Prof. Steven Euijong Whang) was selected as a recipient of the 2022 Microsoft Research PhD Fellowship.

< KAIST PhD candidate Yuji Roh (advisor: Prof. Steven Euijong Whang) >

The Microsoft Research PhD Fellowship is a scholarship program that recognizes outstanding graduate students for their exceptional and innovative research in areas relevant to computer science and related fields. This year, 36 people from around the world received the fellowship, and Yuji Roh from KAIST EE is the only recipient from universities in Korea. Each selected fellow will receive a $10,000 scholarship and an opportunity to intern at Microsoft under the guidance of an experienced researcher.

Yuji Roh was named a fellow in the field of “Machine Learning” for her outstanding achievements in Trustworthy AI. Her research highlights include designing a state-of-the-art fair training framework using batch selection and developing novel algorithms for both fair and robust training. Her work has been presented at top machine learning conferences, including ICML, ICLR, and NeurIPS. She also co-presented a tutorial on Trustworthy AI at the top data mining conference ACM SIGKDD. She is currently interning at the NVIDIA Research AI Algorithms Group, developing large-scale real-world fair AI frameworks.

The list of fellowship recipients and the interview videos are available on the Microsoft website and YouTube.

The list of recipients: https://www.microsoft.com/en-us/research/academic-program/phd-fellowship/2022-recipients/

Interview (Global): https://www.youtube.com/watch?v=T4Q-XwOOoJc

Interview (Asia): https://www.youtube.com/watch?v=qwq3R1XU8UE

[Highlighted research achievements by Yuji Roh: Fair batch selection framework]

[Highlighted research achievements by Yuji Roh: Fair and robust training framework]

2022.10.28 View 15031

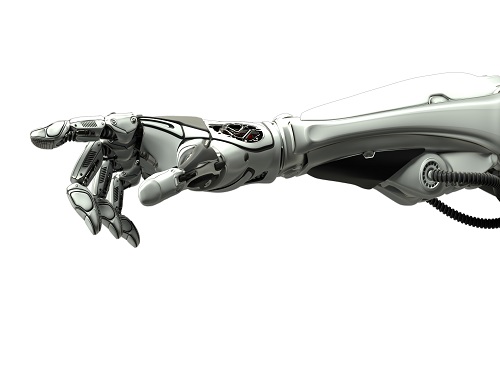

Decoding Brain Signals to Control a Robotic Arm

Advanced brain-machine interface system successfully interprets arm movement directions from neural signals in the brain

Researchers have developed a mind-reading system for decoding neural signals from the brain during arm movement. The method, described in the journal Applied Soft Computing, can be used by a person to control a robotic arm through a brain-machine interface (BMI).

A BMI is a device that translates nerve signals into commands to control a machine, such as a computer or a robotic limb. There are two main techniques for monitoring neural signals in BMIs: electroencephalography (EEG) and electrocorticography (ECoG).

EEG records signals from electrodes on the surface of the scalp and is widely used because it is non-invasive, relatively cheap, safe and easy to use. However, EEG has low spatial resolution and picks up irrelevant neural signals, which makes it difficult to interpret an individual's intentions from the recording.

On the other hand, the ECoG is an invasive method that involves placing electrodes directly on the surface of the cerebral cortex below the scalp. Compared with the EEG, the ECoG can monitor neural signals with much higher spatial resolution and less background noise. However, this technique has several drawbacks.

“The ECoG is primarily used to find potential sources of epileptic seizures, meaning the electrodes are placed in different locations for different patients and may not be in the optimal regions of the brain for detecting sensory and movement signals,” explained Professor Jaeseung Jeong, a brain scientist at KAIST. “This inconsistency makes it difficult to decode brain signals to predict movements.”

To overcome these problems, Professor Jeong’s team developed a new method for decoding ECoG neural signals during arm movement. The system is based on a machine-learning system for analysing and predicting neural signals called an ‘echo-state network’ and a mathematical probability model called the Gaussian distribution.
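To make the two ingredients concrete, here is a minimal sketch of an echo state network with a Gaussian readout. It is an illustration under assumptions, not the decoder from the paper: the reservoir size, the row-sum weight scaling, the use of only the final reservoir state, and the diagonal-covariance Gaussian per class are all choices made here for brevity. A fixed random recurrent network turns an input sequence into a state vector, and each movement class is modeled by a Gaussian over those states.

```python
import math
import random


def make_reservoir(n_inputs, n_units, seed=0, scale=0.9):
    """Random, untrained input and recurrent weights for the reservoir."""
    rng = random.Random(seed)
    w_in = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_units)]
    w = [[rng.uniform(-1, 1) for _ in range(n_units)] for _ in range(n_units)]
    # Rescale each row so its absolute sum equals `scale` (< 1). This keeps the
    # spectral radius below 1, a sufficient condition for the echo state
    # property with tanh units.
    for row in w:
        norm = sum(abs(v) for v in row)
        for j in range(n_units):
            row[j] *= scale / norm
    return w_in, w


def final_state(signal, w_in, w):
    """Drive the reservoir with a sequence of input vectors; return the last state."""
    x = [0.0] * len(w)
    for u in signal:
        x = [
            math.tanh(
                sum(a * b for a, b in zip(w_in[i], u))
                + sum(a * b for a, b in zip(w[i], x))
            )
            for i in range(len(w))
        ]
    return x


def fit_gaussian_readout(states, labels, var_floor=1e-6):
    """Estimate a per-class mean and diagonal variance of reservoir states."""
    model = {}
    for label in set(labels):
        rows = [s for s, l in zip(states, labels) if l == label]
        n = len(rows)
        mean = [sum(col) / n for col in zip(*rows)]
        var = [
            sum((v - m) ** 2 for v in col) / n + var_floor
            for col, m in zip(zip(*rows), mean)
        ]
        model[label] = (mean, var)
    return model


def predict(state, model):
    """Return the class whose Gaussian gives the state the highest log-likelihood."""
    def log_likelihood(mean, var):
        return -0.5 * sum(
            math.log(2 * math.pi * v) + (s - m) ** 2 / v
            for s, m, v in zip(state, mean, var)
        )
    return max(model, key=lambda label: log_likelihood(*model[label]))
```

Only the readout is fitted; the reservoir weights stay random. That is the usual appeal of echo state networks when training data are scarce, as they are with recordings from a handful of patients.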

In the study, the researchers recorded ECoG signals from four individuals with epilepsy while they were performing a reach-and-grasp task. Because the ECoG electrodes were placed according to the potential sources of each patient’s epileptic seizures, only 22% to 44% of the electrodes were located in the regions of the brain responsible for controlling movement.

During the movement task, the participants were given visual cues, either by placing a real tennis ball in front of them, or via a virtual reality headset showing a clip of a human arm reaching forward in first-person view. They were asked to reach forward, grasp an object, then return their hand and release the object, while wearing motion sensors on their wrists and fingers. In a second task, they were instructed to imagine reaching forward without moving their arms.

The researchers monitored the signals from the ECoG electrodes during real and imaginary arm movements, and tested whether the new system could predict the direction of this movement from the neural signals. They found that the novel decoder successfully classified arm movements in 24 directions in three-dimensional space, both in the real and virtual tasks, and that the results were at least five times more accurate than chance. They also used a computer simulation to show that the novel ECoG decoder could control the movements of a robotic arm.

Overall, the results suggest that the new machine learning-based BMI system successfully used ECoG signals to interpret the direction of the intended movements. The next steps will be to improve the accuracy and efficiency of the decoder. In the future, it could be used in a real-time BMI device to help people with movement or sensory impairments.

This research was supported by the KAIST Global Singularity Research Program of 2021, Brain Research Program of the National Research Foundation of Korea funded by the Ministry of Science, ICT, and Future Planning, and the Basic Science Research Program through the National Research Foundation of Korea funded by the Ministry of Education.

-Publication: Hoon-Hee Kim, Jaeseung Jeong, “An electrocorticographic decoder for arm movement for brain-machine interface using an echo state network and Gaussian readout,” Applied Soft Computing, online December 31, 2021 (doi.org/10.1016/j.asoc.2021.108393)

-Profile: Professor Jaeseung Jeong, Department of Bio and Brain Engineering, College of Engineering, KAIST

2022.03.18 View 13477

Decoding Brain Signals to Control a Robotic Arm

Advanced brain-machine interface system successfully interprets arm movement directions from neural signals in the brain

Researchers have developed a mind-reading system for decoding neural signals from the brain during arm movement. The method, described in the journal Applied Soft Computing, can be used by a person to control a robotic arm through a brain-machine interface (BMI).

A BMI is a device that translates nerve signals into commands to control a machine, such as a computer or a robotic limb. There are two main techniques for monitoring neural signals in BMIs: electroencephalography (EEG) and electrocorticography (ECoG).

The EEG exhibits signals from electrodes on the surface of the scalp and is widely employed because it is non-invasive, relatively cheap, safe and easy to use. However, the EEG has low spatial resolution and detects irrelevant neural signals, which makes it difficult to interpret the intentions of individuals from the EEG.

On the other hand, the ECoG is an invasive method that involves placing electrodes directly on the surface of the cerebral cortex below the scalp. Compared with the EEG, the ECoG can monitor neural signals with much higher spatial resolution and less background noise. However, this technique has several drawbacks.

“The ECoG is primarily used to find potential sources of epileptic seizures, meaning the electrodes are placed in different locations for different patients and may not be in the optimal regions of the brain for detecting sensory and movement signals,” explained Professor Jaeseung Jeong, a brain scientist at KAIST. “This inconsistency makes it difficult to decode brain signals to predict movements.”

To overcome these problems, Professor Jeong’s team developed a new method for decoding ECoG neural signals during arm movement. The system is based on a machine-learning system for analysing and predicting neural signals called an ‘echo-state network’ and a mathematical probability model called the Gaussian distribution.

In the study, the researchers recorded ECoG signals from four individuals with epilepsy while they were performing a reach-and-grasp task. Because the ECoG electrodes were placed according to the potential sources of each patient’s epileptic seizures, only 22% to 44% of the electrodes were located in the regions of the brain responsible for controlling movement.

During the movement task, the participants were given visual cues, either by placing a real tennis ball in front of them, or via a virtual reality headset showing a clip of a human arm reaching forward in first-person view. They were asked to reach forward, grasp an object, then return their hand and release the object, while wearing motion sensors on their wrists and fingers. In a second task, they were instructed to imagine reaching forward without moving their arms.

The researchers monitored the signals from the ECoG electrodes during real and imaginary arm movements, and tested whether the new system could predict the direction of this movement from the neural signals. They found that the novel decoder successfully classified arm movements in 24 directions in three-dimensional space, both in the real and virtual tasks, and that the results were at least five times more accurate than chance. They also used a computer simulation to show that the novel ECoG decoder could control the movements of a robotic arm.

Overall, the results suggest that the new machine learning-based BCI system successfully used ECoG signals to interpret the direction of the intended movements. The next steps will be to improve the accuracy and efficiency of the decoder. In the future, it could be used in a real-time BMI device to help people with movement or sensory impairments.

This research was supported by the KAIST Global Singularity Research Program of 2021, Brain Research Program of the National Research Foundation of Korea funded by the Ministry of Science, ICT, and Future Planning, and the Basic Science Research Program through the National Research Foundation of Korea funded by the Ministry of Education.

-Publication

Hoon-Hee Kim, Jaeseung Jeong, “An electrocorticographic decoder for arm movement for brain-machine interface using an echo state network and Gaussian readout,” Applied Soft Computing, online December 31, 2021 (doi.org/10.1016/j.asoc.2021.108393)

-Profile

Professor Jaeseung Jeong

Department of Bio and Brain Engineering

College of Engineering

KAIST

2022.03.18 View 13477 -

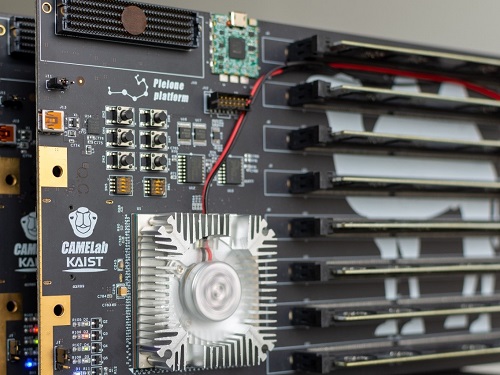

CXL-Based Memory Disaggregation Technology Opens Up a New Direction for Big Data Solution Frameworks

A KAIST team’s compute express link (CXL) provides new insights on memory disaggregation and ensures direct access and high-performance capabilities

A team from the Computer Architecture and Memory Systems Laboratory (CAMEL) at KAIST presented a new compute express link (CXL) solution whose directly accessible, high-performance memory disaggregation opens new directions for big data memory processing. Professor Myoungsoo Jung said the team’s technology significantly improves performance compared to existing remote direct memory access (RDMA)-based memory disaggregation.

CXL is a new dynamic multi-protocol based on peripheral component interconnect express (PCIe), designed for the efficient use of memory devices and accelerators. Many enterprise data centers and memory vendors are paying attention to it as the next-generation multi-protocol for the era of big data.

Emerging big data applications such as machine learning, graph analytics, and in-memory databases require large memory capacities. However, scaling out memory capacity through a conventional memory interface such as double data rate (DDR) is limited by the number of central processing units (CPUs) and memory controllers. Memory disaggregation, which allows a host to connect to another host’s memory or to memory nodes, has emerged as a result.

RDMA lets a host directly access another host’s memory via InfiniBand, a network protocol commonly used in data centers. Most existing memory disaggregation technologies employ RDMA to obtain a large memory capacity: a host shares another host’s memory by transferring data between local and remote memory.

Although RDMA-based memory disaggregation provides a large memory capacity to a host, two critical problems exist. First, scaling out the memory still requires adding an extra CPU: passive memory such as dynamic random-access memory (DRAM) cannot operate by itself, so it must be controlled by a CPU. Second, the redundant data copies and software fabric interventions of RDMA-based memory disaggregation lengthen access latency. For example, remote memory access latency in RDMA-based memory disaggregation is multiple orders of magnitude longer than local memory access.

To address these issues, Professor Jung’s team developed a CXL-based memory disaggregation framework that includes CXL-enabled customized CPUs, CXL devices, CXL switches, and CXL-aware operating system modules. The team’s CXL device is a purely passive and directly accessible memory node that contains multiple DRAM dual inline memory modules (DIMMs) and a CXL memory controller. Since the CXL memory controller supports the memory in the CXL device, a host can utilize the memory node without processor or software intervention. The team’s CXL switch scales out a host’s memory capacity by hierarchically connecting multiple CXL devices to the switch, allowing hundreds of devices or more. Atop the switches and devices, the team’s CXL-enabled operating system removes the redundant data copies and protocol conversion of conventional RDMA, which can significantly decrease access latency to the memory nodes.

In a test that loaded 64 B (cache line) data from memory pooling devices, CXL-based memory disaggregation showed 8.2 times higher data load performance than RDMA-based memory disaggregation, and performance similar to that of local DRAM. In the team’s evaluations on big data benchmarks such as a machine learning-based test, CXL-based memory disaggregation also showed up to 3.7 times higher performance than prior RDMA-based memory disaggregation technologies.

“Escaping from the conventional RDMA-based memory disaggregation, our CXL-based memory disaggregation framework can provide high scalability and performance for diverse datacenters and cloud service infrastructures,” said Professor Jung. He went on to stress, “Our CXL-based memory disaggregation research will bring about a new paradigm for memory solutions that will lead the era of big data.”

-Profile

Professor Myoungsoo Jung

Computer Architecture and Memory Systems Laboratory (CAMEL)

http://camelab.org

School of Electrical Engineering

KAIST

2022.03.16 View 24093

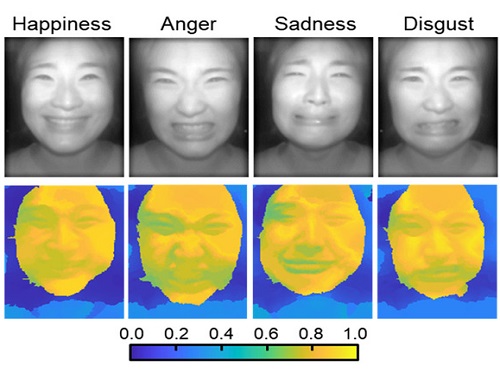

AI Light-Field Camera Reads 3D Facial Expressions

Machine-learned light-field camera reads facial expressions from high-contrast, illumination-invariant 3D facial images

A joint research team led by Professors Ki-Hun Jeong and Doheon Lee from the KAIST Department of Bio and Brain Engineering reported the development of a technique for facial expression detection by merging near-infrared light-field camera techniques with artificial intelligence (AI) technology.

Unlike a conventional camera, the light-field camera contains micro-lens arrays in front of the image sensor, which makes the camera small enough to fit into a smart phone, while allowing it to acquire the spatial and directional information of the light with a single shot. The technique has received attention as it can reconstruct images in a variety of ways including multi-views, refocusing, and 3D image acquisition, giving rise to many potential applications.

However, shadows caused by external light sources in the environment and optical crosstalk between the micro-lenses have kept existing light-field cameras from providing accurate image contrast and 3D reconstruction.

The joint research team applied a vertical-cavity surface-emitting laser (VCSEL) in the near-IR range to stabilize the accuracy of 3D image reconstruction, which previously depended on environmental light. When an external light source is shone on a face at 0-, 30-, and 60-degree angles, the light-field camera reduces image reconstruction errors by 54%. Additionally, by inserting a light-absorbing layer for visible and near-IR wavelengths between the micro-lens arrays, the team could minimize optical crosstalk while increasing the image contrast by 2.1 times.

Through this technique, the team could overcome the limitations of existing light-field cameras and was able to develop their NIR-based light-field camera (NIR-LFC), optimized for the 3D image reconstruction of facial expressions. Using the NIR-LFC, the team acquired high-quality 3D reconstruction images of facial expressions expressing various emotions regardless of the lighting conditions of the surrounding environment.

The facial expressions in the acquired 3D images were distinguished through machine learning with an average of 85% accuracy – a statistically significant figure compared to when 2D images were used. Furthermore, by calculating the interdependency of distance information that varies with facial expression in 3D images, the team could identify the information a light-field camera utilizes to distinguish human expressions.

Professor Ki-Hun Jeong said, “The sub-miniature light-field camera developed by the research team has the potential to become the new platform to quantitatively analyze the facial expressions and emotions of humans.” To highlight the significance of this research, he added, “It could be applied in various fields including mobile healthcare, field diagnosis, social cognition, and human-machine interactions.”

This research was published in Advanced Intelligent Systems online on December 16, under the title, “Machine-Learned Light-field Camera that Reads Facial Expression from High-Contrast and Illumination Invariant 3D Facial Images.” This research was funded by the Ministry of Science and ICT and the Ministry of Trade, Industry and Energy.

-Publication

“Machine-learned light-field camera that reads facial expression from high-contrast and illumination invariant 3D facial images,” Sang-In Bae, Sangyeon Lee, Jae-Myeong Kwon, Hyun-Kyung Kim, Kyung-Won Jang, Doheon Lee, Ki-Hun Jeong, Advanced Intelligent Systems, December 16, 2021 (doi.org/10.1002/aisy.202100182)

-Profile

Professor Ki-Hun Jeong

Biophotonic Laboratory

Department of Bio and Brain Engineering

KAIST

Professor Doheon Lee

Department of Bio and Brain Engineering

KAIST

2022.01.21 View 13833

KAIST ISPI Releases Report on the Global AI Innovation Landscape

Providing key insights for building a successful AI ecosystem

The KAIST Innovation Strategy and Policy Institute (ISPI) has launched a report on the global innovation landscape of artificial intelligence in collaboration with Clarivate Plc. The report shows that AI has become a key technology and that cross-industry learning is an important AI innovation. It also stresses that the quality of innovation, not volume, is a critical success factor in technological competitiveness.

Key findings of the report include:

• Neural networks and machine learning have been unrivaled in terms of scale and growth (more than 46%), and most other AI technologies show a growth rate of more than 20%.

• Although Mainland China has shown the highest growth rate in terms of AI inventions, the influence of Chinese AI is relatively low. In contrast, the United States holds a leading position in AI-related inventions in terms of both quantity and influence.

• The U.S. and Canada have built an industry-oriented AI technology development ecosystem through organic cooperation with both academia and the Government. Mainland China and South Korea, by contrast, have a government-driven AI technology development ecosystem with relatively low qualitative outputs from the sector.

• The U.S., the U.K., and Canada have a relatively high proportion of inventions in robotics and autonomous control, whereas in Mainland China and South Korea, machine learning and neural networks are making progress. Each country/region produces high-quality inventions in their predominant AI fields, while the U.S. has produced high-impact inventions in almost all AI fields.

“The driving forces in building a sustainable AI innovation ecosystem are important national strategies. A country’s future AI capabilities will be determined by how quickly and robustly it develops its own AI ecosystem and how well it transforms the existing industry with AI technologies. Countries that build a successful AI ecosystem have the potential to accelerate growth while absorbing the AI capabilities of other countries. AI talents are already moving to countries with excellent AI ecosystems,” said Director of the ISPI Wonjoon Kim.

“AI, together with other high-tech IT technologies including big data and the Internet of Things, is accelerating the digital transformation by leading an intelligent hyper-connected society and enabling the convergence of technology and business. With the rapid growth of AI innovation, AI applications are also expanding in various ways across industries and in our lives,” added Justin Kim, Special Advisor at the ISPI and a co-author of the report.

2021.12.21 View 9466

Deep Learning Framework to Enable Material Design in Unseen Domain

Researchers propose a deep neural network-based forward design space exploration using active transfer learning and data augmentation

A new study proposed a deep neural network-based forward design approach that enables an efficient search for superior materials far beyond the domain of the initial training set. This approach compensates for the weak predictive power of neural networks on an unseen domain through gradual updates of the neural network with active transfer learning and data augmentation methods.

Professor Seunghwa Ryu believes that this study will help address a variety of optimization problems that have an astronomical number of possible design configurations. For the grid composite optimization problem, the proposed framework was able to provide excellent designs close to the global optima, even with the addition of a very small dataset corresponding to less than 0.5% of the initial training dataset size. This study was reported in npj Computational Materials last month.

“We wanted to mitigate the limitation of the neural network, weak predictive power beyond the training set domain for the material or structure design,” said Professor Ryu from the Department of Mechanical Engineering.

Neural network-based generative models have been actively investigated as an inverse design method for finding novel materials in a vast design space. However, the applicability of conventional generative models is limited because they cannot access data outside the range of training sets. Advanced generative models that were devised to overcome this limitation also suffer from weak predictive power for the unseen domain.

Professor Ryu’s team, in collaboration with researchers from Professor Grace Gu’s group at UC Berkeley, devised a design method that simultaneously expands the domain using the strong predictive power of a deep neural network and searches for the optimal design by repetitively performing three key steps.

First, it searches for a few candidates with improved properties located close to the training set via genetic algorithms, by mixing superior designs within the training set. Then, it checks whether the candidates really have improved properties, and expands the training set by duplicating the validated designs via a data augmentation method. Finally, it expands the reliable prediction domain by updating the neural network with the new superior designs via transfer learning. Because the expansion proceeds along relatively narrow but correct routes toward the optimal design (depicted in the schematic of Fig. 1), the framework enables an efficient search.
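The three steps can be sketched on a toy problem. The code below is only an illustration under loud assumptions: a linear surrogate updated by warm-started gradient steps stands in for the deep neural network and its transfer learning, `true_property` is a made-up stand-in for an expensive simulation, augmentation is plain duplication, and every name and parameter is hypothetical.

```python
import random

N = 16  # number of binary design variables (hypothetical toy size)

def true_property(design):
    """Stand-in for an expensive evaluation: rewards 1s and adjacent pairs of 1s."""
    return sum(design) + sum(a * b for a, b in zip(design, design[1:]))

def predict(weights, design):
    """Linear surrogate (assumption: replaces the deep neural network)."""
    return weights[0] + sum(w * x for w, x in zip(weights[1:], design))

def update_surrogate(weights, data, epochs=50, lr=0.01):
    """Continue training from the current weights, a warm start standing in
    for transfer learning."""
    for _ in range(epochs):
        for design, y in data:
            err = predict(weights, design) - y
            weights[0] -= lr * err
            for i, x in enumerate(design):
                weights[i + 1] -= lr * err * x
    return weights

def propose(weights, data, rng, n_children=200, n_keep=5):
    """Step 1: mix superior designs (crossover + one mutation), rank by surrogate."""
    elite = [d for d, _ in sorted(data, key=lambda p: p[1], reverse=True)[:10]]
    seen = {tuple(d) for d, _ in data}
    children = set()
    for _ in range(n_children):
        a, b = rng.sample(elite, 2)
        cut = rng.randrange(1, N)
        child = a[:cut] + b[cut:]
        flip = rng.randrange(N)
        child = child[:flip] + [1 - child[flip]] + child[flip + 1:]
        if tuple(child) not in seen:
            children.add(tuple(child))
    ranked = sorted(children, key=lambda c: predict(weights, c), reverse=True)
    return [list(c) for c in ranked[:n_keep]]

def design_loop(n_rounds=10, seed=0):
    """Repeat the three steps; return the best true property after each round."""
    rng = random.Random(seed)
    data = []
    for _ in range(50):  # initial training set of deliberately poor designs
        d = [1 if rng.random() < 0.2 else 0 for _ in range(N)]
        data.append((d, true_property(d)))
    weights = update_surrogate([0.0] * (N + 1), data)
    history = [max(y for _, y in data)]
    for _ in range(n_rounds):
        best = history[-1]
        candidates = propose(weights, data, rng)
        # Step 2: validate with the true evaluator and keep real improvements,
        # then augment the training set by duplicating them.
        improved = [(c, true_property(c)) for c in candidates]
        improved = [(c, y) for c, y in improved if y > best]
        data += improved * 3
        # Step 3: update the surrogate on the new superior designs only.
        if improved:
            weights = update_surrogate(weights, improved * 3, epochs=20)
        history.append(max(y for _, y in data))
    return history
```

Calling `design_loop()` returns the best validated property after each round; because designs are only ever added to the training set, that value can stay flat or rise but never fall.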

As a data-hungry method, a deep neural network model tends to have reliable predictive power only within and near the domain of the training set. When the optimal configuration of materials and structures lies far beyond the initial training set, which frequently is the case, neural network-based design methods suffer from weak predictive power and become inefficient.

Researchers expect that the framework will be applicable to a wide range of optimization problems in other science and engineering disciplines with astronomically large design spaces, because it provides an efficient way of gradually expanding the reliable prediction domain toward the target design while avoiding the risk of being stuck in local minima. In particular, because the method is less data-hungry, design problems in which data generation is time-consuming and expensive will benefit most from this new framework.

The research team is currently applying the optimization framework to the design of metamaterial structures, segmented thermoelectric generators, and optimal sensor distributions. “From these sets of on-going studies, we expect to better recognize the pros and cons, and the potential of the suggested algorithm. Ultimately, we want to devise more efficient machine learning-based design approaches,” explained Professor Ryu.

This study was funded by the National Research Foundation of Korea and the KAIST Global Singularity Research Project.

-Publication

Yongtae Kim, Youngsoo, Charles Yang, Kundo Park, Grace X. Gu, and Seunghwa Ryu, “Deep learning framework for material design space exploration using active transfer learning and data augmentation,” npj Computational Materials (https://doi.org/10.1038/s41524-021-00609-2)

-Profile

Professor Seunghwa Ryu

Mechanics & Materials Modeling Lab

Department of Mechanical Engineering

KAIST

2021.09.29 View 13444

Prof. Changho Suh Named the 2021 James L. Massey Awardee

Professor Changho Suh from the School of Electrical Engineering was named the recipient of the 2021 James L. Massey Award. The award recognizes outstanding achievement in research and teaching by young scholars in the information theory community. The award is named in honor of James L. Massey, who was an internationally acclaimed pioneer in digital communications and a revered teacher and mentor to communications engineers.

Professor Suh is a recipient of numerous awards, including the 2021 James L. Massey Research & Teaching Award for Young Scholars from the IEEE Information Theory Society, the 2019 AFOSR Grant, the 2019 Google Education Grant, the 2018 IEIE/IEEE Joint Award, the 2015 IEIE Haedong Young Engineer Award, the 2013 IEEE Communications Society Stephen O. Rice Prize, the 2011 David J. Sakrison Memorial Prize (the best dissertation award in UC Berkeley EECS), the 2009 IEEE ISIT Best Student Paper Award, the 2020 LINKGENESIS Best Teacher Award (the campus-wide Grand Prize in Teaching), and four Departmental Teaching Awards (2013, 2019, 2020, 2021).

Dr. Suh is an IEEE Information Theory Society Distinguished Lecturer, the General Chair of the Inaugural IEEE East Asian School of Information Theory, and a Member of the Young Korean Academy of Science and Technology. He is also an Associate Editor of Machine Learning for the IEEE Transactions on Information Theory, the Editor of the IEEE Information Theory Newsletter, a Column Editor for IEEE BITS, the Information Theory Magazine, an Area Chair of NeurIPS 2021, and a member of the Senior Program Committee of IJCAI 2019–2021.

2021.07.27 View 9470 -

Alumnus Professor Cho at NYU Endows Scholarship for Female Computer Scientists

Alumnus Professor Kyunghyun Cho at New York University endowed the “Lim Mi-Sook Scholarship” at KAIST for female computer scientists in honor of his mother.

Professor Cho, a graduate of the School of Computing in 2011, completed his master’s and PhD at Aalto University in Finland in 2014. He has been teaching at NYU since 2015 and received the Samsung Ho-Am Prize for Engineering this year in recognition of his outstanding research in the fields of machine learning and AI.

“I hope this will encourage young female students to continue their studies in computer science and encourage others to join the discipline in the future, thereby contributing to building a more diverse community of computer scientists,” he said in his written message. His parents and President Kwang Hyung Lee attended the donation ceremony held at the Daejeon campus on June 24.

Professor Cho developed a neural network-based machine translation algorithm that is widely used in translation engines. His contributions to AI-powered translation and innovation in the industry led him to win one of the most prestigious prizes in Korea.

He decided to donate his 300 million KRW prize money to fund two 100 million KRW scholarships named after each of his parents: the Lim Mi-Sook Scholarship is for female computer scientists and the Bae-Gyu Scholarly Award for Classics is in honor of his father, who is a Korean literature professor at Soongsil University in Korea. He will also fund a scholarship at Aalto University.

“I recall there were less than five female students out of 70 students in my cohort during my undergraduate studies at KAIST even in later 2000s. Back then, it just felt natural that boys majored computer science and girls in biology.”

He said he wanted to acknowledge his mother, who had to give up her teaching career in the 1980s to take care of her children. “It made all of us think more about the burden of raising children that is placed often disproportionately on mothers and how it should be better distributed among parents, relatives, and society in order to ensure and maximize equity in education as well as career development and advances.”

He added, “As a small step to help build a more diverse environment, I have decided to donate to this fund to provide a small supplement to the small group of female students majoring in computer science.”

2021.07.01 View 10533 -

Streamlining the Process of Materials Discovery

The materials platform M3I3 reduces the time for materials discovery by reverse engineering future materials using multiscale/multimodal imaging and machine learning of the processing-structure-properties relationship

Developing new materials and novel processes has continued to change the world. The M3I3 Initiative at KAIST has led to new insights into advancing materials development by implementing breakthroughs in materials imaging that have created a paradigm shift in the discovery of materials. The Initiative features the multiscale modeling and imaging of structure and property relationships and materials hierarchies combined with the latest material-processing data.

The research team led by Professor Seungbum Hong analyzed the materials research projects reported by leading global institutes and research groups, and derived a quantitative model using machine learning with a scientific interpretation. This process embodies the research goal of the M3I3: Materials and Molecular Modeling, Imaging, Informatics and Integration.

The researchers discussed the role of multiscale materials and molecular imaging combined with machine learning and also presented a future outlook for developments and the major challenges of M3I3. By building this model, the research team envisions creating desired sets of properties for materials and obtaining the optimum processing recipes to synthesize them.

“The development of various microscopy and diffraction tools with the ability to map the structure, property, and performance of materials at multiscale levels and in real time enabled us to think that materials imaging could radically accelerate materials discovery and development,” says Professor Hong.

“We plan to build an M3I3 repository of searchable structural and property maps using FAIR (Findable, Accessible, Interoperable, and Reusable) principles to standardize best practices as well as streamline the training of early career researchers.”

One of the examples that shows the power of structure-property imaging at the nanoscale is the development of future materials for emerging nonvolatile memory devices. Specifically, the research team focused on microscopy using photons, electrons, and physical probes on the multiscale structural hierarchy, as well as structure-property relationships to enhance the performance of memory devices.

“M3I3 is an algorithm for performing the reverse engineering of future materials. Reverse engineering starts by analyzing the structure and composition of cutting-edge materials or products. Once the research team determines the performance of our targeted future materials, we need to know the candidate structures and compositions for producing the future materials.”

The research team has built a data-driven experimental design based on traditional NCM (nickel, cobalt, and manganese) cathode materials. With this, the research team expanded their future direction for achieving even higher discharge capacity, which can be realized via Li-rich cathodes.

However, one of the major challenges was the limited amount of available data describing the properties of Li-rich cathodes. To mitigate this problem, the researchers proposed two solutions. First, they would build a machine-learning-guided data generator for data augmentation. Second, they would use a machine-learning method based on ‘transfer learning.’ Since the NCM cathode database shares common features with Li-rich cathodes, the model trained on NCM data could be repurposed to assist Li-rich predictions. With the pretrained model and transfer learning, the team expects to achieve outstanding predictions for Li-rich cathodes even with a small data set.
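The transfer-learning argument can be made concrete with a deliberately small example. The sketch below is an illustration under stated assumptions, not the team's model: the "source" and "target" numbers are made-up toy values rather than cathode measurements, the model is a one-variable straight line, and the helper names `fit_line` and `refit_offset` are hypothetical. The slope learned from the large source set plays the role of the shared feature and is kept frozen, and only the offset is refitted on the two target points.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = w * x + b; returns (w, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    w = sxy / sxx
    return w, mean_y - w * mean_x


def refit_offset(w, xs, ys):
    """Transfer step: keep the pretrained slope w frozen and refit only b."""
    return sum(y - w * x for x, y in zip(xs, ys)) / len(xs)


# Large synthetic "source" data set (toy stand-in for the well-populated database).
source_x = [float(i) for i in range(20)]
source_y = [2.0 * x + 1.0 for x in source_x]

# Tiny, noisy synthetic "target" data set: same slope, different offset (about 3).
target_x = [1.0, 1.2]
target_y = [5.3, 5.1]

w_src, b_src = fit_line(source_x, source_y)       # pretraining on source data
b_new = refit_offset(w_src, target_x, target_y)   # fine-tuning on target data
w_scratch, b_scratch = fit_line(target_x, target_y)  # no transfer, for contrast

if __name__ == "__main__":
    print("transferred model: w =", w_src, "b =", b_new)
    print("from scratch:      w =", w_scratch, "b =", b_scratch)
```

With only two noisy target points, the from-scratch fit has to estimate both slope and offset and ends up with a slope of the wrong sign, while the transferred model only has to estimate the offset. Real cases share structure far less cleanly than this toy does, so the benefit depends on how similar the two data sets actually are.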

With advances in experimental imaging and the availability of well-resolved information and big data, along with significant advances in high-performance computing and a worldwide thrust toward a general, collaborative, integrative, and on-demand research platform, there is a clear confluence of the capabilities required for advancing the M3I3 Initiative.

Professor Hong said, “Once we succeed in using the inverse ‘property−structure−processing’ solver to develop cathode, anode, electrolyte, and membrane materials for high energy density Li-ion batteries, we will expand our scope of materials to battery/fuel cells, aerospace, automobiles, food, medicine, and cosmetic materials.”

The review was published in ACS Nano in March. This study was conducted through collaborations with Dr. Chi Hao Liow, Professor Jong Min Yuk, Professor Hye Ryung Byon, Professor Yongsoo Yang, Professor EunAe Cho, Professor Pyuck-Pa Choi, and Professor Hyuck Mo Lee at KAIST, Professor Joshua C. Agar at Lehigh University, Dr. Sergei V. Kalinin at Oak Ridge National Laboratory, Professor Peter W. Voorhees at Northwestern University, and Professor Peter Littlewood at the University of Chicago (Article title: Reducing Time to Discovery: Materials and Molecular Modeling, Imaging, Informatics, and Integration). This work was supported by the KAIST Global Singularity Research Program for 2019 and 2020.

Publication:

“Reducing Time to Discovery: Materials and Molecular Modeling, Imaging, Informatics and Integration,” S. Hong, C. H. Liow, J. M. Yuk, H. R. Byon, Y. Yang, E. Cho, J. Yeom, G. Park, H. Kang, S. Kim, Y. Shim, M. Na, C. Jeong, G. Hwang, H. Kim, H. Kim, S. Eom, S. Cho, H. Jun, Y. Lee, A. Baucour, K. Bang, M. Kim, S. Yun, J. Ryu, Y. Han, A. Jetybayeva, P.-P. Choi, J. C. Agar, S. V. Kalinin, P. W. Voorhees, P. Littlewood, and H. M. Lee, ACS Nano 15, 3, 3971–3995 (2021) https://doi.org/10.1021/acsnano.1c00211

Profile:

Seungbum Hong, PhD

Associate Professor

seungbum@kaist.ac.kr

http://mii.kaist.ac.kr

Department of Materials Science and Engineering

KAIST

2021.04.05 View 15192

Streamlining the Process of Materials Discovery

The materials platform M3I3 reduces the time for materials discovery by reverse engineering future materials using multiscale/multimodal imaging and machine learning of the processing-structure-properties relationship