User interface

K-Glass 3 Offers Users a Keyboard to Type Text

KAIST researchers upgraded their smart glasses with a low-power multicore processor to employ stereo vision and deep-learning algorithms, making the user interface and experience more intuitive and convenient.

K-Glass, KAIST’s augmented reality (AR) smart glasses, first developed in 2014 and followed by a second version in 2015, is back in an even more capable model. The latest version, which KAIST researchers call K-Glass 3, lets users text a message or type keywords for Internet searches by offering a virtual keyboard for text, and even one for a piano.

Most wearable head-mounted displays (HMDs) currently suffer from limited user interfaces, short battery life, and heavy weight. Some HMDs, such as Google Glass, use a touch panel and voice commands as an interface, but they are considered merely an extension of smartphones and are not optimized for wearable smart glasses. Gaze recognition was recently proposed for HMDs, including K-Glass 2, but gaze has not worked as a natural user interface (UI) and experience (UX) because of its limited interactivity and lengthy gaze calibration, which can take up to several minutes.

As a solution, Professor Hoi-Jun Yoo and his team from the Electrical Engineering Department recently developed K-Glass 3 with a low-power natural UI and UX processor. This processor is composed of a pre-processing core to implement stereo vision, seven deep-learning cores to accelerate real-time scene recognition within 33 milliseconds, and one rendering engine for the display.
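As a rough back-of-the-envelope check, a 33-millisecond recognition time corresponds to about 30 frames per second. The sketch below also estimates energy per frame by assuming, purely for illustration, that the whole processor draws the 126.1 mW stated in the paper title for the entire frame period; the article gives no per-frame energy figure, so treat the result as a rough estimate, not a measured value.

```python
def frame_rate_hz(latency_ms: float) -> float:
    """Frames per second if one scene is recognized every latency_ms milliseconds."""
    return 1000.0 / latency_ms


def energy_per_frame_mj(power_mw: float, latency_ms: float) -> float:
    """Energy in millijoules drawn during one frame period at constant power.

    Assumption: the processor draws power_mw for the entire period.
    mW * ms = microjoules, so divide by 1000 to get millijoules.
    """
    return power_mw * latency_ms / 1000.0


if __name__ == "__main__":
    print(f"{frame_rate_hz(33):.1f} frames per second")
    print(f"{energy_per_frame_mj(126.1, 33):.2f} mJ per frame")  # 4.16 mJ
```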

The stereo-vision camera on the front of K-Glass 3 works much like three-dimensional (3D) depth perception in human vision. Its two lenses, set side by side like a person’s left and right eyes, photograph the same objects or scene, and the two images are combined to extract spatial depth information, which is needed to reconstruct 3D environments. The camera’s vision algorithm consumes only 20 milliwatts on average, allowing the Glass to operate for more than 24 hours without interruption.
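The depth extraction described above is standard stereo triangulation: for a rectified camera pair, a point that appears d pixels further left in the right image than in the left image lies at depth Z = f·B/d, where f is the focal length in pixels and B is the distance between the lenses. The Python sketch below finds d with simple sum-of-absolute-differences (SAD) block matching along one image row, then applies the formula. The article does not describe K-Glass 3’s actual stereo algorithm, so the matching method, the 700-pixel focal length, and the 6 cm baseline are illustrative assumptions.

```python
from typing import Sequence


def match_disparity(left_row: Sequence[int], right_row: Sequence[int], x: int,
                    window: int = 2, max_disp: int = 16) -> int:
    """Disparity of pixel x in the left row, found by SAD block matching along the
    same row of the right image (rectified stereo). Caller keeps x + window in range."""
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        if x - d - window < 0:  # candidate window would fall off the right image
            break
        cost = sum(abs(left_row[x + k] - right_row[x - d + k])
                   for k in range(-window, window + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth in meters from Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means the point is at infinity")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Toy rectified rows: a bright feature at x = 10..12 in the left row
    # appears 3 pixels further left in the right row.
    left = [0] * 20
    right = [0] * 20
    left[10:13] = [5, 9, 5]
    right[7:10] = [5, 9, 5]
    d = match_disparity(left, right, x=11, window=2, max_disp=8)
    # Hypothetical camera: 700 px focal length, lenses 6 cm apart.
    print(d, depth_from_disparity(d, focal_px=700.0, baseline_m=0.06))
```

Because depth is inversely proportional to disparity, nearby objects such as the user’s hands produce large, easily measured disparities, while distant objects produce small ones.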

The research team adopted deep-learning multi-core technology designed for mobile devices. This technology greatly improves the Glass’s accuracy in recognizing images and speech while shortening the time needed to process and analyze data. In addition, the multi-core processor becomes idle when it detects no motion from the user; otherwise, it executes complex deep-learning algorithms with minimal power to achieve high performance.
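The article says only that the processor idles when it detects no user motion; it does not say how motion is detected. One common, inexpensive approach, swapped in here as an assumption, is frame differencing: compare consecutive low-resolution frames and wake the expensive recognition cores only when the average pixel change exceeds a threshold. The threshold of 4 gray levels and the tiny 16-pixel frames are hypothetical.

```python
from typing import Sequence


def mean_abs_diff(prev: Sequence[int], curr: Sequence[int]) -> float:
    """Average absolute per-pixel change between two equally sized grayscale frames."""
    if not curr or len(prev) != len(curr):
        raise ValueError("frames must be non-empty and the same size")
    return sum(abs(a - b) for a, b in zip(prev, curr)) / len(curr)


def should_wake(prev: Sequence[int], curr: Sequence[int], threshold: float = 4.0) -> bool:
    """Wake the deep-learning cores only if the scene changed by more than threshold."""
    return mean_abs_diff(prev, curr) > threshold


if __name__ == "__main__":
    still = [100] * 16                 # hypothetical 4 x 4 frame, flattened
    moved = [100] * 8 + [160] * 8      # half of the pixels brightened: a hand entered
    frames = [still, still, moved, moved, still]
    for i, (prev, curr) in enumerate(zip(frames, frames[1:]), start=1):
        print(i, "run deep-learning cores" if should_wake(prev, curr) else "stay idle")
```

The cheap difference check runs on every frame, and the costly recognition work runs only on frames where something actually changed.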

Professor Yoo said, “We have succeeded in fabricating a low-power multi-core processor that consumes only 126 milliwatts of power with a high efficiency rate. It is essential to develop a smaller, lighter, and low-power processor if we want to incorporate the widespread use of smart glasses and wearable devices into everyday life. K-Glass 3’s more intuitive UI and convenient UX permit users to enjoy enhanced AR experiences such as a keyboard or a better, more responsive mouse.”

Along with the research team, UX Factory, a Korean UI and UX developer, participated in the K-Glass 3 project.

The research results, entitled “A 126.1mW Real-Time Natural UI/UX Processor with Embedded Deep-Learning Core for Low-Power Smart Glasses” (lead author: Seong-Wook Park, a doctoral student in the Electrical Engineering Department, KAIST), were presented at the 2016 IEEE (Institute of Electrical and Electronics Engineers) International Solid-State Circuits Conference (ISSCC), held January 31-February 4, 2016, in San Francisco, California.

YouTube Link: https://youtu.be/If_anx5NerQ

Figure 1: K-Glass 3

K-Glass 3 is equipped with a stereo camera, dual microphones, a WiFi module, and eight batteries, which together provide higher recognition accuracy and richer augmented reality experiences than previous models offered.

Figure 2: Architecture of the Low-Power Multi-Core Processor

K-Glass 3’s processor is designed to include several cores for pre-processing, deep-learning, and graphic rendering.

Figure 3: Virtual Text and Piano Keyboard

K-Glass 3 can detect hands and recognize their movements to provide users with such augmented reality applications as a virtual text or piano keyboard.
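The article does not explain how detected hand positions become keystrokes. A minimal sketch, assuming a hand tracker already supplies a fingertip’s position on the display (in pixels) and its distance from the camera (in meters, for example from the stereo depth map), is a grid hit test plus a depth threshold that counts as a press. The QWERTY layout, key size, and 0.35 m keyboard-plane distance below are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical QWERTY layout; the real K-Glass 3 layout is not described.
ROWS = ("qwertyuiop", "asdfghjkl", "zxcvbnm")


@dataclass
class VirtualKeyboard:
    origin_x: float       # left edge of the keyboard on the display, in pixels
    origin_y: float       # top edge, in pixels
    key_w: float          # key width, in pixels
    key_h: float          # key height, in pixels
    plane_depth_m: float  # distance of the virtual keyboard plane from the camera

    def key_at(self, x: float, y: float) -> Optional[str]:
        """Return the key under display point (x, y), or None if outside the keyboard."""
        row = int((y - self.origin_y) // self.key_h)
        col = int((x - self.origin_x) // self.key_w)
        if 0 <= row < len(ROWS) and 0 <= col < len(ROWS[row]):
            return ROWS[row][col]
        return None

    def press(self, x: float, y: float, depth_m: float) -> Optional[str]:
        """A fingertip that reaches the keyboard plane presses the key beneath it."""
        if depth_m < self.plane_depth_m:
            return None  # still hovering in front of the plane
        return self.key_at(x, y)


if __name__ == "__main__":
    kb = VirtualKeyboard(origin_x=0, origin_y=0, key_w=40, key_h=40, plane_depth_m=0.35)
    print(kb.press(85, 85, depth_m=0.30))  # hovering: None
    print(kb.press(85, 85, depth_m=0.36))  # pressed: c (row 2, column 2)
```

A piano keyboard would follow the same pattern with a single row of wider keys mapped to notes instead of letters.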

2016.02.26 View 14213

KAIST Introduces New UI for K-Glass 2

A newly developed user interface for K-Glass 2, the “i-Mouse,” tracks the user’s gaze and lets users navigate the Internet with eye blinks such as winks. This low-power interface gives smart glasses an excellent user experience, with long battery life and augmented reality.

Smart glasses are wearable computers that will likely drive the growth of the Internet of Things. However, currently available smart glasses face several obstacles to commercialization, such as short battery life and low energy efficiency. In addition, glasses that rely on voice commands have raised privacy concerns.

A research team led by Professor Hoi-Jun Yoo of the Electrical Engineering Department at the Korea Advanced Institute of Science and Technology (KAIST) has recently developed an upgraded model of the K-Glass (http://www.eurekalert.org/pub_releases/2014-02/tkai-kdl021714.php) called “K-Glass 2.”

K-Glass 2 detects users’ eye movements to move a cursor onto computer icons or objects on the Internet, and uses winks as commands. The researchers call this interface the “i-Mouse”; it removes the need to use hands or voice to control a mouse or touchpad. Like its predecessor, K-Glass 2 employs augmented reality, displaying relevant, complementary information in real time as text, 3D graphics, images, and audio over the target objects the user selects.
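The i-Mouse interaction pattern, in which gaze moves the cursor and a wink clicks, can be illustrated with a small event handler. This is a hypothetical sketch: the icon names and coordinates are invented, and the gaze point and wink flag are assumed to come from the eye tracker and eyelid sensor rather than from any published K-Glass 2 API.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Icon:
    name: str
    x: float  # left edge on the display, in pixels
    y: float  # top edge, in pixels
    w: float
    h: float

    def contains(self, gx: float, gy: float) -> bool:
        return self.x <= gx < self.x + self.w and self.y <= gy < self.y + self.h


def icon_under_gaze(icons: Sequence[Icon], gaze: Tuple[float, float]) -> Optional[Icon]:
    """Hit-test the gaze point (the cursor position) against the icons."""
    gx, gy = gaze
    for icon in icons:
        if icon.contains(gx, gy):
            return icon
    return None


def on_sample(icons: Sequence[Icon], gaze: Tuple[float, float], winked: bool) -> Optional[str]:
    """Return the name of the icon 'clicked' by a wink, or None if nothing was clicked."""
    if not winked:
        return None  # gaze alone only moves the cursor
    target = icon_under_gaze(icons, gaze)
    return target.name if target else None


if __name__ == "__main__":
    icons = [Icon("mail", 0, 0, 50, 50), Icon("browser", 60, 0, 50, 50)]
    print(on_sample(icons, (70, 10), winked=False))  # None
    print(on_sample(icons, (70, 10), winked=True))   # browser
```

Separating "point" (gaze) from "click" (wink) is what lets the interface avoid accidental selections when the user simply looks around.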

The research results were presented, and K-Glass 2’s operation was successfully demonstrated on-site, at the 2015 Institute of Electrical and Electronics Engineers (IEEE) International Solid-State Circuits Conference (ISSCC), held February 23-25, 2015, in San Francisco. The paper was titled “A 2.71nJ/Pixel 3D-Stacked Gaze-Activated Object Recognition System for Low-power Mobile HMD Applications” (http://ieeexplore.ieee.org/Xplore/home.jsp).

The i-Mouse is a new user interface for smart glasses in which the gaze-image sensor (GIS) and the object recognition processor (ORP) are stacked vertically to form a small chip. Three infrared LEDs (light-emitting diodes) built into K-Glass 2 shine into the user’s eyes, and the GIS recognizes the eyes’ focal point and estimates the likely gaze locations as the user glances over the display screen. An electro-oculography sensor embedded in the nose pads then reads the user’s eyelid movements, such as winks, to click the selection. Notably, the ORP processes only the region of interest (ROI) that the user selects, which significantly extends battery life. Compared with previous ORP chips, this chip uses 3.4 times less power, consuming 75 milliwatts (mW) on average, which helps K-Glass 2 run for almost 24 hours on a single charge.
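Restricting the ORP to the user’s region of interest saves power mainly because far fewer pixels are processed. The sketch below crops a square ROI around a gaze point and reports what fraction of the frame it covers; the 640 x 480 frame and 128 x 128 ROI are hypothetical sizes, not figures from the paper. It also works out the power that the stated 3.4x reduction implies for earlier chips (75 mW x 3.4, about 255 mW).

```python
from typing import List

Frame = List[List[int]]


def crop_roi(frame: Frame, cx: int, cy: int, half: int) -> Frame:
    """Square region of interest centred on the gaze point (cx, cy), clipped to the frame."""
    h, w = len(frame), len(frame[0])
    top, bottom = max(0, cy - half), min(h, cy + half)
    left, right = max(0, cx - half), min(w, cx + half)
    return [row[left:right] for row in frame[top:bottom]]


def pixel_fraction(roi: Frame, frame: Frame) -> float:
    """Share of the frame's pixels that the recognition processor actually has to examine."""
    if not roi or not roi[0]:
        return 0.0
    return (len(roi) * len(roi[0])) / (len(frame) * len(frame[0]))


def previous_chip_power_mw(current_mw: float = 75.0, ratio: float = 3.4) -> float:
    """Power implied for earlier ORP chips if the new chip uses `ratio` times less."""
    return current_mw * ratio


if __name__ == "__main__":
    frame = [[0] * 640 for _ in range(480)]          # hypothetical VGA camera frame
    roi = crop_roi(frame, cx=320, cy=240, half=64)   # hypothetical 128 x 128 ROI
    print(f"ROI covers {pixel_fraction(roi, frame):.1%} of the frame")
    print(f"Implied power of earlier ORP chips: about {previous_chip_power_mw():.0f} mW")
```

Power does not scale perfectly with pixel count (sensing and control overheads remain), which is why the measured saving is a factor of 3.4 rather than the much larger pixel ratio.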

Professor Yoo said, “The smart glass industry will surely grow as we see the Internet of Things become commonplace in the future. In order to expedite the commercial use of smart glasses, improving the user interface (UI) and the user experience (UX) is just as important as the development of compact-size, low-power wearable platforms with high energy efficiency. We have demonstrated such advancement through our K-Glass 2. Using the i-Mouse, K-Glass 2 can provide complicated augmented reality with low power through eye clicking.”

Professor Yoo and his doctoral student, Injoon Hong, conducted this research under the sponsorship of the Brain-mimicking Artificial Intelligence Many-core Processor project by the Ministry of Science, ICT and Future Planning in the Republic of Korea.

YouTube Link: https://www.youtube.com/watch?v=JaYtYK9E7p0&list=PLXmuftxI6pTW2jdIf69teY7QDXdI3Ougr

Picture 1: K-Glass 2

K-Glass 2 can detect eye movements and click computer icons via users’ winking.

Picture 2: Object Recognition Processor Chip

This picture shows a gaze-activated object-recognition system.

Picture 3: Augmented Reality Integrated into K-Glass 2

Users receive additional visual information overlaid on the objects they select.

2015.03.13 View 17414

Interactions Features KAIST's Human-Computer Interaction Lab

Interactions, a bimonthly magazine published by the Association for Computing Machinery (ACM), the world’s largest educational and scientific computing society, featured an article introducing the Human-Computer Interaction (HCI) Lab at KAIST in its March/April 2015 issue (http://interactions.acm.org/archive/toc/march-april-2015).

Established in 2002, the HCI Lab (http://hcil.kaist.ac.kr/) is run by Professor Geehyuk Lee of the Computer Science Department at KAIST. The lab conducts various research projects to improve the design and operation of physical user interfaces and develops new interaction techniques for new types of computers. For the article, see the link below:

ACM Interactions, March and April 2015

Day in the Lab: Human-Computer Interaction Lab @ KAIST

http://interactions.acm.org/archive/view/march-april-2015/human-computer-interaction-lab-kaist

2015.03.02 View 11497

Interactions Features KAIST's Human-Computer Interaction Lab

Interactions, a bi-monthly magazine published by the Association for Computing Machinery (ACM), the largest educational and scientific computing society in the world, featured an article introducing Human-Computer Interaction (HCI) Lab at KAIST in the March/April 2015 issue (http://interactions.acm.org/archive/toc/march-april-2015).

Established in 2002, the HCI Lab (http://hcil.kaist.ac.kr/) is run by Professor Geehyuk Lee of the Computer Science Department at KAIST. The lab conducts various research projects to improve the design and operation of physical user interfaces and develops new interaction techniques for new types of computers. For the article, see the link below:

ACM Interactions, March and April 2015

Day in the Lab: Human-Computer Interaction Lab @ KAIST

http://interactions.acm.org/archive/view/march-april-2015/human-computer-interaction-lab-kaist

2015.03.02 View 11497 -

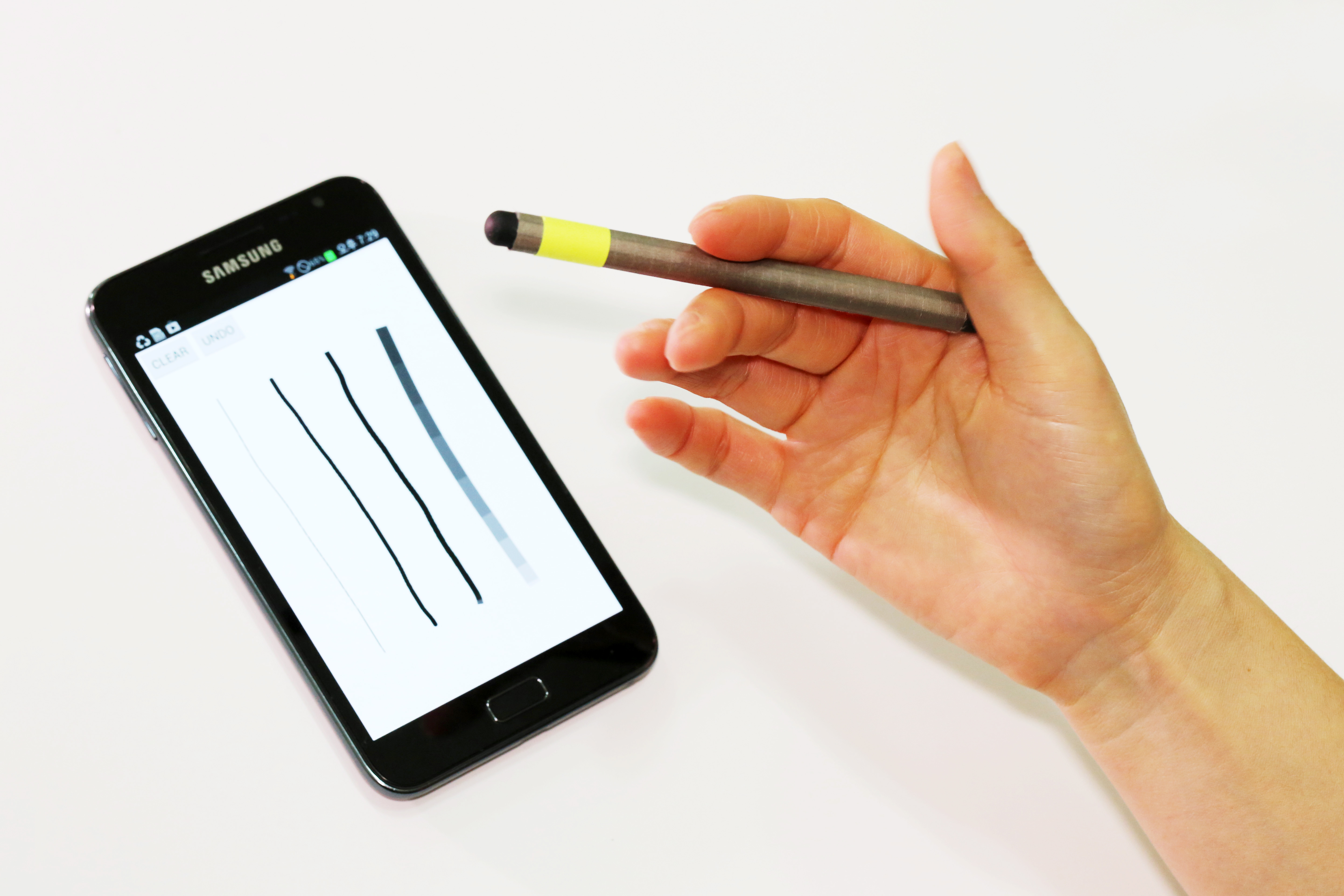

A magnetic pen for smartphones adds another level of convenience

Utilizing existing features on smartphones, the MagPen provides users with a compatible and simple input tool regardless of the type of phone they use.

A doctoral candidate at the Korea Advanced Institute of Science and Technology (KAIST) has developed a magnetically driven pen interface that works both on and around mobile devices. Called the MagPen, the interface can be used with any smartphone or tablet computer that has a built-in magnetometer.

Advised by Professor Kwang-yun Wohn of the Graduate School of Culture Technology (GSCT) at KAIST, Ph.D. student Sungjae Hwang created the MagPen in collaboration with Myung-Wook Ahn, a master's student at the GSCT, and Andrea Bianchi, a professor at Sungkyunkwan University.

Almost all mobile devices today provide location-based services, and smartphones and tablet PCs incorporate magnetometers in their integrated circuits to function as compasses. Taking advantage of these built-in magnetometers, Hwang's team developed a technology that lets an input tool such as a capacitive stylus pen interact more sensitively and effectively with a device's touch screen. Text and commands entered with a stylus pen are rendered more precisely on a mobile screen than those entered with a finger.

Because the MagPen uses the magnetometers already built into smartphones, it requires no additional sensing panel for the touchscreen, and no circuits, communication modules, or batteries in the pen. An application installed on the smartphone senses and analyzes the magnetic field produced by a permanent magnet embedded in a standard capacitive stylus pen.
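The sensing principle described above can be sketched in a few lines: subtract the ambient field the magnetometer reads with no pen nearby (mostly the Earth's field), then use what remains, the magnet's contribution, to decide whether a pen is present and to estimate the direction of its field in the phone's x-y plane. This is a simplified illustration under stated assumptions, not the MagPen team's algorithm: readings are in microtesla, the 50 µT presence threshold is hypothetical, and the field direction is only a crude proxy for where the pen points, since a magnet's field at the sensor depends on both its position and its orientation.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def pen_field(raw: Vec3, baseline: Vec3) -> Vec3:
    """Magnet's contribution: raw reading minus the ambient field measured without the pen."""
    return (raw[0] - baseline[0], raw[1] - baseline[1], raw[2] - baseline[2])


def pen_present(raw: Vec3, baseline: Vec3, min_ut: float = 50.0) -> bool:
    """Hypothetical rule: the pen is near when its field exceeds min_ut microtesla."""
    bx, by, bz = pen_field(raw, baseline)
    return math.sqrt(bx * bx + by * by + bz * bz) > min_ut


def field_bearing_deg(raw: Vec3, baseline: Vec3) -> float:
    """Direction of the magnet's field in the phone's x-y plane, in degrees [0, 360)."""
    bx, by, _ = pen_field(raw, baseline)
    return math.degrees(math.atan2(by, bx)) % 360.0


if __name__ == "__main__":
    ambient = (20.0, -5.0, -40.0)   # hypothetical reading with no pen nearby
    reading = (20.0, 95.0, -40.0)   # hypothetical reading with the pen close by
    print(pen_present(reading, ambient), field_bearing_deg(reading, ambient))
```

Subtracting a baseline is what makes the approach work without extra hardware: the phone's compass already measures the total field, and the pen simply adds a strong local component on top of it.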

Sungjae Hwang said, "Our technology is eco-friendly and very affordable because we are able to improve the expressiveness of the stylus pen without requiring additional hardware beyond what is already installed on current mobile devices. The technology allows smartphone users to enjoy added convenience with no waste generated."

The MagPen detects the direction in which a stylus pen is pointing, selects colors when the pen is dragged across the smartphone's bezel, identifies pens with different magnetic properties, recognizes pen-spinning gestures, and estimates the finger pressure applied to the pen.
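Of the capabilities listed above, spin recognition is the least obvious. One plausible way to detect it, offered only as an assumption, is to track the in-plane direction of the magnet's field over successive magnetometer samples (this direction rotates as the pen spins if the magnet is magnetized across the pen's axis), sum the wrapped angle changes, and report a spin once the net rotation passes a threshold. The 270-degree threshold and the pointer/eraser toggle below are hypothetical.

```python
from typing import Optional, Sequence


def wrapped_delta(prev_deg: float, curr_deg: float) -> float:
    """Smallest signed change from prev_deg to curr_deg, in the range (-180, 180]."""
    d = (curr_deg - prev_deg) % 360.0
    return d - 360.0 if d > 180.0 else d


def detect_spin(bearings: Sequence[float], threshold_deg: float = 270.0) -> Optional[str]:
    """Return 'ccw' or 'cw' (in the phone's x-y axes) if the field direction
    rotated past the threshold, else None."""
    total = 0.0
    for prev, curr in zip(bearings, bearings[1:]):
        total += wrapped_delta(prev, curr)
    if total >= threshold_deg:
        return "ccw"
    if total <= -threshold_deg:
        return "cw"
    return None


def next_tool(tool: str, spin: Optional[str]) -> str:
    """Hypothetical mapping: any detected spin toggles between pointer and eraser."""
    if spin is None:
        return tool
    return "eraser" if tool == "pointer" else "pointer"


if __name__ == "__main__":
    samples = [0.0, 90.0, 180.0, 270.0, 359.0]  # hypothetical bearings while spinning
    spin = detect_spin(samples)
    print(spin, next_tool("pointer", spin))
```

Wrapping each step into (-180, 180] keeps the 359-to-0 boundary from being misread as a large backward jump, and the signed total distinguishes the two spin directions.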

Notably, by recognizing spinning motion, the MagPen expands a stylus pen's input vocabulary beyond existing gestures and techniques such as tilting, hovering, and varying pressure. Spinning the pen can switch its tip from a pointer to an eraser and back, or select the thickness of the lines drawn on the screen.

"It's quite remarkable to see that the MagPen can understand spinning motion. It's like the pen changes its living environment from two dimensions to three dimensions. This is the most creative characteristic of our technology," added Sungjae Hwang.

Hwang's initial research results were first presented at the International Conference on Intelligent User Interfaces, organized by the Association for Computing Machinery and held March 19-22 in Santa Monica, California, USA.

In August, the research team will present a paper on the MagPen technology, entitled "MagPen: Magnetically Driven Pen Interaction On and Around Conventional Smartphones," and receive an Honorable Mention Award at the 15th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI 2013) in Germany.

In addition to the MagPen, Hwang and his team are developing other types of magnetic gadgets (collectively called "MagGetz"), including the Magnetic Marionette, a magnetic smartphone cover that offers augmented interactions with the phone, as well as magnetic widgets such as buttons and toggle interfaces.

Hwang has filed ten patents for the MagGetz technology.

YouTube Links: http://www.youtube.com/watch?v=NkPo2las7wc, http://www.youtube.com/watch?v=J9GtgyzoZmM

2013.07.25 View 12795

KAIST shocks the world with its creativity

Researchers at KAIST achieved outstanding results at a symposium held by the world’s leading international human-computer interaction society.

Teams led by Professor Lee Gi Hyuk (Department of Computer Science) and Professor Bae Seok Hyung (Department of Industrial Design) received awards in two categories of the student innovation contest, and KAIST was the only Korean university to present a paper at the ACM Symposium on User Interface Software and Technology (UIST).

ACM UIST holds a student innovation contest before the symposium opens. This year’s contest was built around Synaptics’ pressure-sensing multi-touch pad and drew 27 prestigious universities, including MIT and CMU.

One KAIST team (Ki Son Joon, Ph.D. candidate, and Son Jeong Min, M.A. candidate, of the Department of Computer Science, and Woo Soo Jin, M.A. candidate, of the Department of Industrial Design) designed a system that allows modulated control by attaching a simple structure to the pressure-sensing multi-touch pad.

The second KAIST team (Huh Seong Guk, Han Jae Hyun, and Koo Ji Sung, Ph.D. candidates in the Department of Computer Science, and Choi Ha Yan, M.A. candidate in the Department of Industrial Design) designed a system that uses a highly elastic fiber to sense lateral forces. They also created a slingshot game application, which was the second most popular entry.

In the paper session, Professor Bae’s team (Lee DaWhee and Son Kyung Hee, Ph.D. candidates, and Lee Joon Hyup, M.A. candidate, in the Department of Industrial Design) presented a paper on a new tablet pen technology for displays.

The new ‘phantom pen’ addresses two problems: the pen hiding its own contact point from view, and the display error (visual parallax) caused by the thickness of the display. In addition, the ‘phantom pen’ can reproduce the effects of crayons or markers in a digital environment.
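The display error caused by display thickness is a parallax effect: the pen tip touches the top of the cover glass, but the pixels sit beneath it, so a viewer looking at an angle sees the tip lined up with a pixel that is shifted sideways. Under a simplified model assumed here (a single flat layer of thickness t and refractive index n, viewed at angle θ from the surface normal), Snell’s law gives the ray angle inside the glass as asin(sin θ / n), and the sideways shift is t·tan of that angle. The 2 mm thickness and n = 1.5 are illustrative values, not figures from the research.

```python
import math


def parallax_offset_mm(thickness_mm: float, view_angle_deg: float,
                       refractive_index: float = 1.5) -> float:
    """Sideways shift between the pen tip on the glass and the pixel seen beneath it.

    Simplified model: one flat layer of the given thickness and refractive index,
    viewed at view_angle_deg from the surface normal (0 = looking straight down).
    """
    theta = math.radians(view_angle_deg)
    theta_in_glass = math.asin(math.sin(theta) / refractive_index)  # Snell's law
    return thickness_mm * math.tan(theta_in_glass)


if __name__ == "__main__":
    for angle in (0, 30, 45, 60):
        print(f"{angle:>2} deg: {parallax_offset_mm(2.0, angle):.2f} mm")
```

The offset is zero when looking straight down and grows with the viewing angle, which is why a fixed calibration cannot remove it for a user whose head moves.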

2012.11.29 View 12627
