Data

KAIST's Li-Fi Achieves 100 Times the Speed of Wi-Fi with Enhanced Security

- KAIST-KRISS Develop 'On-Device Encryption Optical Transmitter' Based on Eco-Friendly Quantum Dots

- New Li-Fi Platform Technology Achieves High Performance with 17.4% Device Efficiency and 29,000 nit Brightness, Simultaneously Improving Transmission Speed and Security

- Presents New Methodology for High-Speed and Encrypted Communication Through Single-Device-Based Dual-Channel Optical Modulation

< Photo 1. (Front row from left) Seungmin Shin, First Author; Professor Himchan Cho; (Back row from left) Hyungdoh Lee, Seungwoo Lee, Wonbeom Lee; (Top left) Dr. Kyung-geun Lim >

Li-Fi (Light Fidelity) is a wireless communication technology that uses the visible light spectrum (400-800 THz), like the light from an LED, and offers speeds of up to 224 Gbps, as much as 100 times faster than existing Wi-Fi. Although it faces fewer constraints on frequency allocation and suffers less radio interference, it is relatively vulnerable to security breaches because anyone can access it. Korean researchers have now proposed a new Li-Fi platform that overcomes the limitations of conventional optical communication devices and enhances transmission speed and security at the same time.
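For readers more used to wavelengths than frequencies, the 400-800 THz band quoted above can be converted with the relation wavelength = c / frequency. The short sketch below does only that conversion; the function name is illustrative and not taken from the research.

```python
# Convert the visible-light frequency band quoted above to vacuum wavelengths.
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact value, by SI definition


def wavelength_nm(frequency_thz: float) -> float:
    """Return the vacuum wavelength in nanometres for a frequency in THz."""
    frequency_hz = frequency_thz * 1e12
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz * 1e9


low_edge_nm = wavelength_nm(400)   # red end of the band
high_edge_nm = wavelength_nm(800)  # violet end of the band
print(f"400 THz -> {low_edge_nm:.0f} nm, 800 THz -> {high_edge_nm:.0f} nm")
```

The band therefore corresponds to wavelengths of roughly 375 to 750 nm.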

KAIST (President Kwang Hyung Lee) announced on June 24th that Professor Himchan Cho's research team from the Department of Materials Science and Engineering, in collaboration with Dr. Kyung-geun Lim of the Korea Research Institute of Standards and Science (KRISS, President Ho-Seong Lee) under the National Research Council of Science & Technology (NST, Chairman Young-Sik Kim), has developed an 'on-device encryption optical communication device' technology for Li-Fi, which is attracting attention as a next-generation ultra-high-speed data communication technology.

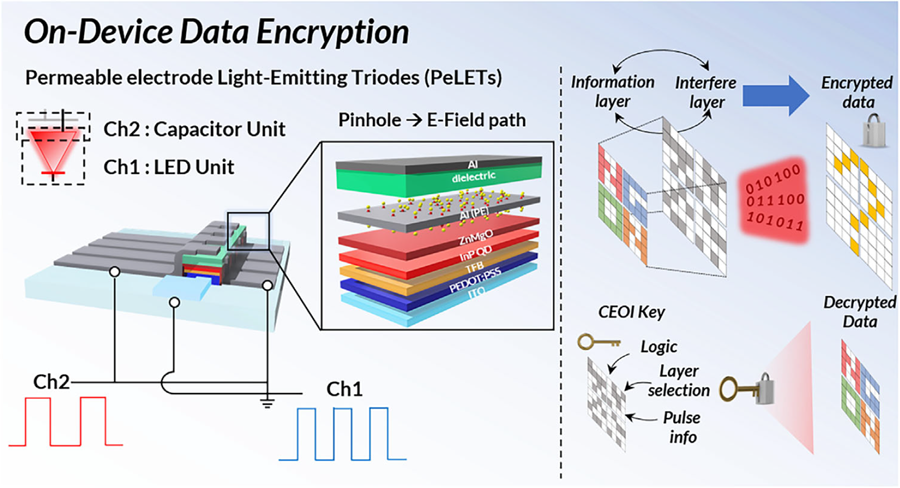

Professor Cho's team created high-efficiency light-emitting triode devices using eco-friendly quantum dots (low-toxicity and sustainable materials). The device generates light using an electric field. Specifically, the electric field is concentrated in tiny holes (pinholes) in the permeable electrode and is transmitted beyond the electrode. The device uses this principle to process two input data streams simultaneously.

Building on this principle, the research team developed a technology called the 'on-device encryption optical transmitter.' The core of this technology is that the device itself converts information into light and encrypts it at the same time. This makes data transmission with enhanced security possible without complex, separate equipment.
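The announcement does not spell out how the two input streams are combined. Purely as an illustration of what it means to encrypt while transmitting, the sketch below assumes that one stream carries data bits, the other carries key bits, and that the emitted signal is their bitwise XOR. This XOR scheme and the function name are assumptions made for illustration, not the method reported by the research team.

```python
def combine_streams(data_bits, key_bits):
    """XOR two equal-length bit sequences.

    Hypothetical stand-in for a device that mixes two inputs into one
    emitted signal; not the mechanism described in the paper.
    """
    if len(data_bits) != len(key_bits):
        raise ValueError("streams must have the same length")
    return [d ^ k for d, k in zip(data_bits, key_bits)]


data = [1, 0, 1, 1, 0, 0, 1, 0]  # example message bits
key = [0, 1, 1, 0, 1, 0, 1, 1]   # example key bits (second input)

emitted = combine_streams(data, key)       # what an eavesdropper would see
recovered = combine_streams(emitted, key)  # a receiver with the key undoes the XOR
print(emitted, recovered == data)
```

Under this assumption, a receiver that knows the key stream recovers the message by applying the same operation again, while the emitted bits alone do not reveal it.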

External Quantum Efficiency (EQE) is an indicator of how efficiently electricity is converted into light, with a general commercialization standard of about 20%. The newly developed device recorded an EQE of 17.4% and a luminance of 29,000 nits, more than 10 times the 2,000-nit maximum brightness of a smartphone OLED screen.
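The comparison above is simple arithmetic on the quoted figures. The sketch below reproduces it; the variable names are illustrative, and the 20% benchmark is the approximate commercialization standard mentioned in the text.

```python
# Figures quoted in the announcement.
device_eqe_percent = 17.4
commercial_eqe_percent = 20.0  # "about 20%" per the text
device_luminance_nit = 29_000
smartphone_peak_nit = 2_000

brightness_ratio = device_luminance_nit / smartphone_peak_nit
eqe_fraction_of_benchmark = device_eqe_percent / commercial_eqe_percent

print(f"Brightness ratio: {brightness_ratio:.1f}x")
print(f"EQE relative to benchmark: {eqe_fraction_of_benchmark:.0%}")
```

The device is about 14.5 times brighter than the smartphone reference and reaches about 87% of the approximate commercialization benchmark for EQE.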

< Figure 1. Schematic diagram of the device structure developed by the research team and encrypted communication >

Furthermore, to understand more accurately how this device converts information into light, the research team used a method called 'transient electroluminescence analysis.' They analyzed the light emitted by the device when voltage was applied for very short durations of hundreds of nanoseconds (one nanosecond is one billionth of a second). Through this analysis, they investigated the movement of charges within the device on a timescale of hundreds of nanoseconds, elucidating the operating mechanism of dual-channel optical modulation implemented within a single device.

Professor Himchan Cho of KAIST stated, "This research overcomes the limitations of existing optical communication devices and proposes a new communication platform that can both increase transmission speed and enhance security."

< Photo 2. Professor Himchan Cho, Department of Materials Science and Engineering >

He added, "This technology, which strengthens security without additional equipment and simultaneously enables encryption and transmission, can be widely applied in various fields where security is crucial in the future."

This research, with Seungmin Shin, a Ph.D. candidate in KAIST's Department of Materials Science and Engineering, as first author and Professor Himchan Cho and Dr. Kyung-geun Lim of KRISS as co-corresponding authors, was published in the international journal 'Advanced Materials' on May 30th and was selected as an inside front cover paper.

※ Paper Title: High-Efficiency Quantum Dot Permeable electrode Light-Emitting Triodes for Visible-Light Communications and On-Device Data Encryption

※ DOI: https://doi.org/10.1002/adma.202503189

This research was supported by the National Research Foundation of Korea, the National Research Council of Science & Technology (NST), and the Korea Institute for Advancement of Technology.

2025.06.24 View 643

KAIST Discovers Molecular Switch that Reverses Cancerous Transformation at the Critical Moment of Transition

< (From left) PhD student Seoyoon D. Jeong, (bottom) Professor Kwang-Hyun Cho, (top) Dr. Dongkwan Shin, Dr. Jeong-Ryeol Gong >

Professor Kwang-Hyun Cho’s research team has recently drawn attention for developing an original technology for cancer reversal treatment, which does not kill cancer cells but instead changes their characteristics so that they return to a state similar to normal cells. This time, the team has revealed for the first time that a molecular switch capable of inducing cancer reversal at the moment when normal cells change into cancer cells is hidden in the genetic network.

KAIST (President Kwang-Hyung Lee) announced on the 5th of February that Professor Kwang-Hyun Cho's research team of the Department of Bio and Brain Engineering has succeeded in developing a fundamental technology to capture the critical transition phenomenon at the moment when normal cells change into cancer cells and analyze it to discover a molecular switch that can revert cancer cells back into normal cells.

A critical transition is a phenomenon in which a sudden change in state occurs at a specific point in time, like water changing into steam at 100℃. This critical transition phenomenon also occurs in the process in which normal cells change into cancer cells at a specific point in time due to the accumulation of genetic and epigenetic changes.

The research team discovered that, during tumorigenesis (the production or development of tumors), normal cells can enter an unstable critical transition state in which normal and cancer cells coexist just before they become cancer cells. By analyzing this critical transition state with a systems biology method, the team developed a technology for identifying cancer reversal molecular switches that can reverse the cancerization process. They then applied it to colon cancer cells and confirmed through molecular cell experiments that the cancer cells can recover the characteristics of normal cells.

This is an original technology that automatically infers a computer model of the genetic network that controls the critical transition of cancer development from single-cell RNA sequencing data, and systematically finds molecular switches for cancer reversion by simulation analysis. It is expected that this technology will be applied to the development of reversion therapies for other cancers in the future.
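To give a rough sense of what attractor analysis and perturbation simulation of a gene network model involve, the sketch below uses a three-gene Boolean network with synchronous updates. Everything in it is a toy assumption: the gene names (`O` for an oncogene-like node, `S` for a suppressor-like node, `W` for a candidate switch), the update rules, and the Boolean formalism itself are made up for illustration and are not the network inferred in the study.

```python
from itertools import product

GENES = ("O", "S", "W")  # hypothetical: oncogene, suppressor, candidate switch


def step(state, pinned):
    """Apply one synchronous update of the toy rules, then any pinned values."""
    o, s, w = state
    nxt = {"O": w and (o or not s), "S": not o, "W": w}
    nxt.update(pinned)  # a pinned gene models a sustained perturbation
    return tuple(int(bool(nxt[g])) for g in GENES)


def find_attractors(pinned=None):
    """Map each attractor (a frozenset of states) to its basin size."""
    pinned = pinned or {}
    basins = {}
    for start in product((0, 1), repeat=len(GENES)):
        state = tuple(pinned.get(g, v) for g, v in zip(GENES, start))
        seen = []
        while state not in seen:  # follow the trajectory until it repeats
            seen.append(state)
            state = step(state, pinned)
        attractor = frozenset(seen[seen.index(state):])
        basins[attractor] = basins.get(attractor, 0) + 1
    return basins


baseline = find_attractors()             # unperturbed toy network
inhibited = find_attractors({"W": 0})    # switch gene held off
for label, result in (("baseline", baseline), ("W inhibited", inhibited)):
    print(label, {tuple(sorted(a)): n for a, n in result.items()})
```

In this toy model the unperturbed network has a cancer-like attractor (`O` on, `S` off) alongside normal-like ones, and holding `W` off leaves only a normal-like attractor. This mirrors, in miniature, the idea of searching for a node whose perturbation removes the cancer state.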

Professor Kwang-Hyun Cho said, "We have discovered a molecular switch that can revert the fate of cancer cells back to a normal state by capturing the moment of critical transition right before normal cells are changed into an irreversible cancerous state."

< Figure 1. Overall conceptual framework of the technology that automatically constructs a molecular regulatory network from single-cell RNA sequencing data of colon cancer cells to discover molecular switches for cancer reversion through computer simulation analysis. Professor Kwang-Hyun Cho's research team established a fundamental technology for automatic construction of a computer model of a core gene network by analyzing the entire process of tumorigenesis of colon cells turning into cancer cells, and developed an original technology for discovering the molecular switches that can induce cancer cell reversal through attractor landscape analysis. >

He continued, "In particular, this study has revealed in detail, at the genetic network level, what changes occur within cells behind the process of cancer development, which has been considered a mystery until now." He emphasized, "This is the first study to reveal that an important clue that can revert the fate of tumorigenesis is hidden at this very critical moment of change."

< Figure 2. Identification of tumor transition state using single-cell RNA sequencing data from colorectal cancer. Using single-cell RNA sequencing data from colorectal cancer patient-derived organoids for normal and cancerous tissues, a critical transition was identified in which normal and cancerous cells coexist and instability increases (a-d). The critical transition was confirmed to show intermediate levels of major phenotypic features related to cancer or normal tissues that are indicative of the states between the normal and cancerous cells (e). >

The study was conducted by Dr. Dongkwan Shin (currently at the National Cancer Center), Dr. Jeong-Ryeol Gong, and doctoral student Seoyoon D. Jeong of KAIST, jointly with a research team at Seoul National University that provided the organoids (in vitro cultured tissues) from colon cancer patients. The results were published online in the international journal ‘Advanced Science’, published by Wiley, on January 22nd.

(Paper title: Attractor landscape analysis reveals a reversion switch in the transition of colorectal tumorigenesis) (DOI: https://doi.org/10.1002/advs.202412503)

< Figure 3. Reconstruction of a dynamic network model for the transition state of colorectal cancer. A new technology was established to build a gene network computer model that can simulate the dynamic changes between genes by integrating single-cell RNA sequencing data and existing experimental results on gene-to-gene interactions in the critical transition of cancer (a). Using this technology, a gene network computer model for the critical transition of colorectal cancer was constructed, and the distribution of attractors representing normal and cancer cell phenotypes was investigated through attractor landscape analysis (b-e). >

This study was conducted with the support of the National Research Foundation of Korea under the Ministry of Science and ICT through the Mid-Career Researcher Program and Basic Research Laboratory Program and the Disease-Centered Translational Research Project of the Korea Health Industry Development Institute (KHIDI) of the Ministry of Health and Welfare.

< Figure 4. Quantification of attractor landscapes and discovery of transcription factors for cancer reversibility through perturbation simulation analysis. A methodology for implementing discontinuous attractor landscapes continuously from a computer model of gene networks and quantifying them as cancer scores was introduced (a), and attractor landscapes for the critical transition of colorectal cancer were secured (b-d). By tracking the change patterns of normal and cancer cell attractors through perturbation simulation analysis for each gene, the optimal combination of transcription factors for cancer reversion was discovered (e-h). This was confirmed in various parameter combinations as well (i). >

< Figure 5. Identification and experimental validation of the optimal target gene for cancer reversion. Among the common target genes of the discovered transcription factor combinations, we identified cancer-reversing molecular switches that are predicted to suppress cancer cell proliferation and restore the characteristics of normal colon cells (a-d). When organoids derived from colon cancer patients were treated with inhibitors of the molecular switches, cancer cell proliferation was suppressed and the expression of key genes related to cancer development was inhibited (e-h), while a group of genes related to normal colon epithelium was activated and the cells were transformed into a state similar to normal colon cells (i-j). >

< Figure 6. Schematic diagram of the research results. Professor Kwang-Hyun Cho's research team developed an original technology to systematically discover key molecular switches that can induce reversion of colon cancer cells through a systems biology approach using an attractor landscape analysis of a genetic network model for the critical transition at the moment of transformation from normal cells to cancer cells, and verified the reversing effect of actual colon cancer through cellular experiments. >

2025.02.05 View 26434

Unraveling Mitochondrial DNA Mutations in Human Cells

Throughout our lifetime, cells accumulate DNA mutations, which contribute to genetic diversity, or “mosaicism”, among cells. These genomic mutations are pivotal for the aging process and the onset of various diseases, including cancer. Mitochondria, essential cellular organelles involved in energy metabolism and apoptosis, possess their own DNA (mtDNA), which is susceptible to mutations. However, studies on mtDNA mutations and mosaicism have been limited due to a variety of technical challenges.

Genomic scientists from KAIST have revealed the genetic mosaicism characterized by variations in mitochondrial DNA (mtDNA) across normal human cells. This study provides fundamental insights into understanding human aging and disease onset mechanisms.

The study, “Mitochondrial DNA mosaicism in normal human somatic cells,” was published in Nature Genetics on July 22. It was led by graduate student Jisong An under the supervision of Professor Young Seok Ju from the Graduate School of Medical Science and Engineering.

Researchers from Seoul National University College of Medicine, Yonsei University College of Medicine, Korea University College of Medicine, Washington University School of Medicine, National Cancer Center, Seoul National University Hospital, Gangnam Severance Hospital, and KAIST faculty startup company Inocras Inc. also participated in this study.

< Figure 1. a. Flow of experiment. b. Schematic diagram illustrating the origin and dynamics of mtDNA alterations across a lifetime. >

The study involved a bioinformatic analysis of whole-genome sequences from 2,096 single cells obtained from normal human colorectal epithelial tissue, fibroblasts, and blood collected from 31 individuals. The study highlights an average of three significant mtDNA differences between cells, with approximately 6% of these variations confirmed to be inherited as heteroplasmy from the mother.

Moreover, mutations significantly increased during tumorigenesis, with some mutations contributing to instability in mitochondrial RNA. Based on these findings, the study illustrates a computational model that comprehensively elucidates the evolution of mitochondria from embryonic development to aging and tumorigenesis.

This study systematically reveals the mechanisms behind mitochondrial DNA mosaicism in normal human cells, establishing a crucial foundation for understanding the impact of mtDNA on aging and disease onset.

Professor Ju remarked, “By systematically utilizing whole-genome big data, we can illuminate previously unknown phenomena in life sciences.” He emphasized the significance of the study, adding, “For the first time, we have established a method to systematically understand mitochondrial DNA changes occurring during human embryonic development, aging, and cancer development.”

This work was supported by the National Research Foundation of Korea and the Suh Kyungbae Foundation.

2024.07.24 View 5382

A KAIST-SNUH Team Devises a Way to Make Mathematical Predictions to find Metabolites Related to Somatic Mutations in Cancers

Cancer is characterized by abnormal metabolic processes different from those of normal cells. Therefore, cancer metabolism has been extensively studied to develop effective diagnosis and treatment strategies. Notable achievements of cancer metabolism studies include the discovery of oncometabolites* and the approval of anticancer drugs by the U.S. Food and Drug Administration (FDA) that target enzymes associated with oncometabolites. Approved anticancer drugs such as ‘Tibsovo (active ingredient: ivosidenib)’ and ‘Idhifa (active ingredient: enasidenib)’ are both used for the treatment of acute myeloid leukemia. Despite such achievements, studying cancer metabolism, especially oncometabolites, remains challenging due to time-consuming and expensive methodologies such as metabolomics. Thus, the number of confirmed oncometabolites is very small although a relatively large number of cancer-associated gene mutations have been well studied.

*Oncometabolite: A metabolite that shows pro-oncogenic function when abnormally accumulated in cancer cells. An oncometabolite is often generated as a result of gene mutations, and this accumulation promotes the growth and survival of cancer cells. Representative oncometabolites include 2-hydroxyglutarate, succinate, and fumarate.

On March 18th, a KAIST research team led by Professor Hyun Uk Kim from the Department of Chemical and Biomolecular Engineering, in collaboration with research teams under Prof. Youngil Koh, Prof. Hongseok Yun, and Prof. Chang Wook Jeong from Seoul National University Hospital, developed a computational workflow that systematically predicts metabolites and metabolic pathways associated with somatic mutations in cancer.

The research teams have successfully reconstructed patient-specific genome-scale metabolic models (GEMs)* for 1,043 cancer patients across 24 cancer types by integrating publicly available cancer patients’ transcriptome data (i.e., from international cancer genome consortiums such as PCAWG and TCGA) into a generic human GEM. The resulting patient-specific GEMs make it possible to predict each patient’s metabolic phenotypes.

*Genome-scale metabolic model (GEM): A computational model that mathematically describes all of the biochemical reactions that take place inside a cell. It allows for the prediction of the cell’s metabolic phenotypes under various genetic and/or environmental conditions.

< Figure 1. Schematic diagram of a computational methodology for predicting metabolites and metabolic pathways associated with cancer somatic mutations. >

The team developed a four-step computational workflow using the patient-specific GEMs from 1,043 cancer patients and somatic mutation data obtained from the corresponding cancer patients. This workflow begins with the calculation of the flux-sum value of each metabolite by simulating the patient-specific GEMs. The flux-sum value quantifies the intracellular importance of a metabolite. Next, the workflow identifies metabolites that appear to be significantly associated with specific gene mutations through a statistical analysis of the predicted flux-sum data and the mutation data. Finally, the workflow selects altered metabolic pathways that significantly contribute to the biosynthesis of the predicted oncometabolite candidates, ultimately generating metabolite-gene-pathway sets as an output.
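The flux-sum calculation in the first step can be illustrated with a minimal sketch. It assumes a commonly used definition of flux-sum, namely half the sum of the absolute fluxes of all reactions that produce or consume a metabolite at steady state; the article does not state the team's exact formulation. The three-reaction toy network, its flux values, and the function name `flux_sums` are hypothetical and chosen only for illustration.

```python
def flux_sums(stoich, fluxes):
    """Return the flux-sum of every metabolite.

    stoich: dict mapping metabolite -> {reaction: stoichiometric coefficient}
    fluxes: dict mapping reaction -> simulated flux value
    Assumed definition: 0.5 * sum over reactions of |coefficient * flux|.
    """
    result = {}
    for metabolite, coeffs in stoich.items():
        # Add up the absolute turnover contributed by each reaction.
        total = sum(abs(coeff * fluxes.get(rxn, 0.0)) for rxn, coeff in coeffs.items())
        # At steady state production equals consumption, hence the factor 0.5.
        result[metabolite] = 0.5 * total
    return result


# Hypothetical toy network: R1 produces A, R2 converts A to B, R3 removes B.
toy_stoich = {
    "A": {"R1": 1.0, "R2": -1.0},
    "B": {"R2": 1.0, "R3": -1.0},
}
toy_fluxes = {"R1": 2.0, "R2": 2.0, "R3": 2.0}

toy_result = flux_sums(toy_stoich, toy_fluxes)
print(toy_result)
```

In this toy case both metabolites have a flux-sum of 2.0, since each is produced and consumed at a rate of 2.0. In a real analysis, the flux values would come from simulating each patient-specific GEM rather than from hand-written numbers.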

The two co-first authors, Dr. GaRyoung Lee (currently a postdoctoral fellow at the Dana-Farber Cancer Institute and Harvard Medical School) and Dr. Sang Mi Lee (currently a postdoctoral fellow at Harvard Medical School) said, “The computational workflow developed can systematically predict how genetic mutations affect cellular metabolism through metabolic pathways. Importantly, it can easily be applied to different types of cancer based on the mutation and transcriptome data of cancer patient cohorts.”

Prof. Kim said, “The computational workflow and its resulting prediction outcomes will serve as the groundwork for identifying novel oncometabolites and for facilitating the development of various treatment and diagnosis strategies.”

This study, which was supported by the National Research Foundation of Korea, has been published online in Genome Biology, a representative journal in the field of biotechnology and genetics, under the title "Prediction of metabolites associated with somatic mutations in cancers by using genome‑scale metabolic models and mutation data".

2024.03.18 View 7012

NYU-KAIST Global AI & Digital Governance Conference Held

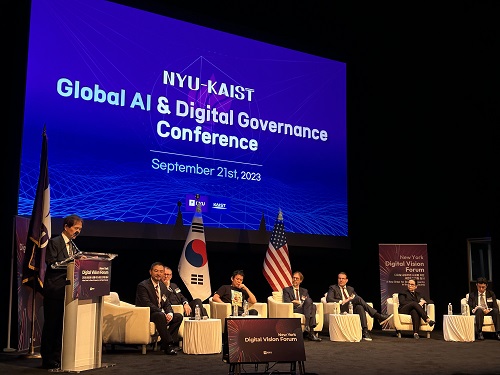

< Photo 1. Opening of NYU-KAIST Global AI & Digital Governance Conference >

With Minister of Science and ICT Jong-ho Lee, NYU President Linda G. Mills, and KAIST President Kwang Hyung Lee in attendance, KAIST co-hosted the NYU-KAIST Global AI & Digital Governance Conference at the Paulson Center of New York University (NYU) in New York City, USA, at 9:30 pm on September 21st.

At the conference, KAIST and NYU discussed the direction and policies for ‘global AI and digital governance’ with up to 300 participants, including scholars, professors, and students involved in the academic field of AI and digitalization from Korea, the United States, and other international backgrounds. The conference was a forum for international discussion that sought new directions for AI and digital technology to take in the future and gathered consensus on regulations.

Following a welcoming address by KAIST President Kwang Hyung Lee and a congratulatory message from the Minister of Science and ICT, Jong-ho Lee, a panel discussion was held, moderated by Professor Matthew Liao, a graduate of Princeton and Oxford University, currently serving as a professor at NYU and the director of the Center for Bioethics of the NYU School of Global Public Health.

Six prominent scholars took part in the panel discussion. Prof. Kyung-hyun Cho of the NYU Applied Mathematics and Data Science Center, a KAIST graduate who has joined the ranks of world-class researchers in AI language models, and Professor Jong Chul Ye, the Director of the Promotion Council for Digital Health at KAIST, who is leading innovative research in the field of medical AI in collaboration with major hospitals at home and abroad, were on the panel. Additionally, Professor Luciano Floridi, a founding member of the Yale University Center for Digital Ethics, Professor Shannon Vallor, the Baillie Gifford Professor in the Ethics of Data and Artificial Intelligence at the University of Edinburgh in the UK, Professor Stefaan Verhulst, a Co-Founder and the Director of GovLab’s Data Program at NYU’s Tandon School of Engineering, and Professor Urs Gasser, who is in charge of public policy, governance and innovative technology at the Technical University of Munich, also participated.

Professor Matthew Liao from NYU led the discussion on various topics, such as the ways to regulate AI and digital technologies; the concerns about how deep learning technology being developed for medical purposes could be used in warfare; the scope of responsibility AI scientists should carry in ensuring that the use of AI is limited to benign purposes only; the effects of external regulation on AI model developers and the research they pursue; and the lessons that can be learned from regulations in other fields.

During the panel discussion, there was an exchange of ideas about a system of standards that could harmonize digital development with regulatory and social ethics. Digital transformation is accelerating technological development at a global level, and there is a looming concern that, while such advancements bring economic vitality, they may also create digital divides and problems such as manipulation of public opinion. Professor Jong Chul Ye of KAIST (Director of the Promotion Council for Digital Health), in particular, emphasized that it is important to find a point of balance that does not hinder advancement rather than opting to enforce strict regulations.

< Photo 2. Panel Discussion in Session at NYU-KAIST Global AI & Digital Governance Conference >

KAIST President Kwang Hyung Lee explained, “At the Digital Governance Forum we had last October, we focused on exploring new governance to solve digital challenges in the time of global digital transition, and this year’s main focus was on regulations.”

“This conference served as an opportunity of immense value as we came to understand that appropriate regulations can be a motivation to spur further developments rather than a hurdle when it comes to technological advancements, and that it is important for us to clearly understand artificial intelligence and consider what should and can be regulated when we are to set regulations on artificial intelligence,” he continued.

Earlier, KAIST signed a cooperation agreement with NYU to build a joint campus in June last year and held a plaque presentation ceremony for the KAIST-NYU Joint Campus last September to promote joint research between the two universities. KAIST is currently conducting joint research with NYU in nine fields, including AI and digital research. The KAIST-NYU Joint Campus was conceived with the goal of building an innovative sandbox campus centering around science, technology, engineering, and mathematics (STEM), combining NYU's excellent humanities and arts as well as basic science and convergence research capabilities with KAIST's science and technology.

KAIST has contributed to the development of Korea's industry and economy through technological innovation, aiding in the nation’s transformation into an innovative nation with scientific and technological prowess. KAIST will now pursue an anchor/base strategy to raise awareness of KAIST in New York through the NYU Joint Campus by establishing a KAIST campus within the campus of NYU, in the heart of New York.

2023.09.22 View 11712

KAIST researchers find sleep delays more prevalent in countries of particular culture than others

Sleep has a huge impact on health, well-being and productivity, but how long and how well people sleep these days has not been accurately reported. Previous research on how much and how well we sleep has mostly relied on self-reports or has been confined to data from the unnatural environment of sleep laboratories.

So, the questions remained: Is the amount and quality of sleep purely a personal choice? Could they be independent from social factors such as culture and geography?

< From left to right, Sungkyu Park of Kangwon National University, South Korea; Assem Zhunis of KAIST and IBS, South Korea; Marios Constantinides of Nokia Bell Labs, UK; Luca Maria Aiello of the IT University of Copenhagen, Denmark; Daniele Quercia of Nokia Bell Labs and King's College London, UK; and Meeyoung Cha of IBS and KAIST, South Korea >

A new study led by researchers at Korea Advanced Institute of Science and Technology (KAIST) and Nokia Bell Labs in the United Kingdom investigated the cultural and individual factors that influence sleep. In contrast to previous studies that relied on surveys or controlled experiments at labs, the team used commercially available smartwatches for extensive data collection, analyzing 52 million logs collected over a four-year period from 30,082 individuals in 11 countries. These people wore Nokia smartwatches, which allowed the team to investigate country-specific sleep patterns based on the digital logs from the devices.

< Figure comparing survey and smartwatch logs on average sleep-time, wake-time, and sleep durations. Digital logs consistently recorded delayed hours of wake- and sleep-time, resulting in shorter sleep durations. >

Digital logs collected from the smartwatches revealed discrepancies in wake-up times and sleep-times, sometimes by tens of minutes to an hour, from the data previously collected from self-report assessments. The average sleep-time overall was calculated to be around midnight, and the average wake-up time was 7:42 AM. The team discovered, however, that individuals' sleep is heavily linked to their geographical location and cultural factors. While wake-up times were similar, sleep-time varied by country. Individuals in higher GDP countries had more records of delayed bedtime. Those in collectivist culture, compared to individualist culture, also showed more records of delayed bedtime. Among the studied countries, Japan had the shortest total sleep duration, averaging a duration of under 7 hours, while Finland had the longest, averaging 8 hours.

Researchers calculated essential sleep metrics used in clinical studies, such as sleep efficiency, sleep duration, and overslept hours on weekends, to analyze the extensive sleep patterns. Using Principal Component Analysis (PCA), they further condensed these metrics into two major sleep dimensions representing sleep quality and quantity. A cross-country comparison revealed that societal factors account for 55% of the variation in sleep quality and 63% of the variation in sleep quantity.
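As a rough illustration of how two of these metrics could be derived from bedtime and wake-time logs, here is a minimal sketch. It assumes the standard clinical definition of sleep efficiency (time asleep divided by time in bed); the article does not describe the team's exact computation, and the function names and sample values are hypothetical.

```python
from datetime import datetime, timedelta


def sleep_duration_hours(bedtime, wake_time):
    """Hours between two 'HH:MM' clock times, allowing the night to cross midnight."""
    fmt = "%H:%M"
    start = datetime.strptime(bedtime, fmt)
    end = datetime.strptime(wake_time, fmt)
    if end <= start:
        # Wake time falls on the next calendar day.
        end += timedelta(days=1)
    return (end - start).total_seconds() / 3600.0


def sleep_efficiency(asleep_hours, in_bed_hours):
    """Fraction of time in bed actually spent asleep (assumed standard definition)."""
    if in_bed_hours <= 0:
        raise ValueError("time in bed must be positive")
    return asleep_hours / in_bed_hours


# Hypothetical log entry: in bed at midnight, up at 7:42 AM, asleep for 7.0 hours.
in_bed = sleep_duration_hours("00:00", "07:42")
efficiency = sleep_efficiency(7.0, in_bed)
print(round(in_bed, 2), round(efficiency, 2))
```

With these sample values the time in bed is 7.7 hours and the efficiency is about 0.91. The numbers are illustrative only and are not results from the study.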

Countries with a higher individualism index (IDV), which place greater emphasis on individual achievements and relationships, had significantly longer sleep durations, which could be attributed to such societies having a norm of going to bed early. Spain and Japan, on the other hand, had the latest bedtimes despite having the highest collectivism scores (low IDV). The study also discovered a moderate relationship between a higher uncertainty avoidance index (UAI), which measures the implementation of general laws and regulations in the daily lives of regular citizens, and better sleep quality.

Researchers also investigated how physical activity can affect sleep quantity and quality to see if individuals can counterbalance cultural influences through personal interventions. They discovered that increasing daily activity can improve sleep quality by shortening the time needed to fall asleep and to wake up. Individuals who exercised more, however, did not sleep longer. The effect of exercise differed by country, with more pronounced effects observed in some countries, such as the United States and Finland. Interestingly, in Japan, no obvious effect of exercise could be observed. These findings suggest that the relationship between daily activity and sleep may differ by country and that different exercise regimens may be more effective in different cultures.

This research, published in Scientific Reports, an international journal of the Nature Portfolio, sheds light on the influence of social factors on sleep. (Paper title: "Social dimensions impact individual sleep quantity and quality", Article number: 9681)

One of the co-authors, Daniele Quercia, commented: “Excessive work schedules, long working hours, and late bedtime in high-income countries and social engagement due to high collectivism may cause bedtimes to be delayed.”

Commenting on the research, the first author Shaun Sungkyu Park said, "While it is intriguing to see that a society can play a role in determining the quantity and quality of an individual's sleep with large-scale data, the significance of this study is that it quantitatively shows that even within the same culture (country), individual efforts such as daily exercise can have a positive impact on sleep quantity and quality."

"Sleep not only has a great impact on one’s well-being but it is also known to be associated with health issues such as obesity and dementia," said the lead author, Meeyoung Cha. "In order to ensure adequate sleep and improve sleep quality in an aging society, individual efforts and social support must work together," she said. The research team will contribute to the development of the high-tech sleep industry by making code that easily calculates the sleep indicators developed in this study available free of charge, as well as providing benchmark data for various types of sleep research to follow.

2023.07.07 View 8610

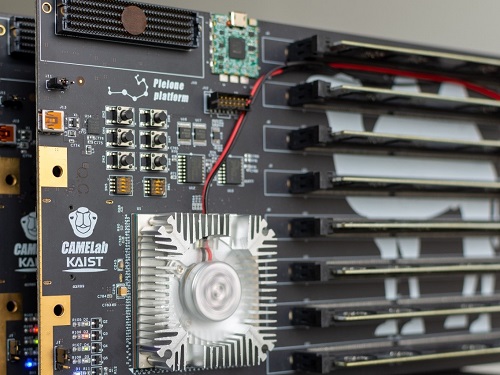

A KAIST research team develops a high-performance modular SSD system semiconductor

In recent years, demand for large amounts of data to train AI models has risen, and data size has become increasingly important. Accordingly, demand has also grown for solid state drives (SSDs, storage devices that use semiconductor memory), which are core storage devices for data centers and cloud services. However, the internal components of higher-performing SSDs have become more tightly coupled, and this tightly coupled structure keeps SSDs from reaching their maximum performance.

On June 15, a KAIST research team led by Professor Dongjun Kim (John Kim) from the School of Electrical Engineering (EE) announced the development of the first SSD system semiconductor structure that can increase the read/write performance of next-generation SSDs and extend their lifespan through high-performance modular SSD systems.

Professor Kim’s team identified the limitations of the tightly-coupled structures in existing SSD designs and proposed a de-coupled structure that can maximize SSD performance by configuring an internal on-chip network specialized for flash memory. This technique utilizes on-chip network technology, which can freely send packet-based data within the chip and is often used to design non-memory system semiconductors like CPUs and GPUs. Through this, the team developed a ‘modular SSD’, which shows reduced interdependence between front-end and back-end designs, and allows their independent design and assembly.

*on-chip network: a packet-based connection structure for the internal components of system semiconductors like CPUs/GPUs. On-chip networks are one of the most critical design components for high-performing system semiconductors, and their importance grows with the size of the semiconductor chip.

Professor Kim’s team refers to the components nearer to the CPU as the front-end and the parts closer to the flash memory as the back-end. They constructed a new on-chip network specific to flash memory to allow data transmission between the flash controllers in the back-end, proposing a de-coupled structure that minimizes performance degradation.

The SSD can accelerate some functions of the flash translation layer, a critical element in driving the SSD, allowing flash memory to actively overcome its limitations. Another advantage of the de-coupled, modular structure is that the flash translation layer is not tied to the characteristics of specific flash memories; the front-end and back-end can instead be designed independently. With this structure, the team achieved response times 21 times faster than existing systems and, by also applying the DDS defect detection technique, extended SSD lifespan by 23%.

< Figure 1. Schematic diagram of the structure of a high-performance modular SSD system developed by Professor Dongjun Kim's team >

This research, conducted by first author and Ph.D. candidate Jiho Kim from the KAIST School of EE and co-author Professor Myoungsoo Jung, was presented on the 19th of June at the 50th IEEE/ACM International Symposium on Computer Architecture, the most prestigious academic conference in the field of computer architecture, held in Orlando, Florida. (Paper Title: Decoupled SSD: Rethinking SSD Architecture through Network-based Flash Controllers)

< Figure 2. Conceptual diagram of hardware acceleration through high-performance modular SSD system >

Professor Dongjun Kim, who led the research, said, “This research is significant in that it identified the structural limitations of existing SSDs, and showed that on-chip network technology based on system memory semiconductors like CPUs can drive the hardware to actively carry out the necessary actions. We expect this to contribute greatly to the next-generation high-performance SSD market.” He added, “The de-coupled architecture is a structure that can actively operate to extend devices’ lifespan. In other words, its significance is not limited to the level of performance and can, therefore, be used for various applications.”

KAIST commented that this research is also meaningful in that the results came from a collaborative study between two world-renowned researchers: Professor Myoungsoo Jung, recognized in the field of computer system storage devices, and Professor Dongjun Kim, a leading researcher in computer architecture and interconnection networks.

This research was funded by the National Research Foundation of Korea, Samsung Electronics, the IC Design Education Center, and Next Generation Semiconductor Technology and Development granted by the Institute of Information & Communications Technology, Planning & Evaluation.

2023.06.23 View 8094

Yuji Roh Awarded 2022 Microsoft Research PhD Fellowship

KAIST PhD candidate Yuji Roh of the School of Electrical Engineering (advisor: Prof. Steven Euijong Whang) was selected as a recipient of the 2022 Microsoft Research PhD Fellowship.

< KAIST PhD candidate Yuji Roh (advisor: Prof. Steven Euijong Whang) >

The Microsoft Research PhD Fellowship is a scholarship program that recognizes outstanding graduate students for their exceptional and innovative research in computer science and related fields. This year, 36 people from around the world received the fellowship, and Yuji Roh from KAIST EE is the only recipient from a Korean university. Each selected fellow will receive a $10,000 scholarship and an opportunity to intern at Microsoft under the guidance of an experienced researcher.

Yuji Roh was named a fellow in the field of “Machine Learning” for her outstanding achievements in Trustworthy AI. Her research highlights include designing a state-of-the-art fair training framework using batch selection and developing novel algorithms for both fair and robust training. Her work has been presented at top machine learning conferences, including ICML, ICLR, and NeurIPS. She also co-presented a tutorial on Trustworthy AI at the top data mining conference ACM SIGKDD. She is currently interning at the NVIDIA Research AI Algorithms Group, developing large-scale, real-world fair AI frameworks.

The list of fellowship recipients and the interview videos are available on the Microsoft webpage and YouTube.

The list of recipients: https://www.microsoft.com/en-us/research/academic-program/phd-fellowship/2022-recipients/

Interview (Global): https://www.youtube.com/watch?v=T4Q-XwOOoJc

Interview (Asia): https://www.youtube.com/watch?v=qwq3R1XU8UE

[Highlighted research achievements by Yuji Roh: Fair batch selection framework]

[Highlighted research achievements by Yuji Roh: Fair and robust training framework]

2022.10.28 View 14925

Machine Learning-Based Algorithm to Speed up DNA Sequencing

The algorithm presents the first full-fledged, short-read alignment software that leverages learned indices for solving the exact match search problem for efficient seeding

The human genome consists of a complete set of DNA, which is about 6.4 billion letters long. Because of its size, reading the whole genome sequence at once is challenging. So scientists use DNA sequencers to produce hundreds of millions of DNA sequence fragments, or short reads, up to 300 letters long. Then the DNA sequencer assembles all the short reads like a giant jigsaw puzzle to reconstruct the entire genome sequence. Even with very fast computers, this job can take hours to complete.