research

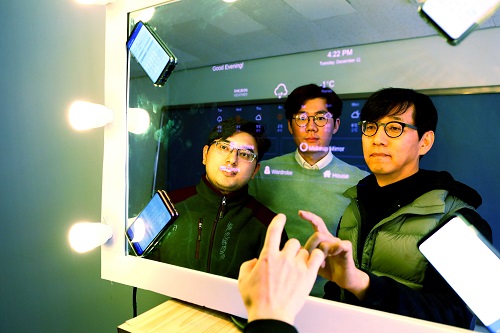

(from left: MS candidate Anish Byanjankar, Research Assistant Professor Hyosu Kim and Professor Insik Shin)

The most important aspect of enabling the sound-based touch input method is locating touch inputs precisely, within an error of about 1 cm. However, this requirement is challenging to meet, mainly because the technology can be used in diverse and dynamically changing environments. Users may use objects such as desks, walls, or mirrors as touch input surfaces, and the surrounding environment (e.g., the location of nearby objects or the ambient noise level) can vary. These environmental changes can affect the characteristics of touch sounds.


people Professor Dongsu Han Named Program Chair for ACM CoNEXT 2020

Professor Dongsu Han from the School of Electrical Engineering has been appointed as the program chair for the 16th Association for Computing Machinery’s International Conference on emerging Networking EXperiments and Technologies (ACM CoNEXT 2020). Professor Han is the first program chair to be appointed from an Asian institution. ACM CoNEXT is hosted by ACM SIGCOMM, ACM's Special Interest Group on Data Communications, which specializes in the field of communication and computer networ

2020-06-02

research It's Time to 3D Sketch with Air Scaffolding

People often use their hands when describing an object, while pens are great tools for describing objects in detail. Building on this idea, a KAIST team introduced a new 3D sketching workflow that combines the strengths of hand and pen input. This technique could ease ideation in three dimensions, making product design more efficient in terms of time and cost. For a designer's drawing to become a real product, the 2D drawing has to be transformed into a 3D shape; however

2018-07-25

research A New Theory Improves Button Designs

Pressing a button appears effortless, and people easily dismiss how challenging it actually is. Researchers at KAIST and Aalto University in Finland created detailed simulations of button pressing with the goal of producing human-like presses. The researchers argue that the brain's key capability is a probabilistic model. The brain learns a model that allows it to predict a suitable motor command for a button. If a press fails, it can pick a very good alternat

2018-03-22

people Sangeun Oh Recognized as a 2017 Google Fellow

Sangeun Oh, a Ph.D. candidate in the School of Computing, was selected as a Google PhD Fellow in 2017. He is one of 47 Google PhD Fellowship recipients worldwide. The Google PhD Fellowship recognizes students who show outstanding performance in computer science and related research. Since its establishment in 2009, the program has provided various benefits, including scholarships worth US$10,000 and one-on-one research discussions with mentors from Google. His resear

2017-09-27

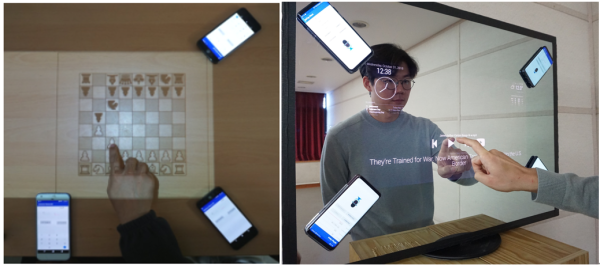

research Multi-Device Mobile Platform for App Functionality Sharing

Case 1. Mr. Kim, an office worker, logged on to his SNS account using a tablet PC at the airport while traveling overseas. However, the tablet PC had a malicious virus installed on it, and someone else deleted some of the photos posted on his SNS account. Case 2. Mr. and Mrs. Brown are busy contacting credit card and game companies because their son, who likes games, purchased a million dollars' worth of game items using his smartphone. Case 3. Mr. Park, who enjoys games, bought a sensor-based

2017-08-09