
KAIST (President Kwang Hyung Lee) announced on the 25th that a research team led by Professor Jemin Hwangbo of the Department of Mechanical Engineering has developed a quadrupedal robot control technology that enables robust, agile walking even on deformable terrain such as a sandy beach.

< Photo. RAI Lab Team with Professor Hwangbo in the middle of the back row. >

Professor Hwangbo's research team developed a technique for modeling the forces a walking robot experiences on ground made of granular materials such as sand, and for simulating those forces for a quadrupedal robot. The team also designed an artificial neural network architecture suited to the real-time decisions needed to adapt to various types of ground while walking, without prior information about the terrain, and applied it to reinforcement learning. The trained neural network controller proved robust on changing terrain. It moved at high speed even on a sandy beach, and it walked and turned on soft ground such as an air mattress without losing balance. The technology is therefore expected to broaden the range of applications for quadrupedal walking robots.

This research, with Ph.D. student Suyoung Choi of the KAIST Department of Mechanical Engineering as first author, was published in Science Robotics in January (paper title: “Learning quadrupedal locomotion on deformable terrain”).

Reinforcement learning is an AI training method in which a machine collects data on the outcomes of various actions taken in arbitrary situations, then uses that data to learn to perform a task. Reinforcement learning requires vast amounts of data. A common approach is therefore to collect the data through simulations that approximate the physical phenomena of the real environment.
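A minimal Python sketch of the data-collection idea is shown below. The simulator `ToyWalkSim`, its slip noise, the reward, and the random exploration policy are all hypothetical stand-ins, not the team's setup. The sketch covers only one step: trying actions in simulation and recording their outcomes. It omits the learning update that would later use the collected data.

```python
import random
from dataclasses import dataclass


@dataclass
class Transition:
    state: float
    action: float
    reward: float
    next_state: float


class ToyWalkSim:
    """Hypothetical 1-D stand-in for a physics simulator (not any real simulator API).

    The state is the robot's position; an action is a commanded step length.
    Random slip crudely mimics imperfect ground contact.
    """

    def __init__(self, seed: int = 0):
        self.rng = random.Random(seed)
        self.pos = 0.0

    def reset(self) -> float:
        self.pos = 0.0
        return self.pos

    def step(self, action: float) -> tuple:
        slip = self.rng.uniform(0.0, 0.3)   # fraction of the step lost to slipping
        prev = self.pos
        self.pos += action * (1.0 - slip)
        reward = self.pos - prev            # reward forward progress
        return self.pos, reward


def collect_rollouts(sim, episodes: int, horizon: int, seed: int = 1) -> list:
    """Try exploratory actions and record (state, action, reward, next_state) tuples."""
    policy_rng = random.Random(seed)
    buffer = []
    for _ in range(episodes):
        state = sim.reset()
        for _ in range(horizon):
            action = policy_rng.uniform(-0.1, 0.5)  # random exploration policy
            next_state, reward = sim.step(action)
            buffer.append(Transition(state, action, reward, next_state))
            state = next_state
    return buffer


data = collect_rollouts(ToyWalkSim(), episodes=4, horizon=50)
```

In practice, a learning algorithm would consume such a buffer to improve the policy. It would then collect fresh data with the improved policy and repeat the cycle.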

In the field of walking robots in particular, learning-based controllers trained on data collected in simulation have been deployed in real environments. These controllers have successfully controlled walking on a variety of terrains.

However, the performance of a learning-based controller drops sharply whenever the real environment differs from the simulated environment it was trained in. It is therefore important to reproduce an environment similar to the real one during data collection. To create a learning-based controller that can keep its balance on deformable terrain, the simulator must provide a similar contact experience.

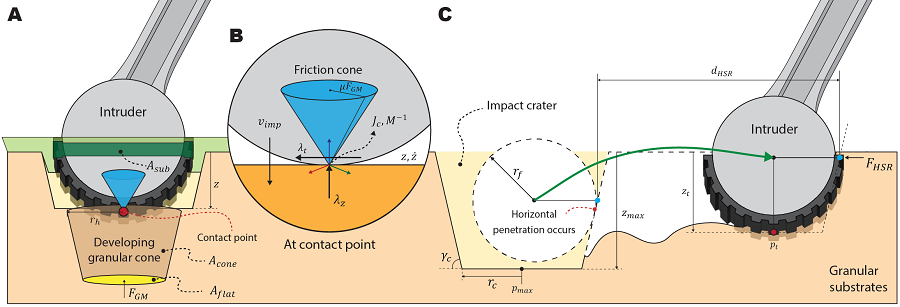

The research team defined a contact model that predicts the force generated at contact from the motion dynamics of the walking body. The model builds on a ground reaction force model from previous studies that accounts for the added-mass effect of granular media.

By computing the forces arising from one or more contacts at each time step, the team was able to simulate deformable terrain efficiently.
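The per-step contact computation can be sketched as follows. This is a minimal illustration under stated assumptions, not the team's implementation. The linear depth-resistance law, the added mass proportional to depth, and every parameter value are hypothetical. The sketch also omits the side-of-foot resistance mentioned in the Figure 2 caption. Only the overall structure follows the article: a point contact, an added-mass term in the vertical direction, and Coulomb friction in the horizontal direction.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class GranularParams:
    # Illustrative placeholder values, NOT parameters from the paper.
    k_depth: float = 2.0e4         # N/m: resistance growing linearly with depth (assumed)
    added_mass_coeff: float = 5.0  # kg/m: grains dragged along per metre of depth (assumed)
    mu: float = 0.6                # Coulomb friction coefficient (assumed)


def point_contact_force(depth: float, prev_depth: float,
                        vel: Tuple[float, float, float], prev_vz: float,
                        dt: float, p: Optional[GranularParams] = None
                        ) -> Tuple[float, float, float]:
    """Force (fx, fy, fz) on one foot, treated as a single contact point, for one step.

    depth > 0 means the point is below the sand's free surface (m).
    vel is the foot velocity (m/s) in a world frame with z pointing up.
    """
    if p is None:
        p = GranularParams()
    if depth <= 0.0:
        return (0.0, 0.0, 0.0)  # foot is in the air
    vx, vy, vz = vel
    # Vertical part 1: resistance that grows with penetration depth.
    f_resist = p.k_depth * depth
    # Vertical part 2: added-mass effect. The foot must accelerate the grains it
    # carries, F = -d(m_a * v_z)/dt with m_a proportional to depth (finite difference).
    m_now = p.added_mass_coeff * depth
    m_prev = p.added_mass_coeff * max(prev_depth, 0.0)
    f_added = -(m_now * vz - m_prev * prev_vz) / dt
    # Sand can push on the foot but cannot pull it down.
    fz = max(0.0, f_resist + f_added)
    # Horizontal: Coulomb friction opposing slip, with magnitude mu * fz.
    speed_t = math.hypot(vx, vy)
    if speed_t < 1e-9:
        return (0.0, 0.0, fz)  # no slip; static friction is not modeled in this sketch
    scale = -p.mu * fz / speed_t
    return (scale * vx, scale * vy, fz)
```

A simulator would call a function like this once per foot in contact at every time step. Summing the results gives the force from "one or several contacts."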

The team also introduced a neural network architecture that implicitly estimates ground characteristics, using a recurrent neural network to analyze time-series data from the robot's sensors.
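As a rough illustration of how a recurrent network can summarize a history of sensor readings, the sketch below implements a plain Elman-style RNN in standard-library Python. The article does not specify the team's architecture. The cell type, the layer sizes, the three input signals, and the random untrained weights are therefore all assumptions. The point is only that the hidden state carries information from earlier readings, so different ground histories yield different latent estimates.

```python
import math
import random


class TinyRNNEstimator:
    """Elman-style RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b), then a linear readout.

    Weights are random and untrained; all sizes are illustrative assumptions.
    """

    def __init__(self, n_in: int, n_hidden: int, n_out: int, seed: int = 0):
        rng = random.Random(seed)

        def mat(rows, cols):
            return [[rng.gauss(0.0, 0.3) for _ in range(cols)] for _ in range(rows)]

        self.w_x = mat(n_hidden, n_in)
        self.w_h = mat(n_hidden, n_hidden)
        self.b = [0.0] * n_hidden
        self.w_out = mat(n_out, n_hidden)
        self.h = [0.0] * n_hidden

    def step(self, x):
        """Fold one sensor reading into the hidden state; return a latent terrain estimate."""
        new_h = []
        for i in range(len(self.h)):
            s = self.b[i]
            s += sum(w * xi for w, xi in zip(self.w_x[i], x))
            s += sum(w * hj for w, hj in zip(self.w_h[i], self.h))
            new_h.append(math.tanh(s))
        self.h = new_h
        # Linear readout: a latent vector a locomotion policy could condition on.
        return [sum(w * hj for w, hj in zip(row, self.h)) for row in self.w_out]


def run(history):
    """Feed a whole sensor history through a fresh network and return the final estimate."""
    net = TinyRNNEstimator(n_in=3, n_hidden=8, n_out=2, seed=42)
    out = None
    for reading in history:
        out = net.step(reading)
    return out


# Hypothetical readings: (foot sinkage, joint speed, vertical body acceleration).
soft_sand = [[0.05, 1.0, -0.5] for _ in range(20)]
firm_ground = [[0.0, 1.0, 0.0] for _ in range(20)]
```

In a trained controller, the latent output would be passed to the policy alongside the current observations. Here it is simply returned by `run`.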

The trained controller was deployed on the robot 'RaiBo', which the research team built in-house. RaiBo walked at speeds of up to 3.03 m/s on a sandy beach where its feet sank completely into the sand. On harder ground such as grassy fields and a running track, it also ran stably, adapting to the characteristics of the ground without any additional programming or changes to the control algorithm.

In addition, it turned stably at 1.54 rad/s (approximately 90° per second) on an air mattress, and it adapted quickly when the terrain suddenly turned soft.
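For reference, the reported speeds convert to more familiar units as follows, using $1\ \mathrm{rad} = 180^\circ/\pi$ and $1\ \mathrm{m/s} = 3.6\ \mathrm{km/h}$:

$$
1.54\ \mathrm{rad/s} \times \frac{180^\circ}{\pi\ \mathrm{rad}} \approx 88.2^\circ/\mathrm{s},
\qquad
3.03\ \mathrm{m/s} \times 3.6\ \frac{\mathrm{km/h}}{\mathrm{m/s}} \approx 10.9\ \mathrm{km/h}.
$$

So the turning rate is slightly under 90° per second.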

Through a comparison with a controller that assumed rigid ground, the research team demonstrated the importance of providing a suitable contact experience during training. The team also showed that the proposed recurrent neural network adjusts the controller's gait according to the properties of the ground.

The simulation and learning methodology developed by the research team expands the range of terrain on which walking robots can operate. It is therefore expected to help such robots carry out practical tasks.

The first author, Suyoung Choi, said, “We have shown that giving a learning-based controller a contact experience close to that of real deformable ground is essential for deploying it on deformable terrain.” He added, “Because the proposed controller needs no prior information about the terrain, it can be applied to a variety of robot locomotion studies.”

This research was carried out with the support of the Samsung Research Funding & Incubation Center of Samsung Electronics.

< Figure 1. Adaptability of the proposed controller to various ground environments. The controller, trained on a wide range of randomized granular-media simulations, adapted to various natural and artificial terrains and demonstrated high-speed walking and energy efficiency. >
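Training on "randomized granular media simulations" means the simulated ground changes from episode to episode, so the controller cannot overfit to a single terrain. A minimal sketch of per-episode sampling follows. The parameter names, the ranges, the log-uniform stiffness distribution, and the 20% share of rigid ground are all hypothetical choices, not values reported for this work.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class TerrainSample:
    stiffness: float         # N/m, depth-proportional resistance (hypothetical)
    added_mass_coeff: float  # kg/m (hypothetical)
    friction: float          # Coulomb coefficient (hypothetical)
    rigid: bool              # if True, the granular parameters are ignored


# Illustrative ranges only; not the ranges used in the paper.
RANGES = {
    "stiffness": (2.0e3, 2.0e5),
    "added_mass_coeff": (0.0, 10.0),
    "friction": (0.3, 1.0),
}


def sample_terrain(rng: random.Random, p_rigid: float = 0.2) -> TerrainSample:
    """Draw one terrain configuration for a training episode."""
    lo, hi = RANGES["stiffness"]
    # Log-uniform, because plausible stiffness values span orders of magnitude.
    stiffness = math.exp(rng.uniform(math.log(lo), math.log(hi)))
    added_mass = rng.uniform(*RANGES["added_mass_coeff"])
    friction = rng.uniform(*RANGES["friction"])
    rigid = rng.random() < p_rigid  # occasionally train on hard ground too
    return TerrainSample(stiffness, added_mass, friction, rigid)
```

Each training episode would call `sample_terrain` once and configure the simulated ground from the result before rolling out the policy.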

< Figure 2. Contact model definition for simulating granular substrates. The research team approximated contact with the granular medium as occurring at a single point. The model accounts for the added-mass effect in the vertical direction and uses a Coulomb friction model in the horizontal direction. A model of the resistance the ground can exert on the side of the foot was also introduced into the simulation. >
