
Machine-learned, light-field camera reads facial expressions from high-contrast illumination invariant 3D facial images

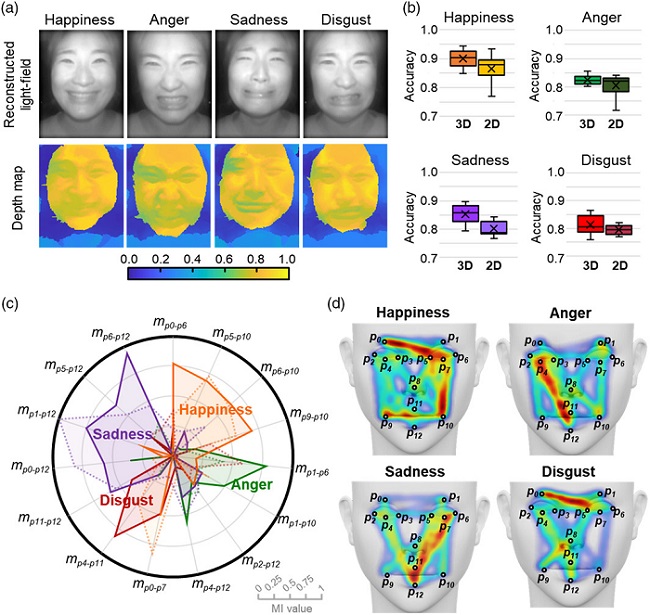

< Image: Facial expression reading based on MLP classification from 3D depth maps and 2D images obtained by NIR-LFC >

A joint research team led by Professors Ki-Hun Jeong and Doheon Lee from the KAIST Department of Bio and Brain Engineering reported the development of a technique for facial expression detection by merging near-infrared light-field camera techniques with artificial intelligence (AI) technology.

Unlike a conventional camera, the light-field camera contains micro-lens arrays in front of the image sensor, which makes the camera small enough to fit into a smartphone while allowing it to acquire the spatial and directional information of the light in a single shot. The technique has received attention because it can reconstruct images in a variety of ways, including multi-view imaging, refocusing, and 3D image acquisition, giving rise to many potential applications.
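To illustrate how a single shot can carry both spatial and directional information, here is a minimal sketch of multi-view extraction. It assumes an idealized sensor in which every micro-lens covers an aligned `k` × `k` block of pixels; the function name `sub_aperture_view` and the toy `raw` image are hypothetical and do not come from the NIR-LFC pipeline, which the article does not describe at this level.

```python
def sub_aperture_view(raw, k, u, v):
    """Collect the pixel at offset (u, v) under every micro-lens.

    raw is a 2D list of sensor values; k is the assumed number of
    pixels per micro-lens along each axis. Each (u, v) offset
    corresponds to one viewing direction, so the result is one view.
    """
    if not (0 <= u < k and 0 <= v < k):
        raise ValueError("u and v must be in range(k)")
    return [row[v::k] for row in raw[u::k]]


# Toy 4x4 sensor readout with 2x2 pixels per micro-lens (hypothetical values).
raw = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]

# One low-resolution view per direction sample: four views from one shot.
views = {(u, v): sub_aperture_view(raw, 2, u, v)
         for u in range(2) for v in range(2)}
```

Each view has the resolution of the micro-lens grid rather than of the sensor, which is the usual trade-off in this kind of design. Disparity between such views is what makes depth estimation possible in principle.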

However, shadows cast by external light sources in the environment and optical crosstalk between the micro-lenses have prevented existing light-field cameras from providing accurate image contrast and 3D reconstruction.

The joint research team applied a vertical-cavity surface-emitting laser (VCSEL) in the near-IR range to stabilize the accuracy of 3D image reconstruction, which previously depended on environmental light. When an external light source is shone on a face at 0-, 30-, and 60-degree angles, the light-field camera reduces image reconstruction errors by 54%. Additionally, by inserting a light-absorbing layer for visible and near-IR wavelengths between the micro-lens arrays, the team could minimize optical crosstalk while increasing the image contrast by 2.1 times.

Through this technique, the team could overcome the limitations of existing light-field cameras and was able to develop their NIR-based light-field camera (NIR-LFC), optimized for the 3D image reconstruction of facial expressions. Using the NIR-LFC, the team acquired high-quality 3D reconstruction images of facial expressions expressing various emotions regardless of the lighting conditions of the surrounding environment.

Machine learning distinguished the facial expressions in the acquired 3D images with an average accuracy of 85%, a statistically significant improvement over classification from 2D images. Furthermore, by calculating the interdependency of distance information that varies with facial expression in 3D images, the team could identify the information a light-field camera utilizes to distinguish human expressions.
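As a rough illustration of classifying expressions from 3D distance information, the sketch below turns a few 3D points into pairwise distances and passes them through a small multilayer perceptron (MLP). Everything specific here is an assumption made for the example: the landmark coordinates, the layer sizes, the three output classes, and the randomly initialized weights. The article does not give the team's actual features or network, and because the weights are untrained, the output probabilities carry no meaning.

```python
import math
import random
from itertools import combinations


def pairwise_distances(landmarks):
    """Euclidean distance between every pair of 3D points."""
    return [math.dist(p, q) for p, q in combinations(landmarks, 2)]


def dense(x, weights, biases):
    """Fully connected layer: one output per (weight row, bias) pair."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(x):
    return [max(0.0, v) for v in x]


def softmax(x):
    m = max(x)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(rng, n_in, n_out):
    """Random, untrained weights and zero biases (placeholders only)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def mlp_forward(features, layers):
    """ReLU on hidden layers, softmax on the final layer."""
    x = features
    for weights, biases in layers[:-1]:
        x = relu(dense(x, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(x, weights, biases))


# Hypothetical (x, y, depth) points in arbitrary units.
landmarks = [(0.0, 0.0, 1.0), (3.0, 0.0, 1.0),
             (0.0, 4.0, 1.0), (3.0, 4.0, 13.0)]
features = pairwise_distances(landmarks)  # 4 points give 6 distances

rng = random.Random(0)
layers = [init_layer(rng, len(features), 8),  # hidden layer, size assumed
          init_layer(rng, 8, 3)]              # 3 classes, count assumed
probs = mlp_forward(features, layers)
```

In a real system the weights would be fitted to labeled expression data, and the accuracy would then be compared between 3D-derived and 2D-derived features, as the article reports.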

Professor Ki-Hun Jeong said, “The sub-miniature light-field camera developed by the research team has the potential to become the new platform to quantitatively analyze the facial expressions and emotions of humans.” To highlight the significance of this research, he added, “It could be applied in various fields including mobile healthcare, field diagnosis, social cognition, and human-machine interactions.”

This research was published online in Advanced Intelligent Systems on December 16, 2021, under the title “Machine-Learned Light-field Camera that Reads Facial Expression from High-Contrast and Illumination Invariant 3D Facial Images.” This research was funded by the Ministry of Science and ICT and the Ministry of Trade, Industry and Energy.

-Publication

“Machine-learned light-field camera that reads facial expression from high-contrast and illumination invariant 3D facial images,” Sang-In Bae, Sangyeon Lee, Jae-Myeong Kwon, Hyun-Kyung Kim, Kyung-Won Jang, Doheon Lee, Ki-Hun Jeong, Advanced Intelligent Systems, December 16, 2021 (doi.org/10.1002/aisy.202100182)

-Profile

Professor Ki-Hun Jeong

Biophotonic Laboratory

Department of Bio and Brain Engineering

KAIST

Professor Doheon Lee

Department of Bio and Brain Engineering

KAIST
