Team KAIST placed among top two at MBZIRC Maritime Grand Challenge

Representing Korean Robotics at Sea: KAIST’s 26-month effort rewarded

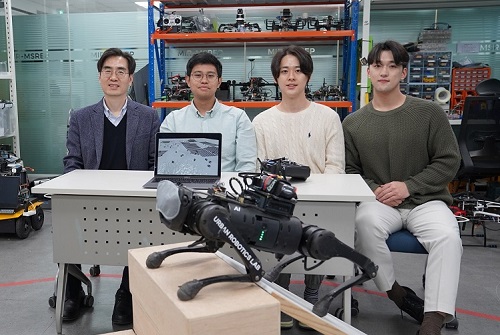

- Team KAIST, composed of students from the labs of Professor Jinwhan Kim of the Department of Mechanical Engineering and Professor Hyunchul Shim of the School of Electrical Engineering, finished as first runner-up, winning a total of $650,000 (KRW 860 million) in prize money.

- Using cutting-edge robotics and AI technology, the team carried autonomous collaboration between unmanned aerial and maritime vehicles through to the final round of the competition, held in Abu Dhabi from January 10 to February 6, 2024.

KAIST (President Kwang-Hyung Lee) reported on the 8th that Team KAIST, led by students from the labs of Professor Jinwhan Kim of the Department of Mechanical Engineering and Professor Hyunchul Shim of the School of Electrical Engineering and partnered with Pablo Aviation, finished as first runner-up at the Maritime Grand Challenge of the Mohamed Bin Zayed International Robotics Challenge (MBZIRC), winning a total of $650,000 (KRW 860 million) in prize money.

This competition, the largest robotics competition ever held over water, is sponsored by the government of the United Arab Emirates and organized by ASPIRE, an organization under the Abu Dhabi Ministry of Science, and offers a total of $3 million in prize money.

The competition began at the end of 2021 with 52 teams from around the world; after the first and second screening stages, five teams were selected in February 2023 to advance to the finals. The final round was held from January 10 to February 6, 2024, using actual unmanned ships and drones in a secluded 10 km² sea area off the coast of Abu Dhabi, the capital of the United Arab Emirates. Eighteen KAIST students, together with Professor Jinwhan Kim and Professor Hyunchul Shim, took part in the competition on site in Abu Dhabi.

Team KAIST will receive $500,000 in prize money for taking second place in the final, bringing the team’s total to $650,000, including the $150,000 special midterm award given to finalists.

The final mission scenario was to find a target vessel fleeing with illegal cargo among many ships moving in a GPS-denied sea area, inspect its deck for two different types of stolen cargo, and recover them: the aerial vehicle retrieves the small cargo, and a robot manipulator mounted on an unmanned ship retrieves the larger one. The true aim of the mission was to complete it through autonomous collaboration between the unmanned ship and the aerial vehicle, without human intervention at any point in the process. In particular, because the competition rules prohibited the use of GPS, Professor Jinwhan Kim's research team developed autonomous operation techniques for unmanned ships, including search and navigation methods using maritime radar, and Professor Hyunchul Shim's research team developed video-based navigation and a technology for combining a small autonomous robot with a drone.

The final mission was to retrieve cargo from a ship fleeing at sea through autonomous collaboration between unmanned ships and unmanned aerial vehicles, without human intervention. The overall mission consisted of two stages: an inspection stage to find the target ship among several ships moving at sea, and an intervention stage to retrieve the cargo from the ship's deck. Each team was given three attempts, and the team that completed the highest-level mission in the shortest time across its three attempts received the highest score.
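The scoring rule described above (best of three attempts, highest mission level first, shortest time as the tiebreaker) can be sketched as a sort key. This is a minimal illustration assuming mission progress can be expressed as a single number; the names `Attempt`, `score_key`, and `rank_teams` and the example data are hypothetical, and the official MBZIRC rules may score partial completion differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attempt:
    team: str
    level: float   # hypothetical progress score: 0 = nothing, 1 = inspection done, 2 = intervention done
    time_s: float  # elapsed time of the attempt, in seconds


def score_key(a: Attempt) -> tuple:
    # Higher level first, then shorter time: negating level makes smaller keys better.
    return (-a.level, a.time_s)


def rank_teams(attempts: list[Attempt]) -> list[str]:
    """Rank teams by their single best attempt."""
    best: dict[str, Attempt] = {}
    for a in attempts:
        if a.team not in best or score_key(a) < score_key(best[a.team]):
            best[a.team] = a
    return [a.team for a in sorted(best.values(), key=score_key)]


# Hypothetical attempts for three teams.
attempts = [
    Attempt("A", 1.0, 1800.0),
    Attempt("A", 2.0, 3000.0),
    Attempt("B", 2.0, 2500.0),
    Attempt("C", 1.0, 900.0),
    Attempt("C", 0.5, 600.0),
]
print(rank_teams(attempts))  # ['B', 'A', 'C']
```

Team C's faster 600 s attempt does not help it here, because a higher mission level always outranks a shorter time.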

In the first attempt, KAIST was the only team to succeed in the first-stage search mission, but the competition began in earnest when the Croatian team also completed the first stage in the second attempt. Because strong winds and high waves lasting several days delayed the schedule, the organizers decided to hold the finals with three teams: Team KAIST and the University of Zagreb team from Croatia, both of which had completed the first stage of the mission, and Team Fly-Eagle, a team of researchers from China and the UAE that had partially completed it. These three teams proceeded to the finals for the third attempt. In the final competition, the Croatian team won, KAIST took second place, and the combined UAE-China team took third place. The final prizes are set at $2 million for the winning team, $500,000 for the runner-up, and $250,000 for third place.

Professor Jinwhan Kim of the Department of Mechanical Engineering, who served as the advisor for Team KAIST, said, “I would like to express my gratitude and congratulations to the students who put tremendous academic and physical effort into preparing for the competition over the past two years. I feel rewarded because, regardless of the results, every bit of effort put in up to this point will become the foundation of their confidence and a valuable asset as they grow into great researchers.” Sol Han, a doctoral student in mechanical engineering who served as the team leader, said, “I am disappointed that we so narrowly missed out on winning at the end, but I am satisfied with the significance of what we achieved, and I am grateful to the team members who worked hard together for it.”

HD Hyundai, Rainbow Robotics, Avikus, and FIMS also participated as sponsors for Team KAIST's campaign.

2024.02.09

KAIST Professor Jiyun Lee becomes the first Korean to receive the Thurlow Award from the American Institute of Navigation

< Distinguished Professor Jiyun Lee from the KAIST Department of Aerospace Engineering >

KAIST (President Kwang-Hyung Lee) announced on January 27th that Distinguished Professor Jiyun Lee from the KAIST Department of Aerospace Engineering had won the Colonel Thomas L. Thurlow Award from the American Institute of Navigation (ION) for her achievements in the field of satellite navigation.

The American Institute of Navigation (ION) announced Distinguished Professor Lee as the winner of the Thurlow Award at its annual awards ceremony, held in conjunction with its international conference in Long Beach, California, on January 25th. She is the first person of Korean descent to receive the award.

The Thurlow Award was established in 1945 to honor Colonel Thomas L. Thurlow, who made significant contributions to the development of navigation equipment and the training of navigators. The award recognizes an individual who has made an outstanding contribution to the development of navigation and is given to one person each year. Past recipients include MIT professor Charles Stark Draper, widely known as the father of inertial navigation, who developed the guidance computer for the Apollo moon landing project.

Distinguished Professor Jiyun Lee was recognized for her significant contributions to technologies that ensure the safety of satellite-based navigation systems for aviation. In particular, she was recognized as a world authority on navigation integrity architecture design, which is essential for ensuring the stability of intelligent transportation systems and autonomous unmanned systems. Distinguished Professor Lee made a groundbreaking contribution to protecting satellite-based navigation systems from ionospheric disturbances, including those caused by sudden changes in the solar and space environment.

She has made numerous scientific discoveries in ionospheric research while developing new ionospheric threat modeling methods, ionospheric anomaly monitoring and mitigation techniques, and integrity and availability assessment techniques for next-generation augmented navigation systems. She has also contributed to the international standardization of these technologies through the International Civil Aviation Organization (ICAO).
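To make "integrity and availability" concrete: augmented GNSS systems such as SBAS bound the position error with a protection level and declare the service unavailable when that level exceeds an alert limit. The sketch below is a textbook-level simplification of that general idea, not Professor Lee's method. The projection coefficients, the per-satellite error sigmas, and the 35 m limit are illustrative assumptions; only the multiplier 5.33 is the SBAS precision-approach value. In SBAS, each per-satellite sigma combines several error terms, including an ionospheric one, so a disturbance that inflates that term raises the protection level. The function names are hypothetical.

```python
import math

K_V_PA = 5.33  # vertical multiplier used by SBAS for precision approach


def sigma_vertical(s_vert: list[float], sigmas: list[float]) -> float:
    """1-sigma vertical error bound: sqrt(sum_i s_i^2 * sigma_i^2).

    s_vert: vertical-row elements of the weighted least-squares projection
            matrix, one per satellite (illustrative values here).
    sigmas: per-satellite 1-sigma range error bounds in metres.
    """
    if len(s_vert) != len(sigmas):
        raise ValueError("s_vert and sigmas must have the same length")
    return math.sqrt(sum((s * sig) ** 2 for s, sig in zip(s_vert, sigmas)))


def vertical_protection_level(s_vert, sigmas, k=K_V_PA) -> float:
    """Scale the 1-sigma bound up to a protection level."""
    return k * sigma_vertical(s_vert, sigmas)


def is_available(vpl: float, alert_limit: float) -> bool:
    """The service is usable only if the protection level stays within the alert limit."""
    return vpl <= alert_limit


VAL = 35.0  # illustrative vertical alert limit, metres
quiet = vertical_protection_level([0.5] * 4, [2.0] * 4)  # 5.33 * 2.0 = 10.66 m
storm = vertical_protection_level([0.5] * 4, [7.0] * 4)  # 5.33 * 7.0 = 37.31 m
print(is_available(quiet, VAL), is_available(storm, VAL))  # True False
```

With the same satellite geometry, raising the per-satellite error bound from 2 m to 7 m (as a strong ionospheric disturbance might) pushes the protection level past the limit, so the system reports the service as unavailable instead of risking an unbounded error.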

Distinguished Professor Lee and her research group have pioneered innovative navigation technologies for the safe and autonomous operation of unmanned aerial vehicles (UAVs) and urban air mobility (UAM). She was the first to propose and develop a low-cost Global Navigation Satellite System (GNSS) augmentation architecture for UAVs, based on a near-field network operation concept that ensures high integrity, as well as a networked ground-station-based augmented navigation system for UAM. She has also contributed integrity design techniques, including failure monitoring and integrity risk assessment, for multi-sensor integrated navigation systems.

< Professor Jiyun Lee upon receiving the Thurlow Award >

Bradford Parkinson, professor emeritus at Stanford University, winner of the 1986 Thurlow Award, and known as the father of GPS, congratulated Distinguished Professor Lee on the award, commenting that her research has addressed many important topics in the field of navigation and that her solutions are highly innovative and highly regarded.

Distinguished Professor Lee said, “I am very honored and delighted to receive this award with its deep history and tradition in the field of navigation.” She added, “I will strive to help develop the future mobility industry by securing safe and sustainable navigation technology.”

2024.01.26

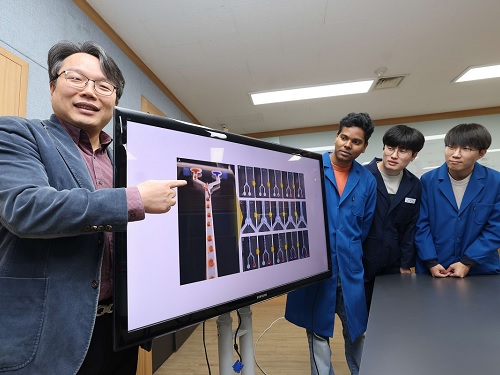

KAIST develops an artificial muscle device that produces force 34 times its weight

- Professor IlKwon Oh’s research team in KAIST’s Department of Mechanical Engineering developed a soft fluidic switch using an ionic polymer artificial muscle that runs on ultra-low power and produces a force 34 times its own weight.

- Thanks to its light weight and small size, the switch can control fluid flow with high precision even in narrow spaces, making it applicable to various industrial fields such as soft electronics, smart textiles, and biomedical devices.

Soft robots, medical devices, and wearable devices have permeated our daily lives. KAIST researchers have developed a fluid switch using ionic polymer artificial muscles that operates at ultra-low power and produces a force 34 times its own weight. Fluid switches control fluid flow by directing the fluid in a specific direction to drive various movements.

KAIST (President Kwang-Hyung Lee) announced on the 4th of January that a research team under Professor IlKwon Oh from the Department of Mechanical Engineering has developed a soft fluidic switch that operates at ultra-low voltage and can be used in narrow spaces.

Artificial muscles imitate human muscles and provide more flexible and natural movements than traditional motors, making them one of the basic elements of soft robots, medical devices, and wearable devices. These artificial muscles move in response to external stimuli such as electricity, air pressure, and temperature changes, and putting them to use requires precise control of these movements.

Conventional motor-based switches are difficult to use in confined spaces because of their rigidity and large size. To address these issues, the research team developed an electro-ionic soft actuator that can control fluid flow while producing a large force, even inside a narrow pipe, and used it as a soft fluidic switch.

< Figure 1. The separation of fluid droplets using a soft fluid switch at ultra-low voltage. >

The ionic polymer artificial muscle developed by the research team consists of metal electrodes and ionic polymers and generates force and movement in response to electricity. A polysulfonated covalent organic framework (pS-COF), made by combining organic molecules on the surface of the artificial muscle's electrode, enabled the muscle to generate an impressive amount of force relative to its weight at an ultra-low voltage (~0.01 V).

As a result, the artificial muscle, manufactured to be as thin as a hair at 180 µm, generated a force more than 34 times its own weight of 10 mg, producing smooth movement. This allowed the research team to precisely control the direction of fluid flow with low power.
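For a sense of scale, the "34 times its weight" figure can be converted into newtons. This is a back-of-the-envelope sketch assuming the ratio refers to the gravitational weight of the 10 mg actuator under standard gravity; the paper's exact force definition (for example, blocking force at a given voltage) may differ, and the helper names are hypothetical.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2


def weight_newtons(mass_mg: float) -> float:
    """Gravitational weight (N) of a mass given in milligrams."""
    return mass_mg * 1e-6 * STANDARD_GRAVITY  # 1 mg = 1e-6 kg


def force_for_ratio(mass_mg: float, ratio: float) -> float:
    """Force (N) equal to `ratio` times the actuator's own weight."""
    return ratio * weight_newtons(mass_mg)


force = force_for_ratio(10.0, 34.0)
print(f"{force * 1e3:.2f} mN")  # 3.33 mN, roughly the weight of a 340 mg mass
```

Under this assumption, the 10 mg actuator exerts about 3.3 mN.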

< Figure 2. The synthesis and use of pS-COF as a common electrode-electrolyte host for electroactive soft fluid switches. A) The synthesis schematic of pS-COF. B) The schematic diagram of the operating principle of the electrochemical soft switch. C) The schematic diagram of using a pS-COF-based electrochemical soft switch to control fluid flow in dynamic operation. >

Professor IlKwon Oh, who led this research, said, “The electrochemical soft fluidic switch, which operates at ultra-low power, can open up many possibilities in the fields of soft robots, soft electronics, and microfluidics based on fluid control.” He added, “From smart fibers to biomedical devices, this technology has the potential to be put to use immediately in a variety of industrial settings, as it can easily be applied to the ultra-small electronic systems in our daily lives.”

The results of this study, in which Dr. Manmatha Mahato, a research professor in the Department of Mechanical Engineering at KAIST, participated as the first author, were published in the international academic journal Science Advances on December 13, 2023. (Paper title: Polysulfonated Covalent Organic Framework as Active Electrode Host for Mobile Cation Guests in Electrochemical Soft Actuator)

This research was conducted with support from the National Research Foundation of Korea's Leader Scientist Support Project (Creative Research Group) and Future Convergence Pioneer Project.

* Paper DOI: https://www.science.org/doi/abs/10.1126/sciadv.adk9752

2024.01.11

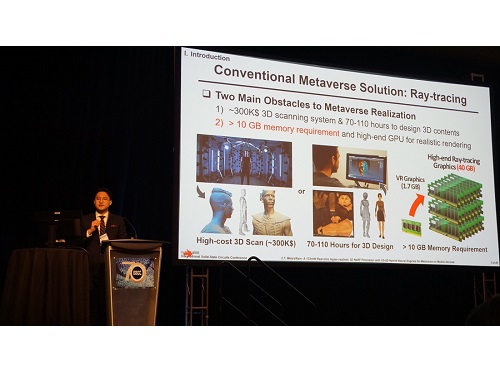

KAIST Demonstrates AI and sustainable technologies at CES 2024

On January 2, KAIST announced that it would participate in the Consumer Electronics Show (CES) 2024, held from January 9 to 12.

CES, held in Las Vegas, is one of the world’s largest technology exhibitions. Under its exhibition slogan “KAIST, the Global Value Creator”, KAIST has submitted technologies falling under one of two themes: “Expansion of Human Intelligence, Mobility, and Reality” and “Pursuit of Human Security and Sustainable Development”.

Twenty-four startups and pre-startups with standout technologies in fields including artificial intelligence (AI), mobility, virtual reality, healthcare, human security, and sustainable development will welcome visitors at a dedicated 232 m² KAIST booth at Eureka Park in Las Vegas.

Twelve companies will participate in the first category, “Expansion of Human Intelligence, Mobility, and Reality”: MicroPix, Panmnesia, DeepAuto, MGL, Reports, Narnia Labs, EL FACTORY, Korea Position Technology, AudAi, Planby Technologies, Movin, and Studio Lab.

In the “Pursuit of Human Security and Sustainable Development” category, twelve companies will be introduced: Aldaver, ADNC, Solve, Iris, Blue Device, Barreleye, TR, A2US, Greeners, Iron Boys, Shard Partners, and Kingbot.

Among them, Aldaver, a startup with biomimetic material and printing technology, received the Korean Business Award 2023 as well as the presidential award at the Challenge K-Startup. It has attracted 4.5 billion KRW in investment so far.

Narnia Labs, which offers an AI design solution for manufacturing, won the grand prize at K-tech Startups 2022 and has so far attracted 3.5 billion KRW in investment.

Panmnesia, a startup recognized for its fabless AI semiconductor technology, won a CES 2024 Innovation Award. It attracted 16 billion KRW in investment in its seed round alone.

Meanwhile, student startups will also be presented during the exhibition. Studio Lab received a CES 2024 Best of Innovation Award in the AI category. The team developed the software Seller Canvas, which automatically generates a page for product details when a user uploads an image of a product.

The central stage at the KAIST exhibition booth will be used to interview members of the participating startups from January 9 to 11, and will also serve as a networking venue for businesses and invited investors during KAIST NIGHT on the evening of January 10, from 5 to 7 PM.

Director Sung-Yool Choi of the KAIST Institute of Technology Value Creation said, “Through CES 2024, KAIST will overcome the limits of human intelligence, mobility, and space with the deep-tech based technologies developed by its startups, and will demonstrate its achievements for realizing its vision as a global value-creating university through the solutions for human security and sustainable development.”

2024.01.05

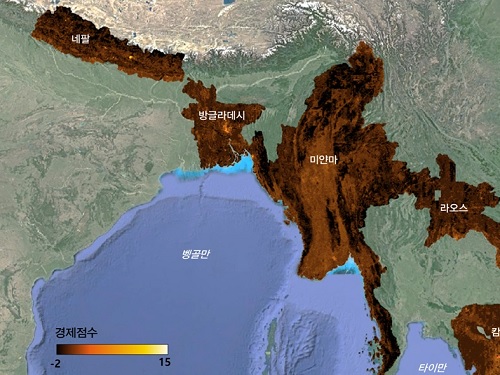

North Korea and Beyond: AI-Powered Satellite Analysis Reveals the Unseen Economic Landscape of Underdeveloped Nations

- A joint research team in computer science, economics, and geography has developed an artificial intelligence (AI) technology to measure grid-level economic development within six-square-kilometer regions.

- This AI technology is applicable in regions with limited statistical data (e.g., North Korea), supporting international efforts to propose policies for economic growth and poverty reduction in underdeveloped countries.

- The research team plans to make this technology freely available to contribute to the United Nations' Sustainable Development Goals (SDGs).

The United Nations reports that more than 700 million people are in extreme poverty, earning less than two dollars a day. However, an accurate assessment of poverty remains a global challenge. For example, 53 countries have not conducted agricultural surveys in the past 15 years, and 17 countries have not published a population census. To fill this data gap, new technologies are being explored to estimate poverty using alternative sources such as street views, aerial photos, and satellite images.

The paper, published in Nature Communications, demonstrates how artificial intelligence (AI) can help analyze economic conditions from daytime satellite imagery. The technology can even be applied to the least developed countries, such as North Korea, that lack the reliable statistical data needed for conventional machine learning training.

The researchers used publicly available Sentinel-2 satellite images from the European Space Agency (ESA) and split them into small grid cells of about six square kilometers. At this scale, visual information such as buildings, roads, and greenery can be used to quantify economic indicators. As a result, the team obtained the first fine-grained economic map of regions like North Korea. The same algorithm was also applied to five other underdeveloped Asian countries: Nepal, Laos, Myanmar, Bangladesh, and Cambodia (see Image 1).
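Tiling a scene into fixed-area cells can be sketched as follows. This is a minimal illustration, not the team's code: it assumes square cells, a 10 m pixel size (the resolution of Sentinel-2's visible and near-infrared bands), and that partial cells at the scene edge are simply dropped. The function names are hypothetical.

```python
import math


def tile_side_pixels(area_km2: float, resolution_m: float) -> int:
    """Side length, in pixels, of a square tile covering roughly `area_km2`."""
    side_m = math.sqrt(area_km2 * 1_000_000)  # km^2 -> m^2, then take the side length
    return round(side_m / resolution_m)


def tile_offsets(height: int, width: int, tile: int) -> list:
    """Top-left (row, col) of every full, non-overlapping tile.

    Partial tiles along the bottom and right edges are dropped (an assumption).
    """
    return [
        (row, col)
        for row in range(0, height - tile + 1, tile)
        for col in range(0, width - tile + 1, tile)
    ]


side = tile_side_pixels(6.0, 10.0)   # about 2.45 km per side at 10 m/pixel
print(side)                          # 245
print(tile_offsets(500, 500, side))  # [(0, 0), (0, 245), (245, 0), (245, 245)]
```

Each offset would then be used to cut one cell out of the image array before it is scored.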

The key feature of the research model is its "human-machine collaborative approach," which lets researchers combine human input with AI predictions for areas with scarce data. In this research, ten human experts compared satellite images and judged the economic conditions of each area; the AI then learned from these human judgments and assigned an economic score to each image. The results showed that the human-machine collaborative approach outperformed machine-only learning algorithms.
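One common way to turn pairwise human judgments into a score per image is the Bradley-Terry model, sketched below. The release does not specify the team's procedure, so this is a swapped-in, hypothetical stand-in: `fit_bradley_terry` and its parameters are illustrative, and in a full pipeline the fitted scores would serve as training targets for an image model rather than being the final output.

```python
import math


def fit_bradley_terry(n_items, comparisons, lr=0.1, epochs=500, l2=0.01):
    """Fit one latent score per image from (winner, loser) index pairs.

    Bradley-Terry model: P(i judged better than j) = sigmoid(s_i - s_j).
    Runs plain gradient ascent on the log-likelihood with a small L2 penalty,
    which keeps scores finite when one image wins every comparison.
    Judgments from several experts can simply be pooled into `comparisons`.
    """
    scores = [0.0] * n_items
    for _ in range(epochs):
        grad = [-l2 * s for s in scores]
        for winner, loser in comparisons:
            p = 1.0 / (1.0 + math.exp(-(scores[winner] - scores[loser])))
            grad[winner] += 1.0 - p  # d/ds_w of log sigmoid(s_w - s_l)
            grad[loser] -= 1.0 - p
        scores = [s + lr * g for s, g in zip(scores, grad)]
    return scores


# Toy judgments: image 2 looks more developed than 1, and 1 more than 0.
scores = fit_bradley_terry(3, [(2, 1), (1, 0), (2, 0)])
print(sorted(range(3), key=lambda i: scores[i], reverse=True))  # [2, 1, 0]
```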

< Image 1. Nightlight satellite images of North Korea (Top-left: Background photo provided by NASA's Earth Observatory). South Korea appears brightly lit compared to North Korea, which is mostly dark except for Pyongyang. In contrast, the model developed by the research team uses daytime satellite imagery to produce more detailed economic predictions for North Korea (top-right) and five Asian countries (Bottom: Background photo from Google Earth). >

The research was led by an interdisciplinary team of computer scientists, economists, and a geographer from KAIST & IBS (Donghyun Ahn, Meeyoung Cha, Jihee Kim), Sogang University (Hyunjoo Yang), HKUST (Sangyoon Park), and NUS (Jeasurk Yang). Dr Charles Axelsson, Associate Editor at Nature Communications, handled this paper during the peer review process at the journal.

The research team found that the scores correlated strongly with traditional socio-economic metrics such as population density, employment, and the number of businesses. This demonstrates the wide applicability and scalability of the approach, particularly in data-scarce countries. Furthermore, the model's strength lies in its ability to detect annual changes in economic conditions at a finer geospatial level without using any survey data (see Image 2).
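Checking model scores against a reference metric could look like the sketch below, which computes Spearman's rank correlation in plain Python. The release does not say which correlation measure was used; Spearman is an assumption here because it only compares orderings, and the inputs in the usage line are toy numbers, not data from the study.

```python
import math


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j share ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors.

    Undefined (ZeroDivisionError) if either input is constant.
    """
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / math.sqrt(vx * vy)


# Toy inputs: model scores for four grid cells vs. a reference metric.
print(spearman([0.1, 0.5, 0.9, 1.2], [10, 40, 35, 80]))  # 0.8
```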

< Image 2. Differences in satellite imagery and economic scores in North Korea between 2016 and 2019. Significant development was found in the Wonsan Kalma area (top), one of the tourist development zones, but no changes were observed in the Wiwon Industrial Development Zone (bottom). (Background photo: Sentinel-2 satellite imagery provided by the European Space Agency (ESA)). >

This model would be especially valuable for rapidly monitoring the progress of Sustainable Development Goals such as reducing poverty and promoting more equitable and sustainable growth on an international scale. The model can also be adapted to measure various social and environmental indicators. For example, it can be trained to identify regions with high vulnerability to climate change and disasters to provide timely guidance on disaster relief efforts.

As an example, the researchers explored how North Korea changed before and after the United Nations sanctions against the country. By applying the model to satellite images of North Korea from 2016 and 2019, they identified three key trends in the country's economic development over that period. First, economic growth became more concentrated in Pyongyang and other major cities, widening the urban-rural divide. Second, satellite imagery revealed significant changes, such as new building construction, in areas designated for tourism and economic development. Third, traditional industrial and export development zones showed relatively minor changes.
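Year-over-year comparison at the grid level can be sketched as a per-cell difference of scores, plus a simple concentration measure. This is a hypothetical illustration: the grid IDs, the threshold, and the `growth_share` measure are assumptions made for the example, not the researchers' analysis, and the values are made up.

```python
def score_changes(scores_then, scores_now, threshold):
    """Per-grid score change for cells present in both years.

    Returns {grid_id: delta} for cells whose score moved by at least
    `threshold`; positive deltas mean the cell scored higher in the later year.
    """
    changes = {}
    for grid_id in scores_then.keys() & scores_now.keys():
        delta = scores_now[grid_id] - scores_then[grid_id]
        if abs(delta) >= threshold:
            changes[grid_id] = delta
    return changes


def growth_share(changes, group):
    """Fraction of total positive change that falls inside `group` (a set of grid IDs)."""
    gains = {g: d for g, d in changes.items() if d > 0}
    total = sum(gains.values())
    if total == 0:
        return 0.0
    return sum(d for g, d in gains.items() if g in group) / total


# Hypothetical grid IDs and scores, for illustration only.
year1 = {"city_A": 5.0, "rural_B": 2.0, "zone_C": 1.0}
year2 = {"city_A": 6.5, "rural_B": 2.1, "zone_C": 3.0}
changes = score_changes(year1, year2, threshold=0.5)
print(sorted(changes.items()))                      # [('city_A', 1.5), ('zone_C', 2.0)]
print(round(growth_share(changes, {"city_A"}), 3))  # 0.429
```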

Meeyoung Cha, a data scientist on the team, explained, "This is an important interdisciplinary effort to address global challenges like poverty. We plan to apply our AI algorithm to other international issues, such as monitoring carbon emissions, disaster damage detection, and the impact of climate change."

An economist on the research team, Jihee Kim, commented that this approach would enable detailed, low-cost examination of economic conditions in the developing world, reducing data disparities between developed and developing nations. She further emphasized that this is essential because many public policies, whether aimed at growth, equality, or sustainability, require economic measurements to achieve their goals.

The research team has made the source code publicly available via GitHub and plans to continue improving the technology, applying it to new satellite images updated annually. The results of this study, with Ph.D. candidate Donghyun Ahn at KAIST and Ph.D. candidate Jeasurk Yang at NUS as joint first authors, were published in Nature Communications under the title "A human-machine collaborative approach measures economic development using satellite imagery."

< Photos of the main authors. 1. Donghyun Ahn, PhD candidate at KAIST School of Computing 2. Jeasurk Yang, PhD candidate at the Department of Geography of National University of Singapore 3. Meeyoung Cha, Professor of KAIST School of Computing and CI at IBS 4. Jihee Kim, Professor of KAIST School of Business and Technology Management 5. Sangyoon Park, Professor of the Division of Social Science at Hong Kong University of Science and Technology 6. Hyunjoo Yang, Professor of the Department of Economics at Sogang University >

2023.12.07

KAIST-UCSD researchers build an enzyme discovering AI

- A joint research team led by Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering and Professor Bernhard Palsson of UCSD developed ‘DeepECtransformer’, an artificial intelligence that predicts the Enzyme Commission (EC) numbers of proteins.

- The AI can predict a total of 5,360 Enzyme Commission (EC) numbers, allowing it to identify enzymes that have not yet been discovered.

- As a core technology for analyzing the metabolic network of a genome, it is expected to be used in developing microbial cell factories that produce environmentally friendly chemicals.

Although E. coli is one of the most studied organisms, the functions of 30% of its proteins have not yet been clearly determined. Using the AI, the researchers identified 464 types of enzymes among these proteins of unknown function, and went on to verify the predictions for three of these proteins through in vitro enzyme assays.

KAIST (President Kwang-Hyung Lee) announced on the 24th that a joint research team comprising Gi Bae Kim, Ji Yeon Kim, Dr. Jong An Lee and Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering at KAIST, and Dr. Charles J. Norsigian and Professor Bernhard O. Palsson of the Department of Bioengineering at UCSD, has developed DeepECtransformer, an artificial intelligence that predicts enzyme functions from protein sequences, and has used it to build a prediction system that identifies enzyme functions quickly and accurately.

Enzymes are proteins that catalyze biological reactions, and identifying the function of each enzyme is essential to understanding the chemical reactions that take place in living organisms and the metabolic characteristics of those organisms. The Enzyme Commission (EC) number is an enzyme function classification system designed by the International Union of Biochemistry and Molecular Biology. To understand the metabolic characteristics of various organisms, a technology is needed that can quickly identify the enzymes encoded in a genome and their EC numbers.
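An EC number has four dot-separated levels (class, subclass, sub-subclass, and serial number; for example, 1.1.1.1 is alcohol dehydrogenase), and a dash marks a level left unassigned. The sketch below parses that structure and counts how many leading levels two annotations share, one way to grade a prediction that is only partly right. The helper names are hypothetical, and levels are kept as strings to avoid assumptions about their format.

```python
from typing import Optional, Tuple


def parse_ec(ec: str) -> Tuple[Optional[str], ...]:
    """Split an EC number such as '1.1.1.1' into its four levels.

    A '-' level (e.g. '2.7.11.-') is returned as None. Levels are kept as
    strings so no assumption is made about their exact format.
    """
    parts = ec.strip().split(".")
    if len(parts) != 4:
        raise ValueError(f"expected four dot-separated levels, got {ec!r}")
    return tuple(None if part == "-" else part for part in parts)


def shared_levels(predicted: str, reference: str) -> int:
    """Number of leading EC levels (0-4) on which two annotations agree."""
    depth = 0
    for p, r in zip(parse_ec(predicted), parse_ec(reference)):
        if p is None or r is None or p != r:
            break
        depth += 1
    return depth


print(parse_ec("2.7.11.-"))                 # ('2', '7', '11', None)
print(shared_levels("1.1.1.1", "1.1.1.2"))  # 3
```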

Various deep learning methods have been developed to analyze the features of biological sequences, including protein function prediction, but most of them suffer from the black-box problem: the AI's inference process cannot be interpreted. Various AI-based systems for enzyme function prediction have also been reported, but they either do not solve this black-box problem or cannot interpret the reasoning process at a fine-grained level (e.g., the level of individual amino acid residues in the enzyme sequence).

The joint team developed DeepECtransformer, an AI that uses deep learning and a protein homology analysis module to predict the enzyme function of a given protein sequence. To better capture the features of protein sequences, the team additionally used the transformer architecture, which is widely used in natural language processing, to extract features important for enzyme function in the context of the entire protein sequence, enabling accurate prediction of the enzyme's EC number. DeepECtransformer can predict a total of 5,360 EC numbers.
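Because a protein can carry more than one EC number, EC prediction is naturally framed as multi-label classification with one probability per EC class. The sketch below shows one hypothetical way such output could be combined with a homology module: keep every EC number at or above a threshold, and fall back to the best homology hit only when the network predicts nothing. The threshold and the fallback order are assumptions; the release does not describe how DeepECtransformer combines its two components.

```python
def assign_ec_numbers(probs, threshold=0.5, homology_hit=None):
    """Combine multi-label network output with a homology fallback (hypothetical rule).

    probs: {ec_number: probability}, one independent probability per EC class.
    homology_hit: EC number of the best homologous sequence, or None.
    Returns (ec_numbers, source).
    """
    predicted = sorted(ec for ec, p in probs.items() if p >= threshold)
    if predicted:
        return predicted, "neural network"
    if homology_hit is not None:
        return [homology_hit], "homology"
    return [], "none"


print(assign_ec_numbers({"1.1.1.1": 0.92, "1.1.1.2": 0.61, "2.7.1.1": 0.08}))
# (['1.1.1.1', '1.1.1.2'], 'neural network')
print(assign_ec_numbers({"1.1.1.1": 0.20}, homology_hit="3.2.1.4"))
# (['3.2.1.4'], 'homology')
```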

The joint team further analyzed the transformer architecture to understand the inference process of DeepECtransformer, and found that the AI uses information on catalytic active sites and/or cofactor binding sites, which are important for enzyme function. This analysis of DeepECtransformer's black box confirmed that the AI had learned on its own to identify features important for enzyme function during training.
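Inspecting which residues a transformer attends to is commonly done by reading its attention weights. The sketch below computes scaled dot-product attention, softmax(q·k/√d), over toy query and key vectors and ranks residue positions by the total attention they receive. It is a generic illustration, not the authors' analysis; in a trained model the queries and keys would come from learned projections of residue embeddings, and a real analysis may focus on particular layers or heads.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(queries, keys):
    """Scaled dot-product attention: A[i][j] = softmax over j of (q_i . k_j) / sqrt(d)."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    return [
        softmax([scale * sum(q * k for q, k in zip(q_i, k_j)) for k_j in keys])
        for q_i in queries
    ]


def top_attended_residues(weights, k):
    """Rank residue positions by the total attention they receive (column sums of A)."""
    n = len(weights[0])
    received = [sum(row[j] for row in weights) for j in range(n)]
    return sorted(range(n), key=lambda j: received[j], reverse=True)[:k]


# Toy 4-residue sequence with 2-dimensional vectors; residue 1's key aligns with every query.
queries = [[1.0, 0.0] for _ in range(4)]
keys = [[0.0, 0.0], [3.0, 0.0], [0.0, 0.0], [0.0, 1.0]]
weights = attention_weights(queries, keys)
print(top_attended_residues(weights, k=1))  # [1]
```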

"By utilizing the prediction system we developed, we were able to predict the functions of enzymes that had not yet been identified and verify them experimentally," said Gi Bae Kim, the first author of the paper. "By using DeepECtransformer to identify previously unknown enzymes in living organisms, we will be able to more accurately analyze various facets involved in the metabolic processes of organisms, such as the enzymes needed to biosynthesize various useful compounds or the enzymes needed to biodegrade plastics," he added.

"DeepECtransformer, which quickly and accurately predicts enzyme functions, is a key technology in functional genomics, enabling us to analyze the function of entire enzymes at the systems level," said Professor Sang Yup Lee. He added, “We will be able to use it to develop eco-friendly microbial factories based on comprehensive genome-scale metabolic models, potentially minimizing missing information of metabolism.”

The joint team’s work on DeepECtransformer is described in the paper titled "Functional annotation of enzyme-encoding genes using deep learning with transformer layers", written by Gi Bae Kim, Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering of KAIST and their colleagues. The peer-reviewed paper was published in Nature Communications on November 14.

This research was supported by the “Development of next-generation biorefinery platform technologies for leading bio-based chemicals industry” project (2022M3J5A1056072) and the “Development of platform technologies of microbial cell factories for the next-generation biorefineries” project (2022M3J5A1056117) of the National Research Foundation of Korea, funded by the Korean Ministry of Science and ICT (Project Leader: Distinguished Professor Sang Yup Lee, KAIST).

< Figure 1. The structure of DeepECtransformer's artificial neural network >

2023.11.24

NYU-KAIST Global AI & Digital Governance Conference Held

< Photo 1. Opening of NYU-KAIST Global AI & Digital Governance Conference >

With Minister of Science and ICT Jong-ho Lee, NYU President Linda G. Mills, and KAIST President Kwang Hyung Lee in attendance, KAIST co-hosted the NYU-KAIST Global AI & Digital Governance Conference at the Paulson Center of New York University (NYU) in New York City, USA, on September 21 at 9:30 pm.

At the conference, KAIST and NYU discussed the direction and policies for ‘global AI and digital governance’ with up to 300 participants, including scholars, professors, and students working in AI and digitalization from Korea, the United States, and other countries. The conference served as an international forum to explore the directions AI and digital technology should take in the future and to build consensus on regulation.

Following a welcoming address by KAIST President Kwang Hyung Lee and a congratulatory message from the Minister of Science and ICT, Jong-ho Lee, a panel discussion was held, moderated by Professor Matthew Liao, a graduate of Princeton University and the University of Oxford, who is currently a professor at NYU and director of the Center for Bioethics at the NYU School of Global Public Health.

Six prominent scholars took part in the panel discussion. The panelists included Professor Kyung-hyun Cho of NYU's Applied Mathematics and Data Science Center, a KAIST graduate who ranks among the world's leading researchers in AI language models, and Professor Jong Chul Ye, Director of the Promotion Council for Digital Health at KAIST, who leads innovative research in medical AI in collaboration with major hospitals in Korea and abroad. They were joined by Professor Luciano Floridi, a founding member of the Yale University Center for Digital Ethics; Professor Shannon Vallor, the Baillie Gifford Professor in the Ethics of Data and Artificial Intelligence at the University of Edinburgh in the UK; Professor Stefaan Verhulst, Co-Founder and Director of GovLab’s Data Program at NYU’s Tandon School of Engineering; and Professor Urs Gasser, who is in charge of public policy, governance and innovative technology at the Technical University of Munich.

Professor Matthew Liao from NYU led the discussion on a range of topics: ways to regulate AI and digital technologies; concerns that deep learning technology developed for medical purposes could be used in warfare; the scope of AI scientists' responsibility for ensuring that AI is used only for benign purposes; the effects of external regulation on AI model developers and the research they pursue; and the lessons that can be learned from regulation in other fields.

During the panel discussion, participants exchanged ideas about a system of standards that could harmonize digital development with regulation and social ethics. Digital transformation is accelerating technological development at a global level, and while these advances bring economic vitality, there is a looming concern that they may create digital divides and problems such as the manipulation of public opinion. Professor Jong Chul Ye of KAIST (Director of the Promotion Council for Digital Health), in particular, emphasized the importance of finding a balance that does not hinder advancement, rather than enforcing strict regulations.

< Photo 2. Panel Discussion in Session at NYU-KAIST Global AI & Digital Governance Conference >

KAIST President Kwang Hyung Lee explained, “At the Digital Governance Forum we had last October, we focused on exploring new governance to solve digital challenges in the time of global digital transition, and this year’s main focus was on regulations.”

“This conference served as an opportunity of immense value as we came to understand that appropriate regulations can be a motivation to spur further developments rather than a hurdle when it comes to technological advancements, and that it is important for us to clearly understand artificial intelligence and consider what should and can be regulated when we are to set regulations on artificial intelligence,” he continued.

Earlier, KAIST signed a cooperation agreement with NYU in June last year to build a joint campus, and last September it held a plaque presentation ceremony for the KAIST-NYU Joint Campus to promote joint research between the two universities. KAIST is currently conducting joint research with NYU in nine fields, including AI and digital research. The KAIST-NYU Joint Campus was conceived with the goal of building an innovative sandbox campus centered on science, technology, engineering, and mathematics (STEM), combining NYU's strengths in the humanities and arts, basic science, and convergence research with KAIST's science and technology.

KAIST has contributed to the development of Korea's industry and economy through technological innovation, helping transform the country into an innovative nation with scientific and technological prowess. KAIST will now pursue an anchor/base strategy to raise awareness of KAIST in New York by establishing a KAIST campus within NYU, in the heart of New York, through the Joint Campus.

2023.09.22

KAIST holds its first ‘KAIST Tech Fair’ in New York, USA

< Photo 1. 2023 KAIST Tech Fair in New York >

KAIST (President Kwang-Hyung Lee) announced on the 11th that it will hold the ‘2023 KAIST Tech Fair in New York’ at the Kimmel Center of New York University in Manhattan, USA, on the 22nd of this month. The event is designed as a starting point for KAIST to expand its startup ecosystem onto the global stage, with the aim of attracting investment and securing global customers in New York by demonstrating the technological value of KAIST startups on site.

< Photo 2. President Kwang Hyung Lee at the 2023 KAIST Tech Fair in New York >

KAIST has held briefing sessions on technology transfer in Korea every year since 2018, and this year marks the first time it has held a tech fair overseas for global companies.

The KAIST Institute of Technology Value Creation (Director Sung-Yool Choi) has spent the past six months preparing for this event with the Korea International Trade Association (KITA, CEO Christopher Koo), surveying potential customers and investment companies to conduct market analysis.

Among the companies founded on technologies developed by KAIST faculty, students, and their partners, seven were selected and matched with overseas companies that expressed interest in their technologies. Multinational companies in the fields of IT, artificial intelligence, environment, logistics, distribution, and retail are participating as demand agencies and, as of September, are testing the marketability of the startups' technologies.

Daim Research, founded by Professor Young Jae Jang of the Department of Industrial and Systems Engineering, is a company specializing in smart factory automation solutions and is knocking on the door of the global market with a platform technology optimized for automated logistics systems.

< Photo 3. Presentation by Professor Young Jae Jang for DAIM Research >

Its ‘collaborative intelligence’ solution maximizes work productivity by having multiple robots in industrial settings collaborate with one another. A key strength is that logistics robots equipped with AI reinforcement learning can adapt to process and environmental changes on their own, minimizing maintenance costs, and that the system can achieve excellent performance even with a small amount of data when combined with the digital twin technology the company developed in-house.

A student startup, ‘Aniai’, is entering the US market, the home of hamburgers, with hamburger patty automation equipment and solutions. Aniai is a robot kitchen startup founded by CEO Gunpil Hwang, a graduate of KAIST’s School of Electrical Engineering, who brought together experts in robot control, design, artificial intelligence, and cognitive technology to develop technology that cooks hamburger patties automatically.

At the touch of a button, both sides of a patty are cooked simultaneously according to preset conditions, ensuring consistent taste and quality. Because it can cook about 200 dishes an hour, the technology is attracting attention not only as a solution to manpower shortages but also as a way to accelerate the digital transformation of the restaurant industry.

Also participating in the tech fair at New York University's Kimmel Center on the 22nd are the following startups, which are currently undergoing market verification in the U.S.: ▴ ‘TheWaveTalk’, which developed a water quality management system that can measure foreign substances and metal ions, based on original technology transferred from KAIST; ▴ ‘VIRNECT’, which helps workers improve their skills by managing industrial sites remotely using XR*; ▴ ‘Datumo’, which provides a solution that helps process and analyze big data for artificial intelligence; ▴ ‘VESSL AI’, which provides a solution to eliminate the overhead** of machine learning systems; and ▴ ‘DolbomDream’, which developed an inflatable vest that supports the psychological stability of people with developmental disabilities.

* XR (eXtended Reality): Ultra-realistic technology that enhances immersion by utilizing augmented reality, virtual reality, and mixed reality technologies

** Overhead: Additional time required for stable processing of the program

In addition, seven more companies will join them: two (Plasmapp and NotaAI) participating in Daejeon City's D-Unicorn program, two (Enget and ILIAS Biologics) receiving support through the Ministry of SMEs and Startups' Scale-Up TIPS program, and three (WiPowerOne, IDK Lab, and Artificial Photosynthesis Lab) working toward the sustainable development goals. In total, 14 KAIST startups will hold a corporate information session for about 100 invited guests from global companies and venture capital firms.

< Photo 4. Presentation for AP Lab >

Prior to the event, participating startups will visit the New York Economic Development Corporation and large law firms to receive advice on U.S. government support programs and on their attempts to enter the U.S. market. The participating companies also plan to visit an investment institution that supports startups pursuing sustainable development goals, as well as the Leslie eLab, New York University's one-stop startup support space, to lay the foundation for KAIST's leap forward in global technology commercialization.

< Photo 5. Sung-Yool Choi, the Director of KAIST Institute of Technology Value Creation (left) at the 2023 KAIST Tech Fair in New York with the key participants >

Sung-Yool Choi, the Director of the KAIST Institute of Technology Value Creation, said, “KAIST prepared this event to realize its vision of being a leading university in creating global value.” He added, “We hope that our startups founded on KAIST technology will successfully complete market verification, securing global demand and attracting investment for their endeavors.”

2023.09.11