School of Electrical Engineering

Professor Shinhyun Choi’s team selected for Nature Communications Editors’ Highlights

[ From left, Ph.D. candidates See-On Park and Hakcheon Jeong, along with Master's student Jong-Yong Park and Professor Shinhyun Choi ]

A research team led by Professor Shinhyun Choi of the School of Electrical Engineering, including See-On Park, Hakcheon Jeong, and Jong-Yong Park, developed a highly reliable variable-resistor (memristor) array that mimics the behavior of neurons using a metal oxide layer with an oxygen concentration gradient, and published the work in Nature Communications. The study was selected for the journal's Editors' Highlights and featured on the main page of its website.
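
Artificial-neuron devices such as this one are often described in terms of the leaky integrate-and-fire (LIF) neuron model: the neuron integrates incoming current, leaks charge over time, and fires a spike once a threshold is crossed. The sketch below simulates that abstract model only. It is not the authors' device model, and the function name `simulate_lif_neuron`, the time constant, and the threshold are illustrative assumptions.

```python
from typing import List, Sequence


def simulate_lif_neuron(input_current: Sequence[float], dt: float = 1e-3,
                        tau: float = 20e-3, threshold: float = 1.0,
                        v_reset: float = 0.0) -> List[int]:
    """Simulate a leaky integrate-and-fire neuron (hypothetical parameters).

    Returns the indices of the time steps at which the neuron fired.
    """
    v = v_reset
    spikes: List[int] = []
    for step, i_in in enumerate(input_current):
        # Forward-Euler update: the potential leaks toward 0 and integrates the input.
        v += dt / tau * (-v + i_in)
        if v >= threshold:
            spikes.append(step)
            v = v_reset  # fire, then reset the membrane potential
    return spikes


if __name__ == "__main__":
    for level in (0.5, 2.0, 3.0):
        n = len(simulate_lif_neuron([level] * 200))
        print(f"constant input {level}: {n} spikes in 200 steps")
```

With these defaults, a constant input of 0.5 never reaches the threshold and produces no spikes, while larger constant inputs fire at higher rates, which is the rate-coding behavior that neuromorphic hardware aims to reproduce.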

Link : https://www.nature.com/ncomms/

[ Figure 1. The featured image on the main page of the Nature Communications website introducing the research by Professor Choi's team on the memristor for artificial neurons ]

Paper title: Experimental demonstration of highly reliable dynamic memristor for artificial neuron and neuromorphic computing.

( https://doi.org/10.1038/s41467-022-30539-6 )

At KAIST, their research was introduced in the Fall 2022 issue of Breakthroughs, the biannual newsletter published by the KAIST College of Engineering.

This research was conducted with support from the Samsung Research Funding & Incubation Center of Samsung Electronics.

2022.11.01

A New Family of Ducks Joins the Feathery KAISTians

In October of this year, KAIST signed an 'Agreement for the Training Program for AI Semiconductor Designers' with Samsung Electronics to conduct joint research and actively train master's and doctoral researchers in the field of semiconductors designed specifically for AI. To celebrate this agreement for mutually beneficial cooperation, Samsung Electronics gifted a set of five ducks to KAIST.

The Duck Pond and its geese have long been famous mascots of KAIST.

It all started in 2000, when current President Kwang Hyung Lee, then a professor in the Department of Bio and Brain Engineering, bought a flock of ducks at Yuseong Market and began caring for them around the Carillon pond on campus. Although the ducks came and went and were eventually replaced by a flock of geese, for more than 20 years these feathery KAISTians have caught the eyes of passersby and been loved by campus members and visitors alike.

A representative of Samsung Electronics (SEC) said that the new breed of ducks carries SEC's hope that, under the combined care of KAIST and SEC, processing-in-memory (PIM) semiconductor technology will grow into a super-gap technology that turns heads around the world as a mascot of Korea's technological prowess.

Will the ducks take a liking to KAIST? We will keep you posted on how they are doing!

2022.11.01

Yuji Roh Awarded 2022 Microsoft Research PhD Fellowship

KAIST PhD candidate Yuji Roh of the School of Electrical Engineering (advisor: Prof. Steven Euijong Whang) was selected as a recipient of the 2022 Microsoft Research PhD Fellowship.

< KAIST PhD candidate Yuji Roh (advisor: Prof. Steven Euijong Whang) >

The Microsoft Research PhD Fellowship is a scholarship program that recognizes outstanding graduate students for exceptional and innovative research in computer science and related fields. This year, 36 students from around the world received the fellowship, and Yuji Roh of KAIST EE is the only recipient from a Korean university. Each fellow receives a $10,000 scholarship and an opportunity to intern at Microsoft under the guidance of an experienced researcher.

Yuji Roh was named a fellow in the field of “Machine Learning” for her outstanding achievements in Trustworthy AI. Her research highlights include designing a state-of-the-art fair training framework based on batch selection and developing novel algorithms for training that is both fair and robust. Her work has been presented at top machine learning conferences including ICML, ICLR, and NeurIPS. She also co-presented a tutorial on Trustworthy AI at ACM SIGKDD, a top data mining conference. She is currently interning with the NVIDIA Research AI Algorithms Group, developing large-scale, real-world fair AI frameworks.
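
To give a feel for what "fair training using batch selection" can mean, here is a deliberately simplified sketch: during training, the sampler shifts some probability toward whichever sensitive group currently has the higher loss, so later minibatches contain more of that group. This is an illustration of the general idea under that assumption, not the published algorithm; the function names, group labels, and the fixed `step` size are hypothetical.

```python
import random
from typing import Dict, List, Sequence


def update_sampling_weights(weights: Dict[str, float],
                            group_losses: Dict[str, float],
                            step: float = 0.05) -> Dict[str, float]:
    """Move up to `step` of sampling probability from the best-off group
    (lowest loss) to the worst-off group (highest loss)."""
    worst = max(group_losses, key=group_losses.get)
    best = min(group_losses, key=group_losses.get)
    new = dict(weights)
    if worst == best:
        return new
    delta = min(step, new[best])  # never let a probability go negative
    new[worst] += delta
    new[best] -= delta
    return new


def sample_batch(indices_by_group: Dict[str, Sequence[int]],
                 weights: Dict[str, float],
                 batch_size: int,
                 rng: random.Random) -> List[int]:
    """Draw a minibatch whose expected group mix follows `weights`."""
    groups = list(weights)
    picked = rng.choices(groups, weights=[weights[g] for g in groups], k=batch_size)
    return [rng.choice(indices_by_group[g]) for g in picked]


if __name__ == "__main__":
    w = {"group_a": 0.5, "group_b": 0.5}
    w = update_sampling_weights(w, {"group_a": 0.9, "group_b": 0.3})
    batch = sample_batch({"group_a": [0, 1, 2], "group_b": [3, 4, 5]}, w, 8, random.Random(0))
    print(w, batch)
```

In a real training loop, the per-group losses would be recomputed each epoch and the weights updated repeatedly, so the batch composition keeps adapting until the groups' losses are closer together.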

The list of fellowship recipients and the interview videos are available on the Microsoft website and YouTube.

The list of recipients: https://www.microsoft.com/en-us/research/academic-program/phd-fellowship/2022-recipients/

Interview (Global): https://www.youtube.com/watch?v=T4Q-XwOOoJc

Interview (Asia): https://www.youtube.com/watch?v=qwq3R1XU8UE

[Highlighted research achievements by Yuji Roh: Fair batch selection framework]

[Highlighted research achievements by Yuji Roh: Fair and robust training framework]

2022.10.28

Shaping the AI Semiconductor Ecosystem

- As the convergence of AI and semiconductors is highlighted as a national strategic technology, KAIST's achievements in these fields, built on top-class education and research capabilities, set it far apart from peer universities around the world.

As AI semiconductors (semiconductor systems designed specifically for the highly complex computation that AI requires for learning and inference) stand out as a national strategic technology, the related achievements of KAIST, headed by President Kwang Hyung Lee, are also attracting attention. Korea's Ministry of Science and ICT (MSIT) launched a program last year to support the advancement of AI semiconductors, with the goal of capturing 20% of the global AI semiconductor market by 2030. This year, following industry-university-research discussions, the Ministry expanded the program with an additional 1.2 trillion won of investment over five years through the 'Support Plan for AI Semiconductor Industry Promotion'. Accordingly, major universities have begun putting together programs to train students in AI semiconductor expertise.

KAIST has accumulated top-notch educational and research capabilities in the two core fields underlying AI semiconductors: semiconductors and artificial intelligence. In semiconductors, the International Solid-State Circuits Conference (ISSCC) is the world's most prestigious conference on integrated circuit design. Established in 1954, with more than 60% of its participants coming from companies including Samsung, Qualcomm, TSMC, and Intel, the conference naturally emphasizes the practical, industrial value of research, earning it the nickname ‘Semiconductor Design Olympics’. At this long-standing and influential conference, KAIST has stood out among participating universities over the past 17 years, leading world-class schools such as the Massachusetts Institute of Technology (MIT) and Stanford in the number of accepted papers.

Number of papers presented at the International Solid-State Circuits Conference (ISSCC) in 2022, by country and by institution

Number of papers presented by universities at the International Solid-State Circuits Conference (ISSCC), 2006-2022

In terms of accepted ISSCC papers, KAIST has ranked among the top two universities every year since 2006. Averaged over the past 17 years, KAIST stands out as an unparalleled leader: from 2006 through 2022, it had an average of 8.4 accepted papers per year, almost double that of MIT (4.6) and more than double that of UCLA (3.6). In Korea, it ranks second overall, after Samsung, the undisputed leader in semiconductor design. This year, KAIST also ranked first among universities at the Symposium on VLSI Technology and Circuits, an integrated-circuit conference that rivals the ISSCC.
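
The comparison with MIT and UCLA follows from simple ratios of the quoted 2006-2022 averages (arithmetic only, no additional data):

```latex
\frac{8.4}{4.6} \approx 1.83 \quad (\text{KAIST vs. MIT}), \qquad
\frac{8.4}{3.6} \approx 2.33 \quad (\text{KAIST vs. UCLA})
```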

Number of university papers accepted at the 2022 Symposium on VLSI Technology and Circuits

With KAIST researchers presenting new technologies at the frontiers of every key area of the semiconductor industry, the quality of KAIST research is also maintained at the highest level. Professor Myoungsoo Jung's research team in the School of Electrical Engineering is developing energy-efficient heterogeneous computing environments in response to the industry's demand for high performance at low power. In materials, a research team led by Professor Byong-Guk Park of the Department of Materials Science and Engineering developed spin-orbit torque (SOT)-based magnetic RAM (MRAM) that operates at least 10 times faster than conventional memories, suggesting a way to overcome the limitations of the existing von Neumann architecture.

Beyond solving major challenges in today's semiconductor industry, KAIST is also actively developing the new technologies needed to lead emerging fields. In quantum computing, which is drawing attention as a next-generation computing technology for leadership in cryptography and nonlinear computation, Professor Sanghyeon Kim's research team in the School of Electrical Engineering presented the world's first 3D-integrated quantum computing system at the 2021 VLSI Symposium. In neuromorphic computing, which is expected to bring remarkable advances in artificial intelligence by applying principles of neuroscience, Professor Shinhyun Choi's research team in the School of Electrical Engineering is developing a next-generation memristor that mimics neurons.

Number of papers at the International Conference on Machine Learning (ICML) and the Conference on Neural Information Processing Systems (NeurIPS), two of the world’s most prestigious conferences in artificial intelligence (KAIST: 6th in the world and 1st in Asia in 2020)

KAIST's artificial intelligence research has also grown rapidly. Based on the number of papers at the International Conference on Machine Learning (ICML) and the Conference on Neural Information Processing Systems (NeurIPS), two of the world's most prestigious AI conferences, KAIST ranked 6th in the world and 1st in Asia in 2020. Its ranking rose steadily from 37th in 2012 to 6th in 2020, climbing 31 places in eight years. In 2021, KAIST presented 129 papers, about 40% of all Korean papers at 11 top AI conferences. Thanks to KAIST's efforts, Korea ranked sixth in 2021 in the number of papers at major global AI conferences, after the United States, China, the United Kingdom, Canada, and Germany.

Number of papers from Korea (and by KAIST) published at 11 top conferences in the field of artificial intelligence in 2021

KAIST's AI research is also at the forefront in substance. Professor Hoi-Jun Yoo's research team in the School of Electrical Engineering addressed the shortcomings of “edge networks” by implementing real-time AI learning networks on mobile devices. Realizing artificial intelligence requires accumulating data and performing a huge amount of computation. Typically, a high-performance server handles the massive computation, while user terminals in the “edge network” collect data and perform only simple computations. Professor Yoo's research greatly increased AI processing speed and performance by assigning the learning task to the user terminals as well.
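
The difference between server-only training and on-device learning can be illustrated with a toy example: instead of uploading each new sample to a server for retraining, the terminal updates its own model immediately with a stochastic-gradient step. The sketch below assumes a one-variable linear model and plain SGD; the class `EdgeLearner` and its learning rate are hypothetical and say nothing about how Professor Yoo's hardware implements learning.

```python
from typing import Tuple


def sgd_step(w: float, b: float, x: float, y: float, lr: float) -> Tuple[float, float]:
    """One stochastic-gradient step on squared error for the model y = w*x + b."""
    err = (w * x + b) - y
    return w - lr * err * x, b - lr * err


class EdgeLearner:
    """Hypothetical edge device that keeps learning from the data it collects."""

    def __init__(self, w: float = 0.0, b: float = 0.0, lr: float = 0.1) -> None:
        self.w, self.b, self.lr = w, b, lr

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def observe(self, x: float, y: float) -> None:
        # Learn locally instead of sending (x, y) to a server for retraining.
        self.w, self.b = sgd_step(self.w, self.b, x, y, self.lr)


if __name__ == "__main__":
    device = EdgeLearner()
    for _ in range(200):
        for x in (0.0, 0.5, 1.0):
            device.observe(x, 2 * x + 1)  # locally observed data follows y = 2x + 1
    print(f"w={device.w:.3f}, b={device.b:.3f}")
```

After a few hundred local updates the device's parameters approach the underlying relationship (w close to 2, b close to 1) without any data leaving the terminal.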

In June, a research team led by Professor Min-Soo Kim of the School of Computing presented a solution essential for processing super-scale AI models. The super-scale machine learning system developed by the team is expected to run up to 8.8 times faster than Google's TensorFlow or IBM's SystemDS, which are widely used in industry.

KAIST is also achieving remarkable results in AI semiconductors themselves. In 2020, Professor Minsoo Rhu's research team in the School of Electrical Engineering developed the world's first AI semiconductor optimized for AI recommendation systems. Because recommendation systems must handle vast amounts of content and user information, they quickly hit a data-transfer bottleneck when run on general-purpose AI systems. Using processing-in-memory (PIM) technology, Professor Rhu's team developed a semiconductor that runs 21 times faster than existing systems. PIM improves efficiency by performing computations inside RAM (random-access memory), which is usually used only to store data temporarily just before they are processed. Once PIM technology reaches the market, it is expected to drastically strengthen the competitiveness of Korean companies in the AI semiconductor market, since they already hold great strength in memory.
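
A toy model of why computing inside memory helps recommendation workloads: assume, as is common in recommendation models, that each query looks up many rows of a large embedding table and sums them into one vector. On a conventional path every looked-up row crosses the memory bus; on a PIM-style path the sum is computed next to the memory and only the result moves. The function names and table sizes below are hypothetical, and counting transferred values is a simplification, not a description of Professor Rhu's design.

```python
from typing import List, Sequence, Tuple


def pool_on_host(table: Sequence[Sequence[float]],
                 ids: Sequence[int]) -> Tuple[List[float], int]:
    """Conventional path: every looked-up row is moved to the processor, then summed."""
    rows = [list(table[i]) for i in ids]
    values_moved = sum(len(row) for row in rows)
    pooled = [sum(column) for column in zip(*rows)]
    return pooled, values_moved


def pool_in_memory(table: Sequence[Sequence[float]],
                   ids: Sequence[int]) -> Tuple[List[float], int]:
    """PIM-style path: rows are summed next to the memory; only the pooled vector moves."""
    dim = len(table[0])
    pooled = [0.0] * dim
    for i in ids:
        for j, value in enumerate(table[i]):
            pooled[j] += value
    return pooled, dim


if __name__ == "__main__":
    table = [[float(r + c) for c in range(16)] for r in range(1000)]  # 1000 x 16 table
    ids = list(range(0, 800, 10))                                     # 80 lookups
    host_vec, host_moved = pool_on_host(table, ids)
    pim_vec, pim_moved = pool_in_memory(table, ids)
    print(host_vec == pim_vec, host_moved, pim_moved)  # same result, 1280 vs 16 values moved
```

Both paths produce the same pooled vector, but in this example the conventional path moves 80 times as many values, which is the kind of traffic PIM aims to eliminate.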

KAIST does not intend to rest on its achievements; it is pursuing various plans to widen the gap over competitors catching up in artificial intelligence, semiconductors, and AI semiconductors. After establishing Korea's first artificial intelligence research center in 1990, KAIST opened the Kim Jaechul AI Graduate School in 2019 to sustain the supply of experts in the field. In 2020, the Artificial Intelligence Semiconductor System Research Center was launched to conduct convergent research on AI and semiconductors, followed by the establishment of the AI Institutes to promote “AI+X” research.

Building on the capabilities accumulated through these efforts, KAIST is also training the human resources needed in these areas. KAIST has established joint research centers with companies such as Naver and is collaborating with local governments such as Hwaseong City to nurture professionals. In 2021, KAIST signed an agreement with Samsung Electronics to establish the Department of Semiconductor System Engineering and is preparing a new training program for semiconductor specialists. Starting in 2023, the new department will admit around 100 students every year and provide special scholarships to all of them so that they can develop their professional skills. Through close cooperation with industry, students will also receive special support, including field trips and internships at Samsung Electronics, joint workshops, and on-site training.

KAIST has made a significant contribution to the growth of Korea's semiconductor industry ecosystem, producing 25% of the doctoral-level workforce in the domestic semiconductor field and 20% of the doctorate-holding CEOs of mid-sized and venture companies. As the AI semiconductor ecosystem dawns, the key question is whether KAIST will reprise its pivotal role.

2022.08.05

A System for Stable Simultaneous Communication among Thousands of IoT Devices

The mmWave backscatter system developed by a team led by Professor Song Min Kim is exciting news for the IoT market: it can provide fast and stable connectivity even for massive networks, which could finally allow IoT devices to reach their full potential.

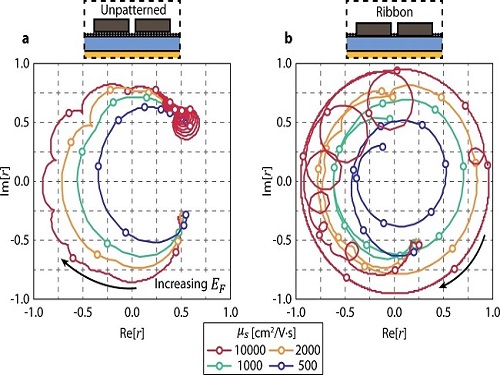

A research team led by Professor Song Min Kim of the KAIST School of Electrical Engineering has developed a system that supports concurrent communication among tens of millions of IoT devices by backscattering millimeter waves (mmWave).

Using mmWave backscatter, the research team built a design that enables simultaneous signal demodulation in a complex communication environment where tens of thousands of IoT devices are arranged indoors. mmWave offers a frequency range wider than 10 GHz, which provides great scalability. In addition, backscatter devices reflect incoming radio signals instead of generating their own, which allows them to operate at ultralow power. As a result, the mmWave backscatter system can offer mass-scale internet connectivity to IoT devices at low installation cost.

This research by Kangmin Bae et al. was presented at ACM MobiSys 2022, a world-renowned conference on mobile systems, where it won the Best Paper Award under the title “OmniScatter: Extreme Sensitivity mmWave Backscattering Using Commodity FMCW Radar”. This marks the second consecutive year that members of the KAIST School of Electrical Engineering have won the Best Paper Award at ACM MobiSys; last year's award was the first ever presented to an institution in Asia.

IoT, a core component of 5G/6G networks, is growing exponentially and is expected to reach a trillion devices by 2035. To support mass-scale IoT connectivity, 5G and 6G aim to support 10 times and 100 times the network density of 4G, respectively. Practical systems for large-scale communication have therefore become increasingly important.

mmWave, which uses carrier frequencies between 30 and 300 GHz, is a next-generation communication technology that can be incorporated into 5G/6G standards. However, because of high signal attenuation at these frequencies and reflection loss, current mmWave backscatter systems can communicate only in limited environments. In other words, they cannot operate in complex environments where various obstacles and reflectors are present. As a result, they are ill-suited to large-scale IoT deployments, which require devices to be placed relatively freely.

The research team found a solution in the high coding gain of FMCW (frequency-modulated continuous-wave) radar. They developed a signal processing method that fundamentally separates backscatter signals from ambient noise while preserving the radar's coding gain. The resulting receiver sensitivity is more than 100,000 times higher than that of previously reported FMCW radars, enough to support communication in practical environments. In addition, because the frequency of the demodulated signal depends on the physical location of the tag, the team designed a system that assigns channels to tags passively. This lets the ultralow-power backscatter system take full advantage of a bandwidth of 10 GHz or more.
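The location-based channel assignment rests on a standard property of linear FMCW radar: a reflector at distance d returns a copy of the chirp delayed by 2d/c, so mixing it with the transmitted chirp yields a beat frequency proportional to d. The sketch below is a simplification and not the OmniScatter signal chain: it ignores the tag's own modulation and all noise, and the radar parameters (a 4 GHz sweep in 40 µs) are assumed values chosen only for illustration. Tags whose distances differ by more than the range resolution c/(2B) land in different FFT bins, which is how location alone can act as a channel.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def beat_frequency(distance_m, bandwidth_hz, chirp_duration_s):
    """Beat (IF) frequency of a reflector at `distance_m` for a linear FMCW chirp."""
    slope = bandwidth_hz / chirp_duration_s  # chirp slope S in Hz/s
    return 2.0 * slope * distance_m / C      # f_b = S * (round-trip delay 2d/c)

def range_resolution(bandwidth_hz):
    """Smallest separable distance between two reflectors: c / (2B)."""
    return C / (2.0 * bandwidth_hz)

def fft_bins(distances_m, bandwidth_hz, chirp_duration_s):
    """FFT bin ('channel') of each tag when a single chirp is observed.

    The bin spacing is 1 / chirp_duration, so the index reduces to d / (c / 2B).
    """
    bin_spacing_hz = 1.0 / chirp_duration_s
    return [round(beat_frequency(d, bandwidth_hz, chirp_duration_s) / bin_spacing_hz)
            for d in distances_m]

# Assumed radar parameters (hypothetical): 4 GHz sweep over a 40 us chirp.
B, T = 4e9, 40e-6
print(range_resolution(B))              # about 0.0375 m
print(fft_bins([1.0, 2.5, 4.0], B, T))  # [27, 67, 107]: three separate channels
print(fft_bins([1.00, 1.01], B, T))     # [27, 27]: closer than c/2B, so they collide
```

The wider the sweep bandwidth B, the finer the range bins, which is one way to read why the 10 GHz-plus bandwidth of mmWave translates into many passively separated tags.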

The system can use the radars in existing commercial products as gateways, making it easy to deploy. In addition, because the backscatter tags operate at ultralow power levels of 10 µW or below, they can run for over 40 years on a single button cell, drastically reducing installation and maintenance costs.

The research team confirmed that mmWave backscatter devices placed randomly in an office with various obstacles and reflectors could communicate effectively. They then went a step further and conducted a successful trace-driven evaluation in which they simultaneously received data sent by 1,100 devices.

The system provides connectivity that far exceeds the network density required by next-generation communication such as 5G and 6G, and is expected to become a stepping stone to the hyper-connected future.

Professor Kim said, “mmWave backscatter is the technology we’ve dreamt of. The mass scalability and ultralow power at which it can operate IoT devices are unmatched by any existing technology.” He added, “We look forward to this system being actively used to make IoT widely available in the hyper-connected generation to come.”

Figure. To demonstrate the system's massive connectivity, a trace-driven evaluation of 1,100 concurrent tag transmissions was performed. The demodulation result of each of the 1,100 tags is shown as a red triangle; all tags communicate successfully without collision.

This work was supported by Samsung Research Funding & Incubation Center of Samsung Electronics and by the ITRC (Information Technology Research Center) support program supervised by the IITP (Institute of Information & Communications Technology Planning & Evaluation).

Profile:

Song Min Kim, Ph.D.

Professor

songmin@kaist.ac.kr

https://smile.kaist.ac.kr

SMILE Lab.

School of Electrical Engineering

2022.07.28

Atomically-Smooth Gold Crystals Help to Compress Light for Nanophotonic Applications

Highly compressed mid-infrared optical waves in a thin dielectric crystal on a monocrystalline gold substrate were investigated for the first time using a high-resolution scattering-type scanning near-field optical microscope.

KAIST researchers and their collaborators in Korea and abroad have demonstrated a new platform for guiding compressed light waves in very thin van der Waals crystals. Their method of guiding mid-infrared light with minimal loss is expected to provide a breakthrough for practical applications of ultra-thin dielectric crystals in next-generation optoelectronic devices based on strong light-matter interactions at the nanoscale.

Phonon-polaritons are collective oscillations of ions in polar dielectrics coupled to light, and their electromagnetic field is compressed far below the wavelength of light. It was recently demonstrated that phonon-polaritons in thin van der Waals crystals can be compressed even further when the crystal is placed on top of a highly conductive metal. In such a configuration, charges in the polaritonic crystal are “reflected” in the metal, and their coupling with light produces a new type of polariton wave called the image phonon-polariton. Highly compressed image modes provide strong light-matter interactions, but they are very sensitive to substrate roughness, which has hindered their practical application.

To overcome these limitations, four research groups combined their efforts to develop a unique experimental platform using advanced fabrication and measurement methods. Their findings were published in Science Advances on July 13.

A KAIST research team led by Professor Min Seok Jang of the School of Electrical Engineering used a highly sensitive scanning near-field optical microscope (SNOM) to directly measure the optical fields of hyperbolic image phonon-polaritons (HIP) propagating in a 63 nm-thick slab of hexagonal boron nitride (h-BN) on a monocrystalline gold substrate, showing that the mid-infrared light waves in the dielectric crystal were compressed a hundredfold.

Professor Jang and Sergey Menabde, a research professor in his group, obtained direct images of HIP waves propagating over many wavelengths and, for the first time, detected a signal from the ultra-compressed high-order HIP in regular h-BN crystals. They showed that phonon-polaritons in van der Waals crystals can be compressed significantly more without sacrificing their lifetime.

This was made possible by the atomically smooth surfaces of the home-grown gold crystals used as the substrate for the h-BN. Practically zero surface scattering and extremely small ohmic loss in gold at mid-infrared frequencies provide a low-loss environment for HIP propagation. The HIP mode probed by the researchers was 2.4 times more compressed than phonon-polaritons on a low-loss dielectric substrate, yet exhibited a similar lifetime, resulting in a figure of merit, in terms of normalized propagation length, that was twice as high.
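The "hundredfold compression" and the figure of merit mentioned above can be made concrete with standard polariton definitions. The block below assumes the common convention that the in-plane field varies as exp(iqx) with complex wavevector q = q' + iq'' and free-space wavevector k_0 = 2π/λ_0; conventions (especially factors of 2 in the propagation length L_p) differ between papers, so these are not necessarily the exact definitions used in the study.

```latex
\lambda_p = \frac{2\pi}{q'}, \qquad
\text{confinement} = \frac{\lambda_0}{\lambda_p} = \frac{q'}{k_0}, \qquad
L_p = \frac{1}{q''}, \qquad
\text{FoM} = \frac{L_p}{\lambda_p} = \frac{q'}{2\pi\, q''}
```

For example, at an illustrative mid-infrared free-space wavelength of 7 µm, a hundredfold compression corresponds to a polariton wavelength of about 70 nm.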

The ultra-smooth monocrystalline gold flakes used in the experiment were chemically grown by the team of Professor N. Asger Mortensen from the Center for Nano Optics at the University of Southern Denmark.

The mid-infrared spectrum is particularly important for sensing applications because many important organic molecules have absorption lines there. However, conventional detection methods require a large number of molecules to work, whereas ultra-compressed phonon-polariton fields can provide strong light-matter interactions at the microscopic level, significantly improving the detection limit, down to the single-molecule level. The long lifetime of HIP on monocrystalline gold will further improve detection performance.

Furthermore, the study by Professor Jang and the team demonstrated a striking similarity between HIP and image graphene plasmons. Both image modes have a significantly more confined electromagnetic field, yet their lifetime is unaffected by the shorter polariton wavelength. This observation offers a broader perspective on image polaritons in general and highlights their superiority for nanolight waveguiding compared with conventional low-dimensional polaritons in van der Waals crystals on a dielectric substrate.

Professor Jang said, “Our research demonstrated the advantages of image polaritons, and especially the image phonon-polaritons. These optical modes can be used in future optoelectronic devices where both low-loss propagation and strong light-matter interaction are necessary. I hope that our results will pave the way for the realization of more efficient nanophotonic devices such as metasurfaces, optical switches, sensors, and other applications operating at infrared frequencies.”

This research was funded by the Samsung Research Funding & Incubation Center of Samsung Electronics and the National Research Foundation of Korea (NRF). The Korea Institute of Science and Technology, the Ministry of Education, Culture, Sports, Science and Technology of Japan, and the Villum Foundation of Denmark also supported the work.

Figure. A nano-tip is used for ultra-high-resolution imaging of the image phonon-polaritons in h-BN launched by the gold crystal edge.

Publication:

Menabde, S. G., et al. (2022) Near-field probing of image phonon-polaritons in hexagonal boron nitride on gold crystals. Science Advances 8, Article ID: eabn0627. Available online at https://science.org/doi/10.1126/sciadv.abn0627.

Profile:

Min Seok Jang, MS, PhD

Associate Professor

jang.minseok@kaist.ac.kr

http://janglab.org/

Min Seok Jang Research Group

School of Electrical Engineering

http://kaist.ac.kr/en/

Korea Advanced Institute of Science and Technology (KAIST)

Daejeon, Republic of Korea

2022.07.13

KAIST & LG U+ Team Up for Quantum Computing Solution for Ultra-Space 6G Satellite Networking

KAIST quantum computer scientists have optimized ultra-space 6G Low-Earth Orbit (LEO) satellite networking, finding the shortest path for transferring data from one city to another location via multiple satellite hops.

The research team, led by Professor June-Koo Kevin Rhee and Professor Dongsu Han in partnership with LG U+, verified the feasibility of ultra-performance, ultra-precision communication over satellite networks using D-Wave, the first commercialized quantum computer.

Satellite network optimization has remained challenging because the network must be reconfigured whenever satellites come within connection range of other satellites in three-dimensional space. Moreover, unlike Geostationary Orbit (GSO) satellites, which stay fixed relative to the Earth, LEO satellites orbiting 200 to 2,000 km above the Earth change their positions dynamically. LEO satellite network optimization therefore has to be solved in real time.

The research groups formulated the problem as a Quadratic Unconstrained Binary Optimization (QUBO) problem and solved it, incorporating the connectivity and link-distance limits as constraints. Because a QUBO has no explicit constraints, such limits are typically folded into the objective as penalty terms.
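One common way to cast a shortest-path search as a QUBO is sketched below; it is a hypothetical toy formulation, not necessarily the one the researchers used. Each candidate link gets a binary variable, the objective sums the lengths of the selected links, and flow conservation (one unit leaves the source, one arrives at the destination, every intermediate node passes on what it receives) is added as a squared penalty, which keeps the problem unconstrained. Links that exceed the assumed connection-distance limit are simply left out. An exhaustive search stands in for the D-Wave annealer, which is only practical for a handful of variables; the network and function names are illustrative.

```python
import itertools

def build_shortest_path_qubo(nodes, links, src, dst, penalty):
    """Return (Q, offset); Q[(i, j)] is the coefficient of x_i * x_j with i <= j.

    x_i = 1 means link i is used. Energy = total selected link length
    + penalty * sum_v (out_v - in_v - b_v)^2, with b_src = 1, b_dst = -1, else 0.
    """
    Q, offset = {}, 0.0

    def add(i, j, value):
        key = (min(i, j), max(i, j))
        Q[key] = Q.get(key, 0.0) + value

    # Objective: path length sits on the diagonal because x_i^2 = x_i for binaries.
    for i, (_, _, length) in enumerate(links):
        add(i, i, length)

    # Flow-conservation constraints expanded into quadratic penalty terms.
    for v in nodes:
        b = 1 if v == src else -1 if v == dst else 0
        terms = [(i, 1) for i, (tail, _, _) in enumerate(links) if tail == v]
        terms += [(i, -1) for i, (_, head, _) in enumerate(links) if head == v]
        for k, (i, a_i) in enumerate(terms):
            add(i, i, penalty * (a_i * a_i - 2 * b * a_i))
            for j, a_j in terms[k + 1:]:
                add(i, j, penalty * 2 * a_i * a_j)
        offset += penalty * b * b
    return Q, offset

def energy(Q, offset, x):
    return offset + sum(c * x[i] * x[j] for (i, j), c in Q.items())

def brute_force_minimum(Q, offset, n):
    # Exhaustive search replaces the quantum annealer on this tiny instance.
    best = min(itertools.product((0, 1), repeat=n), key=lambda x: energy(Q, offset, x))
    return best, energy(Q, offset, best)

# Hypothetical network: ground station A, satellites S1-S3, ground station B.
nodes = ["A", "S1", "S2", "S3", "B"]
links = [("A", "S1", 1.0), ("A", "S2", 2.0), ("S1", "S3", 1.0),
         ("S2", "B", 2.5), ("S3", "B", 1.0), ("S1", "B", 3.5)]
Q, offset = build_shortest_path_qubo(nodes, links, "A", "B", penalty=20.0)
x, e = brute_force_minimum(Q, offset, len(links))
print([links[i][:2] for i in range(len(links)) if x[i]], e)
# [('A', 'S1'), ('S1', 'S3'), ('S3', 'B')] 3.0
```

The penalty weight must be large enough that breaking a constraint always costs more than any feasible path; here 20 exceeds the combined length of all links (11), so the lowest-energy state is always a valid route.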

The proposed optimization algorithm is reported to be much more efficient, in terms of hop count and path length, than previously reported classical approaches. These results show that a satellite network can provide ultra-performance (over 1 Gbps user-perceived speed) and ultra-precision (less than 5 ms end-to-end latency) network services comparable to terrestrial communication.

With QUBO-based optimization, “ultra-space networking” is expected to be realized in 6G. According to the researchers, an ultra-space network provides communication services to objects at altitudes of up to 10 km moving at extreme speeds (around 1,000 km/h). Optimized LEO satellite networks can bring 6G communication services to areas that currently lack coverage, such as aircraft in flight and deserts.

Professor Rhee, who is also the CEO of Qunova Computing, noted, “Collaboration with LG U+ was meaningful as we were able to find an industrial application for a quantum computer. We look forward to more quantum application research on real-world problems in fields such as communications, drug and material discovery, logistics, and fintech.”

2022.06.17

Professor Jae-Woong Jeong Receives Hyonwoo KAIST Academic Award

Professor Jae-Woong Jeong from the School of Electrical Engineering was selected for the Hyonwoo KAIST Academic Award, funded by the HyonWoo Cultural Foundation (Chairman Soo-il Kwak, honorary professor at Seoul National University Business School).

The Hyonwoo KAIST Academic Award, first presented in 2021, was established with donations from Chairman Soo-il Kwak of the HyonWoo Cultural Foundation to reward KAIST scholars who have made outstanding academic achievements.

Each year, following rigorous evaluation by the selection committee of the HyonWoo Cultural Foundation and the faculty award recommendation board, KAIST selects one faculty member whose academic achievements represent the university and presents them with a plaque and 100 million won.

Professor Jae-Woong Jeong, this year's winner, developed the first IoT-based wireless system for remotely controlling brain neural circuits to overcome brain diseases, and has been leading the field. The research, published in 2021 in Nature Biomedical Engineering, one of the world's leading scientific journals, has been recognized as a novel technology that offers a new vision for automating brain research and disease treatment. The study, led by Professor Jeong's research team, was part of the KAIST College of Engineering Global Initiative Interdisciplinary Research Project and was conducted jointly with the Washington University School of Medicine through an international research collaboration. The technology has been featured more than 60 times in domestic and international media, including Medical Xpress, MBC News, and Maeil Business News.

Professor Jeong has also developed a wirelessly rechargeable soft machine for brain implants, with the results published in Nature Communications. This work opened a new paradigm for semi-permanent implantable devices, and he continues to produce unprecedented research achievements.

2022.06.13

Professor Iickho Song Publishes a Book on Probability and Random Variables in English

Professor Iickho Song of the School of Electrical Engineering has published a book on probability and random variables in English. It is a translation of his Korean book ‘Theory of Random Variables’, which was selected as an Excellent Book of Basic Sciences by the National Academy of Sciences and the Ministry of Education in 2020.

The book discusses diverse concepts, notions, and applications concerning probability and random variables, explaining basic concepts and results in a clear and complete manner.

Readers will also find unique results on explicit general formulas for joint moments and for the expected values of nonlinear functions of normal random vectors. In addition, the book presents interesting applications of the step and impulse functions in discussions of random vectors. With a wealth of examples and a total of 330 practice problems of varying difficulty, readers have the opportunity to significantly expand their knowledge and skills. An extensive index lets readers quickly and easily find what they are looking for. The book also serves as a valuable reference for experienced scholars and professionals, helping them review and refine their expertise.

Link: https://link.springer.com/book/10.1007/978-3-030-97679-8
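The book's own general formula is not reproduced here. As a minimal illustration of what a joint moment of a normal random vector is, the sketch below uses the classical Isserlis (Wick) theorem for zero-mean Gaussian vectors: odd-order moments vanish, and even-order moments are sums over all pairings of products of covariances. The function name and the example covariance matrix are hypothetical.

```python
def gaussian_joint_moment(cov, indices):
    """E[X_{i1} X_{i2} ... X_{in}] for a zero-mean Gaussian vector X with covariance `cov`.

    Isserlis' theorem: odd-order moments are zero; even-order moments are the sum,
    over every way of pairing up the indices, of the product of paired covariances.
    """
    if not indices:
        return 1.0
    if len(indices) % 2 == 1:
        return 0.0
    first, rest = indices[0], indices[1:]
    total = 0.0
    # Pair the first index with each remaining index in turn, then recurse on the rest.
    for j, partner in enumerate(rest):
        remaining = rest[:j] + rest[j + 1:]
        total += cov[first][partner] * gaussian_joint_moment(cov, remaining)
    return total

cov = [[1.0, 0.5],
       [0.5, 2.0]]
print(gaussian_joint_moment([[2.0]], (0, 0, 0, 0)))  # 12.0, i.e. 3 * sigma^4 with sigma^2 = 2
print(gaussian_joint_moment(cov, (0, 0, 1, 1)))      # 2.5 = s11 * s22 + 2 * s12^2
print(gaussian_joint_moment(cov, (0, 1, 1)))         # 0.0 (odd order)
```

The number of pairings grows as (n - 1)!!, so this direct expansion is only meant for low-order moments.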

2022.06.13

Machine Learning-Based Algorithm to Speed up DNA Sequencing

The new software is the first full-fledged short-read aligner that leverages learned indices to solve the exact match search problem for efficient seeding

The human genome, the complete set of human DNA, is about 6.4 billion letters long. Because of its size, reading the whole genome sequence at once is challenging. Scientists therefore use DNA sequencers to produce hundreds of millions of DNA sequence fragments, or short reads, each up to 300 letters long. Software then assembles the short reads like a giant jigsaw puzzle to reconstruct the entire genome sequence. Even on very fast computers, this job can take hours to complete.

A research team at KAIST has made the seeding step of DNA sequence alignment up to 3.45x faster by developing the first short-read alignment software that uses a recent advance in machine learning called a learned index.

The research team reported their findings on March 7, 2022 in the journal Bioinformatics. The software has been released as open source and is available on GitHub (https://github.com/kaist-ina/BWA-MEME).

Next-generation sequencing (NGS) is a state-of-the-art DNA sequencing method, and projects are under way to sequence genomes at population scale. Modern NGS hardware can generate billions of short reads in a single run, and the short reads must then be aligned to the reference DNA sequence. With large-scale sequencing operations running hundreds of next-generation sequencers, the need for an efficient short-read alignment tool has become even more critical. Accelerating DNA sequence alignment would be a step toward population-scale sequencing. However, existing algorithms are limited in performance by their frequent memory accesses.

BWA-MEM2 is a popular software package currently used to align short reads to a reference genome, but it has its limitations. State-of-the-art alignment has two phases: seeding and extending. During the seeding phase, the software searches for exact matches of short reads in the reference DNA sequence. During the extending phase, these seed matches are extended into full alignments of the short reads. In the current process, the bottleneck lies in the seeding phase: finding the exact matches slows the whole process down.
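The two-phase structure can be illustrated with a toy aligner. As an assumption made purely for illustration, it swaps in a hash table of length-k substrings (k-mers) for seeding and simple mismatch counting for an ungapped extension; BWA-MEM2 itself, roughly speaking, seeds with an FM-index and extends with banded Smith-Waterman-style dynamic programming. All names below are hypothetical.

```python
def build_kmer_index(reference, k):
    """Map every length-k substring of the reference to its start positions."""
    index = {}
    for pos in range(len(reference) - k + 1):
        index.setdefault(reference[pos:pos + k], []).append(pos)
    return index


def seed_and_extend(read, reference, index, k):
    """Return (best_start, mismatches) for an ungapped placement of `read`, or None.

    Seeding: look up exact k-mer matches of the read in the reference index.
    Extending: place the whole read at each candidate start and count mismatches.
    """
    best = None
    for offset in range(len(read) - k + 1):
        for hit in index.get(read[offset:offset + k], []):
            start = hit - offset  # where the read would begin in the reference
            if start < 0 or start + len(read) > len(reference):
                continue
            window = reference[start:start + len(read)]
            mismatches = sum(a != b for a, b in zip(read, window))
            if best is None or mismatches < best[1]:
                best = (start, mismatches)
    return best


reference = "AAAACCCCGGGGTTTTACGT"
index = build_kmer_index(reference, 4)
print(seed_and_extend("CCGGTGTT", reference, index, 4))  # (6, 1)
```

Every exact k-mer hit proposes a candidate position, and only those candidates are scored during extension, which is why the volume of exact-match lookups in seeding can become the bottleneck.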

The researchers set out to accelerate DNA sequence alignment by applying machine learning techniques to improve the underlying algorithm. Their algorithm, BWA-MEME (BWA-MEM emulated), leverages learned indices to solve the exact match search problem, whereas the original software compared one character at a time during exact match search. The team’s new algorithm achieves up to 3.45x higher seeding throughput than BWA-MEM2 by reducing the number of instructions by 4.60x and memory accesses by 8.77x. “Through this study, it has been shown that full genome big data analysis can be performed faster and less costly than conventional methods by applying machine learning technology,” said Professor Dongsu Han from the School of Electrical Engineering at KAIST.
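A learned index replaces step-by-step search over a sorted structure with a model that predicts where a key should sit, followed by a short search inside the model's known error bound. The Python sketch below applies that general idea to a suffix array: each suffix is summarized by an integer key packing its first K letters, a single least-squares line predicts the suffix's rank from its key, and a binary search confined to the error window locates the matches. This is a simplified illustration under assumptions of our own (the key length K, the single linear model, and all names are hypothetical); it is not BWA-MEME's actual model or data layout.

```python
import bisect
import math

CODE = {"A": 0, "C": 1, "G": 2, "T": 3}
K = 8  # hypothetical: number of leading letters packed into one integer key


def key_of(text):
    """Pack the first K letters of `text` into a base-4 integer.

    Short strings are padded with 'A' (code 0), the smallest letter, so keys
    never decrease along the lexicographically sorted suffix array.
    """
    value = 0
    for i in range(K):
        value = value * 4 + (CODE[text[i]] if i < len(text) else 0)
    return value


class LearnedSuffixIndex:
    """Toy learned index over the suffix array of a DNA string (illustrative only)."""

    def __init__(self, reference):
        self.ref = reference
        # Naive suffix array construction: fine for a toy, far too slow for a genome.
        self.sa = sorted(range(len(reference)), key=lambda p: reference[p:])
        self.keys = [key_of(reference[p:]) for p in self.sa]
        n = len(self.keys)
        # Fit rank ~ slope * key + intercept by ordinary least squares.
        mean_x = sum(self.keys) / n
        mean_y = (n - 1) / 2
        var_x = sum((x - mean_x) ** 2 for x in self.keys)
        cov_xy = sum((x - mean_x) * (y - mean_y) for y, x in enumerate(self.keys))
        # Keys and ranks rise together, so the slope is non-negative (clamped for safety).
        self.slope = max(0.0, cov_xy / var_x) if var_x else 0.0
        self.intercept = mean_y - self.slope * mean_x
        # Worst-case prediction error over stored keys bounds every later search window.
        self.err = max(abs(self.predict(x) - y) for y, x in enumerate(self.keys))

    def predict(self, key):
        return self.slope * key + self.intercept

    def find(self, pattern):
        """Return the sorted reference positions where `pattern` occurs exactly."""
        n = len(self.keys)
        target = key_of(pattern)
        guess = self.predict(target)
        # Search only the model's error window (padded by one slot for float rounding).
        lo = min(n, max(0, math.floor(guess - self.err) - 1))
        hi = max(lo, min(n, math.ceil(guess + self.err) + 2))
        i = bisect.bisect_left(self.keys, target, lo, hi)
        # Skip suffixes that share the padded key but sort before the pattern.
        while i < n and self.ref[self.sa[i]:] < pattern:
            i += 1
        # Suffixes starting with the pattern form one contiguous block of the suffix array.
        hits = []
        while i < n and self.ref[self.sa[i]:].startswith(pattern):
            hits.append(self.sa[i])
            i += 1
        return sorted(hits)


index = LearnedSuffixIndex("ACGTACGTTAGCACGTA")
print(index.find("ACGT"))  # [0, 4, 12]
```

Because the search window depends only on the model's worst-case error, a well-fitted model touches far fewer array entries than a character-by-character search; that is the intuition behind the reduced instruction counts and memory accesses reported above.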

The researchers’ ultimate goal was to develop efficient software that scientists from academia and industry could use on a daily basis for analyzing big data in genomics. “With the recent advances in artificial intelligence and machine learning, we see so many opportunities for designing better software for genomic data analysis. The potential is there for accelerating existing analysis as well as enabling new types of analysis, and our goal is to develop such software,” added Han.

Whole genome sequencing has traditionally been used to discover genomic mutations and identify the root causes of diseases, which leads to the discovery and development of new drugs and cures. Its potential applications are many, and it is used not only for research but also for clinical purposes. “The science and technology for analyzing genomic data is making rapid progress to make it more accessible for scientists and patients. This will enhance our understanding about diseases and develop a better cure for patients of various diseases.”

The research was funded by the National Research Foundation of the Korean government’s Ministry of Science and ICT.

-Publication: Youngmok Jung, Dongsu Han, “BWA-MEME: BWA-MEM emulated with a machine learning approach,” Bioinformatics, Volume 38, Issue 9, May 2022

(https://doi.org/10.1093/bioinformatics/btac137)

-Profile: Professor Dongsu Han, School of Electrical Engineering, KAIST

2022.05.10


A New Strategy for Active Metasurface Design Provides a Full 360° Phase Tunable Metasurface

The new strategy achieves an unprecedented range of dynamic phase modulation with no significant variation in optical amplitude

An international team of researchers led by Professor Min Seok Jang of KAIST and Professor Victor W. Brar of the University of Wisconsin-Madison has demonstrated a widely applicable methodology that enables full 360° active phase modulation in metasurfaces while maintaining a substantial, uniform light amplitude. In principle, the strategy can be applied to any spectral region, using any structures and resonances that meet its requirements.

Metasurfaces are optical components whose specialized functionalities are indispensable for real-life applications ranging from LIDAR and spectroscopy to futuristic technologies such as invisibility cloaks and holograms. Their compact, micro- and nanoscale structure allows them to be integrated into electronic systems whose sizes keep shrinking, as predicted by Moore’s law.

To enable such innovations, metasurfaces must be able to manipulate incident light by modifying its amplitude, its phase, or both, and then re-emitting it. However, dynamically modulating the phase over the full 360° range has been notoriously difficult; the very few works that managed it did so at the cost of a substantial amount of amplitude control.

Challenged by these limitations, the team proposed a general methodology that enables metasurfaces to perform dynamic phase modulation over the complete 360° range while maintaining a substantial, uniform amplitude.

The difficulty stems from a fundamental trade-off in dynamically controlling the optical phase of light. Metasurfaces generally perform this function through optical resonances, excitations of electrons inside the metasurface structure that oscillate harmonically together with the incident light. To modulate the phase through the entire range of 0° to 360°, the optical resonance frequency (the center of the spectrum) must be tuned by a large amount while the linewidth (the width of the spectrum) is kept to a minimum. However, electrically tuning the optical resonance frequency of the metasurface on demand requires a controllable flow of electrons into and out of the metasurface, and this inevitably broadens the linewidth of the optical resonance.

The problem is compounded by the fact that the phase and amplitude of optical resonances are closely correlated in a complex, nonlinear way, making it very difficult to maintain substantial control over the amplitude while changing the phase.
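Both difficulties can be made concrete with the textbook single-resonance model from temporal coupled-mode theory (an assumption here; the team's own analysis may differ). For one resonance with frequency ω0, radiative loss rate γr, and non-radiative loss rate γnr, reflecting into a single port, the reflection at a fixed probe frequency ω is r = (i(ω - ω0) + γnr - γr) / (i(ω - ω0) + γnr + γr). Tuning ω0 sweeps the phase of r through nearly 360° only when radiative loss dominates (γr > γnr) and the tuning range is much wider than the linewidth γr + γnr; even then the amplitude dips to |γr - γnr| / (γr + γnr) at resonance, so phase and amplitude move together. The function names below are hypothetical.

```python
import cmath
import math


def reflection(omega, omega0, gamma_r, gamma_nr):
    """Reflection of one resonance coupled to one port (temporal coupled-mode theory).

    gamma_r: radiative loss rate; gamma_nr: non-radiative (absorption) loss rate.
    A constant overall sign is dropped because it does not change the phase range.
    """
    detuning = omega - omega0
    return (1j * detuning + gamma_nr - gamma_r) / (1j * detuning + gamma_nr + gamma_r)


def phase_sweep(gamma_r, gamma_nr, span, steps=2001):
    """Tune omega0 across [-span, span] with the probe frequency fixed at omega = 0.

    Returns (phase coverage in degrees, minimum |r|) over the sweep.
    """
    unwrapped, previous = 0.0, None
    phases, amplitudes = [], []
    for k in range(steps):
        omega0 = -span + 2 * span * k / (steps - 1)
        r = reflection(0.0, omega0, gamma_r, gamma_nr)
        phase = cmath.phase(r)
        if previous is not None:
            # Unwrap: take the 2*pi branch closest to the previous sample.
            unwrapped += (phase - previous + math.pi) % (2 * math.pi) - math.pi
        previous = phase
        phases.append(unwrapped)
        amplitudes.append(abs(r))
    return math.degrees(max(phases) - min(phases)), min(amplitudes)


# Radiative loss dominates: nearly 360 degrees, with |r| dipping to 0.8 / 1.2 at resonance.
print(phase_sweep(gamma_r=1.0, gamma_nr=0.2, span=100.0))
# Non-radiative loss dominates: the phase coverage stays well below 180 degrees.
print(phase_sweep(gamma_r=0.2, gamma_nr=1.0, span=100.0))
```

In this model, any extra non-radiative loss introduced by electrical tuning raises γnr, which pushes the resonance toward the second, low-coverage regime and, near γr = γnr, drives the amplitude at resonance toward zero.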

The team circumvented both problems by using two optical resonances, each with its own designated properties. One resonance has a narrow linewidth and decouples the phase from the amplitude, so that the phase can be tuned while a substantial, uniform amplitude is maintained.

The other resonance can be tuned over a wide enough range to make the complete 360° phase modulation achievable. The key to the work is combining the different properties of the two resonances through a phenomenon called avoided crossing: the interaction between the two resonances merges their desired traits, achieving, and even exceeding, full 360° phase modulation with uniform amplitude.
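Avoided crossing itself can be illustrated with the simplest model of two coupled modes. This is an assumption for illustration: the modes are lossless and coupled with a real strength g > 0, unlike the lossy resonances of the actual device, and the function names are hypothetical. The hybrid frequencies are the eigenvalues of the matrix [[ω1, g], [g, ω2]], namely (ω1 + ω2)/2 ± sqrt(((ω1 - ω2)/2)² + g²). As ω1 is tuned through ω2 the two branches never cross; their closest approach is 2g, and at that point each hybrid mode is an equal mix of the two original modes, which is how the traits of the two resonances can be shared.

```python
import math


def coupled_modes(omega1, omega2, g):
    """Return the (lower, upper) eigenfrequencies of two coupled lossless modes.

    They are the eigenvalues of the symmetric matrix [[omega1, g], [g, omega2]].
    """
    mean = (omega1 + omega2) / 2
    half_gap = math.sqrt(((omega1 - omega2) / 2) ** 2 + g ** 2)
    return mean - half_gap, mean + half_gap


def mode1_share(omega1, omega2, g):
    """Fraction of mode 1 in each hybrid mode, as (lower, upper); requires g > 0.

    For eigenvalue lam, (g, lam - omega1) is an eigenvector of the matrix above.
    """
    return tuple(
        g ** 2 / (g ** 2 + (lam - omega1) ** 2) for lam in coupled_modes(omega1, omega2, g)
    )


# Tune mode 1 through mode 2 (fixed at 1.0) and record the gap between the branches.
sweep = [0.5 + 0.01 * k for k in range(101)]
gaps = [upper - lower for lower, upper in (coupled_modes(w1, 1.0, 0.05) for w1 in sweep)]
print(f"minimum gap: {min(gaps):.3f}")  # minimum gap: 0.100 (= 2g, where omega1 == omega2)
```

Far from the crossing each branch is almost purely one of the original modes; at the crossing, mode1_share returns roughly (0.5, 0.5).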