IEEE

Robotic Herding of a Flock of Birds Using Drones

A joint team from KAIST, Caltech, and Imperial College London presents a drone with a new algorithm to shepherd birds safely away from airports

Researchers have developed a new algorithm that enables a single robotic unmanned aerial vehicle (UAV) to herd a flock of birds away from a designated airspace. With this approach, one autonomous quadrotor drone can move an entire flock without breaking its formation.

Professor David Hyunchul Shim at KAIST, in collaboration with Professor Soon-Jo Chung of Caltech and Professor Aditya Paranjape of Imperial College London, investigated the problem of diverting a flock of birds away from a prescribed area, such as an airport, using a robotic UAV. They introduced a novel boundary control strategy, called the m-waypoint algorithm, that enables a single pursuer UAV to safely herd the flock without fragmenting it.

The team developed the herding algorithm on the basis of macroscopic properties of the flocking model and the response of the flock. They tested their robotic autonomous drone by successfully shepherding an entire flock of birds out of a designated airspace near KAIST’s campus in Daejeon, South Korea. This study is published in IEEE Transactions on Robotics.

“It is quite interesting, and even awe-inspiring, to monitor how birds react to threats and collectively behave against threatening objects through the flock. We made careful observations of flock dynamics and interactions between flocks and the pursuer. This allowed us to create a new herding algorithm for ideal flight paths for incoming drones to move the flock away from a protected airspace,” said Professor Shim, who leads the Unmanned Systems Research Group at KAIST.

Bird strikes threaten the safety of airplanes and their passengers. Korean civil aircraft suffered more than 1,000 bird strikes between 2011 and 2016. In the US, 142,000 bird strikes destroyed 62 civilian airplanes, injured 279 people, and killed 25 between 1990 and 2013. In the UK in 2016, there were 1,835 confirmed bird strikes, about eight for every 10,000 flights. Collisions between aircraft and birds or other wildlife cause well over 1.2 billion USD in damages to the aviation industry worldwide each year. In one of the most serious cases, Canada geese knocked out both engines of a US Airways jet in January 2009, and the flight had to make an emergency landing on the Hudson River.

Airports and researchers have continued to reduce the risk of bird strikes through a variety of methods. They scare birds away using predators such as falcons or loud noises from small cannons or guns. Some airports try to prevent birds from coming by ridding the surrounding areas of crops that birds eat and hide in.

However, birds are smart. “I was amazed with the birds’ capability to interact with flying objects. We thought that only birds of prey have a strong sense of maneuvering with the prey. But our observation of hundreds of migratory birds such as egrets and loons led us to reach the hypothesis that they all have similar levels of maneuvering with the flying objects. It will be very interesting to collaborate with ornithologists to study further with birds’ behaviors with aerial objects,” said Professor Shim. “Airports are trying to transform into smart airports. This algorithm will help improve safety for the aviation industry. In addition, this will also help control avian influenza that plagues farms nationwide every year,” he stressed.

For this study, two drones were deployed. One drone performed various types of maneuvers around the flocks as the pursuer, or herding drone, while a surveillance drone hovered at a high altitude with a camera pointing down to record the trajectories of the pursuer drone and the birds.

During the experiments on egrets, the birds made frequent visits to a nearby hunting area, and a large number of egrets were found to return to their nests at sunset. During that time, the team flew the herding drone in various directions with respect to the flock.

The drone approached the flock from the side. When the birds noticed the drone, they diverted from their original paths and flew at a 45˚ angle to their right. When the birds noticed the drone while it was still far away, they adjusted their paths horizontally and made smaller changes in the vertical direction. In the second round of the experiment on loons, the drone flew almost parallel to the flight path of a flock of birds, starting from an initial position located just off the nominal flight path. The birds had a nominal flight speed that was considerably higher than that of the drone so the interaction took place over a relatively short period of time.

Professor Shim said, “I think we just completed the first step of the research. For the next step, more systems will be developed and integrated for bird detection, ranging, and automatic deployment of drones.” “Professor Chung at Caltech is a KAIST graduate. And his first student was Professor Paranjape who now teaches at Imperial. It is pretty interesting that this research was made by a KAIST faculty member, an alumnus, and his student on three different continents,” he said.

(Figure A. Case 1: drone approaches the herd with sufficient distance to induce horizontal deviation)

(Figure B. Case 2: drone approaches the herd abruptly to cause vertical deviation)

2018.08.23

Professor Suh Chosen for IT Young Engineer Award

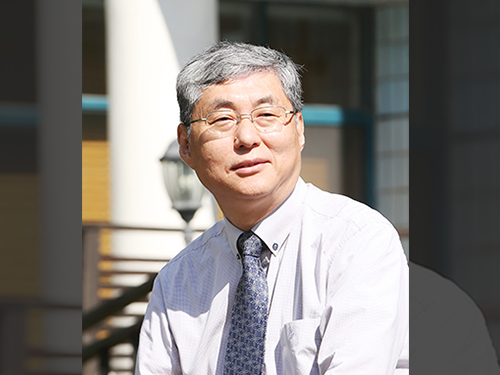

(The ceremony photo of Professor Changho Suh)

Professor Changho Suh from the School of Electrical Engineering received the IT Young Engineer Award on June 28. This award is hosted by the Institute of Electrical and Electronics Engineers (IEEE) and the Institute of Electronics and Information Engineers (IEIE) and funded by the Haedong Science Foundation.

The IT Young Engineer Award is given to researchers under the age of 40 in Korea. The selection criteria include the technical practicability of the research, its social and environmental contributions, and its creativity.

Professor Suh has shown outstanding academic performance in the fields of telecommunications, distributed storage, and artificial intelligence, and he has also contributed to technological commercialization. He has published 23 papers in SCI journals and ten papers at top-level international conferences, including the Conference on Neural Information Processing Systems and the International Conference on Machine Learning. His papers have been cited more than 4,100 times, and he holds 30 international patent registrations.

Currently, he is developing an autonomous driving system using an AI-tutor and deep learning technology.

Professor Suh said, “It is my great honor to receive the IT Young Engineer Award. I strive to continue guiding students and carrying out research in order to make a contribution to the fields of IT and AI.”

2018.07.04

Recognizing Seven Different Face Emotions on a Mobile Platform

(Professor Hoi-Jun Yoo)

A KAIST research team succeeded in achieving face emotion recognition on a mobile platform by developing an AI semiconductor IC that processes two neural networks on a single chip.

Professor Hoi-Jun Yoo and his team (primary researcher: Ph.D. student Jinmook Lee) from the School of Electrical Engineering developed a unified deep neural network processing unit (UNPU).

Deep learning is a technology for machine learning based on artificial neural networks, which allows a computer to learn by itself, just like a human.

The chip adjusts the weight precision of a neural network (from 1 bit to 16 bits) inside the semiconductor in order to balance energy efficiency and accuracy. A single chip can process a convolutional neural network (CNN) and a recurrent neural network (RNN) simultaneously. CNNs are used for categorizing and recognizing images, while RNNs handle time-series information, as in action recognition and speech recognition.
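The trade-off between weight precision and accuracy can be illustrated with a small sketch. The code below is not the UNPU's actual scheme, which the article does not describe; it assumes plain uniform quantization, a sign-only rule for the 1-bit case, and a made-up list of weights, and it uses the mean absolute rounding error as a rough stand-in for accuracy loss. The names `quantize_weights` and `mean_abs_error` are hypothetical.

```python
def quantize_weights(weights, bits):
    """Round each weight to a uniform signed grid that fits in `bits` bits.

    Assumption for illustration only: the real chip's number format is not
    given in the article.
    """
    if bits == 1:
        # 1-bit case: keep only the sign, scaled by the mean magnitude.
        scale = sum(abs(w) for w in weights) / len(weights)
        return [scale if w >= 0 else -scale for w in weights]
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0:
        return [0.0 for _ in weights]
    levels = 2 ** (bits - 1) - 1      # largest positive integer code
    step = max_abs / levels           # spacing between representable values
    return [round(w / step) * step for w in weights]


def mean_abs_error(original, quantized):
    """Average absolute difference between original and quantized weights."""
    return sum(abs(a - b) for a, b in zip(original, quantized)) / len(original)


# Made-up example weights (not taken from any real network).
weights = [0.82, -0.41, 0.07, -0.93, 0.25, 0.60, -0.15, 0.33]

errors = {}
for bits in (1, 2, 4, 8, 16):
    errors[bits] = mean_abs_error(weights, quantize_weights(weights, bits))
    print(f"{bits:2d}-bit weights: mean abs error = {errors[bits]:.6f}")
```

Fewer bits mean less data to move and multiply, at the cost of a larger rounding error; a processor that can switch precision at run time can pick the point on this curve that a given task tolerates.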

Moreover, the chip can adjust the trade-off between energy efficiency and accuracy dynamically while recognizing objects. Mobile AI requires high-speed operations at low energy; otherwise, the battery runs out quickly because massive amounts of information are processed at once. According to the team, this chip outperforms world-class mobile AI chips such as Google's TPU, and its energy efficiency is four times higher than that of the TPU.

In order to demonstrate its high performance, the team installed UNPU in a smartphone to facilitate automatic face emotion recognition on the smartphone. This system displays a user’s emotions in real time. The research results for this system were presented at the 2018 International Solid-State Circuits Conference (ISSCC) in San Francisco on February 13.

Professor Yoo said, “We have developed a semiconductor that accelerates with low power requirements in order to realize AI on mobile platforms. We are hoping that this technology will be applied in various areas, such as object recognition, emotion recognition, action recognition, and automatic translation. Within one year, we will commercialize this technology.”

2018.03.09

Highly Sensitive and Fast Indoor GNSS Signal Acquisition Technology

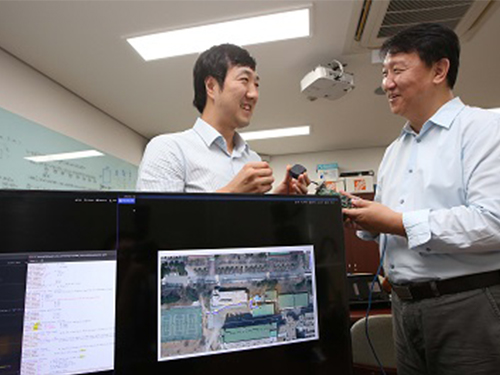

(Professor Seung-Hyun Kong (right) and Research Fellow Tae-Sun Kim)

A research team led by Professor Seung-Hyun Kong at the Cho Chun Shik Graduate School of Green Transportation, KAIST, developed high-speed, high-sensitivity Global Navigation Satellite System (GNSS) signal acquisition (search and detection) technology that can produce GNSS positioning fixes indoors.

Using the team’s new technology, GNSS signals will be sufficient to identify locations anywhere in the world, both indoors and outdoors. This new research finding was published in the international journal IEEE Signal Processing Magazine (IEEE SPM) this September.

The Global Positioning System (GPS), developed by the U.S. Department of Defense in the 1990s, is the most widely used satellite-based navigation system. GNSS is a term that covers conventional satellite-based navigation systems, such as GPS and the Russian GLONASS, as well as new satellite-based navigation systems under development, such as the European GALILEO, the Chinese COMPASS, and other regional satellite-based navigation systems.

In general, GNSS signals are transmitted all over the globe from 20,000 km above the Earth, so a GNSS signal received by a small antenna in an outdoor environment has weak signal power. In addition, GNSS signals that penetrate building walls become extremely weak, and the signal power can be less than 1/1000th of the power received outside.

Using conventional acquisition techniques including the frequency-domain correlation technique to acquire an extremely weak GNSS signal causes the computational cost to increase by over a million times and the processing time for acquisition also increases tremendously. Because of this, indoor measurement techniques using GNSS signals were considered practically impossible for the last 20 years.

To resolve such limitations, the research team developed a Synthesized Doppler-frequency Hypothesis Testing (SDHT) technique to dramatically reduce the acquisition time and computational load for extremely weak GNSS signals indoors.

In general, GNSS signal acquisition is a search process that identifies the instantaneous code phase and Doppler frequency of the incoming GNSS signal. However, the number of Doppler frequency hypotheses grows in proportion to the coherent correlation time, which must be increased to detect weak signals. In practice, the coherent correlation time should be more than 1000 times longer for extremely weak GNSS signals, so the number of Doppler frequency hypotheses is greater than 20,000. The SDHT algorithm, by contrast, tests a Doppler frequency hypothesis indirectly by utilizing the coherent correlation results of neighboring hypotheses.

Therefore, using SDHT, only around 20 hypotheses are tested using conventional correlation techniques and the remaining 19,980 hypotheses are calculated with simple mathematical operations. As a result, SDHT achieves a huge computational cost reduction (by about 1000 times) and is 800 times faster for signal acquisition compared to conventional techniques. This means only about 15 seconds is required to detect extremely weak GNSS signals in buildings using a personal computer.
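The hypothesis counts quoted above can be reproduced with a short calculation. This is a sketch under assumptions the article does not state: a Doppler search span of 10 kHz (that is, plus or minus 5 kHz), a Doppler bin width of 1/(2T) for a coherent correlation time T, and a baseline T of 1 ms. These are common rule-of-thumb values in GNSS acquisition, chosen here because they match the article's figures; the function name `doppler_hypotheses` is hypothetical.

```python
def doppler_hypotheses(search_span_hz: int, coherent_time_ms: int) -> int:
    """Count Doppler bins for a bin width of 1 / (2 * T).

    bins = span / (1 / (2T)) = 2 * span * T, with T converted from ms to s.
    Integer arithmetic avoids floating-point rounding in the ceiling.
    """
    numerator = 2 * search_span_hz * coherent_time_ms
    return -(-numerator // 1000)  # ceiling division


SEARCH_SPAN_HZ = 10_000   # assumed: +/- 5 kHz Doppler uncertainty
SHORT_T_MS = 1            # assumed baseline: 1 ms coherent correlation
LONG_T_MS = 1000          # 1000 times longer, as described in the text

n_short = doppler_hypotheses(SEARCH_SPAN_HZ, SHORT_T_MS)
n_long = doppler_hypotheses(SEARCH_SPAN_HZ, LONG_T_MS)

directly_tested = 20                     # figure quoted in the article
synthesized = n_long - directly_tested   # hypotheses derived from neighbors

print(f"hypotheses at {SHORT_T_MS} ms: {n_short}")
print(f"hypotheses at {LONG_T_MS} ms: {n_long}")
print(f"tested by correlation: {directly_tested}, synthesized: {synthesized}")
print(f"ratio of total to directly tested: {n_long // directly_tested}")
```

Under these assumptions the total is 20,000 hypotheses, of which 19,980 are synthesized, and the ratio of total to directly tested hypotheses is 1000. That is in line with the roughly 1000-fold cost reduction quoted above, although the article does not say the figure was derived this way.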

The team predicts that further studies to strengthen SDHT technology and to develop positioning systems that are robust to multipath in indoor environments will allow indoor GNSS measurements within several seconds inside most buildings using GNSS alone.

Professor Kong said, “This development made us the leader in indoor GNSS positioning technology in the world.” He continued, “We hope to commercialize indoor GNSS systems to create a new market.” The research team is currently registering a patent in Korea and applying for patents overseas, as well as planning to commercialize the technology with the help of the Institute for Startup KAIST.

(Figure 1. Positioning Results for the GPS Indoor Positioning System using SDHT Technology)

2017.11.02

Face Recognition System 'K-Eye' Presented by KAIST

Artificial intelligence (AI) is one of the key emerging technologies. Global IT companies are competitively launching the newest technologies and competition is heating up more than ever. However, most AI technologies focus on software and their operating speeds are low, making them a poor fit for mobile devices. Therefore, many big companies are investing to develop semiconductor chips for running AI programs with low power requirements but at high speeds.

A research team led by Professor Hoi-Jun Yoo of the Department of Electrical Engineering has developed a semiconductor chip, CNNP (CNN Processor), that runs AI algorithms with ultra-low power, and K-Eye, a face recognition system using CNNP. The system was made in collaboration with a start-up company, UX Factory Co.

The K-Eye series consists of two types: a wearable type and a dongle type. The wearable device can be used with a smartphone via Bluetooth, and it can operate for more than 24 hours on its internal battery. Users who hang K-Eye around their necks can conveniently check information about people by using their smartphone or smart watch, which connects to K-Eye and allows users to access a database via their smart devices. A smartphone with K-EyeQ, the dongle type device, can recognize and share information about users at any time.

When recognizing that an authorized user is looking at its screen, the smartphone automatically turns on without a passcode, fingerprint, or iris authentication. Since it can distinguish whether an input face is coming from a saved photograph versus a real person, the smartphone cannot be tricked by the user’s photograph.

The K-Eye series has other distinct features. It first detects a face and then recognizes it, and it can maintain “Always-on” status with low power consumption of less than 1 mW. To accomplish this, the research team proposed two key technologies: an image sensor with “Always-on” face detection and the CNNP face recognition chip.

The first key technology, the “Always-on” image sensor, can determine if there is a face in its camera range. Then, it can capture frames and set the device to operate only when a face exists, reducing the standby power significantly. The face detection sensor combines analog and digital processing to reduce power consumption. With this approach, the analog processor, combined with the CMOS Image Sensor array, distinguishes the background area from the area likely to include a face, and the digital processor then detects the face only in the selected area. Hence, it becomes effective in terms of frame capture, face detection processing, and memory usage.

The second key technology, CNNP, achieved very low power consumption by optimizing a convolutional neural network (CNN) in the areas of circuitry, architecture, and algorithms. First, the on-chip memory integrated in CNNP is specially designed so that data can be read in a vertical direction as well as in a horizontal direction. Second, it has immense computational power, with 1024 multipliers and accumulators that operate in parallel and can transfer intermediate results directly to each other without accessing external memory or an on-chip communication network. Third, convolution calculations with a two-dimensional filter in the CNN algorithm are approximated by two sequential calculations with one-dimensional filters to achieve higher speeds and lower power consumption.
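The third point, replacing a two-dimensional filter with two one-dimensional passes, can be sketched as follows. This is an illustration rather than the CNNP implementation: it assumes a filter that is exactly separable (the outer product of a column vector and a row vector), in which case the two passes give the identical result. For a general filter the two-pass form is only an approximation, as the article says. The function names and the small test image are made up for the example.

```python
def correlate2d_valid(image, kernel):
    """Direct 2-D sliding-window filter over the 'valid' region (no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def separable_correlate2d_valid(image, col_filter, row_filter):
    """Two sequential 1-D passes: along rows first, then along columns."""
    horizontal = correlate2d_valid(image, [row_filter])      # 1 x k pass
    return correlate2d_valid(horizontal, [[v] for v in col_filter])  # k x 1 pass


# A separable 3x3 filter built as an outer product (Sobel-like edge filter).
col_filter = [1, 2, 1]
row_filter = [1, 0, -1]
kernel = [[c * r for r in row_filter] for c in col_filter]

# Small made-up integer image, 5 rows by 6 columns.
image = [[(3 * r + 5 * c) % 7 for c in range(6)] for r in range(5)]

direct = correlate2d_valid(image, kernel)
two_pass = separable_correlate2d_valid(image, col_filter, row_filter)

k = len(col_filter)
print("results identical:", direct == two_pass)
print("multiplies per output pixel, direct:", k * k)
print("multiplies per output pixel, two 1-D passes:", 2 * k)
```

For a k-by-k filter, the direct form needs k² multiplications per output pixel and the two-pass form needs 2k, so the saving grows with the filter size.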

With these new technologies, CNNP achieved a high accuracy of 97% while consuming only 1/5000 of the power of a GPU. Face recognition can be performed with only 0.62 mW of power consumption, and the chip can outperform the GPU when it is given more power.

These chips were developed by Kyeongryeol Bong, a Ph.D. student under Professor Yoo, and presented at the International Solid-State Circuits Conference (ISSCC) held in San Francisco in February. CNNP, which has the lowest reported power consumption in the world, has attracted a great deal of attention and has led to the development of the present K-Eye series for face recognition.

Professor Yoo said, “AI processors will lead the era of the Fourth Industrial Revolution. With the development of this AI chip, we expect Korea to take the lead in global AI technology.”

The research team and UX Factory Co. are preparing to commercialize the K-Eye series by the end of this year. According to market researcher IDC, the AI industry will grow from $127 billion last year to $165 billion this year.

(Photo caption: Schematic diagram of K-Eye system)

2017.06.14

Crowdsourcing-Based Global Indoor Positioning System

A research team led by Professor Dong-Soo Han of the Intelligent Service Lab in the School of Computing at KAIST has developed a system for providing global indoor localization using Wi-Fi signals. The technology uses numerous smartphones to collect fingerprints of location data and label them automatically, significantly reducing the cost of constructing an indoor localization system while maintaining high accuracy.

The method can be used in any building in the world, provided the floor plan is available and there are Wi-Fi fingerprints to collect. To accurately collect and label the location information of the Wi-Fi fingerprints, the research team analyzed indoor space utilization. This led to technology that classified indoor spaces into places used for stationary tasks (resting spaces) and spaces used to reach said places (transient spaces), and utilized separate algorithms to optimally and automatically collect location labelling data.

Years ago, the team implemented a way to automatically label resting space locations from signals collected in various contexts such as homes, shops, and offices via the users’ home or office address information. The latest method allows for the automatic labelling of transient space locations such as hallways, lobbies, and stairs using unsupervised learning, without any additional location information. Testing in KAIST’s N5 building and on the 7th floor of the N1 building showed that the technology can achieve an accuracy of three to four meters given enough training data. This accuracy is comparable to that of technology using manually labeled location information.
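To make the idea of a Wi-Fi fingerprint concrete, the following sketch shows the standard nearest-neighbor lookup that fingerprint-based localization commonly relies on once a labelled radio map exists. It is not the team's labelling algorithm. The radio map values, the access point names, the `MISSING_RSSI` floor, and the function names are all hypothetical.

```python
import math

# Hypothetical radio map: each entry pairs a labelled location (x, y in meters)
# with the signal strength (RSSI, in dBm) observed from each access point there.
RADIO_MAP = [
    ((0.0, 0.0), {"ap1": -40, "ap2": -70, "ap3": -80}),
    ((5.0, 0.0), {"ap1": -55, "ap2": -60, "ap3": -75}),
    ((10.0, 0.0), {"ap1": -70, "ap2": -45, "ap3": -65}),
    ((10.0, 5.0), {"ap1": -80, "ap2": -55, "ap3": -50}),
]

MISSING_RSSI = -100  # assumed floor value for access points that were not heard


def signal_distance(fp_a, fp_b):
    """Euclidean distance between two fingerprints in signal space."""
    access_points = set(fp_a) | set(fp_b)
    return math.sqrt(sum(
        (fp_a.get(ap, MISSING_RSSI) - fp_b.get(ap, MISSING_RSSI)) ** 2
        for ap in access_points
    ))


def locate(query, radio_map, k=2):
    """Average the labelled positions of the k fingerprints closest to the query."""
    nearest = sorted(radio_map, key=lambda entry: signal_distance(query, entry[1]))[:k]
    x = sum(location[0] for location, _ in nearest) / len(nearest)
    y = sum(location[1] for location, _ in nearest) / len(nearest)
    return (x, y)


query = {"ap1": -57, "ap2": -58, "ap3": -74}
estimate_k1 = locate(query, RADIO_MAP, k=1)
estimate_k2 = locate(query, RADIO_MAP, k=2)
```

The quality of such a lookup depends on how many correctly labelled fingerprints the radio map contains, which is why automatic labelling of crowdsourced fingerprints lowers the cost of deployment.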

Google, Microsoft, and other multinational corporations collected tens of thousands of floor plans for their indoor localization projects. Indoor radio map construction was also attempted by the firms but proved more difficult. As a result, existing indoor localization services were often plagued by inaccuracies. In Korea, COEX, Lotte World Tower, and other landmarks provide comparatively accurate indoor localization, but most buildings suffer from the lack of radio maps, preventing indoor localization services.

Professor Han said, “This technology allows the easy deployment of highly accurate indoor localization systems in any building in the world. In the near future, most indoor spaces will be able to provide localization services, just like outdoor spaces.” He added that smartphone-collected Wi-Fi fingerprints have gone unused and were often discarded, but should now be treated as invaluable resources, creating a new big data field of Wi-Fi fingerprints. This new indoor navigation technology is likely to be valuable to Google, Apple, and other global firms providing indoor positioning services. The technology will also help domestic firms provide positioning services.

Professor Han added that “the new global indoor localization system deployment technology will be added to KAILOS, KAIST’s indoor localization system.” KAILOS was released in 2014 as KAIST’s open platform for indoor localization service, allowing anyone in the world to add floor plans to KAILOS, and collect the building’s Wi-Fi fingerprints for a universal indoor localization service. As localization accuracy improves in indoor environments, despite the absence of GPS signals, applications such as location-based SNS, location-based IoT, and location-based O2O are expected to take off, leading to various improvements in convenience and safety. Integrated indoor-outdoor navigation services are also visible on the horizon, fusing vehicular navigation technology with indoor navigation.

Professor Han’s research was published in IEEE Transactions on Mobile Computing (TMC) in November 2016.

For more, please visit http://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=7349230 and http://ieeexplore.ieee.org/document/7805133/

2017.04.06 View 9979

Professor Kwon to Represent the Asia-Pacific Region of the IEEE RAS

Professor Dong-Soon Kwon of the Mechanical Engineering Department at KAIST has been reappointed to the Administrative Committee of the Institute of Electrical and Electronics Engineers (IEEE) Robotics and Automation Society (IEEE RAS). Beginning January 1, 2017, he will serve his second three-year term, which will end in 2019. In 2014, he was the first Korean appointed to the committee, representing the Asia-Pacific community of the IEEE Society.

Professor Kwon said, “I feel thankful but, at the same time, it is a great responsibility to serve the Asian research community within the Society. I hope I can contribute to the development of robotics engineering in the region and in Korea as well.”

Consisting of 18 elected members, the administrative committee manages the major activities of IEEE RAS, including hosting its annual flagship meeting, the International Conference on Robotics and Automation.

The IEEE RAS fosters advancement in the theory and practice of robotics and automation engineering and facilitates the exchange of scientific and technological knowledge that supports the maintenance of high professional standards among its members.

2016.12.06 View 10256

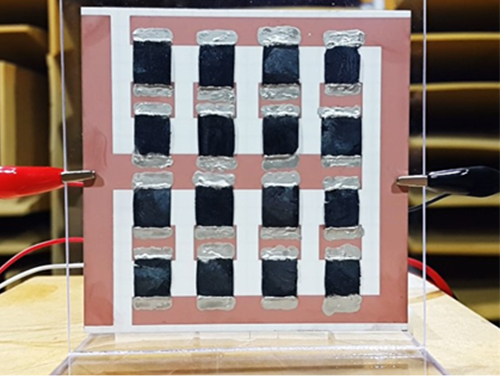

Extremely Thin and Highly Flexible Graphene-Based Thermoacoustic Speakers

A joint research team led by Professors Jung-Woo Choi and Byung Jin Cho of the School of Electrical Engineering and Professor Sang Ouk Kim of the Materials Science and Engineering Department, all on the faculty of the Korea Advanced Institute of Science and Technology (KAIST), has developed a simpler way to mass-produce ultra-thin graphene thermoacoustic speakers.

Their research results were published online on August 17, 2016 in the journal ACS Applied Materials & Interfaces. IEEE Spectrum, a monthly magazine published by the Institute of Electrical and Electronics Engineers, reported on the research on September 9, 2016, in an article titled “Graphene Enables Flat Speakers for Mobile Audio Systems.” The American Chemical Society also drew attention to the team’s work in its article dated September 7, 2016, “Bringing Graphene Speakers to the Mobile Market.”

Thermoacoustic speakers generate sound waves from temperature fluctuations by rapidly heating and cooling conducting materials. Unlike conventional voice-coil speakers, thermoacoustic speakers do not rely on vibrations to produce sound, and thus do not need bulky acoustic boxes to keep complicated mechanical parts for sound production. They also generate good quality sound in all directions, enabling them to be placed on any surface including curved ones without canceling out sounds generated from opposite sides.

Using a two-step, template-free fabrication method that involved freeze-drying a solution of graphene oxide flakes and then reducing and doping the oxidized graphene to improve its electrical properties, the research team produced an N-doped, three-dimensional (3D), reduced graphene oxide aerogel (N-rGOA) with a porous macroscopic structure that permitted easy modulation for many potential applications.

Using 3D graphene aerogels, the team succeeded in fabricating an array of loudspeakers that were able to withstand over 40 W input power and that showed excellent sound pressure level (SPL), comparable to those of previously reported 2D and 3D graphene loudspeakers.

Choong Sun Kim, the lead author of the research paper and a doctoral student in the School of Electrical Engineering at KAIST, said:

“Thermoacoustic speakers have a higher efficiency when conducting materials have a smaller heat capacity. Nanomaterials such as graphene are an ideal candidate for conductors, but they require a substrate to support their extreme thinness. The substrate’s tendency to lose heat lowers the speakers’ efficiency. Here, we developed 3D graphene aerogels without a substrate by using a simple two-step process. With graphene aerogels, we have fabricated an array of loudspeakers that demonstrated stable performance. This is a practical technology that will enable mass-production of thermoacoustic speakers including on mobile platforms.”

The research paper is entitled “Application of N-Doped Three-Dimensional Reduced Graphene Oxide Aerogel to Thin Film Loudspeaker.” (DOI: 10.1021/acsami.6b03618)

Figure 1: A Thermoacoustic Loudspeaker Consisting of an Array of 16 3D Graphene Aerogels

Figure 2: Two-step Fabrication Process of 3D Reduced Graphene Oxide Aerogel Using Freeze-Drying and Reduction/Doping

Figure 3: X-ray Photoelectron Spectroscopy Graph of the 3D Reduced Graphene Oxide Aerogel and Its Scanning Electron Microscope Image

2016.10.05 View 14205

KAIST Researchers Receive the 2016 IEEE William R. Bennett Prize

A research team led by Professors Yung Yi and Song Chong from the Electrical Engineering Department at KAIST has been awarded the 2016 William R. Bennett Prize of the Institute of Electrical and Electronics Engineers (IEEE), which is the most prestigious award in the field of communications networking. The IEEE bestows the honor annually and selects winning papers from among those published in the past three years for their quality, originality, scientific citation index, and peer reviews.

The IEEE award ceremony will take place on May 24, 2016 at the IEEE International Conference on Communications in Kuala Lumpur, Malaysia.

The team members include Dr. Kyoung-Han Lee, a KAIST graduate, who is currently a professor at Ulsan National Institute of Science and Technology (UNIST) in Korea, Dr. Joo-Hyun Lee, a postdoctoral researcher at Ohio State University in the United States, and In-Jong Rhee, a vice president of the Mobile Division at Samsung Electronics. The same KAIST team previously received the award back in 2013, making them the second recipient ever to win the IEEE William R. Bennett Prize twice.

Past winners include Professors Robert Gallager of the Massachusetts Institute of Technology (MIT), Sachin Katti of Stanford University, and Ion Stoica of the University of California at Berkeley.

The research team received the Bennett award for their work on “Mobile Data Offloading: How Much Can WiFi Deliver?” Their research paper has been cited more than 500 times since its publication in 2013. They proposed an original method to effectively offload the cellular network and maximize the Wi-Fi network usage by analyzing the pattern of individual human mobility in daily life.

2016.05.02 View 13985

K-Glass 3 Offers Users a Keyboard to Type Text

KAIST researchers upgraded their smart glasses with a low-power multicore processor to employ stereo vision and deep-learning algorithms, making the user interface and experience more intuitive and convenient.

K-Glass, smart glasses reinforced with augmented reality (AR) that were first developed by KAIST in 2014, with the second version released in 2015, is back with an even stronger model. The latest version, which KAIST researchers are calling K-Glass 3, allows users to text a message or type in key words for Internet surfing by offering a virtual keyboard for text and even one for a piano.

Currently, most wearable head-mounted displays (HMDs) suffer from a lack of rich user interfaces, short battery lives, and heavy weight. Some HMDs, such as Google Glass, use a touch panel and voice commands as an interface, but they are considered merely an extension of smartphones and are not optimized for wearable smart glasses. Recently, gaze recognition was proposed for HMDs including K-Glass 2, but gaze cannot be realized as a natural user interface (UI) and experience (UX) due to its limited interactivity and lengthy gaze-calibration time, which can be up to several minutes.

As a solution, Professor Hoi-Jun Yoo and his team from the Electrical Engineering Department recently developed K-Glass 3 with a low-power natural UI and UX processor. This processor is composed of a pre-processing core to implement stereo vision, seven deep-learning cores to accelerate real-time scene recognition within 33 milliseconds, and one rendering engine for the display.

The stereo-vision camera, located on the front of K-Glass 3, works in a manner similar to three-dimensional (3D) sensing in human vision. The camera’s two lenses, displaced horizontally from one another like the left and right eyes that produce depth perception, take pictures of the same objects or scenes and combine these two different images to extract spatial depth information, which is necessary to reconstruct 3D environments. The camera’s vision algorithm consumes 20 milliwatts on average, allowing it to operate in the Glass for more than 24 hours without interruption.
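The way two horizontally offset images yield depth can be sketched with the textbook pinhole stereo relation, depth = focal length × baseline / disparity. This is a general illustration, not the K-Glass 3 vision algorithm. The focal length, baseline, and disparity values below are hypothetical.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Depth in meters of a point seen by two parallel, horizontally offset cameras.

    disparity_px is how far the point shifts horizontally between the left and
    right images; nearer objects shift more than distant ones.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera parameters, chosen only for illustration.
FOCAL_LENGTH_PX = 500.0  # focal length expressed in pixels
BASELINE_M = 0.06        # distance between the two lenses in meters

near_depth = depth_from_disparity(FOCAL_LENGTH_PX, BASELINE_M, 60.0)  # large shift
far_depth = depth_from_disparity(FOCAL_LENGTH_PX, BASELINE_M, 10.0)   # small shift
```

Because depth is inversely proportional to disparity, a hand held close to the glasses produces a large shift between the two images, which makes it easier to separate from the background.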

The research team adopted deep-learning multi-core technology dedicated to mobile devices. This technology has greatly improved the Glass’s recognition accuracy with images and speech, while shortening the time needed to process and analyze data. In addition, the Glass’s multi-core processor is advanced enough to become idle when it detects no motion from users. Otherwise, it executes complex deep-learning algorithms with minimal power to achieve high performance.

Professor Yoo said, “We have succeeded in fabricating a low-power multi-core processor that consumes only 126 milliwatts of power with a high efficiency rate. It is essential to develop a smaller, lighter, and low-power processor if we want to incorporate the widespread use of smart glasses and wearable devices into everyday life. K-Glass 3’s more intuitive UI and convenient UX permit users to enjoy enhanced AR experiences such as a keyboard or a better, more responsive mouse.”

Along with the research team, UX Factory, a Korean UI and UX developer, participated in the K-Glass 3 project.

These research results entitled “A 126.1mW Real-Time Natural UI/UX Processor with Embedded Deep-Learning Core for Low-Power Smart Glasses” (lead author: Seong-Wook Park, a doctoral student in the Electrical Engineering Department, KAIST) were presented at the 2016 IEEE (Institute of Electrical and Electronics Engineers) International Solid-State Circuits Conference (ISSCC) that took place January 31-February 4, 2016 in San Francisco, California.

YouTube Link: https://youtu.be/If_anx5NerQ

Figure 1: K-Glass 3

K-Glass 3 is equipped with a stereo camera, dual microphones, a WiFi module, and eight batteries to offer higher recognition accuracy and richer augmented reality experiences than previous models.

Figure 2: Architecture of the Low-Power Multi-Core Processor

K-Glass 3’s processor is designed to include several cores for pre-processing, deep-learning, and graphic rendering.

Figure 3: Virtual Text and Piano Keyboard

K-Glass 3 can detect hands and recognize their movements to provide users with such augmented reality applications as a virtual text or piano keyboard.

2016.02.26 View 13461

K-Glass 3 Offers Users a Keyboard to Type Text

KAIST researchers upgraded their smart glasses with a low-power multicore processor to employ stereo vision and deep-learning algorithms, making the user interface and experience more intuitive and convenient.

K-Glass, smart glasses reinforced with augmented reality (AR) that were first developed by KAIST in 2014, with the second version released in 2015, is back with an even stronger model. The latest version, which KAIST researchers are calling K-Glass 3, allows users to text a message or type in key words for Internet surfing by offering a virtual keyboard for text and even one for a piano.

Currently, most wearable head-mounted displays (HMDs) suffer from a lack of rich user interfaces, short battery lives, and heavy weight. Some HMDs, such as Google Glass, use a touch panel and voice commands as an interface, but they are considered merely an extension of smartphones and are not optimized for wearable smart glasses. Recently, gaze recognition was proposed for HMDs including K-Glass 2, but gaze cannot be realized as a natural user interface (UI) and experience (UX) due to its limited interactivity and lengthy gaze-calibration time, which can be up to several minutes.

As a solution, Professor Hoi-Jun Yoo and his team from the Electrical Engineering Department recently developed K-Glass 3 with a low-power natural UI and UX processor. This processor is composed of a pre-processing core to implement stereo vision, seven deep-learning cores to accelerate real-time scene recognition within 33 milliseconds, and one rendering engine for the display.

The stereo-vision camera, located on the front of K-Glass 3, works in a manner similar to three dimension (3D) sensing in human vision. The camera’s two lenses, displayed horizontally from one another just like depth perception produced by left and right eyes, take pictures of the same objects or scenes and combine these two different images to extract spatial depth information, which is necessary to reconstruct 3D environments. The camera’s vision algorithm has an energy efficiency of 20 milliwatts on average, allowing it to operate in the Glass more than 24 hours without interruption.

The research team adopted deep-learning-multi core technology dedicated for mobile devices. This technology has greatly improved the Glass’s recognition accuracy with images and speech, while shortening the time needed to process and analyze data. In addition, the Glass’s multi-core processor is advanced enough to become idle when it detects no motion from users. Instead, it executes complex deep-learning algorithms with a minimal power to achieve high performance.

Professor Yoo said, “We have succeeded in fabricating a low-power multi-core processer that consumes only 126 milliwatts of power with a high efficiency rate. It is essential to develop a smaller, lighter, and low-power processor if we want to incorporate the widespread use of smart glasses and wearable devices into everyday life. K-Glass 3’s more intuitive UI and convenient UX permit users to enjoy enhanced AR experiences such as a keyboard or a better, more responsive mouse.”

Along with the research team, UX Factory, a Korean UI and UX developer, participated in the K-Glass 3 project.

The research results, entitled “A 126.1mW Real-Time Natural UI/UX Processor with Embedded Deep-Learning Core for Low-Power Smart Glasses” (lead author: Seong-Wook Park, a doctoral student in the Electrical Engineering Department, KAIST), were presented at the 2016 IEEE (Institute of Electrical and Electronics Engineers) International Solid-State Circuits Conference (ISSCC), which took place January 31-February 4, 2016 in San Francisco, California.

YouTube Link: https://youtu.be/If_anx5NerQ

Figure 1: K-Glass 3

K-Glass 3 is equipped with a stereo camera, dual microphones, a WiFi module, and eight batteries to offer higher recognition accuracy and more enhanced augmented reality experiences than previous models.

Figure 2: Architecture of the Low-Power Multi-Core Processor

K-Glass 3’s processor is designed to include several cores for pre-processing, deep-learning, and graphic rendering.

Figure 3: Virtual Text and Piano Keyboard

K-Glass 3 can detect hands and recognize their movements to provide users with such augmented reality applications as a virtual text or piano keyboard.

2016.02.26 View 13461 -

Professor Keon-Jae Lee Lectures at IEDM and ISSCC Forums

Professor Keon-Jae Lee of KAIST’s Materials Science and Engineering Department delivered a speech at the 2015 Institute of Electrical and Electronics Engineers (IEEE) International Electron Devices Meeting (IEDM) held on December 7-9, 2015 in Washington, D.C.

He will also deliver a speech at the 2016 International Solid-State Circuits Conference (ISSCC), scheduled for January 31-February 4, 2016 in San Francisco, California.

Both professional gatherings are considered the world’s most renowned forums in electronic devices and semiconductor technology. It is rare for a Korean researcher to be invited to speak at these global conferences.

Professor Lee was recognized for his research on flexible NAND chips. The Korea Times, an English-language daily newspaper in Korea, reported on his participation in the forums and his recent work. An excerpt of the article follows:

“KAIST Professor to Lecture at Renowned Tech Forums”

By Lee Min-hyung, The Korea Times, November 26, 2015

Recently he has focused on delivering technologies for producing flexible materials that can be applied to everyday life. The flexible NAND flash memory chips are expected to be widely used for developing flexible handsets. His latest research also includes flexible light-emitting diodes (LED) for implantable biomedical applications.

Lee is currently running a special laboratory focused on developing new flexible nano-materials. The research group is working to develop what it calls “self-powered flexible electronic systems” using nanomaterials and electronic technology.

Lee’s achievement with flexible NAND chips was published in the October edition of Nano Letters, the renowned U.S.-based scientific journal.

He said that flexible memory chips will be used to develop wearable computers that can be installed anywhere.

2015.11.26 View 11986 -

KAIST's Research Team Receives the Best Paper Award from the IEEE Transactions on Power Electronics

A research team led by Professor Chun T. Rim of the Department of Nuclear and Quantum Engineering at the Korea Advanced Institute of Science and Technology (KAIST) has received the First Prize Papers Award from the IEEE (Institute of Electrical and Electronics Engineers) Transactions on Power Electronics (TPEL), a peer-reviewed journal that covers fundamental technologies used in the control and conversion of electric power.

A total of three research papers received this award in 2015.

Each year, TPEL’s editors select the three best papers among those published in the journal during the preceding calendar year. In 2014, TPEL published 579 papers. Professor Rim’s paper was selected from among them as one of the three to receive the First Prize Papers Award.

Entitled “Generalized Active EMF (electromagnetic field) Cancel Methods for Wireless Electric Vehicles (http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=6684288&tag=1),” the paper proposed, for the first time in the world, three generalized design methods for cancelling the total EMF generated from wireless electric vehicles. This technology, the researchers said, can be applied to any wireless power transfer system.

The award ceremony will be held at the upcoming 2015 IEEE Energy Conversion Congress and Expo, which takes place in September in Montreal, Canada.

2015.08.27 View 11063
