KAIST Professor Jiyun Lee becomes the first Korean to receive the Thurlow Award from the American Institute of Navigation

< Distinguished Professor Jiyun Lee from the KAIST Department of Aerospace Engineering >

KAIST (President Kwang-Hyung Lee) announced on January 27th that Distinguished Professor Jiyun Lee from the KAIST Department of Aerospace Engineering had won the Colonel Thomas L. Thurlow Award from the American Institute of Navigation (ION) for her achievements in the field of satellite navigation.

The American Institute of Navigation (ION) announced Distinguished Professor Lee as the winner of the Thurlow Award at its annual awards ceremony held in conjunction with its international conference in Long Beach, California on January 25th. This is the first time a person of Korean descent has received the award.

The Thurlow Award was established in 1945 to honor Colonel Thomas L. Thurlow, who made significant contributions to the development of navigation equipment and the training of navigators. The award recognizes an individual who has made an outstanding contribution to the development of navigation, and it is given to one person each year. Past recipients include MIT professor Charles Stark Draper, who is well known as the father of inertial navigation and who developed the guidance computer for the Apollo moon landing project.

Distinguished Professor Jiyun Lee was recognized for her significant contributions to technological advancements that ensure the safety of satellite-based navigation systems for aviation. In particular, she was recognized as a world authority in the field of navigation integrity architecture design, which is essential for ensuring the stability of intelligent transportation systems and autonomous unmanned systems. Distinguished Professor Lee made a groundbreaking contribution to protecting satellite-based navigation systems from ionospheric disturbances, including those caused by sudden changes in external factors such as the solar and space environment.

She has achieved numerous scientific discoveries in the field of ionospheric research, while developing new ionospheric threat modeling methods, ionospheric anomaly monitoring and mitigation techniques, and integrity and availability assessment techniques for next-generation augmented navigation systems. She also contributed to the international standardization of technology through the International Civil Aviation Organization (ICAO).

Distinguished Professor Lee and her research group have pioneered innovative navigation technologies for the safe and autonomous operation of unmanned aerial vehicles (UAVs) and urban air mobility (UAM). She was the first to propose and develop a low-cost global navigation satellite system (GNSS) augmentation architecture for UAVs with a near-field network operation concept that ensures high integrity, and a networked ground station-based augmented navigation system for UAM. She also contributed to integrity design techniques, including failure monitoring and integrity risk assessment for multi-sensor integrated navigation systems.

< Professor Jiyun Lee upon receiving the Thurlow Award >

Bradford Parkinson, professor emeritus at Stanford University, winner of the 1986 Thurlow Award, and known as the father of GPS, congratulated Distinguished Professor Lee upon hearing that she was receiving the award. He commented that her research has addressed many important topics in the field of navigation and that her solutions are innovative and highly regarded.

Distinguished Professor Lee said, “I am very honored and delighted to receive this award with its deep history and tradition in the field of navigation.” She added, “I will strive to help develop the future mobility industry by securing safe and sustainable navigation technology.”

2024.01.26

North Korea and Beyond: AI-Powered Satellite Analysis Reveals the Unseen Economic Landscape of Underdeveloped Nations

- A joint research team in computer science, economics, and geography has developed an artificial intelligence (AI) technology to measure grid-level economic development within six-square-kilometer regions.

- This AI technology is applicable in regions with limited statistical data (e.g., North Korea), supporting international efforts to propose policies for economic growth and poverty reduction in underdeveloped countries.

- The research team plans to make this technology freely available for use to contribute to the United Nations' Sustainable Development Goals (SDGs).

The United Nations reports that more than 700 million people are in extreme poverty, earning less than two dollars a day. However, an accurate assessment of poverty remains a global challenge. For example, 53 countries have not conducted agricultural surveys in the past 15 years, and 17 countries have not published a population census. To fill this data gap, new technologies are being explored to estimate poverty using alternative sources such as street views, aerial photos, and satellite images.

The paper published in Nature Communications demonstrates how artificial intelligence (AI) can help analyze economic conditions from daytime satellite imagery. This new technology can even be applied to the least developed countries, such as North Korea, that lack the reliable statistical data normally needed for machine learning training.

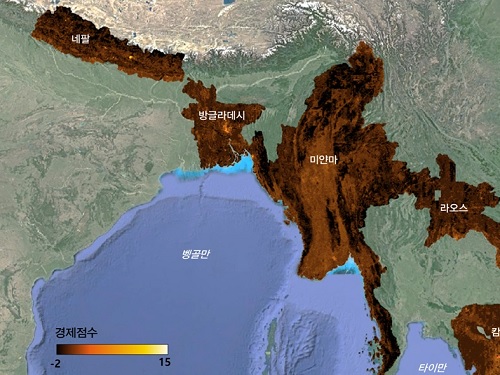

The researchers used publicly available Sentinel-2 satellite images from the European Space Agency (ESA). They split these images into small six-square-kilometer grids. At this zoom level, visual information such as buildings, roads, and greenery can be used to quantify economic indicators. As a result, the team obtained the first ever fine-grained economic map of regions like North Korea. The same algorithm was also applied to five other underdeveloped countries in Asia: Nepal, Laos, Myanmar, Bangladesh, and Cambodia (see Image 1).
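The gridding step can be illustrated with a minimal sketch. It is not the team's code: the function names are hypothetical, the tiles are assumed to be square, and the 10 m pixel size is an assumption based on the resolution of Sentinel-2's visible bands. Under those assumptions, a six-square-kilometer tile is roughly 245 pixels on a side.

```python
import numpy as np

# Assumptions (not stated in the article): square tiles and 10 m pixels.
PIXEL_SIZE_M = 10.0
TILE_AREA_KM2 = 6.0


def tile_side_pixels(area_km2=TILE_AREA_KM2, pixel_size_m=PIXEL_SIZE_M):
    """Side length, in pixels, of a square tile covering area_km2."""
    side_m = (area_km2 ** 0.5) * 1000.0
    return int(round(side_m / pixel_size_m))


def split_into_tiles(image, tile_px):
    """Cut an H x W x C image into non-overlapping square tiles.

    Returns a dict mapping (row_index, col_index) to the tile array.
    Partial tiles at the right and bottom edges are dropped.
    """
    height, width = image.shape[:2]
    tiles = {}
    for top in range(0, height - tile_px + 1, tile_px):
        for left in range(0, width - tile_px + 1, tile_px):
            key = (top // tile_px, left // tile_px)
            tiles[key] = image[top:top + tile_px, left:left + tile_px]
    return tiles


if __name__ == "__main__":
    # A blank placeholder scene stands in for a real satellite image.
    scene = np.zeros((500, 735, 3), dtype=np.uint8)
    grid = split_into_tiles(scene, tile_side_pixels())
    print(len(grid), "tiles of side", tile_side_pixels(), "pixels")
```

Each tile can then be scored independently, which is what makes a grid-level economic map possible.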

The key feature of their research model is the "human-machine collaborative approach," which lets researchers combine human input with AI predictions for areas with scarce data. In this research, ten human experts compared satellite images and judged the economic conditions in each area; the AI then learned from these human judgments and assigned an economic score to each image. The results showed that the human-AI collaborative approach outperformed machine-only learning algorithms.
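To give a rough sense of how pairwise human judgments can be turned into per-image scores, here is a small sketch that fits a Bradley-Terry-style model by gradient ascent. This is a stand-in technique chosen for illustration, not the method described in the paper; the function name, learning rate, and example comparisons are all hypothetical.

```python
import numpy as np


def fit_pairwise_scores(n_items, comparisons, lr=0.1, epochs=500):
    """Estimate one score per item from (winner, loser) index pairs.

    Uses a Bradley-Terry-style likelihood, where the probability that
    item a is judged more developed than item b is
    sigmoid(score[a] - score[b]).
    """
    scores = np.zeros(n_items)
    winners = np.array([w for w, _ in comparisons])
    losers = np.array([l for _, l in comparisons])
    for _ in range(epochs):
        diff = scores[winners] - scores[losers]
        p_win = 1.0 / (1.0 + np.exp(-diff))
        # Gradient of the log-likelihood with respect to each score.
        grad = np.zeros(n_items)
        np.add.at(grad, winners, 1.0 - p_win)
        np.add.at(grad, losers, -(1.0 - p_win))
        scores += lr * grad
        scores -= scores.mean()  # scores are only defined up to a shift
    return scores


if __name__ == "__main__":
    # Hypothetical judgments: tile 2 looks more developed than tiles 1
    # and 0, and tile 1 looks more developed than tile 0.
    judgments = [(2, 1), (1, 0), (2, 0)]
    print(fit_pairwise_scores(3, judgments))
```

In a full system, a vision model would be trained so that its predicted scores respect such orderings, which lets it score tiles that no expert has looked at.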

< Image 1. Nightlight satellite images of North Korea (Top-left: Background photo provided by NASA's Earth Observatory). South Korea appears brightly lit compared to North Korea, which is mostly dark except for Pyongyang. In contrast, the model developed by the research team uses daytime satellite imagery to produce more detailed economic predictions for North Korea (top-right) and five Asian countries (Bottom: Background photo from Google Earth). >

The research was led by an interdisciplinary team of computer scientists, economists, and a geographer from KAIST & IBS (Donghyun Ahn, Meeyoung Cha, Jihee Kim), Sogang University (Hyunjoo Yang), HKUST (Sangyoon Park), and NUS (Jeasurk Yang). Dr Charles Axelsson, Associate Editor at Nature Communications, handled this paper during the peer review process at the journal.

The research team found that the scores showed a strong correlation with traditional socio-economic metrics such as population density, employment, and number of businesses. This demonstrates the wide applicability and scalability of the approach, particularly in data-scarce countries. Furthermore, the model's strength lies in its ability to detect annual changes in economic conditions at a more detailed geospatial level without using any survey data (see Image 2).
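A check of this kind can be sketched as a rank correlation between model scores and a ground-truth indicator. The article does not say which correlation measure the team used, so Spearman's rho is an assumption here, and the numbers below are made-up placeholders rather than results from the study.

```python
import numpy as np


def spearman_rho(x, y):
    """Spearman rank correlation for 1-D inputs without tied values.

    Ranks are obtained with a double argsort, then compared with the
    ordinary Pearson correlation.
    """
    rank_x = np.argsort(np.argsort(np.asarray(x)))
    rank_y = np.argsort(np.argsort(np.asarray(y)))
    return float(np.corrcoef(rank_x, rank_y)[0, 1])


if __name__ == "__main__":
    # Placeholder values for four hypothetical grid cells.
    model_scores = [0.1, 0.4, 0.9, 0.3]
    population_density = [10, 80, 500, 40]
    print(spearman_rho(model_scores, population_density))
```

A rank-based measure is a natural fit when the scores are only meaningful as an ordering, but other measures would work equally well for this kind of validation.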

< Image 2. Differences in satellite imagery and economic scores in North Korea between 2016 and 2019. Significant development was found in the Wonsan Kalma area (top), one of the tourist development zones, but no changes were observed in the Wiwon Industrial Development Zone (bottom). (Background photo: Sentinel-2 satellite imagery provided by the European Space Agency (ESA)). >

This model would be especially valuable for rapidly monitoring the progress of Sustainable Development Goals such as reducing poverty and promoting more equitable and sustainable growth on an international scale. The model can also be adapted to measure various social and environmental indicators. For example, it can be trained to identify regions with high vulnerability to climate change and disasters to provide timely guidance on disaster relief efforts.

As an example, the researchers explored how North Korea changed before and after the United Nations sanctions against the country. By applying the model to satellite images of North Korea both in 2016 and in 2019, the researchers discovered three key trends in the country's economic development between 2016 and 2019. First, economic growth in North Korea became more concentrated in Pyongyang and major cities, exacerbating the urban-rural divide. Second, satellite imagery revealed significant changes in areas designated for tourism and economic development, such as new building construction and other meaningful alterations. Third, traditional industrial and export development zones showed relatively minor changes.

Meeyoung Cha, a data scientist on the team, explained, "This is an important interdisciplinary effort to address global challenges like poverty. We plan to apply our AI algorithm to other international issues, such as monitoring carbon emissions, disaster damage detection, and the impact of climate change."

An economist on the research team, Jihee Kim, commented that this approach would enable detailed examinations of economic conditions in the developing world at a low cost, reducing data disparities between developed and developing nations. She further emphasized that this is most essential because many public policies require economic measurements to achieve their goals, whether they are for growth, equality, or sustainability.

The research team has made the source code publicly available via GitHub and plans to continue improving the technology, applying it to new satellite images updated annually. The results of this study, with Ph.D. candidate Donghyun Ahn at KAIST and Ph.D. candidate Jeasurk Yang at NUS as joint first authors, were published in Nature Communications under the title "A human-machine collaborative approach measures economic development using satellite imagery."

< Photos of the main authors. 1. Donghyun Ahn, PhD candidate at KAIST School of Computing 2. Jeasurk Yang, PhD candidate at the Department of Geography of National University of Singapore 3. Meeyoung Cha, Professor of KAIST School of Computing and CI at IBS 4. Jihee Kim, Professor of KAIST School of Business and Technology Management 5. Sangyoon Park, Professor of the Division of Social Science at Hong Kong University of Science and Technology 6. Hyunjoo Yang, Professor of the Department of Economics at Sogang University >

2023.12.07

A KAIST research team identifies a cause of mental diseases induced by childhood abuse

Childhood neglect and/or abuse can induce extreme stress that significantly changes neural networks and functions during growth. This can lead to mental illnesses, including depression and schizophrenia, but the exact mechanism and a means to control it had yet to be discovered.

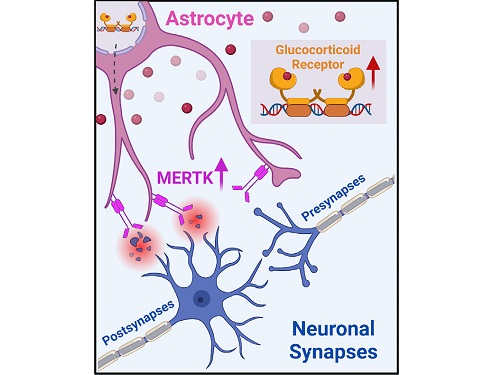

On August 1, a KAIST research team led by Professor Won-Suk Chung from the Department of Biological Sciences announced the identification of excessive synapse removal mediated by astrocytes as a cause of mental diseases induced by childhood abuse trauma. Their research was published in Immunity, a top international journal in the field of immunology.

The research team discovered that the excessive astrocyte-mediated removal of excitatory synapses in the brain in response to stress hormones is a cause of mental diseases induced by childhood neglect and abuse. Clinical data have previously shown that high levels of stress can lead to various mental diseases, but the exact mechanism has been unknown. The results of this research are therefore expected to be widely applied to the prevention and treatment of such diseases.

The research team screened FDA-approved clinical compounds to uncover the mechanism that regulates the phagocytic role of astrocytes, in which they capture external substances and eliminate them. As a result, the team found that synthetic glucocorticoids, namely stress hormones, enhanced astrocyte-mediated phagocytosis to an abnormal level. Glucocorticoids play essential roles in processes that maintain life, such as carbohydrate metabolism and anti-inflammation, but are also secreted in response to external stimuli such as stress, allowing the body to respond appropriately. However, excessive and long-term exposure to glucocorticoids caused by chronic stress can lead to various mental diseases including depression, cognitive disorders, and anxiety.

< Figure 1. Results of screening for compounds that increase astrocyte phagocytosis. (A) Screening of FDA-approved clinical compounds revealed that synthetic glucocorticoid (stress hormone) increases the phagocytosis of astrocytes. (B-C) When treated with stress hormones, the phagocytosis of astrocytes is greatly increased, but this phenomenon is strongly suppressed by the GR antagonist (Mifepristone). CORT: corticosterone (stress hormone), Eplerenone: mineralocorticoid receptor (MR) antagonist, Mifepristone: glucocorticoid receptor (GR) antagonist >

To understand the changes in astrocyte functions caused by childhood stress, the research team used mouse models with early social deprivation, and discovered that stress hormones bind to the glucocorticoid receptors (GRs) of astrocytes. This significantly increased the expression of Mer tyrosine kinase (MERTK), which plays an essential role in astrocyte phagocytosis. Surprisingly, out of the various neurons in the cerebral cortex, astrocytes would eliminate only the excitatory synapses of specific neurons. The team found that this builds abnormal neural networks, which can lead to complex behavioral abnormalities such as social deficiencies and depression in adulthood.

The team also observed that microglia, which also play an important role in cerebral immunity, did not contribute to synapse removal in the mouse models with early social deprivation. This confirms that the response to stress hormones during childhood is specifically astrocyte-mediated.

To find out whether these results are also applicable in humans, the research team used a brain organoid grown from human induced pluripotent stem cells to observe human responses to stress hormones. The team observed that the stress hormones induced astrocyte GR and phagocytic activation in the human brain organoid as well, and confirmed that the astrocytes subsequently eliminated excessive amounts of excitatory synapses. By showing that mice and humans share the same synapse control mechanism in response to stress, the team suggested that this discovery is applicable to mental disorders in humans.

< Figure 2. A schematic diagram of the study published in Immunity. Excessive stress hormone secretion in childhood increases the expression of the MERTK phagocytic receptor through the glucocorticoid receptor (GR) of astrocytes, resulting in excessive elimination of excitatory synapses. Excessive synaptic elimination by astrocytes during brain development causes permanent damage to brain circuits, resulting in abnormal neural activity in the adult brain and psychiatric behaviors such as depression and anti-social tendencies. >

Prof. Won-Suk Chung said, “Until now, we did not know the exact mechanism for how childhood stress caused brain diseases. This research was the first to show that the excessive phagocytosis of astrocytes could be an important cause of such diseases.” He added, “In the future, controlling the immune response of astrocytes will be used as a fundamental target for understanding and treating brain diseases.”

This research, written by co-first authors Youkyeong Byun (Ph.D. candidate) and Nam-Shik Kim (post-doctoral associate) from the KAIST Department of Biological Sciences, was published in the internationally renowned journal Immunity, a sister journal of Cell and one of the top journals in the field of immunology, on July 31 under the title "Stress induces behavioral abnormalities by increasing expression of phagocytic receptor MERTK in astrocytes to promote synapse phagocytosis."

This work was supported by a National Research Foundation of Korea grant, the Korea Health Industry Development Institute (KHIDI), and the Korea Dementia Research Center (KDRC).

2023.08.04

A KAIST research team identifies a cause of mental diseases induced by childhood abuse

Childhood neglect and/or abuse can induce extreme stress that significantly changes neural networks and functions during growth. This can lead to mental illnesses, including depression and schizophrenia, but the exact mechanism and means to control it were yet to be discovered.

On August 1, a KAIST research team led by Professor Won-Suk Chung from the Department of Biological Sciences announced the identification of excessive synapse removal mediated by astrocytes as the cause of mental diseases induced by childhood abuse trauma. Their research was published in Immunity, a top international journal in the field of immunology.

The research team discovered that the excessive astrocyte-mediated removal of excitatory synapses in the brain in response to stress hormones is a cause of mental diseases induced by childhood neglect and abuse. Clinical data have previously shown that high levels of stress can lead to various mental diseases, but the exact mechanism has been unknown. The results of this research therefore are expected to be widely applied to the prevention and treatment of such diseases.

The research team clinically screened an FDA-approved drug to uncover the mechanism that regulates the phagocytotic role of astrocytes, in which they capture external substances and eliminate them. As a result, the team found that synthetic glucocorticoids, namely stress hormones, enhanced astrocyte-mediated phagocytosis to an abnormal level. Glucocorticoids play essential roles in processes that maintain life, such as carbohydrate metabolism and anti-inflammation, but are also secreted in response to external stimuli such as stress, allowing the body to respond appropriately. However, excessive and long-term exposure to glucocorticoids caused by chronic stress can lead to various mental diseases including depression, cognitive disorders, and anxiety.

< Figure 1. Results of screening for compounds that increase astrocyte phagocytosis

(A) Discovered that synthetic glucocorticoid (stress hormone) increases the phagocytosis of astrocytes through screening of FDA-approved clinical compounds. (B-C) When treated with stress hormones, the phagocytosis of astrocytes is greatly increased, but this phenomenon is strongly suppressed by the GR antagonist (Mifepristone). CORT: corticosterone (stress hormone), Eplerenone: mineralocorticoid receptor (MR) antagonist, Mifepristone: glucocorticoid receptor (GR) antagonist >

To understand the changes in astrocyte functions caused by childhood stress, the research team used mice models with early social deprivation, and discovered that stress hormones bind to the glucocorticoid receptors (GRs) of astrocytes. This significantly increased the expression of Mer tyrosine kinase (MERK), which plays an essential role in astrocyte phagocytosis. Surprisingly, out of the various neurons in the cerebral cortex, astrocytes would eliminate only the excitatory synapses of specific neurons. The team found that this builds abnormal neural networks, which can lead to complex behavioral abnormalities such as social deficiencies and depression in adulthood.

The team also observed that microglia, which also play an important role in cerebral immunity, did not contribute to synapse removal in the mice models with early social deprivation. This confirms that the response to stress hormones during childhood is specifically astrocyte-mediated.

To find out whether these results are also applicable in humans, the research team used a brain organoid grown from human-induced pluripotent stem cells to observe human responses to stress hormones. The team observed that the stress hormones induced astrocyte GRs and phagocyte activation in the human brain organoid as well, and confirmed that the astrocytes subsequently eliminated excessive amounts of excitatory synapses. By showing that mice and humans both showed the same synapse control mechanism in response to stress, the team suggested that this discovery is applicable to mental disorders in humans.

< Figure 2. A schematic diagram of the study published in Immunity. Excessive stress hormone secretion in childhood increases the expression of the MERTK phagocytic receptor through the glucocorticoid receptor (GR) of astrocytes, resulting in excessive elimination of excitatory synapses. Excessive synaptic elimination by astrocytes during brain development causes permanent damage to brain circuits, resulting in abnormal neural activity in the adult brain and psychiatric behaviors such as depression and anti-social tendencies. >

Prof. Won-Suk Chung said, “Until now, we did not know the exact mechanism for how childhood stress caused brain diseases. This research was the first to show that the excessive phagocytosis of astrocytes could be an important cause of such diseases.” He added, “In the future, controlling the immune response of astrocytes will be used as a fundamental target for understanding and treating brain diseases.”

This research, written by co-first authors Youkyeong Byun (Ph.D. candidate) and Nam-Shik Kim (post-doctoral associate) from the KAIST Department of Biological Sciences, was published in the internationally renowned journal Immunity, a sister journal of Cell and one of the best journals in the field of immunology, on July 31 under the title "Stress induces behavioral abnormalities by increasing expression of phagocytic receptor MERTK in astrocytes to promote synapse phagocytosis."

This work was supported by a National Research Foundation of Korea grant, the Korea Health Industry Development Institute (KHIDI), and the Korea Dementia Research Center (KDRC).

2023.08.04 View 8176 -

A KAIST research team develops a high-performance modular SSD system semiconductor

In recent years, the demand for large amounts of data to train AI models has risen, and data size has become increasingly important. Accordingly, demand has also grown for solid state drives (SSDs, storage devices that use a semiconductor memory unit), which are core storage devices for data centers and cloud services. However, the internal components of higher-performing SSDs have become more tightly coupled, and this tightly-coupled structure prevents SSDs from reaching their maximum performance.

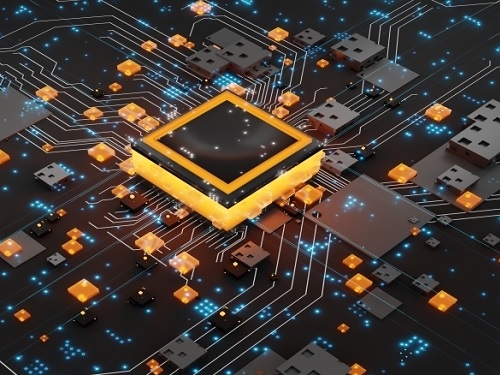

On June 15, a KAIST research team led by Professor Dongjun Kim (John Kim) from the School of Electrical Engineering (EE) announced the development of the first SSD system semiconductor structure that can increase the reading/writing performance of next generation SSDs and extend their lifespan through high-performance modular SSD systems.

Professor Kim’s team identified the limitations of the tightly-coupled structures in existing SSD designs and proposed a de-coupled structure that can maximize SSD performance by configuring an internal on-chip network specialized for flash memory. This technique utilizes on-chip network technology, which can freely send packet-based data within the chip and is often used to design non-memory system semiconductors like CPUs and GPUs. Through this, the team developed a ‘modular SSD’, which shows reduced interdependence between front-end and back-end designs, and allows their independent design and assembly.

*on-chip network: a packet-based connection structure for the internal components of system semiconductors like CPUs/GPUs. On-chip networks are one of the most critical design components for high-performing system semiconductors, and their importance grows with the size of the semiconductor chip.

Professor Kim’s team refers to the components nearer to the CPU as the front-end and the parts closer to the flash memory as the back-end. They constructed a new on-chip network specific to flash memory to allow data transmission between the back-end’s flash controllers, proposing a de-coupled structure that can minimize performance drops.

The SSD can accelerate some functions of the flash translation layer, a critical element for driving the SSD, allowing the flash memory to actively overcome its limitations. Another advantage of the de-coupled, modular structure is that the flash translation layer is not limited to the characteristics of specific flash memories. Instead, the front-end and back-end designs can be carried out independently. With this design, the team achieved response times 21 times faster than those of existing systems and, by also applying the DDS defect detection technique, extended SSD lifespan by 23%.

< Figure 1. Schematic diagram of the structure of a high-performance modular SSD system developed by Professor Dongjun Kim's team >

This research, conducted by first author and Ph.D. candidate Jiho Kim from the KAIST School of EE and co-author Professor Myoungsoo Jung, was presented on the 19th of June at the 50th IEEE/ACM International Symposium on Computer Architecture, the most prestigious academic conference in the field of computer architecture, held in Orlando, Florida. (Paper Title: Decoupled SSD: Rethinking SSD Architecture through Network-based Flash Controllers)

< Figure 2. Conceptual diagram of hardware acceleration through high-performance modular SSD system >

Professor Dongjun Kim, who led the research, said, “This research is significant in that it identified the structural limitations of existing SSDs, and showed that on-chip network technology used in non-memory system semiconductors like CPUs can drive the hardware to actively carry out the necessary actions. We expect this to contribute greatly to the next-generation high-performance SSD market.” He added, “The de-coupled architecture is a structure that can actively operate to extend devices’ lifespan. In other words, its significance is not limited to the level of performance and can, therefore, be used for various applications.”

KAIST commented that this research is also meaningful in that the results came from a collaborative study between two world-renowned researchers: Professor Myoungsoo Jung, recognized in the field of computer system storage devices, and Professor Dongjun Kim, a leading researcher in computer architecture and interconnection networks.

This research was funded by the National Research Foundation of Korea, Samsung Electronics, the IC Design Education Center, and Next Generation Semiconductor Technology and Development granted by the Institute of Information & Communications Technology, Planning & Evaluation.

2023.06.23 View 8094

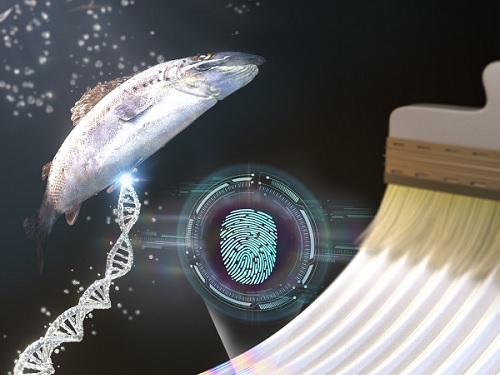

KAIST research team develops a forgery prevention technique using salmon DNA

The authenticity scandal that plagued the artwork “Beautiful Woman” by Kyung-ja Chun for 30 years shows how concerns about replicas can become a burden to artists, as most of them are not experts in the field of anti-counterfeiting. To solve this problem, artists need friendly physical unclonable functions (PUFs) that are based on optical rather than electronic techniques and can be applied directly onto artwork through brushstrokes.

On May 23, a KAIST research team led by Professor Dong Ki Yoon in the Department of Chemistry revealed the development of a proprietary technology for security and certification using random patterns that occur during the self-assembly of soft materials.

With the development of the Internet of Things in recent years, various electronic devices and services can now be connected to the internet and carry out new innovative functions. However, counterfeiting technologies that infringe on individuals’ privacy have also entered the marketplace.

The technique developed by the research team involves random and spontaneous patterns that naturally occur during the self-assembly of two different types of soft materials, which can be used in the same way as human fingerprints for non-replicable security. This is very significant in that even non-experts in the field of security can construct anti-counterfeiting systems through simple actions like drawing a picture.

The team developed two unique methods. The first method uses liquid crystals. When liquid crystals become trapped in patterned substrates, they induce symmetry breaking in the structure and create a maze-like topology (Figure 1). The research team defined the pathways open to the right as 0 (blue) and those open to the left as 1 (red), and confirmed that, through object recognition using machine learning, the structure could be converted into a digital code composed of 0’s and 1’s that can serve as a type of fingerprint. This groundbreaking technique can be utilized by non-experts, as it does not require the complex semiconductor patterns required by existing technology, and the patterns can be observed at the resolution of a smartphone camera. In particular, this technique can reconstruct information more easily than conventional methods that use semiconductor chips.
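The right-is-0, left-is-1 rule described above can be illustrated with a minimal Python sketch. It assumes the machine-learning recognition step has already labeled each pathway as `"right"` or `"left"`. The function names, the label strings, and the Hamming-distance comparison with a 10% threshold are illustrative assumptions and are not taken from the published studies.

```python
from typing import Sequence


def encode_pathways(openings: Sequence[str]) -> str:
    """Turn pathway labels into a bit string: right -> 0, left -> 1."""
    bit_for = {"right": "0", "left": "1"}
    return "".join(bit_for[label] for label in openings)


def hamming_fraction(code_a: str, code_b: str) -> float:
    """Fraction of positions at which two equal-length codes differ."""
    if len(code_a) != len(code_b):
        raise ValueError("codes must have the same length")
    return sum(a != b for a, b in zip(code_a, code_b)) / len(code_a)


def same_tag(code_a: str, code_b: str, threshold: float = 0.1) -> bool:
    """Treat two readings as the same tag if few bits differ (assumed threshold)."""
    return hamming_fraction(code_a, code_b) <= threshold


if __name__ == "__main__":
    enrolled = encode_pathways(["right", "left", "left", "right"])
    print(enrolled)
    print(same_tag(enrolled, "0110"))
```

Allowing a small tolerance rather than requiring an exact match is a common design choice for PUF readouts, since repeated imaging of the same pattern may flip a few bits.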

< Figure 1. Security technology using the maze made up of magnetically-assembled structures formed on a substrate patterned with liquid crystal materials. >

The second method uses DNA extracted from salmon. The DNA can be dissolved in water and applied with a brush to induce buckling instability, which forms random patterns similar to a zebra’s stripes. Here, the patterns create ridge endings and bifurcations, which are characteristic features of fingerprints, and these can also be digitized into 0’s and 1’s through machine learning. The research team applied conventional fingerprint recognition technology to this patterning technique and demonstrated its use as an artificial fingerprint. This method can be easily carried out using a brush, and the solution can be mixed into various colors and used as a new security ink.

< Figure 2. Technology to produce security ink using DNA polymers extracted from salmon >

This new security technology developed by the research team uses only simple organic materials and requires basic manufacturing processes, making it possible to enhance security at a low cost. In addition, users can produce patterns in the shapes and sizes they want, and even if the patterns are made in the same way, their randomness makes each individual pattern different. This provides high levels of security and gives the technique enhanced marketability.

Professor Dong Ki Yoon said, “These studies have taken the randomness that naturally occurs during self-assembly to create non-replicable patterns that can act like human fingerprints.” He added, “These ideas will be the cornerstone of technology that applies the many kinds of randomness that exist in nature to security systems.”

The two studies were published in the journal Advanced Materials under the titles “Planar Spin Glass with Topologically-Protected Mazes in the Liquid Crystal Targeting for Reconfigurable Micro Security Media” (1) and “Paintable Physical Unclonable Function Using DNA” (2) on May 6 and 5, respectively.

Author Information: (1) Geonhyeong Park, Yun-Seok Choi, S. Joon Kwon*, and Dong Ki Yoon* / (2) Soon Mo Park†, Geonhyeong Park†, Dong Ki Yoon*: †co-first authors, *corresponding author

This research was funded by the Center for Multiscale Chiral Architectures and supported by the Ministry of Science and ICT-Korea Research Foundation, BRIDGE Convergent Research and Development Program, the Running Together Project, and the Samsung Future Technology Development Program.

< Figure 1-1. A scene from the schematic animation of the process of Blues (0) and Reds (1) forming the PUF by exploring the maze. From "Planar Spin Glass with Topologically-Protected Mazes in the Liquid Crystal Targeting for Reconfigurable Micro Security Media" by Geonhyeong Park, Yun-Seok Choi, S. Joon Kwon, Dong Ki Yoon. https://doi.org/10.1002/adma.202303077 >

< Figure 2-1. A schematic diagram of the formation of digital fingerprints formed using the DNA ink. From "Paintable Physical Unclonable Function Using DNA" by Soon Mo Park, Geonhyeong Park, Dong Ki Yoon. https://doi.org/10.1002/adma.202302135 >

2023.06.08 View 8498

Seanie Lee of KAIST Kim Jaechul Graduate School of AI named a 2023 Apple Scholar in AI/ML

Seanie Lee, a Ph.D. candidate at the Kim Jaechul Graduate School of AI, has been selected as one of the Apple Scholars in AI/ML PhD fellowship program recipients for 2023. Lee, advised by Sung Ju Hwang and Juho Lee, is a rising star in AI.

< Seanie Lee of KAIST Kim Jaechul Graduate School of AI >

The Apple Scholars in AI/ML PhD fellowship program, launched in 2020, aims to discover and support young researchers with a promising future in computer science. Each year, a handful of graduate students in related fields worldwide are selected for the program. For the following two years, the selected students are provided with financial support for research, international conference attendance, internship opportunities, and mentorship by an Apple engineer.

This year, 22 PhD students were selected from leading universities worldwide, including Johns Hopkins University, MIT, Stanford University, Imperial College London, Edinburgh University, Tsinghua University, HKUST, and Technion. Seanie Lee is the first Korean student to be selected for the program.

Lee’s research focuses on transfer learning, a subfield of AI that reuses AI models pre-trained on large datasets, such as images or text corpora, and trains them for new purposes.

(*text corpus: a collection of text resources in computer-readable forms)

His work aims to improve the performance of transfer learning by developing new data augmentation methods that allow for more effective training using few training data samples and new regularization techniques that prevent the overfitting of large AI models to training data. He has published 11 papers, all of which were accepted to top-tier conferences such as the Annual Meeting of the Association for Computational Linguistics (ACL), International Conference on Learning Representations (ICLR), and Annual Conference on Neural Information Processing Systems (NeurIPS).

“Being selected as one of the Apple Scholars in AI/ML PhD fellowship program is a great motivation for me,” said Lee. “So far, AI research has been largely focused on computer vision and natural language processing, but I want to push the boundaries now and use modern tools of AI to solve problems in natural science, like physics.”

2023.04.20 View 8050

KAIST team develops smart immune system that can pin down malignant tumors

A joint research team led by Professor Jung Kyoon Choi of the KAIST Department of Bio and Brain Engineering and Professor Jong-Eun Park of the KAIST Graduate School of Medical Science and Engineering (GSMSE) announced the development of key technologies for treating cancers using smart immune cells designed based on AI and big data analysis. This technology is expected to be a next-generation immunotherapy that allows precision targeting of tumor cells by having the chimeric antigen receptors (CARs) operate through a logic circuit. Professor Hee Jung An of CHA Bundang Medical Center and Professor Hae-Ock Lee of the Catholic University of Korea also participated in this research.

Professor Jung Kyoon Choi’s team built a gene expression database from millions of cells, and used this to successfully develop and verify a deep-learning algorithm that could detect the differences in gene expression patterns between tumor cells and normal cells through a logic circuit. CAR immune cells fitted with the logic circuits discovered through this methodology could distinguish between tumor cells and normal cells as a computer would, and therefore showed the potential to strike only tumor cells accurately without causing unwanted side effects.

This research, conducted by co-first authors Dr. Joonha Kwon of the KAIST Department of Bio and Brain Engineering and Ph.D. candidate Junho Kang of KAIST GSMSE, was published by Nature Biotechnology on February 16, under the title “Single-cell mapping of combinatorial target antigens for CAR switches using logic gates.”

Immunotherapy is the area of cancer research that has seen the most attempts and advances in recent years. This field of treatment, which utilizes the patient’s own immune system to overcome cancer, includes several methods such as immune checkpoint inhibitors, cancer vaccines, and cellular treatments. Immune cells like CAR-T or CAR-NK cells equipped with chimeric antigen receptors, in particular, can recognize cancer antigens and directly destroy cancer cells.

Building on the success of CAR cell therapy in blood cancer treatment, scientists have been trying to expand its application to solid cancers. However, it has been difficult to develop CAR cells that kill solid cancer cells effectively with minimal side effects. Accordingly, in recent years, smarter CAR engineering technologies, i.e., computational logic gates such as AND, OR, and NOT, have been under development to target cancer cells effectively.

Against this backdrop, the research team built a large-scale database of cancer and normal cells to discover, at the single-cell level, the exact genes that are expressed only in cancer cells. The team followed this up by developing an AI algorithm that could search for the combination of genes that best distinguishes cancer cells from normal cells. This algorithm, in particular, has been used to find a logic circuit that can specifically target cancer cells through cell-level simulations of all gene combinations. CAR-T cells equipped with logic circuits discovered through this methodology are expected to distinguish cancerous cells from normal cells as computers would, thereby minimizing side effects and maximizing the anticancer effect.
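The idea of scoring gene combinations at the cell level can be sketched in Python. The sketch below is a simplified brute-force illustration, not the team's deep-learning algorithm. It assumes each cell is represented as the set of genes it expresses, and it scores an AND gate by the fraction of tumor cells it switches on minus the fraction of normal cells it switches on. The gene names, the scoring rule, and the function names are hypothetical.

```python
from itertools import combinations
from typing import Callable, List, Sequence, Set, Tuple

Cell = Set[str]  # assumed representation: the genes expressed by one cell


def fraction_on(cells: Sequence[Cell], gate: Callable[[Cell], bool]) -> float:
    """Fraction of cells for which the logic gate evaluates to True."""
    if not cells:
        return 0.0
    return sum(1 for cell in cells if gate(cell)) / len(cells)


def best_and_pair(
    tumor_cells: Sequence[Cell],
    normal_cells: Sequence[Cell],
    genes: List[str],
) -> Tuple[float, str, str]:
    """Try every gene pair as an AND gate and return the best-scoring one."""
    best = None
    for gene_a, gene_b in combinations(genes, 2):
        def gate(cell: Cell, a: str = gene_a, b: str = gene_b) -> bool:
            return a in cell and b in cell  # AND: both antigens present

        score = fraction_on(tumor_cells, gate) - fraction_on(normal_cells, gate)
        if best is None or score > best[0]:
            best = (score, gene_a, gene_b)
    if best is None:
        raise ValueError("need at least two genes")
    return best


if __name__ == "__main__":
    tumor = [{"G1", "G2"}, {"G1", "G2", "G3"}, {"G1", "G2"}, {"G2", "G3"}]
    normal = [{"G1"}, {"G2"}, {"G1", "G3"}, {"G3"}]
    print(best_and_pair(tumor, normal, ["G1", "G2", "G3"]))
```

An OR or NOT gate could be scored the same way by swapping the body of `gate`, for example `a in cell and b not in cell` for an "A AND NOT B" circuit.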

Dr. Joonha Kwon, a co-first author of this paper, said, “This research suggests a new method that hasn’t been tried before. What’s particularly noteworthy is the process in which we found the optimal CAR cell circuit through simulations of millions of individual tumor and normal cells.” He added, “This is an innovative technology that can apply AI and computer logic circuits to immune cell engineering. It would contribute greatly to expanding CAR therapy, which is being successfully used for blood cancer, to solid cancers as well.”

This research was funded by the Original Technology Development Project and Research Program for Next Generation Applied Omic of the Korea Research Foundation.

Figure 1. A schematic diagram of manufacturing and administration process of CAR therapy and of cancer cell-specific dual targeting using CAR.

Figure 2. Deep learning (convolutional neural networks, CNNs) algorithm for selection of dual targets based on gene combination (left) and algorithm for calculating expressing cell fractions by gene combination according to logical circuit (right).

2023.03.09 View 11374 -

Yuji Roh Awarded 2022 Microsoft Research PhD Fellowship

KAIST PhD candidate Yuji Roh of the School of Electrical Engineering (advisor: Prof. Steven Euijong Whang) was selected as a recipient of the 2022 Microsoft Research PhD Fellowship.

< KAIST PhD candidate Yuji Roh (advisor: Prof. Steven Euijong Whang) >

The Microsoft Research PhD Fellowship is a scholarship program that recognizes outstanding graduate students for their exceptional and innovative research in computer science and related fields. This year, 36 people from around the world received the fellowship, and Yuji Roh of KAIST EE is the only recipient from a university in Korea. Each fellow will receive a $10,000 scholarship and an opportunity to intern at Microsoft under the guidance of an experienced researcher.

Yuji Roh was named a fellow in the field of “Machine Learning” for her outstanding achievements in Trustworthy AI. Her research highlights include designing a state-of-the-art fair training framework using batch selection and developing novel algorithms for training that is both fair and robust. Her work has been presented at top machine learning conferences, including ICML, ICLR, and NeurIPS. She also co-presented a tutorial on Trustworthy AI at ACM SIGKDD, the top data mining conference. She is currently interning at the NVIDIA Research AI Algorithms Group, developing large-scale, real-world fair AI frameworks.

The list of fellowship recipients and the interview videos are available on the Microsoft website and YouTube.

The list of recipients: https://www.microsoft.com/en-us/research/academic-program/phd-fellowship/2022-recipients/

Interview (Global): https://www.youtube.com/watch?v=T4Q-XwOOoJc

Interview (Asia): https://www.youtube.com/watch?v=qwq3R1XU8UE

[Highlighted research achievements by Yuji Roh: Fair batch selection framework]

[Highlighted research achievements by Yuji Roh: Fair and robust training framework]

2022.10.28 View 14931 -

Phage-resistant Escherichia coli strains developed to reduce fermentation failure

A systematic, genome engineering-based strategy for developing phage-resistant Escherichia coli strains has been developed through the collaborative efforts of a team led by Professor Sang Yup Lee, Professor Shi Chen, and Professor Lianrong Wang. The study, by Xuan Zou et al., was published in Nature Communications in August 2022 and featured in the Nature Communications Editors’ Highlights. The collaboration among the School of Pharmaceutical Sciences at Wuhan University, the First Affiliated Hospital of Shenzhen University, and the KAIST Department of Chemical and Biomolecular Engineering marks an important advance for metabolic engineering and the fermentation industry, as it addresses the major problem of phage infection causing fermentation failure.

Systems metabolic engineering is a highly interdisciplinary field that has made it possible to develop microbial cell factories that produce various bioproducts, including chemicals, fuels, and materials, in a sustainable and environmentally friendly way, mitigating the impact of worldwide resource depletion and climate change. Escherichia coli is one of the most important microbial chassis strains, given its wide application in the bio-based production of a diverse range of chemicals and materials. As tools and strategies for systems metabolic engineering in E. coli continue to develop, highly optimized and well-characterized cell factories will play a crucial role in converting cheap and readily available raw materials into products of great economic and industrial value.

However, the persistent problem of phage contamination in fermentation has a devastating impact on host cells and threatens the productivity of bacterial bioprocesses in biotechnology facilities, which can lead to widespread fermentation failure and immeasurable economic loss. Host-controlled defense systems can be developed into effective genetic engineering solutions to bacteriophage contamination in industrial-scale fermentation; however, most resistance mechanisms restrict only a narrow range of phages, so their effect on phage contamination is limited.

There have been attempts to develop diverse systems for environmental adaptation or antiviral defense. The team developed a new type II single-stranded DNA phosphorothioation (Ssp) defense system derived from E. coli 3234/A, which can be used in multiple industrial E. coli strains (e.g., E. coli K-12, B, and W) to provide broad protection against various types of dsDNA coliphages. Furthermore, they developed a systematic genome engineering strategy involving the simultaneous genomic integration of the Ssp defense module and mutations in components that are essential to the phage life cycle. This strategy can be used to transform E. coli hosts that are highly susceptible to phage attack into strains with powerful restriction effects on the tested bacteriophages. It endows hosts with strong resistance against a wide spectrum of phage infections without affecting bacterial growth or normal physiological function. More importantly, the resulting engineered phage-resistant strains retained the ability to produce the desired chemicals and recombinant proteins even under high levels of phage cocktail challenge, which provides crucial protection against phage attacks.

This is a major step forward, as it provides a systematic solution for engineering phage-resistant bacterial strains, especially industrial bioproduction strains, to protect cells from a wide range of bacteriophages. Considering the functionality of this engineering strategy with diverse E. coli strains, the strategy reported in this study can be widely extended to other bacterial species and industrial applications, which will be of great interest to researchers in academia and industry alike.

Fig. A schematic model of the systematic strategy for engineering phage-sensitive industrial E. coli strains into strains with broad antiphage activities. Through the simultaneous genomic integration of a DNA phosphorothioation-based Ssp defense module and mutations of components essential for the phage life cycle, the engineered E. coli strains show strong resistance against diverse phages tested and maintain the capabilities of producing example recombinant proteins, even under high levels of phage cocktail challenge.

2022.08.23 View 15114
