Repurposed Drugs Present New Strategy for Treating COVID-19

Virtual screening of 6,218 drugs and cell-based assays identifies best therapeutic medication candidates

A joint research group from KAIST and Institut Pasteur Korea has identified repurposed drugs for COVID-19 treatment through virtual screening and cell-based assays. The research team proposed a virtual screening strategy that greatly reduces false positives by incorporating pre-docking filtering based on shape similarity and post-docking filtering based on interaction similarity. This strategy will help develop therapeutic medications for COVID-19 and other viral diseases more rapidly. The study was reported in the Proceedings of the National Academy of Sciences of the United States of America (PNAS).

Researchers screened 6,218 drugs from a collection of FDA-approved drugs or those under clinical trial and identified 38 potential repurposed drugs for COVID-19 with this strategy. Among them, seven compounds inhibited SARS-CoV-2 replication in Vero cells. Three of these drugs, emodin, omipalisib, and tipifarnib, showed anti-SARS-CoV-2 activity in human lung cells, Calu-3.

Drug repurposing is a practical strategy for developing antiviral drugs in a short period of time, especially during a global pandemic. In many instances, drug repurposing starts with the virtual screening of approved drugs. However, the actual hit rate of virtual screening is low and most of the predicted drug candidates are false positives.

The research group developed effective filtering algorithms before and after the docking simulations to improve the hit rates. In the pre-docking filtering process, compounds with similar shapes to the known active compounds for each target protein were selected and used for docking simulations. In the post-docking filtering process, the chemicals identified through their docking simulations were evaluated considering the docking energy and the similarity of the protein-ligand interactions with the known active compounds.
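The two filtering stages can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the research group's implementation: the `Compound` fields, the Tanimoto measure used for interaction similarity, the residue labels, all scores, and every threshold are hypothetical placeholders, and the docking step itself is represented by a pre-filled energy value.

```python
from dataclasses import dataclass


@dataclass
class Compound:
    name: str
    shape_similarity: float   # hypothetical score vs. a known active compound, 0..1
    docking_energy: float     # hypothetical docking result (more negative = better)
    interactions: frozenset   # hypothetical labels of contacted residues


def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two sets of interaction labels."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def pre_docking_filter(compounds, min_shape=0.7):
    """Keep only compounds whose shape resembles a known active compound."""
    return [c for c in compounds if c.shape_similarity >= min_shape]


def post_docking_filter(docked, reference, max_energy=-7.0, min_interaction=0.5):
    """Keep docked compounds with a good energy AND a binding pattern
    similar to that of the known active compound."""
    return [
        c for c in docked
        if c.docking_energy <= max_energy
        and tanimoto(c.interactions, reference) >= min_interaction
    ]


# Made-up interaction pattern of a known active compound.
reference = frozenset({"R1", "R2", "R3", "R4"})

# Made-up library; none of these values come from the study.
library = [
    Compound("cmpd_A", 0.85, -8.2, frozenset({"R1", "R2", "R3"})),
    Compound("cmpd_B", 0.40, -9.0, frozenset({"R1", "R2", "R3", "R4"})),
    Compound("cmpd_C", 0.90, -5.1, frozenset({"R1", "R2", "R3", "R4"})),
    Compound("cmpd_D", 0.75, -8.0, frozenset({"R1", "R7", "R8"})),
]

shape_matched = pre_docking_filter(library)           # drops cmpd_B
hits = post_docking_filter(shape_matched, reference)  # drops cmpd_C and cmpd_D
print([c.name for c in hits])
```

In this toy library only `cmpd_A` survives: one compound fails the shape check, one has a weak docking energy, and one docks well but does not reproduce the reference interaction pattern.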

The experimental results showed that the virtual screening strategy reached a high hit rate of 18.4%, leading to the identification of seven potential drugs out of the 38 drugs initially selected.
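The reported hit rate follows directly from the two counts given above. A minimal check, where the function name `hit_rate` is just an illustrative helper:

```python
def hit_rate(confirmed_hits, candidates_tested):
    """Share of tested candidates confirmed active, as a percentage."""
    return 100.0 * confirmed_hits / candidates_tested


# 7 compounds active in Vero cells out of 38 candidates selected by screening.
vero_hit_rate = hit_rate(7, 38)
print(f"{vero_hit_rate:.1f}%")  # prints 18.4%
```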

“We plan to conduct further preclinical trials for optimizing drug concentrations as one of the three candidates didn’t resolve the toxicity issues in preclinical trials,” said Woo Dae Jang, one of the researchers from KAIST.

“The most important part of this research is that we developed a platform technology that can rapidly identify novel compounds for COVID-19 treatment. If we use this technology, we will be able to quickly respond to new infectious diseases as well as variants of the coronavirus,” said Distinguished Professor Sang Yup Lee.

This work was supported by the KAIST Mobile Clinic Module Project funded by the Ministry of Science and ICT (MSIT) and the National Research Foundation of Korea (NRF). The National Culture Collection for Pathogens in Korea provided the SARS-CoV-2 (NCCP43326).

-Publication:

Woo Dae Jang, Sangeun Jeon, Seungtaek Kim, and Sang Yup Lee. Drugs repurposed for COVID-19 by virtual screening of 6,218 drugs and cell-based assay. Proc. Natl. Acad. Sci. U.S.A. (https://doi.org/10.1073/pnas.2024302118)

-Profile:

Distinguished Professor Sang Yup Lee

Metabolic & Biomolecular Engineering National Research Laboratory (http://mbel.kaist.ac.kr)

Department of Chemical and Biomolecular Engineering

KAIST

2021.07.08 View 14010


Quantum Laser Turns Energy Loss into Gain

A new laser that generates quantum particles can recycle lost energy for highly efficient, low-threshold laser applications

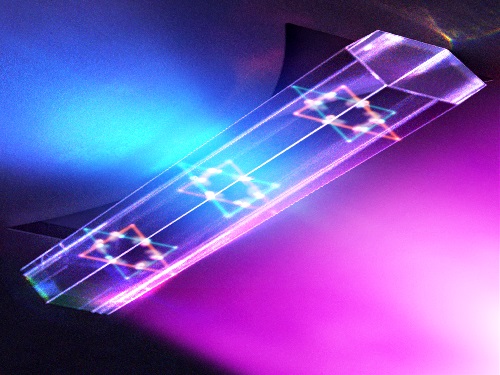

Scientists at KAIST have fabricated a laser system that generates highly interactive quantum particles at room temperature. Their findings, published in the journal Nature Photonics, could lead to a single microcavity laser system that requires lower threshold energy as its energy loss increases.

The system, developed by KAIST physicist Yong-Hoon Cho and colleagues, involves shining light through a single hexagonal-shaped microcavity treated with a loss-modulated silicon nitride substrate. The system design leads to the generation of a polariton laser at room temperature, which is exciting because this usually requires cryogenic temperatures.

The researchers found another unique and counter-intuitive feature of this design. Normally, energy is lost during laser operation. In this system, however, the amount of energy needed to induce lasing decreased as energy loss increased. Exploiting this phenomenon could lead to the development of high-efficiency, low-threshold lasers for future quantum optical devices.

“This system applies a concept of quantum physics known as parity-time reversal symmetry,” explains Professor Cho. “This is an important platform that allows energy loss to be used as gain. It can be used to reduce laser threshold energy for classical optical devices and sensors, as well as quantum devices and controlling the direction of light.”

The key is the design and materials. The hexagonal microcavity divides light particles into two different modes: one that passes through the upward-facing triangle of the hexagon and another that passes through its downward-facing triangle. Both modes of light particles have the same energy and path but don’t interact with each other.

However, the light particles do interact with other particles called excitons, provided by the hexagonal microcavity, which is made of semiconductors. This interaction leads to the generation of new quantum particles called polaritons that then interact with each other to generate the polariton laser. When the degree of loss between the microcavity and the semiconductor substrate is controlled, an intriguing phenomenon arises: the threshold energy becomes smaller as energy loss increases. This research was supported by the Samsung Science and Technology Foundation and Korea’s National Research Foundation.
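The counter-intuitive trend can be illustrated with a textbook toy model of two coupled modes with unequal loss. This is a sketch under stated assumptions, not the model used by the KAIST team. The Hamiltonian, the dimensionless parameter values, and the function name `mode_decay_rates` are all illustrative, and the lasing threshold is assumed to scale with the decay rate of the least lossy mode.

```python
import cmath


def mode_decay_rates(coupling, loss_a, loss_b):
    """Decay rates of the eigenmodes of the 2x2 non-Hermitian matrix
    H = [[-1j*loss_a, coupling], [coupling, -1j*loss_b]].

    Eigenvalues: -1j*mean_loss +/- sqrt(coupling**2 - half_diff**2).
    A mode's decay rate is minus the imaginary part of its eigenvalue.
    """
    mean_loss = (loss_a + loss_b) / 2.0
    half_diff = (loss_a - loss_b) / 2.0
    root = cmath.sqrt(coupling**2 - half_diff**2)
    eigenvalues = (-1j * mean_loss + root, -1j * mean_loss - root)
    return sorted(-ev.imag for ev in eigenvalues)


# Past the exceptional point (|half_diff| > coupling), adding loss to mode B
# lowers the decay rate of the least lossy eigenmode.
for loss_b in (4.0, 6.0, 10.0):
    slow, fast = mode_decay_rates(coupling=1.0, loss_a=0.0, loss_b=loss_b)
    print(f"loss_b={loss_b:4.1f}  least lossy mode decays at {slow:.3f}")
```

In this toy picture, once the loss imbalance exceeds the coupling strength, one eigenmode localizes away from the lossy channel, so adding more loss makes that mode easier to sustain.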

-Publication:

Song, H.G., Choi, M., Woo, K.Y., Yong-Hoon Cho. Room-temperature polaritonic non-Hermitian system with single microcavity. Nature Photonics (https://doi.org/10.1038/s41566-021-00820-z)

-Profile:

Professor Yong-Hoon Cho

Quantum & Nanobio Photonics Laboratory (http://qnp.kaist.ac.kr/)

Department of Physics

KAIST

2021.07.07 View 10436


Study of T Cells from COVID-19 Convalescents Guides Vaccine Strategies

Researchers confirm that most COVID-19 patients in their convalescent stage carry stem cell-like memory T cells for months

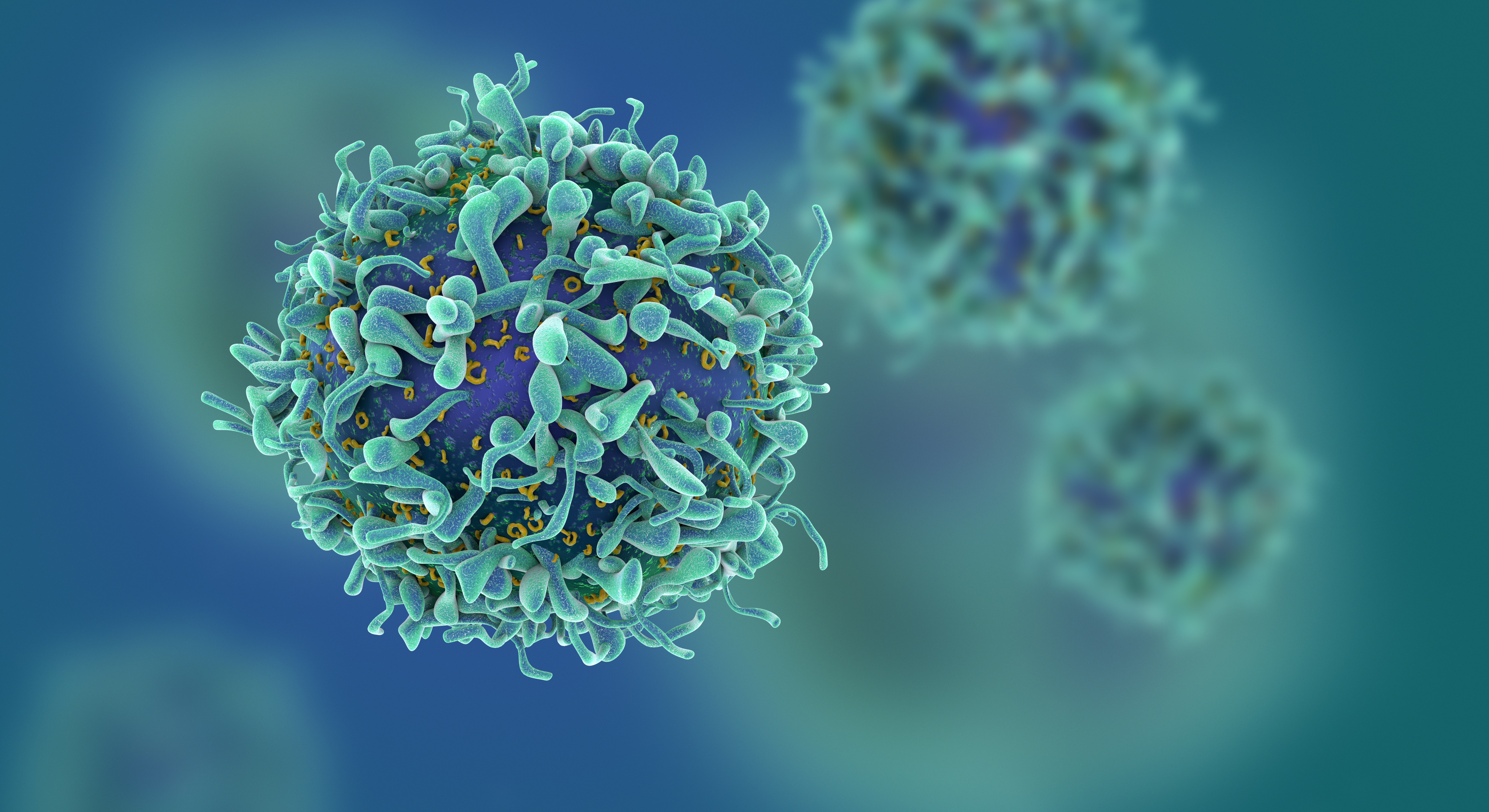

A KAIST immunology research team found that most convalescent patients of COVID-19 develop and maintain T cell memory for over 10 months regardless of the severity of their symptoms. In addition, memory T cells proliferate rapidly after encountering their cognate antigen and accomplish their multifunctional roles. This study provides new insights for effective vaccine strategies against COVID-19, considering the self-renewal capacity and multipotency of memory T cells.

COVID-19 is a disease caused by severe acute respiratory syndrome coronavirus-2 (SARS-CoV-2) infection. When patients recover from COVID-19, they develop SARS-CoV-2-specific adaptive immune memory. The adaptive immune system consists of two principal components: B cells that produce antibodies and T cells that eliminate infected cells. The current results suggest that the protective immune function of memory T cells will be activated upon re-exposure to SARS-CoV-2.

Recently, the role of memory T cells against SARS-CoV-2 has been gaining attention as neutralizing antibodies wane after recovery. Although memory T cells cannot prevent the infection itself, they play a central role in preventing the severe progression of COVID-19. Until now, however, the longevity and functional maintenance of SARS-CoV-2-specific memory T cells had remained unknown.

Professor Eui-Cheol Shin and his collaborators investigated the characteristics and functions of stem cell-like memory T cells, which are expected to play a crucial role in long-term immunity. Researchers analyzed the generation of stem cell-like memory T cells and multi-cytokine producing polyfunctional memory T cells, using cutting-edge immunological techniques.

This research is significant because characterizing the long-term immunity of COVID-19 convalescent patients provides an indicator of how long T cell immunity persists, which is one of the main goals of future vaccine development, as well as a basis for evaluating the long-term efficacy of currently available COVID-19 vaccines.

The research team is presently conducting a follow-up study to identify the memory T cell formation and functional characteristics of those who received COVID-19 vaccines, and to understand the immunological effect of COVID-19 vaccines by comparing the characteristics of memory T cells from vaccinated individuals with those of COVID-19 convalescent patients.

PhD candidate Jae Hyung Jung and Dr. Min-Seok Rha, a clinical fellow at Yonsei Severance Hospital, who led the study together, explained, “Our analysis will enhance the understanding of COVID-19 immunity and establish an index for COVID-19 vaccine-induced memory T cells.”

“This study is the world’s longest longitudinal study on differentiation and functions of memory T cells among COVID-19 convalescent patients. The research on the temporal dynamics of immune responses has laid the groundwork for building a strategy for next-generation vaccine development,” Professor Shin added. This work was supported by the Samsung Science and Technology Foundation and KAIST, and was published in Nature Communications on June 30.

-Publication:

Jung, J.H., Rha, M.S., Sa, M. et al. SARS-CoV-2-specific T cell memory is sustained in COVID-19 convalescent patients for 10 months with successful development of stem cell-like memory T cells. Nature Communications 12, 4043 (2021). https://doi.org/10.1038/s41467-021-24377-1

-Profile:

Professor Eui-Cheol Shin

Laboratory of Immunology & Infectious Diseases (http://liid.kaist.ac.kr/)

Graduate School of Medical Science and Engineering

KAIST

2021.07.05 View 13314


Wearable Device to Monitor Sweat in Real Time

An on-skin platform for the wireless monitoring of flow rate, cumulative loss, and temperature of sweat in real time

An electronic patch can monitor your sweating and check your health status. What is more, the soft microfluidic device adheres to the surface of the skin and captures, stores, and analyzes biomarkers in sweat as it is released through the eccrine glands.

This wearable electronic device, developed by Professor Kyeongha Kwon and her collaborators, is a digital, wireless platform that can track the so-called ‘filling process’ of sweat without the need to visually examine the device. The platform was integrated with microfluidic systems to analyze the sweat’s components.

To monitor the sweat release rate in real time, the researchers created a ‘thermal flow sensing module.’ They designed a microfluidic channel that lets the collected sweat flow through a narrow passage, and placed a heat source on the outer surface of the channel to induce heat exchange between the sweat and the heated channel.

As a result, the researchers developed a wireless electronic patch that uses an electronic circuit to measure the temperature difference between specific locations upstream and downstream of the heat source and converts it into a digital signal to measure the sweat release rate in real time. The patch accurately measured the perspiration rate in the range of 0-5 microliters/minute (μl/min), which was considered physiologically significant. The sensor measures the flow of sweat directly and then uses the information it collects to quantify total sweat loss. Moreover, the device features advanced microfluidic systems and colorimetric chemical reagents to gather pH measurements and determine the concentration of chloride, creatinine, and glucose in a user’s sweat.
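Turning flow-rate readings into total sweat loss amounts to integrating the rate over time. The sketch below shows one simple way to do that with the trapezoidal rule. It is an assumption for illustration, not the device's firmware: the function name, the sampling times, and the flow-rate values (chosen inside the reported 0-5 μl/min range) are all made up.

```python
def cumulative_loss(times_min, flow_rates_ul_per_min):
    """Integrate flow-rate samples with the trapezoidal rule.

    times_min: sample times in minutes (ascending).
    flow_rates_ul_per_min: flow rate at each sample, in microliters/minute.
    Returns the total volume in microliters.
    """
    if len(times_min) != len(flow_rates_ul_per_min):
        raise ValueError("times and flow rates must have the same length")
    total = 0.0
    for i in range(1, len(times_min)):
        dt = times_min[i] - times_min[i - 1]
        mean_rate = 0.5 * (flow_rates_ul_per_min[i] + flow_rates_ul_per_min[i - 1])
        total += mean_rate * dt
    return total


# Hypothetical readings taken once per minute.
times = [0.0, 1.0, 2.0, 3.0]
rates = [0.0, 2.0, 4.0, 2.0]
total_ul = cumulative_loss(times, rates)
print(total_ul)  # prints 7.0
```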

Professor Kwon said that these indicators could be used to diagnose various diseases related to sweating, such as cystic fibrosis, diabetes, kidney dysfunction, and metabolic alkalosis. “As the sweat flowing in the microfluidic channel is completely separated from the electronic circuit, the new patch overcame the shortcomings of existing flow rate measuring devices, which were vulnerable to corrosion and aging,” she explained.

The patch can be easily attached to the skin with flexible circuit board printing technology and silicone sealing technology. It has an additional sensor that detects changes in skin temperature. Using a smartphone app, a user can check the data measured by the wearable patch in real time.

Professor Kwon added, “This patch can be widely used for personal hydration strategies, the detection of dehydration symptoms, and other health management purposes. It can also be used in a systematic drug delivery system, such as for measuring the blood flow rate in blood vessels near the skin’s surface or measuring a drug’s release rate in real time to calculate the exact dosage.”

-Publication:

Kyeongha Kwon, Jong Uk Kim, John A. Rogers, et al. “An on-skin platform for wireless monitoring of flow rate, cumulative loss and temperature of sweat in real time.” Nature Electronics (doi.org/10.1038/s41928-021-00556-2)

-Profile:

Professor Kyeongha Kwon

School of Electrical Engineering

KAIST

2021.06.25 View 10676


‘Urban Green Space Affects Citizens’ Happiness’

Study finds the relationship between green space, the economy, and happiness

A recent study revealed that as a city becomes more economically developed, its citizens’ happiness becomes more directly related to the area of urban green space.

A joint research project by Professor Meeyoung Cha of the School of Computing and her collaborators studied the relationship between green space and citizen happiness by analyzing big data from satellite images of 60 different countries.

Urban green space, including parks, gardens, and riversides, not only provides aesthetic pleasure, but also positively affects our health by promoting physical activity and social interactions. Most of the previous research attempting to verify the correlation between urban green space and citizen happiness was based on a few developed countries. Therefore, it was difficult to identify whether the positive effects of green space are global, or merely a phenomenon that depends on the economic state of the country. There have also been limitations in data collection, as it is difficult to visit each location or carry out investigations on a large scale based on aerial photographs.

The research team used data collected by Sentinel-2, a high-resolution satellite operated by the European Space Agency (ESA), to investigate 90 green spaces from 60 different countries around the world. The subjects of analysis were cities with the highest population densities (cities that contain at least 10% of the national population), and the images were obtained during the summer of each region for clarity. Images from the northern hemisphere were obtained between June and September of 2018, and those from the southern hemisphere were obtained between December of 2017 and February of 2018.

The areas of urban green space were then quantified and cross-referenced with data from the World Happiness Report and GDP by country reported by the United Nations in 2018. Using these data, the relationships between green space, the economy, and citizen happiness were analyzed.

The results showed that in all cities, citizen happiness was positively correlated with the area of urban green space regardless of the country’s economic state. However, out of the 60 countries studied, the happiness index of the bottom 30 by GDP showed a stronger correlation with economic growth. In countries whose GDP per capita was higher than 38,000 USD, the area of green space acted as a more important factor affecting happiness than economic growth. Data from Seoul was analyzed to represent South Korea, and showed an increased happiness index with increased green areas compared to the past.
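A comparison of this kind can be sketched by splitting countries at the 38,000 USD mark and computing a correlation coefficient within each group. The article does not state which statistical method the authors used, so the Pearson coefficient here is an assumption, and every number in `rows` is an invented placeholder rather than data from the study.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Invented rows: (GDP per capita in USD, green space score, happiness score).
rows = [
    (5_000, 0.20, 4.5),
    (12_000, 0.35, 5.2),
    (25_000, 0.30, 5.9),
    (42_000, 0.25, 6.6),
    (55_000, 0.40, 7.0),
    (70_000, 0.50, 7.4),
]

GDP_THRESHOLD_USD = 38_000  # threshold quoted in the article

groups = {
    "above threshold": [r for r in rows if r[0] > GDP_THRESHOLD_USD],
    "at or below threshold": [r for r in rows if r[0] <= GDP_THRESHOLD_USD],
}

results = {}
for label, group in groups.items():
    gdp, green, happiness = zip(*group)
    results[label] = {
        "green_vs_happiness": pearson(green, happiness),
        "gdp_vs_happiness": pearson(gdp, happiness),
    }
    print(label, results[label])
```

With real data, comparing the two coefficients inside each group would indicate whether green space or the economy tracks happiness more closely at that income level.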

The authors point out their work has several policy-level implications. First, public green space should be made accessible to urban dwellers to enhance social support. If public safety in urban parks is not guaranteed, its positive role in social support and happiness may diminish. Also, the meaning of public safety may change; for example, ensuring biological safety will be a priority in keeping urban parks accessible during the COVID-19 pandemic.

Second, urban planning for public green space is needed for both developed and developing countries. As it is challenging or nearly impossible to secure land for green space after the area is developed, urban planning for parks and green space should be considered in developing economies where new cities and suburban areas are rapidly expanding.

Third, recent climate changes can present substantial difficulty in sustaining urban green space. Extreme events such as wildfires, floods, droughts, and cold waves could endanger urban forests, while global warming could conversely accelerate tree growth in cities due to the urban heat island effect. Thus, more attention must be paid to predicting climate changes and discovering their impact on the maintenance of urban green space.

“There has recently been an increase in the number of studies using big data from satellite images to solve social conundrums,” said Professor Cha. “The tool developed for this investigation can also be used to quantify the area of aquatic environments like lakes and the seaside, and it will now be possible to analyze the relationship between citizen happiness and aquatic environments in future studies,” she added.

Professor Woo Sung Jung from POSTECH and Professor Donghee Wohn from the New Jersey Institute of Technology also joined this research. It was reported in the online issue of EPJ Data Science on May 30.

-Publication:

Oh-Hyun Kwon, Inho Hong, Jeasurk Yang, Donghee Y. Wohn, Woo-Sung Jung, and Meeyoung Cha, 2021. Urban green space and happiness in developed countries. EPJ Data Science. DOI: https://doi.org/10.1140/epjds/s13688-021-00278-7

-Profile:

Professor Meeyoung Cha

Data Science Lab (https://ds.ibs.re.kr/)

School of Computing

KAIST

2021.06.21 View 11876


Defining the Hund Physics Landscape of Two-Orbital Systems

Researchers identify exotic metals in unexpected quantum systems

Electrons are ubiquitous among atoms, subatomic tokens of energy that can independently change how a system behaves—but they also can change each other. An international research collaboration found that collectively measuring electrons revealed unique and unanticipated findings. The researchers published their results on May 17 in Physical Review Letters.

“It is not feasible to obtain the solution just by tracing the behavior of each individual electron,” said paper author Myung Joon Han, professor of physics at KAIST. “Instead, one should describe or track all the entangled electrons at once. This requires a clever way of treating this entanglement.”

Professor Han and the researchers used a recently developed “many-particle” theory to account for the entangled nature of electrons in solids, which approximates how electrons locally interact with one another to predict their global activity.

Through this approach, the researchers examined systems with two orbitals, the regions of space that electrons can occupy. They found that the electrons locked into parallel arrangements within atomic sites in solids. This phenomenon, known as Hund’s coupling, results in a Hund’s metal. This metallic phase, which can give rise to such properties as superconductivity, was thought to exist only in three-orbital systems.

“Our finding overturns a conventional viewpoint that at least three orbitals are needed for Hund’s metallicity to emerge,” Professor Han said, noting that two-orbital systems have not been a focus of attention for many physicists. “In addition to this finding of a Hund’s metal, we identified various metallic regimes that can naturally occur in generic, correlated electron materials.”

The researchers found four different correlated metals. One stems from the proximity to a Mott insulator, a state of a solid material that should be conductive but actually prevents conduction due to how the electrons interact. The other three metals form as electrons align their magnetic moments — or phases of producing a magnetic field — at various distances from the Mott insulator. Beyond identifying the metal phases, the researchers also suggested classification criteria to define each metal phase in other systems.

“This research will help scientists better characterize and understand the deeper nature of so-called ‘strongly correlated materials,’ in which the standard theory of solids breaks down due to the presence of strong Coulomb interactions between electrons,” Professor Han said, referring to the force with which the electrons attract or repel each other. These interactions are not typically present in solid materials but appear in materials with metallic phases.

The revelation of metals in two-orbital systems and the ability to determine whole system electron behavior could lead to even more discoveries, according to Professor Han.

“This will ultimately enable us to manipulate and control a variety of electron correlation phenomena,” Professor Han said.

Co-authors include Siheon Ryee from KAIST and Sangkook Choi from the Condensed Matter Physics and Materials Science Department, Brookhaven National Laboratory in the United States. Korea’s National Research Foundation and the U.S. Department of Energy’s (DOE) Office of Science, Basic Energy Sciences, supported this work.

-Publication

Siheon Ryee, Myung Joon Han, and SangKook Choi, 2021. Hund Physics Landscape of Two-Orbital Systems, Physical Review Letters, DOI: 10.1103/PhysRevLett.126.206401

-Profile

Professor Myung Joon Han

Department of Physics

College of Natural Science

KAIST

2021.06.17 View 9408


Biomimetic Resonant Acoustic Sensor Detecting Far-Distant Voices Accurately to Hit the Market

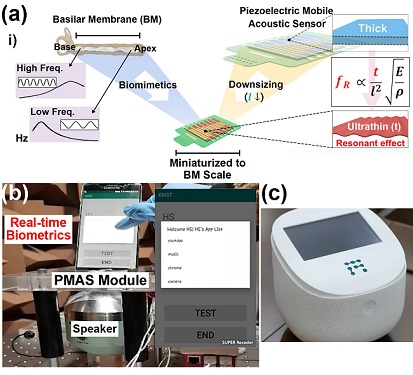

A KAIST research team led by Professor Keon Jae Lee from the Department of Materials Science and Engineering has developed a bioinspired flexible piezoelectric acoustic sensor with a multi-resonant ultrathin piezoelectric membrane that mimics the basilar membrane of the human cochlea. The flexible acoustic sensor has been miniaturized for embedding into smartphones, and the first commercial prototype is ready for accurate and far-distant voice detection.

In 2018, Professor Lee presented the first concept of a flexible piezoelectric acoustic sensor, inspired by the fact that humans can accurately detect far-distant voices using a multi-resonant trapezoidal membrane with 20,000 hair cells. However, previous acoustic sensors could not be integrated into commercial products like smartphones and AI speakers due to their large device size.

In this work, the research team fabricated a mobile-sized acoustic sensor by adopting ultrathin piezoelectric membranes with high sensitivity. Simulation studies showed that the ultrathin polymer underneath the inorganic piezoelectric thin film can broaden the resonant bandwidth to cover the entire voice frequency range using seven channels. Based on this theory, the research team successfully demonstrated the miniaturized acoustic sensor mounted in commercial smartphones and AI speakers for machine learning-based biometric authentication and voice processing. (Please refer to the explanatory movie “KAIST Flexible Piezoelectric Mobile Acoustic Sensor.”)

The resonant mobile acoustic sensor has superior sensitivity and multi-channel signals compared to conventional condenser microphones with a single channel, and it has shown highly accurate and far-distant speaker identification with a small amount of voice training data. The error rate of speaker identification was significantly reduced by 56% (with 150 training datasets) and 75% (with 2,800 training datasets) compared to that of a MEMS condenser device.

Professor Lee said, “Recently, Google has been targeting the ‘Wolverine Project’ on far-distant voice separation from multi-users for next-generation AI user interfaces. I expect that our multi-channel resonant acoustic sensor with abundant voice information is the best fit for this application. Currently, the mass production process is on the verge of completion, so we hope that this will be used in our daily lives very soon.”

Professor Lee also established a startup company called Fronics Inc., located in Korea with a branch office in the U.S., to commercialize this flexible acoustic sensor and is seeking collaborations with global AI companies.

These research results entitled “Biomimetic and Flexible Piezoelectric Mobile Acoustic Sensors with Multi-Resonant Ultrathin Structures for Machine Learning Biometrics” were published in Science Advances in 2021 (7, eabe5683).

-Publication

“Biomimetic and flexible piezoelectric mobile acoustic sensors with multiresonant ultrathin structures for machine learning biometrics,” Science Advances (DOI: 10.1126/sciadv.abe5683)

-Profile

Professor Keon Jae Lee

Department of Materials Science and Engineering

Flexible and Nanobio Device Lab

http://fand.kaist.ac.kr/

KAIST

2021.06.14 View 10227


Natural Rainbow Colorants Microbially Produced

Integrated strategies of systems metabolic engineering and membrane engineering led to the production of natural rainbow colorants comprising seven natural colorants from bacteria for the first time

A research group at KAIST has engineered bacterial strains capable of producing three carotenoids and four violacein derivatives, completing the seven colors in the rainbow spectrum. The research team integrated systems metabolic engineering and membrane engineering strategies for the production of seven natural rainbow colorants in engineered Escherichia coli strains. The strategies will also be useful for the efficient production of other industrially important natural products used in the food, pharmaceutical, and cosmetic industries.

Colorants are widely used in our lives and are directly related to human health when we eat food additives and wear cosmetics. However, most of these colorants are made from petroleum, causing unexpected side effects and health problems. Furthermore, they raise environmental concerns such as water pollution from dyeing fabric in the textiles industry. For these reasons, the demand for natural colorants produced using microorganisms has increased, but such production could not be readily realized due to the high cost and low yield of the bioprocesses.

These challenges inspired the metabolic engineers at KAIST including researchers Dr. Dongsoo Yang and Dr. Seon Young Park, and Distinguished Professor Sang Yup Lee from the Department of Chemical and Biomolecular Engineering. The team reported the study entitled “Production of rainbow colorants by metabolically engineered Escherichia coli” in Advanced Science online on May 5. It was selected as the journal cover of the July 7 issue.

This research reports for the first time the production of rainbow colorants comprising three carotenoids and four violacein derivatives from glucose or glycerol via systems metabolic engineering and membrane engineering. The research group focused on the production of hydrophobic natural colorants useful for lipophilic food and dyeing garments. First, using systems metabolic engineering, which is an integrated technology to engineer the metabolism of a microorganism, three carotenoids comprising astaxanthin (red), β-carotene (orange), and zeaxanthin (yellow), and four violacein derivatives comprising proviolacein (green), prodeoxyviolacein (blue), violacein (navy), and deoxyviolacein (purple) could be produced. Thus, the production of natural colorants covering the complete rainbow spectrum was achieved.

When hydrophobic colorants are produced by microorganisms, the colorants accumulate inside the cell. As the accumulation capacity is limited, the hydrophobic colorants could not be produced at concentrations higher than that limit. To address this, the researchers engineered the cell morphology and generated inner-membrane vesicles (spherical membranous structures) to increase the intracellular capacity for accumulating the natural colorants. To further promote production, the researchers generated outer-membrane vesicles to secrete the natural colorants, thus succeeding in efficiently producing all seven rainbow colorants. It was even more impressive that the production of natural green and navy colorants was achieved for the first time.

“The production of the seven natural rainbow colorants that can replace the current petroleum-based synthetic colorants was achieved for the first time,” said Dr. Dongsoo Yang. He explained that another important point of the research is that integrated metabolic engineering strategies developed from this study can be generally applicable for the efficient production of other natural products useful as pharmaceuticals or nutraceuticals. “As maintaining good health in an aging society is becoming increasingly important, we expect that the technology and strategies developed here will play pivotal roles in producing other valuable natural products of medical or nutritional importance,” explained Distinguished Professor Lee.

This work was supported by the "Cooperative Research Program for Agriculture Science & Technology Development (Project No. PJ01550602)" Rural Development Administration, Republic of Korea.

-Publication

Dongsoo Yang, Seon Young Park, and Sang Yup Lee. Production of rainbow colorants by metabolically engineered Escherichia coli. Advanced Science, 2100743.

-Profile

Distinguished Professor Sang Yup Lee

Metabolic & Biomolecular Engineering National Research Laboratory

http://mbel.kaist.ac.kr

Department of Chemical and Biomolecular Engineering

KAIST

2021.06.09 View 11178


Ultrafast, on-Chip PCR Could Speed Up Diagnoses during Pandemics

A rapid point-of-care diagnostic plasmofluidic chip can deliver results in only 8 minutes

Reverse transcription-polymerase chain reaction (RT-PCR) has been the gold standard for diagnosis during the COVID-19 pandemic. However, the PCR portion of the test requires bulky, expensive machines and takes about an hour to complete, making it difficult to quickly diagnose someone at a testing site. Now, researchers at KAIST have developed a plasmofluidic chip that can perform PCR in only about 8 minutes, which could speed up diagnoses during current and future pandemics.

The rapid diagnosis of COVID-19 and other highly contagious viral diseases is important for timely medical care, quarantining and contact tracing. Currently, RT-PCR uses enzymes to reverse transcribe tiny amounts of viral RNA to DNA, and then amplifies the DNA so that it can be detected by a fluorescent probe. It is the most sensitive and reliable diagnostic method.

But because the PCR portion of the test requires 30-40 cycles of heating and cooling in special machines, it takes about an hour to perform, and samples must typically be sent away to a lab, meaning that a patient usually has to wait a day or two to receive their diagnosis.

Professor Ki-Hun Jeong at the Department of Bio and Brain Engineering and his colleagues wanted to develop a plasmofluidic PCR chip that could quickly heat and cool minuscule volumes of liquids, allowing accurate point-of-care diagnoses in a fraction of the time. The research was reported in ACS Nano on May 19.

The researchers devised a postage stamp-sized polydimethylsiloxane chip with a microchamber array for the PCR reactions. When a drop of a sample is added to the chip, a vacuum pulls the liquid into the microchambers, which are positioned above glass nanopillars with gold nanoislands. Any microbubbles, which could interfere with the PCR reaction, diffuse out through an air-permeable wall. When a white LED is turned on beneath the chip, the gold nanoislands on the nanopillars quickly convert light to heat, and then rapidly cool when the light is switched off.

The researchers tested the device on a piece of DNA containing a SARS-CoV-2 gene, accomplishing 40 heating and cooling cycles and fluorescence detection in only 5 minutes, with an additional 3 minutes for sample loading. The amplification efficiency was 91%, whereas a comparable conventional PCR process has an efficiency of 98%. With the reverse transcriptase step added prior to sample loading, the entire testing time with the new method could take 10-13 minutes, as opposed to about an hour for typical RT-PCR testing. The new device could provide many opportunities for rapid point-of-care diagnostics during a pandemic, the researchers say.
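To put the two efficiency figures in perspective, the short Python sketch below applies the textbook exponential model of PCR, in which each cycle multiplies the copy number by (1 + E). The article does not say which model the authors used, so the model, the function names, and the derived values are illustrative assumptions rather than results from the study.

```python
import math


def amplification_factor(efficiency: float, cycles: int) -> float:
    """Fold increase in copy number under the assumed model N = N0 * (1 + E)**n."""
    return (1.0 + efficiency) ** cycles


def cycles_to_match(eff_low: float, eff_high: float, cycles: int) -> float:
    """Cycles needed at eff_low to reach the yield eff_high gives in `cycles` cycles."""
    return cycles * math.log(1.0 + eff_high) / math.log(1.0 + eff_low)


# Figures quoted in the article.
CYCLES = 40
ON_CHIP_EFFICIENCY = 0.91
CONVENTIONAL_EFFICIENCY = 0.98
CYCLING_MINUTES = 5   # 40 cycles plus fluorescence detection
LOADING_MINUTES = 3   # sample loading

on_chip = amplification_factor(ON_CHIP_EFFICIENCY, CYCLES)
conventional = amplification_factor(CONVENTIONAL_EFFICIENCY, CYCLES)
extra_cycles = (
    cycles_to_match(ON_CHIP_EFFICIENCY, CONVENTIONAL_EFFICIENCY, CYCLES) - CYCLES
)
total_minutes = CYCLING_MINUTES + LOADING_MINUTES

print(f"on-chip fold amplification:      {on_chip:.2e}")
print(f"conventional fold amplification: {conventional:.2e}")
print(f"extra on-chip cycles to match:   {extra_cycles:.1f}")
print(f"on-chip PCR time (minutes):      {total_minutes}")
```

Under this assumed model, the lower on-chip efficiency would cost on the order of two additional cycles to reach the same yield as the conventional process, which is small next to the time saved per cycle.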

-Publication

Ultrafast and Real-Time Nanoplasmonic On-Chip Polymerase Chain Reaction for Rapid and Quantitative Molecular Diagnostics

ACS Nano (https://doi.org/10.1021/acsnano.1c02154)

-Profile

Professor Ki-Hun Jeong

Biophotonics Laboratory

https://biophotonics.kaist.ac.kr/

Department of Bio and Brain Engineering

KAIST

2021.06.08 View 11216

What Guides Habitual Seeking Behavior Explained

A new role of the ventral striatum explains habitual seeking behavior

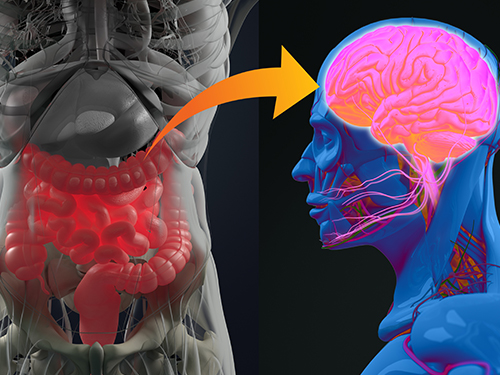

Researchers have been investigating how the brain controls habitual seeking behaviors such as addiction. A recent study by Professor Sue-Hyun Lee from the Department of Bio and Brain Engineering revealed that a long-term value memory maintained in the ventral striatum in the brain is a neural basis of our habitual seeking behavior. This research was conducted in collaboration with the research team led by Professor Hyoung F. Kim from Seoul National University. Given that addictive behavior is deemed a habitual one, this research provides new insights for developing therapeutic interventions for addiction.

Habitual seeking behavior involves strong stimulus responses, mostly rapid and automatic ones. The ventral striatum in the brain has been thought to be important for value learning and addictive behaviors. However, it was unclear if the ventral striatum processes and retains long-term memories that guide habitual seeking.

Professor Lee’s team reported a new role of the human ventral striatum, in which long-term memories of high-valued objects are retained as a single representation and may be used to evaluate visual stimuli automatically to guide habitual behavior.

“Our findings propose a role of the ventral striatum as a director that guides habitual behavior with the script of value information written in the past,” said Professor Lee.

The research team investigated whether learned values were retained in the ventral striatum while the subjects passively viewed previously learned objects in the absence of any immediate outcome. Neural responses in the ventral striatum during the incidental perception of learned objects were examined using fMRI and single-unit recording.

The study found significant value discrimination responses in the ventral striatum after learning and a retention period of several days. Moreover, the similarity of neural representations for good objects increased after learning, an outcome positively correlated with the habitual seeking response for good objects.

“These findings suggest that the ventral striatum plays a role in automatic evaluations of objects based on the neural representation of positive values retained since learning, to guide habitual seeking behaviors,” explained Professor Lee.

“We will fully investigate the function of different parts of the entire basal ganglia including the ventral striatum. We also expect that this understanding may lead to the development of better treatment for mental illnesses related to habitual behaviors or addiction problems.”

This study, supported by the National Research Foundation of Korea, was reported in Nature Communications (https://doi.org/10.1038/s41467-021-22335-5).

-Profile

Professor Sue-Hyun Lee

Department of Bio and Brain Engineering

Memory and Cognition Laboratory

http://memory.kaist.ac.kr/lecture

KAIST

2021.06.03 View 10262

Identification of How Chemotherapy Drug Works Could Deliver Personalized Cancer Treatment

The chemotherapy drug decitabine is commonly used to treat patients with blood cancers, but its response rate is somewhat low. Researchers have now identified why this is the case, opening the door to more personalized cancer therapies for those with these types of cancers, and perhaps further afield.

Researchers have identified the genetic and molecular mechanisms within cells that make the chemotherapy drug decitabine, used to treat patients with myelodysplastic syndrome (MDS) and acute myeloid leukemia (AML), work for some patients but not others. The findings should assist clinicians in developing more patient-specific treatment strategies.

The findings were published in the Proceedings of the National Academy of Sciences (PNAS) on March 30.

The chemotherapy drug decitabine, also known by its brand name Dacogen, works by modifying DNA, which in turn switches on genes that stop cancer cells from growing and replicating. However, decitabine’s response rate is somewhat low (it shows improvement in just 30-35% of patients), and it has been unclear why the drug works well for some patients but not for others. To find out why, researchers from KAIST investigated the molecular mediators involved in regulating the effects of the drug.

Decitabine activates the production of endogenous retroviruses (ERVs), which in turn induces an immune response. ERVs are viruses that long ago inserted dormant copies of themselves into the human genome. Decitabine, in essence, ‘reactivates’ these viral elements, producing double-stranded RNAs (dsRNAs) that the immune system views as foreign.

“However, the mechanisms involved in this process, in particular how production and transport of these ERV dsRNAs were regulated within the cell were understudied,” said corresponding author Yoosik Kim, professor in the Department of Chemical and Biomolecular Engineering at KAIST.

“So to explain why decitabine works in some patients but not others, we investigated what these molecular mechanisms were,” added Kim.

To do so, the researchers used image-based RNA interference (RNAi) screening. This is a relatively new technique in which specific sequences within a genome are knocked out of action or “downregulated.” Large-scale screening, which can be performed in cultured cells or within live organisms, works to investigate the function of different genes. The KAIST researchers collaborated with the Institut Pasteur Korea to analyze the effect of downregulating genes that recognize ERV dsRNAs and could be involved in the cellular response to decitabine.

From these initial screening results, they performed an even more detailed downregulation screening analysis. Through the screening, they identified two gene sequences that play a key regulatory role in the response to the drug: one involved in producing an RNA-binding protein called Staufen1, and one involved in producing TINCR, a strand of RNA that does not itself code for any protein. Staufen1 binds directly to dsRNAs and stabilizes them in concert with TINCR.

If a patient is not producing sufficient Staufen1 and TINCR, the dsRNA viral mimics degrade before the immune system can spot them. Crucially for cancer therapy, this means that patients with lower expression (activation) of these sequences will show an inferior response to decitabine. Indeed, the researchers confirmed that MDS/AML patients with low Staufen1 and TINCR expression did not benefit from decitabine therapy.

“We can now isolate patients who will not benefit from the therapy and direct them to a different type of therapy,” said first author Yongsuk Ku. “This serves as an important step toward developing a patient-specific cancer treatment strategy.”

As the researchers used patient samples taken from bone marrow, the next step will be to try to develop a testing method that can identify the problem from just blood samples, which are much easier to acquire from patients.

The team plans to investigate if the analysis can be extended to patients with solid tumors in addition to those with blood cancers.

-Profile

Professor Yoosik Kim

https://qcbio.kaist.ac.kr/

Department of Chemical and Biomolecular Engineering

KAIST

-Publication

Noncanonical immune response to the inhibition of DNA methylation by Staufen1 via stabilization of endogenous retrovirus RNAs, PNAS

2021.05.24 View 10496
