Urban Robotics

Professor Hyun Myung's Team Wins First Place in a Challenge at ICRA by IEEE

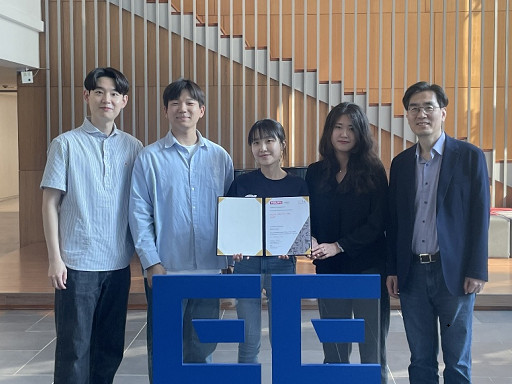

< Photo 1. (From left) Daebeom Kim (Team Leader, Ph.D. student), Seungjae Lee (Ph.D. student), Seoyeon Jang (Ph.D. student), Jei Kong (Master's student), Professor Hyun Myung >

A team of the Urban Robotics Lab, led by Professor Hyun Myung from the KAIST School of Electrical Engineering, achieved a remarkable first-place overall victory in the Nothing Stands Still Challenge (NSS Challenge) 2025, held at the 2025 IEEE International Conference on Robotics and Automation (ICRA), the world's most prestigious robotics conference, from May 19 to 23 in Atlanta, USA.

The NSS Challenge was co-hosted by HILTI, a global construction company based in Liechtenstein, and Stanford University's Gradient Spaces Group. It is an expanded version of the HILTI SLAM (Simultaneous Localization and Mapping)* Challenge, which has been held since 2021, and is considered one of the most prominent challenges at 2025 IEEE ICRA.

*SLAM: Simultaneous Localization and Mapping, a technology by which robots, drones, autonomous vehicles, and similar systems determine their own position while simultaneously creating a map of their surroundings.

< Photo 2. A scene from the oral presentation on the winning team's technology (Speakers: Seungjae Lee and Seoyeon Jang, Ph.D. candidates of KAIST School of Electrical Engineering) >

This challenge primarily evaluates how accurately and robustly LiDAR scan data, collected at various times, can be registered in situations with frequent structural changes, such as construction and industrial environments. In particular, it is regarded as a highly technical competition because it deals with multi-session localization and mapping (Multi-session SLAM) technology that responds to structural changes occurring over multiple timeframes, rather than just single-point registration accuracy.

The Urban Robotics Lab team secured first place overall, surpassing Northwestern Polytechnical University of China (2nd place) and National Taiwan University (3rd place) by a significant margin, with its own localization and mapping technology, which solves the problem of registering LiDAR data collected across multiple times and spaces. The winning team will be awarded a prize of $4,000.

< Figure 1. Example of Multiway-Registration for Registering Multiple Scans >

The Urban Robotics Lab team independently developed a multiway-registration framework that can robustly register multiple scans even without prior connection information. This framework consists of an algorithm for summarizing feature points within scans and finding correspondences (CubicFeat), an algorithm for performing global registration based on the found correspondences (Quatro), and an algorithm for refining results based on change detection (Chamelion). This combination of technologies ensures stable registration performance based on fixed structures, even in highly dynamic industrial environments.
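
To make the registration step concrete, here is a minimal sketch of estimating a rigid transform between two scans from point correspondences that are already known, followed by the RMSE used to score alignment. It is not CubicFeat, Quatro, or Chamelion: it is a plain 2D least-squares alignment with no outlier rejection, and the function names and toy points are assumptions made for illustration.

```python
import math

def estimate_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired 2D points src onto dst."""
    n = len(src)
    cx_s = sum(p[0] for p in src) / n
    cy_s = sum(p[1] for p in src) / n
    cx_d = sum(p[0] for p in dst) / n
    cy_d = sum(p[1] for p in dst) / n
    dot = 0.0
    cross = 0.0
    for (xs, ys), (xd, yd) in zip(src, dst):
        # Work with centered coordinates so rotation and translation decouple.
        xs, ys = xs - cx_s, ys - cy_s
        xd, yd = xd - cx_d, yd - cy_d
        dot += xs * xd + ys * yd
        cross += xs * yd - ys * xd
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated source centroid onto the target centroid.
    tx = cx_d - (c * cx_s - s * cy_s)
    ty = cy_d - (s * cx_s + c * cy_s)
    return theta, (tx, ty)

def apply_rigid_2d(points, theta, t):
    """Rotate points by theta (radians), then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in points]

def rmse(a, b):
    """Root mean squared distance between paired points."""
    total = sum((xa - xb) ** 2 + (ya - yb) ** 2 for (xa, ya), (xb, yb) in zip(a, b))
    return math.sqrt(total / len(a))

# Toy data: scan_b is scan_a rotated by 30 degrees and shifted by (2, -1).
scan_a = [(0.0, 0.0), (1.0, 0.0), (1.0, 2.0), (0.0, 2.0), (3.0, 1.0)]
scan_b = apply_rigid_2d(scan_a, math.pi / 6, (2.0, -1.0))

theta, t = estimate_rigid_2d(scan_a, scan_b)
error = rmse(apply_rigid_2d(scan_a, theta, t), scan_b)
print(math.degrees(theta), t, error)
```

In a real multi-session setting the correspondences are noisy and partly wrong because the scene has changed, which is why a robust global registration stage and a change-detection refinement stage are needed on top of a basic alignment like this one.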

< Figure 2. Example of Change Detection Using the Chamelion Algorithm>

LiDAR scan registration technology is a core component of SLAM (Simultaneous Localization And Mapping) in various autonomous systems such as autonomous vehicles, autonomous robots, autonomous walking systems, and autonomous flying vehicles.

Professor Hyun Myung of the School of Electrical Engineering stated, "This award-winning technology is evaluated as a case that simultaneously proves both academic value and industrial applicability by maximizing the performance of precisely estimating the relative positions between different scans even in complex environments. I am grateful to the students who challenged themselves and never gave up, even when many teams dropped out because of the high difficulty."

< Figure 3. Competition Result Board, Lower RMSE (Root Mean Squared Error) Indicates Higher Score (Unit: meters)>

The Urban Robotics Lab team first participated in the SLAM Challenge in 2022, winning second place among academic teams, and in 2023, they secured first place overall in the LiDAR category and first place among academic teams in the vision category.

2025.05.30 View 2333


KAIST debuts “DreamWaQer” - a quadrupedal robot that can walk in the dark

- The team led by Professor Hyun Myung of the School of Electrical Engineering developed “DreamWaQ”, a deep reinforcement learning-based control technology that enables walking robots to walk in atypical environments without visual and/or tactile information

- Utilization of “DreamWaQ” technology can enable mass production of various types of “DreamWaQers”

- Expected to be used for exploring atypical environments in unique circumstances such as fire disasters

A team of Korean engineering researchers has developed a quadrupedal robot technology that can climb up and down steps and move over uneven terrain such as tree roots without falling over, and without the help of visual or tactile sensors, even in disaster situations where visibility is impeded by darkness or thick smoke from flames.

KAIST (President Kwang Hyung Lee) announced on the 29th of March that Professor Hyun Myung's research team at the Urban Robotics Lab in the School of Electrical Engineering developed a walking robot control technology that enables robust 'blind locomotion' in various atypical environments.

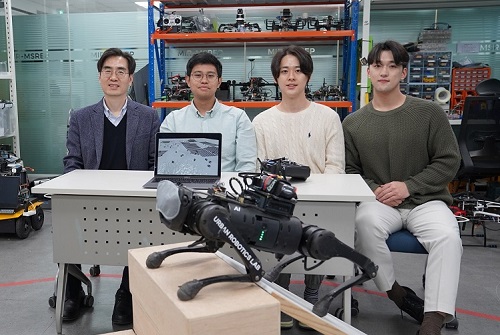

< (From left) Prof. Hyun Myung, Doctoral Candidates I Made Aswin Nahrendra, Byeongho Yu, and Minho Oh. In the foreground is the DreamWaQer, a quadrupedal robot equipped with DreamWaQ technology. >

The KAIST research team named the technology "DreamWaQ" because it enables walking robots to move about even in the dark, just as a person fresh out of bed can walk to the bathroom in the dark without visual help. With this technology installed on any legged robot, it will be possible to create various types of "DreamWaQers".

Existing walking robot controllers are based on kinematics and/or dynamics models, an approach known as model-based control. In atypical environments such as open, uneven fields, such a controller must obtain terrain feature information quickly in order to maintain stability while walking. However, this approach has been shown to depend heavily on the robot's ability to perceive the surrounding environment.

In contrast, the controller developed by Professor Hyun Myung's research team, which is based on deep reinforcement learning (RL), can quickly calculate appropriate control commands for each motor of the walking robot using data from various environments obtained in a simulator. Whereas existing controllers learned in simulation required separate retuning to work on an actual robot, the controller developed by the research team is expected to be easily applied to various walking robots because it does not require an additional tuning process.

DreamWaQ, the controller developed by the research team, is largely composed of a context-aided estimator network that estimates the ground and robot information and a policy network that computes control commands. The context-aided estimator network estimates the ground information implicitly and the robot’s status explicitly from inertial information and joint information. These estimates are fed into the policy network to generate optimal control commands. Both networks are trained together in simulation.
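
The data flow between the two networks can be sketched as follows. This is a toy forward pass with random, untrained weights, not the published DreamWaQ implementation: the layer sizes, the observation layout, the 12-joint robot, and the choice of a 3-D body velocity as the explicit robot state are all assumptions made for illustration.

```python
import math
import random

def make_layer(n_in, n_out, rng):
    """Random weights and zero biases for one fully connected layer."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def linear(layer, x):
    weights, bias = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]

def tanh_vec(x):
    return [math.tanh(v) for v in x]

class Estimator:
    """Maps a short history of proprioceptive observations to two estimates."""
    def __init__(self, obs_dim, history, latent_dim, rng):
        self.hidden = make_layer(obs_dim * history, 32, rng)
        self.velocity_head = make_layer(32, 3, rng)         # explicit robot state (assumed)
        self.latent_head = make_layer(32, latent_dim, rng)  # implicit terrain context

    def __call__(self, obs_history):
        flat = [v for obs in obs_history for v in obs]
        h = tanh_vec(linear(self.hidden, flat))
        return linear(self.velocity_head, h), linear(self.latent_head, h)

class Policy:
    """Maps the current observation plus the estimates to one command per joint."""
    def __init__(self, obs_dim, latent_dim, n_joints, rng):
        self.hidden = make_layer(obs_dim + 3 + latent_dim, 32, rng)
        self.out = make_layer(32, n_joints, rng)

    def __call__(self, obs, velocity, latent):
        h = tanh_vec(linear(self.hidden, obs + velocity + latent))
        return linear(self.out, h)

# Hypothetical sizes: 30 values per observation (IMU and joint readings),
# a history of 5 steps, a 16-D latent, and 12 actuated joints.
OBS_DIM, HISTORY, LATENT_DIM, N_JOINTS = 30, 5, 16, 12
rng = random.Random(0)
estimator = Estimator(OBS_DIM, HISTORY, LATENT_DIM, rng)
policy = Policy(OBS_DIM, LATENT_DIM, N_JOINTS, rng)

obs_history = [[rng.uniform(-1.0, 1.0) for _ in range(OBS_DIM)] for _ in range(HISTORY)]
velocity, latent = estimator(obs_history)
action = policy(obs_history[-1], velocity, latent)
print(len(velocity), len(latent), len(action))
```

The point of the split is that the policy never receives terrain measurements directly; everything it knows about the ground arrives through the estimator's outputs.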

While the context-aided estimator network is trained through supervised learning, the policy network is trained with an actor-critic architecture, a deep RL methodology. The actor network can only infer the surrounding terrain information implicitly. In simulation, however, the surrounding terrain is known, so the critic (the value network), which has access to the exact terrain information, evaluates the policy of the actor network.
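
The asymmetry between actor and critic can be illustrated with a small sketch. The input layouts and the one-step temporal-difference advantage below are assumptions chosen for illustration (one common way for a critic to score an actor's action), not details confirmed by the article.

```python
def actor_input(proprio, estimate):
    """The actor sees only on-board signals plus the estimator's output."""
    return list(proprio) + list(estimate)

def critic_input(proprio, true_terrain_heights):
    """The critic exists only during training, so it may use simulator ground truth."""
    return list(proprio) + list(true_terrain_heights)

def td_advantage(reward, value, next_value, gamma=0.99):
    """One-step advantage: how much better the outcome was than the critic predicted."""
    return reward + gamma * next_value - value

# Hypothetical numbers for a single simulated step.
proprio = [0.1, -0.2, 0.05]
estimate = [0.3, 0.0]
terrain = [0.00, 0.05, 0.20, 0.20]

a_in = actor_input(proprio, estimate)
c_in = critic_input(proprio, terrain)
advantage = td_advantage(reward=1.0, value=0.5, next_value=0.6, gamma=0.9)
print(len(a_in), len(c_in), advantage)
```

A positive advantage pushes the actor toward the action it just took, and a negative one pushes it away. Because the critic is discarded after training, giving it privileged terrain information costs nothing at deployment time.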

The whole training process takes only about an hour on a GPU-enabled PC, and only the trained actor network is deployed on the actual robot. Without looking at the surrounding terrain, the robot imagines which of the various environments learned in simulation the current one resembles, using only its internal inertial sensor (IMU) and joint angle measurements. If it suddenly encounters a height offset, such as a staircase, it will not know until its foot touches the step, but it quickly draws up terrain information the moment its foot touches the surface. The control command suited to the estimated terrain is then transmitted to each motor, enabling rapidly adapted walking.

The DreamWaQer robot walked not only in the laboratory but also outdoors around the campus, over many curbs and speed bumps and across a field with many tree roots and gravel, and it demonstrated its abilities by climbing stairs with a step height two-thirds of its body height. In addition, the research team confirmed that it walked stably regardless of the environment at speeds ranging from a slow 0.3 m/s to a rather fast 1.0 m/s.

This study was carried out by doctoral student I Made Aswin Nahrendra as first author and his colleague Byeongho Yu as co-author. It has been accepted for presentation at the upcoming IEEE International Conference on Robotics and Automation (ICRA), scheduled to be held in London at the end of May. (Paper title: DreamWaQ: Learning Robust Quadrupedal Locomotion With Implicit Terrain Imagination via Deep Reinforcement Learning)

Videos of the walking robot DreamWaQer equipped with DreamWaQ can be found at the links below.

Main Introduction: https://youtu.be/JC1_bnTxPiQ

Experiment Sketches: https://youtu.be/mhUUZVbeDA0

Meanwhile, this research was carried out with the support from the Robot Industry Core Technology Development Program of the Ministry of Trade, Industry and Energy (MOTIE). (Task title: Development of Mobile Intelligence SW for Autonomous Navigation of Legged Robots in Dynamic and Atypical Environments for Real Application)

< Figure 1. Overview of DreamWaQ, a controller developed by this research team. It consists of an estimator network that learns implicit and explicit estimates together, a policy network that acts as a controller, and a value network that guides the policy during training. When implemented in a real robot, only the estimator and policy networks are used. Both networks run in less than 1 ms on the robot's on-board computer. >

< Figure 2. Since the estimator can implicitly estimate the ground information as the foot touches the surface, it is possible to adapt quickly to rapidly changing ground conditions. >

< Figure 3. Results showing that even a small walking robot was able to overcome steps with height differences of about 20 cm. >

2023.05.18 View 11957


‘Mole-bot’ Optimized for Underground and Space Exploration

Biomimetic drilling robot provides new insights into the development of efficient drilling technologies

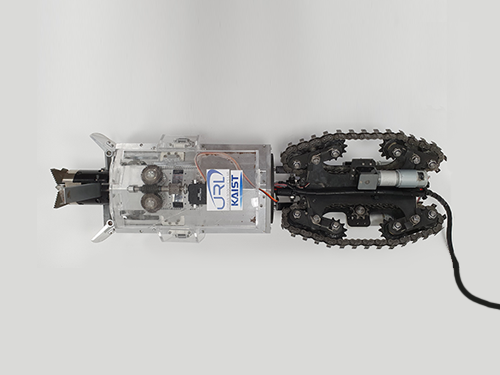

Mole-bot, a biomimetic drilling robot designed by KAIST, boasts a stout scapula, a waist that can incline in all directions, and powerful forelimbs. Its standout feature is the powerful torque of its expandable drill bit, which mimics the chiseling ability of a mole’s front teeth.

The Mole-bot is expected to be used for space exploration and mining for underground resources such as coalbed methane and Rare Earth Elements (REE), which require highly advanced drilling technologies in complex environments.

The research team, led by Professor Hyun Myung from the School of Electrical Engineering, found inspiration for their drilling bot from two striking features of the African mole-rat and European mole.

“The crushing power of the African mole-rat’s teeth is so powerful that they can dig a hole with 48 times more power than their body weight. We used this characteristic for building the main excavation tool. And its expandable drill is designed not to collide with its forelimbs,” said Professor Myung.

The 25-cm wide and 84-cm long Mole-bot can excavate three times faster with six times higher directional accuracy than conventional models. The Mole-bot weighs 26 kg.

After digging, the robot removes the excavated soil and debris using its forelimbs. This embedded muscle feature, inspired by the European mole’s scapula, converts linear motion into a powerful rotational force. For directional drilling, the robot’s elongated waist changes its direction 360° like living mammals.

For exploring underground environments, the research team developed and applied new sensor systems and algorithms to identify the robot’s position and orientation using graph-based 3D Simultaneous Localization and Mapping (SLAM) technology that matches the Earth’s magnetic field sequence, which enables 3D autonomous navigation underground.

According to Market & Market’s survey, the directional drilling market was estimated at 83.3 billion USD in 2016 and is expected to grow to 103 billion USD in 2021. The growth of the drilling market, which started with the Shale Revolution, is likely to extend to the future development of space and polar resources. With SpaceX's recent initiatives, interest in planetary exploration is expected to rise, and the market for related technology and equipment is expected to grow with it.

The Mole-bot is a huge step forward for efficient underground drilling and exploration technologies. Unlike conventional drilling processes that use environmentally unfriendly mud compounds for cleaning debris, Mole-bot can mitigate environmental destruction. The researchers said their system saves on cost and labor and does not require additional pipelines or other ancillary equipment.

“We look forward to a more efficient resource exploration with this type of drilling robot. We also hope Mole-bot will have a very positive impact on the robotics market in terms of its extensive application spectra and economic feasibility,” said Professor Myung.

This research, made in collaboration with Professor Jung-Wuk Hong and Professor Tae-Hyuk Kwon’s team in the Department of Civil and Environmental Engineering for robot structure analysis and geotechnical experiments, was supported by the Ministry of Trade, Industry and Energy’s Industrial Technology Innovation Project.

Profile

Professor Hyun Myung

Urban Robotics Lab

http://urobot.kaist.ac.kr/

School of Electrical Engineering

KAIST

2020.06.05 View 12278


BBC Features KAIST's Jellyfish Robot

Click, a weekly BBC television program covering news and recent developments in science and technology, introduced KAIST’s robotics project, JEROS, which has been conducted by Professor Hyun Myung of the Urban Robotics Lab (http://urobot.kaist.ac.kr/). JEROS is a robotics system that detects, captures, and removes jellyfish in the ocean. For the show, please click the link below:

BBC News, Click, June 2, 2015

The Robot Jellyfish Shredders

http://www.bbc.com/news/technology-32965841

2015.06.03 View 10286


Wall Climbing Quadcopter by KAIST Urban Robotics Lab

On March 19, 2015, Popular Science, an American monthly magazine for general readers of science and technology, published “Watch This Creepy Drone Climb A Wall” online, describing a drone that can both fly and climb walls. The drone is the product of research conducted by Professor Hyun Myung of the Department of Civil and Environmental Engineering at KAIST. The flying quadcopter can turn into a wall-crawling robot, and vice versa, when carrying out assignments such as cleaning windows or inspecting a building’s infrastructure. Professor Myung leads the KAIST Urban Robotics Lab (http://urobot.kaist.ac.kr/). For a link to the article, see http://www.popsci.com/watch-drone-climb-wall-video.

Another Popular Science article (posted on April 3, 2015), entitled “South Korea Gets Ready for Drone-on-Drone Warfare with North Korea,” describes a system of drones for combating hostile drones. Professor Hyunchul Shim of the Aerospace Engineering Department at KAIST developed the anti-drone system. He currently heads the Unmanned System Research Group (FDCL, http://unmanned.kaist.ac.kr/) and the Center of Field Robotics for Innovation, Exploration, aNd Defense (C-FRIEND).

2015.04.07 View 13522


JEROS, a jellyfish-exterminating robot, appears on a US business and technology news site

Business Insider, a US business and technology news website launched in 2006 and based in New York City, published a story about JEROS, a robot that disposes of the ever-increasing numbers of jellyfish in the ocean.

JEROS is the brainchild of Professor Hyun Myung of the Department of Civil and Environmental Engineering at KAIST. It can shred almost one ton of jellyfish per hour.

For the story, please visit the following link:

Business Insider, June 24, 2014

“These Jellyfish-Killing Robots Could Save the Fishing Industry Billions Per Year”

http://www.businessinsider.com/jellyfish-killing-robot-2014-6

JEROS in action

2014.06.26 View 9966


Jellyfish Exterminator Robot Developed

Formation Control demonstrated by JEROS

- Trial performance successfully completed with three assembly robots -

A team led by Professor Hyun Myung of KAIST’s Department of Civil and Environmental Engineering has just finished field-testing JEROS, a cooperative assembly robot for jellyfish population control.

The rising number of accidents and the financial losses to the fishing industry, estimated at 300 billion won per year, caused by recent jellyfish swarms in coastal waters have been a major problem for many years. The research team led by Prof. Hyun Myung began developing an unmanned automated system capable of eradicating jellyfish in 2009 and successfully completed field tests last year.

This year, JEROS’s performance and speed have been improved, and the robots can now work in formation as a cooperative group to exterminate jellyfish efficiently.

JEROS is an unmanned aquatic robot with a mountable grinding part. It is buoyed by two cylindrical bodies fitted with propulsion motors, which let it move forward and in reverse as well as rotate 360 degrees. GIS (geographic information system)-based map data is used to specify the region for jellyfish extermination, and the path for the task is calculated automatically. JEROS then navigates autonomously using a GPS (Global Positioning System) receiver and an INS (inertial navigation system).

The assembly robots maintain a set formation pattern while calculating their course to perform jellyfish extermination. The advantage of this method is that the robots do not need to be controlled individually. Only the leader robot requires the calculated path, and the other robots simply follow in formation by exchanging their location information via wireless communication (ZigBee).
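The leader-follower idea described above can be illustrated with a minimal Python sketch. It is not the team's controller: the `Pose` type, the function names, the slot offsets and the step size are all hypothetical, and the wireless exchange is reduced to passing the leader's pose as an argument. Each follower computes its slot from the leader's reported pose and moves toward it, so only the leader needs the planned path.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Pose:
    x: float        # metres east (assumed local flat frame)
    y: float        # metres north
    heading: float  # radians, 0 = east, counter-clockwise positive


def follower_target(leader: Pose, back: float, left: float) -> Tuple[float, float]:
    """World-frame slot for a follower, given offsets in the leader's body frame.

    `back` is the distance behind the leader, `left` the distance to its left.
    Both offsets are illustrative assumptions, not values from the article.
    """
    cos_h, sin_h = math.cos(leader.heading), math.sin(leader.heading)
    # Forward unit vector is (cos_h, sin_h); left unit vector is (-sin_h, cos_h).
    x = leader.x - back * cos_h - left * sin_h
    y = leader.y - back * sin_h + left * cos_h
    return x, y


def step_towards(follower: Pose, target: Tuple[float, float], max_step: float) -> Pose:
    """Move the follower at most `max_step` metres straight toward its slot."""
    dx, dy = target[0] - follower.x, target[1] - follower.y
    dist = math.hypot(dx, dy)
    if dist < 1e-9:
        return follower  # already in its slot
    step = min(dist, max_step)
    heading = math.atan2(dy, dx)
    return Pose(follower.x + step * math.cos(heading),
                follower.y + step * math.sin(heading),
                heading)


if __name__ == "__main__":
    leader = Pose(10.0, 0.0, 0.0)    # leader follows the planned path
    follower = Pose(0.0, 0.0, 0.0)   # follower only knows the leader's pose
    slot = follower_target(leader, back=2.0, left=1.0)
    follower = step_towards(follower, slot, max_step=1.0)
    print(slot, follower)
```

A real system would also have to handle message delays, GPS noise and collision avoidance, which this sketch ignores.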

JEROS uses its forward speed to draw jellyfish into the grinding part on its underside, which then sucks them toward the propeller to be exterminated.

The field test results show that three assembly robots operating at 4 knots (7.2 km/h) dispose of jellyfish at a rate of about 900 kg/h.

The research team has completed testing JEROS in Masan Bay, Gyeongnam, and is expected to run further experiments and improve its performance in various environments and conditions.

JEROS may also be utilized for other purposes including marine patrols, prevention of oil spills and waste removal in the sea.

JEROS research has been funded by the Ministry of Science, ICT and Future Planning and the Ministry of Trade, Industry and Energy.

2013.09.27 View 18040


Jellyfish removal robot developed

Professor Hyun Myung’s research team from the Department of Civil and Environmental Engineering at KAIST has developed a jellyfish removal robot named ‘JEROS’ (Jellyfish Elimination RObotic Swarm).

With jellyfish attacks around the south-west coast of Korea becoming a serious problem, causing deaths and operational losses (around 3 billion won a year), Professor Myung’s team started developing this unmanned automatic jellyfish removal system three years ago.

JEROS floats on the surface of the water on two long cylindrical bodies. Motors attached to the bodies let the robot move back and forth as well as rotate on the water. A camera and GPS system allow JEROS to detect jellyfish swarms and to plan and calculate its work path relative to its position.

The jellyfish are removed by a submerged net that draws them in using the flow created as the unmanned robot moves. Once caught, the jellyfish are pulverized by a special propeller.

JEROS is estimated to be three times more economical than manual removal. In experiments, it showed a removal rate of 400 kg per hour at 6 knots. To match the effectiveness of manual net removal, which removes up to 1 ton per hour, the research team designed the robot so that three or more individual robots can be grouped together and controlled as one.
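The grouping figure can be checked with a short calculation. The sketch below assumes, purely for illustration, that removal rates add linearly across robots and that 1 ton means 1,000 kg. The function name is hypothetical.

```python
import math


def robots_needed(target_kg_per_h: float, per_robot_kg_per_h: float) -> int:
    """Smallest number of robots whose combined rate meets the target.

    Assumes each robot contributes the same rate and that rates simply add,
    which is an idealisation rather than a measured result.
    """
    if per_robot_kg_per_h <= 0:
        raise ValueError("per-robot rate must be positive")
    return math.ceil(target_kg_per_h / per_robot_kg_per_h)


if __name__ == "__main__":
    # 1 ton/h manual benchmark against 400 kg/h per robot, as quoted above.
    print(robots_needed(1000, 400))  # prints 3
```

Two robots would reach only 800 kg per hour under this assumption, so three is the smallest group that matches the manual benchmark.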

The research team has finished conducting removal tests in Gunsan and Masan and plans to commercialize the robot next April after improving the removal technology. JEROS technology can also be used for a wide range of purposes such as patrolling and guarding, preventing oil spills or removing floating waste. This research has been funded by the Ministry of Education, Science and Technology since 2010.

2012.08.29 View 13467
