interaction

-

“For the First Time, We Shared a Meaningful Exchange”: KAIST Develops an AI App to Help Parents and Minimally Verbal Autistic Children Connect

• KAIST teams up with NAVER AI Lab and Dodakim Child Development Center to develop ‘AACessTalk’, an AI-driven communication tool bridging the gap between children with autism and their parents

• The project earned the prestigious Best Paper Award at ACM CHI 2025, the premier international conference on Human-Computer Interaction

• Families share heartwarming stories of breakthrough communication and newfound understanding.

< Photo 1. (From left) Professor Hwajung Hong and Doctoral candidate Dasom Choi of the Department of Industrial Design with SoHyun Park and Young-Ho Kim of Naver Cloud AI Lab >

For many families of minimally verbal autistic (MVA) children, communication often feels like an uphill battle. But now, thanks to a new AI-powered app developed by researchers at KAIST in collaboration with NAVER AI Lab and Dodakim Child Development Center, parents are finally experiencing moments of genuine connection with their children.

On the 16th, a KAIST (President Kwang Hyung Lee) research team led by Professor Hwajung Hong of the Department of Industrial Design announced the development of ‘AACessTalk,’ an artificial intelligence (AI)-based communication tool that enables genuine communication between children with autism and their parents.

This research was recognized for its human-centered AI approach and received international attention, earning the Best Paper Award at ACM CHI 2025*, an international conference held in Yokohama, Japan.

* ACM CHI (ACM Conference on Human Factors in Computing Systems) 2025: One of the world's most prestigious academic conferences in the field of Human-Computer Interaction (HCI).

This year, approximately 1,200 papers were selected out of about 5,000 submissions, with the Best Paper Award given to only the top 1%. The conference, which drew over 5,000 researchers, was the largest in its history, reflecting the growing interest in ‘Human-AI Interaction.’

Called AACessTalk, the app offers personalized vocabulary cards tailored to each child’s interests and context, while guiding parents through conversations with customized prompts. This creates a space where children’s voices can finally be heard—and where parents and children can connect on a deeper level.

Traditional augmentative and alternative communication (AAC) tools have relied heavily on fixed card systems that often fail to capture the subtle emotions and shifting interests of children with autism. AACessTalk breaks new ground by integrating AI technology that adapts in real time to the child’s mood and environment.

< Figure. Schematic of the AACessTalk system. It provides personalized vocabulary cards for children with autism and context-based conversation guides for parents to focus on practical communication. A large ‘Turn Pass Button’ is placed on the child’s side to allow the child to lead the conversation. >

Among its standout features is a large ‘Turn Pass Button’ that gives children control over when to start or end conversations—allowing them to lead with agency. Another feature, the “What about Mom/Dad?” button, encourages children to ask about their parents’ thoughts, fostering mutual engagement in dialogue, something many children had never done before.

One parent shared, “For the first time, we shared a meaningful exchange.” Such stories were common among the 11 families who participated in a two-week pilot study, where children used the app to take more initiative in conversations and parents discovered new layers of their children’s language abilities.

Parents also reported moments of surprise and joy when their children used unexpected words or took the lead in conversations, breaking free from repetitive patterns. “I was amazed when my child used a word I hadn’t heard before. It helped me understand them in a whole new way,” recalled one caregiver.

Professor Hwajung Hong, who led the research at KAIST’s Department of Industrial Design, emphasized the importance of empowering children to express their own voices. “This study shows that AI can be more than a communication aid—it can be a bridge to genuine connection and understanding within families,” she said.

Looking ahead, the team plans to refine and expand human-centered AI technologies that honor neurodiversity, with a focus on bringing practical solutions to socially vulnerable groups and enriching user experiences.

This research is the result of KAIST Department of Industrial Design doctoral student Dasom Choi's internship at NAVER AI Lab.

* Paper title: AACessTalk: Fostering Communication between Minimally Verbal Autistic Children and Parents with Contextual Guidance and Card Recommendation

* DOI: 10.1145/3706598.3713792

* Main Author Information: Dasom Choi (KAIST, NAVER AI Lab, First Author), SoHyun Park (NAVER AI Lab), Kyungah Lee (Dodakim Child Development Center), Hwajung Hong (KAIST), and Young-Ho Kim (NAVER AI Lab, Corresponding Author)

This research was supported by the NAVER AI Lab internship program and grants from the National Research Foundation of Korea: the Doctoral Student Research Encouragement Grant (NRF-2024S1A5B5A19043580) and the Mid-Career Researcher Support Program for the Development of a Generative AI-Based Augmentative and Alternative Communication System for Autism Spectrum Disorder (RS-2024-00458557).

2025.05.19 View 3708

-

KAIST's Pioneering VR Precision Technology & Choreography Tool Receive Spotlights at CHI 2025

Accurate pointing in virtual spaces is essential for seamless interaction. If pointing is not precise, selecting the desired object becomes challenging, breaking user immersion and reducing overall experience quality. KAIST researchers have developed a technology that offers a vivid, lifelike experience in virtual space, alongside a new tool that assists choreographers throughout the creative process.

KAIST (President Kwang-Hyung Lee) announced on May 13th that a research team led by Professor Sang Ho Yoon of the Graduate School of Culture Technology, in collaboration with Professor Yang Zhang of the University of California, Los Angeles (UCLA), has developed the ‘T2IRay’ technology and the ‘ChoreoCraft’ platform, which enables choreographers to work more freely and creatively in virtual reality. The two technologies each received an Honorable Mention award, which recognizes the top 5% of papers, at CHI 2025*, the premier international conference in the field of human-computer interaction, hosted by the Association for Computing Machinery (ACM) from April 26 to May 1.

< (From left) PhD candidates Jina Kim and Kyungeun Jung, Master's candidate Hyunyoung Han, and Professor Sang Ho Yoon of the KAIST Graduate School of Culture Technology, and Professor Yang Zhang (top) of UCLA >

T2IRay: Enabling Virtual Input with Precision

T2IRay introduces a novel input method that allows for precise object pointing in virtual environments by expanding traditional thumb-to-index gestures. This approach overcomes previous limitations, such as interruptions or reduced accuracy due to changes in hand position or orientation.

The technology uses a local coordinate system based on finger relationships, ensuring continuous input even as hand positions shift. It accurately captures subtle thumb movements within this coordinate system, integrating natural head movements to allow fluid, intuitive control across a wide range.
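The idea of a hand-local coordinate system can be illustrated with a minimal sketch. It is not the T2IRay implementation: the choice of landmarks (index base, index tip, middle-finger base, thumb tip), the axis convention, and all function names are assumptions made for illustration, and head movement is not modeled. It only shows why a thumb position expressed in a frame built from the fingers stays the same when the whole hand moves or rotates.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(dot(v, v))
    if n == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return (v[0] / n, v[1] / n, v[2] / n)


def thumb_in_index_frame(index_base: Vec3, index_tip: Vec3,
                         middle_base: Vec3, thumb_tip: Vec3) -> Vec3:
    """Express the thumb tip in a frame attached to the index finger.

    Hypothetical convention: origin at the index base, x along the index
    finger, y toward the middle finger (orthogonalized), z = x cross y.
    """
    x_axis = normalize(sub(index_tip, index_base))
    side = sub(middle_base, index_base)
    # Gram-Schmidt step: remove the part of `side` that lies along x.
    along = dot(side, x_axis)
    y_axis = normalize((side[0] - along * x_axis[0],
                        side[1] - along * x_axis[1],
                        side[2] - along * x_axis[2]))
    z_axis = cross(x_axis, y_axis)
    d = sub(thumb_tip, index_base)
    return (dot(d, x_axis), dot(d, y_axis), dot(d, z_axis))


def rotate_z_90_and_shift(p: Vec3, shift: Vec3) -> Vec3:
    """Rigid motion of the whole hand: 90-degree turn about world z, then a shift."""
    return (-p[1] + shift[0], p[0] + shift[1], p[2] + shift[2])


if __name__ == "__main__":
    hand = [(0.0, 0.0, 0.0), (8.0, 0.0, 0.0), (0.0, 2.0, 0.0), (3.0, 1.0, -2.0)]
    moved = [rotate_z_90_and_shift(p, (10.0, -5.0, 2.0)) for p in hand]
    print(thumb_in_index_frame(*hand))
    print(thumb_in_index_frame(*moved))  # same local coordinates after the hand moves
```

Because the frame is rebuilt from the finger landmarks on every update, a rigid motion of the hand changes the world coordinates of every landmark but leaves the thumb's local coordinates unchanged, which is the property the paragraph above describes.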

< Figure 1. T2IRay framework utilizing the delicate movements of the thumb and index fingers for AR/VR pointing >

Professor Sang Ho Yoon explained, “T2IRay can significantly enhance the user experience in AR/VR by enabling smooth, stable control even when the user’s hands are in motion.”

This study, led by first author Jina Kim, was supported by the Excellent New Researcher Support Project of the National Research Foundation of Korea under the Ministry of Science and ICT, as well as the University ICT Research Center (ITRC) Support Project of the Institute of Information and Communications Technology Planning and Evaluation (IITP).

▴ Paper title: T2IRay: Design of Thumb-to-Index Based Indirect Pointing for Continuous and Robust AR/VR Input

▴ Paper link: https://doi.org/10.1145/3706598.3713442

▴ T2IRay demo video: https://youtu.be/ElJlcJbkJPY

ChoreoCraft: Creativity Support through VR for Choreographers

In addition, Professor Yoon’s team developed ‘ChoreoCraft,’ a virtual reality tool designed to support choreographers by addressing the unique challenges they face, such as memorizing complex movements, overcoming creative blocks, and managing subjective feedback.

ChoreoCraft reduces reliance on memory by allowing choreographers to save and refine movements directly within a VR space, using a motion-capture avatar for real-time interaction. It also enhances creativity by suggesting movements that naturally fit with prior choreography and musical elements. Furthermore, the system provides quantitative feedback by analyzing kinematic factors like motion stability and engagement, helping choreographers make data-driven creative decisions.

< Figure 2. ChoreoCraft's approaches to encourage creative process >

Professor Yoon noted, “ChoreoCraft is a tool designed to address the core challenges faced by choreographers, enhancing both creativity and efficiency. In user tests with professional choreographers, it received high marks for its ability to spark creative ideas and provide valuable quantitative feedback.”

This research was conducted in collaboration with doctoral candidate Kyungeun Jung and master’s candidate Hyunyoung Han, alongside the Electronics and Telecommunications Research Institute (ETRI) and One Million Co., Ltd. (CEO Hye-rang Kim), with support from the Cultural and Arts Immersive Service Development Project by the Ministry of Culture, Sports and Tourism.

▴ Paper title: ChoreoCraft: In-situ Crafting of Choreography in Virtual Reality through Creativity Support Tools

▴ Paper link: https://doi.org/10.1145/3706598.3714220

▴ ChoreoCraft demo video: https://youtu.be/Ms1fwiSBjjw

*CHI (Conference on Human Factors in Computing Systems): The premier international conference on human-computer interaction, organized by the ACM. This year it was held from April 26 to May 1, 2025.

2025.05.13 View 3429

-

KAIST & CMU Unveil Amuse, a Songwriting AI Collaborator to Help Create Music

Wouldn't it be great if music creators had someone to brainstorm with, help them when they're stuck, and explore different musical directions together? Researchers at KAIST and Carnegie Mellon University (CMU) have developed an AI technology that acts like a fellow songwriter, helping creators make music.

KAIST (President Kwang-Hyung Lee) announced that a research team led by Professor Sung-Ju Lee of the School of Electrical Engineering, in collaboration with CMU, has developed Amuse, an AI-based music creation support system. The research was presented at the ACM Conference on Human Factors in Computing Systems (CHI), one of the world’s top conferences in human-computer interaction, held in Yokohama, Japan from April 26 to May 1. It received the Best Paper Award, given to only the top 1% of all submissions.

< (From left) Professor Chris Donahue of Carnegie Mellon University, Ph.D. Student Yewon Kim and Professor Sung-Ju Lee of the School of Electrical Engineering >

The system developed by Professor Sung-Ju Lee’s research team, Amuse, is an AI-based system that converts various forms of inspiration such as text, images, and audio into harmonic structures (chord progressions) to support composition.

For example, if a user inputs a phrase, image, or sound clip such as “memories of a warm summer beach”, Amuse automatically generates and suggests chord progressions that match the inspiration.

Unlike existing generative AI, Amuse respects the user's creative flow and encourages creative exploration through an interactive method that lets users flexibly integrate and modify AI suggestions.

The core technology of the Amuse system is a generation method that blends two approaches: a large language model generates chord progressions based on the user's prompt and inspiration, while another AI model, trained on real music data, filters out awkward or unnatural results using rejection sampling.
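The generate-then-filter pattern described above can be sketched in a few lines. This is an illustrative sketch only, not the Amuse implementation: the toy transition table stands in for a model trained on real music, the `propose` callable stands in for the large language model, and the names `mean_log_prob`, `rejection_filter`, and the `ceiling` parameter are all hypothetical. Candidates are accepted with a probability that grows with their score under the second model, so unnatural progressions are mostly discarded.

```python
import math
import random
from typing import Callable, List, Sequence

Progression = Sequence[str]

# Hypothetical toy stand-in for a model trained on real music:
# probabilities of moving from one chord to the next.
TOY_TRANSITION_PROB = {
    ("C", "G"): 0.4,
    ("G", "Am"): 0.4,
    ("Am", "F"): 0.4,
    ("F", "C"): 0.4,
}
UNSEEN_PROB = 0.01  # assumed floor for transitions the toy model never saw


def mean_log_prob(progression: Progression) -> float:
    """Average log-probability of consecutive chord transitions."""
    pairs = list(zip(progression, progression[1:]))
    if not pairs:
        return math.log(UNSEEN_PROB)
    total = sum(math.log(TOY_TRANSITION_PROB.get(p, UNSEEN_PROB)) for p in pairs)
    return total / len(pairs)


def rejection_filter(propose: Callable[[], Progression],
                     score: Callable[[Progression], float],
                     n_accept: int,
                     ceiling: float,
                     rng: random.Random,
                     max_tries: int = 1000) -> List[List[str]]:
    """Keep a proposal with probability min(1, exp(score - ceiling))."""
    accepted: List[List[str]] = []
    for _ in range(max_tries):
        if len(accepted) >= n_accept:
            break
        candidate = propose()
        delta = score(candidate) - ceiling
        accept_prob = 1.0 if delta >= 0 else math.exp(delta)
        if rng.random() < accept_prob:
            accepted.append(list(candidate))
    return accepted


if __name__ == "__main__":
    rng = random.Random(0)
    # Stand-in for LLM output: one common and one unusual progression.
    pool = [["C", "G", "Am", "F"], ["C", "F#", "Bb", "E"]]
    kept = rejection_filter(lambda: rng.choice(pool), mean_log_prob,
                            n_accept=3, ceiling=math.log(0.4), rng=rng)
    print(kept)
```

In this sketch the proposal step and the scoring step are independent, so either could be replaced (for example, a different generator or a stricter `ceiling`) without touching the other.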

< Figure 1. Amuse system configuration. After music keywords are extracted from user input, a chord progression is generated by a large language model and refined through rejection sampling (left). Chord extraction from audio input is also possible (right). The bottom shows an example visualizing the structure of the generated chords. >

The research team conducted a user study with working musicians and found that Amuse has high potential as a creative companion, or Co-Creative AI: a concept in which people and AI collaborate, rather than having a generative AI simply put together a song.

The paper, co-authored by Ph.D. student Yewon Kim and Professor Sung-Ju Lee of the KAIST School of Electrical Engineering and Professor Chris Donahue of Carnegie Mellon University, demonstrated the potential of creative AI system design for both academia and industry.

※ Paper title: Amuse: Human-AI Collaborative Songwriting with Multimodal Inspirations

※ DOI: https://doi.org/10.1145/3706598.3713818

※ Research demo video: https://youtu.be/udilkRSnftI?si=FNXccC9EjxHOCrm1

※ Research homepage: https://nmsl.kaist.ac.kr/projects/amuse/

Professor Sung-Ju Lee said, “Recent generative AI technology has raised concerns because it can directly imitate copyrighted content, thereby violating the creator's copyright, or generate results one-sidedly regardless of the creator’s intention. Aware of this trend, the research team paid attention to what creators actually need and focused on designing an AI system centered on the creator.”

He continued, “Amuse is an attempt to explore the possibility of collaboration with AI while maintaining the initiative of the creator, and is expected to be a starting point for suggesting a more creator-friendly direction in the development of music creation tools and generative AI systems in the future.”

This research was conducted with the support of the National Research Foundation of Korea with funding from the government (Ministry of Science and ICT). (RS-2024-00337007)

2025.05.07 View 4891

-

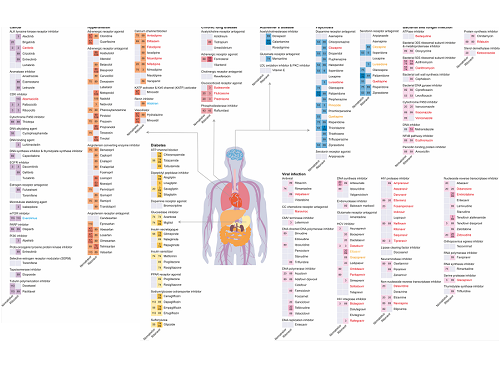

KAIST leads AI-based analysis on drug-drug interactions involving Paxlovid

KAIST (President Kwang Hyung Lee) announced on the 16th that Distinguished Professor Sang Yup Lee's research team in the Department of Biochemical Engineering has used an advanced AI-based drug interaction prediction technology to analyze interactions between the ingredients of Paxlovid™, a COVID-19 treatment, and other prescription drugs. The paper was published in the online edition of the Proceedings of the National Academy of Sciences of the United States of America (PNAS), an internationally renowned academic journal, on March 13.

* Paper title: Computational prediction of interactions between Paxlovid and prescription drugs (Authored by Yeji Kim (KAIST, co-first author), Jae Yong Ryu (Duksung Women's University, co-first author), Hyun Uk Kim (KAIST, co-first author), and Sang Yup Lee (KAIST, corresponding author))

In this study, the research team developed DeepDDI2, an advanced version of DeepDDI, the AI-based drug interaction prediction model the team developed in 2018. DeepDDI2 can predict a total of 113 drug-drug interaction (DDI) types, up from the 86 DDI types covered by the original DeepDDI.

The research team used DeepDDI2 to predict possible interactions between the ingredients (ritonavir and nirmatrelvir) of Paxlovid*, a COVID-19 treatment, and other prescription drugs. The team noted that high-risk COVID-19 patients with chronic diseases such as high blood pressure and diabetes are likely to be taking other drugs, yet drug-drug interactions and adverse drug reactions involving Paxlovid have not been sufficiently analyzed. The study was motivated by the concern that continued use of the drug may lead to serious and unwanted complications.

* Paxlovid: Paxlovid is a COVID-19 treatment developed by Pfizer, an American pharmaceutical company, and received emergency use approval (EUA) from the US Food and Drug Administration (FDA) in December 2021.

The research team used DeepDDI2 to predict how Paxlovid's components, ritonavir and nirmatrelvir, would interact with 2,248 prescription drugs. Ritonavir was predicted to interact with 1,403 prescription drugs and nirmatrelvir with 673.

Using the prediction results, the research team proposed alternative drugs with the same mechanism but low drug interaction potential for prescription drugs with high adverse drug events (ADEs). Accordingly, 124 alternative drugs that could reduce the possible adverse DDI with ritonavir and 239 alternative drugs for nirmatrelvir were identified.

Through this research, it became possible to use a deep learning technology to accurately predict drug-drug interactions (DDIs). This is expected to play an important role in the digital healthcare, precision medicine, and pharmaceutical industries by providing useful information for developing new drugs and making prescriptions.

Distinguished Professor Sang Yup Lee said, “The results of this study are meaningful in that, when we have to rely on drugs developed in a hurry in an urgent situation such as the COVID-19 pandemic, it is now possible to quickly identify and take the necessary action against adverse drug reactions caused by drug-drug interactions.”

This research was carried out with the support of the KAIST New-Deal Project for COVID-19 Science and Technology and the Bio·Medical Technology Development Project supported by the Ministry of Science and ICT.

Figure 1. Results of drug interaction prediction between Paxlovid ingredients and representative approved drugs using DeepDDI2

2023.03.16 View 9642 -

Scientists rewrite FDA-recommended equation to improve estimation of drug-drug interactions

Drugs absorbed into the body are metabolized and thus removed by enzymes from several organs, such as the liver. How fast a drug is cleared from the system can be affected by other drugs taken together with it, because an added substance can increase the amount of enzyme secretion in the body. This dramatically decreases the concentration of the drug and reduces its efficacy, often to the point of having no effect at all. Therefore, accurately predicting the clearance rate in the presence of drug-drug interaction* is critical in drug prescription and in the development of new drugs, both to ensure efficacy and to avoid unwanted side effects.

*Drug-drug interaction: In terms of metabolism, drug-drug interaction is a phenomenon in which one drug changes the metabolism of another drug to promote or inhibit its excretion from the body when two or more drugs are taken together. As a result, it increases the toxicity of medicines or causes loss of efficacy.

Since it is practically impossible to evaluate all interactions between new drug candidates and all marketed drugs during the development process, the FDA recommends evaluating drug interactions indirectly using a formula suggested in its guidance, first published in 1997 and revised in January 2020, in order to minimize the side effects of taking more than one drug at once.

The formula relies on the 110-year-old Michaelis-Menten (MM) model, whose fundamental limitation is a very broad and unjustified assumption about the amount of enzyme that metabolizes the drug. Although the MM equation is one of the most widely known equations in biochemistry, used in more than 220,000 published papers, it is accurate only when the concentration of the metabolizing enzyme is close to negligible. This makes the accuracy of the FDA formula highly unsatisfactory: only 38 percent of its predictions had less than two-fold errors.
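
For reference, the textbook form of the Michaelis-Menten rate law is sketched below. The symbols are the standard ones from biochemistry texts and are not taken from the FDA guidance or from the paper itself; the second line is the commonly cited validity condition, which is what a low enzyme concentration means in practice.

```latex
\[
  v = \frac{V_{\max}\,[S]}{K_M + [S]}, \qquad V_{\max} = k_{\mathrm{cat}}\,[E]_T
\]
% Commonly cited validity condition: total enzyme is small relative to K_M + [S]
\[
  [E]_T \ll K_M + [S]
\]
```

Here v is the metabolism rate, [S] the drug (substrate) concentration, [E]_T the total enzyme concentration, K_M the Michaelis constant, and k_cat the catalytic rate constant.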

“To make up for the gap, researchers resorted to plugging scientifically unjustified constants into the equation,” Professor Jung-woo Chae of Chungnam National University College of Pharmacy said. “This is comparable to introducing epicycles to explain the motion of the planets in order to prop up the now-defunct Ptolemaic theory, because it was 'THE' theory back then.”

< (From left) Ph.D. student Yun Min Song (KAIST, co-first author), Professor Sang Kyum Kim (Chungnam National University, co-corresponding author), Jae Kyoung Kim, CI (KAIST, co-corresponding author), Professor Jung-woo Chae (Chungnam National University, co-corresponding author), Ph.D. students Quyen Thi Tran and Ngoc-Anh Thi Vu (Chungnam National University, co-first authors) >

A joint research team composed of mathematicians from the Biomedical Mathematics Group within the Institute for Basic Science (IBS) and the Korea Advanced Institute of Science and Technology (KAIST) and pharmacological scientists from Chungnam National University reported that they had identified the major causes of the FDA-recommended equation’s inaccuracies and presented a solution.

When estimating the gut bioavailability (Fg), the key parameter of the equation, the fraction absorbed from the gut lumen (Fa) is usually assumed to be 1. However, many experiments have shown that Fa is less than 1, since an orally taken drug cannot be expected to be completely absorbed by the intestines. To solve this problem, the research team used an “estimated Fa” value based on factors such as the drug’s transit time, intestine radius, and permeability, and used it to re-calculate Fg.

Also, departing from the MM equation, the team used an alternative model they derived in a previous study in 2020, which can more accurately predict the drug metabolism rate regardless of the enzyme concentration. Combining these changes, the modified equation with the re-calculated Fg produced dramatically more accurate estimates. The existing FDA formula predicted drug interactions within a two-fold margin of error 38% of the time, whereas the revised formula did so 80% of the time.
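
The "within two-fold" accuracy figure quoted above can be computed as follows. This is a minimal sketch of the metric only, assuming a prediction counts as accurate when the ratio of predicted to measured value lies between 0.5 and 2, as the Figure 2 caption describes. The function name and the sample numbers are hypothetical and are not data from the study.

```python
from typing import Sequence


def fraction_within_twofold(predicted: Sequence[float],
                            measured: Sequence[float]) -> float:
    """Return the share of predictions whose ratio to the measured
    value lies in the closed interval [0.5, 2]."""
    if len(predicted) != len(measured) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum(1 for p, m in zip(predicted, measured) if 0.5 <= p / m <= 2.0)
    return hits / len(predicted)


# Made-up AUCR values for illustration only (not from the paper).
measured = [0.20, 0.35, 0.50, 0.80, 0.10]
predicted = [0.25, 0.80, 0.45, 0.70, 0.30]

# Ratios are 1.25, about 2.29, 0.9, 0.875 and 3.0, so three of five qualify.
print(fraction_within_twofold(predicted, measured))
```

Applied to a full validation set, the same calculation would yield the kind of percentage reported for the two formulas.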

“Such a drastic improvement in drug-drug interaction prediction accuracy is expected to make a great contribution to increasing the success rate of new drug development and drug efficacy in clinical trials. As the results of this study were published in one of the top clinical pharmacology journals, it is expected that the FDA guidance will be revised according to the results of this study,” said Professor Sang Kyum Kim from Chungnam National University College of Pharmacy.

Furthermore, this study highlights the importance of collaborative research between research groups in vastly different disciplines, in a field that is as dynamic as drug interactions.

“Thanks to the collaborative research between mathematics and pharmacy, we were able to rectify the formula that we have accepted to be the right answer for so long, and to finally grasp a lead toward a healthier life for mankind,” said Professor Jae Kyoung Kim. He continued, “I hope to see a ‘K-formula’ entered into the US FDA guidance one day.”

The results of this study were published in the online edition of Clinical Pharmacology and Therapeutics (IF 7.051), an authoritative journal in the field of clinical pharmacology, on December 15, 2022 (Korean time).

Thesis Title: Beyond the Michaelis-Menten: Accurate Prediction of Drug Interactions through Cytochrome P450 3A4 Induction (doi: 10.1002/cpt.2824)

< Figure 1. The formula proposed by the FDA guidance for predicting drug-drug interactions (top) and the formula newly derived by the researchers (bottom). AUCR (the ratio of substrate area under the plasma concentration-time curve) represents the rate of change in drug concentration due to drug interactions. The research team more than doubled the accuracy of drug interaction prediction compared to the existing formula. >

< Figure 2. The existing FDA formula tends to underestimate the extent of drug-drug interactions (gray dots) relative to the measured values. In contrast, the share of predictions from the newly derived equation (red dots) that fall within a two-fold error range (0.5 to 2 times the measured value) is more than twice that of the existing equation. The solid line represents predictions that match the measured values. The dotted lines mark predictions that are 0.5 and 2 times the measured values. >

For further information or to request media assistance, please contact Jae Kyoung Kim at Biomedical Mathematics Group, Institute for Basic Science (IBS) (jaekkim@ibs.re.kr) or William I. Suh at the IBS Communications Team (willisuh@ibs.re.kr).

- About the Institute for Basic Science (IBS)

IBS was founded in 2011 by the government of the Republic of Korea with the sole purpose of driving forward the development of basic science in South Korea. IBS has 4 research institutes and 33 research centers as of January 2023. There are eleven physics, three mathematics, five chemistry, nine life science, two earth science, and three interdisciplinary research centers.

2023.01.18 View 14624 -

Professor Juho Kim’s Team Wins Best Paper Award at ACM CHI 2022

The research team led by Professor Juho Kim from the KAIST School of Computing won a Best Paper Award and an Honorable Mention Award at the Association for Computing Machinery Conference on Human Factors in Computing Systems (ACM CHI) held between April 30 and May 6.

ACM CHI is the world’s most recognized conference in the field of human-computer interaction (HCI), and is ranked number one among all HCI-related journals and conferences based on Google Scholar’s h5-index. Best Paper Awards are given to works that rank in the top one percent, and Honorable Mention Awards are given to the top five percent of the papers accepted by the conference.

Professor Juho Kim presented a total of seven papers at ACM CHI 2022, and tied for the largest number of papers. A total of 19 papers were affiliated with KAIST, putting it fifth out of all participating institutes and thereby proving KAIST’s competence in research.

One of Professor Kim’s research teams, composed of Jeongyeon Kim (first author, MS graduate) from the School of Computing, MS candidate Yubin Choi from the School of Electrical Engineering, and Dr. Meng Xia (post-doctoral associate in the School of Computing, currently a post-doctoral associate at Carnegie Mellon University), received a Best Paper Award for their paper, “Mobile-Friendly Content Design for MOOCs: Challenges, Requirements, and Design Opportunities”.

The study analyzed the difficulties experienced by learners watching video-based educational content in a mobile environment and suggested guidelines for solutions. The research team analyzed 134 survey responses and 21 interviews, and found that text that is too small or overcrowded is the main factor reducing the legibility of video content. Additionally, lighting, noise, and frequently changing surroundings are also important factors that may disturb the learning experience.

Based on these findings, the team analyzed the suitability of 41,722 frames from 101 video lectures for mobile environments, and confirmed that they generally show low levels of adequacy. For instance, in the case of text size, only 24.5% of the frames were shown to be adequate for learning in mobile environments. To overcome this issue, the research team suggested a guideline that may improve the legibility of video content and help overcome the difficulties arising from mobile learning environments.

The importance of and dependency on video-based learning continue to rise, especially in the wake of the pandemic, and it is meaningful that this research suggested a means to analyze and tackle the difficulties of users that learn from the small screens of mobile devices. Furthermore, the paper also suggested technology that can solve problems related to video-based learning through human-AI collaborations, enhancing existing video lectures and improving learning experiences. This technology can be applied to various video-based platforms and content creation.

Meanwhile, a research team composed of Ph.D. candidate Tae Soo Kim (first author), MS candidate DaEun Choi, and Ph.D. candidate Yoonseo Choi from the School of Computing received an honorable mention award for their paper, “Stylette: styling the Web with Natural Language”.

The research team developed a novel interface technology that allows nonexperts who are unfamiliar with technical jargon to edit website features through speech. People often find it difficult to use or find the information they need from various websites due to accessibility issues, device-related constraints, inconvenient design, style preferences, etc. However, it is not easy for laymen to edit website features without expertise in programming or design, and most end up just putting up with the inconveniences. But what if the system could read the intentions of its users from their everyday language like “emphasize this part a little more”, or “I want a more modern design”, and edit the features automatically?

Based on this question, Professor Kim’s research team developed ‘Stylette’, a system in which AI analyses its users’ speech expressed in their natural language and automatically recommends a new style that best fits their intentions. The research team created a new system by putting together language AI, visual AI, and user interface technologies. On the linguistic side, a large-scale language model AI converts the intentions of the users expressed through their everyday language into adequate style elements. On the visual side, computer vision AI compares 1.7 million existing web design features and recommends a style adequate for the current website. In an experiment where 40 nonexperts were asked to edit a website design, the subjects that used this system showed double the success rate in a time span that was 35% shorter compared to the control group.

It is meaningful that this research proposed a practical case in which AI technology constructs intuitive interactions with users. The developed technology can be applied to existing design applications and web browsers in a plug-in format, and can be utilized to improve websites or for advertisements by collecting the natural intention data of users on a large scale.

2022.06.13 View 9793 -

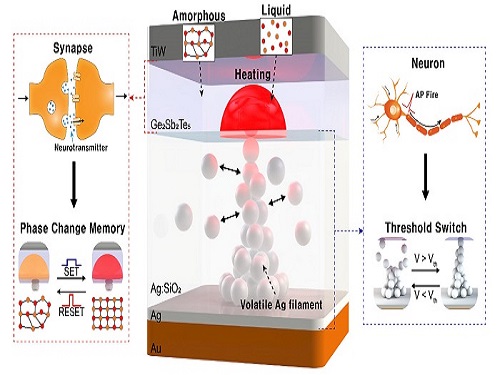

Neuromorphic Memory Device Simulates Neurons and Synapses

Simultaneous emulation of neuronal and synaptic properties promotes the development of brain-like artificial intelligence

Researchers have reported a nano-sized neuromorphic memory device that emulates neurons and synapses simultaneously in a unit cell, another step toward completing the goal of neuromorphic computing designed to rigorously mimic the human brain with semiconductor devices.

Neuromorphic computing aims to realize artificial intelligence (AI) by mimicking the mechanisms of neurons and synapses that make up the human brain. Inspired by the cognitive functions of the human brain that current computers cannot provide, neuromorphic devices have been widely investigated. However, current Complementary Metal-Oxide Semiconductor (CMOS)-based neuromorphic circuits simply connect artificial neurons and synapses without synergistic interactions, and the concomitant implementation of neurons and synapses still remains a challenge. To address these issues, a research team led by Professor Keon Jae Lee from the Department of Materials Science and Engineering implemented the biological working mechanisms of humans by introducing the neuron-synapse interactions in a single memory cell, rather than the conventional approach of electrically connecting artificial neuronal and synaptic devices.

Like commercial graphics cards, previously studied artificial synaptic devices were often used to accelerate parallel computations, which differs clearly from the operational mechanisms of the human brain. The research team implemented the synergistic interactions between neurons and synapses in the neuromorphic memory device, emulating the mechanisms of the biological neural network. In addition, the developed neuromorphic device can replace complex CMOS neuron circuits with a single device, providing high scalability and cost efficiency.

The human brain consists of a complex network of 100 billion neurons and 100 trillion synapses. The functions and structures of neurons and synapses can flexibly change according to the external stimuli, adapting to the surrounding environment. The research team developed a neuromorphic device in which short-term and long-term memories coexist using volatile and non-volatile memory devices that mimic the characteristics of neurons and synapses, respectively. A threshold switch device is used as volatile memory and phase-change memory is used as a non-volatile device. Two thin-film devices are integrated without intermediate electrodes, implementing the functional adaptability of neurons and synapses in the neuromorphic memory.

Professor Keon Jae Lee explained, “Neurons and synapses interact with each other to establish cognitive functions such as memory and learning, so simulating both is an essential element for brain-inspired artificial intelligence. The developed neuromorphic memory device also mimics the retraining effect that allows quick learning of the forgotten information by implementing a positive feedback effect between neurons and synapses.”

This result entitled “Simultaneous emulation of synaptic and intrinsic plasticity using a memristive synapse” was published in the May 19, 2022 issue of Nature Communications.

-Publication: Sang Hyun Sung, Tae Jin Kim, Hyera Shin, Tae Hong Im, and Keon Jae Lee (2022) “Simultaneous emulation of synaptic and intrinsic plasticity using a memristive synapse,” Nature Communications, May 19, 2022 (DOI: 10.1038/s41467-022-30432-2)

-Profile: Professor Keon Jae Lee, http://fand.kaist.ac.kr

Department of Materials Science and Engineering, KAIST

2022.05.20 View 15353

Professor Sung-Ju Lee’s Team Wins the Best Paper and the Methods Recognition Awards at the ACM CSCW

A research team led by Professor Sung-Ju Lee at the School of Electrical Engineering won the Best Paper Award and the Methods Recognition Award from ACM CSCW (International Conference on Computer-Supported Cooperative Work and Social Computing) 2021 for their paper “Reflect, not Regret: Understanding Regretful Smartphone Use with App Feature-Level Analysis”.

Founded in 1986, CSCW is a premier conference on HCI (Human-Computer Interaction) and social computing. This year, 340 full papers were presented, and the Best Paper Awards were given to the top 1% of submitted papers. Methods Recognition, a new award, is given “for strong examples of work that includes well developed, explained, or implemented methods, and methodological innovation.”

Hyunsung Cho (KAIST alumnus and currently a PhD candidate at Carnegie Mellon University), Daeun Choi (KAIST undergraduate researcher), Donghwi Kim (KAIST PhD candidate), Wan Ju Kang (KAIST PhD candidate), and Professor Eun Kyoung Choe (University of Maryland and KAIST alumna) collaborated on this research.

The authors developed a tool that tracks and analyzes which features of a mobile app (e.g., Instagram’s following post, following story, recommended post, post upload, direct messaging, etc.) are in use based on a smartphone’s User Interface (UI) layout. Utilizing this novel method, the authors revealed which feature usage patterns result in regretful smartphone use.

Professor Lee said, “Although many people enjoy the benefits of smartphones, issues have emerged from the overuse of smartphones. With this feature level analysis, users can reflect on their smartphone usage based on finer grained analysis and this could contribute to digital wellbeing.”

2021.11.22 View 8974

How Stingrays Became the Most Efficient Swimmers in Nature

Study shows the hydrodynamic benefits of protruding eyes and mouth in a self-propelled flexible stingray

With their compressed bodies and flexible pectoral fins, stingrays have evolved to become one of nature’s most efficient swimmers. Scientists have long wondered about the role played by their protruding eyes and mouths, which one might expect to be hydrodynamic disadvantages.

Professor Hyung Jin Sung and his colleagues have discovered how such features on simulated stingrays affect a range of forces involved in propulsion, such as pressure and vorticity. Despite what one might expect, their research team found these protruding features actually help streamline the stingrays.

“The influence of the 3D protruding eyes and mouth on a self-propelled flexible stingray and its underlying hydrodynamic mechanism are not yet fully understood,” said Professor Sung. “In the present study, the hydrodynamic benefit of protruding eyes and mouth was explored for the first time, revealing their hydrodynamic role.”

To illustrate the complex interplay between hydrodynamic forces, the researchers set to work creating a computer model of a self-propelled flexible plate. They clamped the front end of the model and then forced it to mimic the up-and-down harmonic oscillations stingrays use to propel themselves.

To re-create the effect of the eyes and mouth on the surrounding water, the team simulated multiple rigid plates on the model. They compared this model to one without eyes and a mouth using a technique called the penalty immersed boundary method.

“Managing random fish swimming and isolating the desired purpose of the measurements from numerous factors was difficult,” Sung said. “To overcome these limitations, the penalty immersed boundary method was adopted to find the hydrodynamic benefits of the protruding eyes and mouth.”

The team discovered that the eyes and mouth generated a vortex of flow in the forward-backward direction, which increased negative pressure at the simulated animal’s front, and a side-to-side vortex that increased the pressure difference above and below the stingray. The result was increased thrust and accelerated cruising.

Further analysis showed that the eyes and mouth increased overall propulsion efficiency by more than 20.5% and 10.6%, respectively.

Researchers hope their work, driven by curiosity, further stokes interest in exploring fluid phenomena in nature. They are hoping to find ways to adapt this for next-generation water vehicle designs based more closely on marine animals.

This study was supported by the National Research Foundation of Korea and the State Scholar Fund from the China Scholarship Council.

-Profile: Professor Hyung Jin Sung, Department of Mechanical Engineering, KAIST

-Publication: Hyung Jin Sung, Qian Mao, Ziazhen Zhao, Yingzheng Liu, “Hydrodynamic benefits of protruding eyes and mouth in a self-propelled flexible stingray,” Physics of Fluids, Aug. 31, 2021 (https://doi.org/10.1063/5.0061287)

-News release from the American Institute of Physics, Aug. 31, 2021

2021.09.06 View 8620
