Visual+System

KAIST Develops Insect-Eye-Inspired Camera Capturing 9,120 Frames Per Second

< (From left) Bio and Brain Engineering PhD Student Jae-Myeong Kwon, Professor Ki-Hun Jeong, PhD Student Hyun-Kyung Kim, PhD Student Young-Gil Cha, and Professor Min H. Kim of the School of Computing >

The compound eyes of insects can detect fast-moving objects in parallel and, in low-light conditions, enhance sensitivity by integrating signals over time to determine motion. Inspired by these biological mechanisms, KAIST researchers have successfully developed a low-cost, high-speed camera that overcomes the limitations of frame rate and sensitivity faced by conventional high-speed cameras.

KAIST (represented by President Kwang Hyung Lee) announced on the 16th of January that a research team led by Professors Ki-Hun Jeong (Department of Bio and Brain Engineering) and Min H. Kim (School of Computing) has developed a novel bio-inspired camera capable of ultra-high-speed imaging with high sensitivity by mimicking the visual structure of insect eyes.

High-quality imaging under high-speed and low-light conditions is a critical challenge in many applications. While conventional high-speed cameras excel in capturing fast motion, their sensitivity decreases as frame rates increase because the time available to collect light is reduced.
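The trade-off can be made concrete with a small back-of-the-envelope sketch. It assumes an ideal shot-noise-limited pixel and exposures that do not overlap in time. The photon rate is a hypothetical value chosen only for illustration, not a figure from the study.

```python
import math


def max_exposure_s(fps):
    """Longest possible exposure per frame when frames do not overlap in time."""
    return 1.0 / fps


def shot_noise_snr(photon_rate_per_s, exposure_s):
    """SNR of an ideal shot-noise-limited pixel: N photons over sqrt(N) noise."""
    photons = photon_rate_per_s * exposure_s
    return math.sqrt(photons)


photon_rate = 1.0e6  # hypothetical photons per second reaching one pixel

for fps in (30, 1_020, 9_120):
    t = max_exposure_s(fps)
    snr = shot_noise_snr(photon_rate, t)
    print(f"{fps:>5} fps: exposure <= {t * 1e6:8.1f} us, SNR ~ {snr:6.1f}")
```

Under these assumptions the SNR falls with the square root of the frame rate, which is the sensitivity penalty described above.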

To address this issue, the research team adopted an approach similar to insect vision, utilizing multiple optical channels and temporal summation. Unlike traditional monocular camera systems, the bio-inspired camera employs a compound-eye-like structure that allows for the parallel acquisition of frames from different time intervals.

< Figure 1. (A) Vision in a fast-eyed insect. Light reflected from swiftly moving objects sequentially stimulates the photoreceptors along the individual optical channels, called ommatidia, whose visual signals are processed separately and in parallel via the lamina and medulla. Each neural response is temporally summed to enhance the visual signals. The parallel processing and temporal summation allow fast imaging in dim light. (B) High-speed and high-sensitivity microlens array camera (HS-MAC). A rolling shutter image sensor is used to acquire multiple frames simultaneously by channel division, and temporal summation is performed in parallel to achieve high speed and sensitivity even in a low-light environment. In addition, the frame components of a single fragmented array image are stitched into a single blurred frame, which is subsequently deblurred by compressive image reconstruction. >

During this process, light is accumulated over overlapping time periods for each frame, increasing the signal-to-noise ratio. The researchers demonstrated that their bio-inspired camera could capture objects up to 40 times dimmer than those detectable by conventional high-speed cameras.
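The effect of summing light over overlapping time windows can be illustrated with a toy simulation. This is a minimal sketch, not the researchers' pipeline: it assumes a static scene, Gaussian noise on each short exposure, and a hypothetical window of 16 short exposures per output frame.

```python
import random
import statistics


def overlapping_sums(samples, window):
    """Frame k integrates samples[k : k + window]; consecutive windows overlap."""
    return [sum(samples[k:k + window]) for k in range(len(samples) - window + 1)]


def snr(values):
    """Mean signal divided by the standard deviation of the values."""
    return statistics.mean(values) / statistics.stdev(values)


rng = random.Random(0)

# Hypothetical short exposures: mean signal 5 units, Gaussian noise with sigma 5.
short_exposures = [rng.gauss(5.0, 5.0) for _ in range(20_000)]

# Each output frame sums 16 short exposures, but a new frame still starts
# at every step, so the frame interval is unchanged.
summed_frames = overlapping_sums(short_exposures, window=16)

gain = snr(summed_frames) / snr(short_exposures)
print(f"SNR gain from temporal summation: {gain:.2f}")
```

With independent noise the gain should come out close to the square root of the window length, here about 4. The price is motion blur within each summed frame, which is why a deblurring step is needed afterwards.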

The team also introduced a "channel-splitting" technique to significantly increase the camera's speed, achieving frame rates thousands of times faster than those supported by the image sensor on which the camera is packaged. Additionally, a "compressed image restoration" algorithm was employed to remove the blur caused by frame integration and reconstruct sharp images.
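The release does not spell out the timing details of channel splitting, so the following is only a sketch of the general rolling-shutter idea. It assumes that the microlens channels are stacked along the readout direction, so that the rows under each channel begin their exposure slightly later than those under the previous one. The sensor height, channel count, and line time are hypothetical.

```python
def row_start_times(n_rows, line_time_s):
    """Rolling shutter: row r starts its exposure r * line_time_s after row 0."""
    return [r * line_time_s for r in range(n_rows)]


def channel_time_offsets(n_rows, n_channel_rows, line_time_s):
    """Exposure start time of the first sensor row under each stacked channel."""
    rows_per_channel = n_rows // n_channel_rows
    starts = row_start_times(n_rows, line_time_s)
    return [starts[c * rows_per_channel] for c in range(n_channel_rows)]


# Hypothetical sensor: 1,000 rows, 10 rows of channels, 10 microseconds per line.
offsets = channel_time_offsets(n_rows=1000, n_channel_rows=10, line_time_s=10e-6)

for c, t in enumerate(offsets):
    print(f"channel row {c}: exposure starts at {t * 1e3:.1f} ms")
```

In this simplified picture, a single sensor readout already contains views of the scene at several different instants, so the time step between views can be much shorter than the interval between full sensor readouts.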

The resulting bio-inspired camera is less than one millimeter thick and extremely compact, capable of capturing 9,120 frames per second while providing clear images in low-light conditions.

< Figure 2. A high-speed, high-sensitivity biomimetic camera packaged on an image sensor. It is small enough to fit on a finger, with a thickness of less than 1 mm. >

The research team plans to extend this technology to develop advanced image processing algorithms for 3D imaging and super-resolution imaging, aiming for applications in biomedical imaging, mobile devices, and various other camera technologies.

Hyun-Kyung Kim, a doctoral student in the Department of Bio and Brain Engineering at KAIST and the study's first author, stated, “We have experimentally validated that the insect-eye-inspired camera delivers outstanding performance in high-speed and low-light imaging despite its small size. This camera opens up possibilities for diverse applications in portable camera systems, security surveillance, and medical imaging.”

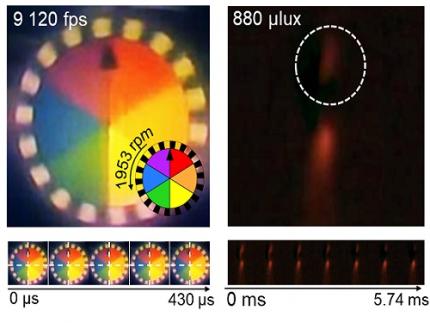

< Figure 3. Rotating plate and flame captured using the high-speed, high-sensitivity biomimetic camera. The rotating plate at 1,950 rpm was accurately captured at 9,120 fps. In addition, the pinch-off of the flame with a faint intensity of 880 µlux was accurately captured at 1,020 fps. >
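As a quick check on the rotating-plate figures quoted in the caption, the short calculation below converts the rotation speed and frame rate into the angle the plate turns between consecutive frames. The function names are illustrative only.

```python
def degrees_per_frame(rpm, fps):
    """Angle the plate rotates between two consecutive frames."""
    rev_per_s = rpm / 60.0
    return rev_per_s * 360.0 / fps


def frames_per_revolution(rpm, fps):
    """Number of frames captured during one full turn of the plate."""
    return fps / (rpm / 60.0)


step = degrees_per_frame(1950, 9120)
frames = frames_per_revolution(1950, 9120)
print(f"{step:.2f} degrees per frame, {frames:.1f} frames per revolution")
# prints: 1.28 degrees per frame, 280.6 frames per revolution
```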

This research was published in the international journal Science Advances in January 2025 (Paper Title: “Biologically-inspired microlens array camera for high-speed and high-sensitivity imaging”).

DOI: https://doi.org/10.1126/sciadv.ads3389

This study was supported by the Korea Research Institute for Defense Technology Planning and Advancement (KRIT) of the Defense Acquisition Program Administration (DAPA), the Ministry of Science and ICT, and the Ministry of Trade, Industry and Energy (MOTIE).

2025.01.16

Before Eyes Open, They Get Ready to See

- Spontaneous retinal waves can generate long-range horizontal connectivity in visual cortex. -

A KAIST research team’s computational simulations have demonstrated that waves of spontaneous neural activity in the retinas of mammals’ still-closed eyes drive the development of long-range horizontal connections in the visual cortex during early developmental stages.

This finding, featured as the cover article of the August 19 edition of the Journal of Neuroscience, resolves a long-standing puzzle in visual neuroscience: how functional architectures in the mammalian visual cortex become organized before eye-opening, especially the long-range horizontal connectivity known as “feature-specific” circuitry.

To prepare the animal to see when its eyes open, neural circuits in the brain’s visual system must begin developing earlier. However, the proper development of many brain regions involved in vision generally requires sensory input through the eyes.

In the primary visual cortex of the higher mammalian taxa, cortical neurons of similar functional tuning to a visual feature are linked together by long-range horizontal circuits that play a crucial role in visual information processing.

Surprisingly, these long-range horizontal connections in the primary visual cortex of higher mammals emerge before the onset of sensory experience, and the mechanism underlying this phenomenon has remained elusive.

To investigate this mechanism, a group of researchers led by Professor Se-Bum Paik from the Department of Bio and Brain Engineering at KAIST implemented computational simulations of early visual pathways using data obtained from the retinal circuits in young animals before eye-opening, including cats, monkeys, and mice.

From these simulations, the researchers found that spontaneous waves propagating in ON and OFF retinal mosaics can initialize the wiring of long-range horizontal connections by selectively co-activating cortical neurons of similar functional tuning, whereas equivalent random activities cannot induce such organizations.
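The contrast between wave-driven and random activity can be illustrated with a toy Hebbian simulation. This is not the model used in the paper: it is a minimal sketch that assumes four cortical neurons with hypothetical preferred orientations, a Gaussian tuning curve, and a plain Hebbian rule in which the connection between two neurons grows with the product of their responses.

```python
import math
import random


def tuning(pref_deg, stim_deg, width_deg=20.0):
    """Response of an orientation-tuned neuron; orientation repeats every 180 degrees."""
    d = abs(pref_deg - stim_deg) % 180.0
    d = min(d, 180.0 - d)
    return math.exp(-0.5 * (d / width_deg) ** 2)


def hebbian_weights(events, lr=0.01):
    """Accumulate lr * r_i * r_j over all events (plain Hebbian rule, no normalization)."""
    n = len(events[0])
    w = [[0.0] * n for _ in range(n)]
    for r in events:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += lr * r[i] * r[j]
    return w


rng = random.Random(1)
prefs = [0.0, 10.0, 90.0, 100.0]  # hypothetical preferred orientations in degrees

# Wave-like input: each event has one wavefront orientation seen by every neuron.
thetas = [rng.uniform(0.0, 180.0) for _ in range(2000)]
wave_events = [[tuning(p, theta) for p in prefs] for theta in thetas]

# Control: each neuron is driven independently at random.
random_events = [[rng.random() for _ in prefs] for _ in range(2000)]

w_wave = hebbian_weights(wave_events)
w_rand = hebbian_weights(random_events)

# Compare a similarly tuned pair (0 and 10 degrees) with a dissimilar pair (0 and 90 degrees).
ratio_wave = w_wave[0][1] / w_wave[0][2]
ratio_rand = w_rand[0][1] / w_rand[0][2]
print(f"wave input:   similar/dissimilar weight ratio = {ratio_wave:.1f}")
print(f"random input: similar/dissimilar weight ratio = {ratio_rand:.2f}")
```

In this sketch the wave-like input strengthens connections between similarly tuned neurons far more than between dissimilar ones, while the random control leaves the two kinds of pairs nearly equal.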

The simulations also showed that the emergent long-range horizontal connections can induce patterned cortical activity that matches the topography of the underlying functional maps, even in the salt-and-pepper type organizations observed in rodents. This result implies that the model developed by Professor Paik and his group can provide a universal principle for the developmental mechanism of long-range horizontal connections in both higher mammals and rodents.

Professor Paik said, “Our model provides a deeper understanding of how the functional architectures in the visual cortex can originate from the spatial organization of the periphery, without sensory experience during early developmental periods.”

He continued, “We believe that our findings will be of great interest to scientists working in a wide range of fields such as neuroscience, vision science, and developmental biology.”

This work was supported by the National Research Foundation of Korea (NRF). Undergraduate student Jinwoo Kim participated in this research project and presented the findings as the lead author as part of the Undergraduate Research Participation (URP) Program at KAIST.

Figures and image credit: Professor Se-Bum Paik, KAIST

Image usage restrictions: News organizations may use or redistribute these figures and image, with proper attribution, as part of news coverage of this paper only.

Publication:

Jinwoo Kim, Min Song, and Se-Bum Paik. (2020). Spontaneous retinal waves generate long-range horizontal connectivity in visual cortex. Journal of Neuroscience. Available online at https://www.jneurosci.org/content/early/2020/07/17/JNEUROSCI.0649-20.2020

Profile: Se-Bum Paik

Assistant Professor

sbpaik@kaist.ac.kr

http://vs.kaist.ac.kr/

VSNN Laboratory

Department of Bio and Brain Engineering

Program of Brain and Cognitive Engineering

http://kaist.ac.kr

Korea Advanced Institute of Science and Technology (KAIST) Daejeon, Republic of Korea

Profile: Jinwoo Kim

Undergraduate Student

bugkjw@kaist.ac.kr

Department of Bio and Brain Engineering, KAIST

Profile: Min Song

Ph.D. Candidate

night@kaist.ac.kr

Program of Brain and Cognitive Engineering, KAIST

(END)

2020.08.25
