KAIST Uncovers the Principles of Gene Expression Regulation in Cancer and Cellular Functions

< (From left) Professor Seyun Kim, Professor Gwangrog Lee, Dr. Hyoungjoon Ahn, Dr. Jeongmin Yu, Professor Won-Ki Cho, and (below) PhD candidate Kwangmin Ryu of the Department of Biological Sciences>

A research team at KAIST has identified the core gene expression networks regulated by key proteins that fundamentally drive phenomena such as cancer development, metastasis, tissue differentiation from stem cells, and neural activation processes. This discovery lays the foundation for developing innovative therapeutic technologies.

On the 22nd of January, KAIST (represented by President Kwang Hyung Lee) announced that the joint research team led by Professors Seyun Kim, Gwangrog Lee, and Won-Ki Cho from the Department of Biological Sciences had uncovered essential mechanisms controlling gene expression in animal cells.

Inositol phosphate metabolites, produced by the enzymes of inositol metabolism, serve as vital second messengers in eukaryotic cell signaling and are broadly implicated in cancer, obesity, diabetes, and neurological disorders.

The research team demonstrated that the inositol polyphosphate multikinase (IPMK) enzyme, a key player in the inositol metabolism system, acts as a critical transcriptional activator within the core gene expression networks of animal cells. Notably, although IPMK was previously reported to play an important role in the transcription process governed by serum response factor (SRF), a representative transcription factor in animal cells, the precise mechanism of its action was unclear.

SRF is a transcription factor that directly controls the expression of at least 200–300 genes, regulating cell growth, proliferation, apoptosis, and motility, and it is indispensable for the development of organs such as the heart.

The team discovered that IPMK binds directly to SRF and alters the three-dimensional structure of the SRF protein. SRF activated in this way promotes the transcription of various target genes, demonstrating that IPMK acts as a critical regulatory switch that enhances SRF's activity.

< Figure 1. The serum response factor (SRF) protein, a key transcription factor in animal cells, binds directly to the inositol polyphosphate multikinase (IPMK) enzyme, undergoes a structural change that gives it DNA-binding ability, and precisely regulates the growth and differentiation of animal cells through transcriptional activation. >

The team further verified that disrupting the direct interaction between IPMK and SRF reduces SRF's function and activity, causing severe impairments in gene expression.

By highlighting the significance of the intrinsically disordered region (IDR) in SRF, the researchers underscored the biological importance of intrinsically disordered proteins (IDPs). Unlike most proteins, which fold into distinct three-dimensional structures, IDPs and proteins containing IDRs lack a fixed structure yet play crucial biological roles, and they have attracted significant attention in the scientific community.

Professor Seyun Kim commented, "This study provides a vital mechanism proving that IPMK, a key enzyme in the inositol metabolism system, is a major transcriptional activator in the core gene expression network of animal cells. By understanding fundamental processes such as cancer development and metastasis, tissue differentiation from stem cells, and neural activation through SRF, we hope this discovery will lead to the broad application of innovative therapeutic technologies."

The findings were published on January 7th in the international journal Nucleic Acids Research (IF=16.7, top 1.8% in Biochemistry and Molecular Biology), under the title “Single-molecule analysis reveals that IPMK enhances the DNA-binding activity of the transcription factor SRF” (DOI: 10.1093/nar/gkae1281).

This research was supported by the National Research Foundation of Korea's Mid-career Research Program, Leading Research Center Program, and Global Research Laboratory Program, as well as by the Suh Kyungbae Science Foundation and the Samsung Future Technology Development Program.

2025.01.24

KAIST to Collaborate with AT&C to Take Dominance over Dementia

< Photo 1. (From left) KAIST Dean of the College of Natural Sciences Daesoo Kim, KAIST President Kwang Hyung Lee, AT&C Chairman Ki Tae Lee, AT&C CEO Jong-won Lee >

KAIST (President Kwang Hyung Lee) announced on January 9th that it had signed a memorandum of understanding for comprehensive mutual cooperation with AT&C (CEO Jong-won Lee) at its Seoul Dogok Campus. The agreement aims to expand research investment and industry-academia cooperation in preparation for the coming era of cutting-edge digital bio technology.

Senile dementia is a rapidly growing brain disease: it affects 10% of people aged 65 and older and approximately 38% of those aged 85 and older. Alzheimer's disease is the most common form of dementia in the elderly, and its prevalence has been rising rapidly among people over 40. However, no effective treatment has yet been found.

The Korean government is investing a total of KRW 1.1 trillion in dementia R&D projects from 2020 to 2029, with the goal of halving the rate of increase in the number of dementia patients. Developing effective and affordable drug treatments for dementia takes considerable time and money. There is therefore an urgent need for digital treatments that can be deployed more quickly.

AT&C, a digital healthcare company, has already received approval from the Ministry of Food and Drug Safety (MFDS) for its depression treatment device. The device is based on transcranial magnetic stimulation (TMS), which uses magnetic fields, and the company sells it domestically and internationally. AT&C has also developed Korea's first Alzheimer's dementia treatment device and received MFDS approval for clinical trials. Having completed phase 1 (safety) and phase 2 (efficacy in a limited group of patients), it is now conducting phase 3 clinical trials to test efficacy in a larger patient group.

The dementia treatment device combines non-invasive electromagnetic stimulation (a TMS stimulator) with a digital therapeutic prescription (cognitive learning programs). It applies AI image analysis and robotics technology to deliver precise, automated treatment.

Under this agreement, KAIST and AT&C will cooperate on developing innovative digital treatment equipment for brain diseases. Through research collaboration with KAIST, AT&C aims to develop technology that can be widely applied to Parkinson's disease, stroke, mild cognitive impairment, sleep disorders, and other conditions. It also plans to use KAIST's wearable technology to develop portable equipment that can improve brain function and help prevent dementia at home.

To this end, AT&C plans to establish a digital healthcare research center at KAIST by providing approximately KRW 3 billion for research personnel and expenses. The goal is to develop cutting-edge digital equipment within three years.

The digital equipment market is expected to grow at a compound annual growth rate of 22.1% from 2023 to 2033, reaching a market size of $1.9209 trillion by 2033.
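The two market figures above are linked by the standard compound-growth relation: end value = start value × (1 + rate)^years. The sketch below back-solves the 2023 market size that these figures imply. It assumes the 22.1% rate compounds annually over the ten years from 2023 to 2033. The function names are hypothetical, and the result is derived arithmetic, not a figure reported in the announcement.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Back-solve the starting value from an end value and a compound annual growth rate."""
    # end_value = start_value * (1 + cagr) ** years, so divide to recover start_value
    return end_value / (1.0 + cagr) ** years


def project(start_value: float, cagr: float, years: int) -> float:
    """Grow a starting value forward at a compound annual growth rate."""
    return start_value * (1.0 + cagr) ** years


# Figures from the text: USD 1.9209 trillion in 2033, 22.1% CAGR starting in 2023 (10 years)
end_2033 = 1.9209e12
rate = 0.221
start_2023 = implied_start_value(end_2033, rate, 10)
print(f"Implied 2023 market size: ${start_2023 / 1e9:.1f} billion")

# Round trip: projecting the implied start value forward recovers the 2033 figure
assert abs(project(start_2023, rate, 10) - end_2033) < 1.0
```

The round-trip check is a quick way to confirm that the two quoted numbers and the growth rate are mutually consistent under annual compounding.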

< Photo 2. (From left) Dean of the KAIST College of Natural Sciences Daesoo Kim, Professor Young-joon Lee, Professor Minee Choi of the KAIST Department of Brain and Cognitive Sciences, KAIST President Kwang Hyung Lee, Chairman Ki Tae Lee, CEO Jong-won Lee, and Headquarters Director Ki-yong Na of AT&C >

CEO Jong-won Lee said, “AT&C is playing a leading role in the treatment of Alzheimer’s disease using TMS (transcranial magnetic stimulation) technology. Through this agreement with KAIST, we will do our best to create a new paradigm for brain disease treatment and become a platform company that can lead future medical devices and medical technology.”

Former Samsung Electronics Vice Chairman Ki Tae Lee, a strong supporter of this R&D project, said, “Through this agreement with KAIST, we plan to prepare for a new future by combining the technologies AT&C has developed so far with KAIST’s innovative and differentiated technologies.”

KAIST President Kwang Hyung Lee emphasized, “Through this collaboration, KAIST expects to build a world-class digital therapeutics infrastructure for treating brain diseases and contribute greatly to further strengthening Korea’s competitiveness in the biomedical field.”

The signing ceremony was attended by KAIST President Kwang Hyung Lee, Dean of the KAIST College of Natural Sciences Daesoo Kim, AT&C CEO Jong-won Lee, and AT&C Chairman Ki Tae Lee, a former Vice Chairman of Samsung Electronics.

2025.01.09

KAIST Secures Core Technology for Ultra-High-Resolution Image Sensors

A joint research team from Korea and the United States has developed next-generation, high-resolution image sensor technology with higher power efficiency and a smaller size compared to existing sensors. Notably, they have secured foundational technology for ultra-high-resolution shortwave infrared (SWIR) image sensors, an area currently dominated by Sony, paving the way for future market entry.

KAIST (represented by President Kwang Hyung Lee) announced on the 20th of November that a research team led by Professor SangHyeon Kim from the School of Electrical Engineering, in collaboration with Inha University and Yale University in the U.S., has developed an ultra-thin broadband photodiode (PD), marking a significant breakthrough in high-performance image sensor technology.

This research substantially eases the trade-off between absorption-layer thickness and quantum efficiency found in conventional photodiode technology. Specifically, it achieved a quantum efficiency of over 70% with an absorption layer thinner than one micrometer (μm), about 70% thinner than in existing technologies.
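Quantum efficiency is the fraction of incident photons that yield collected charge carriers. External quantum efficiency (EQE) is commonly obtained from a measured responsivity R (in A/W) at wavelength λ, using the textbook relation EQE = R·h·c / (q·λ). The sketch below applies that relation. The function names and the 1,550 nm example wavelength are illustrative choices, not values taken from the paper.

```python
# Physical constants in SI units (exact values under the 2019 SI redefinition)
PLANCK_H = 6.62607015e-34            # J*s
SPEED_OF_LIGHT = 299_792_458.0       # m/s
ELEMENTARY_CHARGE = 1.602176634e-19  # C


def eqe_from_responsivity(responsivity_a_per_w: float, wavelength_m: float) -> float:
    """EQE = electrons collected per second / photons incident per second."""
    photon_energy_j = PLANCK_H * SPEED_OF_LIGHT / wavelength_m
    # R / q gives electrons per joule; multiplying by photon energy gives electrons per photon
    return responsivity_a_per_w * photon_energy_j / ELEMENTARY_CHARGE


def responsivity_for_eqe(eqe: float, wavelength_m: float) -> float:
    """Inverse relation: the responsivity a detector needs to reach a given EQE."""
    photon_energy_j = PLANCK_H * SPEED_OF_LIGHT / wavelength_m
    return eqe * ELEMENTARY_CHARGE / photon_energy_j


# Illustrative: responsivity needed for 70% EQE at 1,550 nm, a common SWIR wavelength
r_needed = responsivity_for_eqe(0.70, 1550e-9)
print(f"Responsivity for 70% EQE at 1550 nm: {r_needed:.3f} A/W")
```

Because photon energy falls as wavelength grows, the same EQE corresponds to a higher responsivity in the SWIR band than in the visible.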

A thinner absorption layer simplifies pixel processing and allows higher resolution. It also shortens carrier diffusion paths, which aids the collection of photogenerated carriers, while reducing cost. However, a fundamental drawback of thinner absorption layers is weaker absorption of long-wavelength light.
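This thickness trade-off follows from Beer-Lambert absorption. A single pass through a layer of thickness d absorbs a fraction 1 − exp(−αd), and the absorption coefficient α is smaller at longer wavelengths. The sketch below compares single-pass absorption with idealized double-pass absorption (a perfect back reflector) for the 2.1 μm and 1 μm thicknesses quoted in Figure 2.

Several assumptions apply:
- The α value is an assumed, order-of-magnitude figure for InGaAs near 1,550 nm, not a number from the study.
- The model ignores surface reflection.
- The model does not capture the resonant enhancement that the GMR structure is designed to provide.

```python
import math

# ASSUMPTION: illustrative absorption coefficient for InGaAs near 1,550 nm,
# roughly 0.7 per micrometer (about 7e3 per cm); not a value reported in the study.
ALPHA_PER_UM = 0.7


def single_pass_absorption(alpha_per_um: float, thickness_um: float) -> float:
    """Fraction of light absorbed in one pass (Beer-Lambert, no reflections)."""
    return 1.0 - math.exp(-alpha_per_um * thickness_um)


def double_pass_absorption(alpha_per_um: float, thickness_um: float) -> float:
    """Idealized case: a perfect back mirror sends light through the layer twice."""
    return 1.0 - math.exp(-2.0 * alpha_per_um * thickness_um)


for d_um in (2.1, 1.0):
    print(
        f"d = {d_um} um: single pass {single_pass_absorption(ALPHA_PER_UM, d_um):.2f}, "
        f"double pass {double_pass_absorption(ALPHA_PER_UM, d_um):.2f}"
    )
```

Under these simple assumptions, thinning the layer visibly lowers long-wavelength absorption. A light-trapping structure such as GMR is intended to close that gap.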

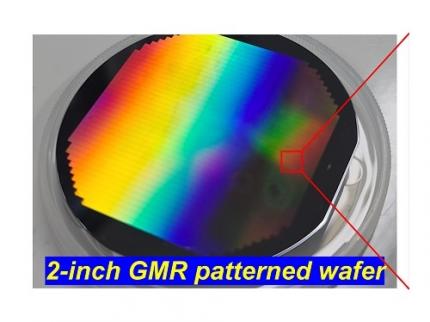

< Figure 1. Schematic diagram of the InGaAs photodiode image sensor integrated on the Guided-Mode Resonance (GMR) structure proposed in this study (left), a photograph of the fabricated wafer, and a scanning electron microscope (SEM) image of the periodic patterns (right) >

The research team introduced a guided-mode resonance (GMR) structure* that enables high-efficiency light absorption across a wide spectral range, from 400 nanometers (nm) to 1,700 nm. This range includes not only visible light but also light in the SWIR region, making the structure valuable for various industrial applications.

*Guided-Mode Resonance (GMR) Structure: In electromagnetics, a phenomenon in which a (light) wave resonates at a specific wavelength, forming a strong electric/magnetic field. Because energy is maximized under these conditions, the effect has been used to increase antenna and radar efficiency.
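For a periodic grating coupled to a waveguide, a common textbook form of the guided-mode resonance condition at normal incidence is λ_res ≈ Λ·n_eff / m. Here Λ is the grating period, n_eff is the effective index of the guided mode, and m is the diffraction order. The sketch below evaluates only this phase-matching estimate. It is not a full electromagnetic simulation, and the effective index is a hypothetical value that does not describe the structure fabricated in the study.

```python
def gmr_resonance_wavelength_nm(period_nm: float, n_eff: float, order: int = 1) -> float:
    """Normal-incidence phase matching: order * 2*pi / period = 2*pi * n_eff / wavelength."""
    if order < 1:
        raise ValueError("diffraction order must be a positive integer")
    return period_nm * n_eff / order


def period_for_target_nm(target_wavelength_nm: float, n_eff: float, order: int = 1) -> float:
    """Inverse: grating period that places the resonance of the given order at a target wavelength."""
    if order < 1:
        raise ValueError("diffraction order must be a positive integer")
    return order * target_wavelength_nm / n_eff


# HYPOTHETICAL effective index, for illustration only (not the study's design parameter)
n_eff_assumed = 3.2
for target in (1300.0, 1550.0):
    period = period_for_target_nm(target, n_eff_assumed)
    print(f"target {target:.0f} nm -> grating period ~{period:.0f} nm")
```

In a real design, n_eff itself depends on wavelength and layer thicknesses. The relation is therefore a starting point for a rigorous solver, not a replacement for one.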

The improved performance in the SWIR region is expected to play a significant role in developing next-generation image sensors with increasingly high resolutions. The GMR structure, in particular, holds potential for further enhancing resolution and other performance metrics through hybrid integration and monolithic 3D integration with complementary metal-oxide-semiconductor (CMOS)-based readout integrated circuits (ROIC).

< Figure 2. Benchmark of state-of-the-art InGaAs-based SWIR pixels, with simulated external quantum efficiency (EQE) curves plotted against absorption-layer thickness (TAL). Performance is maintained while the absorption layer is thinned from 2.1 micrometers or more to 1 micrometer or less, a reduction of 50% to 70%. >

The research team has significantly enhanced international competitiveness in low-power devices and ultra-high-resolution imaging technology, opening up possibilities for applications in digital cameras, security systems, medical and industrial image sensors, as well as future ultra-high-resolution sensors for autonomous driving, aerospace, and satellite observation.

Professor SangHyeon Kim, the lead researcher, commented, “This research demonstrates that significantly higher performance than existing technologies can be achieved even with ultra-thin absorption layers.”

< Figure 3. Top optical microscope image and cross-sectional scanning electron microscope image of the InGaAs photodiode image sensor fabricated on the GMR structure (left). Improved quantum efficiency performance of the ultra-thin image sensor (red) fabricated with the technology proposed in this study (right) >

The results of this research were published on the 15th of November in the prestigious international journal Light: Science & Applications (top 2.9% in JCR, IF=20.6). The co-first authors are Professor Dae-Myung Geum of Inha University (formerly a KAIST postdoctoral researcher) and Dr. Jinha Lim (currently a postdoctoral researcher at Yale University). (Paper title: “Highly-efficient (>70%) and Wide-spectral (400 nm -1700 nm) sub-micron-thick InGaAs photodiodes for future high-resolution image sensors”)

This study was supported by the National Research Foundation of Korea.

2024.11.22

KAIST Succeeds in the Real-time Observation of Organoids using Holotomography

Organoids, which are 3D miniature organs that mimic the structure and function of human organs, play an essential role in disease research and drug development. A Korean research team has overcome the limitations of existing imaging technologies, succeeding in the real-time, high-resolution observation of living organoids.

KAIST (represented by President Kwang Hyung Lee) announced on the 14th of October that Professor YongKeun Park’s research team from the Department of Physics had developed an imaging technology that uses holotomography to observe live small intestinal organoids in real time at high resolution. The team collaborated with the Genome Editing Research Center (Director Bon-Kyoung Koo) of the Institute for Basic Science (IBS President Do-Young Noh) and with Tomocube Inc.

Existing imaging techniques have struggled to observe living organoids in high resolution over extended periods and often required additional treatments like fluorescent staining.

< Figure 1. Overview of the low-coherence HT workflow. Holotomography enables 3D morphological reconstruction and quantitative analysis of organoids. To overcome the microscope's limited field of view, the research team used a large-area field-of-view stitching algorithm and performed 3D reconstruction from multi-focus holographic images. The organoids were then segmented into the regions required for analysis. Their protein concentration (measurable from the refractive index) and survival rate were then quantitatively evaluated. >
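Holotomography measures a 3D refractive-index (RI) map. RI is widely converted to protein concentration with the linear relation C = (n − n_medium) / α, where α is the refractive-index increment, commonly taken as roughly 0.18 to 0.19 mL/g for proteins. The sketch below applies that conversion, together with a simple threshold segmentation, to a synthetic RI volume. The medium index, α, the threshold, and the synthetic sphere are all assumptions for illustration; this is not the study's processing pipeline.

```python
import numpy as np

# ASSUMPTIONS (illustrative, not from the study):
N_MEDIUM = 1.337        # refractive index of the surrounding culture medium
ALPHA_ML_PER_G = 0.185  # refractive-index increment for proteins, mL/g
RI_THRESHOLD = 1.345    # voxels above this RI are treated as organoid tissue


def ri_to_protein_mg_per_ml(ri: np.ndarray) -> np.ndarray:
    """Linear RI-to-concentration conversion: C = (n - n_medium) / alpha."""
    conc_g_per_ml = (ri - N_MEDIUM) / ALPHA_ML_PER_G
    # Clip negative values (RI below the medium) to zero, then convert g/mL to mg/mL
    return np.clip(conc_g_per_ml, 0.0, None) * 1000.0


def segment(ri: np.ndarray, threshold: float = RI_THRESHOLD) -> np.ndarray:
    """Boolean mask of voxels assigned to the organoid by simple RI thresholding."""
    return ri > threshold


# Synthetic 3D RI volume: a sphere of RI 1.36 in medium, standing in for a measurement
z, y, x = np.mgrid[-20:21, -20:21, -20:21]
ri_volume = np.where(x**2 + y**2 + z**2 <= 15**2, 1.36, N_MEDIUM)

mask = segment(ri_volume)
mean_conc = ri_to_protein_mg_per_ml(ri_volume)[mask].mean()
print(f"voxels in organoid: {mask.sum()}, mean protein ~{mean_conc:.0f} mg/mL")
```

Real organoid data would need a more robust segmentation than a single global threshold. The conversion step itself, however, stays this simple once the medium index and α are fixed.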

The research team introduced holotomography technology to address these issues, which provides high-resolution images without the need for fluorescent staining and allows for the long-term observation of dynamic changes in real time without causing cell damage.

The team validated the technology using small intestinal organoids from experimental mice and was able to observe various cell structures inside the organoids in detail. Using holotomography, they also captured dynamic changes such as growth, cell division, and cell death in real time.

The technology also enabled precise analysis of the organoids' responses to drug treatment, including verification of cell survival.

The researchers believe that this breakthrough will open new horizons in organoid research, enabling the greater utilization of organoids in drug development, personalized medicine, and regenerative medicine.

Future research is expected to replicate the in vivo environment of organoids more accurately. Through more precise 3D imaging, it should contribute significantly to a more detailed understanding of various life phenomena at the cellular level.

< Figure 2. Real-time organoid morphology analysis. Using holotomography, it is possible to observe the lumen and villus development process of intestinal organoids in real time, which was difficult to observe with a conventional microscope. In addition, various information about intestinal organoids can be obtained by quantifying the size and protein amount of intestinal organoids through image analysis. >
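Quantifying organoid size and protein amount from a segmented 3D RI map can be sketched as voxel counting plus integrating the RI-derived concentration over the segmented volume. The voxel size, medium index, refractive-index increment, and synthetic growth series below are assumptions for illustration. The article does not describe the study's actual analysis code.

```python
import numpy as np

# ASSUMPTIONS (illustrative only, not the study's parameters):
VOXEL_UM = 0.5          # isotropic voxel edge length, micrometers
N_MEDIUM = 1.337        # refractive index of the culture medium
ALPHA_ML_PER_G = 0.185  # refractive-index increment for proteins, mL/g


def organoid_metrics(ri: np.ndarray, mask: np.ndarray) -> dict:
    """Volume, equivalent spherical diameter, and total protein (dry) mass of one organoid."""
    voxel_volume_um3 = VOXEL_UM ** 3
    volume_um3 = float(mask.sum()) * voxel_volume_um3
    # Diameter of a sphere with the same volume: V = (pi / 6) * d**3
    diameter_um = (6.0 * volume_um3 / np.pi) ** (1.0 / 3.0)
    # 1 g/mL equals 1 pg/um^3, so concentration times voxel volume gives picograms
    conc_pg_per_um3 = np.clip((ri[mask] - N_MEDIUM) / ALPHA_ML_PER_G, 0.0, None)
    dry_mass_pg = float(conc_pg_per_um3.sum()) * voxel_volume_um3
    return {"volume_um3": volume_um3, "diameter_um": diameter_um, "dry_mass_pg": dry_mass_pg}


# Synthetic time series: spheres of growing radius stand in for segmented RI volumes
z, y, x = np.mgrid[-40:41, -40:41, -40:41]
series = []
for t, radius_vox in enumerate((10, 15, 20)):
    inside = x**2 + y**2 + z**2 <= radius_vox**2
    ri = np.where(inside, 1.36, N_MEDIUM)
    metrics = organoid_metrics(ri, inside)
    series.append(metrics)
    print(f"time point {t}: diameter ~{metrics['diameter_um']:.1f} um, "
          f"dry mass ~{metrics['dry_mass_pg']:.0f} pg")
```

Tracking these values frame by frame is one plausible way to turn a real-time holotomography sequence into growth curves.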

Dr. Mahn Jae Lee, a graduate of KAIST's Graduate School of Medical Science and Engineering, currently at Chungnam National University Hospital and the first author of the paper, commented, "This research represents a new imaging technology that surpasses previous limitations and is expected to make a major contribution to disease modeling, personalized treatments, and drug development research using organoids."

The research results were published online in the international journal Experimental & Molecular Medicine on October 1, 2024, and the technology has been recognized for its applicability in various fields of life sciences. (Paper title: “Long-term three-dimensional high-resolution imaging of live unlabeled small intestinal organoids via low-coherence holotomography”)

This research was supported by the National Research Foundation of Korea, KAIST Institutes, and the Institute for Basic Science.

2024.10.14

KAIST Employs Image-recognition AI to Determine Battery Composition and Conditions

An international collaborative research team has developed an AI-based image recognition technology that can accurately determine the elemental composition and the number of charge-discharge cycles of a battery material by examining only its surface morphology.

KAIST (President Kwang-Hyung Lee) announced on July 2nd that Professor Seungbum Hong from the Department of Materials Science and Engineering, in collaboration with the Electronics and Telecommunications Research Institute (ETRI) and Drexel University in the United States, has developed a method to predict the major elemental composition and charge-discharge state of NCM cathode materials with 99.6% accuracy using convolutional neural networks (CNN)*.

*Convolutional Neural Network (CNN): A type of multi-layer, feed-forward, artificial neural network used for analyzing visual images.
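To make the definition concrete, here is a toy forward pass through a one-layer CNN in plain Python: a convolution slides a small kernel over the image, ReLU keeps positive responses, global average pooling reduces each feature map to one number, and a linear layer with softmax turns those numbers into class probabilities. All names and weights are illustrative assumptions; the team's network was much deeper, had learned weights, and would in practice be built with a deep-learning library.

```python
import math


def conv2d(image, kernel):
    """Valid 2D cross-correlation (what CNN libraries call convolution), stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(len(image) - kh + 1):
        row = []
        for j in range(len(image[0]) - kw + 1):
            s = 0.0
            for a in range(kh):
                for b in range(kw):
                    s += image[i + a][j + b] * kernel[a][b]
            row.append(s)
        out.append(row)
    return out


def relu(fmap):
    """Zero out negative responses."""
    return [[max(v, 0.0) for v in row] for row in fmap]


def global_avg_pool(fmap):
    """Average a whole feature map down to a single number."""
    vals = [v for row in fmap for v in row]
    return sum(vals) / len(vals)


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(image, kernels, weights, biases):
    """Conv -> ReLU -> pool (one feature per kernel) -> linear -> softmax."""
    features = [global_avg_pool(relu(conv2d(image, k))) for k in kernels]
    logits = [sum(w * f for w, f in zip(ws, features)) + b
              for ws, b in zip(weights, biases)]
    return softmax(logits)
```

With a vertical-edge kernel such as [[-1, 1], [-1, 1]], an image containing a vertical edge produces a positive pooled feature, which the linear layer can map to one class.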

The research team noted that while scanning electron microscopy (SEM) is used in semiconductor manufacturing to inspect wafer defects, it is rarely used in battery inspection. For batteries, SEM is used only in research settings, to analyze particle size and, for degraded battery materials, to assess reliability from cracked particles and the shape of the fractures.

The team reasoned that it would be groundbreaking if automated SEM could be used in battery production, as it is in semiconductor manufacturing, to inspect the surface of the cathode material, verify that it had been synthesized with the desired composition and would have a reliable lifespan, and thereby reduce the defect rate.

< Figure 1. Example images of true cases and their Grad-CAM overlays from the best trained network. >
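The Grad-CAM overlays highlight which image regions drove a prediction. In the standard Grad-CAM recipe, each channel k of a convolutional layer receives a weight alpha_k equal to the spatial average of the gradient of the class score with respect to that feature map A^k, and the heatmap is ReLU(sum_k alpha_k * A^k). The sketch below implements only that combination step, assuming the feature maps and gradients have already been extracted from a trained network; upsampling the map to the SEM image size and overlaying it are omitted, and the function name is hypothetical.

```python
def grad_cam(feature_maps, gradients):
    """Combine feature maps A^k with class-score gradients dy/dA^k (Grad-CAM).

    feature_maps, gradients: lists of K equally sized 2D lists (H x W).
    Returns an H x W heatmap after ReLU, scaled so its maximum is 1 (if nonzero).
    """
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    # Channel weight alpha_k: spatial mean of the gradient for channel k.
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    cam = [[0.0] * w for _ in range(h)]
    for alpha, fmap in zip(alphas, feature_maps):
        for i in range(h):
            for j in range(w):
                cam[i][j] += alpha * fmap[i][j]
    # Keep only regions that increase the class score.
    cam = [[max(v, 0.0) for v in row] for row in cam]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam
```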

The researchers trained a CNN-based AI, a type of model also applied in autonomous vehicles, on surface images of battery materials, enabling it to predict the major elemental composition and charge-discharge cycle state of the cathode materials. They found that while the method accurately predicted the composition of materials with additives, it was less accurate at predicting charge-discharge states. The team plans to train the AI further on the morphologies of battery materials produced through different processes and ultimately to use it to inspect compositional uniformity and predict the lifespan of next-generation batteries.

Professor Joshua C. Agar of the Department of Mechanical Engineering and Mechanics at Drexel University, one of the project's collaborators, said, "In the future, artificial intelligence is expected to be applied not only to battery materials but also to various dynamic processes in functional materials synthesis, clean energy generation in fusion, and understanding the foundations of particles and the universe."

Professor Seungbum Hong from KAIST, who led the research, stated, "This research is significant as it is the first in the world to develop an AI-based methodology that can quickly and accurately predict the major elemental composition and the state of the battery from the structural data of micron-scale SEM images. The methodology developed in this study for identifying the composition and state of battery materials based on microscopic images is expected to play a crucial role in improving the performance and quality of battery materials in the future."

< Figure 2. Accuracies of CNN Model predictions on SEM images of NCM cathode materials with additives under various conditions. >
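Figure 2 reports accuracy broken down by condition. As a minimal sketch, per-group accuracy is simply the fraction of correct predictions within each group; the function name, group names, and labels used with it are hypothetical and are not the paper's data.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples.

    Returns {group: fraction of predictions in that group that were correct}.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, pred in records:
        total[group] += 1
        if truth == pred:
            correct[group] += 1
    return {g: correct[g] / total[g] for g in total}
```

Splitting accuracy this way is what makes a pattern such as "composition is predicted well, cycle state less so" visible, rather than hiding it inside one overall figure.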

This research was conducted by KAIST’s Materials Science and Engineering Department graduates Dr. Jimin Oh and Dr. Jiwon Yeom, the co-first authors, in collaboration with Professor Joshua C. Agar of Drexel University and Dr. Kwang Man Kim of ETRI. It was supported by the National Research Foundation of Korea, the KAIST Global Singularity project, and international collaboration with the US research team. The results were published in the international journal npj Computational Materials on May 4. (Paper Title: “Composition and state prediction of lithium-ion cathode via convolutional neural network trained on scanning electron microscopy images”)

2024.07.02

North Korea and Beyond: AI-Powered Satellite Analysis Reveals the Unseen Economic Landscape of Underdeveloped Nations

- A joint research team in computer science, economics, and geography has developed an artificial intelligence (AI) technology to measure grid-level economic development within six-square-kilometer regions.

- This AI technology is applicable in regions with limited statistical data (e.g., North Korea), supporting international efforts to propose policies for economic growth and poverty reduction in underdeveloped countries.

- The research team plans to make this technology freely available for use to contribute to the United Nations' Sustainable Development Goals (SDGs).

The United Nations reports that more than 700 million people are in extreme poverty, earning less than two dollars a day. However, an accurate assessment of poverty remains a global challenge. For example, 53 countries have not conducted agricultural surveys in the past 15 years, and 17 countries have not published a population census. To fill this data gap, new technologies are being explored to estimate poverty using alternative sources such as street views, aerial photos, and satellite images.

The paper published in Nature Communications demonstrates how artificial intelligence (AI) can help analyze economic conditions from daytime satellite imagery. The technology can be applied even to the least developed countries, such as North Korea, that lack the reliable statistical data typically needed to train machine learning models.

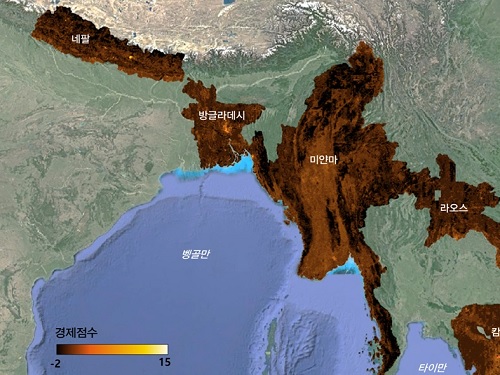

The researchers used publicly available Sentinel-2 satellite images from the European Space Agency (ESA) and split them into small grid cells of six square kilometers each. At this scale, visual information such as buildings, roads, and greenery can be used to quantify economic indicators. As a result, the team obtained the first-ever fine-grained economic map of regions like North Korea. The same algorithm was also applied to five other underdeveloped Asian countries: Nepal, Laos, Myanmar, Bangladesh, and Cambodia (see Image 1).
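At Sentinel-2's 10 m resolution for its visible bands, a square cell of about 6 km² is roughly 2.45 km, or about 245 pixels, on a side. The sketch below computes that tile size and the top-left corners of non-overlapping tiles in a raster; the helper names are hypothetical, and the paper's exact gridding (projection, band selection, edge handling) may differ.

```python
import math


def tile_side_pixels(area_km2, resolution_m):
    """Pixels per side of a square tile covering area_km2 at resolution_m per pixel."""
    side_m = math.sqrt(area_km2 * 1_000_000)  # km^2 -> m^2, then side length in m
    return round(side_m / resolution_m)


def tile_origins(height, width, side):
    """Top-left (row, col) of each full, non-overlapping side x side tile.

    Partial tiles at the right and bottom edges are dropped in this sketch.
    """
    return [(r, c)
            for r in range(0, height - side + 1, side)
            for c in range(0, width - side + 1, side)]
```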

The key feature of their research model is the "human-machine collaborative approach," which lets researchers combine human input with AI predictions for areas with scarce data. In this research, ten human experts compared satellite images and judged the economic conditions in each area; the AI then learned from these human judgments and assigned an economic score to each image. The results showed that the human-AI collaborative approach outperformed machine-only learning algorithms.
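The article does not spell out how the experts' comparisons become training targets. One simple, generic way to turn pairwise judgments of the form "area A is more developed than area B" into an ordering is to treat them as a directed acyclic graph and give each area a level by longest-path layering. The sketch below does that and rescales levels to [0, 1]; it is an assumption-laden illustration with hypothetical names, not the paper's procedure, and a model would still have to be trained to reproduce such scores from the images.

```python
from collections import defaultdict, deque


def ordinal_levels(items, more_developed_pairs):
    """Assign ordinal levels from expert judgments (a, b) meaning 'a > b'.

    Level 0 is least developed; each item sits one level above the highest
    item judged below it. Raises ValueError if the judgments form a cycle.
    """
    above = defaultdict(list)            # b -> items judged more developed than b
    n_below = {item: 0 for item in items}
    for a, b in more_developed_pairs:
        above[b].append(a)
        n_below[a] += 1
    level = {item: 0 for item in items}
    queue = deque(item for item in items if n_below[item] == 0)
    visited = 0
    while queue:                         # Kahn-style topological traversal
        b = queue.popleft()
        visited += 1
        for a in above[b]:
            level[a] = max(level[a], level[b] + 1)
            n_below[a] -= 1
            if n_below[a] == 0:
                queue.append(a)
    if visited != len(items):
        raise ValueError("contradictory judgments: cycle detected")
    return level


def scaled_scores(level):
    """Rescale integer levels to [0, 1] as a crude economic score."""
    top = max(level.values()) or 1
    return {item: lv / top for item, lv in level.items()}
```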

< Image 1. Nightlight satellite images of North Korea (top-left; background photo provided by NASA's Earth Observatory). South Korea appears brightly lit compared with North Korea, which is mostly dark except for Pyongyang. In contrast, the model developed by the research team uses daytime satellite imagery to produce more detailed economic estimates for North Korea (top-right) and five other Asian countries (bottom; background photo from Google Earth). >

The research was led by an interdisciplinary team of computer scientists, economists, and a geographer from KAIST & IBS (Donghyun Ahn, Meeyoung Cha, Jihee Kim), Sogang University (Hyunjoo Yang), HKUST (Sangyoon Park), and NUS (Jeasurk Yang). Dr Charles Axelsson, Associate Editor at Nature Communications, handled this paper during the peer review process at the journal.

The research team found that the scores showed a strong correlation with traditional socio-economic metrics such as population density, employment, and number of businesses. This demonstrates the wide applicability and scalability of the approach, particularly in data-scarce countries. Furthermore, the model's strength lies in its ability to detect annual changes in economic conditions at a more detailed geospatial level without using any survey data (see Image 2).
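Checking the scores against population density, employment, or business counts typically means computing a correlation coefficient across grid cells. A minimal Pearson's r is sketched below; the article does not say whether Pearson, Spearman, or another measure was used, so treat that choice as an assumption. On Python 3.10+, the standard library's `statistics.correlation` computes the same Pearson coefficient.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)
```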

< Image 2. Differences in satellite imagery and economic scores in North Korea between 2016 and 2019. Significant development was found in the Wonsan Kalma area (top), one of the tourist development zones, but no changes were observed in the Wiwon Industrial Development Zone (bottom). (Background photo: Sentinel-2 satellite imagery provided by the European Space Agency (ESA)). >

This model would be especially valuable for rapidly monitoring the progress of Sustainable Development Goals such as reducing poverty and promoting more equitable and sustainable growth on an international scale. The model can also be adapted to measure various social and environmental indicators. For example, it can be trained to identify regions with high vulnerability to climate change and disasters to provide timely guidance on disaster relief efforts.

As an example, the researchers explored how North Korea changed before and after the United Nations sanctions against the country. By applying the model to satellite images of North Korea both in 2016 and in 2019, the researchers discovered three key trends in the country's economic development between 2016 and 2019. First, economic growth in North Korea became more concentrated in Pyongyang and major cities, exacerbating the urban-rural divide. Second, satellite imagery revealed significant changes in areas designated for tourism and economic development, such as new building construction and other meaningful alterations. Third, traditional industrial and export development zones showed relatively minor changes.
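Comparing the two years amounts to differencing each grid cell's score. The sketch below does that and computes what share of total positive growth fell in a chosen set of urban cells, one simple way to quantify the concentration trend described above. The function names, grid IDs, urban set, and all numbers used with it are hypothetical.

```python
def score_changes(scores_2016, scores_2019):
    """Per-grid change in economic score, for grids present in both years."""
    return {g: scores_2019[g] - scores_2016[g]
            for g in scores_2016.keys() & scores_2019.keys()}


def urban_share_of_growth(changes, urban_grids):
    """Fraction of total positive score growth that occurred in urban grids."""
    gains = {g: d for g, d in changes.items() if d > 0}
    total = sum(gains.values())
    if total == 0:
        return 0.0
    return sum(d for g, d in gains.items() if g in urban_grids) / total
```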

Meeyoung Cha, a data scientist on the team, explained, "This is an important interdisciplinary effort to address global challenges like poverty. We plan to apply our AI algorithm to other international issues, such as monitoring carbon emissions, disaster damage detection, and the impact of climate change."

An economist on the research team, Jihee Kim, commented that this approach would enable detailed, low-cost examinations of economic conditions in the developing world, reducing data disparities between developed and developing nations. She further emphasized that this is essential because many public policies require economic measurements to achieve their goals, whether they are for growth, equality, or sustainability.

The research team has made the source code publicly available via GitHub and plans to continue improving the technology, applying it to new satellite images updated annually. The results of this study, with Ph.D. candidate Donghyun Ahn at KAIST and Ph.D. candidate Jeasurk Yang at NUS as joint first authors, were published in Nature Communications under the title "A human-machine collaborative approach measures economic development using satellite imagery."

< Photos of the main authors. 1. Donghyun Ahn, PhD candidate at KAIST School of Computing 2. Jeasurk Yang, PhD candidate at the Department of Geography of National University of Singapore 3. Meeyoung Cha, Professor of KAIST School of Computing and CI at IBS 4. Jihee Kim, Professor of KAIST School of Business and Technology Management 5. Sangyoon Park, Professor of the Division of Social Science at Hong Kong University of Science and Technology 6. Hyunjoo Yang, Professor of the Department of Economics at Sogang University >

2023.12.07

KAIST builds a high-resolution 3D holographic sensor using a single mask

Holographic cameras can provide more realistic images than ordinary cameras because they acquire 3D information about objects. However, existing holographic cameras rely on interferometers, which measure the phase of light through the interference of light waves; this makes them complex and sensitive to their surrounding environment.

On August 23, a KAIST research team led by Professor YongKeun Park from the Department of Physics announced a new leap forward in 3D holographic imaging sensor technology.

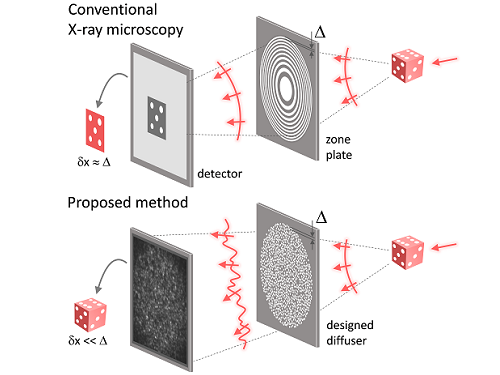

The team proposed an innovative holographic camera technology that does not use complex interferometry. Instead, it uses a mask to precisely measure the phase information of light and reconstruct the 3D information of an object with higher accuracy.

< Figure 1. Structure and principle of the proposed holographic camera. The camera can measure both the amplitude and the phase information of light scattered from an object. >

The team incorporated a mask that satisfies certain mathematical conditions into an ordinary camera: laser light scattered by an object is measured through the mask and analyzed by computer. No complex interferometer is required, and the phase information of the light can be collected with a simplified optical system. In this technique, the mask, placed between the two lenses behind the object, plays the key role. It selectively filters specific parts of the light, and the intensity of the light passing through the lens is measured with an ordinary commercial camera. An algorithm then combines the camera's image data with the mask's unique pattern to reconstruct the object's precise 3D information.
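Why does a mask help? A camera records only intensity |u|², so two fields that differ only in phase look identical. If the mask sits in the Fourier plane between the two lenses (an assumption about the layout, modeled here as a 4f system), it filters spatial frequencies, and phase differences in the object field become intensity differences at the sensor. The 1D toy below, with a naive DFT and hypothetical names, shows that effect; it is not the paper's reciprocal diffractive imaging algorithm or mask design.

```python
import cmath


def dft(x, inverse=False):
    """Naive O(N^2) discrete Fourier transform; the inverse is scaled by 1/N."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[k] * cmath.exp(sign * 2j * cmath.pi * k * m / n) for k in range(n))
           for m in range(n)]
    return [v / n for v in out] if inverse else out


def camera_intensity(field, fourier_mask=None):
    """Intensity recorded by the sensor, optionally after a Fourier-plane mask.

    The sensor measures |u|^2 only, so phase is lost unless the mask converts
    phase differences into intensity differences.
    """
    if fourier_mask is not None:
        spectrum = [s * m for s, m in zip(dft(field), fourier_mask)]
        field = dft(spectrum, inverse=True)
    return [abs(v) ** 2 for v in field]
```

Without a mask, a flat field [1, 1, 1, 1] and a phase ramp [1, 1j, -1, -1j] give identical intensities; with a mask that passes only the zero spatial frequency, the first still reaches the sensor while the second is blocked entirely.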

This method allows a high-resolution 3D image of an object to be captured at any position. In practical terms, a laser-based holographic 3D image sensor can be built by adding a simply designed mask to a general image sensor, which makes the optical system much easier to design and construct. In particular, the technology can capture high-resolution holographic images of fast-moving objects, widening its potential applications.

< Figure 2. A moving doll captured by a conventional camera and the proposed holographic camera. When taking a picture without focusing on the object, only a blurred image of the doll can be obtained from a general camera, but the proposed holographic camera can restore the blurred image of the doll into a clear image. >
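Once amplitude and phase are both known, the field can be refocused numerically, which is how a blurred, out-of-focus capture can be turned into a sharp image. The sketch below uses the standard angular-spectrum method on a 1D field; real systems use 2D FFTs, the paper's reconstruction pipeline is not reproduced, and the function names and sampling values are illustrative assumptions.

```python
import cmath
import math


def dft(x, inverse=False):
    """Naive O(N^2) discrete Fourier transform; the inverse is scaled by 1/N."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[k] * cmath.exp(sign * 2j * math.pi * k * m / n) for k in range(n))
           for m in range(n)]
    return [v / n for v in out] if inverse else out


def propagate(field, wavelength, dx, z):
    """Angular-spectrum propagation of a sampled 1D complex field by distance z.

    Units must be consistent (e.g. micrometers). Evanescent components
    (|fx| > 1/wavelength) are discarded in this sketch.
    """
    n = len(field)
    out = []
    for m, s in enumerate(dft(field)):
        fx = (m if m < n / 2 else m - n) / (n * dx)  # spatial frequency of bin m
        arg = 1.0 / wavelength ** 2 - fx ** 2
        if arg < 0:
            out.append(0j)
        else:
            out.append(s * cmath.exp(2j * math.pi * z * math.sqrt(arg)))
    return dft(out, inverse=True)
```

Because propagation only multiplies each spatial frequency by a unit-magnitude phase factor, propagating by z and then by -z returns the original field as long as the sampling keeps every component propagating (here, dx of at least half the wavelength).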

The results of this study, conducted by Dr. Jeonghun Oh from the KAIST Department of Physics as the first author, were published in Nature Communications on August 12 under the title, "Non-interferometric stand-alone single-shot holographic camera using reciprocal diffractive imaging".

Dr. Oh said, “The holographic camera module we are suggesting can be built by adding a filter to an ordinary camera, which would allow even non-experts to handle it easily in everyday life if it were to be commercialized.” He added, “In particular, it is a promising candidate with the potential to replace existing remote sensing technologies.”

This research was supported by the National Research Foundation’s Leader Research Project, the Korean Ministry of Science and ICT’s Core Hologram Technology Support Project, and the Nano and Material Technology Development Project.

2023.09.05

KAIST builds a high-resolution 3D holographic sensor using a single mask

Holographic cameras can provide more realistic images than ordinary cameras thanks to their ability to acquire 3D information about objects. However, existing holographic cameras use interferometers that measure the wavelength and refraction of light through the interference of light waves, which makes them complex and sensitive to their surrounding environment.

On August 23, a KAIST research team led by Professor YongKeun Park from the Department of Physics announced a new leap forward in 3D holographic imaging sensor technology.

The team proposed an innovative holographic camera technology that does not use complex interferometry. Instead, it uses a mask to precisely measure the phase information of light and reconstruct the 3D information of an object with higher accuracy.

< Figure 1. Structure and principle of the proposed holographic camera. The amplitude and phase information of light scattered from a holographic camera can be measured. >

The team used a mask that fulfills certain mathematical conditions and incorporated it into an ordinary camera, and the light scattered from a laser is measured through the mask and analyzed using a computer. This does not require a complex interferometer and allows the phase information of light to be collected through a simplified optical system. With this technique, the mask that is placed between the two lenses and behind an object plays an important role. The mask selectively filters specific parts of light,, and the intensity of the light passing through the lens can be measured using an ordinary commercial camera. This technique combines the image data received from the camera with the unique pattern received from the mask and reconstructs an object’s precise 3D information using an algorithm.

This method allows a high-resolution 3D image of an object to be captured in any position. In practical situations, one can construct a laser-based holographic 3D image sensor by adding a mask with a simple design to a general image sensor. This makes the design and construction of the optical system much easier. In particular, this novel technology can capture high-resolution holographic images of objects moving at high speeds, which widens its potential field of application.

< Figure 2. A moving doll captured by a conventional camera and the proposed holographic camera. When taking a picture without focusing on the object, only a blurred image of the doll can be obtained from a general camera, but the proposed holographic camera can restore the blurred image of the doll into a clear image. >
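Once the complex field (amplitude and phase) is known, a defocused image can in principle be refocused numerically by propagating the field to another plane. A common way to do this is angular spectrum propagation; the article does not say which propagation method the authors use, so treat this 1D sketch as an illustration under assumptions. The wavelength, pixel pitch, and distances are hypothetical values in micrometres.

```python
import cmath
import math


def dft(x, inverse=False):
    """Naive 1D DFT (or inverse DFT normalized by 1/N); O(N^2), for toy sizes."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[k] * cmath.exp(sign * 2j * math.pi * k * m / n) for k in range(n))
           for m in range(n)]
    return [v / n for v in out] if inverse else out


def propagate(field, dz, wavelength, dx):
    """Angular spectrum propagation of a sampled 1D complex field by distance dz."""
    n = len(field)
    k = 2 * math.pi / wavelength
    shifted = []
    for m, a in enumerate(dft(field)):
        # Frequency bins at or above N/2 correspond to negative spatial frequencies.
        kx = 2 * math.pi * (m if m < n / 2 else m - n) / (n * dx)
        # kz is imaginary for evanescent components, which then decay for dz > 0.
        kz = cmath.sqrt(k * k - kx * kx)
        shifted.append(a * cmath.exp(1j * kz * dz))
    return dft(shifted, inverse=True)


# Hypothetical toy parameters (micrometres): 0.5 um light, 1 um pixels.
wavelength, dx = 0.5, 1.0
in_focus = [1, 0, 0, 0, 0, 0, 0, 0]                     # a point-like object
blurred = propagate(in_focus, 10.0, wavelength, dx)      # defocused by 10 um
refocused = propagate(blurred, -10.0, wavelength, dx)    # digital refocusing

print([round(abs(v) ** 2, 3) for v in blurred])          # intensity spread out
print(max(abs(a - b) for a, b in zip(refocused, in_focus)) < 1e-9)  # True
```

The back-propagation step needs the phase of every sample; an intensity-only image could not be refocused this way.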

The results of this study, conducted by Dr. Jeonghun Oh from the KAIST Department of Physics as the first author, were published in Nature Communications on August 12 under the title, "Non-interferometric stand-alone single-shot holographic camera using reciprocal diffractive imaging".

Dr. Oh said, “The holographic camera module we are suggesting can be built by adding a filter to an ordinary camera, which would allow even non-experts to handle it easily in everyday life if it were to be commercialized.” He added, “In particular, it is a promising candidate with the potential to replace existing remote sensing technologies.”

This research was supported by the National Research Foundation’s Leader Research Project, the Korean Ministry of Science and ICT’s Core Hologram Technology Support Project, and the Nano and Material Technology Development Project.

2023.09.05

A KAIST research team unveils new path for dense photonic integration

Integrated optical semiconductor (hereinafter, optical semiconductor) technology is a next-generation semiconductor technology attracting extensive research and investment worldwide, because it can shrink complex optical systems such as LiDAR, quantum sensors, and quantum computers onto a single small chip. In conventional semiconductor technology, the key challenge has been how small devices can be made, at process nodes such as 5 nanometers or 2 nanometers. For optical semiconductor devices, by contrast, increasing the degree of integration is the key technology that determines performance, price, and energy efficiency.

KAIST (President Kwang-Hyung Lee) announced on the 19th that a research team led by Professor Sangsik Kim of the Department of Electrical and Electronic Engineering discovered a new optical coupling mechanism that can increase the degree of integration of optical semiconductor devices by more than 100 times.

The number of elements that can be placed on a single chip is called the degree of integration. However, increasing the degree of integration of optical semiconductor devices is very difficult because, owing to the wave nature of light, crosstalk occurs between adjacent devices.

Previous studies could reduce crosstalk only for specific polarizations. In this study, by discovering a new light coupling mechanism, the research team developed a method to increase the degree of integration even for polarization conditions under which this had previously been considered impossible.

This study, led by Professor Sangsik Kim as corresponding author and conducted with students he taught at Texas Tech University, was published in the international journal 'Light: Science & Applications' [IF=20.257] on June 2. (Paper title: Anisotropic leaky-like perturbation with subwavelength gratings enables zero crosstalk)

Professor Sangsik Kim said, "The interesting thing about this study is that it paradoxically eliminated crosstalk by using leaky waves (light that tends to spread sideways), which were previously thought to increase crosstalk." He added, "If the optical coupling method using the leaky wave revealed in this study is applied, it will be possible to develop various optical semiconductor devices that are smaller and have less noise."
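The role of crosstalk can be made concrete with standard coupled-mode theory for two identical, lossless, phase-matched waveguides: power launched into one guide transfers to its neighbour as sin²(κz), where κ is the coupling coefficient and z is the propagation length. The sketch below also assumes, as a simplification rather than the paper's model, that the total coupling is the sum of a conventional evanescent term and a perturbation term of opposite sign, so crosstalk shrinks as the two cancel. All κ values are hypothetical.

```python
import math


def cross_power(kappa, z):
    """Fraction of power transferred to the neighbouring guide after length z."""
    return math.sin(kappa * z) ** 2


def crosstalk_db(kappa, z):
    """Crosstalk ratio P2/P1 in dB for identical lossless coupled waveguides."""
    p2 = cross_power(kappa, z)
    p1 = 1.0 - p2
    if p2 == 0.0:
        return float("-inf")   # perfect isolation
    if p1 == 0.0:
        return float("inf")    # complete transfer to the neighbour
    return 10 * math.log10(p2 / p1)


def coupling_length(kappa):
    """Length for complete power transfer, L_c = pi / (2 * |kappa|)."""
    return math.inf if kappa == 0 else math.pi / (2 * abs(kappa))


# Hypothetical coupling coefficients in rad/um.
kappa_evanescent = 0.01
kappa_perturbation = -0.0095   # assumed to partially cancel the evanescent term
kappa_total = kappa_evanescent + kappa_perturbation

z = 100.0  # propagation length in um
print(coupling_length(kappa_evanescent), coupling_length(kappa_total))
print(crosstalk_db(kappa_evanescent, z), crosstalk_db(kappa_total, z))
```

In this model, driving κ toward zero lengthens the coupling length and lowers crosstalk, which is what lets waveguides be packed more densely.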

Professor Sangsik Kim is recognized for his expertise in optical semiconductor integration. In previous research, he developed an all-dielectric metamaterial that controls how far light spreads laterally by patterning a semiconductor structure at a scale smaller than the wavelength, and he experimentally demonstrated that it improves the degree of integration of optical semiconductors. These studies were reported in ‘Nature Communications’ (Vol. 9, Article 1893, 2018) and ‘Optica’ (Vol. 7, pp. 881-887, 2020). In recognition of these achievements, Professor Kim has received the NSF CAREER Award from the US National Science Foundation (NSF) and the Young Scientist Award from the Association of Korean-American Scientists and Engineers.

Meanwhile, this research was carried out with support from the New Research Project of Excellence of the National Research Foundation of Korea and the National Science Foundation of the US.

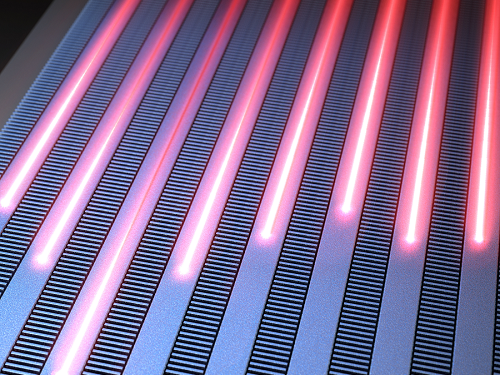

< Figure 1. Illustration depicting light propagation without crosstalk in the waveguide array of the developed metamaterial-based optical semiconductor >

2023.06.21

KAIST debuts “DreamWaQer” - a quadrupedal robot that can walk in the dark

- The team led by Professor Hyun Myung of the School of Electrical Engineering developed “DreamWaQ”, a deep reinforcement learning-based control technology that enables walking robots to move through atypical environments without visual or tactile information

- Utilization of “DreamWaQ” technology can enable mass production of various types of “DreamWaQers”

- Expected to be used to explore atypical environments under unusual circumstances, such as fire disasters.

A team of Korean engineering researchers has developed a quadrupedal robot technology that can climb up and down steps and move over uneven terrain, such as tree roots, without falling over. Because it needs no visual or tactile sensors, it can operate even in disaster situations where vision is impaired by darkness or thick smoke from flames.

KAIST (President Kwang Hyung Lee) announced on the 29th of March that Professor Hyun Myung's research team at the Urban Robotics Lab in the School of Electrical Engineering developed a walking robot control technology that enables robust 'blind locomotion' in various atypical environments.

< (From left) Prof. Hyun Myung, Doctoral Candidates I Made Aswin Nahrendra, Byeongho Yu, and Minho Oh. In the foreground is the DreamWaQer, a quadrupedal robot equipped with DreamWaQ technology. >

The KAIST research team named the technology "DreamWaQ" because it enables walking robots to move about even in the dark, just as a person who has just gotten out of bed can walk to the bathroom in the dark without seeing. Installing this technology on any legged robot will make it possible to create various types of "DreamWaQers".

Existing walking robot controllers are based on kinematic and/or dynamic models, an approach known as model-based control. In atypical environments such as open, uneven fields, these controllers must acquire terrain feature information quickly to keep the robot stable while it walks, so they have been shown to depend heavily on the ability to perceive the surrounding environment.

In contrast, the controller developed by Professor Hyun Myung's research team, based on deep reinforcement learning (RL), can quickly compute appropriate control commands for each motor of the walking robot, having learned from data on various environments generated in a simulator. Whereas existing controllers trained in simulation required separate re-tuning to work on an actual robot, the team's controller needs no additional tuning, so it is expected to be easily applied to various walking robots.

DreamWaQ, the controller developed by the research team, consists largely of a context-aided estimator network that estimates ground and robot information and a policy network that computes control commands. The estimator network uses inertial and joint measurements to estimate the ground information implicitly and the robot's state explicitly. These estimates are fed into the policy network, which generates optimal control commands. Both networks are trained together in simulation.

The context-aided estimator network is trained through supervised learning, while the policy network is trained with an actor-critic architecture, a deep RL methodology. The actor network can only infer the surrounding terrain implicitly. In simulation, however, the terrain is known exactly, so the critic (the value network), which has access to the exact terrain information, evaluates the actor network's policy.

The whole training process takes only about an hour on a GPU-equipped PC, and only the trained actor network is deployed on the actual robot. Without looking at the surrounding terrain, the robot uses only its internal inertial measurement unit (IMU) and joint-angle measurements to imagine which of the many environments learned in simulation its current surroundings most resemble. If it suddenly encounters a change in height, such as a stair step, it cannot know until its foot touches the step, but it quickly infers the terrain information the moment its foot makes contact. Control commands suited to the estimated terrain are then sent to each motor, enabling rapidly adapted walking.
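The division of labour between the two networks can be sketched as a forward pass. Everything below is a hypothetical toy: the layer sizes, the 3-value velocity standing in for the explicit "robot state", the 4-value implicit terrain code, the 12 joint commands, and the random weights are our assumptions rather than DreamWaQ's published configuration, and the tiny pure-Python layers stand in for real neural networks.

```python
import random


def dense(n_in, n_out, rng):
    """Randomly initialised fully connected layer as (weights, biases)."""
    w = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return w, [0.0] * n_out


def forward(layer, x, activation=True):
    """Affine map followed by an optional ReLU."""
    w, b = layer
    y = [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]
    return [max(0.0, v) for v in y] if activation else y


OBS_DIM, HISTORY = 30, 5          # hypothetical proprioceptive size and history length
VEL_DIM, LATENT_DIM, N_JOINTS = 3, 4, 12

rng = random.Random(0)
estimator = [dense(OBS_DIM * HISTORY, 32, rng), dense(32, VEL_DIM + LATENT_DIM, rng)]
policy = [dense(OBS_DIM + VEL_DIM + LATENT_DIM, 32, rng), dense(32, N_JOINTS, rng)]


def estimate_context(history):
    """Estimator: IMU + joint history -> (explicit velocity, implicit terrain latent)."""
    out = forward(estimator[1], forward(estimator[0], history), activation=False)
    return out[:VEL_DIM], out[VEL_DIM:]


def act(obs, history):
    """Policy: current observation plus estimated context -> one command per joint."""
    velocity, latent = estimate_context(history)
    h = forward(policy[0], obs + velocity + latent)
    return forward(policy[1], h, activation=False)


history = [rng.uniform(-1, 1) for _ in range(OBS_DIM * HISTORY)]
obs = history[-OBS_DIM:]          # most recent proprioceptive reading
print(len(act(obs, history)))     # 12
```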
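The two kinds of training signal can be illustrated in a few lines. In this hypothetical sketch the estimator is scored with a mean-squared error against the simulator's true velocity (supervised learning), while the critic, which in simulation may see privileged terrain heights that the actor never receives, supplies a one-step temporal-difference advantage for the actor's update. Practical actor-critic training typically uses more elaborate advantage estimates; all names and numbers here are assumptions.

```python
def mse(pred, target):
    """Supervised estimator loss: mean squared error against simulator ground truth."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def td_advantage(reward, value, next_value, gamma=0.99, done=False):
    """One-step TD advantage r + gamma * V(s') - V(s); no bootstrap at episode end."""
    bootstrap = 0.0 if done else gamma * next_value
    return reward + bootstrap - value


def actor_input(proprio, est_velocity, latent):
    """The actor sees only proprioception plus the estimator's outputs."""
    return proprio + est_velocity + latent


def critic_input(proprio, true_velocity, terrain_heights):
    """The critic may use privileged simulator state, such as exact terrain heights."""
    return proprio + true_velocity + terrain_heights


# Hypothetical values from one simulated step.
proprio = [0.1, -0.2, 0.05]
print(mse([0.9, 0.0, 0.1], [1.0, 0.0, 0.0]))     # estimator loss
print(td_advantage(1.0, 0.5, 1.0, gamma=0.9))   # positive: better than the critic expected
print(len(actor_input(proprio, [0.9, 0.0, 0.1], [0.3, -0.1])),
      len(critic_input(proprio, [1.0, 0.0, 0.0], [0.0, 0.02, 0.05, 0.12])))
```

Because the critic is only needed to compute training signals, its privileged inputs never have to exist on the real robot.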
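On the robot, terrain can only be "imagined" from recent proprioceptive readings, so one plausible data structure is a fixed-length window of the latest IMU and joint measurements. The sketch below is a hypothetical illustration of that idea (the window length and reading format are assumptions): a foot-strike reading enters the window at the next control step, which is how new contact information can reach the controller quickly, and it drops out once the window has moved past it.

```python
from collections import deque


class ProprioHistory:
    """Fixed-length window of the most recent proprioceptive readings (IMU + joints)."""

    def __init__(self, length, obs_dim):
        self.obs_dim = obs_dim
        # Pre-fill with zeros so the network always receives a full-size input.
        self.buffer = deque([[0.0] * obs_dim for _ in range(length)], maxlen=length)

    def push(self, reading):
        if len(reading) != self.obs_dim:
            raise ValueError("reading has the wrong size")
        self.buffer.append(list(reading))   # the oldest reading is dropped automatically

    def flat(self):
        """Flattened window, oldest first, usable as a network input vector."""
        return [v for reading in self.buffer for v in reading]


hist = ProprioHistory(length=3, obs_dim=2)
hist.push([0.0, 0.1])
hist.push([0.9, -0.4])   # hypothetical spike when a foot strikes a step
print(hist.flat())       # [0.0, 0.0, 0.0, 0.1, 0.9, -0.4]
for _ in range(3):
    hist.push([0.0, 0.0])
print(hist.flat())       # the spike has left the window
```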

The DreamWaQer robot walked not only in the laboratory but also outdoors around the campus, over many curbs and speed bumps, and across a field full of tree roots and gravel. It also demonstrated its abilities by climbing stairs whose step height is two-thirds of its body height. In addition, the research team confirmed that, regardless of the environment, it could walk stably at speeds ranging from a slow 0.3 m/s to a fairly fast 1.0 m/s.

The results of this study were produced by doctoral student I Made Aswin Nahrendra as first author, with his colleague Byeongho Yu as co-author. The paper has been accepted for presentation at the upcoming IEEE International Conference on Robotics and Automation (ICRA), to be held in London at the end of May. (Paper title: DreamWaQ: Learning Robust Quadrupedal Locomotion With Implicit Terrain Imagination via Deep Reinforcement Learning)

The videos of the walking robot DreamWaQer equipped with the developed DreamWaQ can be found at the address below.

Main Introduction: https://youtu.be/JC1_bnTxPiQ
Experiment Sketches: https://youtu.be/mhUUZVbeDA0

Meanwhile, this research was carried out with support from the Robot Industry Core Technology Development Program of the Ministry of Trade, Industry and Energy (MOTIE). (Task title: Development of Mobile Intelligence SW for Autonomous Navigation of Legged Robots in Dynamic and Atypical Environments for Real Application)