Department of Electrical Engineering

A Parallel MRI Method Accelerating Imaging Time Proposed

KAIST researchers have proposed a new technology that reduces MRI (magnetic resonance imaging) acquisition time to less than a sixth of that of the conventional method. They developed a reconstruction method based on a multilayer perceptron (MLP), a machine learning algorithm, to accelerate imaging.

High-quality images can be reconstructed from subsampled data using the proposed method. The method can also be applied to various k-space subsampling patterns in the phase-encoding direction, and its processing can be performed in real time.
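To make the effect of k-space subsampling concrete, here is a minimal NumPy sketch. It is not the team's code: the toy image, the acceleration factor `R = 2`, the choice of axis 0 as the phase-encoding axis, and the function name `subsample_phase_encoding` are all illustrative assumptions. It keeps every R-th phase-encoding line and shows that the zero-filled reconstruction equals the true image averaged with copies of itself shifted by N/R lines, which is the aliasing a reconstruction method has to remove.

```python
import numpy as np

def subsample_phase_encoding(image, R):
    """Keep every R-th k-space line along axis 0 (assumed phase-encoding axis)
    and return the zero-filled inverse-FFT reconstruction."""
    kspace = np.fft.fft2(image)
    mask = np.zeros(image.shape[0], dtype=bool)
    mask[::R] = True                        # uniform subsampling pattern
    kspace_sub = kspace * mask[:, None]     # discarded lines are set to zero
    return np.fft.ifft2(kspace_sub)

rng = np.random.default_rng(0)
image = rng.random((8, 8))                  # toy "fully sampled" image
R = 2                                       # hypothetical acceleration factor
aliased = subsample_phase_encoding(image, R)

# Uniform subsampling by R folds the image onto itself: the result is the
# average of R copies, each circularly shifted by N/R lines.
shift = image.shape[0] // R
expected = sum(np.roll(image, j * shift, axis=0) for j in range(R)) / R
print(np.allclose(aliased, expected))
```

Non-uniform patterns produce less regular artifacts than this simple fold-over, which is one reason a method that copes with many patterns is attractive.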

The research, led by Professor Hyun Wook Park from the Department of Electrical Engineering, was published in Medical Physics as the cover paper in December 2017. Ph.D. candidate Kinam Kwon is the first author.

MRI is an imaging technique that provides various soft-tissue contrasts without using ionizing radiation. Since MRI can image not only anatomical structures but also functional and physiological features, it is widely used in medical diagnosis. However, one of the major shortcomings of MRI is its long imaging time. Long scans cause patient discomfort, which leads to voluntary and involuntary motion and thereby degrades the quality of the MR images. In addition, lengthy imaging times limit the system’s throughput, which results in long waiting times for patients as well as increased medical expenses.

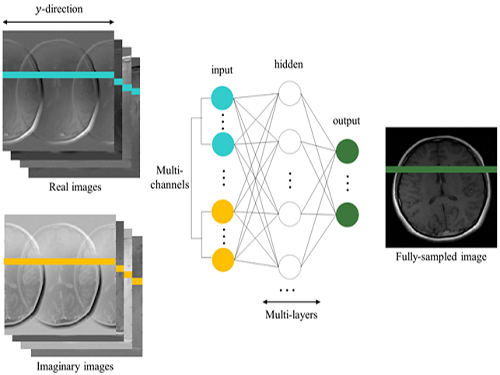

To reconstruct MR images from subsampled data, the team applied the MLP to reduce aliasing artifacts generated by subsampling in k-space. The MLP is trained to map aliased input images to the desired alias-free images. The input of the MLP consists of all voxels in the aliased lines of the multichannel real and imaginary images from the subsampled k-space data, and the desired output consists of all voxels in the corresponding alias-free line of the root-sum-of-squares of the multichannel images from fully sampled k-space data. Aliasing artifacts in an image reconstructed from subsampled data were reduced by line-by-line processing with the trained MLP.
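The input and output layout described above can be sketched in a few lines of NumPy. This is only an illustration of the data shapes, not the published network: the coil count, image size, hidden-layer width, ReLU activation, random (untrained) weights, and the helper names `lines_to_features`, `root_sum_of_squares`, and `mlp_forward` are all assumptions made for the example.

```python
import numpy as np

def root_sum_of_squares(coil_images):
    """Combine complex coil images of shape (C, Ny, Nx) into one magnitude image."""
    return np.sqrt(np.sum(np.abs(coil_images) ** 2, axis=0))

def lines_to_features(coil_images):
    """Turn aliased coil images (C, Ny, Nx) into one feature vector per line.

    A line runs along the phase-encoding axis (axis 1, an assumption here).
    Real and imaginary parts of all coils are concatenated: (Nx, 2*C*Ny)."""
    C, Ny, Nx = coil_images.shape
    stacked = np.concatenate([coil_images.real, coil_images.imag], axis=0)
    return stacked.transpose(2, 0, 1).reshape(Nx, 2 * C * Ny)

def mlp_forward(features, weights, biases):
    """Plain MLP: ReLU hidden layers (assumed), linear output layer."""
    h = features
    for W, b in zip(weights[:-1], biases[:-1]):
        h = np.maximum(h @ W + b, 0.0)
    return h @ weights[-1] + biases[-1]

rng = np.random.default_rng(0)
C, Ny, Nx, hidden = 4, 16, 12, 32           # hypothetical sizes
aliased = rng.standard_normal((C, Ny, Nx)) + 1j * rng.standard_normal((C, Ny, Nx))
full = rng.standard_normal((C, Ny, Nx)) + 1j * rng.standard_normal((C, Ny, Nx))

X = lines_to_features(aliased)              # network input, one row per line
Y = root_sum_of_squares(full).T             # training target, one row per line

# Untrained random weights, used here only to show the shapes involved.
sizes = [X.shape[1], hidden, Ny]
weights = [rng.standard_normal((m, n)) * 0.01 for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n) for n in sizes[1:]]

recon = mlp_forward(X, weights, biases).T   # reassemble lines into (Ny, Nx)
print(X.shape, Y.shape, recon.shape)
```

Because every line is an independent row of `X`, the forward pass is a pair of matrix products over all lines at once, which is consistent with the article's remark that the method lends itself to parallel processing.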

Images reconstructed with the proposed method are better than those from the compared methods in terms of normalized root-mean-square error. The proposed method can be applied to image reconstruction for any k-space subsampling pattern in the phase-encoding direction. Moreover, it is easily parallelized, which further reduces the reconstruction time.
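For readers unfamiliar with the metric, the sketch below shows one common way to compute a normalized root-mean-square error. The article does not say which normalization the authors used; dividing by the L2 norm of the reference image is an assumption here (normalizing by the intensity range is another common convention), and the function name `nrmse` is illustrative.

```python
import numpy as np

def nrmse(reference, reconstruction):
    """Error norm divided by the norm of the reference image (assumed convention)."""
    reference = np.asarray(reference, dtype=float)
    reconstruction = np.asarray(reconstruction, dtype=float)
    return np.linalg.norm(reconstruction - reference) / np.linalg.norm(reference)

reference = np.array([[3.0, 4.0], [0.0, 0.0]])               # toy reference image
perfect = reference.copy()
noisy = reference + np.array([[0.0, 0.0], [0.5, 0.0]])       # one erroneous voxel

print(nrmse(reference, perfect))
print(nrmse(reference, noisy))
```

A lower value means the reconstruction is closer to the fully sampled reference, with zero indicating an exact match.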

To address aliasing artifacts, the team employed a parallel imaging technique that uses several receiver coils with different sensitivities and a compressed sensing technique that exploits the sparsity of signals.

Existing methods are heavily affected by the subsampling pattern, but the team’s technique is applicable to various subsampling patterns, yields better reconstructed images than existing methods, and allows real-time reconstruction.

Professor Park said, "MRIs have become essential equipment in clinical diagnosis. However, the time consumption and the cost led to many inconveniences." He continued, "This method using machine learning could greatly improve the patients’ satisfaction with medical service." This research was funded by the Ministry of Science and ICT.

(Figure 1. Cover of Medical Physics for December 2017)

(Figure 2. Concept map for the suggested network)

(Figure 3. Concept map for conventional MRI image acquisition and accelerated image acquisition)

2018.01.16

Face Recognition System 'K-Eye' Presented by KAIST

Artificial intelligence (AI) is one of the key emerging technologies. Global IT companies are racing to launch the newest technologies, and competition is heating up more than ever. However, most AI technologies focus on software and their operating speeds are low, making them a poor fit for mobile devices. Therefore, many big companies are investing in semiconductor chips that run AI programs at high speeds with low power requirements.

A research team led by Professor Hoi-Jun Yoo of the Department of Electrical Engineering has developed a semiconductor chip, CNNP (CNN Processor), that runs AI algorithms with ultra-low power, and K-Eye, a face recognition system using CNNP. The system was made in collaboration with a start-up company, UX Factory Co.

The K-Eye series consists of two types: a wearable type and a dongle type. The wearable type device can be used with a smartphone via Bluetooth, and it can operate for more than 24 hours on its internal battery. Users wearing K-Eye around their necks can conveniently check information about people by using their smartphone or smart watch, which connects to K-Eye and gives access to a database. A smartphone with K-EyeQ, the dongle type device, can recognize and share information about users at any time.

When recognizing that an authorized user is looking at its screen, the smartphone automatically turns on without a passcode, fingerprint, or iris authentication. Since it can distinguish whether an input face is coming from a saved photograph versus a real person, the smartphone cannot be tricked by the user’s photograph.

The K-Eye series has other distinct features. It first detects a face and then recognizes it, and it can maintain an “Always-on” status with low power consumption of less than 1 mW. To accomplish this, the research team proposed two key technologies: an image sensor with “Always-on” face detection and the CNNP face recognition chip.

The first key technology, the “Always-on” image sensor, can determine whether there is a face in its camera range. It then captures frames and sets the device to operate only when a face is present, reducing the standby power significantly. The face detection sensor combines analog and digital processing to reduce power consumption. With this approach, the analog processor, combined with the CMOS image sensor array, distinguishes the background area from the area likely to include a face, and the digital processor then detects the face only in the selected area. Hence, it is efficient in terms of frame capture, face detection processing, and memory usage.

The second key technology, CNNP, achieved remarkably low power consumption by optimizing a convolutional neural network (CNN) in the areas of circuitry, architecture, and algorithms. First, the on-chip memory integrated in CNNP is specially designed so that data can be read in a vertical direction as well as in a horizontal direction. Second, it has immense computational power, with 1024 multipliers and accumulators operating in parallel, and these units can transfer intermediate results directly to each other without accessing the external memory or the on-chip communication network. Third, convolution calculations with a two-dimensional filter in the CNN algorithm are approximated by two sequential calculations with one-dimensional filters to achieve higher speeds and lower power consumption.
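The third optimization can be illustrated with a short NumPy sketch. It is a generic demonstration of separable filtering, not the CNNP implementation: the Sobel-like kernel, the use of an SVD to obtain a rank-1 approximation of a general filter, and the function names are assumptions chosen for the example. When a K×K filter is the outer product of a column vector and a row vector, two 1D passes give exactly the same result with about 2K instead of K² multiplications per output pixel; for other filters the rank-1 form is only an approximation.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Direct 2D 'valid' correlation: kh*kw multiplications per output pixel."""
    kh, kw = kernel.shape
    H, W = image.shape
    out = np.zeros((H - kh + 1, W - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def separable_conv2d_valid(image, col, row):
    """Two sequential 1D passes: along rows first, then along columns."""
    tmp = conv2d_valid(image, row[None, :])
    return conv2d_valid(tmp, col[:, None])

def rank1_approximation(kernel):
    """Best rank-1 (separable) approximation of a 2D kernel via the SVD."""
    U, s, Vt = np.linalg.svd(kernel)
    scale = np.sqrt(s[0])
    return U[:, 0] * scale, Vt[0, :] * scale

rng = np.random.default_rng(0)
image = rng.random((10, 10))

# Exactly separable case: a Sobel-like kernel built as an outer product.
col = np.array([1.0, 2.0, 1.0])
row = np.array([1.0, 0.0, -1.0])
kernel = np.outer(col, row)
direct = conv2d_valid(image, kernel)
two_pass = separable_conv2d_valid(image, col, row)
print(np.allclose(direct, two_pass))

# General case: the rank-1 form only approximates the original filter.
general = rng.random((3, 3))
c, r = rank1_approximation(general)
approx_error = np.linalg.norm(general - np.outer(c, r)) / np.linalg.norm(general)
print(approx_error)
```

The saving grows with filter size: a 7×7 filter needs 49 multiplications per pixel when applied directly but only 14 when applied as two 1D passes.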

With these new technologies, CNNP achieved a high accuracy of 97% while consuming only 1/5000 of the power of a GPU. Face recognition can be performed with only 0.62 mW of power consumption, and the chip can show higher performance than the GPU by using more power.

These chips were developed by Kyeongryeol Bong, a Ph.D. student under Professor Yoo, and presented at the International Solid-State Circuits Conference (ISSCC) held in San Francisco in February. CNNP, which has the lowest reported power consumption in the world, has attracted a great deal of attention and has led to the development of the present K-Eye series for face recognition.

Professor Yoo said, “AI processors will lead the era of the Fourth Industrial Revolution. With the development of this AI chip, we expect Korea to take the lead in global AI technology.”

The research team and UX Factory Co. are preparing to commercialize the K-Eye series by the end of this year. According to market research firm IDC, the AI market will grow from $127 billion last year to $165 billion this year.

(Photo caption: Schematic diagram of K-Eye system)

2017.06.14

A Transport Technology for Nanowires Thermally Treated at 700 Degrees Celsius

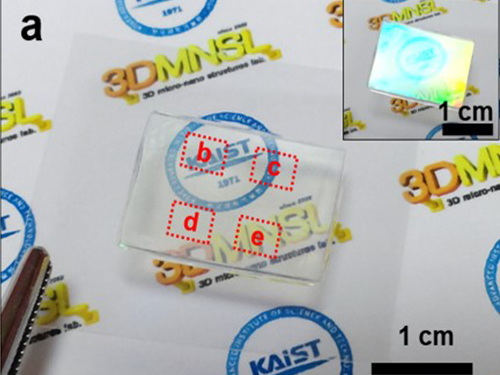

Professor Jun-Bo Yoon and his research team from the Department of Electrical Engineering at KAIST developed a technology for transporting thermally treated nanowires to a flexible substrate and used it to create a high-performance flexible energy-collecting device.

Mr. Min-Ho Seo, a Ph.D. candidate, participated in this study as the first author. The results were published online on January 30th in ACS Nano, an international journal in the field of nanoscience and engineering. (“Versatile Transfer of an Ultralong and Seamless Nanowire Array Crystallized at High Temperature for Use in High-performance Flexible Devices,” DOI: 10.1021/acsnano.6b06842)

Nanowires are one of the most representative nanomaterials. They have wire structures with dimensions in nanometers. The nanowires are widely used in the scientific and engineering fields due to their prominent physical and chemical properties that depend on a one-dimensional structure, and their high applicability.

Nanowires perform much better if their structure has features such as an excellent arrangement and a longer-than-average length. Many researchers are thus actively working on ways to make such nanowires easily, analyze them, and develop them into high-performance devices.

Scientists have recently focused on making nanowires chemically and physically on a flexible substrate and applying them to flexible electronic devices such as high-performance wearable sensors.

The existing technology, however, mixes chemically synthesized nanowires with a solution and spreads the mixture on a flexible substrate. The resulting distribution is random, which makes it difficult to produce a high-performance device that exploits the structural advantages of nanowires. In addition, the technology relies on cutting-edge nano-processes and flexible materials, which is not economical. Producing stable materials that require processing at 700 degrees Celsius or higher has also been unattainable with this approach, which is a great challenge for applications.

To solve this problem, the research team developed a new nano-transfer technology that combines a silicon nano-grating board with a large surface area and a nano-sacrificial layer process. A nano-sacrificial layer sits between the nanowires and the nano-grating board, which acts as the mold for the nano-transfer. The new technology allows the device to undergo thermal treatment. Afterward, the sacrificial layer disappears when the nanowires are transported to a flexible substrate.

This technology also permits the stable production of nanowires whose properties are secured at an extremely high temperature, and the resulting nanowires are neatly aligned on a flexible substrate. The research team used the technology to place barium titanate (BaTiO3) nanowires, whose properties were secured at a temperature of 700℃ or above, on top of a flexible substrate. Using them in a wearable energy collecting device, the team obtained much higher electrical energy than with a device based on regular barium titanate nanowires.

The researchers said that their technology is built upon a semiconductor process known as physical vapor deposition, which allows various materials such as ceramics and semiconductors to be used for nanowires on flexible substrates. They expect that high-performance flexible electronic devices such as flexible transistors and thermoelectric elements can be produced with this method.

Mr. Seo said, “In this study, we transported nanowire materials with developed properties on a flexible substrate and showed an increase in device performance. Our technology will be fundamental to the production of various nanowires on a flexible substrate as well as the feasibility of making high performance wearable electric devices.”

This research was supported by the Leap Research Support Program of the National Research Foundation of Korea.

Fig. 1. (a) The newly developed nanowire transfer process and (b) a schematic diagram of the nano-sacrificial layer

Fig. 2. Transfer results using gold (Au) nanowires: (a) optical images, (b) physical signals, (c) cross-sectional images from a scanning electron microscope (SEM), and (d-f) electrical verification that perfectly aligned nanowires were formed over a large area.

Fig. 3. Transfer results using barium titanate (BaTiO3) nanowires: (a) optical images, (b-e) top-view SEM images taken at various locations, and (f, g) property analysis.

Fig. 4. Schematic diagram of the energy collecting device built on a BaTiO3 nanowire substrate and an optical image of the experiment with the miniature energy collecting device attached to an index finger.

2017.03.22

K-Glass 3 Offers Users a Keyboard to Type Text

KAIST researchers upgraded their smart glasses with a low-power multicore processor to employ stereo vision and deep-learning algorithms, making the user interface and experience more intuitive and convenient.

K-Glass, smart glasses reinforced with augmented reality (AR) that were first developed by KAIST in 2014, with the second version released in 2015, is back with an even stronger model. The latest version, which KAIST researchers are calling K-Glass 3, allows users to text a message or type in key words for Internet surfing by offering a virtual keyboard for text and even one for a piano.

Currently, most wearable head-mounted displays (HMDs) suffer from a lack of rich user interfaces, short battery lives, and heavy weight. Some HMDs, such as Google Glass, use a touch panel and voice commands as an interface, but they are considered merely an extension of smartphones and are not optimized for wearable smart glasses. Recently, gaze recognition was proposed for HMDs including K-Glass 2, but gaze cannot be realized as a natural user interface (UI) and experience (UX) due to its limited interactivity and lengthy gaze-calibration time, which can be up to several minutes.

As a solution, Professor Hoi-Jun Yoo and his team from the Electrical Engineering Department recently developed K-Glass 3 with a low-power natural UI and UX processor. This processor is composed of a pre-processing core to implement stereo vision, seven deep-learning cores to accelerate real-time scene recognition within 33 milliseconds, and one rendering engine for the display.

The stereo-vision camera, located on the front of K-Glass 3, works in a manner similar to three-dimensional (3D) sensing in human vision. The camera’s two lenses, displaced horizontally from one another just like the left and right eyes that produce depth perception, take pictures of the same objects or scenes, and the two different images are combined to extract spatial depth information, which is necessary to reconstruct 3D environments. The camera’s vision algorithm consumes 20 milliwatts on average, allowing it to operate in the Glass for more than 24 hours without interruption.
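How two horizontally displaced views yield depth can be shown with the standard pinhole stereo relation, depth = focal length × baseline / disparity. The sketch below is a generic illustration and not the K-Glass 3 algorithm: the focal length, the baseline, the disparity values, and the function name `depth_from_disparity` are hypothetical and are not taken from the article.

```python
import numpy as np

def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in meters from pixel disparity for a rectified stereo pair.

    Zero disparity means the point is effectively at infinity."""
    disparity_px = np.asarray(disparity_px, dtype=float)
    depth = np.full(disparity_px.shape, np.inf)
    valid = disparity_px > 0
    depth[valid] = focal_length_px * baseline_m / disparity_px[valid]
    return depth

focal_length_px = 500.0   # hypothetical focal length in pixels
baseline_m = 0.06         # hypothetical lens separation in meters

# Horizontal shift of the same point between the left and right images.
disparities = np.array([60.0, 30.0, 0.0])
depths = depth_from_disparity(disparities, focal_length_px, baseline_m)
print(depths)
```

Nearby objects such as a hand in front of the glasses produce large disparities and therefore small depths, which is what makes a virtual keyboard at arm's length practical to track.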

The research team adopted deep-learning multi-core technology dedicated to mobile devices. This technology has greatly improved the Glass’s recognition accuracy for images and speech, while shortening the time needed to process and analyze data. In addition, the Glass’s multi-core processor is advanced enough to become idle when it detects no motion from users. Otherwise, it executes complex deep-learning algorithms with minimal power to achieve high performance.

Professor Yoo said, “We have succeeded in fabricating a low-power multi-core processor that consumes only 126 milliwatts of power with a high efficiency rate. It is essential to develop a smaller, lighter, and low-power processor if we want to incorporate the widespread use of smart glasses and wearable devices into everyday life. K-Glass 3’s more intuitive UI and convenient UX permit users to enjoy enhanced AR experiences such as a keyboard or a better, more responsive mouse.”

Along with the research team, UX Factory, a Korean UI and UX developer, participated in the K-Glass 3 project.

These research results, entitled “A 126.1mW Real-Time Natural UI/UX Processor with Embedded Deep-Learning Core for Low-Power Smart Glasses” (lead author: Seong-Wook Park, a doctoral student in the Electrical Engineering Department, KAIST), were presented at the 2016 IEEE (Institute of Electrical and Electronics Engineers) International Solid-State Circuits Conference (ISSCC), which took place January 31-February 4, 2016 in San Francisco, California.

YouTube Link: https://youtu.be/If_anx5NerQ

Figure 1: K-Glass 3

K-Glass 3 is equipped with a stereo camera, dual microphones, a WiFi module, and eight batteries to offer higher recognition accuracy and more enhanced augmented reality experiences than previous models.

Figure 2: Architecture of the Low-Power Multi-Core Processor

K-Glass 3’s processor is designed to include several cores for pre-processing, deep-learning, and graphic rendering.

Figure 3: Virtual Text and Piano Keyboard

K-Glass 3 can detect hands and recognize their movements to provide users with such augmented reality applications as a virtual text or piano keyboard.

2016.02.26

The 2015 Intelligent SoC Robot War Finals

The final round of the 2015 Intelligent SoC (System on Chip) Robot War took place from October 29, 2015 to November 1, 2015 at Kintex in Ilsan, Korea.

A “SoC robot” refers to an intelligent robot capable of autonomous object recognition and decision making by employing advanced semiconductor and information technology.

First hosted in 2002, the Intelligent SoC Robot War cultivates top talents in the field of semiconductors and seeks to revitalize Korea’s information technology (IT) and semiconductor industries.

The event consists of two competitions: HURO and the Tae Kwon Do Robot.

In the HURO competition, participating robots complete eight assignments in sequence without outside control. The robot that finishes the most tasks in the shortest time wins the competition.
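
The ranking rule can be sketched in a few lines of Python. This is only an illustration under an assumed reading of the rule: robots are ordered first by the number of completed assignments, and ties are broken by the shorter elapsed time. The `Run` record, the robot names, and the sample results are hypothetical and do not come from the competition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    robot: str         # hypothetical team or robot label
    tasks_done: int    # assignments completed, out of eight
    seconds: float     # total time spent on the course


def rank_runs(runs):
    """Order runs by most tasks completed, then by shortest time (assumed tie-break)."""
    return sorted(runs, key=lambda r: (-r.tasks_done, r.seconds))


# Made-up sample results for illustration only.
runs = [Run("A", 7, 310.0), Run("B", 8, 420.5), Run("C", 8, 398.2)]
winner = rank_runs(runs)[0]
print(winner.robot)
```

Sorting on a tuple keeps the two criteria in one place; if the organizers weigh time differently, only the key function would need to change.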

At the HURO competition, a SoC robot overcomes obstacles.

The Tae Kwon Do Robot competition brings Korea’s traditional martial art into robotics. Here, the winner is decided by sparring between a pair of competitors. A camera attached to each robot’s head recognizes the position of the opponent and the distance between them, and the robot responds with actions such as punching or kicking.

Two robots are vying for the title of the Tae Kwon Do Robot.

This year, 570 people from 104 teams from all over the nation applied, and after preliminaries, 26 teams entered the finals.

The winners of the HURO and Tae Kwon Do Robot competitions receive awards from the president and prime minister of Korea, respectively.

The Chairman of the Intelligent SoC Robot War, Professor Hoi-Jun Yoo of the Electrical Engineering Department at KAIST, said,

“Korea’s strength in semiconductors and information technology can serve as a great potential to advance the development of intelligent robots. We hope that our experiences in this competition will allow Korea to excel in this field.”

2015.11.01 View 10133

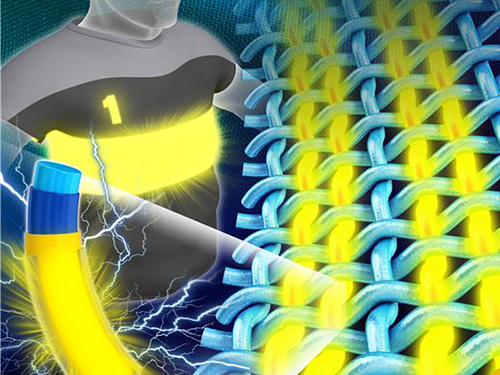

KAIST Develops Fiber-Like Light-Emitting Diodes for Wearable Displays

Professor Kyung-Cheol Choi and his research team from the School of Electrical Engineering at KAIST have developed fiber-like light-emitting diodes (LEDs), which can be applied in wearable displays. The research findings were published online in the July 14th issue of Advanced Electronic Materials.

Traditional wearable displays were manufactured on a hard substrate, which was later attached to the surface of clothes. This technique had limited applications for wearable displays because they were inflexible and ignored the characteristics of fabric.

To solve this problem, the research team discarded the notion of creating light-emitting diode displays on a plane. Instead, they focused on fibers, a component of fabrics, and developed a fiber-like LED that shared the characteristics of both fabrics and displays.

The core of this technology is the dip-coating process, in which a three-dimensional (3-D) rod, a polyethylene terephthalate fiber that functions like thread, is immersed in a solution and then withdrawn. Organic materials then form regular layers on the thread.

The dip-coating process allows layers of organic materials to be created easily on fibers with a 3-D cylindrical structure, which had been difficult with existing processes such as the heat-coating process. By controlling the withdrawal rate of the fiber, the coating's thickness can also be adjusted to the hundreds of thousandths of a nanometer.
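
How the withdrawal rate sets the coating thickness can be illustrated with a textbook scaling. The sketch below assumes the Landau-Levich-Derjaguin relation for a thin fiber pulled slowly out of a liquid, h ≈ 1.34 r Ca^(2/3), with capillary number Ca = ηU/γ. The article does not say which model the team used, the relation describes the wet film rather than the dried organic layer, and every numeric value here is hypothetical.

```python
def wet_film_thickness(radius_m, viscosity_pa_s, surface_tension_n_m, withdrawal_m_s):
    """Landau-Levich-Derjaguin estimate of the wet film left on a thin fiber.

    Assumes a small capillary number and a fiber radius well below the
    capillary length; not taken from the KAIST paper.
    """
    capillary_number = viscosity_pa_s * withdrawal_m_s / surface_tension_n_m
    return 1.34 * radius_m * capillary_number ** (2.0 / 3.0)


# Hypothetical fiber and solution properties, for illustration only.
RADIUS = 150e-6          # fiber radius in meters
VISCOSITY = 1e-3         # solution viscosity in Pa*s
SURFACE_TENSION = 0.03   # surface tension in N/m

for speed in (0.5e-3, 1e-3, 2e-3):  # withdrawal rates in m/s
    h = wet_film_thickness(RADIUS, VISCOSITY, SURFACE_TENSION, speed)
    print(f"{speed * 1e3:.1f} mm/s -> {h * 1e9:.0f} nm wet film")
```

Under this assumed model, thickness grows with the two-thirds power of the withdrawal rate, so doubling the rate thickens the film by a factor of about 1.59.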

The researchers said that this technology would accelerate the commercialization of fiber-based wearable displays because it offers low-cost mass production using roll-to-roll processing, a technology applied to create electronic devices on a roll of flexible plastics or metal foils.

Professor Choi said, “Our research will become a core technology in developing light emitting diodes on fibers, which are fundamental elements of fabrics. We hope we can lower the barrier of wearable displays entering the market.”

The lead author of the published paper, Seon-Il Kwon, added, “This technology will eventually allow the production of wearable displays to be as easy as making clothes.”

Picture 1: The Next Generation Wearable Display Using Fiber-Based Light-Emitting Diodes

Picture 2: Dip-Coating Process to Create Fiber-Based Light-Emitting Diodes

Picture 3: Fiber-Based Light-Emitting Diodes

2015.08.11 View 13840

KAIST Introduces New UI for K-Glass 2

A newly developed user interface, the “i-Mouse,” in the K-Glass 2 tracks the user’s gaze and connects the device to the Internet through eye gestures such as winks. This low-power interface provides smart glasses with an excellent user experience, with a long-lasting battery and augmented reality.

Smart glasses are wearable computers that will likely lead to the growth of the Internet of Things. Currently available smart glasses, however, face a set of obstacles to commercialization, such as short battery life and low energy efficiency. In addition, glasses that use voice commands have raised privacy concerns.

A research team led by Professor Hoi-Jun Yoo of the Electrical Engineering Department at the Korea Advanced Institute of Science and Technology (KAIST) has recently developed an upgraded model of the K-Glass (http://www.eurekalert.org/pub_releases/2014-02/tkai-kdl021714.php) called “K-Glass 2.”

K-Glass 2 detects users’ eye movements to point the cursor at computer icons or objects on the Internet, and uses winks for commands. The researchers call this interface the “i-Mouse,” which removes the need to use hands or voice to control a mouse or touchpad. Like its predecessor, K-Glass 2 also employs augmented reality, displaying in real time the relevant, complementary information in the form of text, 3D graphics, images, and audio over the target objects selected by users.

The research results were presented, and K-Glass 2’s successful operation was demonstrated on-site, at the 2015 Institute of Electrical and Electronics Engineers (IEEE) International Solid-State Circuits Conference (ISSCC) held on February 23-25, 2015 in San Francisco. The title of the paper was “A 2.71nJ/Pixel 3D-Stacked Gaze-Activated Object Recognition System for Low-power Mobile HMD Applications” (http://ieeexplore.ieee.org/Xplore/home.jsp).

The i-Mouse is a new user interface for smart glasses in which the gaze-image sensor (GIS) and object recognition processor (ORP) are stacked vertically to form a small chip. When light from three infrared LEDs (light-emitting diodes) built into the K-Glass 2 is projected into the user’s eyes, the GIS recognizes their focal point and estimates the possible locations of the gaze as the user glances over the display screen. Then the electro-oculography sensor embedded in the nose pads reads the user’s eyelid movements, for example, winks, to click the selection. It is worth noting that the ORP is wired to operate only within the region of interest (ROI) selected by the user. This results in a significant saving of battery life. Compared to the previous ORP chips, this chip uses 3.4 times less power, consuming on average 75 milliwatts (mW), thereby helping K-Glass 2 to run for almost 24 hours on a single charge.
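
The power figures above lend themselves to a quick arithmetic check. The sketch below assumes, for illustration only, that the chip’s 75 mW average draw is the sole load on the battery; the function names are hypothetical, and the article gives no battery capacity or figures for the display and other components.

```python
def energy_wh(power_mw, hours):
    """Energy in watt-hours drawn by a constant load of power_mw over the given hours."""
    return power_mw / 1000.0 * hours


def previous_power_mw(current_mw, reduction_factor):
    """Power of the earlier chip implied by an 'N times less power' claim."""
    return current_mw * reduction_factor


# Figures quoted in the article: 75 mW average, 3.4x reduction, about 24 hours.
chip_energy = energy_wh(75, 24)           # about 1.8 Wh for the chip alone
older_chip = previous_power_mw(75, 3.4)   # about 255 mW implied for earlier ORP chips

print(f"Chip energy over 24 h: {chip_energy:.2f} Wh")
print(f"Implied earlier chip power: {older_chip:.0f} mW")
```

At the implied earlier power level, the same energy budget would last roughly 24 / 3.4, or about 7 hours, which is consistent with the battery-life gain the article attributes to the new chip.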

Professor Yoo said, “The smart glass industry will surely grow as we see the Internet of Things becomes commonplace in the future. In order to expedite the commercial use of smart glasses, improving the user interface (UI) and the user experience (UX) are just as important as the development of compact-size, low-power wearable platforms with high energy efficiency. We have demonstrated such advancement through our K-Glass 2. Using the i-Mouse, K-Glass 2 can provide complicated augmented reality with low power through eye clicking.”

Professor Yoo and his doctoral student, Injoon Hong, conducted this research under the sponsorship of the Brain-mimicking Artificial Intelligence Many-core Processor project by the Ministry of Science, ICT and Future Planning in the Republic of Korea.

YouTube Link: https://www.youtube.com/watch?v=JaYtYK9E7p0&list=PLXmuftxI6pTW2jdIf69teY7QDXdI3Ougr

Picture 1: K-Glass 2

K-Glass 2 can detect eye movements and click computer icons via users’ winking.

Picture 2: Object Recognition Processor Chip

This picture shows a gaze-activated object-recognition system.

Picture 3: Augmented Reality Integrated into K-Glass 2

Users receive additional visual information overlaid on the objects they select.

2015.03.13 View 17442

News Article: Flexible, High-performance Nonvolatile Memory Developed with SONOS Technology

Professor Yang-Kyu Choi of KAIST’s Department of Electrical Engineering and his team presented a research paper entitled “Flexible High-performance Nonvolatile Memory by Transferring GAA Silicon Nanowire SONOS onto a Plastic Substrate” at the International Electron Devices Meeting, which took place on December 15-17, 2014 in San Francisco.

The Electronic Engineering Journal (http://www.eejournal.com/) recently posted an article on the paper:

Electronic Engineering Journal, February 2, 2015

“A Flat-Earth Memory”

Another Way to Make the Brittle Flexible

http://www.techfocusmedia.net/archives/articles/20150202-flexiblegaa/?printView=true

2015.02.03 View 8469

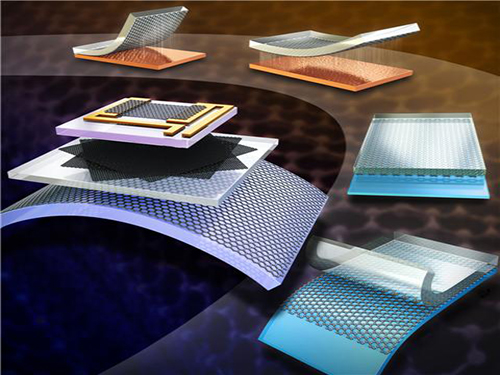

KAIST Develops a Method to Transfer Graphene by Stamping

Professor Sung-Yool Choi’s research team from KAIST's Department of Electrical Engineering has developed a technique that can produce a single-layer graphene from a metal etching. Through this, transferring a graphene layer onto a circuit board can be done as easily as stamping a seal on paper.

The research findings were published in the January 14th issue of Small as the lead article.

This technology will allow different types of transfer, such as transfer onto the surface of a device or a curved surface, and large-surface transfer onto a 4-inch wafer. It will be applied in the field of wearable smart gadgets through commercialization of graphene electronic devices.

The traditional method used to transfer graphene onto a circuit board is a wet transfer. However, it has some drawbacks, as the graphene layer can be damaged or contaminated by residue from the metal etching during the transfer process. This may affect the electrical properties of the transferred graphene.

After a graphene growth substrate formed on a catalytic metal substrate is pretreated in an aqueous polyvinyl alcohol (PVA) solution, a PVA film forms on the pretreated substrate. The substrate and the graphene layers bond strongly. The graphene is lifted from the growth substrate by means of an elastomeric stamp.

The delaminated graphene layer is in an isolated state from the elastomeric stamp and thus can be freely transferred onto a circuit board. As the catalytic metal substrate can be reused and does not contain harmful chemical substances, this transfer method is very eco-friendly.

Professor Choi said, “As the new graphene transfer method has a wide range of applications and allows a large surface transfer, it will contribute to the commercialization of graphene electronic devices.” He added that “because this technique has a high degree of freedom in transfer process, it has a variety of usages for graphene and 2 dimensional nano-devices.”

This research was sponsored by the Ministry of Science, ICT and Future Planning, the Republic of Korea.

Figure 1. Cover photo of the journal Small which illustrates the research findings

Figure 2. Top view of the graphene layer transferred through the new method

Figure 3. Large surface transfer of graphene

2015.01.23 View 12347

The 2014 Wearable Computer Competition Takes Place at KAIST

“This is a smart wig for patients who are reluctant to go outdoors because their hair is falling out from cancer treatment.”

Jee-Hoon Lee, a graduate student from Sungkyunkwan University, enthusiastically explained his project at the KAIST KI Building, where the 2014 Wearable Computer Competition was held. He said, “The sensor embedded inside the wig monitors the heart rate and the body temperature, and during an emergency, the device warns the patient about the situation. The product emphasizes two aspects: it notifies the patient in emergency situations, and it encourages patients to perform outdoor activities by enhancing their looks.”

The tenth anniversary meeting of the Wearable Computer Competition took place at the KAIST campus on November 13-14, 2014.

A wearable computer is a mobile device designed to be put on the body or clothes so that a user can comfortably use it while walking. Recently, these devices that are able to support versatile internet-based services through smartphones are receiving a great deal of attention.

Wearable devices have been employed in two categories: health checks and information-entertainment. In this year’s competition, six healthcare products and nine information-entertainment products were exhibited.

Among these products, participants favored a smart helmet for motorcycle drivers. The driver can view the rear-camera feed, the smartphone’s navigation screen, and text messages on a screen installed in the front glass of the helmet. Another product was a uniform that can control presentation slides by means of motion detection and voice recognition technology. Yet another popular device was an insole that guides travelers to their destination with the help of motion sensors.

The chairman of the competition, Professor Hoi-Jun Yoo from the Department of Electrical Engineering at KAIST, said, “Wearable devices such as smart watches, glasses, and clothes are gaining interest these days. Through this event, people will have a chance to look at the creativity of our students through the display of their wearable devices. In turn, these devices will advance computer technology.”

The third annual wearable computer workshop on convergence technology of wearable computers followed the competition. In the workshop, experts from leading information technology companies such as Samsung Electronics, LG Electronics, and KT Corporation addressed the convergence technology of wearable computers and trends in the field.

2014.11.19 View 11669

The 2014 SoC Robot Competition Took Place

Professor Hoi-Jun Yoo of the Department of Electrical Engineering at KAIST and his research team hosted a competition for miniature robots with artificial intelligence at KINTEX in Ilsan, Korea, on October 23-26, 2014.

The competition, called the 2014 SoC Robot War, showed the latest developments of semiconductor and robot technology through the robots’ presentations of the Korean martial art, Taekwondo, and hurdles race. SoC is a system on ship, an integrated circuit that holds all components of a computer or other electronic systems in a single chip. SoC robots are equipped with an artificial intelligence system, and therefore, can recognize things on their own or respond automatically to environmental changes. SoC robots are developed with the integration of semiconductor technology and robotics engineering.

Marking its thirteenth year since its inception, the Robot War featured two competitions: HURO and Taekwon Robot. In the HURO competition, participating robots were required to run a hurdle race, pass through barricades, and cross a bridge. The winning team received an award from the president of the Republic of Korea. Robots participating in the Taekwon Robot competition performed some of the main movements of Taekwondo, such as front and side kicks and fist techniques. The winning team received an award from the prime minister of the Republic of Korea.

A total of 105 teams with 530 students and researchers from universities across the country participated in the preliminaries, and 30 teams qualified for the final competition.

2014.10.27 View 9638
