locomotion

KAIST debuts “DreamWaQer” - a quadrupedal robot that can walk in the dark

- The team led by Professor Hyun Myung of the School of Electrical Engineering developed “DreamWaQ”, a deep reinforcement learning-based control technology that lets walking robots traverse atypical environments without visual and/or tactile information

- “DreamWaQ” technology can enable mass production of various types of “DreamWaQers”

- Expected to be used for exploring atypical environments under unusual circumstances, such as fire disasters

A team of Korean engineering researchers has developed a quadrupedal robot technology that can climb up and down steps and move over uneven terrain, such as tree roots, without falling over. It does so without the help of visual or tactile sensors, even in disaster situations where darkness or thick smoke from flames blocks vision.

KAIST (President Kwang Hyung Lee) announced on the 29th of March that Professor Hyun Myung's research team at the Urban Robotics Lab in the School of Electrical Engineering developed a walking robot control technology that enables robust 'blind locomotion' in various atypical environments.

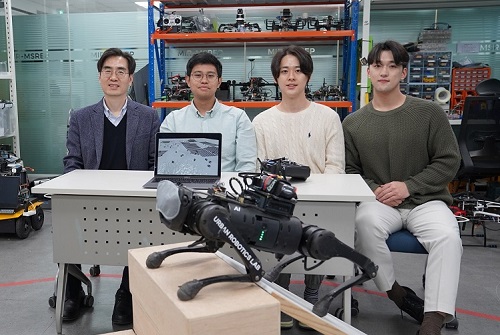

< (From left) Prof. Hyun Myung, Doctoral Candidates I Made Aswin Nahrendra, Byeongho Yu, and Minho Oh. In the foreground is the DreamWaQer, a quadrupedal robot equipped with DreamWaQ technology. >

The KAIST research team named the technology "DreamWaQ" because it enables walking robots to move about even in the dark, just as a person fresh out of bed can walk to the bathroom in the dark without visual help. With this technology installed on any legged robot, it will be possible to create various types of "DreamWaQers".

Existing walking robot controllers are based on kinematics and/or dynamics models, an approach known as model-based control. In atypical environments such as open, uneven fields in particular, such a controller must obtain the features of the terrain quickly to keep the robot stable as it walks. However, this approach has been shown to depend heavily on the robot's ability to perceive the surrounding environment.

In contrast, the controller developed by Professor Hyun Myung's research team is based on deep reinforcement learning (RL) and can quickly calculate appropriate control commands for each motor of the walking robot, using data from various environments obtained in a simulator. Whereas existing controllers learned in simulation required a separate tuning step to work on an actual robot, this controller is expected to be easily applied to various walking robots because it does not require an additional tuning process.

DreamWaQ, the controller developed by the research team, consists mainly of a context estimation network that estimates ground and robot information and a policy network that computes control commands. The context-aided estimator network estimates the ground information implicitly and the robot’s state explicitly from inertial and joint information. These estimates are fed into the policy network to generate optimal control commands. Both networks are trained together in simulation.

While the context-aided estimator network is trained through supervised learning, the policy network is trained with an actor-critic architecture, a deep RL methodology. The actor network can only infer the surrounding terrain implicitly. In simulation, however, the surrounding terrain is known, so the critic (the value network), which has access to the exact terrain information, evaluates the policy of the actor network.
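The division of inputs between actor and critic described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the team's implementation: every layer size, the choice of body velocity as the explicit estimate, and the helper names (`make_mlp`, `actor_step`, `critic_value`, `estimator_loss`) are hypothetical. The article does not say how the implicit part of the estimate is trained, so the sketch only shows a supervised loss for the explicit part.

```python
import math
import random

random.seed(0)

# All sizes are illustrative assumptions, not values from the DreamWaQ paper.
OBS_DIM = 30       # proprioception: IMU readings plus joint measurements
HISTORY = 5        # past observations seen by the estimator
VEL_DIM = 3        # explicit estimate (assumed here to be body velocity)
LATENT_DIM = 8     # implicit terrain/context code
TERRAIN_DIM = 50   # exact terrain heights, available only in simulation
ACT_DIM = 12       # one command per motor


def make_mlp(sizes):
    """Random weights for a small fully connected network: one (W, b) per layer."""
    return [
        ([[random.gauss(0, 0.1) for _ in range(n_out)] for _ in range(n_in)], [0.0] * n_out)
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]


def forward(layers, x):
    """Apply tanh hidden layers followed by a linear output layer."""
    for i, (w, b) in enumerate(layers):
        x = [sum(xi * w[r][c] for r, xi in enumerate(x)) + b[c] for c in range(len(b))]
        if i < len(layers) - 1:
            x = [math.tanh(v) for v in x]
    return x


estimator = make_mlp([OBS_DIM * HISTORY, 64, VEL_DIM + LATENT_DIM])
actor = make_mlp([OBS_DIM + VEL_DIM + LATENT_DIM, 64, ACT_DIM])
critic = make_mlp([OBS_DIM + TERRAIN_DIM, 64, 1])


def actor_step(obs_history):
    """Deployable path: proprioception only -> estimate -> motor commands."""
    flat = [v for obs in obs_history for v in obs]
    estimate = forward(estimator, flat)
    action = forward(actor, obs_history[-1] + estimate)
    return action, estimate


def critic_value(obs, terrain_heights):
    """Training-only path: the critic also sees the exact simulated terrain."""
    return forward(critic, obs + terrain_heights)[0]


def estimator_loss(estimate, true_velocity):
    """Supervised target for the explicit part: mean squared error."""
    return sum((e - t) ** 2 for e, t in zip(estimate[:VEL_DIM], true_velocity)) / VEL_DIM


obs_history = [[random.gauss(0, 1) for _ in range(OBS_DIM)] for _ in range(HISTORY)]
terrain_heights = [random.gauss(0, 0.05) for _ in range(TERRAIN_DIM)]
action, estimate = actor_step(obs_history)
value = critic_value(obs_history[-1], terrain_heights)
loss = estimator_loss(estimate, [0.5, 0.0, 0.0])
```

The point of the split is that `critic_value` is needed only while training in simulation, so nothing on the deployable path ever requires terrain measurements.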

The whole training process takes only about an hour on a GPU-enabled PC, and the actual robot is equipped with only the trained actor network. Without looking at the surrounding terrain, the robot uses only its internal inertial measurement unit (IMU) and joint angle measurements to imagine which of the environments learned in simulation the current one resembles. If it suddenly encounters a height change, such as a staircase, it does not know until its foot touches the step, but it quickly builds up terrain information the moment the foot touches the surface. A control command suited to the estimated terrain is then transmitted to each motor, enabling the gait to adapt rapidly.
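A rough sketch of such a blind control loop is shown below. It is a hypothetical illustration only: the sensor layout, the history length, and the functions `read_proprioception` and `policy` are placeholders standing in for the real robot interface and the trained network, neither of which is described in this article.

```python
import math
from collections import deque

HISTORY = 5       # assumed number of recent observations kept
NUM_JOINTS = 12   # assumed: three joints per leg


def read_proprioception(step):
    """Hypothetical sensor read: six IMU values followed by joint angles."""
    imu = [0.0, 0.0, 0.0, 0.0, 0.0, -1.0]
    joint_angles = [0.1 * math.sin(0.05 * step + j) for j in range(NUM_JOINTS)]
    return imu + joint_angles


def policy(obs_history):
    """Placeholder for the trained network; returns one command per motor."""
    latest_joint_angles = obs_history[-1][-NUM_JOINTS:]
    return [-0.5 * q for q in latest_joint_angles]


def run_control_loop(num_steps):
    """Feed a rolling window of proprioception to the policy at every step."""
    history = deque(maxlen=HISTORY)
    commands = []
    for step in range(num_steps):
        history.append(read_proprioception(step))
        if len(history) < HISTORY:
            # Not enough history yet: hold a neutral command.
            commands.append([0.0] * NUM_JOINTS)
        else:
            commands.append(policy(list(history)))
    return commands


commands = run_control_loop(20)
```

No camera or contact sensor appears anywhere in the loop. A foot striking a step would show up only as a change in the IMU and joint readings inside the rolling window, which is the signal the controller has to react to.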

The DreamWaQer robot walked not only in the laboratory but also outdoors around the campus, over many curbs and speed bumps and across a field with many tree roots and gravel. It also demonstrated its abilities by climbing a staircase with a height difference of two-thirds of its body height. In addition, the research team confirmed that it walked stably regardless of the environment, at speeds ranging from a slow 0.3 m/s to a rather fast 1.0 m/s.

The study was carried out by doctoral student I Made Aswin Nahrendra as first author, with his colleague Byeongho Yu as a co-author. It has been accepted for presentation at the upcoming IEEE International Conference on Robotics and Automation (ICRA), scheduled to be held in London at the end of May. (Paper title: DreamWaQ: Learning Robust Quadrupedal Locomotion With Implicit Terrain Imagination via Deep Reinforcement Learning)

Videos of the walking robot DreamWaQer equipped with DreamWaQ can be found at the addresses below.

Main Introduction: https://youtu.be/JC1_bnTxPiQ

Experiment Sketches: https://youtu.be/mhUUZVbeDA0

This research was supported by the Robot Industry Core Technology Development Program of the Ministry of Trade, Industry and Energy (MOTIE). (Task title: Development of Mobile Intelligence SW for Autonomous Navigation of Legged Robots in Dynamic and Atypical Environments for Real Application)

< Figure 1. Overview of DreamWaQ, the controller developed by the research team. It consists of an estimator network that learns implicit and explicit estimates together, a policy network that acts as the controller, and a value network that guides the policy during training. When deployed on a real robot, only the estimator and policy networks are used. Both networks run in less than 1 ms on the robot's on-board computer. >

< Figure 2. Since the estimator can implicitly estimate the ground information as the foot touches the surface, it is possible to adapt quickly to rapidly changing ground conditions. >

< Figure 3. Results showing that even a small walking robot was able to overcome steps with height differences of about 20 cm. >

2023.05.18 View 11957

KAIST’s Robo-Dog “RaiBo” runs through the sandy beach

KAIST (President Kwang Hyung Lee) announced on the 25th that a research team led by Professor Jemin Hwangbo of the Department of Mechanical Engineering developed a quadrupedal robot control technology that enables robust, agile walking even on deformable terrain such as a sandy beach.

< Photo. RAI Lab Team with Professor Hwangbo in the middle of the back row. >

Professor Hwangbo's research team developed a technology that models the force a walking robot receives from ground made of granular materials such as sand and simulates it for a quadrupedal robot. The team also designed an artificial neural network structure suited to making the real-time decisions needed to adapt to various types of ground without prior information while walking, and applied it to reinforcement learning. The trained neural network controller is expected to expand the scope of application of quadrupedal walking robots by proving its robustness on changing terrain, such as moving at high speed on a sandy beach and walking and turning on soft ground like an air mattress without losing balance.

This research, with Ph.D. student Suyoung Choi of the KAIST Department of Mechanical Engineering as first author, was published in January in Science Robotics. (Paper title: Learning quadrupedal locomotion on deformable terrain)

Reinforcement learning is an AI learning method in which a machine collects data on the results of various actions in arbitrary situations and uses that data to learn to perform a task. Because reinforcement learning requires a vast amount of data, the data is widely collected through simulations that approximate physical phenomena in the real environment.

In particular, learning-based controllers for walking robots have been trained on data collected in simulation and then applied in real environments, where they successfully perform walking control on various terrains.

However, because the performance of a learning-based controller degrades rapidly when the actual environment differs from the simulated environment it learned in, it is important to build an environment similar to the real one at the data collection stage. Therefore, to create a learning-based controller that can maintain balance on deforming terrain, the simulator must provide a similar contact experience.

The research team defined a contact model that predicts the force generated upon contact from the motion dynamics of the walking body. It is based on a ground reaction force model from previous studies that considers the additional mass effect of granular media.

Furthermore, by calculating the force generated from one or several contacts at each time step, the team simulated the deforming terrain efficiently.
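To make the idea of a per-contact force model concrete, here is a deliberately simplified sketch. It is not the team's contact model. It assumes a point contact, a depth-proportional resistance, an additional mass term reduced to a force proportional to the square of the downward speed, and sliding Coulomb friction in the horizontal plane. All coefficients and the function names `contact_force` and `total_contact_force` are made up for illustration.

```python
import math

# Illustrative parameters only, not values from the paper.
STIFFNESS = 2.0e4        # N/m, depth-proportional resistance of the sand
ADDED_MASS_COEF = 40.0   # kg/m, growth of entrained sand mass with depth
FRICTION_MU = 0.6        # Coulomb friction coefficient


def contact_force(foot_pos, foot_vel, ground_height=0.0):
    """Return the (x, y, z) force on one point-contact foot in a granular medium."""
    depth = ground_height - foot_pos[2]
    if depth <= 0.0:
        return (0.0, 0.0, 0.0)  # foot is above the surface

    # Only downward motion pushes additional sand along with the foot.
    v_down = max(0.0, -foot_vel[2])
    # Vertical force: static resistance plus momentum given to entrained sand.
    f_normal = STIFFNESS * depth + ADDED_MASS_COEF * v_down ** 2

    # Horizontal force: Coulomb friction opposing the sliding direction.
    speed = math.hypot(foot_vel[0], foot_vel[1])
    if speed < 1e-9:
        return (0.0, 0.0, f_normal)
    scale = -FRICTION_MU * f_normal / speed
    return (scale * foot_vel[0], scale * foot_vel[1], f_normal)


def total_contact_force(feet_pos, feet_vel):
    """Sum the forces from one or several contacts at a single time step."""
    forces = [contact_force(p, v) for p, v in zip(feet_pos, feet_vel)]
    if not forces:
        return (0.0, 0.0, 0.0)
    return tuple(sum(axis) for axis in zip(*forces))


# One foot 1 cm deep, sliding forward while sinking; one foot in the air.
force = total_contact_force(
    [(0.0, 0.0, -0.01), (0.3, 0.0, 0.05)],
    [(1.0, 0.0, -0.5), (0.0, 0.0, 0.0)],
)
```

Because each contact is evaluated independently from the foot's position and velocity, the cost per time step grows only with the number of feet in contact, which is what makes this style of model cheap enough for large-scale RL data collection.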

The research team also introduced an artificial neural network structure that implicitly predicts ground characteristics by using a recurrent neural network that analyzes time-series data from the robot's sensors.
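As a minimal sketch of how a recurrent network can summarize a sensor time series into an implicit ground descriptor, the example below runs a hand-written GRU cell over two synthetic sequences. The GRU is an assumed choice, since the article only says "recurrent neural network". The weights are random rather than trained, and the dimensions and the names `gru_step` and `encode` are hypothetical.

```python
import math
import random

random.seed(0)

SENSOR_DIM = 6   # assumed size of one sensor reading
HIDDEN_DIM = 4   # assumed size of the implicit ground descriptor
GATES = ("update", "reset", "cand")


def rand_matrix(rows, cols):
    return [[random.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]


# Untrained random weights; a real controller would learn these.
W = {g: rand_matrix(SENSOR_DIM, HIDDEN_DIM) for g in GATES}
U = {g: rand_matrix(HIDDEN_DIM, HIDDEN_DIM) for g in GATES}


def affine(x, h, w, u):
    """Compute x @ w + h @ u for plain Python lists."""
    return [
        sum(xi * w[i][j] for i, xi in enumerate(x))
        + sum(hi * u[i][j] for i, hi in enumerate(h))
        for j in range(HIDDEN_DIM)
    ]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gru_step(h, x):
    """One GRU update: gates decide how much of the old state to keep."""
    z = [sigmoid(v) for v in affine(x, h, W["update"], U["update"])]
    r = [sigmoid(v) for v in affine(x, h, W["reset"], U["reset"])]
    gated_h = [ri * hi for ri, hi in zip(r, h)]
    cand = [math.tanh(v) for v in affine(x, gated_h, W["cand"], U["cand"])]
    return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]


def encode(sequence):
    """Fold a whole sensor time series into one hidden state."""
    h = [0.0] * HIDDEN_DIM
    for x in sequence:
        h = gru_step(h, x)
    return h


# Two synthetic sequences standing in for readings on different ground.
firm = [[math.sin(0.3 * t + k) for k in range(SENSOR_DIM)] for t in range(40)]
soft = [[0.3 * v for v in x] for x in firm]
h_firm, h_soft = encode(firm), encode(soft)
```

The hidden state is never told what the ground is. It differs between the two sequences only because the sensor histories differ, which is the sense in which such a network predicts ground characteristics implicitly.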

The learned controller was mounted on the robot 'RaiBo', which the research team built in-house, and demonstrated high-speed walking of up to 3.03 m/s on a sandy beach where the robot's feet were completely submerged in the sand. Even on harder ground, such as grassy fields and a running track, it ran stably by adapting to the characteristics of the ground without any additional programming or revision of the control algorithm.

In addition, it rotated stably at 1.54 rad/s (approximately 90° per second) on an air mattress and demonstrated quick adaptability even when the terrain suddenly turned soft.

By comparing against a controller that assumed the ground to be rigid, the research team demonstrated the importance of providing a suitable contact experience during learning. The team also showed that the proposed recurrent neural network modifies the controller's walking method according to the ground properties.

The simulation and learning methodology developed by the research team is expected to contribute to robots performing practical tasks as it expands the range of terrains that various walking robots can operate on.

The first author, Suyoung Choi, said, “It has been shown that providing a learning-based controller with a contact experience close to that of real deforming ground is essential for application to deforming terrain.” He added, “The proposed controller can be used without prior information on the terrain, so it can be applied to various robot walking studies.”

This research was carried out with the support of the Samsung Research Funding & Incubation Center of Samsung Electronics.

< Figure 1. Adaptability of the proposed controller to various ground environments. The controller, trained on a wide range of randomized granular media simulations, adapted to various natural and artificial terrains and demonstrated high-speed walking ability and energy efficiency. >

< Figure 2. Contact model definition for simulation of granular substrates. The research team used a model that considered the additional mass effect for the vertical force and a Coulomb friction model for the horizontal direction while approximating the contact with the granular medium as occurring at a point. Furthermore, a model that simulates the ground resistance that can occur on the side of the foot was introduced and used for simulation. >

2023.01.26 View 17115
