Researchers find a way to reduce the overheating of semiconductor devices

As semiconductor devices continue to shrink, heat generated at their hot spots is not dispersed effectively, which degrades the reliability and durability of modern devices. Existing thermal management technologies have not kept up with this problem. A new way of dispersing heat using surface waves generated on thin metal films deposited on a substrate is therefore an important breakthrough.

KAIST (President Kwang Hyung Lee) announced that Professor Bong Jae Lee's research team in the Department of Mechanical Engineering has, for the first time in the world, measured a newly observed mode of heat transfer induced by surface plasmon polaritons (SPPs) in a thin metal film deposited on a substrate.

☞ A surface plasmon polariton (SPP) is a surface wave that forms at a metal surface as a result of strong coupling between the electromagnetic field at the metal-dielectric interface and the free electrons (and similar charge carriers) oscillating collectively at the metal surface.

The research team used SPPs, surface waves generated at the metal-dielectric interface, to enhance thermal diffusion in nanoscale thin metal films. Because this new heat transfer mode arises simply when a thin metal film is deposited on a substrate, it fits readily into existing device manufacturing processes and can be implemented over a large area. The team showed that surface waves generated over a 100-nm-thick titanium (Ti) film with a radius of about 3 cm increased its thermal conductivity by about 25%.
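
To give a sense of why SPPs can carry energy far along a film, the sketch below evaluates the textbook dispersion relation for a single flat interface between a semi-infinite metal and a semi-infinite dielectric, beta = k0 * sqrt(eps_m * eps_d / (eps_m + eps_d)), and the resulting 1/e intensity propagation length L = 1 / (2 Im beta). This is a simplification under stated assumptions: the study's 100-nm Ti film on a substrate supports coupled modes that this single-interface formula does not capture, the exp(-i*omega*t) convention (positive Im eps for a lossy metal) is assumed, and the permittivity in the example is a hypothetical mid-infrared value, not measured data for titanium.

```python
import cmath
import math


def spp_wavevector(wavelength_m: float, eps_metal: complex, eps_dielectric: float) -> complex:
    """Complex in-plane SPP wavevector for ONE flat metal/dielectric interface.

    beta = k0 * sqrt(eps_m * eps_d / (eps_m + eps_d)), with k0 = 2*pi / wavelength.
    Assumes the exp(-i*omega*t) convention, so a lossy metal has Im(eps_m) > 0.
    """
    k0 = 2.0 * math.pi / wavelength_m
    return k0 * cmath.sqrt(eps_metal * eps_dielectric / (eps_metal + eps_dielectric))


def propagation_length(beta: complex) -> float:
    """Distance over which SPP intensity falls to 1/e: L = 1 / (2 * Im(beta))."""
    return 1.0 / (2.0 * beta.imag)


if __name__ == "__main__":
    # Hypothetical inputs: 10 um wavelength, air above, a made-up lossy metal permittivity.
    beta = spp_wavevector(10e-6, complex(-1000.0, 300.0), 1.0)
    print(f"Re(beta)/k0 = {beta.real / (2 * math.pi / 10e-6):.5f}")
    print(f"propagation length = {propagation_length(beta) * 1e3:.2f} mm")
```

With these made-up inputs the propagation length comes out at several millimetres, vastly longer than the film thickness, which is the intuition behind a heat channel that benefits from a large film area.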

KAIST Professor Bong Jae Lee, who led the research, said, "The significance of this research is that a new heat transfer mode using surface waves over a thin metal film deposited on a substrate, which is easy to process, was identified for the first time in the world. It can be applied as a nanoscale heat spreader to efficiently dissipate heat near the hot spots of semiconductor devices that are prone to overheating."

The result has significant implications for developing future high-performance semiconductor devices, since it can be applied to rapidly dissipate heat in nanoscale thin films. In particular, the thermal conductivity of a thin film usually decreases as the film gets thinner because of boundary scattering, whereas this new heat transfer mode becomes even more effective at nanoscale thickness. The team therefore expects it to help solve the fundamental problem of thermal management in semiconductor devices.
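
The boundary-scattering penalty mentioned above can be illustrated with a deliberately crude model: kinetic theory plus Matthiessen's rule, treating the film thickness as an extra scattering length. This is an assumption chosen for clarity, not the model used in the study (more careful treatments such as Fuchs-Sondheimer theory exist), and the material parameters in the example are hypothetical. It shows only why the conventional conductivity of a film drops as it thins; the SPP channel the team measured would be an additional contribution on top of this.

```python
def film_conductivity(k_bulk: float, mfp_bulk_m: float, thickness_m: float) -> float:
    """Crude in-plane thermal conductivity of a thin film limited by boundary scattering.

    Kinetic theory gives k proportional to the carrier mean free path (MFP).
    Matthiessen's rule caps the MFP with the film thickness d:
        1 / mfp_eff = 1 / mfp_bulk + 1 / d
    """
    if thickness_m <= 0 or mfp_bulk_m <= 0:
        raise ValueError("thickness and bulk mean free path must be positive")
    mfp_eff = 1.0 / (1.0 / mfp_bulk_m + 1.0 / thickness_m)
    return k_bulk * mfp_eff / mfp_bulk_m


if __name__ == "__main__":
    # Hypothetical material: k_bulk = 20 W/(m*K), bulk MFP = 20 nm.
    for d_nm in (1000, 100, 10):
        k = film_conductivity(20.0, 20e-9, d_nm * 1e-9)
        print(f"d = {d_nm:5d} nm -> k = {k:.2f} W/(m*K)")
```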

This study was published online on April 26 in 'Physical Review Letters' and was selected as an Editors' Suggestion. The research was carried out with support from the Basic Research Laboratory Support Program of the National Research Foundation of Korea.

< Figure. Schematic diagram of the principle for measuring the thermal conductivity of thin titanium (Ti) films, and the SPP thermal conductivity measured on the Ti film >

2023.06.01

'Jumping Genes' Found to Alter Human Colon Genomes, Offering Insights into Aging and Tumorigenesis

The Korea Advanced Institute of Science and Technology (KAIST) and its collaborators have conducted a groundbreaking study of 'jumping genes' across the whole genomes of cells from the human large intestine. Published in Nature on May 18, 2023, the research reveals surprising activity of Long interspersed nuclear element-1 (L1), a type of jumping gene previously thought to be mostly dormant in the human genome. The study shows that L1 elements can become activated and disrupt genomic function over an individual's lifetime, particularly in the colorectal epithelium.

(Paper Title: Widespread somatic L1 retrotransposition in normal colorectal epithelium, https://www.nature.com/articles/s41586-023-06046-z)

There are approximately 500,000 copies of L1 in the human genome, accounting for about 17% of its sequence, and they have long been recognized for contributing to human evolution by introducing 'disruptive innovation' into genome sequences. Until now, most L1 elements were believed to have lost their ability to jump in the normal tissues of modern humans. However, this study reveals that some L1 elements can be widely activated in normal cells, leading to the accumulation of genomic mutations over an individual's lifetime. The rate of L1 jumping, and the resulting genomic changes, vary among cell types, with a notable concentration in aged colonic epithelial cells. The study shows that, on average, a colonic epithelial cell experiences one L1 jumping event by the age of 40.
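
Two back-of-the-envelope readings of the figures above are sketched below. The first divides the L1 share of the genome by the copy number to get an average copy length, assuming a haploid genome of about 3.1 billion base pairs (a figure not given in the article). The second treats L1 jumps in a cell lineage as a constant-rate Poisson process, a toy assumption made only to show what "one event by age 40 on average" implies for a single cell; the study itself does not claim this model.

```python
import math

GENOME_BP = 3.1e9      # approximate haploid human genome size (assumption, not from the article)
L1_COPIES = 500_000    # "approximately 500,000" copies (from the article)
L1_FRACTION = 0.17     # "17% of the human genome" (from the article)


def mean_l1_copy_length_bp() -> float:
    """Average length of an L1 copy implied by the article's two figures."""
    return L1_FRACTION * GENOME_BP / L1_COPIES


def prob_at_least_one_event(rate_per_year: float, age_years: float) -> float:
    """P(at least one event by a given age) if events form a constant-rate Poisson process."""
    return 1.0 - math.exp(-rate_per_year * age_years)


if __name__ == "__main__":
    print(f"mean L1 copy length ~ {mean_l1_copy_length_bp():.0f} bp")
    rate = 1.0 / 40.0  # one event per cell by age 40 on average (illustrative reading)
    print(f"P(>=1 event by 40) = {prob_at_least_one_event(rate, 40.0):.2f}")
```

The average of roughly 1 kb is far shorter than a full-length L1 of about 6 kb, consistent with most copies being truncated and unable to jump. Under the toy Poisson model, an average of one event per cell by age 40 leaves about 63% of cells with at least one event.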

The research, led by co-first authors Chang Hyun Nam (a graduate student at KAIST) and Dr. Jeonghwan Youk (a former graduate student at KAIST and now an assistant clinical professor at Seoul National University Hospital), analyzed whole-genome sequences from 899 single cells obtained from skin (fibroblasts), blood, and colon epithelial tissues collected from 28 individuals. The study uncovers the activation of L1 elements in normal cells, resulting in the gradual accumulation of genomic mutations over time. The team also examined epigenomic (DNA methylation) data to understand the mechanism behind L1 activation. They found that cells with activated L1 elements exhibit epigenetic instability, suggesting that epigenetic changes play a critical role in regulating L1 activity. Most of these epigenetic instabilities were found to arise during the early stages of embryogenesis. The study provides valuable insights into aging and the development of disease in human colorectal tissues.

"This study illustrates that genomic damage in normal cells is acquired not only through exposure to carcinogens but also through the activity of endogenous components whose impact was previously unclear. Genomes of apparently healthy aged cells, particularly in the colorectal epithelium, become mosaic due to the activity of L1 jumping genes," said Prof. Young Seok Ju at KAIST.

"We emphasize the essential and ongoing collaboration among researchers in clinical medicine and basic medical sciences," said Prof. Min Jung Kim of the Department of Surgery at Seoul National University Hospital. "This case highlights the critical role of systematically collected human tissues from clinical settings in unraveling the complex process of disease development in humans."

"I am delighted that the research team's advancements in single-cell genome technology have come to fruition. We will persistently strive to lead in single-cell genome technology," said Prof. Hyun Woo Kwon of the Department of Nuclear Medicine at Korea University School of Medicine.

The research team received support from the Research Leader Program and the Young Researcher Program of the National Research Foundation of Korea, a grant from the MD-PhD/Medical Scientist Training Program through the Korea Health Industry Development Institute, and the Suh Kyungbae Foundation.

< Figure 1. Experimental design of the study >

< Figure 2. Schematic diagram illustrating factors influencing the soL1R landscape. >

The genetic composition of retrotransposition-competent L1s (rc-L1s) is inherited from the parents. The methylation landscape of rc-L1 promoters is predominantly determined by global DNA demethylation followed by remethylation during development. When an rc-L1 promoter is demethylated in a specific cell lineage, the source element expresses L1 transcripts, making somatic L1 retrotransposition events (soL1Rs) possible.
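
The caption's logic can be restated as a small rule: a lineage can produce soL1Rs only if it inherited at least one rc-L1 and that source's promoter is demethylated in the lineage. The sketch below encodes just that rule; the class, function, source and lineage names are hypothetical, and it ignores everything quantitative (expression levels, insertion rates) that the study actually measured.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Lineage:
    """Hypothetical summary of one cell lineage's rc-L1 state."""
    name: str
    inherited_rc_l1s: frozenset[str]        # rc-L1 sources carried in the inherited genome
    demethylated_promoters: frozenset[str]  # sources whose promoters are demethylated in this lineage


def active_sources(lineage: Lineage) -> frozenset[str]:
    """Sources that can be expressed: inherited AND promoter-demethylated."""
    return lineage.inherited_rc_l1s & lineage.demethylated_promoters


def can_induce_sol1r(lineage: Lineage) -> bool:
    """soL1Rs become possible only if at least one source is active."""
    return bool(active_sources(lineage))


if __name__ == "__main__":
    inherited = frozenset({"src_A", "src_B"})  # hypothetical source names
    lineage_1 = Lineage("lineage_1", inherited, frozenset({"src_B"}))
    lineage_2 = Lineage("lineage_2", inherited, frozenset())
    for lin in (lineage_1, lineage_2):
        print(lin.name, sorted(active_sources(lin)), can_induce_sol1r(lin))
```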

2023.05.22

KAIST debuts “DreamWaQer” - a quadrupedal robot that can walk in the dark

- The team led by Professor Hyun Myung of the School of Electrical Engineering developed “DreamWaQ”, a deep reinforcement learning-based control technology that lets legged robots walk in atypical environments without visual or tactile information

- Using “DreamWaQ” technology could enable mass production of various types of “DreamWaQers”

- Expected to be used to explore atypical environments under unusual conditions, such as fire disaster sites

A team of Korean engineering researchers has developed quadrupedal robot technology that can climb up and down stairs and move without falling over on uneven terrain, such as tree roots, without the help of visual or tactile sensors. This makes it usable even in disaster situations where visibility is impeded by darkness or thick smoke from flames.

KAIST (President Kwang Hyung Lee) announced on the 29th of March that Professor Hyun Myung's research team at the Urban Robotics Lab in the School of Electrical Engineering developed a walking robot control technology that enables robust 'blind locomotion' in various atypical environments.

< (From left) Prof. Hyun Myung, Doctoral Candidates I Made Aswin Nahrendra, Byeongho Yu, and Minho Oh. In the foreground is the DreamWaQer, a quadrupedal robot equipped with DreamWaQ technology. >

The KAIST research team named the technology "DreamWaQ" because it enables walking robots to move about even in the dark, much as a person who has just gotten out of bed can walk to the bathroom in the dark without visual help. Installing this technology on any legged robot makes it possible to create various types of "DreamWaQers".

Existing walking robot controllers are based on kinematic and/or dynamic models, an approach known as model-based control. On atypical terrain such as open, uneven fields, these controllers must obtain terrain features quickly to keep the robot stable as it walks, so they have been shown to depend heavily on the ability to perceive the surrounding environment.

In contrast, the controller developed by Professor Hyun Myung's research team, based on deep reinforcement learning (RL), can quickly compute appropriate control commands for each of the robot's motors, having learned from data on various environments generated in a simulator. Whereas existing controllers trained in simulation required separate re-tuning to work on a real robot, this controller needs no additional tuning process and is therefore expected to be easily applied to various walking robots.

DreamWaQ consists of two main parts: a context-aided estimator network that estimates terrain and robot information, and a policy network that computes control commands. From inertial and joint measurements, the estimator network infers terrain information implicitly and the robot's state explicitly. These estimates are fed into the policy network, which generates optimal control commands. Both networks are trained together in simulation.
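
The two-network structure described above can be sketched as a forward pass: the estimator reads a short history of proprioceptive observations and outputs an explicit state estimate plus an implicit context vector, and the policy consumes the latest observation together with both. This is a minimal, untrained illustration in NumPy; the layer sizes, history length, ELU activation, and the choice of body velocity as the explicit estimate are assumptions for the example rather than details confirmed by the article.

```python
import numpy as np

# All sizes below are hypothetical placeholders, not the paper's settings.
OBS_DIM = 45      # proprioceptive observation per step (IMU + joint states + command + last action)
HISTORY = 5       # number of past observations fed to the estimator
VEL_DIM = 3       # explicit estimate: body linear velocity (assumed)
LATENT_DIM = 16   # implicit terrain/context code
ACT_DIM = 12      # one command per joint of a 12-joint quadruped

rng = np.random.default_rng(0)


def init_mlp(sizes):
    """Untrained, randomly initialised weights and zero biases for an MLP."""
    return [(0.1 * rng.standard_normal((m, n)), np.zeros(n)) for m, n in zip(sizes[:-1], sizes[1:])]


def mlp_forward(params, x):
    """Affine layers with ELU activations between them (none on the output)."""
    for i, (w, b) in enumerate(params):
        x = x @ w + b
        if i < len(params) - 1:
            x = np.where(x > 0, x, np.expm1(np.minimum(x, 0.0)))  # ELU, alpha = 1
    return x


estimator = init_mlp([HISTORY * OBS_DIM, 128, 64, VEL_DIM + LATENT_DIM])
policy = init_mlp([OBS_DIM + VEL_DIM + LATENT_DIM, 256, 128, ACT_DIM])


def act(obs_history: np.ndarray):
    """obs_history has shape (HISTORY, OBS_DIM), newest observation last."""
    est = mlp_forward(estimator, obs_history.reshape(-1))
    velocity, context = est[:VEL_DIM], est[VEL_DIM:]          # explicit / implicit parts
    policy_in = np.concatenate([obs_history[-1], velocity, context])
    return mlp_forward(policy, policy_in), velocity, context  # joint commands first
```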

The context-aided estimator network is trained with supervised learning, while the policy network is trained with an actor-critic architecture, a deep RL method. The actor network can infer surrounding terrain information only implicitly. In simulation, however, the surrounding terrain is known exactly, so the critic (the value network) is given this exact terrain information and uses it to evaluate the actor network's policy.
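
The asymmetric training setup can be summarised by what each component sees and how it is scored. In this sketch the estimator is fit by mean-squared error against simulator ground truth, and the critic, which alone receives privileged terrain data, supplies a one-step advantage for updating the actor. The one-step advantage is a simplification chosen for brevity (practical actor-critic locomotion training usually relies on an algorithm such as PPO with multi-step advantage estimates), and the function names are illustrative, not taken from the team's code.

```python
import numpy as np


def estimator_loss(pred_velocity: np.ndarray, true_velocity: np.ndarray) -> float:
    """Supervised regression loss: the simulator supplies the ground-truth state."""
    return float(np.mean((pred_velocity - true_velocity) ** 2))


def actor_input(obs: np.ndarray, estimates: np.ndarray) -> np.ndarray:
    """The actor sees only proprioception plus the estimator's outputs."""
    return np.concatenate([obs, estimates])


def critic_input(obs: np.ndarray, privileged_terrain: np.ndarray) -> np.ndarray:
    """The critic also sees exact terrain data (e.g. sampled heights), available only in simulation."""
    return np.concatenate([obs, privileged_terrain])


def one_step_advantages(rewards, values, next_values, gamma: float = 0.99) -> np.ndarray:
    """A_t = r_t + gamma * V(s_{t+1}) - V(s_t), with V evaluated on critic_input(...).

    Episode terminations are ignored here for brevity.
    """
    return np.asarray(rewards) + gamma * np.asarray(next_values) - np.asarray(values)
```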

The whole training process takes only about an hour on a GPU-equipped PC, and the physical robot carries only the trained estimator and actor (policy) networks. Without looking at the surrounding terrain, the robot uses only its internal inertial measurement unit (IMU) and joint-angle measurements to infer which of the many environments learned in simulation its current surroundings resemble. If it suddenly encounters a change in ground height, such as a staircase, it cannot know until its foot touches the step, but it infers the terrain information as soon as the foot makes contact. Control commands suited to the estimated terrain are then sent to each motor, allowing the robot to adapt its gait rapidly.
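
At run time, the procedure described above amounts to a fixed loop: append the newest IMU and joint readings to a short history, run the estimator, then run the policy and send its output to the motors. The sketch below wires that loop together in plain Python; the stub estimator and policy, the history length, and the observation layout are hypothetical stand-ins, and reading sensors or commanding motors is left out because that depends on the robot's own interface.

```python
from collections import deque
from typing import Callable, List, Sequence

Vector = List[float]


def make_controller(history_len: int, obs_dim: int,
                    estimator: Callable[[Vector], Vector],
                    policy: Callable[[Vector], Vector]) -> Callable[[Sequence[float]], Vector]:
    """Build a per-control-step function that runs estimator -> policy on onboard data.

    The history starts zero-filled and keeps only the last `history_len` observations,
    so the estimate changes as soon as new IMU/joint readings (e.g. a foot contact) arrive.
    """
    history = deque([[0.0] * obs_dim for _ in range(history_len)], maxlen=history_len)

    def step(obs: Sequence[float]) -> Vector:
        history.append(list(obs))                    # oldest row is dropped automatically
        flat = [x for row in history for x in row]   # oldest first, newest last
        estimate = estimator(flat)
        return policy(list(obs) + list(estimate))    # commands destined for the motors

    return step
```

Because the history is a sliding window, a foot striking an unexpected step changes the newest row immediately, which is how the estimate, and therefore the commands, can change within a single control step.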

The DreamWaQer robot walked not only in the laboratory but also outdoors around campus, over many curbs and speed bumps, and across a field full of tree roots and gravel. It even climbed a staircase whose step height is about two-thirds of its body height. The research team also confirmed that, regardless of the environment, it could walk stably at speeds ranging from a slow 0.3 m/s to a fairly fast 1.0 m/s.

The study was led by doctoral student I Made Aswin Nahrendra as first author, with his colleague Byeongho Yu as co-author. It has been accepted for presentation at the upcoming IEEE International Conference on Robotics and Automation (ICRA), to be held in London at the end of May. (Paper title: DreamWaQ: Learning Robust Quadrupedal Locomotion With Implicit Terrain Imagination via Deep Reinforcement Learning)

Videos of the DreamWaQer walking robot equipped with DreamWaQ can be found at the addresses below.

Main Introduction: https://youtu.be/JC1_bnTxPiQ
Experiment Sketches: https://youtu.be/mhUUZVbeDA0

This research was supported by the Robot Industry Core Technology Development Program of the Ministry of Trade, Industry and Energy (MOTIE). (Task title: Development of Mobile Intelligence SW for Autonomous Navigation of Legged Robots in Dynamic and Atypical Environments for Real Application)

< Figure 1. Overview of DreamWaQ, the controller developed by the research team. The architecture consists of an estimator network that learns implicit and explicit estimates together, a policy network that acts as the controller, and a value network that guides the policy during training. On the real robot, only the estimator and policy networks are used; both run in less than 1 ms on the robot's onboard computer. >

< Figure 2. Since the estimator can implicitly estimate the ground information as the foot touches the surface, it is possible to adapt quickly to rapidly changing ground conditions. >

< Figure 3. Results showing that even a small walking robot can climb steps about 20 cm high. >

2023.05.18

Synthetic sRNAs to knock down genes in medical and industrial bacteria

Bacteria are intimately involved in our daily lives. These microorganisms have been used throughout human history to make foods such as cheese, yogurt, and wine. In more recent years, through metabolic engineering, microorganisms have been used extensively as microbial cell factories to manufacture plastics, livestock feed, dietary supplements, and drugs. However, alongside these beneficial bacteria, pathogens that cause various infectious diseases, such as pneumonia-causing bacteria, Salmonella, and Staphylococcus, are also ubiquitous. It is therefore important to be able to metabolically control beneficial industrial bacteria to produce high value-added chemicals, and to manipulate harmful pathogens to suppress their pathogenic traits.

KAIST (President Kwang Hyung Lee) announced on the 10th that a research team led by Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering has developed a new sRNA tool that can effectively inhibit target genes in diverse bacteria, both Gram-negative and Gram-positive. The results were published online on April 24 in Nature Communications.

※ Paper title: Targeted and high-throughput gene knockdown in diverse bacteria using synthetic sRNAs

※ Author information: Jae Sung Cho (co-first author), Dongsoo Yang (co-first author), Cindy Pricilia Surya Prabowo (co-author), Mohammad Rifqi Ghiffary (co-author), Taehee Han (co-author), Kyeong Rok Choi (co-author), Cheon Woo Moon (co-author), Hengrui Zhou (co-author), Jae Yong Ryu (co-author), Hyun Uk Kim (co-author) and Sang Yup Lee (corresponding author).

Synthetic sRNA is an effective tool for regulating target genes in E. coli, but it has been difficult to apply to industrially useful Gram-positive bacteria such as Bacillus subtilis and Corynebacterium, as well as to Gram-negative bacteria other than E. coli.

To address this issue, a research team led by Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering at KAIST developed a new sRNA platform that can effectively suppress target genes in diverse bacteria, both Gram-negative and Gram-positive. The team surveyed thousands of sRNA systems in a microbial database, selected the sRNA system derived from Bacillus subtilis that showed the highest gene knockdown efficiency, and named it “Broad-Host-Range sRNA”, or BHR-sRNA.
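
Synthetic sRNAs of this kind are generally built by joining a short target-binding sequence, complementary to the start of the target gene, to an sRNA scaffold, so designing knockdowns for many genes at once reduces to computing many binding sequences. The sketch below assumes a 24-nt binding region that begins at the start codon and places that region 5' of the scaffold; both are assumptions made for this sketch, not details stated in the article, and "SCAFFOLD" in the example stands in for the actual BHR-sRNA scaffold sequence, which is not given here. All function names are hypothetical.

```python
_DNA_TO_RNA_COMPLEMENT = str.maketrans("ACGT", "UGCA")
_START_CODONS = ("ATG", "GTG", "TTG")  # common bacterial start codons


def target_binding_region(cds: str, length: int = 24) -> str:
    """RNA sequence (5'->3') complementary to the first `length` nt of a coding sequence.

    `cds` is the DNA coding strand, 5'->3', beginning at the start codon.
    """
    cds = cds.upper()
    if not cds.startswith(_START_CODONS):
        raise ValueError("coding sequence should begin with a start codon")
    if len(cds) < length:
        raise ValueError("coding sequence is shorter than the requested binding length")
    # Complement each base into RNA, then reverse to read 5'->3' on the sRNA.
    return cds[:length].translate(_DNA_TO_RNA_COMPLEMENT)[::-1]


def design_srna(cds: str, scaffold: str, length: int = 24) -> str:
    """Assumed layout: target-binding region at the 5' end, followed by the scaffold."""
    return target_binding_region(cds, length) + scaffold


def design_library(genes: dict[str, str], scaffold: str, length: int = 24) -> dict[str, str]:
    """Design one synthetic sRNA per gene, e.g. for a knockdown screen."""
    return {name: design_srna(seq, scaffold, length) for name, seq in genes.items()}
```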

A similar, well-known system is CRISPR interference (CRISPRi), a modified CRISPR system that knocks down gene expression by suppressing transcription. However, the Cas9 protein used in CRISPRi is very large, and there have been reports of it inhibiting bacterial growth. The BHR-sRNA system developed in this study did not affect bacterial growth while achieving gene knockdown efficiencies similar to those of CRISPRi.

< Figure 1. a) Schematic illustration demonstrating the mechanism of synthetic sRNA b) Phylogenetic tree of the 16 Gram-negative and Gram-positive bacterial species tested for gene knockdown by the BHR-sRNA system. >

To validate the versatility of the BHR-sRNA system, the team selected and tested 16 different Gram-negative and Gram-positive bacteria; the system worked successfully in 15 of them. In 10 of these bacteria, its gene knockdown capability was also more effective than that of the existing E. coli-based sRNA system. These results indicate that BHR-sRNA is a broadly applicable tool for inhibiting gene expression in diverse bacteria.

To address the growing problem of antibiotic-resistant pathogens, the team showed that BHR-sRNA can reduce pathogenicity by knocking down genes responsible for producing virulence factors. Using BHR-sRNA, biofilm formation, one of the factors contributing to antibiotic resistance, was inhibited by 73% in Staphylococcus epidermidis, a pathogen that can cause hospital-acquired infections. Antibiotic resistance was also weakened by 58% in the pneumonia-causing bacterium Klebsiella pneumoniae. In addition, BHR-sRNA was applied to industrial bacteria to develop microbial cell factories with improved production of high value-added chemicals. Notably, superior industrial strains were constructed with the aid of BHR-sRNA to produce valerolactam, a raw material for polyamide polymers; methyl anthranilate, a grape-flavor food additive; and indigoidine, a blue natural dye.
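Figures such as "inhibited by 73%" are relative reductions measured against an untreated control. The short Python sketch below shows that arithmetic only; the function name `percent_reduction` and the readings are hypothetical illustrations, not the study's analysis code or data.

```python
def percent_reduction(control: float, treated: float) -> float:
    """Relative decrease of a measured signal versus an untreated control, in percent."""
    if control <= 0:
        raise ValueError("control signal must be positive")
    return (1.0 - treated / control) * 100.0


# Hypothetical biofilm readings in arbitrary units (not data from the study):
control_signal = 1.00     # strain without the knockdown construct
knockdown_signal = 0.27   # same strain expressing a knockdown sRNA
print(f"{percent_reduction(control_signal, knockdown_signal):.0f}% reduction")
```

Read this way, a 73% reduction means the knockdown strain retains roughly 27% of the control signal.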

The BHR-sRNA developed through this study is expected to help expedite the commercialization of bioprocesses for producing high value-added compounds and materials such as artificial meat, jet fuel, health supplements, pharmaceuticals, and plastics. It is also expected to help eradicate antibiotic-resistant pathogens in preparation for future pandemics. “In the past, we had to develop a new gene knockdown tool for each bacterium, but now we have developed a tool that works across a variety of bacteria,” said Distinguished Professor Sang Yup Lee.

This work was supported by the Development of Next-generation Biorefinery Platform Technologies for Leading Bio-based Chemicals Industry Project and the Development of Platform Technologies of Microbial Cell Factories for the Next-generation Biorefineries Project of the National Research Foundation of Korea (NRF), funded by the Korean Ministry of Science and ICT (MSIT).

2023.05.10

Seanie Lee of KAIST Kim Jaechul Graduate School of AI named a 2023 Apple Scholar in AI/ML

Seanie Lee, a Ph.D. candidate at the Kim Jaechul Graduate School of AI, has been selected as one of the 2023 recipients of the Apple Scholars in AI/ML PhD fellowship. Lee, advised by Professors Sung Ju Hwang and Juho Lee, is a rising star in AI.

< Seanie Lee of KAIST Kim Jaechul Graduate School of AI >

The Apple Scholars in AI/ML PhD fellowship program, launched in 2020, aims to discover and support young researchers with a promising future in computer science. Each year, a handful of graduate students in related fields worldwide are selected for the program. For the following two years, the selected students are provided with financial support for research, international conference attendance, internship opportunities, and mentorship by an Apple engineer.

This year, 22 PhD students were selected from leading universities worldwide, including Johns Hopkins University, MIT, Stanford University, Imperial College London, the University of Edinburgh, Tsinghua University, HKUST, and Technion. Seanie Lee is the first Korean student to be selected for the program.

Lee’s research focuses on transfer learning, a subfield of AI in which models pre-trained on large datasets, such as images or text corpora*, are reused and adapted for new purposes.

(*text corpus: a collection of text resources in computer-readable form)

His work aims to improve the performance of transfer learning by developing new data augmentation methods that enable effective training with only a few training samples, and new regularization techniques that prevent large AI models from overfitting to their training data. He has published 11 papers, all accepted at top-tier conferences such as the Annual Meeting of the Association for Computational Linguistics (ACL), the International Conference on Learning Representations (ICLR), and the Annual Conference on Neural Information Processing Systems (NeurIPS).
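As a rough illustration of regularized transfer learning in general (not Lee's published methods), the Python sketch below fine-tunes a tiny linear model starting from "pre-trained" weights and adds an L2-SP-style penalty that pulls the parameters back toward those starting weights, a common way to limit overfitting when only a few target samples are available. The function `finetune_l2sp`, the data, and all hyperparameters are hypothetical.

```python
from typing import List, Tuple


def finetune_l2sp(
    xs: List[float],
    ys: List[float],
    w0: float,
    b0: float,
    lam: float = 0.1,
    lr: float = 0.05,
    steps: int = 500,
) -> Tuple[float, float]:
    """Fine-tune y = w*x + b by gradient descent, starting from pre-trained (w0, b0).

    Loss = mean squared error + lam * ((w - w0)**2 + (b - b0)**2).
    A larger lam keeps the model closer to its pre-trained starting point.
    """
    n = len(xs)
    w, b = w0, b0  # start from the pre-trained weights
    for _ in range(steps):
        # Gradients of the mean squared error on the (small) target dataset
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Gradients of the penalty that pulls parameters toward the pre-trained ones
        grad_w += 2 * lam * (w - w0)
        grad_b += 2 * lam * (b - b0)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs, ys = [0.0, 1.0, 2.0], [1.0, 3.0, 5.0]  # three target samples (true w=2, b=1)
w_free, b_free = finetune_l2sp(xs, ys, w0=1.5, b0=0.5, lam=0.0)
w_reg, b_reg = finetune_l2sp(xs, ys, w0=1.5, b0=0.5, lam=10.0)
print(f"no penalty: w={w_free:.3f}, b={b_free:.3f}")
print(f"lam=10:     w={w_reg:.3f}, b={b_reg:.3f}")
```

Without the penalty the model fits the three samples exactly (w ≈ 2, b ≈ 1); with `lam=10.0` it stays near the pre-trained values (w ≈ 1.61, b ≈ 0.58). Real fine-tuning applies the same idea to the millions of weights in a large network.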

“Being selected for the Apple Scholars in AI/ML PhD fellowship program is a great motivation for me,” said Lee. “So far, AI research has largely focused on computer vision and natural language processing, but I want to push the boundaries now and use modern AI tools to solve problems in the natural sciences, such as physics.”

2023.04.20

KAIST Team Develops Highly Sensitive Wearable Piezoelectric Blood Pressure Sensor for Continuous Health Monitoring

- A collaborative research team led by KAIST Professor Keon Jae Lee verifies the accuracy of the highly sensitive sensor through clinical trials

- Commercialization of the watch and patch-type sensor is in progress

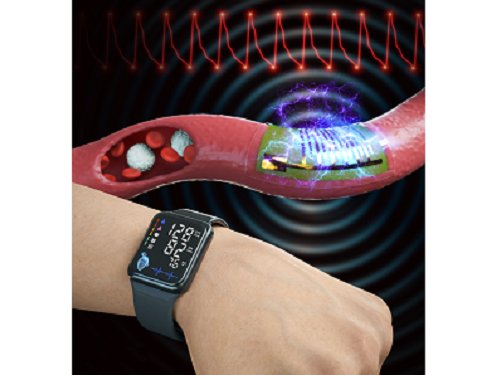

A research team led by Professor Keon Jae Lee of the Department of Materials Science and Engineering at KAIST, in collaboration with the College of Medicine of the Catholic University of Korea, has developed a highly sensitive, wearable piezoelectric blood pressure sensor.

Blood pressure is a critical indicator for assessing general health and predicting stroke or heart failure. Because cardiovascular disease is the leading cause of death worldwide, periodic blood pressure measurement is crucial for personal healthcare.

Interest in healthcare devices for continuous blood pressure monitoring has been growing. Although smartwatches using LED-based photoplethysmography (PPG) are already on the market, the limited accuracy of their optical sensors makes it hard for them to meet the international standards for automatic sphygmomanometers.

Professor Lee’s team developed the wearable piezoelectric blood pressure sensor by transferring a highly sensitive inorganic piezoelectric membrane from a bulk sapphire substrate to a flexible substrate. The ultrathin sensor, only several micrometers thick (about one-hundredth the thickness of a human hair), makes conformal contact with the skin, allowing it to measure blood pressure accurately from the subtle pulsation of blood vessels.

A clinical trial at St. Mary’s Hospital of the Catholic University of Korea confirmed that the sensor’s accuracy meets the international standard, with errors within ±5 mmHg and a standard deviation under 8 mmHg for both systolic and diastolic blood pressure. The team also embedded the sensor in a watch-type device to enable continuous blood pressure monitoring.
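The accuracy figures above describe a paired comparison between the device and a reference measurement. In common validation protocols this is read as: the mean of the device-minus-reference errors lies within ±5 mmHg and their standard deviation stays at or below 8 mmHg (the `<=` comparison for "under 8 mmHg" is an assumption here). The sketch below, with a hypothetical function `meets_bp_accuracy` and made-up readings, shows only this computation; an actual standard also specifies the number of subjects and the measurement procedure.

```python
from statistics import mean, stdev
from typing import Sequence


def meets_bp_accuracy(
    device: Sequence[float],
    reference: Sequence[float],
    max_mean_error: float = 5.0,
    max_sd: float = 8.0,
) -> bool:
    """Check paired device vs. reference readings (mmHg) against a
    mean-error and standard-deviation criterion."""
    if len(device) != len(reference) or len(device) < 2:
        raise ValueError("need at least two paired readings of equal length")
    errors = [d - r for d, r in zip(device, reference)]
    return abs(mean(errors)) <= max_mean_error and stdev(errors) <= max_sd


# Hypothetical paired systolic readings in mmHg (not trial data):
device_sys = [121, 118, 135, 142, 110]
reference_sys = [119, 120, 131, 140, 112]
print(meets_bp_accuracy(device_sys, reference_sys))
```

In practice the same check is run separately for systolic and diastolic pressure, as the trial result above reports.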

Prof. Keon Jae Lee said, “The main targets of our healthcare devices are hypertensive patients who need daily check-ups. We plan to develop a comfortable patch-type sensor that monitors blood pressure during sleep, and to have a start-up company commercialize these watch- and patch-type products soon.”

The results, titled “Clinical validation of wearable piezoelectric blood pressure sensor for health monitoring,” were published online in Advanced Materials on March 24, 2023. (DOI: 10.1002/adma.202301627)

Figure 1. Schematic illustration of the overall concept for a wearable piezoelectric blood pressure sensor (WPBPS).

Figure 2. Wearable piezoelectric blood pressure sensor (WPBPS) mounted on a watch. (a) Schematic design of the WPBPS-embedded wristwatch. (b) Block diagram of the wireless communication circuit, which filters, amplifies, and transmits wireless data to portable devices. (c) Pulse waveforms transmitted from the wristwatch to the portable device by the wireless communication circuit. The inset shows a photograph of monitoring a user’s beat-to-beat pulses and their corresponding BP values in real time using the developed WPBPS-mounted wristwatch.

2023.04.17

A biohybrid system to extract 20 times more bioplastic from CO2 developed by KAIST researchers

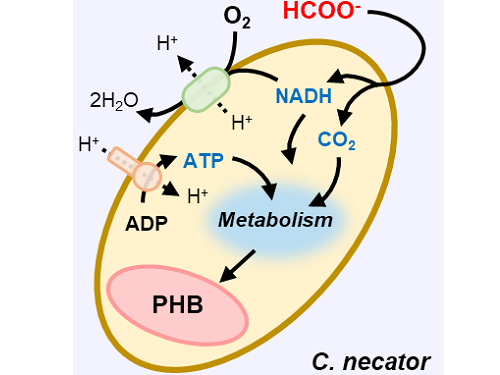

As the issues surrounding global climate change intensify, there is a growing need to recognize the situation as a “crisis” and to respond to it with determined effort. Among the various methods of recycling CO2, electrochemical CO2 conversion uses electrical energy to convert CO2 into useful chemicals. Because its facilities are easy to operate and it can run on electricity from renewable sources such as solar and wind power, it has drawn considerable attention as an eco-friendly technology that can help reduce greenhouse gas emissions and achieve carbon neutrality.

KAIST (President Kwang Hyung Lee) announced on the 30th that a joint research team led by Professor Hyunjoo Lee and Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering has developed a technology that produces bioplastics from CO2 with high efficiency, using a hybrid system that links electrochemical CO2 conversion with microbial bioconversion. The results, which showed the world's highest productivity, more than 20 times that of similar systems, were published online on March 27 in the "Proceedings of the National Academy of Sciences (PNAS)".

※ Paper title: Biohybrid CO2 electrolysis for the direct synthesis of polyesters from CO2

※ Author information: Jinkyu Lim (currently at Stanford Linear Accelerator Center, co-first author), So Young Choi (KAIST, co-first author), Jae Won Lee (KAIST, co-first author), Hyunjoo Lee (KAIST, corresponding author), Sang Yup Lee (KAIST, corresponding author)

For efficient CO2 conversion, high-efficiency electrode catalysts and systems are being actively developed. However, the products are limited to compounds containing one to three carbon atoms. Simple compounds with one or two carbons, such as CO, formic acid, and ethylene, are produced with relatively high efficiency. Liquid multi-carbon compounds such as ethanol, acetic acid, and propanol can also be produced, but because these reactions require more electrons, their conversion efficiency and product selectivity are limited.

Accordingly, a joint research team led by Professor Hyunjoo Lee and Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering at KAIST developed a technology to produce bioplastics from CO2 by linking electrochemical conversion with a bioconversion method that uses microorganisms.

The electrochemical-bio hybrid system consists of an electrolyzer, where the electrochemical conversion takes place, connected to a fermenter, where microorganisms are cultured. CO2 is converted to formic acid in the electrolyzer, and the formic acid is fed into the fermenter, where microbes (in this case, Cupriavidus necator) consume it as a carbon source to produce polyhydroxyalkanoate (PHA), a microbially derived bioplastic.
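How much formic acid the electrolyzer can supply to the fermenter is governed by Faraday's law: reducing one CO2 molecule to formate takes two electrons, so moles of formate = (Faradaic efficiency × charge passed) / (2F). The Python sketch below applies this to a 4 cm² electrode, the electrode size reported for this study; the current density and Faradaic efficiency are hypothetical values, not figures from the paper.

```python
FARADAY_C_PER_MOL = 96485.33212  # Faraday constant
ELECTRONS_PER_FORMATE = 2        # CO2 + 2e- -> formate (two-electron reduction)
FORMIC_ACID_G_PER_MOL = 46.03    # molar mass of HCOOH


def formate_produced_mol(current_a: float, hours: float, faradaic_eff: float) -> float:
    """Moles of formate produced by a constant current over the given time."""
    charge_c = current_a * hours * 3600.0  # total charge passed, in coulombs
    return faradaic_eff * charge_c / (ELECTRONS_PER_FORMATE * FARADAY_C_PER_MOL)


# Hypothetical operating point (not from the paper):
area_cm2 = 4.0
current_density_a_per_cm2 = 0.1  # 100 mA/cm^2
current_a = current_density_a_per_cm2 * area_cm2
mol = formate_produced_mol(current_a, hours=1.0, faradaic_eff=0.9)
print(f"{mol * 1000:.2f} mmol/h, about {mol * FORMIC_ACID_G_PER_MOL:.2f} g formic acid per hour")
```

Under these assumed conditions the electrolyzer would deliver roughly 6.7 mmol (about 0.31 g) of formic acid per hour, which sets an upper bound on the carbon available to the microbes in the fermenter.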

Previous hybrid systems suffered from low productivity or were limited to non-continuous operation, owing to the low efficiency of electrolysis and inconsistent results caused by the culture conditions of the microbes.

To overcome these problems, the joint research team produced formic acid from gaseous CO2 using a gas diffusion electrode. The team also developed a 'physiologically compatible catholyte' that serves both as an electrolyte that allows efficient electrolysis and as a culture medium that does not inhibit microbial growth. This allowed the formic acid to be supplied directly to the microorganisms without any additional separation and purification steps.

As a result, the electrolyte containing CO2-derived formic acid flows into the fermenter, is used for microbial culture, and then returns to the electrolyzer, so that the electrolyte and any remaining formic acid are used as fully as possible. A filter removes microorganisms that could interfere with electrolysis, so that only cell-free electrolyte is returned to the electrolyzer and the microorganisms remain in the fermenter, allowing the two units to work together efficiently.

Using the hybrid system, the team produced the bioplastic poly-3-hydroxybutyrate (PHB) from CO2 at up to 83% of cell dry weight, obtaining 1.38 g of PHB with a 4 cm² electrode. This is the world's first gram-level production and more than 20 times the productivity reported in previous research. Because the system also supports continuous culture, it is expected to be applicable to various industrial processes in the future.

The corresponding authors, Professor Hyunjoo Lee and Distinguished Professor Sang Yup Lee, said, “The technology developed in this research can be applied to the production of various chemicals as well as bioplastics, and is expected to be a key part of achieving carbon neutrality in the future.”

This research was supported by the CO2 Reduction Catalyst and Energy Device Technology Development Project, the Heterogeneous Atomic Catalyst Control Project, and the Next-generation Biorefinery Source Technology Development Project to lead the Biochemical Industry of the Oil-replacement Eco-friendly Chemical Technology Development Program of the Ministry of Science and ICT.

Figure 1. Schematic diagram and photo of the biohybrid CO2 electrolysis system.

(A) A conceptual scheme and (B) a photograph of the biohybrid CO2 electrolysis system. (C) A detailed scheme of reaction inside the system. Gaseous CO2 was converted to formate in the electrolyzer, and the formate was converted to PHB by the cells in the fermenter. The catholyte was developed so that it is compatible with both CO2 electrolysis and fermentation and was continuously circulated.

2023.03.30

KAIST researchers devise a technology for ultrahigh-resolution micro-LEDs with 40% less self-generated heat

In digitized modern life, various forms of future displays, such as wearable and rollable displays, are in demand. More and more people want to connect to the virtual world anytime and anywhere using smartglasses or smartwatches, and there has even been talk of medical diagnostic kits built into a shirt or a hat. These are not yet in our hands, however, because of technical limitations: it is difficult to fit enough pixels into the limited area of a pair of glasses while keeping power consumption at a level a handheld battery can supply, even though a resolution of 4K or higher is needed to fully immerse users in augmented or virtual reality through wireless smartglasses or similar devices.

KAIST (President Kwang Hyung Lee) announced on the 22nd that Professor Sang Hyeon Kim's research team in the Department of Electrical and Electronic Engineering re-examined the efficiency degradation of micro-LEDs (μLEDs) with micrometer-sized pixels (μm, one millionth of a meter) and found that the problem can be fundamentally resolved through epitaxial structure engineering.

Epitaxy refers to the process of growing the gallium nitride crystals that serve as the light-emitting layer of a μLED on top of an ultrapure silicon or sapphire substrate.

μLEDs are being actively studied because they offer superior brightness, contrast ratio, and lifespan compared to OLEDs. In 2018, Samsung Electronics commercialized a μLED product called 'The Wall', and there is speculation that Apple may launch a μLED product in 2025.

To manufacture μLEDs, pixels are formed by etching the epitaxial structure grown on a wafer into cylinders or cuboids, and this etching is a plasma-based process. However, the plasma creates defects on the sidewalls of the pixels as they are formed.

Therefore, as pixels become smaller and resolution increases, the ratio of surface area to volume of each pixel increases, and the sidewall defects introduced during processing reduce μLED efficiency even further. A considerable amount of research has been conducted on mitigating or removing sidewall defects, but such methods offer only limited improvement because they must be applied as post-processing steps after the epitaxial structure has been grown.
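As a rough illustration of the geometric scaling described above, consider an idealized cylindrical pixel of diameter d and height h: its sidewall area is πdh and its volume is πd²h/4, so the sidewall-to-volume ratio is 4/d, independent of height. The sketch below assumes a perfect cylinder and uses hypothetical dimensions; it is not the analysis from the study, only a way to see that shrinking a pixel's diameter tenfold raises its sidewall area per unit volume tenfold.

```python
import math


def sidewall_to_volume_ratio(diameter_um: float, height_um: float) -> float:
    """Sidewall area divided by volume for an idealized cylindrical pixel, in 1/um.

    Sidewall area = 2*pi*r*h and volume = pi*r**2*h, so the ratio
    simplifies to 2/r = 4/d and does not depend on the height.
    """
    radius = diameter_um / 2
    sidewall_area = 2 * math.pi * radius * height_um
    volume = math.pi * radius ** 2 * height_um
    return sidewall_area / volume


# Hypothetical pixel diameters (um) with a hypothetical 1 um etch depth.
for d in (100.0, 10.0, 1.0):
    print(f"{d:>6.1f} um pixel: {sidewall_to_volume_ratio(d, 1.0):.2f} per um")
# prints ratios of 0.04, 0.40 and 4.00 per um
```

Real μLED pixels are not perfect cylinders and only part of the sidewall may be damaged, so this captures the trend, not the magnitude, of the sidewall effect.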

The research team found that the amount of current flowing to the sidewalls of a μLED during operation depends on its epitaxial structure. Based on this finding, the team designed a structure that is insensitive to sidewall defects, addressing the efficiency loss that comes with μLED miniaturization. The proposed structure also reduced the heat the device generated during operation by about 40% compared with the existing structure, which is significant for commercializing ultrahigh-resolution μLED displays.

The study, with Woo Jin Baek of Professor Sang Hyeon Kim's research team at the KAIST School of Electrical and Electronic Engineering as first author and Professor Sang Hyeon Kim and Professor Dae-Myeong Geum of Chungbuk National University (a postdoctoral researcher on the team at the time) as corresponding authors, was published in the international journal 'Nature Communications' on March 17th. (Title of the paper: Ultra-low-current driven InGaN blue micro light-emitting diodes for electrically efficient and self-heating relaxed microdisplay).

Professor Sang Hyeon Kim said, "This technological development is highly meaningful in that it identifies the cause of the drop in efficiency, which has been an obstacle to μLED miniaturization, and solves it through the design of the epitaxial structure." He added, "We look forward to it being used to manufacture ultrahigh-resolution displays in the future."

This research was carried out with the support of the Samsung Future Technology Incubation Center.

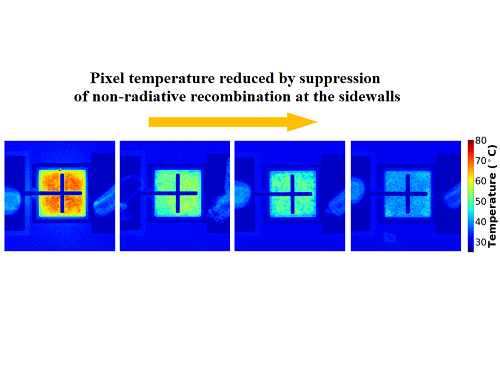

Figure 1. Electroluminescence distribution images of μLEDs fabricated from epitaxial structures with quantum barriers of different thicknesses, taken during operation.

Figure 2. Thermal distribution images of devices fabricated with different epitaxial structures under the same amount of light.

Figure 3. Normalized external quantum efficiency of devices of different sizes fabricated with the optimized epitaxial structure.

2023.03.23

KAIST leads AI-based analysis on drug-drug interactions involving Paxlovid

KAIST (President Kwang Hyung Lee) announced on the 16th that a paper by Distinguished Professor Sang Yup Lee's research team in the Department of Biochemical Engineering, which used an advanced AI-based drug interaction prediction technology to analyze the interactions between the ingredients of Paxlovid™, a COVID-19 treatment, and other prescription drugs, was published in the online edition of the internationally renowned journal 「Proceedings of the National Academy of Sciences of the United States of America」 (PNAS) on March 13th.

* Paper title: Computational prediction of interactions between Paxlovid and prescription drugs (Authors: Yeji Kim (KAIST, co-first author), Jae Yong Ryu (Duksung Women's University, co-first author), Hyun Uk Kim (KAIST, co-first author), and Sang Yup Lee (KAIST, corresponding author))

In this study, the research team developed DeepDDI2, an upgraded version of DeepDDI, the AI-based drug interaction prediction model they developed in 2018. DeepDDI2 can predict a total of 113 drug-drug interaction (DDI) types, up from the 86 DDI types covered by the original DeepDDI.
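The article does not describe DeepDDI2's internals. As a rough illustration of how a drug pair can be turned into input for a multi-class DDI classifier, the sketch below builds a structural similarity profile: each drug's Tanimoto similarity to a fixed set of reference drugs, with the two drugs' profiles concatenated into one pair vector. This representation is an assumption chosen for illustration and may differ from what DeepDDI2 actually uses; the fingerprints and reference drugs are hypothetical bit sets, and the classifier that would map the vector to one of the 113 DDI types is not shown.

```python
from typing import Dict, FrozenSet, List

# A toy "fingerprint": the set of on-bit indices for one drug.
# All fingerprints below are hypothetical placeholders, not real molecules.
Fingerprint = FrozenSet[int]


def tanimoto(a: Fingerprint, b: Fingerprint) -> float:
    """Tanimoto similarity of two bit sets: |A & B| / |A | B|."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def similarity_profile(query: Fingerprint, reference: Dict[str, Fingerprint]) -> List[float]:
    """Similarity of one drug to every reference drug, in sorted-name order."""
    return [tanimoto(query, reference[name]) for name in sorted(reference)]


def pair_features(a: Fingerprint, b: Fingerprint, reference: Dict[str, Fingerprint]) -> List[float]:
    """Feature vector for an ordered drug pair: profile(a) followed by profile(b).

    A multi-class classifier (not implemented here) would map this vector
    to one DDI type, e.g. one of the 113 types the article reports for DeepDDI2.
    """
    return similarity_profile(a, reference) + similarity_profile(b, reference)


# Hypothetical reference set and query drugs.
reference_drugs = {
    "ref_A": frozenset({1, 4, 7, 9}),
    "ref_B": frozenset({2, 4, 8}),
    "ref_C": frozenset({1, 2, 3, 4, 5}),
}
drug_x = frozenset({1, 4, 9})
drug_y = frozenset({2, 8, 10})
print(pair_features(drug_x, drug_y, reference_drugs))
```

In practice the fingerprints would come from a cheminformatics toolkit and the reference set would contain many approved drugs, giving a much longer vector than the six values here.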

The research team used DeepDDI2 to predict possible interactions between the ingredients of Paxlovid* (ritonavir and nirmatrelvir), a COVID-19 treatment, and other prescription drugs. The team noted that high-risk COVID-19 patients with chronic diseases such as high blood pressure and diabetes are likely to be taking other drugs, yet Paxlovid's drug-drug interactions and adverse drug reactions had not been sufficiently analyzed. The study was motivated by the concern that continued use of the drug could lead to serious, unwanted complications.

* Paxlovid: A COVID-19 treatment developed by the American pharmaceutical company Pfizer, which received Emergency Use Authorization (EUA) from the US Food and Drug Administration (FDA) in December 2021.

The research team used DeepDDI2 to predict how Paxlovid's components, ritonavir and nirmatrelvir, would interact with 2,248 prescription drugs. Ritonavir was predicted to interact with 1,403 of these drugs and nirmatrelvir with 673.
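To put these counts in proportion, the short calculation below converts them into the share of the 2,248 screened drugs predicted to interact with each ingredient. It uses only the numbers reported above; the function name is illustrative.

```python
# Counts reported in the article.
screened = 2248
predicted_interactions = {"ritonavir": 1403, "nirmatrelvir": 673}


def interaction_share(hits: int, total: int) -> float:
    """Percentage of screened drugs predicted to interact with an ingredient."""
    return 100.0 * hits / total


for ingredient, hits in predicted_interactions.items():
    print(f"{ingredient}: {hits}/{screened} = {interaction_share(hits, screened):.1f}%")
# ritonavir: 1403/2248 = 62.4%
# nirmatrelvir: 673/2248 = 29.9%
```

In other words, about three in five screened drugs were flagged for ritonavir, roughly twice the proportion flagged for nirmatrelvir.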

Using these predictions, the research team proposed alternative drugs that share the same mechanism but have a low potential for interaction, for prescription drugs with a high risk of adverse drug events (ADEs). In total, 124 alternative drugs that could reduce possible adverse DDIs with ritonavir and 239 alternatives for nirmatrelvir were identified.
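The selection step described above can be sketched as a simple filter: for a flagged prescription drug, keep the other drugs with the same mechanism of action that are not predicted to interact with the Paxlovid ingredient. This is a hypothetical simplification; the function name, the placeholder drugs and mechanisms, and the use of a yes/no interaction set in place of the study's "low interaction potential" criterion are all assumptions for illustration.

```python
from typing import Dict, List, Set


def propose_alternatives(
    flagged_drug: str,
    mechanism_of: Dict[str, str],
    interacts_with_ingredient: Set[str],
) -> List[str]:
    """Hypothetical screening rule: same mechanism as flagged_drug,
    not the flagged drug itself, and not predicted to interact."""
    target = mechanism_of[flagged_drug]
    return sorted(
        drug
        for drug, mechanism in mechanism_of.items()
        if mechanism == target
        and drug != flagged_drug
        and drug not in interacts_with_ingredient
    )


# Placeholder data only; these are not real drugs, mechanisms, or predictions.
mechanism_of = {
    "drug_1": "mechanism_M",
    "drug_2": "mechanism_M",
    "drug_3": "mechanism_M",
    "drug_4": "mechanism_N",
}
predicted_to_interact = {"drug_1", "drug_3"}
print(propose_alternatives("drug_1", mechanism_of, predicted_to_interact))
# ['drug_2']
```

A score-based version would replace the set membership test with a threshold on predicted interaction probability.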

This work makes it possible to use deep learning to accurately predict drug-drug interactions (DDIs), and it is expected to play an important role in digital healthcare, precision medicine, and the pharmaceutical industry by providing useful information during new drug development and prescribing.

Distinguished Professor Sang Yup Lee said, "The results of this study are meaningful in that, when we have to resort to drugs developed in a hurry in urgent situations like the COVID-19 pandemic, it is now possible to very quickly identify and respond to adverse drug reactions caused by drug-drug interactions."

This research was carried out with the support of the KAIST New-Deal Project for COVID-19 Science and Technology and the Bio·Medical Technology Development Project supported by the Ministry of Science and ICT.

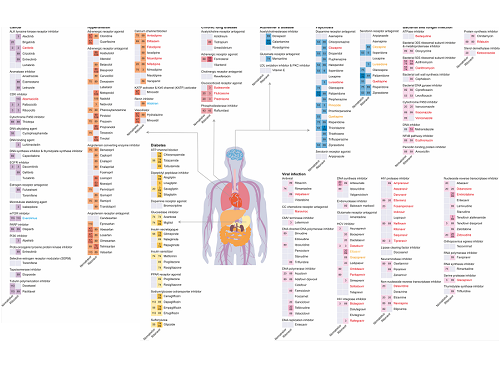

Figure 1. Results of drug interaction prediction between Paxlovid ingredients and representative approved drugs using DeepDDI2

2023.03.16
