
KAIST Professor Uichin Lee Receives Distinguished Paper Award from ACM

< Photo. Professor Uichin Lee (left) receiving the award >

KAIST (President Kwang Hyung Lee) announced on the 25th of October that Professor Uichin Lee’s research team from the School of Computing received the Distinguished Paper Award at the International Joint Conference on Pervasive and Ubiquitous Computing and International Symposium on Wearable Computing (Ubicomp / ISWC) hosted by the Association for Computing Machinery (ACM) in Melbourne, Australia on October 8.

The ACM Ubiquitous Computing Conference is the most prestigious international conference where leading universities and global companies from around the world present the latest research results on ubiquitous computing and wearable technologies in the field of human-computer interaction (HCI).

The main conference program is composed of invited papers published in the Proceedings of the ACM (PACM) on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT), which covers the latest research in the field of ubiquitous and wearable computing.

The Distinguished Paper Award Selection Committee selected eight papers from among the 205 papers published in Vol. 7 of the ACM Proceedings (PACM IMWUT) that made outstanding and exemplary contributions to the research community. The committee consists of 16 prominent experts who are current or former members of the journal's editorial board, and it made the selection after a rigorous review of all papers over a period of more than a month.

< Figure 1. BeActive mobile app to promote physical activity to form active lifestyle habits >

The paper that won the Distinguished Paper Award, titled “Understanding Disengagement in Just-in-Time Mobile Health Interventions,” was led by first author Dr. Junyoung Park, a graduate of the KAIST Graduate School of Data Science.

Professor Uichin Lee’s research team explored user engagement with ‘Just-in-Time Mobile Health Interventions,’ which use sensor data collected by health management apps to proactively deliver interventions at opportune moments. Such interventions can be effective only on the premise that users keep using the apps properly.

< Figure 2. Traditional user-requested digital behavior change intervention (DBCI) delivery (Pull) vs. Automatic transmission (Push) for Just-in-Time (JIT) mobile DBCI using smartphone sensing technologies >

The research team conducted a systematic analysis of user disengagement, that is, the decline in user engagement with digital behavior change interventions. They developed the BeActive system, an app that promotes physical activity to help form active lifestyle habits, and systematically analyzed the effects of users’ self-control ability and boredom-proneness on compliance with behavioral interventions over time.

The results of an 8-week field trial revealed that even if just-in-time interventions are provided according to the user’s situation, it is impossible to avoid a decline in participation. However, for users with high self-control and low boredom tendency, the compliance with just-in-time interventions delivered through the app was significantly higher than that of users in other groups.

In particular, users with high boredom proneness easily got tired of the repeated push interventions, and their compliance with the app decreased more quickly than in other groups.

< Figure 3. Just-in-time Mobile Health Intervention: a demonstrative case of the BeActive system: When a user is identified to be sitting for more than 50 mins, an automatic push notification is sent to recommend a short active break to complete for reward points. >
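The trigger described in Figure 3 can be illustrated with a minimal sketch. This is not the BeActive implementation: the `SittingTracker` class, the one-minute sampling interval, and the choice to restart the timer after a prompt are all assumptions made for illustration. Only the 50-minute threshold comes from the caption.

```python
from dataclasses import dataclass

SEDENTARY_LIMIT_MIN = 50  # threshold taken from the Figure 3 caption


@dataclass
class SittingTracker:
    """Hypothetical helper that tracks continuous sitting time."""

    minutes_sitting: int = 0

    def update(self, is_sitting: bool, elapsed_min: int = 1) -> bool:
        """Feed one activity sample; return True when a push should be sent."""
        if not is_sitting:
            # Any movement ends the sedentary bout.
            self.minutes_sitting = 0
            return False
        self.minutes_sitting += elapsed_min
        if self.minutes_sitting > SEDENTARY_LIMIT_MIN:
            # Assumption: restart the window once a prompt has been issued.
            self.minutes_sitting = 0
            return True
        return False


tracker = SittingTracker()
prompts = [tracker.update(is_sitting=True) for _ in range(51)]
print(prompts.index(True))  # position of the first sample that triggers a prompt
```

A real system would infer `is_sitting` from smartphone sensor data rather than receive it directly.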

Professor Uichin Lee explained, “As the first study on user engagement in digital therapeutics and wellness services utilizing mobile just-in-time health interventions, this research provides a foundation for exploring ways to empower user engagement.” He further added, “By leveraging large language models (LLMs) and comprehensive context-aware technologies, it will be possible to develop user-centered AI technologies that can significantly boost engagement.”

< Figure 4. A conceptual illustration of user engagement in digital health apps. Engagement in digital health apps consists of (1) engagement in using digital health apps and (2) engagement in behavioral interventions provided by digital health apps, i.e., compliance with behavioral interventions. Repeated adherence to behavioral interventions recommended by digital health apps can help achieve the distal health goals. >

This study was conducted with the support of the 2021 Biomedical Technology Development Program and the 2022 Basic Research and Development Program of the National Research Foundation of Korea funded by the Ministry of Science and ICT.

< Figure 5. A conceptual illustration of user disengagement from and engagement with digital behavior change intervention (DBCI) apps. In general, user engagement with digital health intervention apps consists of two components: engagement in digital health apps and engagement in behavioral interventions recommended by such apps (known as behavioral compliance or intervention adherence). The distinct stages of use can be divided into adoption, abandonment, and attrition. >

< Figure 6. Trends in the frequency of app usage and adherence to behavioral interventions over 8 weeks. ● SC: Self-Control Ability (High-SC: user group with high self-control, Low-SC: user group with low self-control) ● BD: Boredom-Proneness (High-BD: user group with high boredom-proneness, Low-BD: user group with low boredom-proneness). App usage frequency declined over time, but the adherence rates of participants with High-SC and Low-BD were significantly higher than those of other groups. >

2024.10.25 View 949


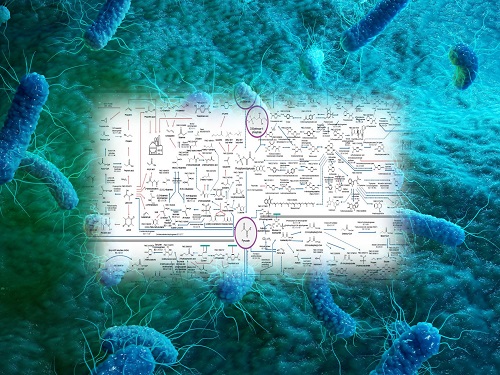

Interactive Map of Metabolical Synthesis of Chemicals

An interactive map that compiled the chemicals produced by biological, chemical and combined reactions has been distributed on the web

- A team led by Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering organized and distributed an all-inclusive listing of chemical substances that can be synthesized using microorganisms.

- It is expected to be used by researchers around the world as it enables easy assessment of the synthetic pathway through the web.

A research team comprised of Woo Dae Jang, Gi Bae Kim, and Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering at KAIST reported an interactive metabolic map of bio-based chemicals. Their research paper “An interactive metabolic map of bio-based chemicals” was published online in Trends in Biotechnology on August 10, 2022.

As a response to rapid climate change and environmental pollution, research on the production of petrochemical products using microorganisms is receiving attention as a sustainable alternative to existing methods of production. In order to synthesize various chemical substances, materials, and fuels using microorganisms, it is necessary to first construct the biosynthetic pathway toward the desired product through exploration and discovery and then introduce it into microorganisms. In addition, in order to efficiently synthesize various chemical substances, it is sometimes necessary to employ chemical methods alongside bioengineering methods using microorganisms. For the production of non-native chemicals, novel pathways are designed by recruiting enzymes from heterologous sources or employing enzymes designed through rational engineering, directed evolution, or ab initio design.

The research team had completed a map of chemicals which compiled all available pathways of biological and/or chemical reactions that lead to the production of various bio-based chemicals back in 2019 and published the map in Nature Catalysis. The map was distributed in the form of a poster to industries and academia so that the synthesis paths of bio-based chemicals could be checked at a glance.

The research team has now expanded the bio-based chemicals map into an interactive map on the web so that anyone with internet access can quickly explore efficient paths to synthesize desired products. The web-based map provides visual tools for interactive visualization, exploration, and analysis of complex networks of biological and/or chemical reactions toward the desired products. In addition, the paper discusses the production of natural compounds that are used for diverse purposes such as food and medicine, which will help in designing novel pathways through similar approaches or by exploiting the promiscuity of enzymes described in the map. The published bio-based chemicals map is also available at http://systemsbiotech.co.kr.

The co-first authors, Dr. Woo Dae Jang and Ph.D. student Gi Bae Kim, said, “We conducted this study to address the demand for updating the previously distributed chemicals map and enhancing its versatility. The map is expected to be utilized in a variety of research and in efforts to set strategies and prospects for chemical production incorporating bio and chemical methods that are detailed in the map.”

Distinguished Professor Sang Yup Lee said, “The interactive bio-based chemicals map is expected to help with the design and optimization of metabolic pathways for the biosynthesis of target chemicals, together with strategies for chemical conversion, serving as a blueprint for developing further ideas on the production of desired chemicals through biological and/or chemical reactions.”

The interactive metabolic map of bio-based chemicals.

2022.08.11 View 10113


Two Professors Receive Awards from the Korea Robotics Society

< Professor Jee-Hwan Ryu and Professor Ayoung Kim >

The Korea Robotics Society (KROS) conferred awards on two KAIST professors from the Department of Civil and Environmental Engineering in recognition of their achievements and contributions to the development of the robotics industry in 2019. Professor Jee-Hwan Ryu has been actively engaged in research on teleoperation, which led him to win the KROS Robotics Innovation (KRI) Award. The KRI Award was newly established by KROS in 2019 to encourage researchers who have made innovative achievements in robotics. Professor Ryu shared the honor of being the first winner of this award with Professor Jaeheung Park of Seoul National University. Professor Ayoung Kim, from the same department, received the Young Investigator Award, which is presented to emerging robotics researchers under 40 years of age.

2019.12.19 View 7796


AI to Determine When to Intervene with Your Driving

(Professor Uichin Lee (left) and PhD candidate Auk Kim)

Can your AI agent judge when to talk to you while you are driving? According to a KAIST research team, their in-vehicle conversation service technology will judge when it is appropriate to contact you to ensure your safety.

Professor Uichin Lee from the Department of Industrial and Systems Engineering at KAIST and his research team have developed AI technology that automatically detects safe moments for AI agents to provide conversation services to drivers.

Their research focuses on solving the potential problems of distraction created by in-vehicle conversation services. If an AI agent talks to a driver at an inopportune moment, such as while making a turn, a car accident will be more likely to occur.

In-vehicle conversation services need to be convenient as well as safe. However, the cognitive burden of multitasking negatively influences the quality of the service. Users tend to be more distracted during certain traffic conditions. To address this long-standing challenge of the in-vehicle conversation services, the team introduced a composite cognitive model that considers both safe driving and auditory-verbal service performance and used a machine-learning model for all collected data.

The combination of these individual measures is able to determine the appropriate moments for conversation and most appropriate types of conversational services. For instance, in the case of delivering simple-context information, such as a weather forecast, driver safety alone would be the most appropriate consideration. Meanwhile, when delivering information that requires a driver response, such as a “Yes” or “No,” the combination of driver safety and auditory-verbal performance should be considered.
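The rule described above can be written as a short sketch. The names `ServiceType` and `is_opportune` and the boolean inputs are hypothetical; in the study the two measures are inferred from sensor data by a machine-learning model, which this sketch does not attempt to reproduce.

```python
from enum import Enum


class ServiceType(Enum):
    INFORM = "inform"  # simple information, e.g. a weather forecast
    ASK = "ask"        # requires a driver response such as "Yes" or "No"


def is_opportune(service: ServiceType, driving_safe: bool, verbal_ok: bool) -> bool:
    """Decide whether the agent may speak now.

    driving_safe: assumed estimate that talking will not harm safe driving.
    verbal_ok: assumed estimate that the driver can listen and answer well.
    """
    if service is ServiceType.INFORM:
        # One-way information: driver safety alone is considered.
        return driving_safe
    # Interactive services: both measures must hold.
    return driving_safe and verbal_ok


print(is_opportune(ServiceType.INFORM, driving_safe=True, verbal_ok=False))
print(is_opportune(ServiceType.ASK, driving_safe=True, verbal_ok=False))
```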

The research team developed a prototype of an in-vehicle conversation service based on a navigation app that can be used in real driving environments. The app was also connected to the vehicle to collect in-vehicle OBD-II/CAN data, such as the steering wheel angle and brake pedal position, and mobility and environmental data such as the distance between successive cars and traffic flow.

Using pseudo-conversation services, the research team collected a real-world driving dataset consisting of 1,388 interactions and sensor data from 29 drivers who interacted with AI conversational agents. Machine learning analysis based on the dataset demonstrated that the opportune moments for driver interruption could be correctly inferred with 87% accuracy.

The safety enhancement technology developed by the team is expected to minimize driver distractions caused by in-vehicle conversation services. This technology can be directly applied to current in-vehicle systems that provide conversation services. It can also be extended and applied to the real-time detection of driver distraction problems caused by the use of a smartphone while driving.

Professor Lee said, “In the near future, cars will proactively deliver various in-vehicle conversation services. This technology will certainly help vehicles interact with their drivers safely as it can fairly accurately determine when to provide conversation services using only basic sensor data generated by cars.”

The researchers presented their findings at the ACM International Joint Conference on Pervasive and Ubiquitous Computing (Ubicomp’19) in London, UK. This research was supported in part by Hyundai NGV and by the Next-Generation Information Computing Development Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science and ICT.

(Figure: Visual description of safety enhancement technology for in-vehicle conversation services)

2019.11.13 View 14771


Image Analysis to Automatically Quantify Gender Bias in Movies

Many commercial films worldwide continue to express womanhood in a stereotypical manner, a recent study using image analysis showed. A KAIST research team developed a novel image analysis method for automatically quantifying the degree of gender bias in films.

The ‘Bechdel Test’ has been the most representative and general method of evaluating gender bias in films. This test indicates the degree of gender bias in a film by measuring how active the presence of women is in a film. A film passes the Bechdel Test if the film (1) has at least two female characters, (2) who talk to each other, and (3) their conversation is not related to the male characters.
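The three criteria can be expressed as a simple check, which also makes the test's pass/fail nature visible. This is only an illustrative sketch: the `Conversation` record and its `mentions_men` flag are hypothetical and stand in for the judgment a human evaluator makes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Conversation:
    speakers: frozenset  # names of the characters taking part
    mentions_men: bool   # hypothetical annotation made by a human evaluator


def passes_bechdel(female_characters: set, conversations: list) -> bool:
    """Return True if all three Bechdel criteria are satisfied."""
    # (1) At least two female characters.
    if len(female_characters) < 2:
        return False
    for conv in conversations:
        women_talking = conv.speakers & female_characters
        # (2) Two women talk to each other, (3) about something other than men.
        if len(women_talking) >= 2 and not conv.mentions_men:
            return True
    return False


women = {"Ana", "Bora"}
script = [
    Conversation(frozenset({"Ana", "Bora"}), mentions_men=True),
    Conversation(frozenset({"Ana", "Bora"}), mentions_men=False),
]
print(passes_bechdel(women, script))
```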

However, the Bechdel Test has fundamental limitations regarding the accuracy and practicality of the evaluation. Firstly, the Bechdel Test requires considerable human resources, as it is performed subjectively by a person. More importantly, the Bechdel Test analyzes only a single aspect of the film, the dialogues between characters in the script, and provides only a dichotomous result of passing the test, neglecting the fact that a film is a visual art form reflecting multi-layered and complicated gender bias phenomena. It is also difficult to fully represent today’s various discourse on gender bias, which is much more diverse than in 1985 when the Bechdel Test was first presented.

Inspired by these limitations, a KAIST research team led by Professor Byungjoo Lee from the Graduate School of Culture Technology proposed an advanced system that uses computer vision technology to automatically analyze the visual information in each frame of a film. This allows the system to evaluate more accurately and practically, in quantitative terms, the degree to which female and male characters are depicted differently in a film, and it further reveals gender bias that conventional analysis methods could not detect.

Professor Lee and his researchers Ji Yoon Jang and Sangyoon Lee analyzed 40 films from Hollywood and South Korea released between 2017 and 2018. They downsampled the films from 24 to 3 frames per second, and used Microsoft’s Face API facial recognition technology and object detection technology YOLO9000 to verify the details of the characters and their surrounding objects in the scenes.
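Going from 24 to 3 frames per second means keeping one frame out of every eight. The sketch below shows one simple way to do this by fixed-stride sampling; the article does not say how the team performed the downsampling, so the method and the `downsample` function are assumptions.

```python
def downsample(frames: list, src_fps: int = 24, dst_fps: int = 3) -> list:
    """Keep evenly spaced frames so that src_fps is reduced to dst_fps."""
    if dst_fps <= 0 or src_fps % dst_fps != 0:
        raise ValueError("src_fps must be a positive multiple of dst_fps")
    step = src_fps // dst_fps  # 24 // 3 == 8
    return frames[::step]


# Two seconds of video at 24 fps, with frames represented by their indices.
two_seconds = list(range(48))
print(downsample(two_seconds))  # → [0, 8, 16, 24, 32, 40]
```

Each retained frame would then be passed to the face and object detectors mentioned above.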

Using the new system, the team computed eight quantitative indices that describe the representation of a particular gender in the films. They are: emotional diversity, spatial staticity, spatial occupancy, temporal occupancy, mean age, intellectual image, emphasis on appearance, and type and frequency of surrounding objects.

Figure 1. System Diagram

Figure 2. 40 Hollywood and Korean Films Analyzed in the Study

According to the emotional diversity index, the depicted women were found to be more prone to expressing passive emotions, such as sadness, fear, and surprise. In contrast, male characters in the same films were more likely to demonstrate active emotions, such as anger and hatred.

Figure 3. Difference in Emotional Diversity between Female and Male Characters

The type and frequency of surrounding objects index revealed that female characters were tracked together with automobiles only 55.7% as often as male characters, while they were more likely to appear with furniture and in household settings, at a relative rate of 123.9%.

For temporal occupancy and mean age, female characters appeared only 56% as often as male characters and were, on average, younger in 79.1% of the cases. These two differences were especially pronounced in Korean films.
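Figures such as 55.7%, 123.9%, and 56% read most naturally as a female measurement expressed as a percentage of the corresponding male measurement. The sketch below shows that arithmetic only; the counts are hypothetical, the function name `relative_rate` is invented for illustration, and the paper's exact definition of each index may differ.

```python
def relative_rate(female_count: int, male_count: int) -> float:
    """Express the female count as a percentage of the male count.

    Assumption: an index is compared across genders as a simple ratio.
    """
    if male_count <= 0:
        raise ValueError("male_count must be positive")
    return 100.0 * female_count / male_count


# Hypothetical numbers of sampled frames in which each gender was detected.
print(round(relative_rate(560, 1000), 1))  # 56.0
```

A value below 100 means the female measurement is lower than the male one, and a value above 100 means it is higher.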

Professor Lee said, “Our research confirmed that many commercial films depict women from a stereotypical perspective. I hope this result promotes public awareness of the importance of taking prudence when filmmakers create characters in films.”

This study was supported by the KAIST College of Liberal Arts and Convergence Science as part of the Venture Research Program for Master’s and PhD Students, and will be presented at the 22nd ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW), to be held in Austin, Texas, on November 11.

Publication:

Ji Yoon Jang, Sangyoon Lee, and Byungjoo Lee. 2019. Quantification of Gender Representation Bias in Commercial Films based on Image Analysis. In Proceedings of the 22nd ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW). ACM, New York, NY, USA, Article 198, 29 pages. https://doi.org/10.1145/3359300

Link to download the full-text paper:

https://files.cargocollective.com/611692/cscw198-jangA--1-.pdf

Profile: Prof. Byungjoo Lee, MD, PhD

byungjoo.lee@kaist.ac.kr

http://kiml.org/

Assistant Professor

Graduate School of Culture Technology (CT)

Korea Advanced Institute of Science and Technology (KAIST)

https://www.kaist.ac.kr Daejeon 34141, Korea

Profile: Ji Yoon Jang, M.S.

yoone3422@kaist.ac.kr

Interactive Media Lab

Graduate School of Culture Technology (CT)

Korea Advanced Institute of Science and Technology (KAIST)

https://www.kaist.ac.kr Daejeon 34141, Korea

Profile: Sangyoon Lee, M.S. Candidate

sl2820@kaist.ac.kr

Interactive Media Lab

Graduate School of Culture Technology (CT)

Korea Advanced Institute of Science and Technology (KAIST)

https://www.kaist.ac.kr Daejeon 34141, Korea

(END)

2019.10.17 View 22619

A graduate-level education for working professionals in science programs and exhibitions will be available from mid-August this year.

The Graduate School of Culture Technology (GSCT), KAIST, has created a new course for professionals who pursue careers in science programs and exhibitions. The course will start on August 19 and continue through the end of November 2010, and will be held at Digital Media City in Seoul.

The course, co-sponsored by the National Science Museum, will offer students a tuition-free opportunity to brush up their knowledge of the administration, policy, culture, technology, planning, content development, and technology and design development of science programs and exhibitions. Subjects such as science content, interactive exhibitions, and the use of new media will be studied and discussed during the course. Classes will be interactive, engaging, and visual, and will include hands-on learning activities.

A total of 30 candidates will be chosen for the course. Eligible applicants are graduates with a B.S. degree in a relevant field, science program designers and exhibitors, curators for science and engineering museums, and policy planners for public and private science development programs.

2010.08.12 View 10988

Sona Kwak wins first prize in international robot design contest

Sona Kwak (doctoral student, Department of Industrial Design) won first prize in an international robot design contest.

Kwak exhibited ‘Hamie’, an emotional robot, at the ‘Robot Design Contest for Students’ at Ro-Man 2006, the 15th IEEE International Symposium on Robot and Human Interactive Communication, which was held at the University of Hertfordshire, United Kingdom, for three days from Wednesday, September 6, and won first prize.

‘Hamie’, the first-prize work, was devised with emotional communication between people in mind. Its design concept is a portable emotional robot that goes beyond a simple communication function and can convey even ‘intimacy’ through sight, hearing, and touch. The design was judged to best fit the contest theme because it supports emotional interaction between people as well as interaction between human and robot, or between robots. ‘Hamie’ has not been built; it was proposed as ‘a concept and design of a robot’.

‘Ro-Man’ is a world-famous academic conference in the research field of human-robot interaction, and the ‘Robot Design Contest for Students’ seeks creative and artistic ideas for the design and structure of future robots and exhibits works from all over the world.

Kwak is now working on the content and design of various next-generation service robots in her lab (PES Design Lab), led by Professor Myungseok Kim, such as ▲ ottoro (cleaning robot) ▲ robot for the blind ▲ robot for the elderly ▲ robot for education assistance ▲ robot for office work ▲ ubiquitous robot.

“I’ve considered and been disappointed about the role of designers in robot engineering while I’ve been designing robots. I am very proud that my robot design has been recognized in an academic conference of world-famous robot engineers and gained confidence,” Kwak said.

2006.09.27 View 15261
