
Enhanced Video Quality despite Poor Network Conditions


Date : 2019-01-22

Writer : ed_news

Professor Jinwoo Shin and Professor Dongsu Han from the School of Electrical Engineering developed neural adaptive content-aware internet video delivery. This technology is a novel method that combines adaptive streaming over HTTP, the video transmission system adopted by YouTube and Netflix, with a deep learning model.

This technology is expected to create an internet environment where users can enjoy high-quality, high-definition (HD) video, including 4K and AR/VR content, even over weak internet connections.

Thanks to video streaming services, internet video has experienced remarkable growth; nevertheless, users often suffer from low video quality due to unfavorable network conditions. Existing adaptive streaming systems adjust the quality of the video in real time to accommodate continuously changing internet bandwidth. Various algorithms are being researched for adaptive streaming systems, but they share an inherent limitation: high-quality videos cannot be streamed in poor network environments, regardless of which algorithm is used.
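To illustrate that limitation, here is a minimal sketch of a rate-based bitrate selector of the kind adaptive streaming players commonly use. It is not the algorithm of any particular service or of the research team; the bitrate ladder, the `pick_bitrate` name, and the safety factor are all assumptions made for the example.

```python
from typing import Sequence

# Hypothetical bitrate ladder in kbit/s; real services choose their own.
BITRATE_LADDER_KBPS = (400, 800, 1200, 2400, 4800)


def pick_bitrate(throughput_samples_kbps: Sequence[float],
                 ladder_kbps: Sequence[int] = BITRATE_LADDER_KBPS,
                 safety_factor: float = 0.8) -> int:
    """Return the highest ladder bitrate that fits the estimated bandwidth."""
    # Ignore non-positive samples so the harmonic mean is well defined.
    samples = [s for s in throughput_samples_kbps if s > 0]
    if not samples:
        return min(ladder_kbps)  # no measurements yet: start conservatively
    # The harmonic mean is less swayed by short throughput spikes
    # than the arithmetic mean.
    estimate = len(samples) / sum(1.0 / s for s in samples)
    budget = estimate * safety_factor
    affordable = [b for b in ladder_kbps if b <= budget]
    # When nothing fits, the player can only fall back to the lowest quality.
    return max(affordable) if affordable else min(ladder_kbps)
```

With measured throughput of around 300 kbit/s, this selector can only return the lowest rung of the ladder. A more sophisticated selector would face the same ceiling, because the bandwidth itself caps the quality that can be delivered.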

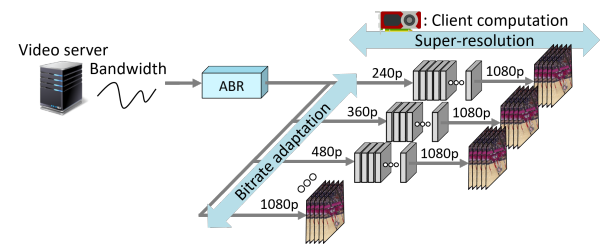

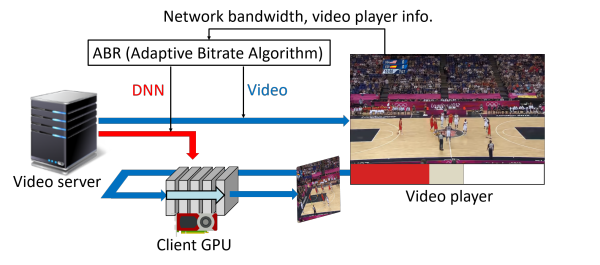

By incorporating super-resolution into adaptive streaming, the team overcame the limit of existing content distribution networks, whose quality depends too heavily on bandwidth. In the conventional method, the server that provides the video splits it into segments of a certain length in advance. The novel system introduced by the team additionally allows neural network segments to be downloaded. To facilitate this method, the video server needs to provide deep neural networks (DNNs) for each video segment, in sizes suited to the user’s computing capacity.

The largest neural network is two megabytes in size, which is considerably smaller than the video. When the user’s video player downloads the neural network, it is split into several segments. Even a partial download is sufficient for slightly compromised super-resolution.
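The idea of a neural network that is still useful when only partly downloaded can be sketched as follows. This is an illustration only: the `ChunkedModel` class and its chunk layout (chunk 0 holds the layers needed for any enhancement, and later chunks add optional refinements) are assumptions, not the team's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class ChunkedModel:
    """Tracks which chunks of a segmented neural network have arrived.

    Assumption for illustration: chunk 0 holds the layers required for any
    enhancement, and each later chunk adds optional layers that refine it.
    """
    total_chunks: int
    received: set = field(default_factory=set)

    def add_chunk(self, index: int) -> None:
        """Record the arrival of one chunk."""
        if not 0 <= index < self.total_chunks:
            raise ValueError(f"chunk index {index} out of range")
        self.received.add(index)

    def usable_chunks(self) -> int:
        """Count the contiguous chunks available from chunk 0 onward."""
        count = 0
        while count in self.received:
            count += 1
        return count

    def mode(self) -> str:
        """Describe how much enhancement the downloaded chunks allow."""
        usable = self.usable_chunks()
        if usable == 0:
            return "no enhancement"
        if usable < self.total_chunks:
            return "partial enhancement"
        return "full enhancement"
```

For example, a player holding only chunk 0 of a four-chunk model would report "partial enhancement" and could already start upscaling while the remaining chunks arrive.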

While playing the video, the system converts the low-quality video to a high-quality version by employing super-resolution based on deep convolutional neural networks (CNNs). The entire process is done in real time, and users can enjoy the high-definition video.

Even with 17% less bandwidth, the system can provide a Quality of Experience (QoE) equivalent to that of the latest adaptive streaming service. At a given internet bandwidth, it can provide 43% higher average QoE than the latest service.
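As a rough worked example of what these two figures mean, the sketch below applies them to hypothetical baseline values. The 17% and 43% figures come from the article and are averages; the function names and the baseline numbers are assumptions, and treating the QoE gain as a simple multiplier is a simplification.

```python
BANDWIDTH_SAVING = 0.17  # reported: same QoE with 17% less bandwidth
QOE_GAIN = 0.43          # reported: 43% higher average QoE at equal bandwidth


def bandwidth_for_equal_qoe(baseline_kbps: float) -> float:
    """Bandwidth needed to match the baseline service's QoE."""
    return baseline_kbps * (1.0 - BANDWIDTH_SAVING)


def qoe_at_equal_bandwidth(baseline_qoe: float) -> float:
    """Average QoE expected at the same bandwidth as the baseline."""
    return baseline_qoe * (1.0 + QOE_GAIN)


if __name__ == "__main__":
    # Hypothetical baseline: a stream that needs 2000 kbit/s today.
    print(f"{bandwidth_for_equal_qoe(2000.0):.0f} kbit/s for equal QoE")
    # Hypothetical baseline QoE score of 1.0 (unitless).
    print(f"{qoe_at_equal_bandwidth(1.0):.2f} average QoE at equal bandwidth")
```

Under these assumptions, a stream that needs 2000 kbit/s on the baseline service would need about 1660 kbit/s for the same experience.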

Using a deep learning method allows this system to achieve a higher level of compression than existing video compression methods. The technology was recognized as a next-generation internet video system that applies super-resolution based on a deep convolutional neural network to online videos.

Professor Han said, “So far, it has only been implemented on desktops, but we will further develop applications that work on mobile devices as well. This technology has been applied to the same video transmission systems used by streaming channels such as YouTube and Netflix, and thus shows good signs for practicability.”

This research, led by Hyunho Yeo, Youngmok Jung and Jaehong Kim, was presented at the 13th USENIX OSDI conference on October 10, 2018, and the filing of an international patent application has been completed.


Figure 1. Image quality before (left) and after (right) the technology application

Figure 2. The technology concept

Figure 3. A transition from low-quality to high-quality video after video transmission from the video server
