research

Researchers propose a deep neural network-based forward design space exploration using active transfer learning and data augmentation

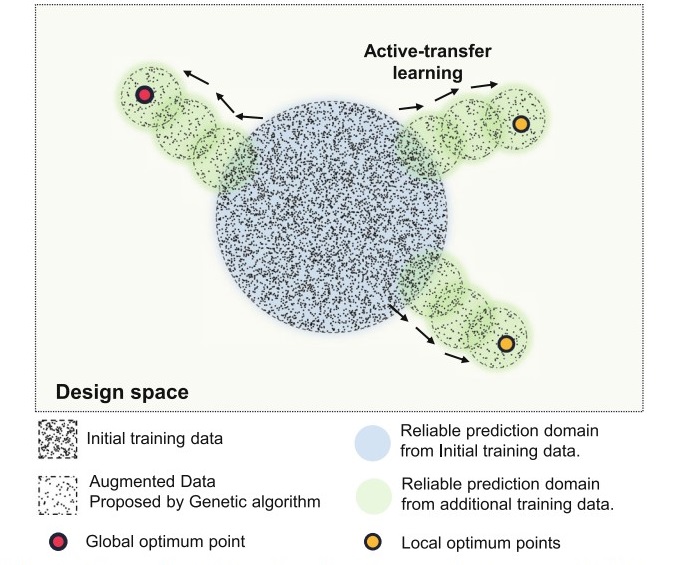

< Figure 1: Schematic of deep learning framework for material design space exploration. Schematic of gradual expansion of reliable prediction domain of DNN based on the addition of data generated from the hyper-heuristic genetic algorithm and active transfer learning. >

-

research Seanie Lee of KAIST Kim Jaechul Graduate School of AI Named a 2023 Apple Scholar in AI/ML

Seanie Lee, a Ph.D. candidate at the Kim Jaechul Graduate School of AI, has been selected as a 2023 recipient of the Apple Scholars in AI/ML PhD fellowship. Lee, advised by Sung Ju Hwang and Juho Lee, is a rising star in AI. The Apple Scholars in AI/ML PhD fellowship program, launched in 2020, aims to discover and support young researchers with a promising future in computer science. Each year, a handful of

2023-04-20 -

people Yuji Roh Awarded 2022 Microsoft Research PhD Fellowship

KAIST PhD candidate Yuji Roh of the School of Electrical Engineering (advisor: Prof. Steven Euijong Whang) was selected as a recipient of the 2022 Microsoft Research PhD Fellowship. The Microsoft Research PhD Fellowship is a scholarship program that recognizes outstanding graduate students for their exceptional and innovative research in areas relevant to computer science and related fields. This year, 36 peop

2022-10-28 -

research Decoding Brain Signals to Control a Robotic Arm

Advanced brain-machine interface system successfully interprets arm movement directions from neural signals in the brain. Researchers have developed a mind-reading system for decoding neural signals from the brain during arm movement. The method, described in the journal Applied Soft Computing, can be used by a person to control a robotic arm through a brain-machine interface (BMI). A BMI is a device that translates nerve signals into commands to control a machine, such as a computer or a rob

2022-03-18 -

research CXL-Based Memory Disaggregation Technology Opens Up a New Direction for Big Data Solution Frameworks

A KAIST team’s compute express link (CXL) provides new insights on memory disaggregation and ensures direct access and high-performance capabilities. A team from the Computer Architecture and Memory Systems Laboratory (CAMEL) at KAIST presented a new compute express link (CXL) solution whose directly accessible, high-performance memory disaggregation opens new directions for big data memory processing. Professor Myoungsoo Jung said the team’s technology significantly improves p

2022-03-16 -

research AI Light-Field Camera Reads 3D Facial Expressions

Machine-learned light-field camera reads facial expressions from high-contrast, illumination-invariant 3D facial images. A joint research team led by Professors Ki-Hun Jeong and Doheon Lee from the KAIST Department of Bio and Brain Engineering reported the development of a technique for facial expression detection by merging near-infrared light-field camera techniques with artificial intelligence (AI) technology. Unlike a conventional camera, the light-field camera contains micro-lens arrays

2022-01-21